Aircraft Target Detection from Remote Sensing Images under Complex Meteorological Conditions

Abstract

1. Introduction

2. Algorithm Introduction

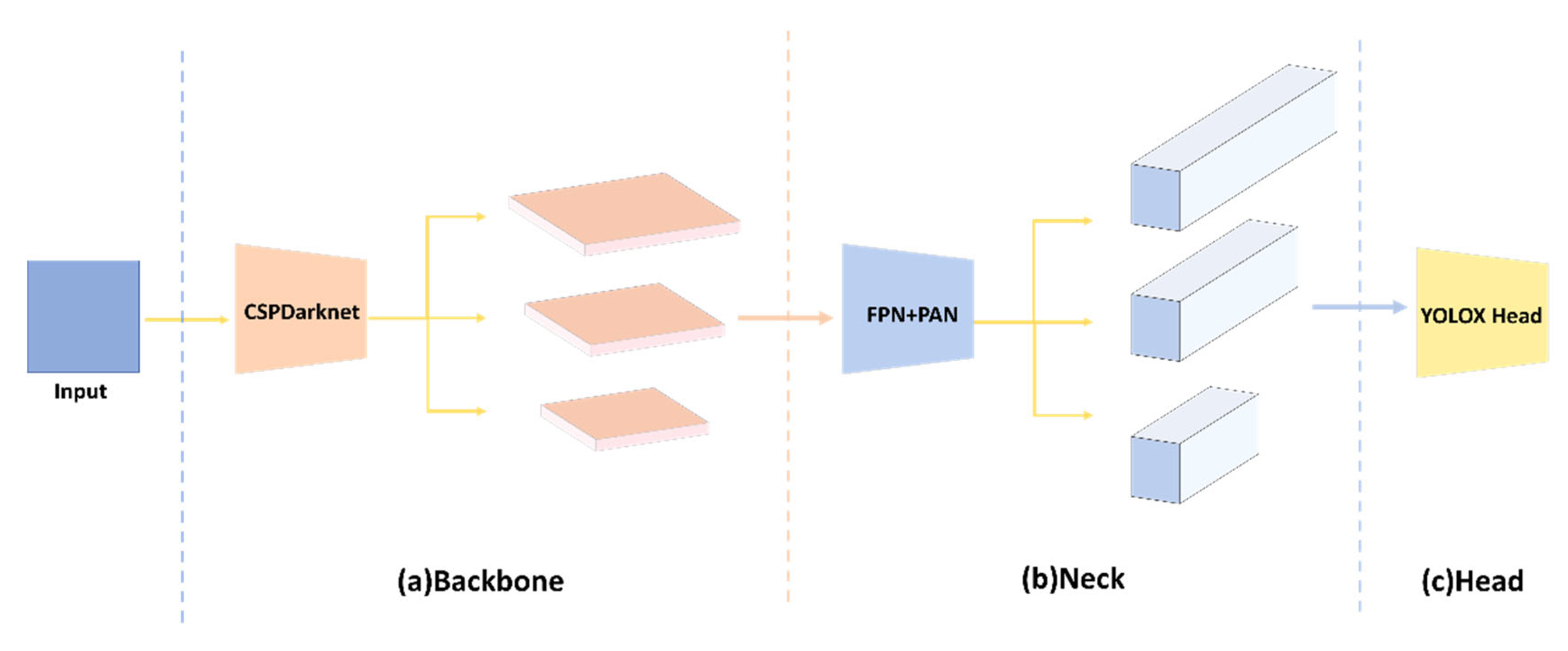

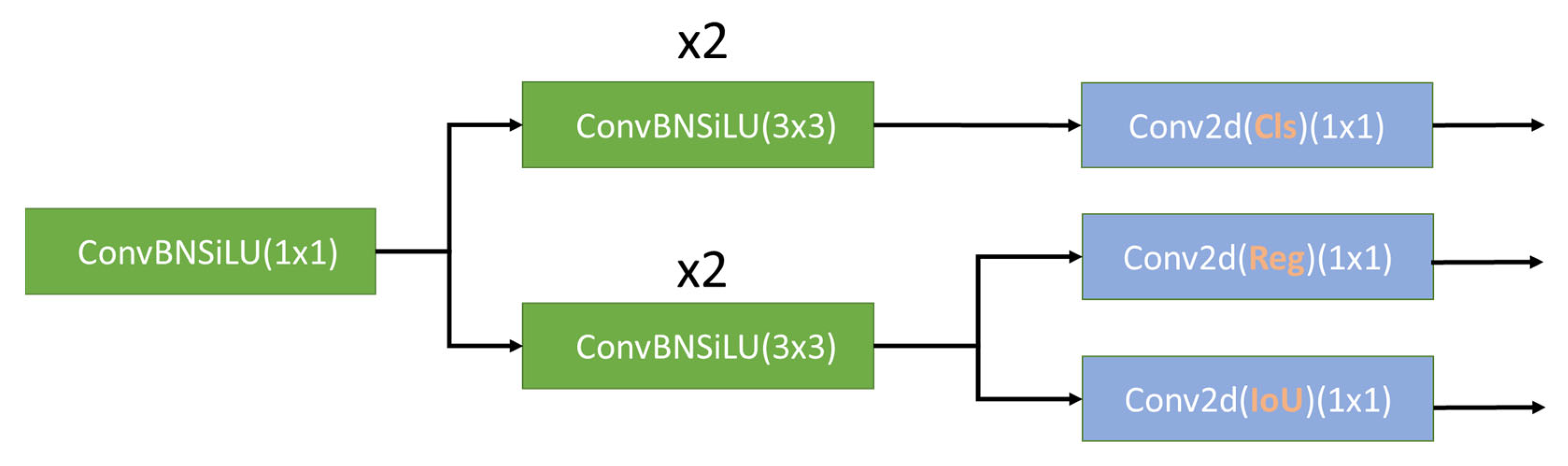

2.1. YOLOX Algorithm
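
YOLOX is an anchor-free detector: each feature-map cell predicts a box directly instead of refining preset anchors. Below is a minimal sketch of how one such prediction can be decoded into image coordinates. It assumes the decoding used in the public YOLOX reference code and the default strides of 8, 16 and 32. Under that decoding, the center is the predicted offset plus the grid index, multiplied by the stride. The width and height are the exponential of the predicted log-size, multiplied by the stride. This section does not state whether YOLOX-DD changes this step, and the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in input-image pixels


def decode_yolox_cell(pred: Sequence[float], grid_xy: Tuple[int, int], stride: int) -> Box:
    """Decode one anchor-free prediction (tx, ty, tw, th) into corner coordinates.

    Assumption: YOLOX reference decoding, i.e. center = (offset + grid index) * stride
    and size = exp(predicted log-size) * stride.
    """
    tx, ty, tw, th = pred
    gx, gy = grid_xy
    cx = (tx + gx) * stride          # box center x in pixels
    cy = (ty + gy) * stride          # box center y in pixels
    w = math.exp(tw) * stride        # exp keeps the width positive
    h = math.exp(th) * stride
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


def grid_sizes(input_size: int, strides: Sequence[int] = (8, 16, 32)) -> List[int]:
    """Feature-map side length at each detection stride (assumed strides 8/16/32)."""
    return [input_size // s for s in strides]


if __name__ == "__main__":
    print(decode_yolox_cell((0.5, 0.5, 0.0, 0.0), (10, 4), 16))  # (160.0, 64.0, 176.0, 80.0)
    sizes = grid_sizes(640)
    print(sizes, sum(n * n for n in sizes))  # [80, 40, 20] 8400
```

Under these assumptions, a 640 x 640 input yields 80 x 80, 40 x 40 and 20 x 20 grids. That gives 8400 candidate boxes per image before score filtering and non-maximum suppression.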

2.2. YOLOX-DD Algorithm

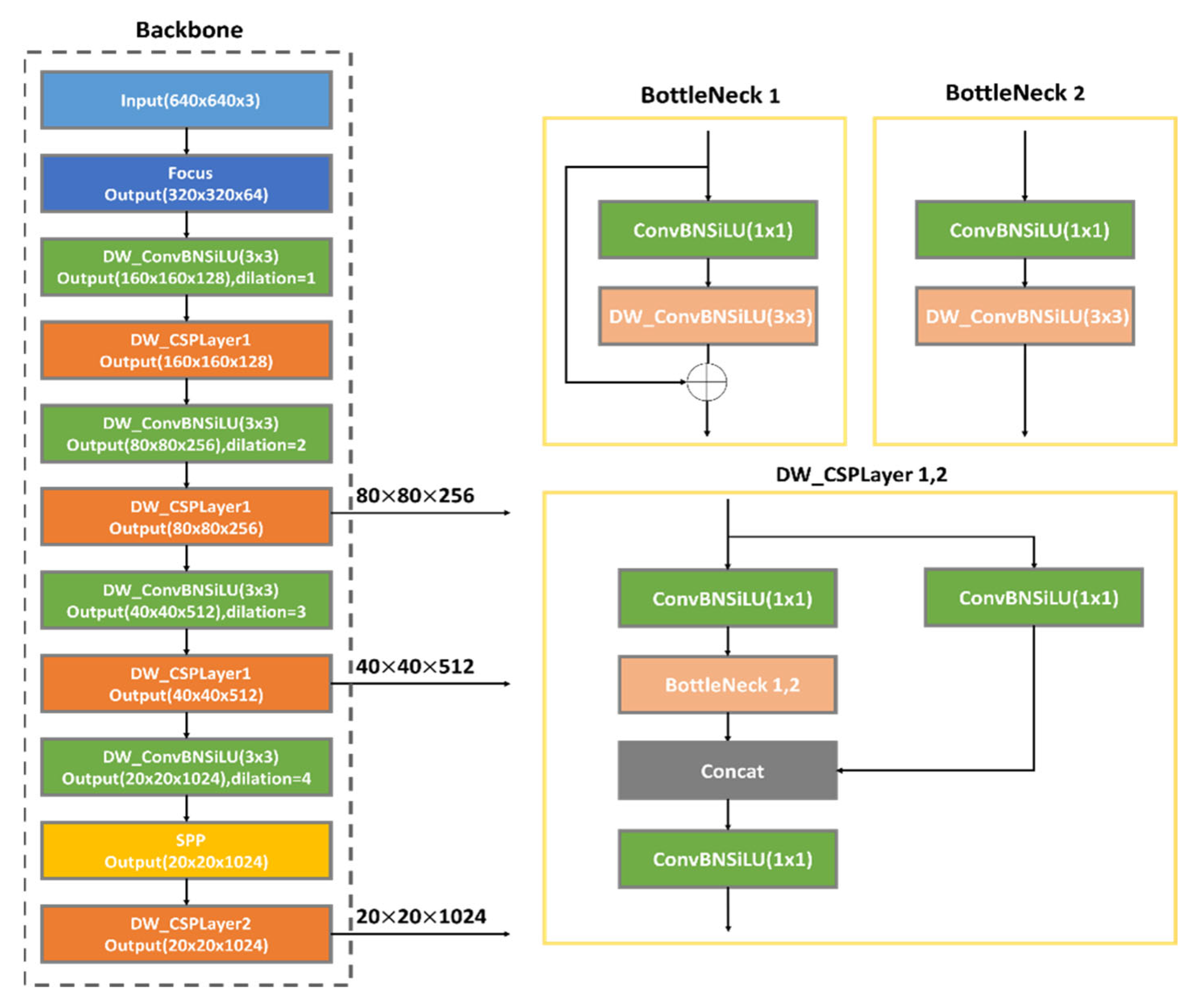

2.2.1. YOLOX-DD Backbone Network Structure

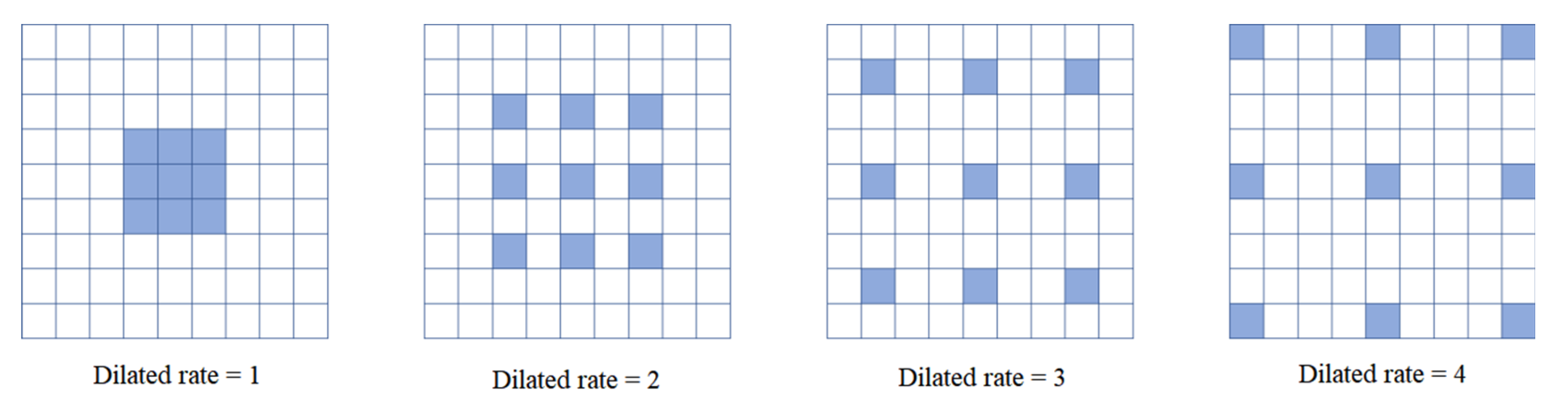

2.2.2. Dilated Convolution

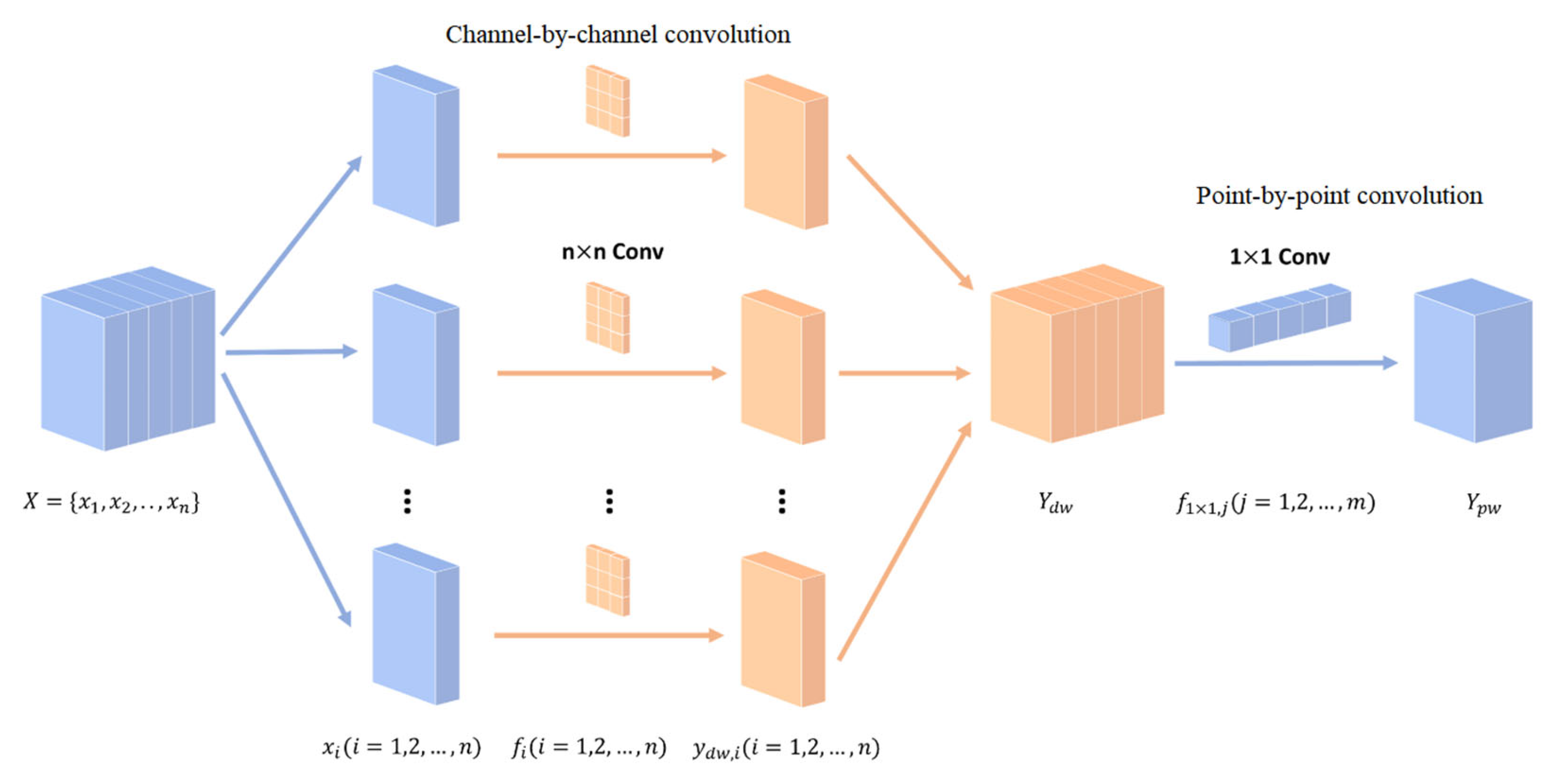
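
Dilated convolution spaces the taps of a kernel d pixels apart. A k x k kernel therefore covers k + (k - 1)(d - 1) pixels per side while still having only k x k weights. The following pure-Python sketch illustrates this on a single channel. The dilation rate of 2 and the 7 x 7 test image are illustrative assumptions, not the settings used in YOLOX-DD, and the helper names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def effective_kernel_size(k: int, d: int) -> int:
    """Side length covered by a k x k kernel whose taps are d pixels apart."""
    return k + (k - 1) * (d - 1)


def conv_output_size(n: int, k: int, d: int = 1, stride: int = 1, pad: int = 0) -> int:
    """Output length along one axis (same formula as PyTorch's Conv2d)."""
    return (n + 2 * pad - d * (k - 1) - 1) // stride + 1


def dilated_conv2d(image: Matrix, kernel: Matrix, d: int) -> Matrix:
    """Single-channel, stride-1, unpadded dilated cross-correlation."""
    k = len(kernel)
    out_h = conv_output_size(len(image), k, d)
    out_w = conv_output_size(len(image[0]), k, d)
    out = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            acc = 0.0
            for a in range(k):
                for b in range(k):
                    # Taps sample every d-th pixel, enlarging the receptive
                    # field without adding weights.
                    acc += kernel[a][b] * image[i + a * d][j + b * d]
            out[i][j] = acc
    return out


if __name__ == "__main__":
    image = [[float(r * 7 + c) for c in range(7)] for r in range(7)]
    ones = [[1.0] * 3 for _ in range(3)]
    print([effective_kernel_size(3, d) for d in (1, 2, 3)])  # [3, 5, 7]
    out = dilated_conv2d(image, ones, d=2)
    print(len(out), len(out[0]), out[0][0])  # 3 3 144.0
```

Dilation changes only where the kernel samples, not how many weights it has. It therefore enlarges the receptive field at no parameter cost. This is consistent with the ablation results, where the Dila_conv row keeps the baseline's 8.94 M parameters.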

2.2.3. Depthwise Separable Convolution
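
A depthwise separable convolution factorizes a standard k x k convolution into two steps. First, a depthwise k x k convolution applies one filter per input channel. Then a 1 x 1 pointwise convolution mixes the channels. The sketch below counts weights for both forms. The channel width of 256 and kernel size of 3 are illustrative assumptions, and the function names are hypothetical.

```python
def standard_conv_params(c_in: int, c_out: int, k: int, bias: bool = False) -> int:
    """Weights of an ordinary k x k convolution mapping c_in to c_out channels."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def depthwise_separable_params(c_in: int, c_out: int, k: int, bias: bool = False) -> int:
    """Depthwise k x k conv (one filter per input channel) plus a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in + (c_in if bias else 0)
    pointwise = c_in * c_out + (c_out if bias else 0)
    return depthwise + pointwise


if __name__ == "__main__":
    c_in = c_out = 256
    k = 3
    std = standard_conv_params(c_in, c_out, k)
    sep = depthwise_separable_params(c_in, c_out, k)
    print(std, sep, round(sep / std, 3))  # 589824 67840 0.115
    # Without bias the ratio is exactly 1/c_out + 1/k**2.
    print(round(1 / c_out + 1 / k ** 2, 3))  # 0.115
```

For this single layer, the separable form needs about 11.5% of the weights (a ratio of 1/c_out + 1/k^2 without bias). The whole-network reductions in the ablation study are much smaller, for example 8.94 M to 7.55 M with DW_conv. That is what one would expect if only some layers are replaced, but which layers YOLOX-DD replaces cannot be recovered from this section.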

3. Experimental Results and Analysis

3.1. Surface Aircraft Dataset

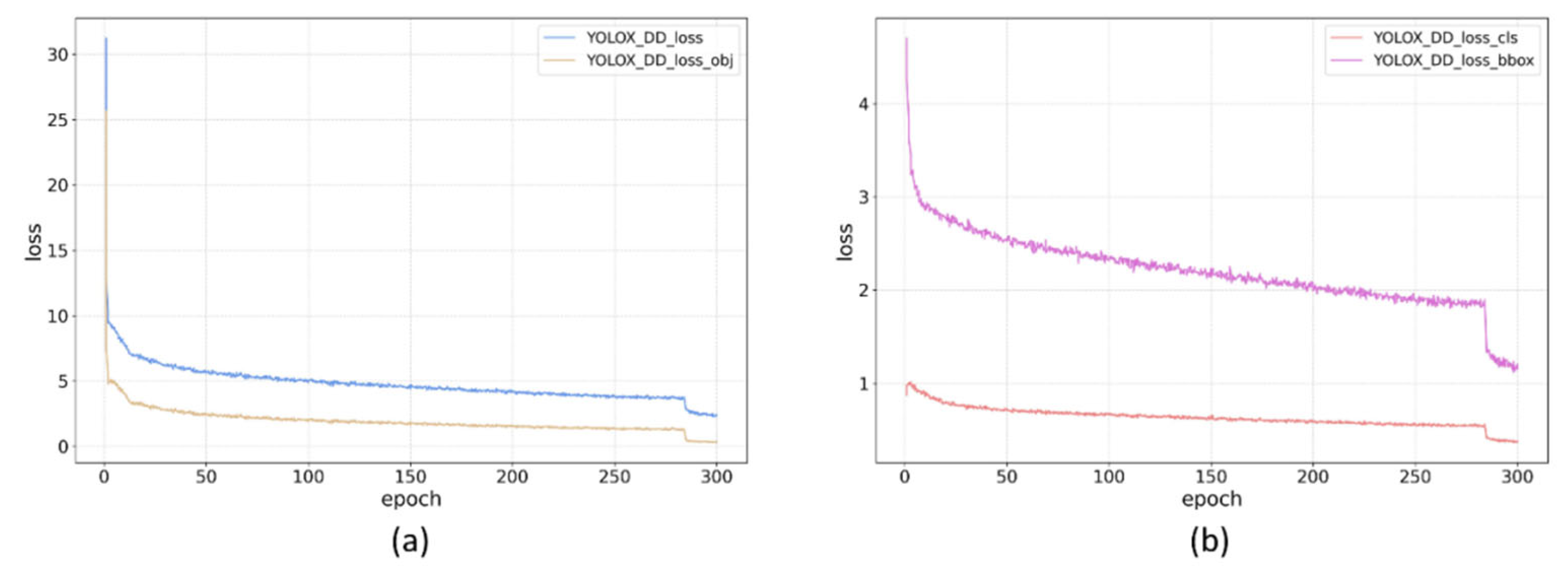

3.2. Network Training

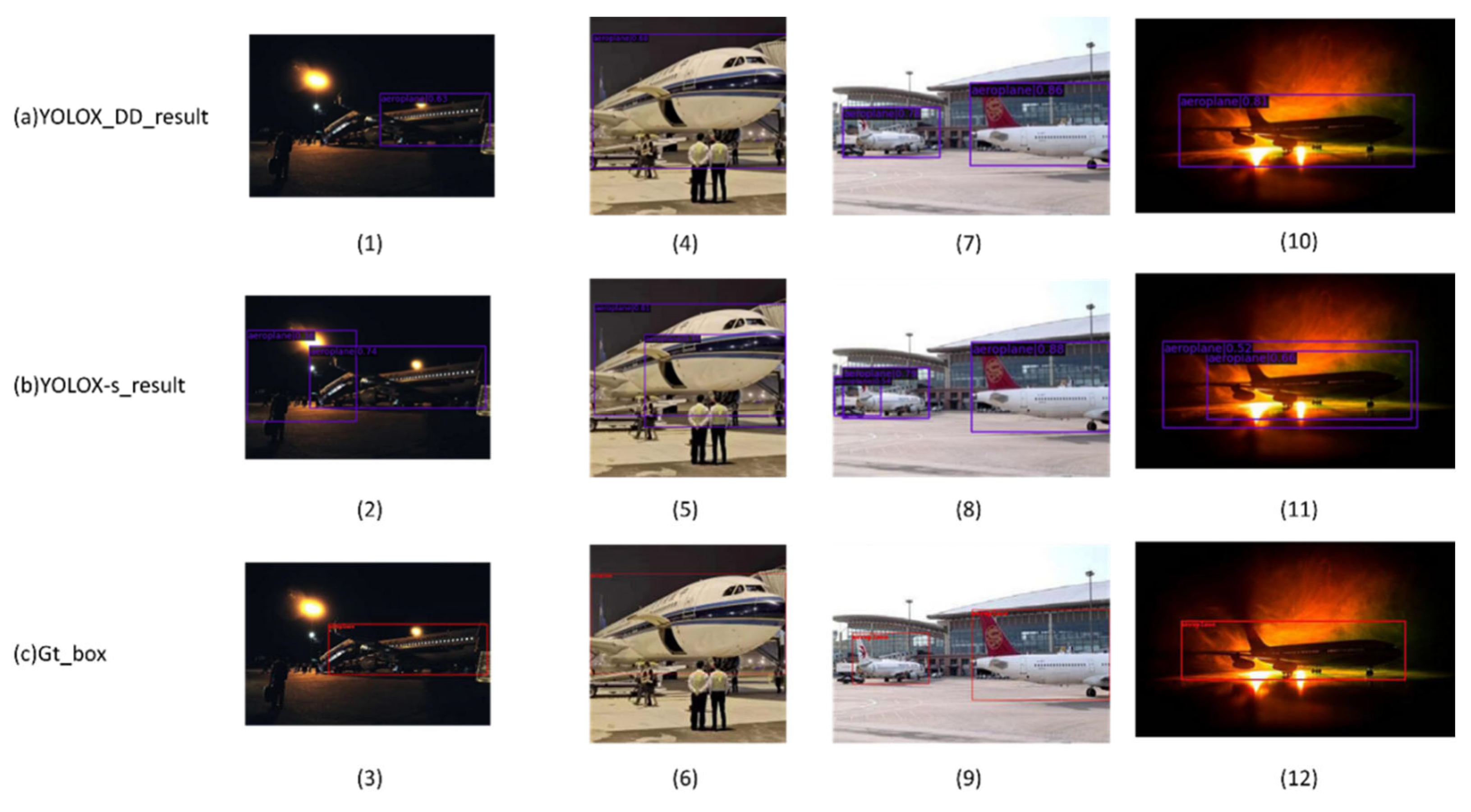

3.3. Results and Analysis

3.4. Ablation Experiments

3.5. Comparison of Different Models

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Cheng, G.; Han, J. A Survey on Object Detection in Optical Remote Sensing Images. ISPRS J. Photogramm. Remote Sens. 2016, 117, 11–28.

- Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object Detection in Optical Remote Sensing Images: A Survey and a New Benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 159, 296–307.

- Wu, Q.; Sun, H.; Sun, X.; Zhang, D.; Fu, K.; Wang, H. Aircraft Recognition in High-Resolution Optical Satellite Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2014, 12, 112–116.

- Bai, X.; Zhang, H.; Zhou, J. VHR Object Detection Based on Structural Feature Extraction and Query Expansion. IEEE Trans. Geosci. Remote Sens. 2014, 52, 6508–6520.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Dai, J.; Li, Y.; He, K.; Sun, J. R-FCN: Object Detection via Region-Based Fully Convolutional Networks. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 379–387.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- Zhao, A.; Fu, K.; Sun, H.; Sun, X.; Li, F.; Zhang, D.; Wang, H. An Effective Method Based on ACF for Aircraft Detection in Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2017, 14, 744–748.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot Multibox Detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

- Zhou, X.; Wang, D.; Krähenbühl, P. Objects as Points. arXiv 2019, arXiv:1904.07850.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Chen, Q.; Wang, Y.; Yang, T.; Zhang, X.; Cheng, J.; Sun, J. You Only Look One-Level Feature. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 13039–13048.

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO Series in 2021. arXiv 2021, arXiv:2107.08430v2.

- Jocher, G.; Chaurasia, A.; Qiu, J. YOLO by Ultralytics. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 20 July 2023).

- Liu, W.; Ren, G.; Yu, R.; Guo, S.; Zhu, J.; Zhang, L. Image-Adaptive YOLO for Object Detection in Adverse Weather Conditions. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtually, 22 February–1 March 2022; Volume 36, pp. 1792–1800.

- Li, C.; Qu, Z.; Wang, S.; Liu, L. A method of cross-layer fusion multi-object detection and recognition based on improved Faster R-CNN model in complex traffic environment. Pattern Recognit. Lett. 2021, 145, 127–134.

- Zhou, J.; Chen, Z.; Huang, X. Weakly perceived object detection based on an improved CenterNet. Math. Biosci. Eng. 2022, 19, 12833–12851.

| Category | Shade | Fog | Night | Rain | Sand | Snow |
|---|---|---|---|---|---|---|
| Train | 126 | 109 | 73 | 39 | 27 | 43 |
| Test | 28 | 31 | 19 | 10 | 8 | 10 |
| Total | 154 | 140 | 92 | 49 | 35 | 53 |
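
The dataset split can be checked directly from the table, since each Total should equal Train plus Test. The sketch below copies the counts from the table and uses a hypothetical helper name. It performs that check and reports the share of each category held out for testing.

```python
from typing import Dict, List, Tuple

CATEGORIES: List[str] = ["Shade", "Fog", "Night", "Rain", "Sand", "Snow"]
TRAIN: List[int] = [126, 109, 73, 39, 27, 43]
TEST: List[int] = [28, 31, 19, 10, 8, 10]
TOTAL: List[int] = [154, 140, 92, 49, 35, 53]


def split_summary(train: List[int], test: List[int],
                  total: List[int]) -> Tuple[int, int, int, Dict[str, float]]:
    """Verify train + test == total per category; return sums and test fractions."""
    for cat, tr, te, to in zip(CATEGORIES, train, test, total):
        if tr + te != to:
            raise ValueError(f"{cat}: {tr} + {te} != {to}")
    fractions = {cat: te / to for cat, te, to in zip(CATEGORIES, test, total)}
    return sum(train), sum(test), sum(total), fractions


if __name__ == "__main__":
    n_train, n_test, n_total, fractions = split_summary(TRAIN, TEST, TOTAL)
    print(n_train, n_test, n_total)  # 417 106 523
    print(round(n_test / n_total, 3))  # 0.203
    for cat, frac in fractions.items():
        print(f"{cat}: {frac:.1%}")
```

All six categories are consistent. The test share ranges from about 18.2% (Shade) to 22.9% (Sand), or 106 of 523 samples (about 20%) overall.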

| Category | Improved model (YOLOX-DD) AP/% | Original model (YOLOX) AP/% |
|---|---|---|
| Surface aircraft dataset (Test 420) | 94.8 | 92.7 |
| Shade/obscuration (Test 28) | 73.1 | 68.5 |
| Fog (Test 31) | 82.6 | 87.0 |
| Night (Test 19) | 95.5 | 92.1 |
| Rain (Test 10) | 95.5 | 88.7 |
| Sand (Test 8) | 97.2 | 87.7 |
| Snow (Test 10) | 92.3 | 90.6 |
| Params/M | 6.54 | 8.94 |
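
The AP values in the table above are per-subset scores, but this section does not specify the evaluation protocol (IoU threshold, interpolation scheme). The following sketch assumes a single-class, Pascal VOC-style all-point interpolated AP at IoU 0.5. Detections are ranked by confidence, and each one is matched to the ground-truth box with the highest IoU. A detection counts as a true positive only if that box is not already claimed. The area under the monotone precision-recall envelope is then summed. The function names are hypothetical.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections: List[Tuple[str, float, Box]],
                      ground_truths: Dict[str, List[Box]],
                      iou_thr: float = 0.5) -> float:
    """Single-class AP with all-point interpolation (assumed VOC-style protocol)."""
    n_gt = sum(len(boxes) for boxes in ground_truths.values())
    if n_gt == 0:
        return 0.0
    used = {img: [False] * len(boxes) for img, boxes in ground_truths.items()}
    precisions: List[float] = []
    recalls: List[float] = []
    tp_count = 0
    # Rank detections by confidence, highest first.
    ranked = sorted(detections, key=lambda d: -d[1])
    for rank, (img, _score, box) in enumerate(ranked, start=1):
        best_j, best_iou = -1, 0.0
        for j, gt in enumerate(ground_truths.get(img, [])):
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_j, best_iou = j, overlap
        # True positive only if the best-matching ground truth is still unclaimed.
        if best_j >= 0 and best_iou >= iou_thr and not used[img][best_j]:
            used[img][best_j] = True
            tp_count += 1
        precisions.append(tp_count / rank)
        recalls.append(tp_count / n_gt)
    # Pad the curve, then make precision non-increasing from right to left.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas wherever recall increases.
    return sum((mrec[i] - mrec[i - 1]) * mpre[i] for i in range(1, len(mrec)))


if __name__ == "__main__":
    gts = {"img1": [(0, 0, 10, 10)], "img2": [(0, 0, 10, 10)]}
    dets = [("img1", 0.9, (0, 0, 10, 10)),     # true positive
            ("img2", 0.8, (50, 50, 60, 60)),   # false positive (no overlap)
            ("img2", 0.7, (1, 1, 10, 10))]     # true positive, IoU = 0.81
    print(round(average_precision(dets, gts), 4))  # 0.8333
```

In the toy example, the second-ranked detection is a false positive. Precision therefore dips before recall reaches 1, giving AP = 5/6.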

| Dila_conv | DW_conv | DW_csp | AP (Aeroplane)/% | Params/M |
|---|---|---|---|---|
| - | - | - | 92.7 | 8.94 |
| √ | - | - | 94.7 | 8.94 |
| - | √ | - | 92.3 | 7.55 |
| - | - | √ | 92.7 | 7.92 |
| - | √ | √ | 92.9 | 6.54 |
| √ | √ | √ | 94.8 | 6.54 |
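
The ablation rows can be read as changes relative to the unmodified baseline in the first row (92.7% AP, 8.94 M parameters). The sketch below recomputes those differences from the table values. The row labels are shorthand for the checked modules, and the function name is hypothetical.

```python
from typing import Dict, Tuple

BASELINE: Tuple[float, float] = (92.7, 8.94)  # (AP %, Params M), first ablation row
ROWS: Dict[str, Tuple[float, float]] = {
    "Dila_conv": (94.7, 8.94),
    "DW_conv": (92.3, 7.55),
    "DW_csp": (92.7, 7.92),
    "DW_conv + DW_csp": (92.9, 6.54),
    "Dila_conv + DW_conv + DW_csp": (94.8, 6.54),
}


def ablation_deltas(baseline: Tuple[float, float],
                    rows: Dict[str, Tuple[float, float]]) -> Dict[str, Tuple[float, float]]:
    """Return {row: (AP change in points, parameter reduction in %)} vs. the baseline."""
    base_ap, base_params = baseline
    return {
        name: (round(ap - base_ap, 1), round(100 * (base_params - params) / base_params, 1))
        for name, (ap, params) in rows.items()
    }


if __name__ == "__main__":
    for name, (d_ap, d_params) in ablation_deltas(BASELINE, ROWS).items():
        print(f"{name:30s} AP {d_ap:+.1f} pts, params reduced {d_params:.1f}%")
```

From these figures, dilated convolution alone adds 2.0 AP points without changing the parameter count. The two depthwise modules together cut parameters by about 26.8%, with a 0.2-point AP change. The full model combines a 2.1-point AP gain with the same 26.8% reduction.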

| Algorithm Model | AP (Aeroplane)/% | Params/M |
|---|---|---|
| YOLOX-DD | 94.8 | 6.54 |
| YOLOv3 | 84.8 (−10.0) | 3.67 |
| Faster R-CNN | 86.0 (−8.8) | 41.13 |
| CenterNet | 83.9 (−10.9) | 14.43 |
| YOLOF | 91.5 (−3.3) | 42.09 |
| SSD | 92.6 (−2.2) | 23.88 |

| Algorithm Model | Shade AP/% | Fog AP/% | Night AP/% | Rain AP/% | Sand AP/% | Snow AP/% |
|---|---|---|---|---|---|---|
| YOLOX-DD | 73.1 | 82.6 | 95.5 | 74.4 | 97.2 | 92.3 |
| YOLOv3 | 57.3 | 78.5 | 78.3 | 84.8 | 76.9 | 95.1 |
| Faster R-CNN | 51.2 | 67.9 | 90.0 | 36.3 | 76.9 | 92.3 |
| CenterNet | 60.3 | 77.8 | 86.8 | 68.4 | 80.8 | 78.5 |
| YOLOF | 65.5 | 80.3 | 91.1 | 79.4 | 78.1 | 95.7 |
| SSD | 67.2 | 89.5 | 80.9 | 79.1 | 84.3 | 100.0 |
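
The per-condition comparison can be summarized by listing the best model for each condition and a simple mean across conditions. The sketch below copies the AP values from the table. The unweighted mean is an illustrative summary that the article does not report, and it ignores the different test-subset sizes. The function names are hypothetical.

```python
from typing import Dict, List

CONDITIONS: List[str] = ["Shade", "Fog", "Night", "Rain", "Sand", "Snow"]

# AP/% per condition, copied from the comparison table (same column order as CONDITIONS).
AP: Dict[str, List[float]] = {
    "YOLOX-DD": [73.1, 82.6, 95.5, 74.4, 97.2, 92.3],
    "YOLOv3": [57.3, 78.5, 78.3, 84.8, 76.9, 95.1],
    "Faster R-CNN": [51.2, 67.9, 90.0, 36.3, 76.9, 92.3],
    "CenterNet": [60.3, 77.8, 86.8, 68.4, 80.8, 78.5],
    "YOLOF": [65.5, 80.3, 91.1, 79.4, 78.1, 95.7],
    "SSD": [67.2, 89.5, 80.9, 79.1, 84.3, 100.0],
}


def best_per_condition(table: Dict[str, List[float]]) -> Dict[str, str]:
    """Model with the highest AP in each condition (first listed model wins ties)."""
    return {cond: max(table, key=lambda model: table[model][i])
            for i, cond in enumerate(CONDITIONS)}


def unweighted_mean(table: Dict[str, List[float]]) -> Dict[str, float]:
    """Plain average over the six conditions; does not weight by test-subset size."""
    return {model: sum(values) / len(values) for model, values in table.items()}


if __name__ == "__main__":
    for cond, model in best_per_condition(AP).items():
        print(f"{cond:6s} best: {model}")
    means = unweighted_mean(AP)
    for model in sorted(means, key=means.get, reverse=True):
        print(f"{model:13s} {means[model]:.2f}")
```

By condition, YOLOX-DD has the highest AP for shade, night and sand, SSD for fog and snow, and YOLOv3 for rain. Averaged without weighting, YOLOX-DD leads at about 85.9%, ahead of SSD at 83.5%. The subsets contain between 8 and 31 test samples, so individual results on the smallest subsets may be less stable.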

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhong, D.; Li, T.; Pan, Z.; Guo, J. Aircraft Target Detection from Remote Sensing Images under Complex Meteorological Conditions. Sustainability 2023, 15, 11463. https://doi.org/10.3390/su151411463
