Intrusion Detection in Healthcare 4.0 Internet of Things Systems via Metaheuristics Optimized Machine Learning

Abstract

:1. Introduction

- A robust healthcare security system based on the XGBoost technique optimized by the proposed MFA.

- Modification proposals for the FA swarm metaheuristic.

- Improvements validated through extensive testing on a simulated dataset with comparison to eight other XGBoost-metaheuristic optimized solutions.

- Performance optimization based on the SHAP feature importance which clearly indicates which features contribute to which predicted class.

- Best performance interpretation using SHAP analysis for better transparency.

2. Background and Preliminaries

2.1. Literature Review

2.2. Extreme Gradient Boosting

2.3. Metaheuristics Approaches and Applications

2.4. Shapley Additive Explanations

3. Materials and Methods

3.1. Original Firefly Algorithm

- Initialization,

- Brightness calculation,

- Firefly movement,

- Brightness update,

- Steps 3 and 4 are repeated until satisfactory convergence or a defined number of iterations is reached.

- represents the distance between fireflies defined as i and j,

- is the attraction factor of fireflies,

- represents the absorption factor of light,

- determines the randomness factor,

- while represents the random vector.

3.2. Proposed Modified Firefly Algorithm

| Algorithm 1 Pseudocode of the suggested MFA |

|

4. Experiments

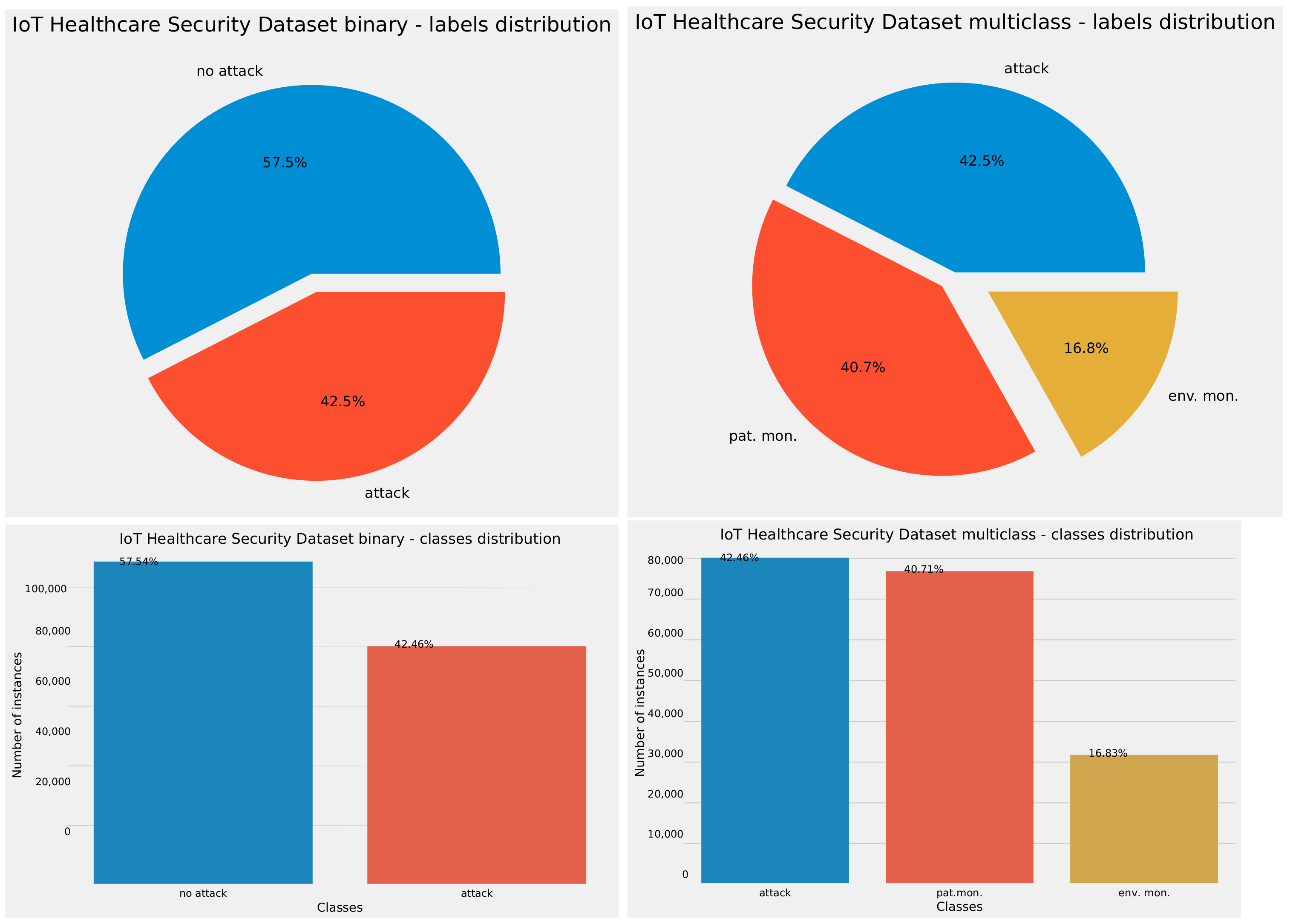

4.1. Datasets

- frame.time_delta,

- tcp.time_delta,

- tcp.flags.ack,

- tcp.flags.push,

- tcp.flags.reset,

- mqtt.hdrflags,

- mqtt.msgtype,

- mqtt.qos,

- mqtt.retain, and

- mqtt.ver.

- Class 0—No attack, environment monitoring,

- Class 1—No attack, patient monitoring, and

- Class 2—Attack.

4.2. Experimental Setup

- learning rate (), search limits: , continuous variable,

- , search limits: , continuous variable,

- subsample, search limits: , continuous variable,

- collsample_bytree, search limits: , continuous variable,

- max_depth, search limits: , integer variable and

- , search limits: , continuous variable.

4.3. Performance Metrics

5. Simulation Results, Comparative Analysis, Validation, and Interpretation

5.1. Experimental Findings and Comparative Analyssis

5.2. Statistical Validation

5.3. Best Models Results Interpretation

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wehde, M. Healthcare 4.0. IEEE Eng. Manag. Rev. 2019, 47, 24–28. [Google Scholar] [CrossRef]

- Hathaliya, J.J.; Tanwar, S. An exhaustive survey on security and privacy issues in Healthcare 4.0. Comput. Commun. 2020, 153, 311–335. [Google Scholar] [CrossRef]

- Hathaliya, J.J.; Tanwar, S.; Tyagi, S.; Kumar, N. Securing electronics healthcare records in healthcare 4.0: A biometric-based approach. Comput. Electr. Eng. 2019, 76, 398–410. [Google Scholar] [CrossRef]

- Amjad, A.; Kordel, P.; Fernandes, G. A Review on Innovation in Healthcare Sector (Telehealth) through Artificial Intelligence. Sustainability 2023, 15, 6655. [Google Scholar] [CrossRef]

- Krishnamoorthy, S.; Dua, A.; Gupta, S. Role of emerging technologies in future IoT-driven Healthcare 4.0 technologies: A survey, current challenges and future directions. J. Ambient. Intell. Humaniz. Comput. 2023, 14, 361–407. [Google Scholar] [CrossRef]

- Ali, M.H.; Jaber, M.M.; Abd, S.K.; Rehman, A.; Awan, M.J.; Damaševičius, R.; Bahaj, S.A. Threat Analysis and Distributed Denial of Service (DDoS) Attack Recognition in the Internet of Things (IoT). Electronics 2022, 11, 494. [Google Scholar] [CrossRef]

- Padmashree, A.; Krishnamoorthi, M. Decision Tree with Pearson Correlation-based Recursive Feature Elimination Model for Attack Detection in IoT Environment. Inf. Technol. Control 2022, 51, 771–785. [Google Scholar] [CrossRef]

- Rana, S.K.; Rana, S.K.; Nisar, K.; Ag Ibrahim, A.A.; Rana, A.K.; Goyal, N.; Chawla, P. Blockchain technology and Artificial Intelligence based decentralized access control model to enable secure interoperability for healthcare. Sustainability 2022, 14, 9471. [Google Scholar] [CrossRef]

- Guezzaz, A.; Azrour, M.; Benkirane, S.; Mohy-Eddine, M.; Attou, H.; Douiba, M. A lightweight hybrid intrusion detection framework using machine learning for edge-based IIoT security. Int. Arab. J. Inf. Technol. 2022, 19, 822–830. [Google Scholar] [CrossRef]

- Wen, Y.; Liu, L. Comparative Study on Low-Carbon Strategy and Government Subsidy Model of Pharmaceutical Supply Chain. Sustainability 2023, 15, 8345. [Google Scholar] [CrossRef]

- Ksibi, A.; Mhamdi, H.; Ayadi, M.; Almuqren, L.; Alqahtani, M.S.; Ansari, M.D.; Sharma, A.; Hedi, S. Secure and Fast Emergency Road Healthcare Service Based on Blockchain Technology for Smart Cities. Sustainability 2023, 15, 5748. [Google Scholar] [CrossRef]

- Panagiotou, D.K.; Dounis, A.I. An ANFIS-Fuzzy Tree-GA Model for a Hospital’s Electricity Purchasing Decision-Making Process Integrated with Virtual Cost Concept. Sustainability 2023, 15, 8419. [Google Scholar] [CrossRef]

- Joyce, T.; Herrmann, J.M. A review of no free lunch theorems, and their implications for metaheuristic optimisation. Nat.-Inspired Algorithms Appl. Optim. 2018, 744, 27–51. [Google Scholar]

- Venckauskas, A.; Stuikys, V.; Damasevicius, R.; Jusas, N. Modelling of Internet of Things units for estimating security-energy-performance relationships for quality of service and environment awareness. Secur. Commun. Netw. 2016, 9, 3324–3339. [Google Scholar] [CrossRef]

- Ahmad, W.; Rasool, A.; Javed, A.R.; Baker, T.; Jalil, Z. Cyber security in iot-based cloud computing: A comprehensive survey. Electronics 2022, 11, 16. [Google Scholar] [CrossRef]

- Thamilarasu, G.; Odesile, A.; Hoang, A. An intrusion detection system for internet of medical things. IEEE Access 2020, 8, 181560–181576. [Google Scholar] [CrossRef]

- Hussain, F.; Abbas, S.G.; Shah, G.A.; Pires, I.M.; Fayyaz, U.U.; Shahzad, F.; Garcia, N.M.; Zdravevski, E. A framework for malicious traffic detection in IoT healthcare environment. Sensors 2021, 21, 3025. [Google Scholar] [CrossRef]

- Hussain, F.; Hussain, R.; Hassan, S.A.; Hossain, E. Machine learning in IoT security: Current solutions and future challenges. IEEE Commun. Surv. Tutor. 2020, 22, 1686–1721. [Google Scholar] [CrossRef]

- Dadkhah, S.; Mahdikhani, H.; Danso, P.K.; Zohourian, A.; Truong, K.A.; Ghorbani, A.A. Towards the development of a realistic multidimensional IoT profiling dataset. In Proceedings of the 2022 19th Annual International Conference on Privacy, Security & Trust (PST), Fredericton, NB, Canada, 22–24 August 2022; IEEE: New York City, NY, USA, 2022; pp. 1–11. [Google Scholar]

- Chen, T.; He, T.; Benesty, M.; Khotilovich, V.; Tang, Y.; Cho, H.; Chen, K.; Mitchell, R.; Cano, I.; Zhou, T.; et al. Xgboost: Extreme gradient boosting. R Package Version 0.4-2 2015, 1, 1–4. [Google Scholar]

- Chen, T.; Guestrin, C. Xgboost: A scalable tree boosting system. In Proceedings of the 22nd ACM Sigkdd International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794. [Google Scholar]

- Gupta, A.; Gusain, K.; Popli, B. Verifying the value and veracity of extreme gradient boosted decision trees on a variety of datasets. In Proceedings of the 2016 11th International Conference on Industrial and Information Systems (ICIIS), Roorkee, India, 3–4 December 2016; IEEE: New York City, NY, USA, 2016; pp. 457–462. [Google Scholar]

- Wu, Y.; Zhang, Q.; Hu, Y.; Sun-Woo, K.; Zhang, X.; Zhu, H.; Li, S. Novel binary logistic regression model based on feature transformation of XGBoost for type 2 Diabetes Mellitus prediction in healthcare systems. Future Gener. Comput. Syst. 2022, 129, 1–12. [Google Scholar] [CrossRef]

- Stegherr, H.; Heider, M.; Hähner, J. Classifying Metaheuristics: Towards a unified multi-level classification system. Nat. Comput. 2020, 21, 155–171. [Google Scholar] [CrossRef]

- Emmerich, M.; Shir, O.M.; Wang, H. Evolution strategies. In Handbook of Heuristics; Springer: Berlin/Heidelberg, Germany, 2018; pp. 89–119. [Google Scholar]

- Fausto, F.; Reyna-Orta, A.; Cuevas, E.; Andrade, Á.G.; Perez-Cisneros, M. From ants to whales: Metaheuristics for all tastes. Artif. Intell. Rev. 2020, 53, 753–810. [Google Scholar] [CrossRef]

- Khurma, R.A.; Aljarah, I.; Sharieh, A.; Elaziz, M.A.; Damaševičius, R.; Krilavičius, T. A Review of the Modification Strategies of the Nature Inspired Algorithms for Feature Selection Problem. Mathematics 2022, 10, 464. [Google Scholar] [CrossRef]

- Beni, G. Swarm intelligence. In Complex Social and Behavioral Systems: Game Theory and Agent-Based Models; Springer: New York, NY, USA, 2020; pp. 791–818. [Google Scholar]

- Abraham, A.; Guo, H.; Liu, H. Swarm intelligence: Foundations, perspectives and applications. In Swarm Intelligent Systems; Springer: Berlin/Heidelberg, Germany, 2006; pp. 3–25. [Google Scholar]

- Dorigo, M.; Birattari, M.; Stutzle, T. Ant colony optimization. IEEE Comput. Intell. Mag. 2006, 1, 28–39. [Google Scholar] [CrossRef]

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, Australia, 27 November–1 December 1995; IEEE: New York, NY, USA, 1995; Volume 4, pp. 1942–1948. [Google Scholar]

- Yang, X.S. A new metaheuristic bat-inspired algorithm. In Nature Inspired Cooperative Strategies for Optimization (NICSO 2010); Springer: Berlin/Heidelberg, Germany, 2010; pp. 65–74. [Google Scholar]

- Yang, X.S.; Gandomi, A.H. Bat algorithm: A novel approach for global engineering optimization. Eng. Comput. 2012, 29, 464–483. [Google Scholar] [CrossRef]

- Yang, X.S. Firefly algorithms for multimodal optimization. In Proceedings of the International Symposium on Stochastic Algorithms, Sapporo, Japan, 26–28 October 2009; Springer: Berlin/Heidelberg, Germany, 2009; pp. 169–178. [Google Scholar]

- Mirjalili, S. SCA: A sine cosine algorithm for solving optimization problems. Knowl.-Based Syst. 2016, 96, 120–133. [Google Scholar] [CrossRef]

- Abualigah, L.; Diabat, A.; Mirjalili, S.; Abd Elaziz, M.; Gandomi, A.H. The arithmetic optimization algorithm. Comput. Methods Appl. Mech. Eng. 2021, 376, 113609. [Google Scholar] [CrossRef]

- Wolpert, D.H.; Macready, W.G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82. [Google Scholar] [CrossRef]

- Zivkovic, M.; Bacanin, N.; Venkatachalam, K.; Nayyar, A.; Djordjevic, A.; Strumberger, I.; Al-Turjman, F. COVID-19 cases prediction by using hybrid machine learning and beetle antennae search approach. Sustain. Cities Soc. 2021, 66, 102669. [Google Scholar] [CrossRef]

- Bacanin, N.; Jovanovic, L.; Zivkovic, M.; Kandasamy, V.; Antonijevic, M.; Deveci, M.; Strumberger, I. Multivariate energy forecasting via metaheuristic tuned long-short term memory and gated recurrent unit neural networks. Inf. Sci. 2023, 642, 119122. [Google Scholar] [CrossRef]

- Al-Qaness, M.A.; Ewees, A.A.; Abualigah, L.; AlRassas, A.M.; Thanh, H.V.; Abd Elaziz, M. Evaluating the applications of dendritic neuron model with metaheuristic optimization algorithms for crude-oil-production forecasting. Entropy 2022, 24, 1674. [Google Scholar] [CrossRef] [PubMed]

- Lundberg, S.M.; Lee, S.I. A unified approach to interpreting model predictions. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Volume 30. [Google Scholar]

- Molnar, C. Interpretable Machine Learning; Lulu. Com: Morrisville, NC, USA, 2020. [Google Scholar]

- Lundberg, S.M.; Erion, G.; Chen, H.; DeGrave, A.; Prutkin, J.M.; Nair, B.; Katz, R.; Himmelfarb, J.; Bansal, N.; Lee, S.I. From local explanations to global understanding with explainable AI for trees. Nat. Mach. Intell. 2020, 2, 56–67. [Google Scholar] [CrossRef] [PubMed]

- Stojić, A.; Stanić, N.; Vuković, G.; Stanišić, S.; Perišić, M.; Šoštarić, A.; Lazić, L. Explainable extreme gradient boosting tree-based prediction of toluene, ethylbenzene and xylene wet deposition. Sci. Total Environ. 2019, 653, 140–147. [Google Scholar] [CrossRef] [PubMed]

- Bezdan, T.; Cvetnic, D.; Gajic, L.; Zivkovic, M.; Strumberger, I.; Bacanin, N. Feature Selection by Firefly Algorithm with Improved Initialization Strategy. In Proceedings of the 7th Conference on the Engineering of Computer Based Systems, Novi Sad, Serbia, 26–27 May 2021; pp. 1–8. [Google Scholar]

- Bacanin, N.; Zivkovic, M.; Bezdan, T.; Venkatachalam, K.; Abouhawwash, M. Modified firefly algorithm for workflow scheduling in cloud-edge environment. Neural Comput. Appl. 2022, 34, 9043–9068. [Google Scholar] [CrossRef]

- Liang, J.J.; Qu, B.; Gong, D.; Yue, C. Problem Definitions and Evaluation Criteria for the CEC 2019 Special Session on Multimodal Multiobjective Optimization; Computational Intelligence Laboratory, Zhengzhou University: Zhengzhou, China, 2019. [Google Scholar]

- Liang, J.; Suganthan, P.; Qu, B.; Gong, D.; Yue, C. Problem Definitions and Evaluation Criteria for the CEC 2020 Special Session on Multimodal Multiobjective Optimization; Computational Intelligence Laboratory, Zhengzhou University: Zhengzhou, China, 2019. [Google Scholar] [CrossRef]

- Hussain, F. IoT Healthcare Security Dataset. 2023. Available online: https://www.kaggle.com/datasets/faisalmalik/iot-healthcare-security-dataset (accessed on 14 July 2023).

- Vaccari, I.; Chiola, G.; Aiello, M.; Mongelli, M.; Cambiaso, E. MQTTset, a new dataset for machine learning techniques on MQTT. Sensors 2020, 20, 6578. [Google Scholar] [CrossRef] [PubMed]

- Goldberg, D.E.; Richardson, J. Genetic algorithms with sharing for multimodal function optimization. In Proceedings of the Genetic Algorithms and Their Applications: Proceedings of the Second International Conference on Genetic Algorithms, Hillsdale, NJ, USA, 28–31 July 1987; Lawrence Erlbaum: Hillsdale, NJ, USA, 1987; Volume 4149. [Google Scholar]

- Mirjalili, S. Genetic algorithm. In Evolutionary Algorithms and Neural Networks; Springer: Berlin/Heidelberg, Germany, 2019; pp. 43–55. [Google Scholar]

- Karaboga, D.; Basturk, B. On the performance of artificial bee colony (ABC) algorithm. Appl. Soft Comput. 2008, 8, 687–697. [Google Scholar] [CrossRef]

- Khishe, M.; Mosavi, M.R. Chimp optimization algorithm. Expert Syst. Appl. 2020, 149, 113338. [Google Scholar] [CrossRef]

- Gurrola-Ramos, J.; Hernàndez-Aguirre, A.; Dalmau-Cedeño, O. COLSHADE for real-world single-objective constrained optimization problems. In Proceedings of the 2020 IEEE Congress on Evolutionary Computation (CEC), Glasgow, UK, 19–24 July 2020; IEEE: New York, NY, USA, 2020; pp. 1–8. [Google Scholar]

- Zhao, J.; Zhang, B.; Guo, X.; Qi, L.; Li, Z. Self-Adapting Spherical Search Algorithm with Differential Evolution for Global Optimization. Mathematics 2022, 10, 4519. [Google Scholar] [CrossRef]

- Hossin, M.; Sulaiman, M.N. A review on evaluation metrics for data classification evaluations. Int. J. Data Min. Knowl. Manag. Process. 2015, 5, 1–11. [Google Scholar]

- Arabameri, A.; Chen, W.; Lombardo, L.; Blaschke, T.; Tien Bui, D. Hybrid computational intelligence models for improvement gully erosion assessment. Remote Sens. 2020, 12, 140. [Google Scholar] [CrossRef]

- Pepe, M.S.; Janes, H.; Longton, G.M.; Leisenring, W.; Newcomb, P. Limitations of the Odds Ratio in Gauging the Performance of a Diagnostic or Prognostic Marker. Am. J. Epidemiol. 2004, 159, 882–890. [Google Scholar] [CrossRef] [PubMed]

- Van Den Eeckhaut, M.; Vanwalleghem, T.; Poesen, J.; Govers, G.; Verstraeten, G.; Vandekerckhove, L. Prediction of landslide susceptibility using rare events logistic regression: A case-study in the Flemish Ardennes (Belgium). Geomorphology 2006, 76, 392–410. [Google Scholar] [CrossRef]

- Warrens, M.J. Five ways to look at Cohen’s kappa. J. Psychol. Psychother. 2015, 5, 4. [Google Scholar] [CrossRef]

- LaTorre, A.; Molina, D.; Osaba, E.; Poyatos, J.; Del Ser, J.; Herrera, F. A prescription of methodological guidelines for comparing bio-inspired optimization algorithms. Swarm Evol. Comput. 2021, 67, 100973. [Google Scholar] [CrossRef]

- Shapiro, S.S.; Francia, R. An approximate analysis of variance test for normality. J. Am. Stat. Assoc. 1972, 67, 215–216. [Google Scholar] [CrossRef]

- Wilcoxon, F. Individual comparisons by ranking methods. In Breakthroughs in Statistics; Springer: Berlin/Heidelberg, Germany, 1992; pp. 196–202. [Google Scholar]

| Classification Error (Objective) | Cohen’s Kappa | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Method | Best | Worst | Mean | Median | Std | Var | Best | Worst | Mean | Median | Std | Var |

| XG-MFA | 0.003003 | 0.003091 | 0.003036 | 0.003030 | 0.993852 | 0.993672 | 0.993785 | 0.993798 | ||||

| XG-FA | 0.003038 | 0.003303 | 0.003153 | 0.003153 | 0.993780 | 0.993236 | 0.993545 | 0.993544 | ||||

| XG-GA | 0.003038 | 0.003197 | 0.003096 | 0.003091 | 0.993781 | 0.993454 | 0.993662 | 0.993671 | ||||

| XG-PSO | 0.003074 | 0.003215 | 0.003125 | 0.003109 | 0.993708 | 0.993418 | 0.993604 | 0.993635 | ||||

| XG-ABC | 0.003109 | 0.003374 | 0.003213 | 0.003171 | 0.993636 | 0.993092 | 0.993422 | 0.993508 | ||||

| XG-SSA | 0.003038 | 0.003233 | 0.003138 | 0.003144 | 0.993781 | 0.993381 | 0.993576 | 0.993563 | ||||

| XG-ChOA | 0.003038 | 0.003180 | 0.003105 | 0.003100 | 0.993780 | 0.993490 | 0.993644 | 0.993653 | ||||

| XG-COLSHADE | 0.003021 | 0.003215 | 0.003102 | 0.003091 | 0.993817 | 0.993419 | 0.993649 | 0.993671 | ||||

| XG-SASS | 0.003021 | 0.003162 | 0.003105 | 0.003118 | 0.993815 | 0.993526 | 0.993644 | 0.993616 | ||||

| Method | Metric | 0 | 1 | Macro Avg | Weighted Avg |

|---|---|---|---|---|---|

| XG-MFA | precision | 0.996567 | 0.997582 | 0.997074 | 0.996998 |

| recall | 0.998219 | 0.995341 | 0.996780 | 0.996997 | |

| f1-score | 0.997392 | 0.996460 | 0.996926 | 0.996996 | |

| XG-FA | precision | 0.996628 | 0.997416 | 0.997022 | 0.996962 |

| recall | 0.998096 | 0.995424 | 0.996760 | 0.996962 | |

| f1-score | 0.997362 | 0.996419 | 0.996890 | 0.996961 | |

| XG-GA | precision | 0.996780 | 0.997208 | 0.996994 | 0.996962 |

| recall | 0.997943 | 0.995632 | 0.996787 | 0.996962 | |

| f1-score | 0.997361 | 0.996420 | 0.996890 | 0.996961 | |

| XG-PSO | precision | 0.996536 | 0.997457 | 0.996997 | 0.996927 |

| recall | 0.998127 | 0.995299 | 0.996713 | 0.996926 | |

| f1-score | 0.997331 | 0.996377 | 0.996854 | 0.996927 | |

| XG-ABC | precision | 0.996506 | 0.997415 | 0.996960 | 0.996892 |

| recall | 0.998096 | 0.995258 | 0.996677 | 0.996891 | |

| f1-score | 0.997300 | 0.996335 | 0.996818 | 0.996891 | |

| XG-SSA | precision | 0.996841 | 0.997125 | 0.996983 | 0.996962 |

| recall | 0.997882 | 0.995715 | 0.996798 | 0.996962 | |

| f1-score | 0.997361 | 0.996420 | 0.996890 | 0.996961 | |

| XG-ChOA | precision | 0.996293 | 0.997872 | 0.997083 | 0.996964 |

| recall | 0.998434 | 0.994966 | 0.996700 | 0.996962 | |

| f1-score | 0.997362 | 0.996417 | 0.996890 | 0.996961 | |

| XG-COLSHADE | precision | 0.996719 | 0.997333 | 0.997026 | 0.996980 |

| recall | 0.998035 | 0.995549 | 0.996792 | 0.996979 | |

| f1-score | 0.997377 | 0.996440 | 0.996908 | 0.996979 | |

| XG-SASS | precision | 0.996020 | 0.998288 | 0.997154 | 0.996983 |

| recall | 0.998741 | 0.994592 | 0.996667 | 0.996979 | |

| f1-score | 0.997379 | 0.996437 | 0.996908 | 0.996979 | |

| support | 32,571 | 24,038 | 56,609 | 56,609 |

| Method | Learning Rate | Min Child Weight | Subsample | Colsample by Tree | Max Depth | Gamma |

|---|---|---|---|---|---|---|

| XG-MFA | 0.826864 | 1.781749 | 0.801824 | 0.663691 | 10 | 0.120070 |

| XG-FA | 0.900000 | 1.128921 | 0.793675 | 0.871647 | 10 | 0.800000 |

| XG-GA | 0.788252 | 1.000000 | 1.000000 | 1.000000 | 10 | 0.000000 |

| XG-PSO | 0.602643 | 1.000000 | 0.680449 | 1.000000 | 10 | 0.800000 |

| XG-ABC | 0.900000 | 1.000000 | 0.673743 | 1.000000 | 7 | 0.323973 |

| XG-SSA | 0.748588 | 1.216229 | 1.000000 | 1.000000 | 10 | 0.023409 |

| XG-ChOA | 0.774825 | 1.000000 | 1.000000 | 1.000000 | 10 | 0.567155 |

| XG-COLSHADE | 0.900000 | 2.324410 | 0.826696 | 0.781224 | 10 | 0.259289 |

| XG-SASS | 0.900000 | 1.000000 | 0.980985 | 0.632832 | 8 | 0.149104 |

| Cohen’s Kappa (Objective) | Classification Error | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Method | Best | Worst | Mean | Median | Std | Var | Best | Worst | Mean | Median | Std | Var |

| XG-MFA | 0.993817 | 0.993707 | 0.993780 | 0.993780 | 0.003021 | 0.003074 | 0.003038 | 0.003038 | ||||

| XG-FA | 0.993746 | 0.993345 | 0.993577 | 0.993581 | 0.003056 | 0.003250 | 0.003138 | 0.003136 | ||||

| XG-GA | 0.993707 | 0.993454 | 0.993621 | 0.993635 | 0.003074 | 0.003197 | 0.003116 | 0.003109 | ||||

| XG-PSO | 0.993744 | 0.993526 | 0.993644 | 0.993689 | 0.003056 | 0.003162 | 0.003105 | 0.003083 | ||||

| XG-ABC | 0.993707 | 0.993200 | 0.993413 | 0.993381 | 0.003074 | 0.003321 | 0.003217 | 0.003233 | ||||

| XG-SSA | 0.993745 | 0.993562 | 0.993690 | 0.993707 | 0.003056 | 0.003144 | 0.003083 | 0.003074 | ||||

| XG-ChOA | 0.993744 | 0.993164 | 0.993585 | 0.993617 | 0.003056 | 0.003339 | 0.003133 | 0.003118 | ||||

| XG-COLSHADE | 0.993780 | 0.993164 | 0.993545 | 0.993581 | 0.003038 | 0.003339 | 0.003153 | 0.003136 | ||||

| XG-SASS | 0.993781 | 0.993345 | 0.993604 | 0.993617 | 0.003038 | 0.003250 | 0.003125 | 0.003118 | ||||

| Method | Metric | 0 | 1 | Macro Avg | Weighted Avg |

|---|---|---|---|---|---|

| XG-MFA | precision | 0.996811 | 0.997208 | 0.997010 | 0.996980 |

| recall | 0.997943 | 0.995674 | 0.996808 | 0.996979 | |

| f1-score | 0.997376 | 0.996440 | 0.996908 | 0.996979 | |

| XG-FA | precision | 0.996780 | 0.997167 | 0.996973 | 0.996944 |

| recall | 0.997912 | 0.995632 | 0.996772 | 0.996944 | |

| f1-score | 0.997346 | 0.996399 | 0.996872 | 0.996944 | |

| XG-GA | precision | 0.996080 | 0.998080 | 0.997080 | 0.996929 |

| recall | 0.998588 | 0.994675 | 0.996631 | 0.996926 | |

| f1-score | 0.997332 | 0.996376 | 0.996853 | 0.996926 | |

| XG-PSO | precision | 0.996475 | 0.997581 | 0.997028 | 0.996945 |

| recall | 0.998219 | 0.995216 | 0.996718 | 0.996944 | |

| f1-score | 0.997347 | 0.996397 | 0.996872 | 0.996943 | |

| XG-ABC | precision | 0.996262 | 0.997830 | 0.997046 | 0.996928 |

| recall | 0.998403 | 0.994925 | 0.996664 | 0.996926 | |

| f1-score | 0.997332 | 0.996375 | 0.996854 | 0.996926 | |

| XG-SSA | precision | 0.996780 | 0.997167 | 0.996973 | 0.996944 |

| recall | 0.997912 | 0.995632 | 0.996772 | 0.996944 | |

| f1-score | 0.997346 | 0.996399 | 0.996872 | 0.996944 | |

| XG-ChOA | precision | 0.996597 | 0.997415 | 0.997006 | 0.996945 |

| recall | 0.998096 | 0.995382 | 0.996739 | 0.996944 | |

| f1-score | 0.997346 | 0.996398 | 0.996872 | 0.996944 | |

| XG-COLSHADE | precision | 0.996323 | 0.997831 | 0.997077 | 0.996963 |

| recall | 0.998403 | 0.995008 | 0.996706 | 0.996962 | |

| f1-score | 0.997362 | 0.996417 | 0.996890 | 0.996961 | |

| XG-SASS | precision | 0.996719 | 0.997291 | 0.997005 | 0.996962 |

| recall | 0.998004 | 0.995549 | 0.996777 | 0.996962 | |

| f1-score | 0.997361 | 0.996419 | 0.996890 | 0.996961 | |

| support | 32,571 | 24,038 | 56,609 | 56,609 |

| Method | Learning Rate | Min Child Weight | Subsample | Colsample by Tree | Max Depth | Gamma |

|---|---|---|---|---|---|---|

| XG-MFA | 0.900000 | 1.000000 | 0.993956 | 0.790192 | 10 | 0.166214 |

| XG-FA | 0.900000 | 1.000000 | 1.000000 | 1.000000 | 10 | 0.000000 |

| XG-GA | 0.900000 | 1.929015 | 1.000000 | 0.821090 | 10 | 0.800000 |

| XG-PSO | 0.900000 | 1.000000 | 0.979482 | 1.000000 | 10 | 0.061248 |

| XG-ABC | 0.625776 | 1.000000 | 0.627028 | 0.970949 | 10 | 0.371569 |

| XG-SSA | 0.900000 | 1.000000 | 1.000000 | 1.000000 | 10 | 0.000000 |

| XG-ChOA | 0.876269 | 1.576144 | 0.660126 | 0.766469 | 10 | 0.800000 |

| XG-COLSHADE | 0.874638 | 1.584114 | 1.000000 | 1.000000 | 10 | 0.000000 |

| XG-SASS | 0.900000 | 1.298750 | 0.885388 | 1.000000 | 10 | 0.358750 |

| Classification Error (Objective) | Cohen’s Kappa | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Method | Best | Worst | Mean | Median | Std | Var | Best | Worst | Mean | Median | Std | Var |

| XG-MFA | 0.008232 | 0.008462 | 0.008322 | 0.008320 | 0.986843 | 0.986475 | 0.986699 | |||||

| XG-FA | 0.008320 | 0.008797 | 0.008473 | 0.008409 | 0.986704 | 0.985939 | 0.986458 | 0.986560 | ||||

| XG-GA | 0.008356 | 0.008603 | 0.008446 | 0.008435 | 0.986645 | 0.986251 | 0.986499 | 0.986516 | ||||

| XG-PSO | 0.008409 | 0.008727 | 0.008499 | 0.008444 | 0.986559 | 0.986052 | 0.986415 | 0.986502 | ||||

| XG-ABC | 0.008426 | 0.008992 | 0.008687 | 0.008691 | 0.986532 | 0.985628 | 0.986114 | 0.986107 | ||||

| XG-SSA | 0.008356 | 0.008550 | 0.008446 | 0.008426 | 0.986643 | 0.986334 | 0.986500 | 0.986532 | ||||

| XG-ChOA | 0.008373 | 0.008568 | 0.008464 | 0.008462 | 0.986615 | 0.986305 | 0.986471 | 0.986474 | ||||

| XG-COLSHADE | 0.008303 | 0.008479 | 0.008375 | 0.008364 | 0.986729 | 0.986445 | 0.986612 | 0.986629 | ||||

| XG-SASS | 0.008391 | 0.008850 | 0.008515 | 0.008506 | 0.986588 | 0.985855 | 0.986391 | 0.986405 | ||||

| Method | Metric | 0 | 1 | 2 | Macro Avg | Weighted Avg |

|---|---|---|---|---|---|---|

| XG-MFA | precision | 0.976150 | 0.992045 | 0.997705 | 0.988633 | 0.991773 |

| recall | 0.975126 | 0.995747 | 0.994550 | 0.988474 | 0.991768 | |

| f1-score | 0.975638 | 0.993892 | 0.996125 | 0.988552 | 0.991768 | |

| XG-FA | precision | 0.974445 | 0.992598 | 0.997663 | 0.988235 | 0.991693 |

| recall | 0.976490 | 0.995140 | 0.994384 | 0.988671 | 0.991680 | |

| f1-score | 0.975467 | 0.993867 | 0.996021 | 0.988451 | 0.991686 | |

| XG-GA | precision | 0.975531 | 0.992129 | 0.997580 | 0.988413 | 0.991650 |

| recall | 0.974916 | 0.995617 | 0.994467 | 0.988333 | 0.991644 | |

| f1-score | 0.975223 | 0.993870 | 0.996021 | 0.988371 | 0.991645 | |

| XG-PSO | precision | 0.975925 | 0.992340 | 0.997082 | 0.988449 | 0.991591 |

| recall | 0.974286 | 0.995140 | 0.995050 | 0.988158 | 0.991591 | |

| f1-score | 0.975105 | 0.993738 | 0.996065 | 0.988303 | 0.991590 | |

| XG-ABC | precision | 0.975525 | 0.991957 | 0.997580 | 0.988354 | 0.991579 |

| recall | 0.974706 | 0.995487 | 0.994509 | 0.988234 | 0.991574 | |

| f1-score | 0.975115 | 0.993719 | 0.996042 | 0.988292 | 0.991574 | |

| XG-SSA | precision | 0.976835 | 0.991917 | 0.997247 | 0.988666 | 0.991642 |

| recall | 0.973657 | 0.995834 | 0.994758 | 0.988083 | 0.991644 | |

| f1-score | 0.975243 | 0.993871 | 0.996001 | 0.988372 | 0.991640 | |

| XG-ChOA | precision | 0.976331 | 0.992086 | 0.997248 | 0.988555 | 0.991626 |

| recall | 0.974076 | 0.995573 | 0.994800 | 0.988150 | 0.991627 | |

| f1-score | 0.975202 | 0.993827 | 0.996022 | 0.988350 | 0.991624 | |

| XG-COLSHADE | precision | 0.976441 | 0.992257 | 0.997206 | 0.988635 | 0.991697 |

| recall | 0.974391 | 0.995530 | 0.994883 | 0.988268 | 0.991697 | |

| f1-score | 0.975415 | 0.993891 | 0.996043 | 0.988450 | 0.991695 | |

| XG-SASS | precision | 0.976028 | 0.992257 | 0.997164 | 0.988483 | 0.991609 |

| recall | 0.974286 | 0.995443 | 0.994600 | 0.988177 | 0.991609 | |

| f1-score | 0.975156 | 0.993847 | 0.995981 | 0.988328 | 0.991607 | |

| support | 9528 | 23,043 | 24,038 | 56,609 | 56,609 |

| Method | Learning Rate | Min Child Weight | Subsample | Colsample by Tree | Max Depth | Gamma |

|---|---|---|---|---|---|---|

| XG-MFA | 0.558224 | 1.390646 | 1.000000 | 0.754489 | 10 | 0.800000 |

| XG-FA | 0.900000 | 2.356392 | 1.000000 | 1.000000 | 7 | 0.800000 |

| XG-GA | 0.532608 | 1.671261 | 1.000000 | 1.000000 | 9 | 0.595526 |

| XG-PSO | 0.900000 | 1.209953 | 1.000000 | 0.965372 | 10 | 0.162178 |

| XG-ABC | 0.516726 | 1.000000 | 0.796465 | 0.842297 | 10 | 0.518501 |

| XG-SSA | 0.643381 | 1.000000 | 1.000000 | 0.864255 | 10 | 0.087284 |

| XG-ChOA | 0.650355 | 1.000000 | 1.000000 | 0.800611 | 10 | 0.000000 |

| XG-COLSHADE | 0.578265 | 1.981063 | 1.000000 | 0.761847 | 10 | 0.010795 |

| XG-SASS | 0.900000 | 2.028573 | 1.000000 | 1.000000 | 10 | 0.800000 |

| Cohen’s kappa (Objective) | Classification Error | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Method | Best | Worst | Mean | Median | Std | Var | Best | Worst | Mean | Median | Std | Var |

| XG-MFA | 0.986785 | 0.986417 | 0.986670 | 0.986670 | 0.008267 | 0.008497 | 0.008339 | 0.008338 | ||||

| XG-FA | 0.986646 | 0.986416 | 0.986585 | 0.986589 | 0.008356 | 0.008497 | 0.008393 | 0.008391 | ||||

| XG-GA | 0.986646 | 0.985630 | 0.986348 | 0.986446 | 0.008356 | 0.008992 | 0.008541 | 0.008479 | ||||

| XG-PSO | 0.986532 | 0.986136 | 0.986369 | 0.986405 | 0.008426 | 0.008674 | 0.008528 | 0.008506 | ||||

| XG-ABC | 0.986502 | 0.986052 | 0.986224 | 0.986207 | 0.008444 | 0.008727 | 0.008618 | 0.008629 | ||||

| XG-SSA | 0.986644 | 0.986078 | 0.986418 | 0.986460 | 0.008356 | 0.008709 | 0.008497 | 0.008470 | ||||

| XG-ChOA | 0.986589 | 0.986303 | 0.986457 | 0.986460 | 0.008391 | 0.008568 | 0.008473 | 0.008470 | ||||

| XG-COLSHADE | 0.986645 | 0.986277 | 0.986511 | 0.986546 | 0.008356 | 0.008585 | 0.008439 | 0.008417 | ||||

| XG-SASS | 0.986702 | 0.986563 | 0.986603 | 0.986589 | 0.008320 | 0.008409 | 0.008382 | 0.008391 | ||||

| Method | Metric | 0 | 1 | 2 | Macro Avg | Weighted Avg |

|---|---|---|---|---|---|---|

| XG-MFA | precision | 0.976446 | 0.991833 | 0.997704 | 0.988661 | 0.991736 |

| recall | 0.974601 | 0.996094 | 0.994342 | 0.988346 | 0.991733 | |

| f1-score | 0.975523 | 0.993959 | 0.996020 | 0.988501 | 0.991731 | |

| XG-FA | precision | 0.975333 | 0.992213 | 0.997580 | 0.988375 | 0.991651 |

| recall | 0.975231 | 0.995357 | 0.994592 | 0.988393 | 0.991644 | |

| f1-score | 0.975282 | 0.993782 | 0.996084 | 0.988383 | 0.991646 | |

| XG-GA | precision | 0.975142 | 0.992085 | 0.997788 | 0.988338 | 0.991655 |

| recall | 0.975756 | 0.995400 | 0.994342 | 0.988499 | 0.991644 | |

| f1-score | 0.975449 | 0.993740 | 0.996062 | 0.988417 | 0.991647 | |

| XG-PSO | precision | 0.975223 | 0.992467 | 0.997206 | 0.988300 | 0.991578 |

| recall | 0.974916 | 0.995226 | 0.994675 | 0.988272 | 0.991574 | |

| f1-score | 0.975070 | 0.993846 | 0.995939 | 0.988285 | 0.991574 | |

| XG-ABC | precision | 0.976521 | 0.991830 | 0.997247 | 0.988533 | 0.991554 |

| recall | 0.973447 | 0.995704 | 0.994758 | 0.987970 | 0.991556 | |

| f1-score | 0.974981 | 0.993763 | 0.996001 | 0.988247 | 0.991552 | |

| XG-SSA | precision | 0.976333 | 0.992086 | 0.997289 | 0.988570 | 0.991644 |

| recall | 0.974181 | 0.995617 | 0.994758 | 0.988186 | 0.991644 | |

| f1-score | 0.975256 | 0.993849 | 0.996022 | 0.988376 | 0.991642 | |

| XG-ChOA | precision | 0.975126 | 0.992086 | 0.997704 | 0.988305 | 0.991617 |

| recall | 0.975126 | 0.995573 | 0.994342 | 0.988347 | 0.991609 | |

| f1-score | 0.975126 | 0.993827 | 0.996020 | 0.988324 | 0.991611 | |

| XG-COLSHADE | precision | 0.975535 | 0.992086 | 0.997621 | 0.988414 | 0.991651 |

| recall | 0.975126 | 0.995573 | 0.994425 | 0.988375 | 0.991644 | |

| f1-score | 0.975331 | 0.993827 | 0.996021 | 0.988393 | 0.991645 | |

| XG-SASS | precision | 0.975436 | 0.992173 | 0.997663 | 0.988424 | 0.991687 |

| recall | 0.975231 | 0.995704 | 0.994342 | 0.988426 | 0.991680 | |

| f1-score | 0.975333 | 0.993935 | 0.996000 | 0.988423 | 0.991681 | |

| support | 9528 | 23,043 | 24,038 | 56,609 | 56,609 |

| Method | Learning Rate | Min Child Weight | Subsample | Colsample by Tree | Max Depth | Gamma |

|---|---|---|---|---|---|---|

| XG-MFA | 0.780152 | 1.000000 | 1.000000 | 1.000000 | 9 | 0.517589 |

| XG-FA | 0.900000 | 1.265482 | 1.000000 | 0.983060 | 9 | 0.222017 |

| XG-GA | 0.506328 | 1.000000 | 0.816145 | 0.945690 | 10 | 0.800000 |

| XG-PSO | 0.837106 | 1.000000 | 1.000000 | 1.000000 | 10 | 0.559077 |

| XG-ABC | 0.566515 | 2.084170 | 0.989990 | 1.000000 | 10 | 0.569104 |

| XG-SSA | 0.897294 | 1.103403 | 1.000000 | 1.000000 | 10 | 0.612487 |

| XG-ChOA | 0.900000 | 1.000000 | 1.000000 | 1.000000 | 9 | 0.800000 |

| XG-COLSHADE | 0.465601 | 3.091252 | 1.000000 | 1.000000 | 9 | 0.800000 |

| XG-SASS | 0.900000 | 1.107956 | 1.000000 | 1.000000 | 9 | 0.800000 |

| Problem | XG-MFA | XG-FA | XG-GA | XG-PSO | XG-ABC | XG-SSA | XG-ChOA | XG-COLSHADE | XG-SASS |

|---|---|---|---|---|---|---|---|---|---|

| Binary Error | 0.048 | 0.041 | 0.045 | 0.038 | 0.036 | 0.032 | 0.043 | 0.026 | 0.019 |

| Binary Kappa | 0.042 | 0.042 | 0.040 | 0.036 | 0.039 | 0.033 | 0.045 | 0.035 | 0.026 |

| Multiclass Error | 0.021 | 0.030 | 0.042 | 0.031 | 0.043 | 0.025 | 0.019 | 0.020 | 0.023 |

| Multiclass Kappa | 0.035 | 0.038 | 0.021 | 0.040 | 0.035 | 0.029 | 0.042 | 0.027 | 0.030 |

| Problem/p-Values | XG-FA | XG-GA | XG-PSO | XG-ABC | XG-SSA | XG-ChOA | XG-COLSHADE | XG-SASS |

|---|---|---|---|---|---|---|---|---|

| Binary Error | 0.016 | 0.037 | 0.028 | 0.008 | 0.025 | 0.031 | 0.034 | 0.031 |

| Binary Kappa | 0.025 | 0.036 | 0.026 | 0.027 | 0.031 | 0.029 | 0.040 | 0.039 |

| Multiclass Error | 0.034 | 0.035 | 0.030 | 0.019 | 0.035 | 0.035 | 0.041 | 0.027 |

| Multiclass Kappa | 0.040 | 0.026 | 0.028 | 0.024 | 0.042 | 0.036 | 0.038 | 0.033 |

| ML Classifier | Precision | Recall | Accuracy | F1-Score |

|---|---|---|---|---|

| NB | 79.6712 | 99.7052 | 52.1821 | 68.5093 |

| KNN | 99.6501 | 99.6865 | 99.4872 | 99.5868 |

| RF | 99.7069 | 99.7954 | 99.5121 | 99.6534 |

| AB | 99.5547 | 99.4457 | 99.5037 | 99.4748 |

| LogR | 95.2879 | 90.3515 | 99.5036 | 94.7071 |

| DT | 99.6945 | 99.7992 | 99.4788 | 99.6389 |

| XG-MFA | 99.6998 | 99.6997 | 99.6997 | 99.6996 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Savanović, N.; Toskovic, A.; Petrovic, A.; Zivkovic, M.; Damaševičius, R.; Jovanovic, L.; Bacanin, N.; Nikolic, B. Intrusion Detection in Healthcare 4.0 Internet of Things Systems via Metaheuristics Optimized Machine Learning. Sustainability 2023, 15, 12563. https://doi.org/10.3390/su151612563

Savanović N, Toskovic A, Petrovic A, Zivkovic M, Damaševičius R, Jovanovic L, Bacanin N, Nikolic B. Intrusion Detection in Healthcare 4.0 Internet of Things Systems via Metaheuristics Optimized Machine Learning. Sustainability. 2023; 15(16):12563. https://doi.org/10.3390/su151612563

Chicago/Turabian StyleSavanović, Nikola, Ana Toskovic, Aleksandar Petrovic, Miodrag Zivkovic, Robertas Damaševičius, Luka Jovanovic, Nebojsa Bacanin, and Bosko Nikolic. 2023. "Intrusion Detection in Healthcare 4.0 Internet of Things Systems via Metaheuristics Optimized Machine Learning" Sustainability 15, no. 16: 12563. https://doi.org/10.3390/su151612563

APA StyleSavanović, N., Toskovic, A., Petrovic, A., Zivkovic, M., Damaševičius, R., Jovanovic, L., Bacanin, N., & Nikolic, B. (2023). Intrusion Detection in Healthcare 4.0 Internet of Things Systems via Metaheuristics Optimized Machine Learning. Sustainability, 15(16), 12563. https://doi.org/10.3390/su151612563