Vehicle Detection and Classification via YOLOv8 and Deep Belief Network over Aerial Image Sequences

Abstract

1. Introduction

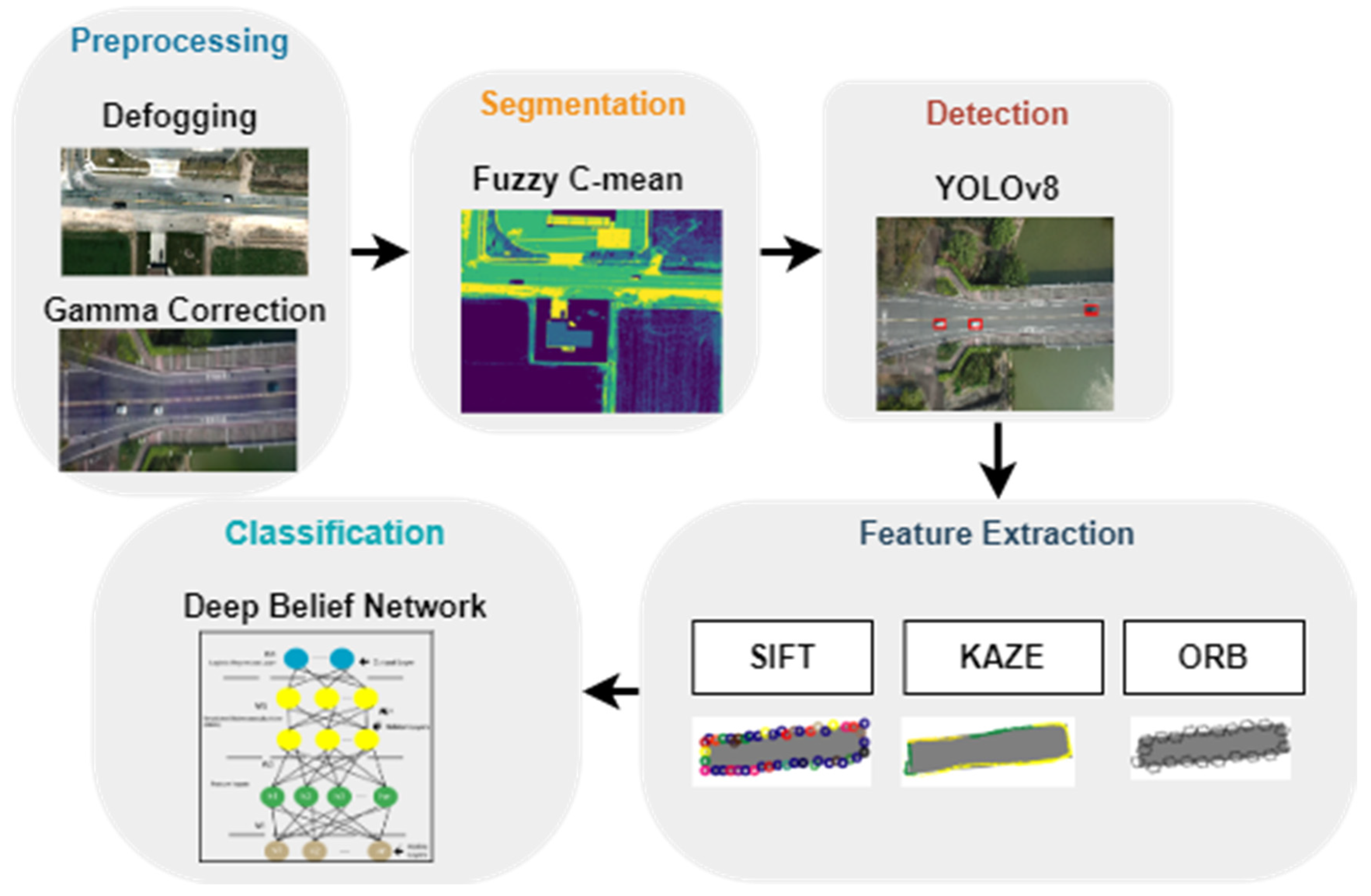

- Our model combines pre-processing with segmentation to prepare images before they are passed to the detection phase, which reduces model complexity.

- We used the newest YOLOv8 model, whose improved architecture detects objects of varying sizes effectively, to enhance vehicle detection in the segmented images.

- To classify vehicles, multiple features are extracted: SIFT, ORB, and KAZE. Combining scale- and rotation-invariant features, 2D features, and fast, robust local feature vectors is effective for classifying vehicles in aerial images.

- The proposed system uses a deep learning-based Deep Belief Network (DBN) classifier to achieve higher classification accuracy.

2. Related Work

3. Proposed System Methodology

3.1. Images Pre-Processing

3.2. Fuzzy C-Mean Segmentation
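Fuzzy C-means gives each pixel a degree of membership in every cluster instead of a single hard label. The following is a minimal pure-Python sketch of the standard membership and center updates on 1-D grayscale intensities. The function names, the even-spread initialisation, the fuzzifier m = 2, and the fixed iteration count are illustrative assumptions, not settings taken from the paper.

```python
def _memberships(values, centers, m, eps):
    """Standard FCM membership: u[i][k] is proportional to d_ik^(-2/(m-1))."""
    power = -2.0 / (m - 1.0)
    table = []
    for x in values:
        inv = [(abs(x - c) + eps) ** power for c in centers]
        total = sum(inv)
        table.append([v / total for v in inv])  # each row sums to 1
    return table


def fuzzy_c_means(values, n_clusters=2, m=2.0, n_iter=50, eps=1e-9):
    """Cluster 1-D intensities; returns (centers, membership table)."""
    lo, hi = min(values), max(values)
    # Assumption: deterministic initial centers spread evenly over the range.
    centers = [lo + (hi - lo) * (k + 0.5) / n_clusters for k in range(n_clusters)]
    for _ in range(n_iter):
        u = _memberships(values, centers, m, eps)
        # Each center is the mean of the data weighted by u^m.
        centers = [
            sum((row[k] ** m) * x for row, x in zip(u, values))
            / sum(row[k] ** m for row in u)
            for k in range(n_clusters)
        ]
    return centers, _memberships(values, centers, m, eps)


# Toy example: three dark pixels and three bright pixels.
pixels = [10, 12, 11, 200, 205, 198]
centers, u = fuzzy_c_means(pixels)
labels = [row.index(max(row)) for row in u]  # harden memberships into labels
```

For a real image the same updates would run over all pixel values (or color vectors), and the hardened labels would be reshaped back to the image grid.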

3.3. Vehicle Detection via YOLOv8

3.4. Feature Extraction

3.4.1. SIFT Features

3.4.2. KAZE Features

3.4.3. ORB Features

3.5. Classification via DBN

| Algorithm 1: Classification via DBN |
|---|
| Input: I = {i1, i2, …, in}: image frames |
| Output: C: the classification |
| D ← []: vehicle detections |
| F ← []: feature vector |
| Method: |
| video = VideoReader('videopath') |
| img_frame = read(video) |
| for k = 1 to size(img_frame) |
| resize_img = imresize(img_frame_k, 768 × 768) |
| seg_img = FCM(resize_img) |
| D ← YOLOv8(seg_img) |
| for s = 1 to size(D) |
| F ← SIFT(D_s) |
| F ← KAZE(D_s) |
| F ← ORB(D_s) |
| veh-class = DBN(F) |
| end for |
| return veh-class |
| return img_frame |
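The control flow of Algorithm 1 can be sketched in Python as follows. Each stage is passed in as a callable, so the sketch makes no claim about how resizing, FCM, YOLOv8, the feature extractors, or the DBN are implemented. The function and parameter names are hypothetical. Concatenating the SIFT, KAZE, and ORB outputs into one vector is also an assumption, since the algorithm only shows three successive assignments to F.

```python
from typing import Callable, List, Sequence

Frame = List[List[float]]   # hypothetical stand-in for an image array
Features = List[float]


def classify_frames(
    frames: Sequence[Frame],
    resize: Callable[[Frame], Frame],
    segment: Callable[[Frame], Frame],
    detect: Callable[[Frame], List[Frame]],
    extractors: Sequence[Callable[[Frame], Features]],
    classify: Callable[[Features], str],
) -> List[List[str]]:
    """Return, for every frame, the predicted class of each detection."""
    results = []
    for frame in frames:
        resized = resize(frame)         # imresize to 768 x 768 in Algorithm 1
        segmented = segment(resized)    # FCM segmentation
        detections = detect(segmented)  # D <- YOLOv8(seg_img)
        labels = []
        for crop in detections:
            fused: Features = []
            for extract in extractors:  # e.g. SIFT, KAZE, ORB
                fused.extend(extract(crop))  # assumption: concatenate features
            labels.append(classify(fused))   # veh-class = DBN(F)
        results.append(labels)
    return results


# Usage with trivial stand-in stages, only to show the call shape.
demo = classify_frames(
    frames=[[[1.0, 2.0], [3.0, 4.0]]],
    resize=lambda f: f,
    segment=lambda f: f,
    detect=lambda f: [f, f],
    extractors=[lambda c: [c[0][0]], lambda c: [c[1][1]]],
    classify=lambda v: "car" if sum(v) > 4 else "van",
)
```

Injecting the stages keeps the loop structure testable without any vision library installed.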

4. Experimental Setup and Evaluation

4.1. Dataset Description

4.1.1. VEDAI Dataset

4.1.2. VAID Dataset

4.2. Performance Metric and Experimental Outcome

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Rafique, A.A.; Al-Rasheed, A.; Ksibi, A.; Ayadi, M.; Jalal, A.; Alnowaiser, K.; Meshref, H.; Shorfuzzaman, M.; Gochoo, M.; Park, J. Smart Traffic Monitoring Through Pyramid Pooling Vehicle Detection and Filter-Based Tracking on Aerial Images. IEEE Access 2023, 11, 2993–3007.

- Qureshi, A.M.; Jalal, A. Vehicle Detection and Tracking Using Kalman Filter Over Aerial Images. In Proceedings of the 2023 4th International Conference on Advancements in Computational Sciences (ICACS), Lahore, Pakistan, 20–22 February 2023; pp. 1–6.

- Yang, M.; Wang, Y.; Wang, C.; Liang, Y.; Yang, S.; Wang, L.; Wang, S. Digital Twin-Driven Industrialization Development of Underwater Gliders. IEEE Trans. Ind. Inform. 2023, 19, 9680–9690.

- Liu, L.; Zhang, S.; Zhang, L.; Pan, G.; Yu, J. Multi-UUV Maneuvering Counter-Game for Dynamic Target Scenario Based on Fractional-Order Recurrent Neural Network. IEEE Trans. Cybern. 2022, 53, 4015–4028.

- Zhou, D.; Sheng, M.; Li, J.; Han, Z. Aerospace Integrated Networks Innovation for Empowering 6G: A Survey and Future Challenges. IEEE Commun. Surv. Tutor. 2023, 25, 975–1019.

- Jiang, S.; Zhao, C.; Zhu, Y.; Wang, C.; Du, Y. A Practical and Economical Ultra-Wideband Base Station Placement Approach for Indoor Autonomous Driving Systems. J. Adv. Transp. 2022, 2022, 3815306.

- Schreuder, M.; Hoogendoorn, S.P.; Van Zuylen, H.J.; Gorte, B.; Vosselman, G. Traffic Data Collection from Aerial Imagery. In Proceedings of the 2003 IEEE International Conference on Intelligent Transportation Systems, Shanghai, China, 12–15 October 2003; Volume 1, pp. 779–784.

- Ahmed, A.; Jalal, A.; Rafique, A.A. Salient Segmentation Based Object Detection and Recognition Using Hybrid Genetic Transform. In Proceedings of the 2019 International Conference on Applied and Engineering Mathematics (ICAEM), Taxila, Pakistan, 27–29 August 2019; pp. 203–208.

- Farooq, A.; Jalal, A.; Kamal, S. Dense RGB-D Map-Based Human Tracking and Activity Recognition Using Skin Joints Features and Self-Organizing Map. KSII Trans. Internet Inf. Syst. 2015, 9, 1856–1869.

- Hsieh, J.W.; Yu, S.H.; Chen, Y.S.; Hu, W.F. Automatic Traffic Surveillance System for Vehicle Tracking and Classification. IEEE Intell. Transp. Syst. Mag. 2006, 7, 175–187.

- Bai, X.; Huang, M.; Xu, M.; Liu, J. Reconfiguration Optimization of Relative Motion Between Elliptical Orbits Using Lyapunov-Floquet Transformation. IEEE Trans. Aerosp. Electron. Syst. 2023, 59, 923–936.

- Min, H.; Fang, Y.; Wu, X.; Lei, X.; Chen, S.; Teixeira, R.; Zhu, B.; Zhao, X.; Xu, Z. A Fault Diagnosis Framework for Autonomous Vehicles with Sensor Self-Diagnosis. Expert Syst. Appl. 2023, 224, 120002.

- Zhang, X.; Wen, S.; Yan, L.; Feng, J.; Xia, Y. A Hybrid-Convolution Spatial–Temporal Recurrent Network For Traffic Flow Prediction. Comput. J. 2022, 10, bxac171.

- Li, B.; Zhou, X.; Ning, Z.; Guan, X.; Yiu, K.F.C. Dynamic Event-Triggered Security Control for Networked Control Systems with Cyber-Attacks: A Model Predictive Control Approach. Inf. Sci. 2022, 612, 384–398.

- Xu, J.; Park, H.; Guo, K.; Zhang, X. The Alleviation of Perceptual Blindness during Driving in Urban Areas Guided by Saccades Recommendation. IEEE Trans. Intell. Transp. Syst. 2022, 23, 16386–16396.

- Xu, J.; Guo, K.; Zhang, X.; Sun, P.Z.H. Left Gaze Bias between LHT and RHT: A Recommendation Strategy to Mitigate Human Errors in Left- and Right-Hand Driving. IEEE Trans. Intell. Veh. 2023, 1, 1–12.

- Qureshi, A.M.; Butt, A.H.; Jalal, A. Highway Traffic Surveillance Over UAV Dataset via Blob Detection and Histogram of Gradient. In Proceedings of the 2023 4th International Conference on Advancements in Computational Sciences (ICACS), Lahore, Pakistan, 20–22 February 2023; pp. 1–5.

- Torres, D.L.; Turnes, J.N.; Vega, P.J.S.; Feitosa, R.Q.; Silva, D.E.; Marcato Junior, J.; Almeida, C. Deforestation Detection with Fully Convolutional Networks in the Amazon Forest from Landsat-8 and Sentinel-2 Images. Remote Sens. 2021, 13, 5084.

- Chen, P.C.; Chiang, Y.C.; Weng, P.Y. Imaging Using Unmanned Aerial Vehicles for Agriculture Land Use Classification. Agriculture 2020, 10, 416.

- Munawar, H.S.; Ullah, F.; Qayyum, S.; Khan, S.I.; Mojtahedi, M. UAVs in Disaster Management: Application of Integrated Aerial Imagery and Convolutional Neural Network for Flood Detection. Sustainability 2021, 13, 7547.

- Zhang, J.; Ye, G.; Tu, Z.; Qin, Y.; Qin, Q.; Zhang, J.; Liu, J. A Spatial Attentive and Temporal Dilated (SATD) GCN for Skeleton-Based Action Recognition. CAAI Trans. Intell. Technol. 2022, 7, 46–55.

- Ma, X.; Dong, Z.; Quan, W.; Dong, Y.; Tan, Y. Real-Time Assessment of Asphalt Pavement Moduli and Traffic Loads Using Monitoring Data from Built-in Sensors: Optimal Sensor Placement and Identification Algorithm. Mech. Syst. Signal Process. 2023, 187, 109930.

- Chen, J.; Wang, Q.; Peng, W.; Xu, H.; Li, X.; Xu, W. Disparity-Based Multiscale Fusion Network for Transportation Detection. IEEE Trans. Intell. Transp. Syst. 2022, 23, 18855–18863.

- Chen, J.; Xu, M.; Xu, W.; Li, D.; Peng, W.; Xu, H. A Flow Feedback Traffic Prediction Based on Visual Quantified Features. IEEE Trans. Intell. Transp. Syst. 2023, 24, 10067–10075.

- Zheng, Y.; Zhang, Y.; Qian, L.; Zhang, X.; Diao, S.; Liu, X.; Cao, J.; Huang, H. A Lightweight Ship Target Detection Model Based on Improved YOLOv5s Algorithm. PLoS ONE 2023, 18, e0283932.

- Arinaldi, A.; Pradana, J.A.; Gurusinga, A.A. Detection and Classification of Vehicles for Traffic Video Analytics. Procedia Comput. Sci. 2018, 144, 259–268.

- Aqel, S.; Hmimid, A.; Sabri, M.A.; Aarab, A. Road Traffic: Vehicle Detection and Classification. In Proceedings of the 2017 Intelligent Systems and Computer Vision (ISCV), Venice, Italy, 17–19 April 2017.

- Sarikan, S.S.; Ozbayoglu, A.M.; Zilci, O. Automated Vehicle Classification with Image Processing and Computational Intelligence. Procedia Comput. Sci. 2017, 114, 515–522.

- Tan, Y.; Xu, Y.; Das, S.; Chaudhry, A. Vehicle Detection and Classification in Aerial Imagery. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 86–90.

- Hamzenejadi, M.H.; Mohseni, H. Fine-Tuned YOLOv5 for Real-Time Vehicle Detection in UAV Imagery: Architectural Improvements and Performance Boost. Expert Syst. Appl. 2023, 231, 120845.

- Ozturk, M.; Cavus, E. Vehicle Detection in Aerial Imaginary Using a Miniature CNN Architecture. In Proceedings of the 2021 International Conference on INnovations in Intelligent SysTems and Applications (INISTA), Kocaeli, Turkey, 25–27 August 2021.

- Roopa Chandrika, R.; Gowri Ganesh, N.S.; Mummoorthy, A.; Karthick Raghunath, K.M. Vehicle Detection and Classification Using Image Processing. In Proceedings of the 2019 International Conference on Emerging Trends in Science and Engineering (ICESE), Hyderabad, India, 18–19 September 2019.

- Kumar, S.; Jain, A.; Rani, S.; Alshazly, H.; Idris, S.A.; Bourouis, S. Deep Neural Network Based Vehicle Detection and Classification of Aerial Images. Intell. Autom. Soft Comput. 2022, 34, 119–131.

- Zhang, X.; Zhu, X. Vehicle Detection in the Aerial Infrared Images via an Improved Yolov3 Network. In Proceedings of the 2019 IEEE 4th International Conference on Signal and Image Processing (ICSIP), Wuxi, China, 19–21 July 2019; pp. 372–376.

- Javid, A.; Nekoui, M.A. Adaptive Control of Time-Delayed Bilateral Teleoperation Systems with Uncertain Kinematic and Dynamics. Cogent Eng. 2018, 6, 1631604.

- Lu, S.; Ding, Y.; Liu, M.; Yin, Z.; Yin, L.; Zheng, W. Multiscale Feature Extraction and Fusion of Image and Text in VQA. Int. J. Comput. Intell. Syst. 2023, 16, 54.

- Cheng, B.; Zhu, D.; Zhao, S.; Chen, J. Situation-Aware IoT Service Coordination Using the Event-Driven SOA Paradigm. IEEE Trans. Netw. Serv. Manag. 2016, 13, 349–361.

- Shen, Y.; Ding, N.; Zheng, H.T.; Li, Y.; Yang, M. Modeling Relation Paths for Knowledge Graph Completion. IEEE Trans. Knowl. Data Eng. 2021, 33, 3607–3617.

- Zhou, X.; Zhang, L. SA-FPN: An Effective Feature Pyramid Network for Crowded Human Detection. Appl. Intell. 2022, 52, 12556–12568.

- Zhao, Z.; Xu, G.; Zhang, N.; Zhang, Q. Performance Analysis of the Hybrid Satellite-Terrestrial Relay Network with Opportunistic Scheduling over Generalized Fading Channels. IEEE Trans. Veh. Technol. 2022, 71, 2914–2924.

- Chen, J.; Wang, Q.; Cheng, H.H.; Peng, W.; Xu, W. A Review of Vision-Based Traffic Semantic Understanding in ITSs. IEEE Trans. Intell. Transp. Syst. 2022, 23, 19954–19979.

- Hou, X.; Zhang, L.; Su, Y.; Gao, G.; Liu, Y.; Na, Z.; Xu, Q.Z.; Ding, T.; Xiao, L.; Li, L.; et al. A Space Crawling Robotic Bio-Paw (SCRBP) Enabled by Triboelectric Sensors for Surface Identification. Nano Energy 2023, 105, 108013.

- Yu, J.; Shi, Z.; Dong, X.; Li, Q.; Lv, J.; Ren, Z. Impact Time Consensus Cooperative Guidance Against the Maneuvering Target: Theory and Experiment. IEEE Trans. Aerosp. Electron. Syst. 2023, 59, 4590–4603.

- Fang, Y.; Min, H.; Wu, X.; Wang, W.; Zhao, X.; Mao, G. On-Ramp Merging Strategies of Connected and Automated Vehicles Considering Communication Delay. IEEE Trans. Intell. Transp. Syst. 2022, 23, 15298–15312.

- Balasamy, K.; Shamia, D. Feature Extraction-Based Medical Image Watermarking Using Fuzzy-Based Median Filter. IETE J. Res. 2021, 69, 83–91.

- Somvanshi, S.S.; Kunwar, P.; Tomar, S.; Singh, M. Comparative Statistical Analysis of the Quality of Image Enhancement Techniques. Int. J. Image Data Fusion 2018, 9, 131–151.

- Zaman Khan, R. Hand Gesture Recognition: A Literature Review. Int. J. Artif. Intell. Appl. 2012, 3, 161–174.

- Liu, W.; Zhou, F.; Lu, T.; Duan, J.; Qiu, G. Image Defogging Quality Assessment: Real-World Database and Method. IEEE Trans. Image Process. 2021, 30, 176–190.

- Kong, X.; Chen, Q.; Gu, G.; Ren, K.; Qian, W.; Liu, Z. Particle Filter-Based Vehicle Tracking via HOG Features after Image Stabilisation in Intelligent Drive System. IET Intell. Transp. Syst. 2019, 13, 942–949.

- Xu, G.; Su, J.; Pan, H.; Zhang, Z.; Gong, H. An Image Enhancement Method Based on Gamma Correction. In Proceedings of the 2009 Second International Symposium on Computational Intelligence and Design, Washington, DC, USA, 12–14 December 2009; Volume 1, pp. 60–63.

- Veluchamy, M.; Subramani, B. Image Contrast and Color Enhancement Using Adaptive Gamma Correction and Histogram Equalization. Optik 2019, 183, 329–337.

- Liu, H.; Yuan, H.; Liu, Q.; Hou, J.; Zeng, H.; Kwong, S. A Hybrid Compression Framework for Color Attributes of Static 3D Point Clouds. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 1564–1577.

- Luo, J.; Wang, G.; Li, G.; Pesce, G. Transport Infrastructure Connectivity and Conflict Resolution: A Machine Learning Analysis. Neural Comput. Appl. 2022, 34, 6585–6601.

- Liu, Q.; Yuan, H.; Hamzaoui, R.; Su, H.; Hou, J.; Yang, H. Reduced Reference Perceptual Quality Model with Application to Rate Control for Video-Based Point Cloud Compression. IEEE Trans. Image Process. 2021, 30, 6623–6636.

- Yang, B.; Wang, J.; Clark, R.; Hu, Q.; Wang, S.; Markham, A.; Trigoni, N. Learning Object Bounding Boxes for 3D Instance Segmentation on Point Clouds. arXiv 2019, arXiv:1906.01140.

- Rafique, A.A.; Gochoo, M.; Jalal, A.; Kim, K. Maximum Entropy Scaled Super Pixels Segmentation for Multi-Object Detection and Scene Recognition via Deep Belief Network. Multimed. Tools Appl. 2022, 82, 13401–13430.

- Li, J.; Han, L.; Zhang, C.; Li, Q.; Liu, Z. Spherical Convolution Empowered Viewport Prediction in 360 Video Multicast with Limited FoV Feedback. ACM Trans. Multimed. Comput. Commun. Appl. 2023, 19, 1–23.

- Liang, X.; Huang, Z.; Yang, S.; Qiu, L. Device-Free Motion & Trajectory Detection via RFID. ACM Trans. Embed. Comput. Syst. 2018, 17, 1–27.

- Jalal, A.; Ahmed, A.; Rafique, A.A.; Kim, K. Scene Semantic Recognition Based on Modified Fuzzy C-Mean and Maximum Entropy Using Object-to-Object Relations. IEEE Access 2021, 9, 27758–27772.

- Miao, J.; Zhou, X.; Huang, T.Z. Local Segmentation of Images Using an Improved Fuzzy C-Means Clustering Algorithm Based on Self-Adaptive Dictionary Learning. Appl. Soft Comput. 2020, 91, 106200.

- Jun, M.; Yuanyuan, L.; Huahua, L.; You, M. Single-Image Dehazing Based on Two-Stream Convolutional Neural Network. J. Artif. Intell. Technol. 2022, 2, 100–110.

- Yu, H.; Wu, Z.; Wang, S.; Wang, Y.; Ma, X. Spatiotemporal Recurrent Convolutional Networks for Traffic Prediction in Transportation Networks. Sensors 2017, 17, 1501.

- Pan, S.; Xu, M.; Zhu, S.; Lin, M.; Li, G. A Low-Profile Programmable Beam Scanning Array Antenna. In Proceedings of the 2021 International Conference on Microwave and Millimeter Wave Technology (ICMMT), Nanjing, China, 23–26 May 2021.

- Zong, C.; Wan, Z. Container Ship Cell Guide Accuracy Check Technology Based on Improved 3d Point Cloud Instance Segmentation. Brodogradnja 2022, 73, 23–35.

- Han, Y.; Wang, B.; Guan, T.; Tian, D.; Yang, G.; Wei, W.; Tang, H.; Chuah, J.H. Research on Road Environmental Sense Method of Intelligent Vehicle Based on Tracking Check. IEEE Trans. Intell. Transp. Syst. 2023, 24, 1261–1275.

- Cao, B.; Zhang, W.; Wang, X.; Zhao, J.; Gu, Y.; Zhang, Y. A Memetic Algorithm Based on Two_Arch2 for Multi-Depot Heterogeneous-Vehicle Capacitated Arc Routing Problem. Swarm Evol. Comput. 2021, 63, 100864.

- Dai, X.; Xiao, Z.; Jiang, H.; Chen, H.; Min, G.; Dustdar, S.; Cao, J. A Learning-Based Approach for Vehicle-to-Vehicle Computation Offloading. IEEE Internet Things J. 2023, 10, 7244–7258.

- Xiao, Z.; Fang, H.; Jiang, H.; Bai, J.; Havyarimana, V.; Chen, H.; Jiao, L. Understanding Private Car Aggregation Effect via Spatio-Temporal Analysis of Trajectory Data. IEEE Trans. Cybern. 2023, 53, 2346–2357.

- Mi, C.; Huang, S.; Zhang, Y.; Zhang, Z.; Postolache, O. Design and Implementation of 3-D Measurement Method for Container Handling Target. J. Mar. Sci. Eng. 2022, 10, 1961.

- Jiang, H.; Chen, S.; Xiao, Z.; Hu, J.; Liu, J.; Dustdar, S. Pa-Count: Passenger Counting in Vehicles Using Wi-Fi Signals. IEEE Trans. Mob. Comput. 2023, 1, 1–14.

- Ding, Y.; Zhang, W.; Zhou, X.; Liao, Q.; Luo, Q.; Ni, L.M. FraudTrip: Taxi Fraudulent Trip Detection from Corresponding Trajectories. IEEE Internet Things J. 2021, 8, 12505–12517.

- Tian, H.; Pei, J.; Huang, J.; Li, X.; Wang, J.; Zhou, B.; Qin, Y.; Wang, L. Garlic and Winter Wheat Identification Based on Active and Passive Satellite Imagery and the Google Earth Engine in Northern China. Remote Sens. 2020, 12, 3539.

- Yang, M.; Wang, H.; Hu, K.; Yin, G.; Wei, Z. IA-Net: An Inception-Attention-Module-Based Network for Classifying Underwater Images From Others. IEEE J. Ocean. Eng. 2022, 47, 704–717.

- Shi, Y.; Hu, J.; Wu, Y.; Ghosh, B.K. Intermittent Output Tracking Control of Heterogeneous Multi-Agent Systems over Wide-Area Clustered Communication Networks. Nonlinear Anal. Hybrid Syst. 2023, 50, 101387.

- Lu, S.; Liu, M.; Yin, L.; Yin, Z.; Liu, X.; Zheng, W. The Multi-Modal Fusion in Visual Question Answering: A Review of Attention Mechanisms. PeerJ Comput. Sci. 2023, 9, e1400.

- Lou, H.; Duan, X.; Guo, J.; Liu, H.; Gu, J.; Bi, L.; Chen, H. DC-YOLOv8: Small-Size Object Detection Algorithm Based on Camera Sensor. Electronics 2023, 12, 2323.

- Zhang, X.; Fang, S.; Shen, Y.; Yuan, X.; Lu, Z. Hierarchical Velocity Optimization for Connected Automated Vehicles with Cellular Vehicle-to-Everything Communication at Continuous Signalized Intersections. IEEE Trans. Intell. Transp. Syst. 2023, 1, 1–12.

- Tang, J.; Ren, Y.; Liu, S. Real-Time Robot Localization, Vision, and Speech Recognition on Nvidia Jetson TX1. arXiv 2017, arXiv:1705.10945.

- Guo, F.; Zhou, W.; Lu, Q.; Zhang, C. Path Extension Similarity Link Prediction Method Based on Matrix Algebra in Directed Networks. Comput. Commun. 2022, 187, 83–92.

- Wang, B.; Zhang, Y.; Zhang, W. A Composite Adaptive Fault-Tolerant Attitude Control for a Quadrotor UAV with Multiple Uncertainties. J. Syst. Sci. Complex. 2022, 35, 81–104.

- Ahmad, F. Deep Image Retrieval Using Artificial Neural Network Interpolation and Indexing Based on Similarity Measurement. CAAI Trans. Intell. Technol. 2022, 7, 200–218.

- Hassan, F.S.; Gutub, A. Improving Data Hiding within Colour Images Using Hue Component of HSV Colour Space. CAAI Trans. Intell. Technol. 2022, 7, 56–68.

- Dong, Y.; Guo, W.; Zha, F.; Liu, Y.; Chen, C.; Sun, L. A Vision-Based Two-Stage Framework for Inferring Physical Properties of the Terrain. Appl. Sci. 2020, 10, 6473.

- Bawankule, R.; Gaikwad, V.; Kulkarni, I.; Kulkarni, S.; Jadhav, A.; Ranjan, N. Visual Detection of Waste Using YOLOv8. In Proceedings of the 2023 International Conference on Sustainable Computing and Smart Systems (ICSCSS), Coimbatore, India, 14–16 June 2023; pp. 869–873.

- Wen, C.; Huang, Y.; Davidson, T.N. Efficient Transceiver Design for MIMO Dual-Function Radar-Communication Systems. IEEE Trans. Signal Process. 2023, 71, 1786–1801.

- Wen, C.; Huang, Y.; Zheng, L.; Liu, W.; Davidson, T.N. Transmit Waveform Design for Dual-Function Radar-Communication Systems via Hybrid Linear-Nonlinear Precoding. IEEE Trans. Signal Process. 2023, 71, 2130–2145.

- Ning, Z.; Wang, T.; Zhang, K. Dynamic Event-Triggered Security Control and Fault Detection for Nonlinear Systems with Quantization and Deception Attack. Inf. Sci. 2022, 594, 43–59.

- Yu, S.; Zhao, C.; Song, L.; Li, Y.; Du, Y. Understanding Traffic Bottlenecks of Long Freeway Tunnels Based on a Novel Location-Dependent Lighting-Related Car-Following Model. Tunn. Undergr. Sp. Technol. 2023, 136, 105098.

- Peng, J.; Wang, N.; El-Latif, A.A.A.; Li, Q.; Niu, X. Finger-Vein Verification Using Gabor Filter and SIFT Feature Matching. In Proceedings of the 2012 Eighth International Conference on Intelligent Information Hiding and Multimedia Signal Processing, Piraeus/Athens, Greece, 18–20 July 2012; pp. 45–48.

- Hua, Y.; Lin, J.; Lin, C. An Improved SIFT Feature Matching Algorithm. In Proceedings of the 2010 8th World Congress on Intelligent Control and Automation, Jinan, China, 7–9 July 2010; pp. 6109–6113.

- Yawen, T.; Jinxu, G. Research on Vehicle Detection Technology Based on SIFT Feature. In Proceedings of the 2018 8th International Conference on Electronics Information and Emergency Communication (ICEIEC), Beijing, China, 15–17 June 2018; pp. 274–278.

- Xiaohui, H.; Qiuhua, K.; Qianhua, C.; Yun, X.; Weixing, Z.; Li, Y. A Coherent Pattern Mining Algorithm Based on All Contiguous Column Bicluster. J. Artif. Intell. Technol. 2022, 2, 80–92.

- Alhwarin, F.; Wang, C.J.; Ristic-Durrant, D.; Gräser, A. Improved SIFT-Features Matching for Object Recognition. In Proceedings of the Visions of Computer Science—BCS International Academic Conference (VOCS), London, UK, 22–24 September 2008.

- Battiato, S.; Gallo, G.; Puglisi, G.; Scellato, S. SIFT Features Tracking for Video Stabilization. In Proceedings of the 14th International Conference on Image Analysis and Processing (ICIAP 2007), Modena, Italy, 10–14 September 2007; pp. 825–830.

- Mu, K.; Hui, F.; Zhao, X. Multiple Vehicle Detection and Tracking in Highway Traffic Surveillance Video Based on Sift Feature Matching. J. Inf. Process. Syst. 2016, 12, 183–195.

- Alcantarilla, P.F.; Bartoli, A.; Davison, A.J. KAZE Features. Lect. Notes Comput. Sci. 2012, 7577, 214–227.

- Sharma, T.; Jain, A.; Verma, N.K.; Vasikarla, S. Object Counting Using KAZE Features under Different Lighting Conditions for Inventory Management. In Proceedings of the 2019 IEEE Applied Imagery Pattern Recognition Workshop (AIPR), Washington, DC, USA, 15–17 October 2019.

- Dai, X.; Xiao, Z.; Jiang, H.; Lui, J.C.S. UAV-Assisted Task Offloading in Vehicular Edge Computing Networks. IEEE Trans. Mob. Comput. 2023, 1, 1–18.

- Zhang, Z.; Guo, D.; Zhou, S.; Zhang, J.; Lin, Y. Flight Trajectory Prediction Enabled by Time-Frequency Wavelet Transform. Nat. Commun. 2023, 14, 5258.

- Zhang, C.; Xiao, P.; Zhao, Z.T.; Liu, Z.; Yu, J.; Hu, X.Y.; Chu, H.B.; Xu, J.J.; Liu, M.Y.; Zou, Q.; et al. A Wearable Localized Surface Plasmons Antenna Sensor for Communication and Sweat Sensing. IEEE Sens. J. 2023, 23, 11591–11599.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An Efficient Alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

- Salakhutdinov, R.; Murray, I. On the Quantitative Analysis of Deep Belief Networks. In Proceedings of the 25th international conference on Machine learning, New York, NY, USA, 5–9 July 2008; pp. 872–879.

- Zheng, M.; Zhi, K.; Zeng, J.; Tian, C.; You, L. A Hybrid CNN for Image Denoising. J. Artif. Intell. Technol. 2022, 2, 93–99.

- Li, C.; Wang, Y.; Zhang, X.; Gao, H.; Yang, Y.; Wang, J. Deep Belief Network for Spectral–Spatial Classification of Hyperspectral Remote Sensor Data. Sensors 2019, 19, 204.

- Qi, M.; Cui, S.; Chang, X.; Xu, Y.; Meng, H.; Wang, Y.; Yin, T. Multi-Region Nonuniform Brightness Correction Algorithm Based on L-Channel Gamma Transform. Secur. Commun. Netw. 2022, 2022, 2675950.

- Liu, A.A.; Zhai, Y.; Xu, N.; Nie, W.; Li, W.; Zhang, Y. Region-Aware Image Captioning via Interaction Learning. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 3685–3696.

- Li, Q.K.; Lin, H.; Tan, X.; Du, S. H∞Consensus for Multiagent-Based Supply Chain Systems under Switching Topology and Uncertain Demands. IEEE Trans. Syst. Man Cybern. Syst. 2020, 50, 4905–4918.

- Ma, K.; Li, Z.; Liu, P.; Yang, J.; Geng, Y.; Yang, B.; Guan, X. Reliability-Constrained Throughput Optimization of Industrial Wireless Sensor Networks with Energy Harvesting Relay. IEEE Internet Things J. 2021, 8, 13343–13354.

- Yao, Y.; Shu, F.; Li, Z.; Cheng, X.; Wu, L. Secure Transmission Scheme Based on Joint Radar and Communication in Mobile Vehicular Networks. IEEE Trans. Intell. Transp. Syst. 2023, 24, 10027–10037.

- Xu, J.; Guo, K.; Sun, P.Z.H. Driving Performance Under Violations of Traffic Rules: Novice vs. Experienced Drivers. IEEE Trans. Intell. Veh. 2022, 7, 908–917.

- Xu, J.; Pan, S.; Sun, P.Z.H.; Park, S.H.; Guo, K. Human-Factors-in-Driving-Loop: Driver Identification and Verification via a Deep Learning Approach Using Psychological Behavioral Data. IEEE Trans. Intell. Transp. Syst. 2023, 24, 3383–3394.

- Xu, J.; Park, S.H.; Zhang, X.; Hu, J. The Improvement of Road Driving Safety Guided by Visual Inattentional Blindness. IEEE Trans. Intell. Transp. Syst. 2022, 23, 4972–4981.

- Razakarivony, S.; Jurie, F. Vehicle Detection in Aerial Imagery: A Small Target Detection Benchmark. J. Vis. Commun. Image Represent. 2016, 34, 187–203.

- Lin, H.Y.; Tu, K.C.; Li, C.Y. VAID: An Aerial Image Dataset for Vehicle Detection and Classification. IEEE Access 2020, 8, 212209–212219.

- Wang, B.; Xu, B. A Feature Fusion Deep-Projection Convolution Neural Network for Vehicle Detection in Aerial Images. PLoS ONE 2021, 16, e0250782.

- Mandal, M.; Shah, M.; Meena, P.; Devi, S.; Vipparthi, S.K. AVDNet: A Small-Sized Vehicle Detection Network for Aerial Visual Data. IEEE Geosci. Remote Sens. Lett. 2019, 17, 494–498.

- du Terrail, J.O.; Jurie, F. Faster RER-CNN: Application to the Detection of Vehicles in Aerial Images. arXiv 2018, arXiv:1809.07628.

- Wang, B.; Gu, Y. An Improved FBPN-Based Detection Network for Vehicles in Aerial Images. Sensors 2020, 20, 4709.

- Hou, S.; Fan, L.; Zhang, F.; Liu, B. An Improved Lightweight YOLOv5 for Remote Sensing Images. In Proceedings of the 32nd International Conference on Artificial Neural Networks, Heraklion, Greece, 26–29 September 2023; pp. 77–89.

| Authors | Methodology |
|---|---|
| Arinaldi et al. [26] | The paper implements two methodologies for vehicle detection and classification. The first combines a Mixture of Gaussians (MoG) with a Support Vector Machine (SVM) classifier. The second uses only a Faster Region-based Convolutional Neural Network (Faster R-CNN). However, a large number of vehicles were still left undetected. |
| Aqel et al. [27] | This study uses background subtraction to detect moving vehicles. Morphological corrections are applied to reduce false positives. Finally, classification is performed using invariant Charlier moments. The method relies on conventional image processing techniques, which limits its applicability to diverse traffic scenarios. Background subtraction also eliminates vehicles that are not in motion, which reduces the true positives. |
| Sarikan et al. [28] | The model uses a K-nearest neighbor classifier to automatically detect and classify vehicles. For feature extraction, the windows and hollow areas of each vehicle are constructed to classify it as a motorcycle or a car. The model is not applicable to broader views or dense traffic conditions. |
| Tan et al. [29] | The authors present a method to classify vehicles using a Convolutional Neural Network (CNN) on an aerial image dataset. The model first determines whether an area contains a vehicle by evaluating motion changes, feature matching, and heat maps. Classification is then performed using the classification layers of Inception-v3 and AlexNet. |
| Hamzenejadi et al. [30] | This paper presents a real-time vehicle detection solution based on YOLOv5. The existing model is improved by adding an attention mechanism and ghost convolution. The experimental results demonstrate the efficiency of the YOLO model for object detection. |
| Ozturk et al. [31] | This paper presents a vehicle detection method in which vehicles are detected by a miniature CNN architecture combined with morphological corrections. The model requires intensive post-processing to achieve good results, and its accuracy is not consistent across other datasets. |
| Roopa Chandrika et al. [32] | The paper presents a model for vehicle recognition and classification. The model combines adaptive background subtraction with binary label segmentation to locate vehicles. The approach is not suitable for detecting stationary cars or for traffic jam conditions. |
| Kumar et al. [33] | The approach uses You Only Look Once (YOLO) with Long Short-Term Memory (LSTM) to detect and classify vehicles. To reduce model complexity, the images are segmented into binary labels in the pre-processing stage. The detected vehicles are counted by counting the bounding boxes and are classified into lightweight and heavyweight vehicles. |
| Zhang et al. [34] | The paper proposes a method that uses an improved YOLOv3 algorithm to detect vehicles. The pre-trained YOLO network is trained with a new structure to improve detection accuracy. However, YOLOv3 is one of the older YOLO versions, and the detection results could be improved by using newer architectures. |

| Vehicle Class | Precision | Recall | F1-Score |

|---|---|---|---|

| Pickup | 0.985 | 0.967 | 0.975 |

| Tractor | 0.991 | 0.987 | 0.988 |

| Vans | 0.941 | 0.958 | 0.949 |

| Car | 0.907 | 0.910 | 0.908 |

| Truck | 0.934 | 0.971 | 0.952 |

| Camping Car | 0.956 | 0.945 | 0.950 |

| Plane | 0.977 | 0.936 | 0.956 |

| Boat | 0.965 | 0.971 | 0.968 |

| Others | 0.962 | 0.934 | 0.947 |

| Mean | 0.957 | 0.953 | 0.955 |
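The F1-score column can be cross-checked against the precision and recall columns. The following is a minimal sketch, assuming F1 is the usual harmonic mean of precision and recall; the dictionary name `vedai` and the helper `f1_score` are illustrative and do not come from the paper. The values are copied from the table above, with the fourth row keyed as `Car`.

```python
# Per-class (precision, recall, reported F1) copied from the table above.
vedai = {
    "Pickup": (0.985, 0.967, 0.975),
    "Tractor": (0.991, 0.987, 0.988),
    "Vans": (0.941, 0.958, 0.949),
    "Car": (0.907, 0.910, 0.908),
    "Truck": (0.934, 0.971, 0.952),
    "Camping Car": (0.956, 0.945, 0.950),
    "Plane": (0.977, 0.936, 0.956),
    "Boat": (0.965, 0.971, 0.968),
    "Others": (0.962, 0.934, 0.947),
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Print the recomputed value next to the reported one for each class.
for name, (p, r, reported) in vedai.items():
    computed = f1_score(p, r)
    print(f"{name:12s} computed={computed:.4f} reported={reported:.3f}")
```

The recomputed values agree with the reported column to within about 0.001. The small residual is presumably because the published precision and recall are themselves rounded to three decimals, although the paper does not state this.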

| Vehicle Class | Precision | Recall | F1-Score |

|---|---|---|---|

| Sedan | 0.963 | 0.974 | 0.968 |

| Minibus | 0.986 | 0.965 | 0.975 |

| Truck | 0.975 | 0.989 | 0.982 |

| Pickup Truck | 0.988 | 0.946 | 0.967 |

| Bus | 0.941 | 0.978 | 0.959 |

| Cement Truck | 0.944 | 0.912 | 0.927 |

| Trailer | 0.973 | 0.956 | 0.964 |

| Car | 0.945 | 0.901 | 0.922 |

| Mean | 0.964 | 0.953 | 0.958 |
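The Mean row is consistent with an unweighted (macro) average over the classes. The sketch below assumes that interpretation, since the paper does not say how the mean was computed; the names `vaid` and `macro_average` are illustrative only.

```python
# (class, precision, recall, F1) rows copied from the table above.
vaid = [
    ("Sedan", 0.963, 0.974, 0.968),
    ("Minibus", 0.986, 0.965, 0.975),
    ("Truck", 0.975, 0.989, 0.982),
    ("Pickup Truck", 0.988, 0.946, 0.967),
    ("Bus", 0.941, 0.978, 0.959),
    ("Cement Truck", 0.944, 0.912, 0.927),
    ("Trailer", 0.973, 0.956, 0.964),
    ("Car", 0.945, 0.901, 0.922),
]


def macro_average(rows, column):
    """Unweighted mean of one metric column across all classes."""
    return sum(row[column] for row in rows) / len(rows)


mean_precision = macro_average(vaid, 1)
mean_recall = macro_average(vaid, 2)
mean_f1 = macro_average(vaid, 3)

print(f"precision={mean_precision:.3f} recall={mean_recall:.3f} f1={mean_f1:.3f}")
# prints: precision=0.964 recall=0.953 f1=0.958
```

A macro average gives every class equal weight regardless of how many instances it has, so rare classes such as Cement Truck influence the mean as much as common ones.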

| Vehicle Class | Pickup | Tractor | Vans | Car | Truck | Camping Car | Plane | Boat | Others |

|---|---|---|---|---|---|---|---|---|---|

| Pickup | 0.98 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| Tractor | 0.02 | 0.97 | 0 | 0 | 0 | 0.01 | 0 | 0 | 0 |

| Vans | 0 | 0.01 | 0.95 | 0.02 | 0 | 0.02 | 0 | 0 | 0 |

| Car | 0 | 0 | 0.04 | 0.93 | 0 | 0.02 | 0 | 0 | 0.01 |

| Truck | 0 | 0.03 | 0 | 0 | 0.97 | 0 | 0 | 0 | 0 |

| Camping Car | 0.02 | 0 | 0.03 | 0.02 | 0.01 | 0.92 | 0 | 0 | 0 |

| Plane | 0 | 0 | 0 | 0 | 0 | 0 | 0.96 | 0 | 0.04 |

| Boat | 0 | 0 | 0 | 0 | 0 | 0 | 0.01 | 0.95 | 0.04 |

| Others | 0 | 0 | 0 | 0 | 0 | 0 | 0.01 | 0.02 | 0.97 |

| Mean = 95.6% | | | | | | | | | |
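The reported mean of 95.6% matches the average of the diagonal entries of the confusion matrix above. The sketch below assumes that this is how the figure was obtained, since the paper does not say so explicitly; the variable names are illustrative.

```python
# Confusion matrix copied from the table above, rows in table order.
classes = ["Pickup", "Tractor", "Vans", "Car", "Truck",
           "Camping Car", "Plane", "Boat", "Others"]
confusion = [
    [0.98, 0, 0, 0, 0, 0, 0, 0, 0],
    [0.02, 0.97, 0, 0, 0, 0.01, 0, 0, 0],
    [0, 0.01, 0.95, 0.02, 0, 0.02, 0, 0, 0],
    [0, 0, 0.04, 0.93, 0, 0.02, 0, 0, 0.01],
    [0, 0.03, 0, 0, 0.97, 0, 0, 0, 0],
    [0.02, 0, 0.03, 0.02, 0.01, 0.92, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0.96, 0, 0.04],
    [0, 0, 0, 0, 0, 0, 0.01, 0.95, 0.04],
    [0, 0, 0, 0, 0, 0, 0.01, 0.02, 0.97],
]

# The diagonal holds the fraction of each class that was classified correctly.
diagonal = [confusion[i][i] for i in range(len(classes))]
mean_diagonal = sum(diagonal) / len(diagonal)

print(f"mean per-class accuracy = {mean_diagonal * 100:.1f}%")
# prints: mean per-class accuracy = 95.6%
```

The off-diagonal entries show where errors concentrate: for example, 4% of the Car row is assigned to Vans, the largest single confusion among the road-vehicle classes.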

| Vehicle Class | Sedan | Minibus | Truck | Pickup Truck | Bus | Cement Truck | Trailer | Car |

|---|---|---|---|---|---|---|---|---|

| Sedan | 0.98 | 0.01 | 0.01 | 0 | 0 | 0 | 0 | 0 |

| Minibus | 0 | 0.95 | 0.02 | 0 | 0.03 | 0 | 0 | 0 |

| Truck | 0 | 0.01 | 0.99 | 0 | 0 | 0 | 0 | 0 |

| Pickup Truck | 0 | 0.01 | 0 | 0.96 | 0.02 | 0 | 0.01 | 0 |

| Bus | 0.01 | 0.02 | 0 | 0 | 0.97 | 0 | 0 | 0 |

| Cement Truck | 0.01 | 0 | 0 | 0 | 0 | 0.99 | 0 | 0 |

| Trailer | 0.01 | 0 | 0 | 0.01 | 0 | 0.01 | 0.98 | 0 |

| Car | 0.03 | 0.01 | 0.01 | 0 | 0 | 0 | 0.02 | 0.93 |

| Mean = 94.6% | | | | | | | | |

| Methods | VEDAI (%) | VAID (%) |

|---|---|---|

| Wang et al. [115] | 93.96 | - |

| Mandal et al. [116] | 51.95 | - |

| Terrail et al. [117] | 83.50 | - |

| Wang et al. [118] | 91.27 | - |

| Lin et al. [114] | - | 89.3 |

| Rafique et al. [1] | 92.2 | - |

| Hou et al. [119] | 75.54 | - |

| Proposed model | 95.6 | 94.6 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Al Mudawi, N.; Qureshi, A.M.; Abdelhaq, M.; Alshahrani, A.; Alazeb, A.; Alonazi, M.; Algarni, A. Vehicle Detection and Classification via YOLOv8 and Deep Belief Network over Aerial Image Sequences. Sustainability 2023, 15, 14597. https://doi.org/10.3390/su151914597
