A Comprehensive Study of Emotional Responses in AI-Enhanced Interactive Installation Art

Abstract

1. Introduction

2. Literature Review

2.1. Artificial Intelligence in Interactive Installation Art

2.2. Emotion and Well-Being

2.3. Research Dimension: Sensory Stimulation, Experience, and Engagement

2.3.1. Sensory Stimulation

2.3.2. Multi-Dimension Interactive

2.3.3. Engagement

2.4. Emotion Measurement in Interactive Installation Art

2.4.1. Emotion Recognition Technology

2.4.2. Emotion Model

2.4.3. Emotion Recognition Models

- (1) Machine-Learning Approaches:
- (2) Deep-Learning Models:
- (3) Hybrid Models:
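
Hybrid systems often combine the outputs of separate modality-specific recognizers; in the multimodal dataset table, for example, Huang et al. pair an SVM on EEG with a CNN on facial expressions. One common way to merge such outputs is decision-level (late) fusion. The following is a minimal sketch under stated assumptions, not the method of any study cited here: the three labels, the modality names `eeg` and `face`, and the weights are hypothetical, and each modality's classifier is assumed to already output a probability vector over the same labels.

```python
from typing import Dict, Sequence

# Hypothetical label set; each study defines its own emotion categories.
LABELS = ("negative", "neutral", "positive")


def late_fusion(
    modality_probs: Dict[str, Sequence[float]],
    weights: Dict[str, float],
) -> Dict[str, float]:
    """Weighted average of per-modality class probabilities (decision-level fusion).

    modality_probs maps a modality name (e.g. "eeg", "face") to the probability
    vector that modality's own classifier produced over LABELS. weights holds a
    non-negative trust value per modality; they are normalised to sum to 1.
    """
    total = sum(weights[m] for m in modality_probs)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    fused = [0.0] * len(LABELS)
    for modality, probs in modality_probs.items():
        if len(probs) != len(LABELS):
            raise ValueError(f"{modality}: expected {len(LABELS)} probabilities")
        share = weights[modality] / total
        for i, p in enumerate(probs):
            fused[i] += share * p
    return dict(zip(LABELS, fused))


def predict(fused: Dict[str, float]) -> str:
    """Return the label with the highest fused probability."""
    return max(fused, key=fused.get)


if __name__ == "__main__":
    outputs = {"eeg": [0.2, 0.3, 0.5], "face": [0.6, 0.3, 0.1]}
    print(predict(late_fusion(outputs, {"eeg": 1.0, "face": 1.0})))  # negative
    print(predict(late_fusion(outputs, {"eeg": 0.8, "face": 0.2})))  # positive
```

Raising the EEG weight flips the fused decision in this toy example, which is why fusion weights are usually tuned on held-out data rather than fixed by hand. Feature-level (early) fusion, which concatenates modality features before a single classifier, is the main alternative.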

2.5. Discussion

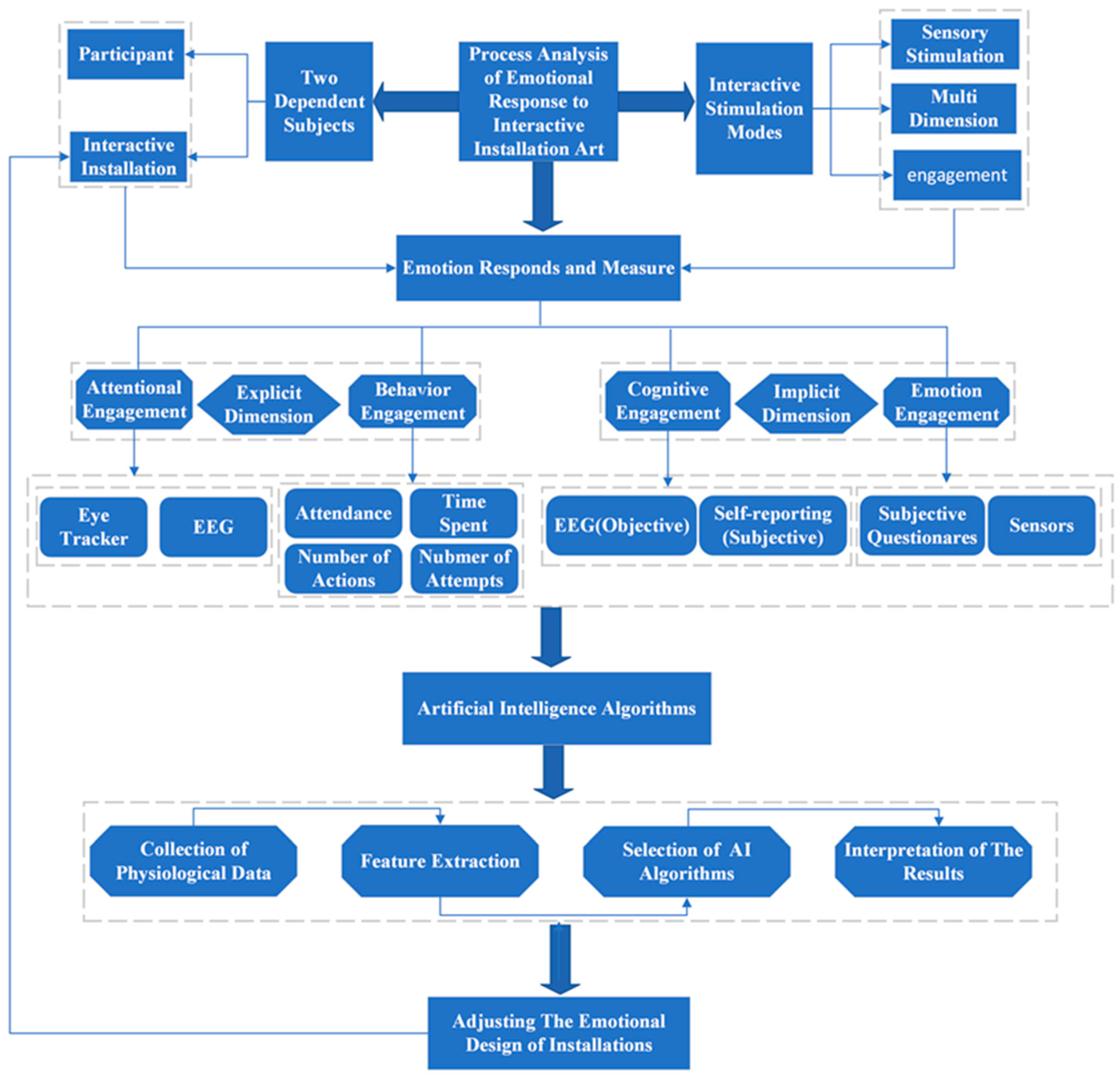

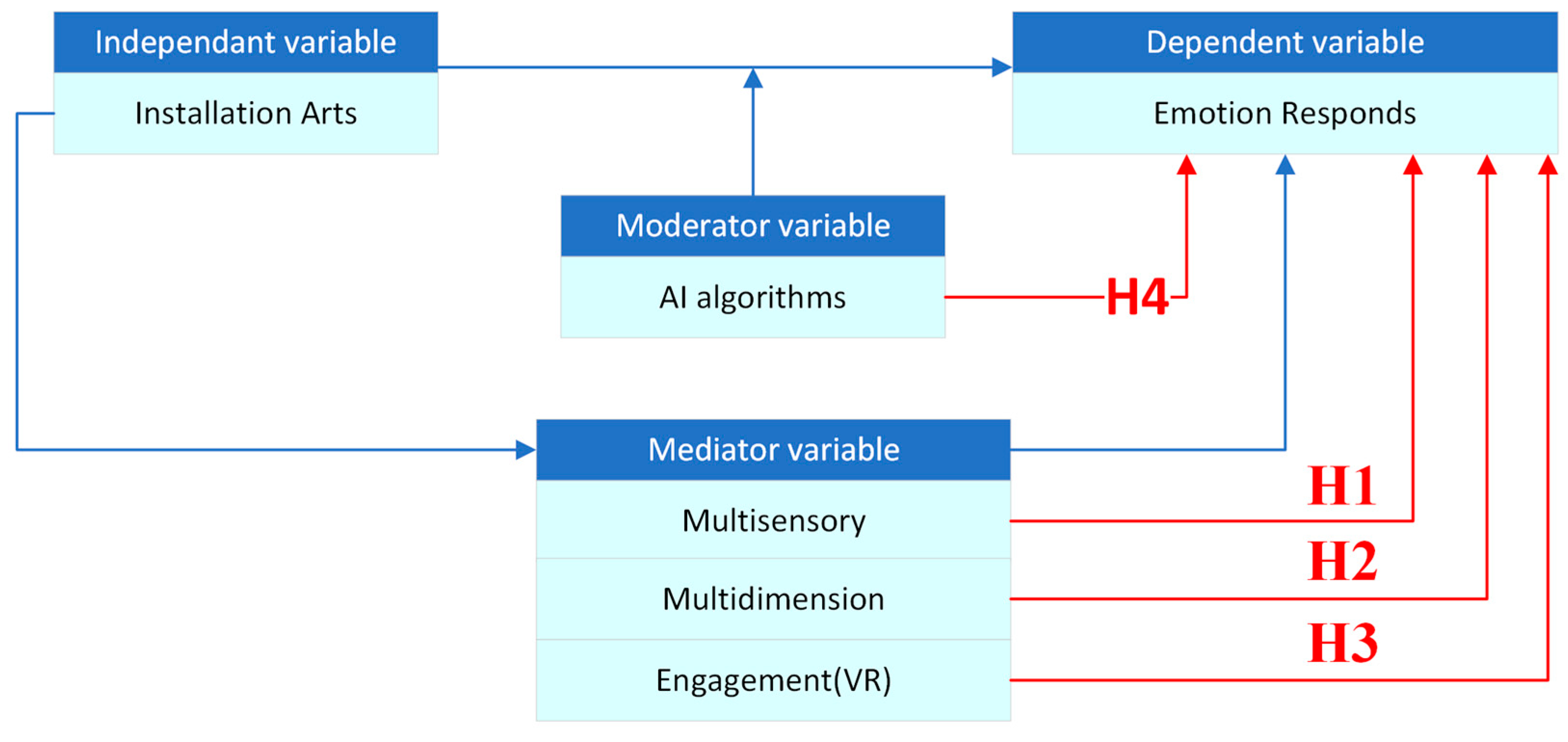

3. Framework and Hypothesis

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Stallabrass, J. Digital Commons: Art and Utopia in the Internet Age. Art J. 2010, 69, 40–55.

- Huhtamo, E. Trouble at the Interface, or the Identity Crisis of Interactive Art. Estet. J. Ital. Aesthet. 2004. Available online: https://www.mediaarthistory.org/refresh/Programmatic%20key%20texts/pdfs/Huhtamo.pdf (accessed on 12 October 2022).

- Irvin, S. Interactive Art: Action and Participation. In Aesthetics of Interaction in Digital Art; MIT Press: Cambridge, MA, USA, 2013; pp. 85–103.

- Giannetti, C. Aesthetics of Digital Art; University of Minnesota Press: Minneapolis, MN, USA, 2015.

- Patel, S.V.; Tchakerian, R.; Morais, R.L.; Zhang, J.; Cropper, S. The Emoting City: Designing feeling and artificial empathy in mediated environments. In Proceedings of the ECAADE 2020: Anthropologic—Architecture and Fabrication in the Cognitive Age, Berlin, Germany, 16–17 September 2020; Volume 2, pp. 261–270.

- Cao, Y.; Han, Z.; Kong, R.; Zhang, C.; Xie, Q. Technical Composition and Creation of Interactive Installation Art Works under the Background of Artificial Intelligence. Math. Probl. Eng. 2021, 2021, 7227416.

- Tidemann, A. [Self.]: An Interactive Art Installation that Embodies Artificial Intelligence and Creativity. In Proceedings of the 2015 ACM SIGCHI Conference on Creativity and Cognition, Glasgow, UK, 22–25 June 2015; pp. 181–184.

- Gao, Z.; Lin, L. The intelligent integration of interactive installation art based on artificial intelligence and wireless network communication. Wirel. Commun. Mob. Comput. 2021, 2021, 3123317.

- Ronchi, G.; Benghi, C. Interactive light and sound installation using artificial intelligence. Int. J. Arts Technol. 2014, 7, 377–379.

- Raptis, G.E.; Kavvetsos, G.; Katsini, C. Mumia: Multimodal interactions to better understand art contexts. Appl. Sci. 2021, 11, 2695.

- Pelowski, M.; Leder, H.; Mitschke, V.; Specker, E.; Gerger, G.; Tinio, P.P.; Husslein-Arco, A. Capturing aesthetic experiences with installation art: An empirical assessment of emotion, evaluations, and mobile eye tracking in Olafur Eliasson’s “Baroque, Baroque!”. Front. Psychol. 2018, 9, 1255.

- Manovich, L. Defining AI Arts: Three Proposals. Catalog. Saint-Petersburg: Hermitage Museum, June 2019. pp. 1–9. Available online: https://www.academia.edu/download/60633037/Manovich.Defining_AI_arts.201920190918-80396-1vdznon.pdf (accessed on 12 October 2022).

- Rajapakse, R.P.C.J.; Tokuyama, Y. Thoughtmix: Interactive watercolor generation and mixing based on EEG data. In Proceedings of the International Conference on Artificial Life and Robotics, Online, 21–24 January 2021; pp. 728–731. Available online: https://www.scopus.com/inward/record.uri?eid=2-s2.0-85108839509&partnerID=40&md5=05392d3ad25a40e51753f7bb8fa37cde (accessed on 12 October 2022).

- Akten, M.; Fiebrink, R.; Grierson, M. Learning to see: You are what you see. In Proceedings of the ACM SIGGRAPH 2019 Art Gallery, SIGGRAPH 2019, Los Angeles, CA, USA, 28 June 2019; pp. 1–6.

- Xu, S.; Wang, Z. DIFFUSION: Emotional Visualization Based on Biofeedback Control by EEG: Feeling, listening, and touching real things through human brainwave activity. Artnodes 2021, 28.

- Savaş, E.B.; Verwijmeren, T.; van Lier, R. Aesthetic experience and creativity in interactive art. Art Percept. 2021, 9, 167–198.

- Duarte, E.F.; Baranauskas, M.C.C. An Experience with Deep Time Interactive Installations within a Museum Scenario; Institute of Computing, University of Campinas: Campinas, Brazil, 2020.

- Szubielska, M.; Imbir, K.; Szymańska, A. The influence of the physical context and knowledge of artworks on the aesthetic experience of interactive installations. Curr. Psychol. 2021, 40, 3702–3715.

- Lim, Y.; Donaldson, J.; Jung, H.; Kunz, B.; Royer, D.; Ramalingam, S.; Thirumaran, S.; Stolterman, E. Emotional Experience and Interaction Design. In Affect and Emotion in Human-Computer Interaction; Springer: Berlin/Heidelberg, Germany, 2008.

- Capece, S.; Chivăran, C. The sensorial dimension of the contemporary museum between design and emerging technologies. IOP Conf. Ser. Mater. Sci. Eng. 2020, 949, 012067.

- Rajcic, N.; McCormack, J. Mirror ritual: Human-machine co-construction of emotion. In Proceedings of the 14th International Conference on Tangible, Embedded, and Embodied Interaction (TEI 2020), Sydney, Australia, 9–12 February 2020; pp. 697–702.

- Her, J.J. An analytical framework for facilitating interactivity between participants and interactive artwork: Case studies in MRT stations. Digit. Creat. 2014, 25, 113–125.

- Random International. Rain Room. 2013. Available online: https://www.moma.org/calendar/exhibitions/1352 (accessed on 23 September 2022).

- Scherer, K.R. What are emotions? And how can they be measured? Soc. Sci. Inf. 2005, 44, 695–729.

- Fragoso Castro, J.; Bernardino Bastos, P.; Alvelos, H. Emotional resonance at art interactive installations: Social reconnection among individuals through identity legacy elements uncover. In Proceedings of the 10th International Conference on Digital and Interactive Arts, Aveiro, Portugal, 13–15 October 2021; pp. 1–6.

- Reason, D.T. Deeper than Reason; Clarendon Press: Oxford, UK, 2008.

- Guyer, P. Autonomy and Integrity in Kant’s Aesthetics. Monist 1983, 66, 167–188.

- Carrier, D. Perspective as a convention: On the views of Nelson Goodman and Ernst Gombrich. Leonardo 1980, 13, 283–287.

- Schindler, I.; Hosoya, G.; Menninghaus, W.; Beermann, U.; Wagner, V.; Eid, M.; Scherer, K.R. Measuring aesthetic emotions: A review of the literature and a new assessment tool. PLoS ONE 2017, 12, e0178899.

- Pittera, D.; Gatti, E.; Obrist, M. I’m sensing in the rain: Spatial incongruity in visual-tactile mid-air stimulation can elicit ownership in VR users. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–15.

- Ablart, D.; Vi, C.T.; Gatti, E.; Obrist, M. The how and why behind a multi-sensory art display. Interactions 2017, 24, 38–43.

- Canbeyli, R. Sensory stimulation via the visual, auditory, olfactory, and gustatory systems can modulate mood and depression. Eur. J. Neurosci. 2022, 55, 244–263.

- Gilroy, S.P.; Leader, G.; McCleery, J.P. A pilot community-based randomized comparison of speech generating devices and the picture exchange communication system for children diagnosed with autism spectrum disorder. Autism Res. 2018, 11, 1701–1711.

- Gilbert, J.K.; Stocklmayer, S. The design of interactive exhibits to promote the making of meaning. Mus. Manag. Curatorship 2001, 19, 41–50.

- Jiang, M.; Bhömer, M.T.; Liang, H.N. Exploring the design of interactive smart textiles for emotion regulation. In HCI International 2020–Late Breaking Papers: Digital Human Modeling and Ergonomics, Mobility and Intelligent Environments: 22nd HCI International Conference, HCII 2020, Copenhagen, Denmark, 19–24 July 2020; Proceedings 22; Springer International Publishing: Cham, Switzerland, 2020; pp. 298–315.

- Sadka, O.; Antle, A. Interactive technologies for emotion-regulation training: Opportunities and challenges. In Proceedings of the CHI EA’20: Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–12.

- Waller, C.L.; Oprea, T.I.; Giolitti, A.; Marshall, G.R. Three-dimensional QSAR of human immunodeficiency virus (I) protease inhibitors. 1. A CoMFA study employing experimentally-determined alignment rules. J. Med. Chem. 1993, 36, 4152–4160.

- Cappelen, B.; Andersson, A.P. Cultural Artefacts with Virtual Capabilities Enhance Self-Expression Possibilities for Children with Special Needs. In Transforming our World Through Design, Diversity, and Education; IOS Press: Amsterdam, The Netherlands, 2018; pp. 634–642.

- Schreuder, E.; van Erp, J.; Toet, A.; Kallen, V.L. Emotional Responses to Multi-sensory Environmental Stimuli: A Conceptual Framework and Literature Review. SAGE Open 2016, 6, 2158244016630591.

- De Alencar, T.S.; Rodrigues, K.R.; Barbosa, M.; Bianchi, R.G.; de Almeida Neris, V.P. Emotional response evaluation of users in ubiquitous environments: An observational case study. In Proceedings of the 13th International Conference on Advances in Computer Entertainment Technology, Osaka, Japan, 9–12 November 2016; pp. 1–12.

- Velasco, C.; Obrist, M. Multi-sensory Experiences: A Primer. Front. Comput. Sci. 2021, 3, 614524.

- Obrist, M.; Van Brakel, M.; Duerinck, F.; Boyle, G. Multi-sensory experiences and spaces. In Proceedings of the 2017 ACM International Conference on Interactive Surfaces and Spaces, ISS 2017, Brighton, UK, 17–20 October 2017; pp. 469–472.

- Vi, C.T.; Ablart, D.; Gatti, E.; Velasco, C.; Obrist, M. Not just seeing, but also feeling art: Mid-air haptic experiences integrated into a multi-sensory art exhibition. Int. J. Hum.-Comput. Stud. 2017, 108, 1–14.

- Brianza, G.; Tajadura-Jiménez, A.; Maggioni, E.; Pittera, D.; Bianchi-Berthouze, N.; Obrist, M. As Light as Your Scent: Effects of Smell and Sound on Body Image Perception. In Proceedings of the IFIP Conference on Human-Computer Interaction, Paphos, Cyprus, 2–6 September 2019; Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2019; Volume 11749, pp. 179–202.

- Brooks, J.; Lopes, P.; Amores, J.; Maggioni, E.; Matsukura, H.; Obrist, M.; Lalintha Peiris, R.; Ranasinghe, N. Smell, Taste, and Temperature Interfaces. In Proceedings of the Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021.

- Zald, D.H. The human amygdala and the emotional evaluation of sensory stimuli. Brain Res. Rev. 2003, 41, 88–123.

- Anadol, R. Space in the Mind of a Machine: Immersive Narratives. Archit. Des. 2022, 92, 28–37.

- Liu, J. Science popularization-oriented art design of interactive installation based on the protection of endangered marine life-the blue whales. J. Phys. Conf. Ser. 2021, 1827, 012116–012118.

- De Bérigny, C.; Gough, P.; Faleh, M.; Woolsey, E. Tangible User Interface Design for Climate Change Education in Interactive Installation Art. Leonardo 2014, 47, 451–456.

- Fortin, C.; Hennessy, K. Designing Interfaces to Experience Interactive Installations Together. In Proceedings of the International Symposium on Electronic Art, Vancouver, BC, Canada, 14–19 August 2015.

- Gu, S.; Lu, Y.; Kong, Y.; Huang, J.; Xu, W. Diversifying Emotional Experience by Layered Interfaces in Affective Interactive Installations. In Proceedings of the 2021 DigitalFUTURES: The 3rd International Conference on Computational Design and Robotic Fabrication (CDRF 2021), Shanghai, China, 3–4 July 2021; Springer: Singapore, 2022; Volume 3, pp. 221–230.

- Saidi, H.; Serrano, M.; Irani, P.; Hurter, C.; Dubois, E. On-body tangible interaction: Using the body to support tangible manipulations for immersive environments. In Proceedings of the Human-Computer Interaction–INTERACT 2019: 17th IFIP TC 13 International Conference, Paphos, Cyprus, 2–6 September 2019; Springer International Publishing: Cham, Switzerland, 2019; pp. 471–492.

- Edmonds, E. Art, interaction, and engagement. In Proceedings of the International Conference on Information Visualisation, London, UK, 13–15 July 2011; pp. 451–456.

- Röggla, T.; Wang, C.; Perez Romero, L.; Jansen, J.; Cesar, P. Tangible air: An interactive installation for visualising audience engagement. In Proceedings of the 2017 ACM SIGCHI Conference on Creativity and Cognition, Singapore, 27–30 June 2017; pp. 263–265.

- Vogt, T.; André, E.; Wagner, J.; Gilroy, S.; Charles, F.; Cavazza, M. Real-time vocal emotion recognition in art installations and interactive storytelling: Experiences and lessons learned from CALLAS and IRIS. In Proceedings of the 2009 3rd International Conference on Affective Computing and Intelligent Interaction and Workshops, Amsterdam, The Netherlands, 10–12 September 2009.

- Turk, M. Multimodal interaction: A review. Pattern Recognit. Lett. 2014, 36, 189–195.

- Ismail, A.W.; Sunar, M.S. Multimodal fusion: Gesture and speech input in augmented reality environment. In Computational Intelligence in Information Systems: Proceedings of the Fourth INNS Symposia Series on Computational Intelligence in Information Systems (INNS-CIIS 2014), Brunei, Brunei, 7–9 November 2014; Springer International Publishing: Cham, Switzerland, 2015; pp. 245–254.

- Zhang, W.; Ren, D.; Legrady, G. Cangjie’s Poetry: An Interactive Art Experience of a Semantic Human-Machine Reality. Proc. ACM Comput. Graph. Interact. Tech. 2021, 4, 19.

- Pan, J.; He, Z.; Li, Z.; Liang, Y.; Qiu, L. A review of multimodal emotion recognition. CAAI Trans. Intell. Syst. 2020, 7.

- Picard, R.W. Affective Computing; MIT Press: Cambridge, MA, USA, 2000.

- Avetisyan, H.; Bruna, O.; Holub, J. Overview of existing algorithms for emotion classification. Uncertainties in evaluations of accuracies. J. Phys. Conf. Ser. 2016, 772, 012039.

- Bhardwaj, A.; Gupta, A.; Jain, P.; Rani, A.; Yadav, J. Classification of human emotions from EEG signals using SVM and LDA Classifiers. In Proceedings of the 2015 2nd International Conference on Signal Processing and Integrated Networks (SPIN), Noida, India, 19–20 February 2015; pp. 180–185.

- Mano, L.Y.; Giancristofaro, G.T.; Faiçal, B.S.; Libralon, G.L.; Pessin, G.; Gomes, P.H.; Ueyama, J. Exploiting the use of ensemble classifiers to enhance the precision of user’s emotion classification. In Proceedings of the 16th International Conference on Engineering Applications of Neural Networks (INNS), Rhodes Island, Greece, 25–28 September 2015; pp. 1–7.

- Tu, G.; Wen, J.; Liu, H.; Chen, S.; Zheng, L.; Jiang, D. Exploration meets exploitation: Multitask learning for emotion recognition based on discrete and dimensional models. Knowl.-Based Syst. 2022, 235, 107598.

- Seo, Y.-S.; Huh, J.-H. Automatic Emotion-Based Music Classification for Supporting Intelligent IoT Applications. Sensors 2019, 21, 164.

- Cooney, M. Robot Art, in the Eye of the Beholder?: Personalized Metaphors Facilitate Communication of Emotions and Creativity. Front. Robot. AI 2021, 8, 668986.

- Gilroy, S.W.; Cavazza, M.; Chaignon, R.; Mäkelä, S.M.; Niranen, M.; André, E.; Vogt, T.; Urbain, J.; Seichter, H.; Billinghurst, M.; et al. An affective model of user experience for interactive art. In Proceedings of the 2008 International Conference on Advances in Computer Entertainment Technology, ACE 2008, Yokohama, Japan, 3–5 December 2008; pp. 107–110.

- Işik, Ü.; Güven, A. Classification of emotion from physiological signals via artificial intelligence techniques. In Proceedings of the 2019 Medical Technologies Congress (TIPTEKNO), Izmir, Turkey, 3–5 October 2019; pp. 1–4.

- Nasoz, F.; Alvarez, K.; Lisetti, C.L.; Finkelstein, N. Emotion recognition from physiological signals using wireless sensors for presence technologies. Cogn. Technol. Work. 2004, 6, 4–14.

- Suhaimi, N.S.; Mountstephens, J.; Teo, J. A Dataset for Emotion Recognition Using Virtual Reality and EEG (DER-VREEG): Emotional State Classification Using Low-Cost Wearable VR-EEG Headsets. Big Data Cogn. Comput. 2022, 6, 16.

- Yu, M.; Xiao, S.; Hua, M.; Wang, H.; Chen, X.; Tian, F.; Li, Y. EEG-based emotion recognition in an immersive virtual reality environment: From local activity to brain network features. Biomed. Signal Process. Control 2022, 72, 103349.

- Suhaimi, N.S.; Mountstephens, J.; Teo, J. Explorations of A Real-Time VR Emotion Prediction System Using Wearable Brain-Computer Interfacing. J. Phys. Conf. Ser. 2021, 2129, 012064.

- Marín-Morales, J.; Higuera-Trujillo, J.L.; Greco, A.; Guixeres, J.; Llinares, C.; Scilingo, E.P.; Valenza, G. Affective computing in virtual reality: Emotion recognition from brain and heartbeat dynamics using wearable sensors. Sci. Rep. 2018, 8, 13657.

- Zheng, W.L.; Zhu, J.Y.; Lu, B.L. Identifying stable patterns over time for emotion recognition from EEG. IEEE Trans. Affect. Comput. 2017, 10, 417–429.

- Wang, X.; Ren, Y.; Luo, Z.; He, W.; Hong, J.; Huang, Y. Deep learning-based EEG emotion recognition: Current trends and future perspectives. Front. Psychol. 2023, 14, 1126994.

- Marín-Morales, J.; Llinares, C.; Guixeres, J.; Alcañiz, M. Emotion recognition in immersive virtual reality: From statistics to affective computing. Sensors 2020, 20, 5163.

- Ji, Y.; Dong, S.Y. Deep learning-based self-induced emotion recognition using EEG. Front. Neurosci. 2022, 16, 985709.

- Cai, J.; Xiao, R.; Cui, W.; Zhang, S.; Liu, G. Application of electroencephalography-based machine learning in emotion recognition: A review. Front. Syst. Neurosci. 2021, 15, 729707.

- Khan, A.R. Facial emotion recognition using conventional machine learning and deep learning methods: Current achievements, analysis and remaining challenges. Information 2022, 13, 268.

- Lozano-Hemmer, R. Pulse Room [Installation]. 2006. Available online: https://www.lozano-hemmer.com/pulse_room.php (accessed on 12 October 2022).

- Cai, Y.; Li, X.; Li, J. Emotion Recognition Using Different Sensors, Emotion Models, Methods and Datasets: A Comprehensive Review. Sensors 2023, 23, 2455.

- Domingues, D.; Miosso, C.J.; Rodrigues, S.F.; Silva Rocha Aguiar, C.; Lucena, T.F.; Miranda, M.; Rocha, A.F.; Raskar, R. Embodiments, visualizations, and immersion with enactive affective systems. Eng. Real. Virtual Real. 2014, 9012, 90120J.

- Ratliff, M.S.; Patterson, E. Emotion recognition using facial expressions with active appearance models. In Proceedings of the HCI’08: 3rd IASTED International Conference on Human Computer Interaction, Innsbruck, Austria, 17–19 March 2008.

- Teng, Z.; Ren, F.; Kuroiwa, S. The emotion recognition through classification with the support vector machines. WSEAS Trans. Comput. 2006, 5, 2008–2013.

- Hossain, M.S.; Muhammad, G. Emotion recognition using deep learning approach from audio–visual emotional big data. Inf. Fusion 2019, 49, 69–78.

- Teo, J.; Chia, J.T.; Lee, J.Y. Deep learning for emotion recognition in affective virtual reality and music applications. Int. J. Recent Technol. Eng. 2019, 8, 219–224.

- Tashu, T.M.; Hajiyeva, S.; Horvath, T. Multimodal emotion recognition from art using sequential co-attention. J. Imaging 2021, 7, 157.

- Verma, G.; Verma, H. Hybrid-deep learning model for emotion recognition using facial expressions. Rev. Socionetwork Strateg. 2020, 14, 171–180.

- Atanassov, A.V.; Pilev, D.; Tomova, F.; Kuzmanova, V.D. Hybrid System for Emotion Recognition Based on Facial Expressions and Body Gesture Recognition. In Proceedings of the International Conference on Applied Informatics, Jakarta, Indonesia, 17–19 December 2021.

- Yaddaden, Y.; Adda, M.; Bouzouane, A.; Gouin-Vallerand, C. Hybrid-Based Facial Expression Recognition Approach for Human-Computer Interaction. In Proceedings of the 2018 2nd International Conference on Computer Science and Artificial Intelligence, Shenzhen, China, 8–10 December 2018; pp. 1–6.

- Padhy, N.; Singh, S.K.; Kumari, A.; Kumar, A. A Literature Review on Image and Emotion Recognition: Proposed Model. In Smart Intelligent Computing and Applications: Proceedings of the Third International Conference on Smart Computing and Informatics, 2019, Shimla, India, 15–16 June 2019; Springer: Singapore, 2019; Volume 2, pp. 341–354.

- Ma, X. Data-Driven Approach to Human-Engaged Computing: Definition of Engagement. International Series on Information Systems and Management in Creative eMedia (CreMedia) 2018, 2017/2, 43–47. Available online: https://core.ac.uk/download/pdf/228470682.pdf (accessed on 12 October 2022).

- Richey, R.C.; Klein, J.D. Design and Development Research; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 2007.

| Country | Famous Artist | Theme and Concept | Expressed Emotion | Significant Exhibitions and Installations |
|---|---|---|---|---|
| United States | Bill Viola | Human emotions, spirituality, existentialism | Contemplation, introspection | “Bill Viola: The Moving Portrait” at the National Portrait Gallery, 2017–2018<br>“Bill Viola: A Retrospective” at the Guggenheim Museum, 2017 |
|  | Janet Cardiff and George Bures Miller | Perception, memory, spatial experience | Eerie, introspective | “Janet Cardiff and George Bures Miller: The Murder of Crows” at the Art Gallery of Ontario, 2019–2020<br>“Janet Cardiff and George Bures Miller: Lost in the Memory Palace” at the Museum of Contemporary Art, Chicago, 2013 |
|  | Rafael Lozano-Hemmer | Perception, deception, surveillance | Curiosity, intrigue | “Rafael Lozano-Hemmer: Common Measures” at the Crystal Bridges Museum of American Art, 2022 |
| United Kingdom | Anish Kapoor | Identity, space, spirituality | Transcendent, awe | “Anish Kapoor: Symphony for a Beloved Sun” at the Hayward Gallery, 2003 |
|  | Rachel Whiteread | Memory, absence, domestic spaces | Haunting, melancholic | “Rachel Whiteread” at Tate Britain, 2017–2018<br>“Rachel Whiteread: Internal Objects” at the Gagosian Gallery, 2019 |
|  | Antony Gormley | Human existence, body, space | Contemplative, introspective | “Antony Gormley” at the Royal Academy of Arts, 2019<br>“Antony Gormley: Field for the British Isles” at the Tate Modern, 2019–2020 |
|  | Tracey Emin | Personal narratives, sexuality, vulnerability | Raw, intimate | “Tracey Emin: Love Is What You Want” at the Hayward Gallery, 2011 |
| Germany | Olafur Eliasson | Perception, nature, environmental issues | Sensational, immersive | “Olafur Eliasson: In Real Life” at Tate Modern, 2019 |
|  | Rebecca Horn | Transformation, body as a metaphor, time | Poetic, evocative | “Rebecca Horn: Body Fantasies” at the Martin-Gropius-Bau, 2019<br>“Rebecca Horn: Performances” at Tate Modern, 2019 |
|  | Wolfgang Laib | Transience, spirituality, simplicity | Serene, meditative | “Wolfgang Laib: A Retrospective” at MoMA, 2013–2014 |
|  | Anselm Kiefer | History, memory, identity | Evocative, contemplative | “Anselm Kiefer: Für Andrea Emo” at the Gagosian Gallery, 2019<br>“Anselm Kiefer: For Louis-Ferdinand Céline” at the Royal Academy of Arts, 2020–2021 |
| China | Ai Weiwei | Politics, social issues, cultural heritage | Provocative, defiant | “Ai Weiwei: According to What?” at the Hirshhorn Museum and Sculpture Garden, 2012 |
|  | Cai Guo-Qiang | Nature, Chinese culture, cosmology | Explosive, sublime | “Cai Guo-Qiang: Odyssey and Homecoming” at the Palace Museum, Beijing, 2019<br>“Cai Guo-Qiang: Falling Back to Earth” at the Queensland Art Gallery, 2013–2014 |
|  | Xu Bing | Language, identity, globalization | Thought-provoking, questioning | “Xu Bing: Tobacco Project” at the Virginia Museum of Fine Arts, 2011 |
|  | Yin Xiuzhen | Urbanization, memory, personal narratives | Reflective, nostalgic | “Yin Xiuzhen: Sky Patch” at the Ullens Center for Contemporary Art, 2014 |

| Modality Mixture Mode | Public Dataset | Authors | Modality and Feature Extraction | Classification Algorithm | Accuracy/% (Category/#) |
|---|---|---|---|---|---|
| Mix of behavioral modalities | eNTERFACE’05 | Nguyen et al. | Voice + face: three-dimensional convolutional neural network series | DBN | 90.85 (Avg./6) |
|  |  | Dobrisek et al. | Voice: openSMILE; face: subspace construction and matching | Support vector machine | 77.5 (Avg./6) |
|  |  | Zhang et al. | Voice: CNN; face: 3D-CNN | Support vector machine | 85.97 (Avg./6) |
| Mix of multiple neurophysiological modalities | DEAP | Koelstra et al. | EEG + PPS: Welch’s method | Naive Bayes | 58.6 (Valence/2) |
|  |  | Tang et al. | EEG: frequency-domain differential entropy; PPS: time-domain statistical features | Support vector machine | 83.8 (Valence/2)<br>83.2 (Arousal/2) |
|  |  | Yin et al. | EEG + PPS: stacked autoencoders | Ensemble SAE | 83 (Valence/2)<br>84.1 (Arousal) |
| Behavioral modality mixed with neurophysiological modality | MAHNOB-HCI | Soleymani et al. | EEG: frequency-domain power spectral density; eye movements: pupil power spectrum, blinking, and gaze isotemporal statistical features | Support vector machine | 76.4 (Valence/2)<br>68.5 (Arousal) |
|  |  | Huang et al. | EEG: wavelet transform to extract power spectrum; face: CNN deep feature extraction | SVM (EEG); CNN (expression) | 75.2 (Valence/2)<br>74.1 (Arousal) |
|  |  | Koelstra et al. | EEG: support vector machine recursive feature elimination; face: action unit mapping | Naive Bayes | 73 (Valence/2)<br>68.5 (Arousal) |
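
Two feature types in the table above, Welch power-spectrum estimates and frequency-domain differential entropy (DE), can be illustrated with a short standard-library sketch. The choices here are assumptions rather than the settings of the cited studies: a Hann window with 50% overlap and per-segment mean removal (mirroring the defaults of `scipy.signal.welch`, which would be the usual choice in practice), an 8–13 Hz alpha band, and the common Gaussian approximation DE = ½ ln(2πeσ²) with σ² taken as the band power. A naive DFT keeps the example dependency-free.

```python
import cmath
import math
from typing import List, Sequence, Tuple


def hann(n: int) -> List[float]:
    """Periodic Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def welch_psd(x: Sequence[float], fs: float, nperseg: int) -> Tuple[List[float], List[float]]:
    """One-sided power spectral density by Welch's method.

    Hann-windowed segments with 50% overlap, mean removed per segment, and the
    periodograms averaged. A naive O(n^2) DFT keeps the sketch dependency-free.
    """
    if nperseg < 2 or len(x) < nperseg:
        raise ValueError("need nperseg >= 2 and a signal at least one segment long")
    w = hann(nperseg)
    scale = fs * sum(v * v for v in w)  # density scaling, units^2/Hz
    n_bins = nperseg // 2 + 1
    psd = [0.0] * n_bins
    n_segments = 0
    for start in range(0, len(x) - nperseg + 1, nperseg // 2):
        seg = x[start:start + nperseg]
        mean = sum(seg) / nperseg
        tapered = [(s - mean) * wi for s, wi in zip(seg, w)]
        for k in range(n_bins):
            coef = sum(v * cmath.exp(-2j * math.pi * k * i / nperseg)
                       for i, v in enumerate(tapered))
            power = abs(coef) ** 2 / scale
            if 0 < k < nperseg / 2:
                power *= 2  # fold the mirrored negative-frequency bin into this one
            psd[k] += power
        n_segments += 1
    freqs = [k * fs / nperseg for k in range(n_bins)]
    return freqs, [p / n_segments for p in psd]


def band_power(freqs: Sequence[float], psd: Sequence[float], lo: float, hi: float) -> float:
    """Integrate the PSD over lo <= f < hi with the rectangle rule."""
    df = freqs[1] - freqs[0]
    return sum(p for f, p in zip(freqs, psd) if lo <= f < hi) * df


def differential_entropy(power: float) -> float:
    """DE of a band, assuming the band-limited signal is Gaussian with variance = power."""
    return 0.5 * math.log(2 * math.pi * math.e * power)


if __name__ == "__main__":
    fs = 128  # Hz, hypothetical sampling rate
    x = [2.0 * math.sin(2 * math.pi * 10 * n / fs) for n in range(4 * fs)]  # 10 Hz test tone
    freqs, psd = welch_psd(x, fs, nperseg=fs)
    alpha = band_power(freqs, psd, 8, 13)
    print(round(alpha, 6))  # 2.0: a sine of amplitude 2 has variance 2**2 / 2
    print(differential_entropy(alpha))
```

The synthetic 10 Hz tone is only a sanity check: its power lands in the alpha band and equals the signal variance. On real EEG, one such value per channel and band would form the feature vector passed to a classifier such as the SVMs listed in the table.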

| No. | Paper | Research Questions | Research Methodology | Research Dimension | Research Findings |
|---|---|---|---|---|---|
| 1 | Suhaimi et al. (2022) [70] | Can a low-cost wearable VR-EEG headset be used for emotion recognition? How accurately can the system predict emotional states in real time? | Machine-learning algorithm | Emotion recognition using VR-EEG signals | A system was developed that predicts a person’s emotional state in real time with high accuracy (83.6% on average). The system can adjust a virtual reality experience to the predicted emotional state by changing colors and textures in the environment. |
| 2 | Yu et al. (2022) [71] | What are the most effective EEG features for emotion recognition in an immersive VR environment? How do different machine-learning classifiers perform in classifying emotional states based on these features? | Machine-learning algorithm | Emotion recognition using VR-EEG signals | Both local brain activity and network features can effectively capture emotional information in EEG signals, but network features perform slightly better in classifying emotions. The proposed approach showed comparable or better results than existing studies on EEG-based emotion recognition in virtual reality. |
| 3 | Suhaimi et al. (2021) [72] | Can a real-time VR emotion prediction system using wearable BCI technology be developed? How accurately can the system predict the user’s emotional state from EEG signals? | Machine-learning algorithm | Emotion recognition using VR-BCI signals | A system was created that predicts a user’s emotional state in real time during virtual reality use, with an average accuracy of 83.6%. The system can adjust the virtual environment’s colors and textures to match the user’s emotional state. |
| 5 | Marín-Morales et al. (2018) [73] | How can wearable sensors be used to recognize emotions from brain and heartbeat dynamics in virtual reality environments? | Machine-learning approach | Brain and heartbeat dynamics | The model’s accuracy was 75.00% along the arousal dimension and 71.21% along the valence dimension. |
| 6 | Zheng, Zhu, and Lu (2017) [74] | How can stable patterns be identified over time for emotion recognition from EEG signals? | Empirical study | EEG signals, emotion recognition | Stable patterns can be identified over time for emotion recognition from EEG signals. |
| 7 | Wang et al. (2023) [75] | How can deep-learning techniques be applied to EEG emotion recognition, and what are the challenges and opportunities in this field? | Literature survey | EEG emotion recognition | A review of benchmark datasets for EEG emotion recognition and an analysis of deep-learning techniques. |
| 8 | Marín-Morales et al. (2020) [76] | How can emotions be recognized from brain and heartbeat dynamics using wearable sensors in immersive virtual reality? | Systematic review of emotion recognition research using physiological and behavioral measures | Wearable sensors in immersive virtual reality | Wearable sensors in immersive virtual reality are a promising approach for emotion recognition, and machine-learning techniques can classify emotions with high accuracy. |
| 9 | Ji and Dong (2022) [77] | How can self-induced emotions, especially those recorded while recalling specific memories or imagining emotional situations, be classified from EEG signals? | Deep-learning technology | Classification of emotions from EEG signals | Selecting key channels based on signal statistics can reduce computational complexity by 89% without decreasing classification accuracy. |
| 10 | Cai et al. (2021) [78] | How can machine learning extract feature vectors related to emotional states from EEG signals and build a classifier that separates emotions into discrete states? | Electroencephalography-based machine learning | Electroencephalography-based machine learning | Extracting emotion-related feature vectors from EEG signals with machine learning and constructing a classifier that separates emotions into discrete states has broad development prospects. |
| 11 | Khan, A. R. (2022) [79] | How can emotions be recognized from facial expressions using machine-learning or deep-learning techniques? | Literature review | Facial emotion recognition using machine-learning or deep-learning techniques | Deep-learning techniques have achieved better performance than conventional machine-learning techniques in facial emotion recognition. |
| 12 | Lozano-Hemmer, R. (2016) [80] | How can emotion recognition technology be used to create a collective emotional portrait of the audience? | Computer vision and custom software | Social engagement | Reading Emotions used computer vision to analyze visitors’ emotional responses and create a collective emotional portrait of the audience. |
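
Several studies above report accuracy separately along the valence and arousal dimensions, and the multimodal dataset table gives figures such as "(Valence/2)", meaning two classes. A minimal sketch of that reduction follows. It assumes 1–9 self-assessment ratings split at a midpoint of 5, which is a common convention but not necessarily the protocol of any cited study; the ratings and the classifier output in the example are hypothetical.

```python
from typing import List, Sequence

# Assumed: 1-9 self-assessment ratings split at the scale midpoint.
# Thresholds differ between studies; adjust to the protocol actually used.
THRESHOLD = 5.0


def binarize(ratings: Sequence[float], threshold: float = THRESHOLD) -> List[int]:
    """Label each rating 1 (high) if it exceeds the threshold, else 0 (low)."""
    return [1 if r > threshold else 0 for r in ratings]


def quadrant(valence: float, arousal: float, threshold: float = THRESHOLD) -> str:
    """Name the quadrant of the valence-arousal plane a rating pair falls in."""
    v = "high-valence" if valence > threshold else "low-valence"
    a = "high-arousal" if arousal > threshold else "low-arousal"
    return f"{v}/{a}"


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Percentage of predictions that match the reference labels."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("need two non-empty sequences of equal length")
    hits = sum(t == p for t, p in zip(y_true, y_pred))
    return 100.0 * hits / len(y_true)


if __name__ == "__main__":
    valence = [6.5, 3.0, 8.0, 4.5]
    arousal = [7.0, 6.0, 2.5, 3.0]
    y_true = binarize(valence)  # [1, 0, 1, 0]
    y_pred = [1, 1, 1, 0]       # hypothetical classifier output
    print(accuracy(y_true, y_pred))  # 75.0
    print([quadrant(v, a) for v, a in zip(valence, arousal)])
```

With two balanced classes, chance accuracy is about 50%, so two-class figures are best read against that baseline rather than against 0%. If one class dominates the ratings, always predicting the majority class already exceeds 50%, which is one reason per-class metrics are often reported alongside accuracy.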

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, X.; Ibrahim, Z. A Comprehensive Study of Emotional Responses in AI-Enhanced Interactive Installation Art. Sustainability 2023, 15, 15830. https://doi.org/10.3390/su152215830