Vector Autoregression Model-Based Forecasting of Reference Evapotranspiration in Malaysia

Abstract

1. Introduction

2. State of the Art

2.1. Penman–Monteith Empirical Formula

2.2. Machine Learning Model Performance

2.2.1. Artificial Neural Network (ANN) Performance

2.2.2. Extreme Learning Machine (ELM) Performance

2.2.3. Support Vector Machine (SVM) Performance

2.2.4. Gene Expression Programming (GEP) Performance

2.2.5. Autoregression (AR) Performance

2.2.6. Deep Learning Performance

2.2.7. Adaptive Neuro-Fuzzy Inference System (ANFIS)

2.2.8. Auto Encoder-Decoder Bidirectional LSTM

2.3. Critical Analysis

3. Preliminaries

3.1. Evapotranspiration

3.2. Embedded System

3.3. Forecast Results with Historical Data

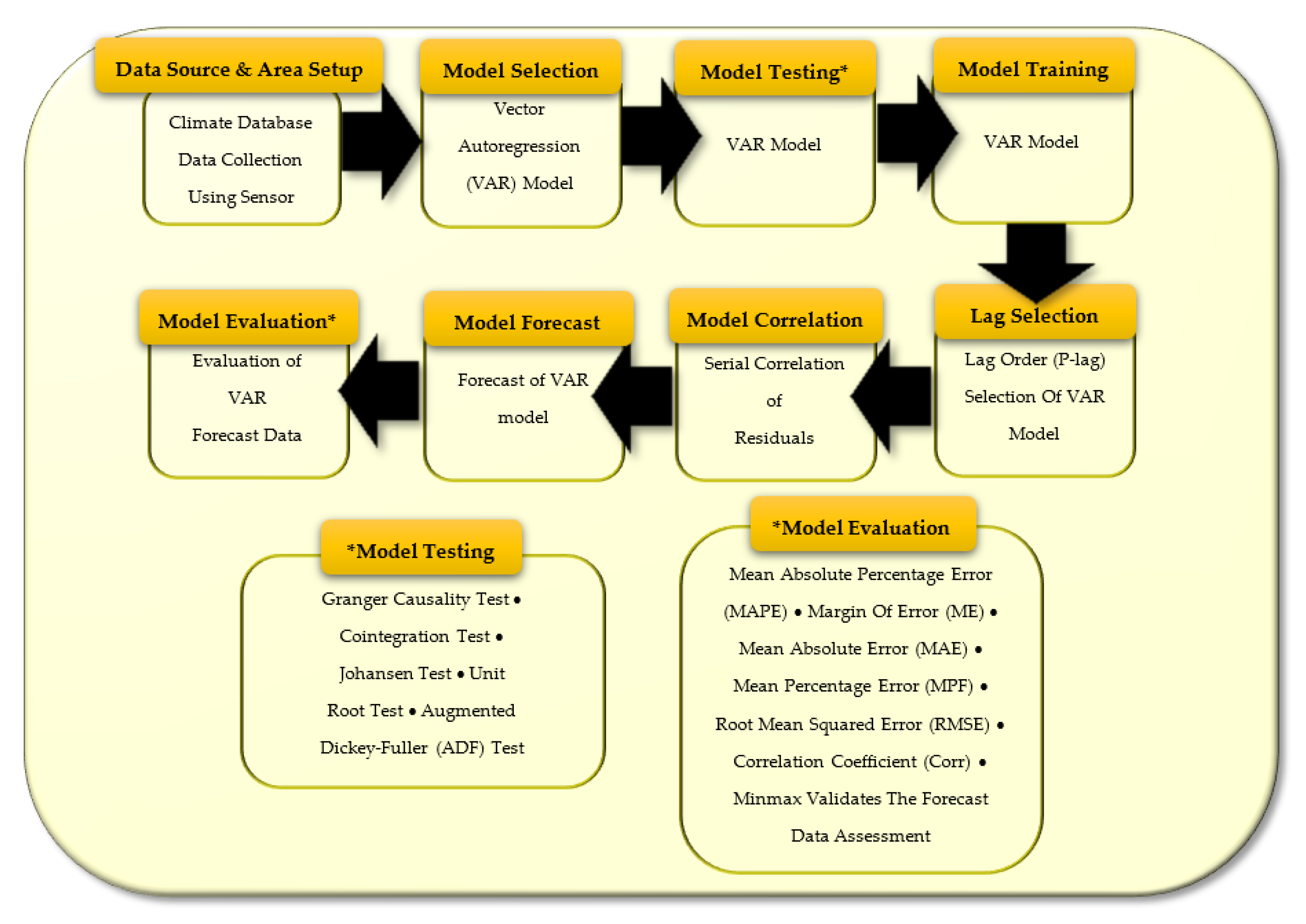

4. Materials and Methods

4.1. Climate Database and Study Area

4.2. Vector Autoregression (VAR) Model

4.3. Various Tests

- Granger causality test: This test determines whether the past values of one time series help forecast another. It is used to check whether the variables in the VAR model carry predictive information about one another, which supports including them in the model.

- Cointegration test: This test determines whether a long-term relationship exists between the non-stationary variables in the VAR model.

- Johansen test: This test determines the number of cointegrating relationships between the variables in the VAR model.

- Unit root test: This test determines whether the variables in the VAR model are stationary or non-stationary, which decides whether differencing is needed before the model is fitted.

- Augmented Dickey–Fuller (ADF) test: This test determines whether an individual time series has a unit root. It is used to confirm that each variable in the VAR model is stationary.

- Granger causality test: The null hypothesis in a Granger causality test is that the past values of one time series (X) carry no significant information for predicting the future values of another time series (Y) beyond what the past values of Y alone already provide. This can be stated mathematically as H0: x = 0, where x represents the coefficients of the lagged values of X in the forecasting equation for Y. Under the null hypothesis, all of these coefficients are equal to zero. Equivalently, H0 states that X does not Granger-cause Y. Rejecting the null hypothesis does not necessarily mean that there is a causal relationship between the two time series, only that the past values of one series contain additional information for predicting the future values of the other. The null hypothesis is rejected at a given significance level if the p-value is less than that level. For stationary time series, the test is applied directly to two or more variables. For non-stationary time series, the test is applied to the differenced series, with the number of lags chosen based on an information criterion such as the Akaike information criterion (AIC), Bayesian information criterion (BIC), Akaike’s final prediction error (FPE), or Hannan–Quinn information criterion (HQIC) [51]. At the 0.05 significance level, the null hypothesis of no Granger causality is retained if no lagged values of the explanatory variable remain significant in the regression.

- Cointegration Test: The cointegration test assesses whether there is a long-term statistical association between several time series. It applies to non-stationary time series, that is, series whose mean and variance vary over time, and allows long-term parameters or equilibrium relationships to be estimated for variables with unit roots. Two or more variables are cointegrated if a linear combination of them has a lower order of integration than the variables themselves. The integration order (d) is the number of differences needed to convert a non-stationary time series into a stationary one. The cointegration test underpins the specification of the vector autoregression (VAR) model. Several tests, including the Engle–Granger test, the Phillips–Ouliaris test, and the Johansen test, can be used to detect cointegration. The Johansen test was used in this study [52].

- Johansen Test: The Johansen test checks for cointegrating relationships among several non-stationary time series. It improves on the Engle–Granger test by allowing more than one cointegrating relationship. It removes the need to choose a dependent variable and avoids the problems caused by errors carried over from one estimation step to the next. As such, the test can identify several cointegrating vectors. Because the Johansen test relies on asymptotic (large-sample) properties, its results can be unreliable when the sample size is small. The Johansen test has two main forms: the trace test and the maximum eigenvalue test. The trace test evaluates the number of linear combinations (cointegrating relationships) in the time series data. Its null hypothesis is that the number of cointegrating relationships is zero; only if this null hypothesis is rejected can it be concluded that a cointegration relationship exists. The maximum eigenvalue test is based on the eigenvalues of the estimated system, where an eigenvalue is the scalar factor by which a non-zero vector (the eigenvector) changes when a linear transformation is applied to it. The maximum eigenvalue test is very similar to the trace test; the most significant difference between them is the null hypothesis [52]. The trace test is employed in this study.

- Unit root test: Unit root tests check a time series for stationarity. A time series is said to be stationary if a shift in time does not change the shape of its distribution. Unit roots are one cause of non-stationarity. If a time series has a unit root, it exhibits a systematic pattern that is unpredictable [53]. A non-stationary series is differenced once or several times until it becomes stationary, and the augmented Dickey–Fuller (ADF) test is used to check stationarity after each round of differencing. Each round of differencing shortens the time series by one observation. Because vector autoregression requires all time series to have the same length, the differencing is applied to all of the time series.

- Augmented Dickey–Fuller (ADF) Test: The augmented Dickey–Fuller (ADF) test is a statistical test of whether a given time series is stationary or non-stationary [54,55]. Its null hypothesis is that the series has a unit root, so a small p-value indicates stationarity. It is a standard tool in the stationarity analysis of a series, and it is an augmented version of the Dickey–Fuller test for larger and more complex time series models.
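The unit root check and the differencing step described above can be illustrated with a minimal sketch. It is not the authors' implementation: it uses the plain Dickey–Fuller regression (no lagged difference terms, so it is not the full ADF test), synthetic data, and an approximate large-sample 5% critical value of −2.86 for the regression with a constant. The function names `difference`, `dickey_fuller_stat`, and `integration_order` are hypothetical.

```python
import random
from statistics import mean

# Approximate large-sample 5% critical value for the Dickey-Fuller
# regression with a constant and no trend (an assumption of this sketch).
CRITICAL_5PCT = -2.86


def difference(series):
    """First difference; the result is one observation shorter than the input."""
    return [b - a for a, b in zip(series, series[1:])]


def dickey_fuller_stat(series):
    """t-statistic of gamma in dy_t = alpha + gamma * y_{t-1} + e_t."""
    y_lag = series[:-1]
    dy = difference(series)
    n = len(dy)
    x_bar, d_bar = mean(y_lag), mean(dy)
    sxx = sum((x - x_bar) ** 2 for x in y_lag)
    sxy = sum((x - x_bar) * (d - d_bar) for x, d in zip(y_lag, dy))
    gamma = sxy / sxx
    alpha = d_bar - gamma * x_bar
    rss = sum((d - alpha - gamma * x) ** 2 for x, d in zip(y_lag, dy))
    se_gamma = (rss / (n - 2) / sxx) ** 0.5
    return gamma / se_gamma


def integration_order(series, max_d=2):
    """Differences applied before the unit-root null is rejected (capped at max_d)."""
    d = 0
    while d < max_d and dickey_fuller_stat(series) >= CRITICAL_5PCT:
        series = difference(series)
        d += 1
    return d


# Synthetic data: white noise is stationary; its running sum is a random walk.
random.seed(0)
noise = [random.gauss(0, 1) for _ in range(300)]
walk = []
level = 0.0
for shock in noise:
    level += shock
    walk.append(level)

print("white noise statistic:", round(dickey_fuller_stat(noise), 2))
print("random walk statistic:", round(dickey_fuller_stat(walk), 2))
print("differences needed for the random walk:", integration_order(walk))
```

A statistic below the critical value rejects the unit-root null. In a multivariate setting, the same number of differences would be applied to every series so that all of them keep the same length, as the VAR model requires.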

4.4. Select Lag Order (P-Lag) of VAR Model

4.5. Training of the VAR Model

4.6. Serial Correlation of Residuals

4.7. Forecast of the VAR Model

4.8. Evaluation of VAR Forecast Data

5. Results and Discussion

5.1. Performance of the VAR Model

5.1.1. Status of Augmented Dickey–Fuller Test

5.1.2. Lag Order with Information Criteria AIC, BIC, FPE, and HQIC

5.1.3. Correlation Matrix of Residuals

5.2. Serial Correlation of Residuals (Errors) Using Durbin–Watson Statistic

5.3. Forecast Result for Climate Variable and Evapotranspiration

5.4. Evaluation of Forecast Results

5.5. Climate Data Acquired from DHT11 Sensor

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Kei, H.M. Department of Statistics Malaysia Press Release; Department of Statistics Malaysia: Putrajaya, Malaysia, 2018; pp. 5–9.

2. Mahidin, D. Department of Statistics Malaysia Press Release; Department of Statistics Malaysia: Putrajaya, Malaysia, 2019; pp. 5–9.

3. Shiri, J. Modeling reference evapotranspiration in island environments: Assessing the practical implications. J. Hydrol. 2019, 570, 265–280.

4. Fida, M.; Li, P.; Wang, Y.; Alam, S.; Nsabimana, A. Water contamination and human health risks in Pakistan: A review. Exp. Health 2022, 1–21.

5. Al-shareeda, M.A.; Anbar, M.; Manickam, S.; Hasbullah, I.H.; Abdullah, N.; Hamdi, M.M.; Al-Hiti, A.S. NE-CPPA: A new and efficient conditional privacy-preserving authentication scheme for vehicular ad hoc networks (VANETs). Appl. Math. 2020, 14, 1–10.

6. Abdullah, M.H.S.B.; Shahimi, S.; Arifin, A. Independent Smallholders’ Perceptions towards MSPO Certification in Sabah, Malaysia. J. Manaj. Hutan Trop. 2022, 28, 241.

7. Luo, W.; Chen, M.; Kang, Y.; Li, W.; Li, D.; Cui, Y.; Khan, S.; Luo, Y. Analysis of crop water requirements and irrigation demands for rice: Implications for increasing effective rainfall. Agric. Water Manag. 2022, 260, 107285.

8. Al-shareeda, M.M.A.; Anbar, M.; Alazzawi, M.A.; Manickam, S.; Hasbullah, I.H. Security schemes based conditional privacy-preserving in vehicular ad hoc networks. Indones. J. Electr. Eng. Comput. Sci. 2020, 21.

9. Segovia-Cardozo, D.A.; Franco, L.; Provenzano, G. Detecting crop water requirement indicators in irrigated agroecosystems from soil water content profiles: An application for a citrus orchard. Sci. Total. Environ. 2022, 806, 150492.

10. Al-Shareeda, M.A.; Manickam, S.; Laghari, S.A.; Jaisan, A. Replay-Attack Detection and Prevention Mechanism in Industry 4.0 Landscape for Secure SECS/GEM Communications. Sustainability 2022, 14, 15900.

11. Klt, K. Plant Growth and Yield as Affected by Wet Soil Conditions due to Flooding or Over-Irrigation; NebGuide: Lincoln City, OR, USA, 2004.

12. Sindane, J.T.; Modley, L.A.S. The impacts of poor water quality on the residential areas of Emfuleni local municipality: A case study of perceptions in the Rietspruit River catchment in South Africa. Urban Water J. 2022, 1–11.

13. Al-Shareeda, M.A.; Manickam, S.; Saare, M.A. DDoS attacks detection using machine learning and deep learning techniques: Analysis and comparison. Bull. Electr. Eng. Inform. 2023, 12, 930–939.

14. Kunkel, K.E.; Easterling, D.; Ballinger, A.; Bililign, S.; Champion, S.M.; Corbett, D.R.; Dello, K.D.; Dissen, J.; Lackmann, G.; Luettich, R., Jr.; et al. North Carolina Climate Science Report; North Carolina Institute for Climate Studies: Asheville, NC, USA, 2020; Volume 233, p. 236.

15. Al-Shareeda, M.A.; Manickam, S. COVID-19 Vehicle Based on an Efficient Mutual Authentication Scheme for 5G-Enabled Vehicular Fog Computing. Int. J. Environ. Res. Public Health 2022, 19, 15618.

16. Valiantzas, J.D. Simplified forms for the standardized FAO-56 Penman–Monteith reference evapotranspiration using limited weather data. J. Hydrol. 2013, 505, 13–23.

17. Muhammad, M.K.I.; Nashwan, M.S.; Shahid, S.; Ismail, T.B.; Song, Y.H.; Chung, E.S. Evaluation of empirical reference evapotranspiration models using compromise programming: A case study of Peninsular Malaysia. Sustainability 2019, 11, 4267.

18. Woli, P.; Paz, J.O. Evaluation of various methods for estimating global solar radiation in the southeastern United States. J. Appl. Meteorol. Climatol. 2012, 51, 972–985.

19. Exner-Kittridge, M.G.; Rains, M.C. Case study on the accuracy and cost/effectiveness in simulating reference evapotranspiration in West-Central Florida. J. Hydrol. Eng. 2010, 15, 696–703.

20. Paca, V.H.d.M.; Espinoza-Dávalos, G.E.; Hessels, T.M.; Moreira, D.M.; Comair, G.F.; Bastiaanssen, W.G. The spatial variability of actual evapotranspiration across the Amazon River Basin based on remote sensing products validated with flux towers. Ecol. Process. 2019, 8, 1–20.

21. Kumar, M.; Raghuwanshi, N.; Singh, R. Artificial neural networks approach in evapotranspiration modeling: A review. Irrig. Sci. 2011, 29, 11–25.

22. Wang, W.g.; Zou, S.; Luo, Z.h.; Zhang, W.; Chen, D.; Kong, J. Prediction of the reference evapotranspiration using a chaotic approach. Sci. World J. 2014, 2014, 347625.

23. Ferreira, L.B.; da Cunha, F.F.; de Oliveira, R.A.; Fernandes Filho, E.I. Estimation of reference evapotranspiration in Brazil with limited meteorological data using ANN and SVM–A new approach. J. Hydrol. 2019, 572, 556–570.

24. Yamaç, S.S.; Todorovic, M. Estimation of daily potato crop evapotranspiration using three different machine learning algorithms and four scenarios of available meteorological data. Agric. Water Manag. 2020, 228, 105875.

25. Feng, Y.; Peng, Y.; Cui, N.; Gong, D.; Zhang, K. Modeling reference evapotranspiration using extreme learning machine and generalized regression neural network only with temperature data. Comput. Electron. Agric. 2017, 136, 71–78.

26. Zhu, B.; Feng, Y.; Gong, D.; Jiang, S.; Zhao, L.; Cui, N. Hybrid particle swarm optimization with extreme learning machine for daily reference evapotranspiration prediction from limited climatic data. Comput. Electron. Agric. 2020, 173, 105430.

27. Granata, F. Evapotranspiration evaluation models based on machine learning algorithms—A comparative study. Agric. Water Manag. 2019, 217, 303–315.

28. Chen, Z.; Zhu, Z.; Jiang, H.; Sun, S. Estimating daily reference evapotranspiration based on limited meteorological data using deep learning and classical machine learning methods. J. Hydrol. 2020, 591, 125286.

29. Mohammadi, B.; Mehdizadeh, S. Modeling daily reference evapotranspiration via a novel approach based on support vector regression coupled with whale optimization algorithm. Agric. Water Manag. 2020, 237, 106145.

30. Mehdizadeh, S.; Behmanesh, J.; Khalili, K. Using MARS, SVM, GEP and empirical equations for estimation of monthly mean reference evapotranspiration. Comput. Electron. Agric. 2017, 139, 103–114.

31. Yassin, M.A.; Alazba, A.; Mattar, M.A. Artificial neural networks versus gene expression programming for estimating reference evapotranspiration in arid climate. Agric. Water Manag. 2016, 163, 110–124.

32. Wang, S.; Lian, J.; Peng, Y.; Hu, B.; Chen, H. Generalized reference evapotranspiration models with limited climatic data based on random forest and gene expression programming in Guangxi, China. Agric. Water Manag. 2019, 221, 220–230.

33. Abdallah, W.; Abdallah, N.; Marion, J.M.; Oueidat, M.; Chauvet, P. A vector autoregressive methodology for short-term weather forecasting: Tests for Lebanon. Appl. Sci. 2020, 2, 1555.

34. Bedi, J. Transfer learning augmented enhanced memory network models for reference evapotranspiration estimation. Knowl.-Based Syst. 2022, 237, 107717.

35. Aghelpour, P.; Norooz-Valashedi, R. Predicting daily reference evapotranspiration rates in a humid region, comparison of seven various data-based predictor models. Stoch. Environ. Res. Risk Assess. 2022, 36, 4133–4155.

36. Karbasi, M.; Jamei, M.; Ali, M.; Malik, A.; Yaseen, Z.M. Forecasting weekly reference evapotranspiration using Auto Encoder Decoder Bidirectional LSTM model hybridized with a Boruta-CatBoost input optimizer. Comput. Electron. Agric. 2022, 198, 107121.

37. Chen, Z.; Fiandrino, C.; Kantarci, B. On blockchain integration into mobile crowdsensing via smart embedded devices: A comprehensive survey. J. Syst. Archit. 2021, 115, 102011.

38. Li, Q.; Tan, D.; Ge, X.; Wang, H.; Li, Z.; Liu, J. Understanding security risks of embedded devices through fine-grained firmware fingerprinting. IEEE Trans. Dependable Secur. Comput. 2021, 19, 4099–4112.

39. Cox, S. Steps to make Raspberry Pi Supercomputer; University of Southampton: Southampton, UK, 2013.

40. Kapoor, P.; Barbhuiya, F.A. Cloud based weather station using IoT devices. In Proceedings of the 2019 IEEE Region 10 Conference (TENCON 2019), Kerala, India, 17–20 October 2019; pp. 2357–2362.

41. Alkandari, A.A.; Moein, S. Implementation of monitoring system for air quality using raspberry PI: Experimental study. Indones. J. Electr. Eng. Comput. Sci. 2018, 10, 43–49.

42. Pardeshi, V.; Sagar, S.; Murmurwar, S.; Hage, P. Health monitoring systems using IoT and Raspberry Pi—A review. In Proceedings of the 2017 International Conference on Innovative Mechanisms for Industry Applications (ICIMIA), Karnataka, India, 21–23 February 2017; pp. 134–137.

43. Mehdizadeh, S. Estimation of daily reference evapotranspiration (ET0) using artificial intelligence methods: Offering a new approach for lagged ET0 data-based modeling. J. Hydrol. 2018, 559, 794–812.

44. Alves, W.B.; Rolim, G.d.S.; Aparecido, L.E.d.O. Reference evapotranspiration forecasting by artificial neural networks. Eng. Agric. 2017, 37, 1116–1125.

45. Karbasi, M. Forecasting of multi-step ahead reference evapotranspiration using wavelet-Gaussian process regression model. Water Resour. Manag. 2018, 32, 1035–1052.

46. Landeras, G.; Ortiz-Barredo, A.; López, J.J. Forecasting weekly evapotranspiration with ARIMA and artificial neural network models. J. Irrig. Drain. Eng. 2009, 135, 323–334.

47. Broughton, G.; Janota, J.; Blaha, J.; Rouček, T.; Simon, M.; Vintr, T.; Yang, T.; Yan, Z.; Krajník, T. Embedding Weather Simulation in Auto-Labelling Pipelines Improves Vehicle Detection in Adverse Conditions. Sensors 2022, 22, 8855.

48. Stock, J.H.; Watson, M.W. Vector autoregressions. J. Econ. Perspect. 2001, 15, 101–115.

49. Zivot, E.; Wang, J. Vector autoregressive models for multivariate time series. In Modeling Financial Time Series with S-PLUS®; Springer: Berlin/Heidelberg, Germany, 2006; pp. 385–429.

50. Hyndman, R.; Athanasopoulos, G. Forecasting: Principles and Practice, 2nd ed.; OTexts: Melbourne, Australia, 2018.

51. Winker, P.; Maringer, D. Optimal lag structure selection in VEC-models. Contrib. Econ. Anal. 2004, 269, 213–234.

52. Maddala, G.S.; Kim, I.M. Unit Roots, Cointegration, and Structural Change; Cambridge University Press: Cambridge, UK, 1998.

53. Glen, S. Unit root: Simple definition, unit root tests. Statistics How To: Elementary Statistics for the Rest of Us. 2016. Available online: https://www.statisticshowto.com/unit-root/ (accessed on 2 February 2023).

54. Mushtaq, R. Augmented Dickey Fuller Test. 2011. Available online: https://ssrn.com/abstract=1911068 (accessed on 2 February 2023).

55. Paparoditis, E.; Politis, D.N. The asymptotic size and power of the augmented Dickey–Fuller test for a unit root. Econom. Rev. 2018, 37, 955–973.

56. Ozcicek, O.; Douglas Mcmillin, W. Lag length selection in vector autoregressive models: Symmetric and asymmetric lags. Appl. Econ. 1999, 31, 517–524.

57. Lange, A.; Dalheimer, B.; Herwartz, H.; Maxand, S. svars: An R package for data-driven identification in multivariate time series analysis. J. Stat. Softw. 2021, 97, 1–34.

58. Lütkepohl, H. New Introduction to Multiple Time Series Analysis; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2005.

59. Draper, N.R.; Smith, H. Serial correlation in the residuals and the Durbin–Watson test. In Applied Regression Analysis; Wiley: Hoboken, NJ, USA, 1998; pp. 179–203.

| Variable | 20 Years | 1 Year | 1 Year (1st Diff) | 2 Months | 2 Months (1st Diff) |
|---|---|---|---|---|---|
| Temp | 0 | 0.0004 | 0 | 0.0007 | 0 |
| Humd | 0 | 0.2608 | 0 | 0.0041 | 0.0001 |
| Wdspd | 0 | 0 | 0 | 0.0083 | 0.0001 |
| Sund | 0 | 0.0012 | 0 | 0 | 0 |
| Prsr | 0 | 0.0007 | 0 | 0.0002 | 0 |
| Et | 0 | 0.0006 | 0 | 0 | 0 |

| Lag Order | AIC | BIC | FPE | HQIC |
|---|---|---|---|---|
| 1 | 8.210 | 8.248 | 3640.524 | 8.217 |
| 2 | 7.803 | 7.892 | 2447.757 | 7.839 |
| 3 | 7.625 | 7.755 | 2049.741 | 7.671 |
| 4 | 7.543 | 7.714 | 1886.554 | 7.602 |
| 5 | 7.475 | 7.687 | 1764.001 | 7.549 |
| 6 | 7.423 | 7.676 | 1673.840 | 7.511 |
| 7 | 7.374 | 7.668 | 1593.902 | 7.476 |
| 8 | 7.333 | 7.669 | 1530.399 | 7.449 |
| 9 | 7.318 | 7.695 | 1507.648 | 7.449 |

| Lag Order | AIC | BIC | FPE | HQIC |
|---|---|---|---|---|
| 1 | 7.855 | 8.359 | 2578.308 | 8.056 |
| 2 | 7.395 | 8.333 | 1629.099 | 7.770 |
| 3 | 7.243 | 8.617 | 1399.368 | 7.792 |
| 4 | 7.167 | 8.980 | 1298.417 | 7.891 |
| 5 | 7.280 | 9.532 | 1457.304 | 8.181 |
| 6 | 7.312 | 10.007 | 1509.350 | 8.390 |
| 7 | 7.286 | 10.426 | 1476.551 | 8.542 |
| 8 | 7.233 | 10.819 | 1407.522 | 8.667 |
| 9 | 7.327 | 11.362 | 1557.474 | 8.941 |

| Lag Order | AIC | BIC | FPE | HQIC |
|---|---|---|---|---|
| 1 | 7.855 | 8.359 | 2578.308 | 8.057 |
| 2 | 7.395 | 8.333 | 1629.098 | 7.770 |
| 3 | 7.243 | 8.617 | 1399.369 | 7.792 |
| 4 | 7.167 | 8.979 | 1298.417 | 7.891 |
| 5 | 7.280 | 9.533 | 1457.304 | 8.181 |
| 6 | 7.312 | 10.007 | 1509.350 | 8.390 |
| 7 | 7.286 | 10.426 | 1476.551 | 8.542 |
| 8 | 7.232 | 10.819 | 1407.522 | 8.667 |
| 9 | 7.327 | 11.362 | 1557.474 | 8.941 |
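
Lag-order selection from a table like the one directly above amounts to picking, for each information criterion, the lag with the smallest value. The following is a minimal sketch of that step using the values copied from that table; the helper name `best_lag` is hypothetical, and the excerpt does not state which criterion the authors ultimately relied on.

```python
# Information-criterion values copied from the lag-order table directly above
# (lags 1 to 9, in order).
criteria = {
    "AIC": [7.855, 7.395, 7.243, 7.167, 7.280, 7.312, 7.286, 7.232, 7.327],
    "BIC": [8.359, 8.333, 8.617, 8.979, 9.533, 10.007, 10.426, 10.819, 11.362],
    "FPE": [2578.308, 1629.098, 1399.369, 1298.417, 1457.304,
            1509.350, 1476.551, 1407.522, 1557.474],
    "HQIC": [8.057, 7.770, 7.792, 7.891, 8.181, 8.390, 8.542, 8.667, 8.941],
}


def best_lag(values, first_lag=1):
    """Return the lag whose criterion value is smallest (first one on ties)."""
    index = min(range(len(values)), key=values.__getitem__)
    return first_lag + index


selected = {name: best_lag(values) for name, values in criteria.items()}
print(selected)  # {'AIC': 4, 'BIC': 2, 'FPE': 4, 'HQIC': 2}
```

For this table, AIC and FPE point to lag 4, while BIC and HQIC point to lag 2, which is consistent with BIC and HQIC penalizing additional parameters more heavily.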

| | temp | humd | wdspd | sund | prsr | et |
|---|---|---|---|---|---|---|
| temp | 1.000 | −0.770 | 0.065 | 0.486 | −0.227 | 0.697 |
| humd | −0.770 | 1.000 | −0.285 | −0.575 | 0.157 | −0.767 |
| wdspd | 0.065 | −0.285 | 1.000 | 0.275 | −0.0561 | 0.322 |
| sund | 0.486 | −0.574 | 0.275 | 1.000 | −0.119 | 0.739 |
| prsr | −0.227 | 0.157 | −0.0561 | −0.119 | 1.000 | −0.178 |
| Et | 0.698 | −0.767 | 0.322 | 0.734 | −0.178 | 1.00 |

| | temp | humd | wdspd | sund | prsr | et |
|---|---|---|---|---|---|---|
| temp | 1.000 | −0.904 | 0.261 | 0.778 | 0.142 | 0.830 |
| humd | −0.907 | 1.000 | −0.396 | −0.781 | −0.140 | −0.897 |
| wdspd | 0.261 | −0.396 | 1.000 | 0.172 | −0.041 | 0.269 |
| sund | 0.778 | −0.781 | 0.172 | 1.000 | 0.163 | 0.774 |
| prsr | 0.143 | −0.140 | −0.041 | 0.160 | 1.000 | 0.020 |
| Et | 0.830 | −0.897 | 0.269 | 0.770 | 0.022 | 1.000 |

| | temp | humd | wdspd | sund | prsr | et |
|---|---|---|---|---|---|---|
| temp | 1.000 | −0.815 | 0.071 | 0.574 | −0.312 | 0.750 |
| humd | −0.815 | 1.000 | −0.291 | −0.672 | 0.176 | −0.803 |
| wdspd | 0.071 | −0.291 | 1.000 | 0.232 | −0.017 | 0.304 |
| sund | 0.573 | −0.670 | 0.232 | 1.000 | −0.160 | 0.748 |
| prsr | −0.311 | 0.176 | −0.017 | −0.160 | 1.000 | −0.272 |
| Et | 0.760 | −0.804 | 0.304 | 0.748 | −0.272 | 1.000 |

| Variable/Year | 20-Years | 1-Year | 2-Months |
|---|---|---|---|
| temp | 2.02 | 1.99 | 2.2 |
| Humd | 2.02 | 1.98 | 2.21 |
| Wdspd | 2.03 | 2.02 | 2.49 |
| Sund | 2.03 | 2.02 | 1.96 |
| prsr | 2.0 | 2.02 | 1.55 |
| Et | 2.03 | 1.99 | 2.18 |
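
The values in the table directly above are Durbin–Watson statistics. As a minimal sketch of how such a statistic is computed from a residual series, the standard formula divides the sum of squared successive differences of the residuals by the sum of squared residuals. The function name `durbin_watson` and the toy residuals are illustrative assumptions, not the authors' code or data.

```python
import random


def durbin_watson(residuals):
    """Sum of squared successive differences divided by the sum of squares."""
    numerator = sum((b - a) ** 2 for a, b in zip(residuals, residuals[1:]))
    denominator = sum(e ** 2 for e in residuals)
    return numerator / denominator


# Toy residual series that show the range of the statistic.
alternating = [1.0, -1.0, 1.0, -1.0]   # strong negative serial correlation
constant = [1.0, 1.0, 1.0, 1.0]        # strong positive serial correlation

random.seed(1)
white_noise = [random.gauss(0, 1) for _ in range(2000)]  # no serial correlation

print(durbin_watson(alternating))  # 3.0
print(durbin_watson(constant))     # 0.0
print(round(durbin_watson(white_noise), 2))
```

The statistic lies between 0 and 4. Values near 2 indicate no first-order serial correlation, values toward 0 indicate positive correlation, and values toward 4 indicate negative correlation. Most entries in the table are close to 2; the 2-month values for prsr (1.55) and Wdspd (2.49) deviate the most.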

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hou, P.S.; Fadzil, L.M.; Manickam, S.; Al-Shareeda, M.A. Vector Autoregression Model-Based Forecasting of Reference Evapotranspiration in Malaysia. Sustainability 2023, 15, 3675. https://doi.org/10.3390/su15043675