1. Introduction

The revolutionary technique of confocal microscopy is considered one of the most useful optical imaging techniques in biotechnology. It uses point illumination and a spatial pinhole to reject out-of-focus signals [1]. A laser provides the excitation light, producing high-intensity fluorescence from the focal spot. Fluorescence confocal microscopy (FCM) is used to analyze in vitro and ex vivo samples in biotechnology laboratories worldwide. Its advantages include higher optical resolution and better contrast in live images of a sample, as well as the possibility of reconstructing 3D images. One particularly important use of FCM is demonstrating the intracellular co-localization of two endogenous proteins labeled with different colors [1,2].

FCM imaging is a valuable addition to biotechnology research, but analyzing the large number of images produced by each experiment remains challenging. Accurate segmentation of subcellular objects is essential in image analysis. For instance, quantifying and characterizing parameters associated with tiny organelles in the cell places very high accuracy requirements on image analysis if cellular phenotypes are to be interpreted correctly [3]. Likewise, images of cellular structures obtained with FCM require accurate detection to support accurate analysis [4,5]. FCM can accurately determine the concentration of protein adsorbed within a stationary-phase particle, provided that the protein is labeled with a fluorescent probe [6]. Fluorescent labeling of specific cellular structures is a revolutionary advance in cell imaging technology because it enables automated image acquisition of tiny subcellular objects [7,8].

With the growth of high-throughput imaging and the huge amount of data acquired in biotechnology laboratories, image analysis and interpretation have to move from visual inspection to more automated methods. Previously, an FCM-based assay involving transient expression in Nicotiana benthamiana was developed. A reporter protein labeled with Green Fluorescent Protein (GFP) was used to detect the silencing suppression activity of the viral protein P6 of cauliflower mosaic virus (CaMV) [9], although the assay can detect a wide range of silencing suppressor proteins from any pathogen. In that assay, the FCM images acquired in each test were analyzed with a simple visual method that relies on human observers to judge the spatial distribution of the green or red fluorescent protein. Such visual quantification with the naked eye is the standard approach to estimating the spatial distribution of fluorescence in confocal microscope images. Visual quantification of the spatial distribution of pathogen silencing suppressor function is remarkably reproducible [9] and provides fast, conventional measurements for individual images. Its drawback is that it becomes very time-consuming when the number of acquired images is large. Moreover, natural differences in visual perception between observers make the visual assessment less accurate. In this context, automated object classification and detection in images become critical.

Various image processing techniques have been used to improve biotechnology imaging. For example, autofluorescence from untargeted components contributes noise, so denoising steps are important for interpreting results accurately [10]. Several algorithms have already been proposed to address different problems in automated image analysis. These include the Feature Point Detection algorithm, which detects particles by percentile-based thresholding and discriminates non-particles [4]; the h-dome detection algorithm, which applies h-dome morphological filtering [11]; the Kernel Method algorithm, which estimates kernel density with a family of kernels [12]; the Sub-Pixel Localization algorithm, which fits Gaussian kernels to local intensity maxima [5]; the Local Comparison algorithm, which takes the maximum over direction-specific convolutions of the image [13]; the Morphometry algorithm [13]; the Top-Hat Filtering algorithm, which combines top-hat filtering with entropy-based thresholding [14]; the Multiscale Wavelets algorithm, which estimates wavelet coefficients and their multiscale product [15]; and the Source Extractor algorithm, which applies convolution to the background-clipped image [16]. However, most of these algorithms are unsuitable for accurately assessing the spatial distribution of a mix of fluorescent proteins in the same image because they suffer from major drawbacks, including false detection of noise that could affect the interpretation of the results.
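To make one of the families above concrete, the following is a minimal sketch of top-hat filtering followed by entropy-based thresholding, applied to a 1-D intensity profile for brevity. It is not the implementation of [14]; the function names, the flat window, and the Kapur-style maximum-entropy criterion are assumptions chosen for illustration.

```python
import math

def erode(signal, radius):
    # Grey-scale erosion with a flat window of width 2*radius+1 (a min filter).
    n = len(signal)
    return [min(signal[max(0, i - radius):i + radius + 1]) for i in range(n)]

def dilate(signal, radius):
    # Grey-scale dilation with the same flat window (a max filter).
    n = len(signal)
    return [max(signal[max(0, i - radius):i + radius + 1]) for i in range(n)]

def white_top_hat(signal, radius):
    # Signal minus its morphological opening: keeps bright features narrower
    # than the window and removes the slowly varying background.
    opened = dilate(erode(signal, radius), radius)
    return [s - o for s, o in zip(signal, opened)]

def max_entropy_threshold(values, levels=256):
    # Kapur-style threshold: choose t maximizing the summed entropies of the
    # normalized histograms below and above t. Values must be ints in [0, levels).
    hist = [0] * levels
    for v in values:
        hist[v] += 1
    p = [h / len(values) for h in hist]
    best_t, best_h = 0, -math.inf
    for t in range(levels - 1):
        w0, w1 = sum(p[:t + 1]), sum(p[t + 1:])
        if w0 == 0 or w1 == 0:
            continue
        h0 = -sum(q / w0 * math.log(q / w0) for q in p[:t + 1] if q > 0)
        h1 = -sum(q / w1 * math.log(q / w1) for q in p[t + 1:] if q > 0)
        if h0 + h1 > best_h:
            best_t, best_h = t, h0 + h1
    return best_t

# Hypothetical profile: flat background of 20 with two single-pixel spots.
profile = [20] * 6 + [220] + [20] * 6 + [180] + [20] * 6
residual = white_top_hat(profile, radius=2)
t = max_entropy_threshold(residual)
spots = [i for i, v in enumerate(residual) if v > t]
print(spots)
```

Because the opening can never exceed the original signal, the top-hat residual is non-negative, which keeps it a valid histogram index for the thresholding step.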

In this work, a novel image processing algorithm is presented that analyzes FCM images by assessing the spatial distribution of a mix of fluorescent proteins, in a sustainable way that reduces the time and resources required to analyze FCM images visually. This work also identifies a specific series of denoising steps for the images. The image processing steps used here to analyze the spatial distribution of protein aggregates in biological samples have not been reported previously. The dataset used in this work was obtained from our previous work [9], and the FCM images were captured at various time points.

3. Results

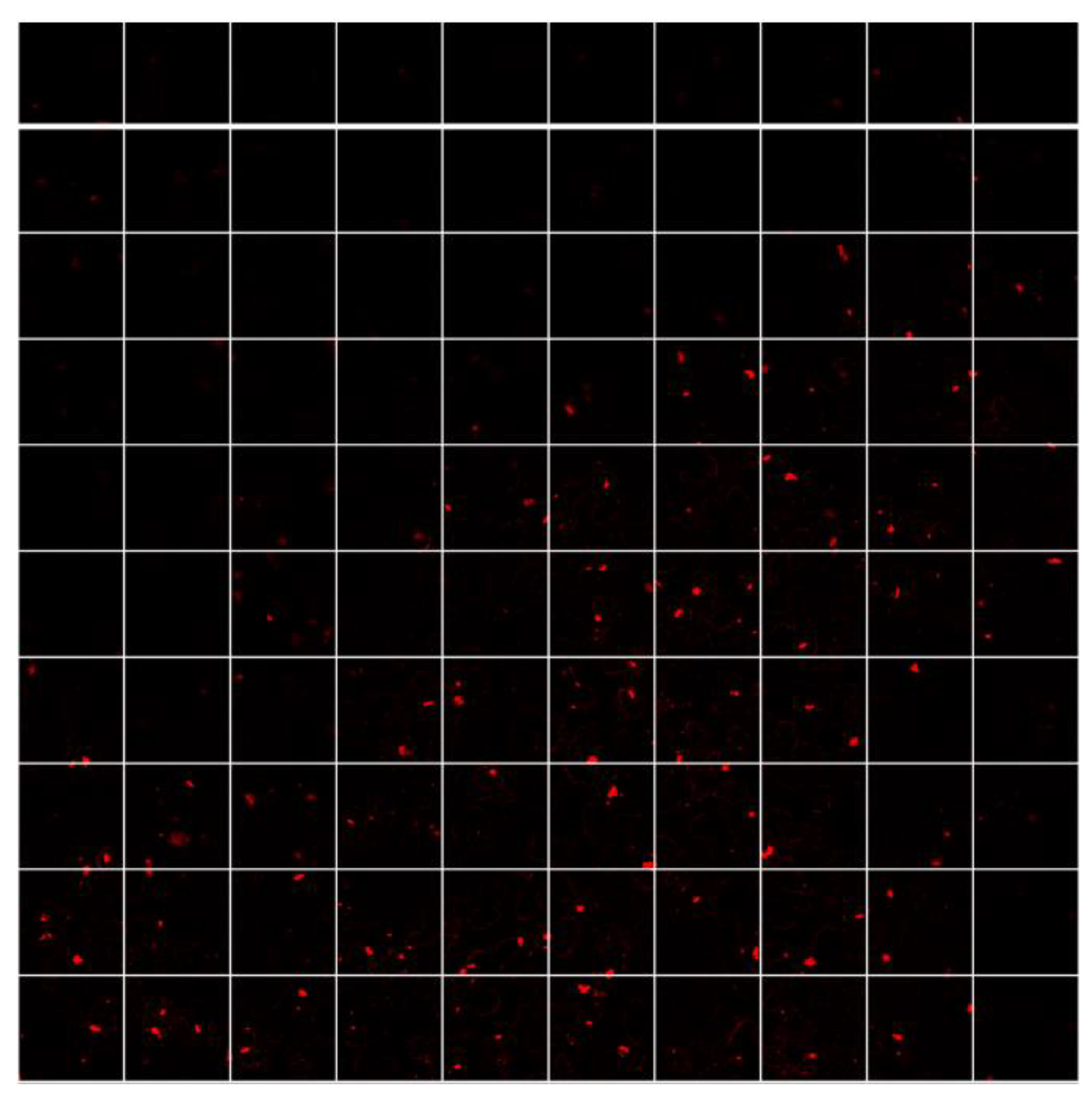

The intelligent machine spatial distribution quantification system is tested with data collected from 100 confocal microscope images of three types, as listed in Table 1.

For every image, the data collection provides three expert readings for comparison with the system's reading, together with the experts' opinion about the image type, which is used to train and test the intelligent selector. The raters assign each image to one of three classes: red-channel FCM (1), green-channel FCM (2), and actual FCM (3).

Table 2 shows samples of the data collection.

Four-fold cross-validation is used to train the adaptive neuro-fuzzy system, while 20% of the dataset is held out for an accurate system evaluation. The average of the raters' opinions about the image class is used as the label to train and test the intelligent selector. Hence, the adaptive neuro-fuzzy system architecture has a single output with integer values. The classes are labeled as follows: class (1) as 1, class (2) as 15, and class (3) as 30. The system is then trained on these integer values, and Figure 10 shows the training dataset together with the system output after training.
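The label encoding and data split described above can be sketched as follows. This is a minimal illustration, not the original implementation: rounding the averaged rater opinion to the nearest class, decoding the continuous output by snapping to the nearest integer target, and splitting the non-held-out indices into interleaved folds are all assumptions, and every function name is hypothetical.

```python
# Integer targets for the three image classes, as given in the text:
# class 1 (red-channel FCM) -> 1, class 2 (green-channel FCM) -> 15,
# class 3 (actual FCM) -> 30.
CLASS_TARGETS = {1: 1, 2: 15, 3: 30}

def label_from_raters(rater_classes):
    """Average the raters' class opinions and round to the nearest class
    (the rounding rule is an assumption; ties go to the lower class)."""
    mean = sum(rater_classes) / len(rater_classes)
    return min(CLASS_TARGETS, key=lambda c: abs(c - mean))

def encode(cls):
    """Class number -> integer training target for the single output."""
    return CLASS_TARGETS[cls]

def decode(output):
    """Continuous network output -> class whose target is closest
    (hypothetical decoding rule)."""
    return min(CLASS_TARGETS, key=lambda c: abs(CLASS_TARGETS[c] - output))

def holdout_and_folds(n_samples, holdout_fraction=0.2, k=4):
    """Reserve the last holdout_fraction of indices for evaluation and split
    the remaining indices into k interleaved folds (no shuffling)."""
    n_holdout = int(round(n_samples * holdout_fraction))
    train_idx = list(range(n_samples - n_holdout))
    test_idx = list(range(n_samples - n_holdout, n_samples))
    folds = [train_idx[i::k] for i in range(k)]
    return folds, test_idx

print(encode(label_from_raters([3, 3, 2])), decode(14.2))
```

Spacing the targets widely (1, 15, 30) leaves a large margin between neighboring classes, so a moderately inaccurate continuous output still decodes to the intended class under a nearest-target rule.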

The adaptive neuro-fuzzy system is then tested with 20 new samples that it has never seen, to obtain an unbiased assessment of its performance. The results in Figure 11 show that the system tracks the correct classes in the testing samples, indicating that it can also do so for new samples beyond the 100 collected ones.

The system's tracking ability is high enough to achieve high accuracy in the output fuzzy membership layer. As the confusion matrix in Figure 12 shows, the classification accuracy is 100% overall and 100% for each class. Accordingly, the intelligent selector correctly activated the suitable algorithm for every FCM test image.
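The overall and per-class accuracies read from a confusion matrix such as the one in Figure 12 can be computed as sketched below. This assumes that per-class accuracy means the fraction of each true class that is predicted correctly (row-normalized, i.e. recall); the function names and the example labels are hypothetical and are not the study's data.

```python
def confusion_matrix(true_labels, predicted_labels, classes=(1, 2, 3)):
    """Rows are true classes, columns are predicted classes."""
    index = {c: i for i, c in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for t, p in zip(true_labels, predicted_labels):
        matrix[index[t]][index[p]] += 1
    return matrix

def accuracies(matrix):
    """Overall accuracy (trace / total) and per-class accuracy
    (diagonal entry / row sum, NaN for a class with no samples)."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    per_class = [row[i] / sum(row) if sum(row) else float("nan")
                 for i, row in enumerate(matrix)]
    return correct / total, per_class

# Hypothetical labels with one misclassification (a class-1 image predicted as 2).
m = confusion_matrix([1, 1, 2, 3], [1, 2, 2, 3])
overall, per_class = accuracies(m)
print(m, overall, per_class)
```

A result of 100% overall and 100% for each class, as reported above, corresponds to a matrix whose off-diagonal entries are all zero.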

The intelligent machine spatial distribution quantification system is then tested with the collected dataset. For each FCM image, the system outputs the GFP and RFP percentages. The system outputs for the 100 FCM images are collected and compared with the average of the experts' readings to evaluate the novel system. The results show a high correlation between the system output and the experts' estimates obtained with the method published in [9], with an intraclass correlation coefficient (ICC) of 99% for RFP percentage estimation and 97% for GFP percentage estimation. The close agreement between the subjective (expert) and objective (system) evaluations of RFP and GFP can be seen clearly in Figure 13 and Figure 14, respectively.
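The text does not state which ICC form was used. The sketch below computes one common form, ICC(2,1) in the Shrout and Fleiss notation (two-way random effects, absolute agreement, single measures), assuming each image is one row holding one score per "rater", for example the system percentage and the averaged expert percentage. The function name `icc_2_1` is hypothetical.

```python
def icc_2_1(ratings):
    """ICC(2,1) from a two-way ANOVA decomposition.
    ratings: list of rows, one per image, each with k scores (one per rater)."""
    n = len(ratings)
    k = len(ratings[0])
    grand = sum(sum(r) for r in ratings) / (n * k)
    row_means = [sum(r) / k for r in ratings]
    col_means = [sum(r[j] for r in ratings) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # between images
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # between raters
    ss_total = sum((x - grand) ** 2 for r in ratings for x in r)
    ss_error = ss_total - ss_rows - ss_cols                   # residual
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )

# Hypothetical rows of [system %, mean expert %]; a constant offset between
# raters lowers absolute-agreement ICC even though the ranking is identical.
print(icc_2_1([[1, 2], [2, 3], [3, 4]]))
```

Because the absolute-agreement form penalizes systematic offsets between raters, it is a stricter check than a plain correlation, which would report perfect agreement for the offset example above.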

To study the efficiency of the three algorithms individually, each algorithm was tested on the dataset in Table 1. For the red-channel intelligent machine spatial distribution quantification algorithm, the correlation between the experts' ratings and the algorithm's output shows an ICC of 99% for RFP and GFP percentage estimation. The subjective and objective evaluations of RFP and GFP are highly correlated, as Figure 15 clearly shows.

For the green-channel intelligent machine spatial distribution quantification algorithm, the correlation between the experts' ratings and the algorithm's output also shows an ICC of 99% for RFP and GFP percentage estimation, and the subjective and objective evaluations are highly correlated, as Figure 16 clearly shows.

The third essential part is the actual image intelligent machine spatial distribution quantification algorithm (AIMSDQA). For this algorithm, the correlation between the experts' ratings and the algorithm's output shows an ICC of 94% for RFP and GFP percentage estimation, so the subjective and objective evaluations of RFP and GFP are reasonably correlated, as can be inferred from Figure 17.

In most cases, AIMSDQA gives higher values than the raters' estimates. Further investigation of this difference showed that a rater's naked eye can miss details that the algorithm finds easily, as illustrated in Figure 18. This happens when the actual image contains yellow spots, where red and green signals occur at the same place, indicating co-localization of two proteins in the same spot. Figure 18 shows the output images used for counting in the RFP and GFP ratio estimation.
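How co-localized (yellow) pixels can enter both percentages is illustrated below. This is a hypothetical pixel-counting rule, not the AIMSDQA procedure: the fixed intensity threshold, counting a pixel as positive when its channel value exceeds it, and normalizing by all fluorescent pixels are assumptions, and `channel_percentages` is an illustrative name.

```python
def channel_percentages(red, green, threshold=50):
    """Hypothetical rule: a pixel is RFP-positive if red > threshold,
    GFP-positive if green > threshold, and co-localized ("yellow") if both.
    Percentages are relative to pixels positive in at least one channel."""
    red_only = green_only = both = 0
    for r_row, g_row in zip(red, green):
        for r, g in zip(r_row, g_row):
            r_on, g_on = r > threshold, g > threshold
            if r_on and g_on:
                both += 1
            elif r_on:
                red_only += 1
            elif g_on:
                green_only += 1
    fluorescent = red_only + green_only + both
    if fluorescent == 0:
        return {"rfp": 0.0, "gfp": 0.0, "coloc": 0.0}
    return {
        "rfp": 100.0 * (red_only + both) / fluorescent,
        "gfp": 100.0 * (green_only + both) / fluorescent,
        "coloc": 100.0 * both / fluorescent,
    }

# 2x2 toy image: red-only, green-only, yellow, and background pixels.
print(channel_percentages([[200, 0], [200, 0]], [[0, 200], [200, 0]]))
```

Under this rule a yellow pixel contributes to both the RFP and the GFP percentage, so the two values can sum to more than 100%.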

4. Discussion

The sample images were acquired with FCM from various experiments and at several time points, and more than a hundred different images were processed by the image processing algorithm. Previously, protein expression was assessed using fSPT, CLSM, AUC with FDS, and FCM, despite the drawbacks associated with these methods [20,21,22,23,24,25]. Common issues with such methods include limits on fluorescent particle size, the need to optimize operating conditions, the need for particle standards, dilution of the sample before measurement, and even changes in the results caused by centrifugation [21,24,26]. The proposed approach requires no such preparations, so it avoids the issues they can introduce into the measurements.

The difficulty of automatically processing each confocal microscope image with a suitable algorithm motivated the design of an accurate intelligent selector capable of making this choice. As a result, the intelligent selector was designed to process raw confocal microscope images imported directly from the microscope, without prior knowledge of the user's parameter settings. The intelligent selector is based on a novel multiclass adaptive neuro-fuzzy classifier (MANFC), designed using the collected dataset in Table 1. The selector was tested with fresh samples to determine its realistic accuracy, and the test confusion matrix in Figure 12, with 100% accuracy, confirms its high performance. The selector can therefore be trusted to activate the appropriate processing algorithm for extracting the spatial distribution of red and green colors as a percentage.

Intraclass correlation coefficients were used to make an objective comparison, giving an extensive understanding of the correlation between the AIMSDQA findings and the experts' readings. Furthermore, the calculations for individual windows can be run in parallel, while the related sampling and value-range settings remain independent of these calculations.

To enable an objective comparison, intraclass correlation coefficients were used to examine in depth the correlation between the experts' readings (subjective estimation) and the IMSDQA readings (objective estimation) of the RFP and GFP ratios. The intelligent machine spatial distribution quantification system is shown to be highly correlated with subjective estimation, with an ICC of 99% for RFP percentage estimation and 97% for GFP percentage estimation. Hence, the hypothesis that the system reading can be accepted is supported. The reason for the higher correlation in RFP estimation than in GFP estimation becomes clear when Figure 13 is compared with Figure 14. In Figure 13, the RFP data points cluster around 0 and 90, whereas in Figure 14, the GFP data points are spread along the correlation line. Because the ICC compares the variance between images with the disagreement between raters, widely separated clusters increase the between-image variance and therefore tend to raise the ICC. The proposed algorithm is built from three processing algorithms that produce the RFP and GFP percentage estimates, as shown in Figure 3, so testing each of these units separately is important to reveal any individual drawbacks. The red-channel and green-channel intelligent machine spatial distribution quantification algorithms both reached an ICC of 99%, which is confirmed by the tracking and correlation analyses in Figure 15 and Figure 16: both processing units stay close to the subjective estimates and to the correlation line. The actual image intelligent machine spatial distribution quantification algorithm achieves an ICC of 94%, so the hypothesis is only marginally accepted. Figure 17 explains this: it shows more dispersion around the correlation line, although the tracking ability remains adequate. Therefore, a further investigation was performed (Figure 18) into the possibility that the grey background in live FCM images leads to inaccurate quantification for specific samples.

Lastly, the proposed algorithm provides an easier and faster way to quantify and analyze FCM images, with high accuracy compared with the traditional analysis method. Furthermore, it requires neither special sample preparation nor extensive optimization of instrument settings. Apart from analyzing the spatial distribution of fluorescence in the samples, the proposed algorithm combined with fluorescence microscopy can also be used to analyze visible and sub-visible aggregates. The methodology offers significant advantages over other common approaches. Fluorescent particles of 1 µm and below cannot be distinguished by a simple visual method because of the limits of human vision, but they can be detected efficiently with the proposed method without complex sample preparation. Moreover, whereas green or red signal stands out against a black background, it is difficult for human vision to distinguish it from a grey background (actual image), and the grey background in live FCM images may lead to inaccurate quantification for particular samples. Fluorescence microscopy analysis avoids false detection of dust, air bubbles, and non-proteinaceous particles that could otherwise compromise the study [27]. Overall, the proposed algorithm can successfully denoise fluorescence microscope images and can be used as a cheap, sustainable technique complementary to traditional approaches. The results of this work thus show a promising path toward quantifying and analyzing FCM images sustainably and quickly. Every effort should be made to build sustainable biotechnology laboratories [28,29,30], and the current results add to the knowledge of sustainable biotechnology by providing a methodology that saves time and labor in the laboratory.

4.1. Sustainability Evaluation of the Proposed Technique

In general, algorithm design can contribute to sustainability in a number of ways. This work focuses on an algorithm for analyzing confocal microscope images. Image processing algorithms are designed to perform tasks such as image enhancement, object recognition, and feature extraction automatically. In many cases, they process images faster and more accurately than humans, and they are particularly useful for repetitive or time-consuming image analysis. However, algorithms cannot replace human intuition, creativity, and judgment in all cases. In some situations, human input may be required to validate the results of image processing algorithms or to make decisions based on them. For this reason, three experts evaluated the results for the obtained images (Table 2).

4.2. Sustainability with Regard to Time-Saving

Algorithms can save time in image processing by automating many tasks that would otherwise require human effort. For example, algorithms can quickly analyze a large number of images and identify patterns or features that may be difficult for a human to detect, such as edges and lines, objects, shapes, and textures. Algorithms can also perform complex image manipulations, such as adjusting brightness and contrast, enhancing color or texture, and removing noise, much more efficiently than a human could. By automating these tasks, algorithms can significantly reduce the time required for image processing, freeing up time for more creative or higher-level tasks. The proposed intelligent machine spatial distribution quantification system takes an average of 0.1 s to process one raw confocal microscope image and produce its objective evaluation. The experiments were run on a 64-bit Windows 10 machine with a 2.50 GHz Intel Core i5 CPU and 8 GB of RAM. This is much faster than an expert, who needs at least 3 min to evaluate the same image. Moreover, for large amounts of data, a human typically works in the laboratory 8 h per day, whereas a machine can run for longer, in some cases up to 24 h per day.
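The timing figures above can be turned into a rough throughput comparison. This back-of-the-envelope sketch assumes that the 3 min expert figure applies per image, that 0.1 s covers only computation (not image acquisition or file handling), and that the 8 h and 24 h working days are fully used; the variable and function names are illustrative. Integer milliseconds are used to keep the arithmetic exact.

```python
MACHINE_MS_PER_IMAGE = 100         # reported average: 0.1 s per image
EXPERT_MS_PER_IMAGE = 3 * 60_000   # reported lower bound: at least 3 min per image

def images_per_day(ms_per_image, hours_per_day):
    # Whole images that fit in the working day.
    return hours_per_day * 3_600_000 // ms_per_image

machine_daily = images_per_day(MACHINE_MS_PER_IMAGE, 24)  # upper bound for a 24 h run
expert_daily = images_per_day(EXPERT_MS_PER_IMAGE, 8)     # upper bound, since 3 min is a minimum
speedup = EXPERT_MS_PER_IMAGE // MACHINE_MS_PER_IMAGE     # per-image ratio

# The 100-image dataset: machine time versus time for a single expert.
dataset_machine_s = 100 * MACHINE_MS_PER_IMAGE / 1000
dataset_expert_min = 100 * EXPERT_MS_PER_IMAGE / 60_000

print(machine_daily, expert_daily, speedup, dataset_machine_s, dataset_expert_min)
```

Under these assumptions, one expert needs at least 300 min to rate the 100-image dataset, and the three-expert protocol used here multiplies that figure by three, against roughly 10 s of computation.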

4.3. Sustainability in Labor

The proposed algorithm helps save human effort in biotechnology laboratories by automating image processing and streamlining many tasks that would otherwise be done manually. These include image recognition, object detection, segmentation, and classification, which are repetitive for humans and require more than one expert to check and validate the results. With algorithms, these tasks can be completed much faster and with greater accuracy and consistency, freeing human resources to focus on more strategic and creative tasks in the laboratory. In addition, algorithms can analyze vast amounts of data and perform complex calculations, allowing them to support decisions and insights beyond the capacity of human effort alone. Human performance must also be considered: the accuracy of an expert's analysis may vary with the time at which it is done, and if the expert is ill or otherwise unable to perform at their best, the image evaluation will be less accurate than usual.

5. Conclusions

Firstly, and most importantly, the field of biotechnology lacks image processing algorithms to analyze the large and continuously growing output of FCM. To the best of our knowledge, many published studies lack a methodology sufficient to ensure accurate fluorescence quantification and protein co-localization results. This work proposed an algorithm that quantifies the spatial distribution of fluorescence in FCM images and detects the co-localization of GFP/RFP-labeled proteins, with high accuracy in detecting GFP or RFP separately on a black or grey (live image) background. A dataset previously quantified with a visual quantification technique was used to train the proposed algorithm to classify fluorescence severity and to determine whether proteins occur alone or co-localized with other proteins.

The main contribution of this work is the identification of protein fluorescence against different image backgrounds and the discrimination of different object shapes. Additionally, the proposed method can bring more clarity to interpreting and comparing results, enabling biologists to analyze a large number of FCM images in a short time. These contributions can support further research by giving biologists a tool for evaluating samples that goes beyond simply detecting a single or co-localized protein.

The main limitation of this study is the number of samples in the dataset, which could be addressed by collecting and subjectively evaluating more actual FCM images. In future work, the estimation ability of the actual image intelligent machine spatial distribution quantification algorithm will be improved.