Emotional Value in Online Education: A Framework for Service Touchpoint Assessment

Abstract

1. Introduction

- System analysis is largely concerned with the interface design and functional modules of online education platforms, including evaluations of a platform's interaction experience and research on its virtual space. Nian et al. [10] analyzed students' emotional states to design a new mobile education platform and investigated how mobile online education platforms foster students' independent learning. Using questionnaires, Hu et al. [11] investigated the factors that influence students' engagement in peer-assisted English learning (PAEL) on online education platforms and evaluated students' online learning process from two perspectives: surface participation and deep participation. They concluded that the platform suffers from poorly structured knowledge, poor interaction quality, weak interaction intensity, and an inactive collaborative atmosphere. Amara et al. [12] combined augmented reality and virtual reality technologies to construct a 3D interactive e-learning platform whose dynamic interactive experience merges real and virtual worlds and helps boost students' engagement with and enjoyment of online learning. Using questionnaire surveys of industrial design students, Liu et al. [13] examined users' potential needs for the online scene experience, combining text and image information, and focused on the differences between online education platforms and offline education spaces in order to improve users' enjoyment of online platforms.

- User research focuses mainly on analyzing user behavior and needs and is more flexible than system analysis. Commonly used theoretical lenses include flow theory, distributed cognition, gamification design, and service co-creation. Building on the DeLone and McLean IS success model and IS process theory, Li et al. [14] took MOOCs as the research object, constructed a model for optimizing system characteristics and process experience, and confirmed that flow experience and learning effects positively influence continuance intention. Han et al. [15] drew on flow theory to enhance online learners' concentration and immersion: they extracted nine factors from flow theory, including challenge, reaction speed, sense of control, and clear objectives, and used a structural equation model to examine how these factors affect user experience in online education. Yan et al. [16] introduced gamification theory to online education, focusing on how differences in learning style may affect learners' behavioral intentions, and added "perceived learning tasks" as an external variable to the theoretical framework. Taking the CAID experimental course as the research object, Zhang et al. [17] optimized the online education user experience based on service co-creation theory, built an online flipped-classroom model according to the peak-end rule, and suggested ways to enhance the online education service experience.

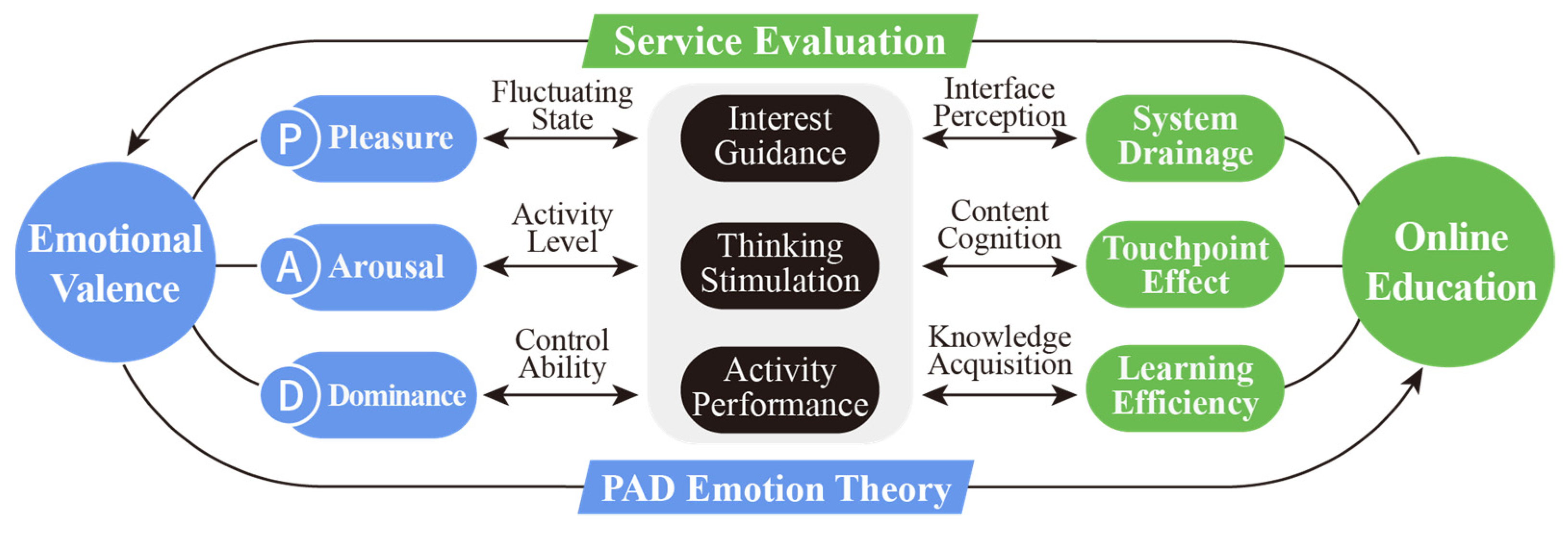

2. Research Ideas and Framework

2.1. Theoretical Basis

2.2. PADSI Five-Dimensional Evaluation Model Construction

2.3. Online Education Service Evaluation Process Design

- Construction of the evaluation index system. We divide the online education service into phases through system deconstruction and user behavior tracking, and decompose user behavior within each phase. Based on this behavior, we collect the service touchpoints of the target system and generate an interactive touchpoint matrix. The collected touchpoints are then grouped by cluster analysis and converted into service evaluation indicators, and expert analysis is used to refine these indicators into a reasonable evaluation index system.

- Process of the service evaluation experiment. A group of users of an online education platform is recruited to carry out an emotional valence analysis and a perceptual cognition study of the online education system. On the one hand, an emotional assessment questionnaire is constructed by combining the SAM scale with the evaluation index system, and the emotional valence of service users is determined through the questionnaire survey. On the other hand, the same group of participants rates the satisfaction and importance of each touchpoint using a rating scale and the analytic hierarchy process, which yields a deeper perceptual cognition. The perceptual cognition outcomes for the online education system's services are thus derived from two factors: user demands and user expectations.

- Evaluation results and optimization strategies. We integrate the evaluation data and visualize the outcomes. Using the collected data, we then analyze the corresponding service touchpoints in detail. Single-factor analysis, comparative evaluation, and inductive analysis are used to explore the relationships among user emotional valence, satisfaction, and importance, from which we propose iterative strategies and optimization directions for the current online education system to enhance the learning experience and efficiency.
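As a hedged illustration of the data-integration step, the sketch below shows one way to hold the three kinds of measurements gathered for each touchpoint in a single record and to reject values outside the ranges given in the experimental design table (1–9 for the SAM-based PAD ratings, 1–5 for satisfaction, 0–1 for AHP importance). The record type `TouchpointRecord`, its field names, and the helper `check_range` are hypothetical; the paper does not describe how its data were stored or validated.

```python
from dataclasses import dataclass

# Ranges from the experimental design table: PAD ratings use the 1-9 SAM
# scale, satisfaction a 1-5 scale, and AHP importance weights lie in 0-1.
PAD_RANGE = (1.0, 9.0)
SATISFACTION_RANGE = (1.0, 5.0)
IMPORTANCE_RANGE = (0.0, 1.0)


def check_range(name: str, value: float, bounds: tuple) -> None:
    """Raise ValueError if value lies outside the closed interval bounds."""
    low, high = bounds
    if not low <= value <= high:
        raise ValueError(f"{name}={value} is outside [{low}, {high}]")


@dataclass(frozen=True)
class TouchpointRecord:
    """Hypothetical integrated record for one service touchpoint."""

    code: str   # e.g. "T5"
    index: str  # e.g. "Course Videos"
    pleasure: float
    arousal: float
    dominance: float
    satisfaction: float
    importance: float

    def __post_init__(self) -> None:
        # Validate every measurement against the range of its instrument.
        for name in ("pleasure", "arousal", "dominance"):
            check_range(name, getattr(self, name), PAD_RANGE)
        check_range("satisfaction", self.satisfaction, SATISFACTION_RANGE)
        check_range("importance", self.importance, IMPORTANCE_RANGE)


# Values for T5 Course Videos, taken from the integrated results table.
t5 = TouchpointRecord("T5", "Course Videos", 7.24, 6.86, 7.05, 3.43, 0.2966)
```

Validating in `__post_init__` means a mistyped rating (for example a satisfaction score entered on the 1–9 scale) fails as soon as the record is built rather than silently distorting later comparisons.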

3. Online Education Service Evaluation System Construction

3.1. Interaction Contact Matrix Output and Cluster Analysis

3.2. Service Evaluation Index Transformation and System Establishment

4. Online Education Service Evaluation Experiment

4.1. Evaluation of Experimental Design

4.2. Dimensions of Emotional Valence

- Design of emotional evaluation questionnaire. To enhance the readability and effectiveness of the questionnaire, the Self-Assessment Manikin (SAM) pictorial scale was introduced to evaluate user emotions. The SAM scale depicts the pleasure, activation (arousal), and dominance of the user's emotions with images and focuses on the subject's own feeling and perception of emotional reactions, helping subjects evaluate their emotions more quickly and intuitively, as depicted in Figure 5.

- Emotional evaluation result output. The evaluation indicators of the online education platform were combined with the SAM scale to generate a 1–9 emotional evaluation questionnaire in which higher scores indicate higher emotional valence. The emotional response to each indicator was tested using a task-based method; a sample of the task scale is shown in Figure 6, and the results are shown in Table 3.
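The per-indicator pleasure, arousal, and dominance values in Table 3 are presumably averages of the individual 1–9 SAM ratings, although the text does not state how responses were aggregated. Under that assumption, a minimal sketch of the aggregation step follows; the response format and the `sample` data are hypothetical and are not the study's data.

```python
from statistics import mean

# Hypothetical raw responses: touchpoint code -> one (pleasure, arousal,
# dominance) tuple per participant, each rated on the 1-9 SAM scale.
Responses = dict[str, list[tuple[int, int, int]]]


def mean_pad(responses: Responses) -> dict[str, tuple[float, float, float]]:
    """Average each PAD dimension per touchpoint, rounded to two decimals."""
    summary = {}
    for code, ratings in responses.items():
        if not ratings:
            raise ValueError(f"{code}: no ratings")
        for rating in ratings:
            if not all(1 <= x <= 9 for x in rating):
                raise ValueError(f"{code}: rating {rating} outside 1-9")
        # zip(*ratings) regroups the tuples into pleasure, arousal, dominance columns.
        summary[code] = tuple(round(float(mean(column)), 2) for column in zip(*ratings))
    return summary


# Hypothetical ratings from a handful of participants.
sample = {
    "T1": [(6, 5, 7), (7, 5, 8), (5, 5, 6)],
    "T2": [(5, 6, 7), (6, 6, 6)],
}
print(mean_pad(sample))
```

Because higher SAM scores mean stronger pleasure, arousal, or dominance, a plain arithmetic mean per dimension keeps the direction of the scale intact.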

4.3. Dimensions of Perceptual Cognition

- Evaluation of service touchpoints’ satisfaction


- Evaluation of service touchpoints' importance
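Importance is derived with the analytic hierarchy process, but the text does not say whether weights were computed with the principal-eigenvector or the geometric-mean method. The sketch below assumes the eigenvector method (approximated by power iteration) and reports Saaty's consistency ratio; the 3×3 `judgments` matrix is hypothetical. In a two-level hierarchy like this one, a touchpoint's global importance would be its guideline's weight multiplied by its local weight, which is consistent with the importance values in the results tables summing to roughly 1.

```python
# Saaty's random consistency index (RI) for matrix orders 1-10.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}


def mat_vec(matrix: list[list[float]], vector: list[float]) -> list[float]:
    """Multiply a square matrix by a vector."""
    return [sum(a * v for a, v in zip(row, vector)) for row in matrix]


def ahp_weights(matrix, iterations=1000, tol=1e-12):
    """Principal-eigenvector weights and consistency ratio of a pairwise matrix."""
    n = len(matrix)
    weights = [1.0 / n] * n
    for _ in range(iterations):
        # Power iteration: multiply, then renormalize so the weights sum to 1.
        product = mat_vec(matrix, weights)
        total = sum(product)
        updated = [x / total for x in product]
        converged = max(abs(u - w) for u, w in zip(updated, weights)) < tol
        weights = updated
        if converged:
            break
    # lambda_max is estimated as the mean of (A w)_i / w_i.
    product = mat_vec(matrix, weights)
    lambda_max = sum(p / w for p, w in zip(product, weights)) / n
    ci = (lambda_max - n) / (n - 1) if n > 1 else 0.0
    ri = RANDOM_INDEX[n]
    cr = ci / ri if ri > 0 else 0.0
    return weights, cr


# Hypothetical judgments for three touchpoints under one guideline
# (Saaty 1-9 scale; entry [i][j] says how much more important i is than j).
judgments = [
    [1.0, 3.0, 5.0],
    [1 / 3, 1.0, 2.0],
    [1 / 5, 1 / 2, 1.0],
]
local, cr = ahp_weights(judgments)
print([round(w, 3) for w in local], round(cr, 3))
```

By Saaty's convention, a consistency ratio below 0.1 is usually treated as acceptable; a larger value suggests the pairwise judgments should be revisited before the weights are used.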

5. Evaluation Results and Optimization Strategies

5.1. Service Evaluation Data Integration

5.2. Visual Output of Evaluation Results

5.3. Results Analysis and Optimization Suggestions

- Across the online education service process, the mean values of user pleasure, activation, and dominance broadly follow the same trend as satisfaction, indicating a positive correlation. This suggests that user satisfaction is closely linked to emotional valence: touchpoints with high satisfaction tend to show high user pleasure, arousal, and dominance, and vice versa. Designers might therefore start from user emotions and optimize the touchpoints with lower emotional valence, such as T4 Ad Recommendation, T8 Extended Reading, and T17 Homework Correction, to increase user pleasure.

- Learning resource touchpoints are generally of high importance. Among them, T5 Course Videos and T6 Courseware Materials are level-5 important touchpoints, and T7 Bibliography and T9 After-school Materials are level-4 important touchpoints, indicating that course quality and the class experience are the primary factors affecting the service quality of online education platforms and the core touchpoints that draw users into the system. In terms of emotional valence and satisfaction, only T5 Course Videos and T9 After-school Materials score high among the current learning resource touchpoints, while the remaining three (T6, T7, and T8) all fall below the median. Subsequent iterations of the service system should therefore strengthen the learning-material touchpoints to meet user needs. For instance, the platform could provide courseware materials so that students can review after class, consolidate knowledge, and supplement their notes; provide electronic versions of course-related bibliographies that students can conveniently download and share; and publish in-class practice materials on the course platform to support after-class study.

- Visual guidance touchpoints are the least important. Among them, T1 Interface Design and T2 Navigation Settings have high emotional valence and user satisfaction, indicating that most users are satisfied with the current interface design and visual navigation, which can be retained. By contrast, although user pleasure and activation for T3 Course Reminder are above average, its dominance and satisfaction are lower, suggesting that the current course reminder function gives users a strong sense of being controlled and thus lowers their satisfaction. Follow-up designs should make course reminders less compulsory and adopt a more personalized, customizable way of reminding users to complete online learning tasks.

- The divergent points in the service evaluation results deserve attention. For example, T14 Student Notes and T17 Homework Correction have low user satisfaction and emotional pleasure but above-average emotional activation. This suggests that student notes and homework feedback readily stimulate users' learning initiative and activity, but the current system implements both functions poorly and the user experience is subpar, so they should be optimized in subsequent designs. Moreover, the low dominance of T17 Homework Correction indicates that users find it difficult to respond to homework feedback during learning and have little control over this function; corresponding communication channels could be added in platform iterations to meet user needs in a timely and effective manner. T18 Online Q&A gives users high emotional pleasure, activation, and dominance, but its satisfaction is low (2.16 on the 1–5 scale). This shows that the teacher question-answering modules of current online education platforms are not well constructed and mostly operate in a one-way message-reply mode, so users cannot resolve the doubts and difficulties they encounter in course learning in a timely and effective manner, which leaves them dissatisfied. In terms of importance, T17 ≈ T18 > T14. Subsequent design should therefore address online Q&A and homework feedback first, while student notes could be introduced as a new function to differentiate the platform.
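The findings above rest on descriptive comparisons of the tabulated results. As a hedged sketch (not the authors' procedure, which the text does not spell out), the Python below reproduces four of them from the integrated results table: a Pearson correlation between each touchpoint's mean PAD score and its satisfaction, a median split on pleasure and satisfaction for the learning-resource touchpoints, guideline-level importance obtained by summing touchpoint weights, and a check for the divergence patterns described for T14, T17, and T18. Treating pleasure as the "emotional valence" measure, using column means as the "average" threshold, and the helper names are all assumptions.

```python
from math import sqrt
from statistics import mean, median

# Per-touchpoint results from the integrated results table:
# (pleasure, arousal, dominance, satisfaction, AHP importance).
RESULTS = {
    "T1": (6.14, 5.00, 7.00, 3.24, 0.0130), "T2": (5.05, 5.26, 6.57, 3.19, 0.0243),
    "T3": (5.29, 5.57, 2.81, 2.52, 0.0203), "T4": (2.05, 3.00, 2.10, 1.76, 0.0031),
    "T5": (7.24, 6.86, 7.05, 3.43, 0.2966), "T6": (3.52, 4.62, 2.95, 1.86, 0.1358),
    "T7": (3.81, 3.52, 6.71, 1.92, 0.0524), "T8": (3.10, 2.82, 5.76, 1.83, 0.0225),
    "T9": (5.67, 6.70, 7.00, 3.65, 0.0493), "T10": (4.54, 6.83, 3.51, 3.54, 0.0316),
    "T11": (6.23, 6.01, 4.67, 3.38, 0.0709), "T12": (6.87, 5.45, 2.10, 2.45, 0.0190),
    "T13": (3.00, 2.99, 2.05, 2.14, 0.0334), "T14": (3.00, 5.67, 7.43, 1.98, 0.0091),
    "T15": (3.42, 2.65, 4.51, 2.11, 0.0243), "T16": (7.23, 6.87, 7.50, 4.10, 0.0212),
    "T17": (2.43, 6.71, 3.05, 1.57, 0.0768), "T18": (7.85, 6.78, 7.54, 2.16, 0.0890),
    "T19": (5.03, 3.56, 2.13, 2.65, 0.0084),
}
# Touchpoint numbers under each guideline, from the evaluation index table.
GUIDELINES = {
    "Visual Guidance": range(1, 5),
    "Learning Resources": range(5, 10),
    "After-school Assessment": range(10, 15),
    "Interactive Feedback": range(15, 20),
}


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def pad_satisfaction_correlation(results):
    """Correlate each touchpoint's mean PAD score with its satisfaction."""
    pad_means = [mean(row[:3]) for row in results.values()]
    satisfaction = [row[3] for row in results.values()]
    return pearson(pad_means, satisfaction)


def median_split(results, codes):
    """Label touchpoints by pleasure and satisfaction relative to the medians."""
    p_med = median(row[0] for row in results.values())
    s_med = median(row[3] for row in results.values())
    labels = {}
    for code in codes:
        p, s = results[code][0], results[code][3]
        if p > p_med and s > s_med:
            labels[code] = "above"
        elif p < p_med and s < s_med:
            labels[code] = "below"
        else:
            labels[code] = "mixed"
    return labels


def guideline_weights(results):
    """Sum the global AHP weights of the touchpoints under each guideline."""
    return {name: sum(results[f"T{i}"][4] for i in numbers)
            for name, numbers in GUIDELINES.items()}


def divergent_points(results):
    """Flag the two divergence patterns, using column means as thresholds."""
    p_bar, a_bar, d_bar, s_bar = (mean(col) for col in list(zip(*results.values()))[:4])
    # High arousal, but low pleasure and low satisfaction.
    engaging = [c for c, (p, a, d, s, _) in results.items()
                if a > a_bar and p < p_bar and s < s_bar]
    # All three PAD dimensions high, yet low satisfaction.
    emotive = [c for c, (p, a, d, s, _) in results.items()
               if p > p_bar and a > a_bar and d > d_bar and s < s_bar]
    return engaging, emotive


print(round(pad_satisfaction_correlation(RESULTS), 2))
print(median_split(RESULTS, ["T5", "T6", "T7", "T8", "T9"]))
print(guideline_weights(RESULTS))
print(divergent_points(RESULTS))
```

On these data the correlation is positive (about 0.67), with T18 as the most visible outlier; the median split labels T5 and T9 "above" and T6 to T8 "below"; Visual Guidance has the smallest summed weight (about 0.061); and the divergence check flags T14 and T17 in the first pattern and T18 in the second.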

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Butnaru, G.I.; Nita, V.; Anichiti, A.; Brinza, G. The Effectiveness of Online Education during COVID-19 Pandemic-A Comparative Analysis between the Perceptions of Academic Students and High School Students from Romania. Sustainability 2021, 13, 5311.

- Fernandez-Batanero, J.M.; Montenegro-Rueda, M.; Fernandez-Cerero, J.; Tadeu, P. Online education in higher education: Emerging solutions in crisis times. Heliyon 2022, 8, e10139.

- Kim, G.-C.; Gurvitch, R. Online Education Research Adopting the Community of Inquiry Framework: A Systematic Review. Quest 2020, 72, 395–409.

- Sun, A.; Chen, X. Online education and its effective practice: A research review. J. Inf. Technol. Educ. 2016, 15, 157–190.

- Park, S.-Y.; Pan, Y.-H. A preliminary study on the evaluation method of online design education. Des. Manuf. 2022, 16, 7–12.

- Wong, M.S.; Jackson, S. User Satisfaction Evaluation of Malaysian e-Government Education Services. In Proceedings of the 2017 International Conference on Engineering, Technology and Innovation (ICE/ITMC), Madeira, Portugal, 27–29 June 2017; IEEE: New York, NY, USA, 2017; pp. 531–537.

- Granić, A.; Ćukušić, M. Usability testing and expert inspections complemented by educational evaluation: A case study of an e-learning platform. J. Educ. Technol. Soc. 2011, 14, 107–123.

- Sangrà, A.; Vlachopoulos, D.; Cabrera, N. Building an inclusive definition of e-learning: An approach to the conceptual framework. Int. Rev. Res. Open Distrib. Learn. 2012, 13, 145–159.

- Bozkurt, A.; Bozkaya, M. Evaluation criteria for interactive e-books for open and distance learning. Int. Rev. Res. Open Distrib. Learn. 2015, 16, 58–82.

- Nian, L.-H.; Wei, J.; Yin, C.-B. The promotion role of mobile online education platform in students’ self-learning. Int. J. Contin. Eng. Educ. Life Long Learn. 2019, 29, 56–71.

- Hu, H.; Wang, X.; Zhai, Y.; Hu, J. Evaluation of factors affecting student participation in peer-assisted English learning based on online education platform. Int. J. Emerg. Technol. Learn. 2021, 16, 72–87.

- Amara, K.; Zenati, N.; Djekoune, O.; Anane, M.; Aissaoui, I.K.; Bedla, H.R. i-DERASSA: E-learning Platform based on Augmented and Virtual Reality interaction for Education and Training. In Proceedings of the 2021 International Conference on Artificial Intelligence for Cyber Security Systems and Privacy (AI-CSP), El Oued, Algeria, 20–21 November 2021; IEEE: New York, NY, USA, 2021; pp. 1–9.

- Liu, Z.; Han, Z. Exploring Trends of Potential User Experience of Online Classroom on Virtual Platform for Higher Education during COVID-19 Epidemic: A Case in China. In Proceedings of the IEEE International Conference on Teaching, Assessment, and Learning for Engineering (IEEE TALE), Takamatsu, Japan, 8–11 December 2020; IEEE Shikoku Sect. Electr Network: New York, NY, USA, 2020; pp. 742–747.

- Li, M.F.; Wang, T.; Lu, W.; Wang, M.K. Optimizing the Systematic Characteristics of Online Learning Systems to Enhance the Continuance Intention of Chinese College Students. Sustainability 2022, 14, 11774.

- Han, J.D.; Wang, Y. User Experience Design of Online Education Based on Flow Theory. In Proceedings of the 12th Int Conf on Appl Human Factors and Ergon (AHFE)/Virtual Conf on Usabil and User Experience, Human Factors and Wearable Technologies, Human Factors in Virtual Environm and Game Design, and Human Factors and Assist Technol, Electr Network, 25–29 July 2021; Electr Network: New York, NY, USA, 2021; pp. 219–227.

- Yan, H.Q.; Zhang, H.F.; Su, S.D.; Lam, J.F.I.; Wei, X.Y. Exploring the Online Gamified Learning Intentions of College Students: A Technology-Learning Behavior Acceptance Model. Appl. Sci. 2022, 12, 12966.

- Zhang, C.; Xiao, C. Research on Course Experience Optimization of Online Education Based on Service Encounter. Design, User Experience, and Usability: UX Research and Design. In Proceedings of the 10th International Conference, DUXU 2021, Held as Part of the 23rd HCI International Conference, HCII 2021, Virtual Event, 24–29 July 2021; Proceedings, Part I. Springer: Berlin/Heidelberg, Germany, 2021; pp. 652–665.

- Rasmussen, A.S.; Berntsen, D. Emotional valence and the functions. Mem. Cogn. 2009, 37, 477–492.

- Meiselman, H.L. A review of the current state of emotion research in product development. Food Res. Int. 2015, 76, 192–199.

- Chamberlain, L.; Broderick, A.J. The application of physiological observation methods to emotion research. Qual. Mark. Res. Int. J. 2007, 10, 199–216.

- Tian, M.; Yanjun, X. The study of emotion in tourist experience: Current research progress. Tour. Hosp. Prospect. 2019, 3, 82.

- Zhao, Y.; Xie, D.; Zhou, R.; Wang, N.; Yang, B. Evaluating Users’ Emotional Experience in Mobile Libraries: An Emotional Model Based on the Pleasure-Arousal-Dominance Emotion Model and the Five Factor Model. Front. Psychol. 2022, 13, 942198.

- Zhang, H.; Yin, J.; Zhang, X. The study of a five-dimensional emotional model for facial emotion recognition. Mob. Inf. Syst. 2020, 2020, 8860608.

- Chen, T.; Peng, L.; Jing, B.; Wu, C.; Yang, J.; Cong, G. The impact of the COVID-19 pandemic on user experience with online education platforms in China. Sustainability 2020, 12, 7329.

- Clatworthy, S. Service Innovation Through Touch-points: Development of an Innovation Toolkit for the First Stages of New Service Development. Int. J. Des. 2011, 5, 15–28.

| Target | Guideline | Touchpoint | Index | Indicator Interpretation | Number |
|---|---|---|---|---|---|
| Evaluation elements of online education platform services | Visual Guidance | Color Style; Icon Design; Transition Animation | Interface Design | Interface color style, icon design, transition animation, and other visual factors | T1 |
| | Visual Guidance | Navigation Settings; Functional Area | Navigation Settings | Navigation bar location and content classification | T2 |
| | Visual Guidance | Course Appointment; Class Reminder | Course Reminder | Schedule design, class reminder, course appointment, etc. | T3 |
| | Visual Guidance | Ad Recommendation | Ad Recommendation | Platform course recommendation and carousel advertisement | T4 |
| | Learning Resources | Course Room; Interface Control | Course Videos | Course quality and type | T5 |
| | Learning Resources | Courseware | Courseware | Materials such as courseware text or slides | T6 |
| | Learning Resources | Bibliography | Bibliography | Course-related books | T7 |
| | Learning Resources | Extended Reading | Extended Reading | Course-related materials or similar courses | T8 |
| | Learning Resources | After-school Materials | After-school Materials | Exercises or cases involved in the course | T9 |
| | After-school Assessment | Unit Test | Unit Test | Test taken after each unit | T10 |
| | After-school Assessment | Homework | Homework | Single-session post-test or practice questions | T11 |
| | After-school Assessment | Final Examination | Final Examination | Post-course assessment or final assignment | T12 |
| | After-school Assessment | Answer Collection | Answer Collection | Text answers to after-school exercises | T13 |
| | After-school Assessment | Student Notes | Student Notes | In-class or after-class key notes | T14 |
| | Interactive Feedback | Course Forum; Post Publishing; Interactive Discussion | Course Interaction | Course-related forums or online social groups | T15 |
| | Interactive Feedback | Share Lessons | Share Lessons | Course link, poster, or trial sharing | T16 |
| | Interactive Feedback | Homework Correction | Homework Correction | Homework accuracy and completion analysis | T17 |
| | Interactive Feedback | Q&A; Questions for Help; Content Query | Online Q&A | Teachers answer course-related questions online | T18 |
| | Interactive Feedback | Questionnaire | Questionnaire | Course experience and satisfaction survey | T19 |

| Evaluation System | Emotional Valence Dimension | Perceptual Cognitive Dimension: Satisfaction | Perceptual Cognitive Dimension: Importance |
|---|---|---|---|
| Experimental Materials | Tencent Classroom: New Concept English (Volume 1); Design Introductory Classroom; Principles of Aesthetics | Tencent Classroom: New Concept English (Volume 1); Design Introductory Classroom; Principles of Aesthetics | Tencent Classroom: New Concept English (Volume 1); Design Introductory Classroom; Principles of Aesthetics |
| Sample Selection | Undergraduate students majoring in design | Undergraduate students majoring in design | Undergraduate students majoring in design |
| Evaluation Method | PAD emotion theory, SAM scale | Satisfaction questionnaire | Analytic hierarchy matrix |
| Evaluation Content | Pleasure, Arousal, Dominance | Satisfaction | Importance |
| Data Range | 1–9 | 1–5 | 0–1 |

| Number | Index | Pleasure | Arousal | Dominance |
|---|---|---|---|---|
| T1 | Interface Design | 6.14 | 5.00 | 7.00 |
| T2 | Navigation Settings | 5.05 | 5.26 | 6.57 |
| T3 | Course Reminder | 5.29 | 5.57 | 2.81 |
| T4 | Ad Recommendation | 2.05 | 3.00 | 2.10 |
| T5 | Course Videos | 7.24 | 6.86 | 7.05 |
| T6 | Courseware | 3.52 | 4.62 | 2.95 |
| T7 | Bibliography | 3.81 | 3.52 | 6.71 |
| T8 | Extended Reading | 3.10 | 2.82 | 5.76 |
| T9 | After-school Materials | 5.67 | 6.70 | 7.00 |
| T10 | Unit Test | 4.54 | 6.83 | 3.51 |
| T11 | Homework | 6.23 | 6.01 | 4.67 |
| T12 | Final Examination | 6.87 | 5.45 | 2.10 |
| T13 | Answer Collection | 3.00 | 2.99 | 2.05 |
| T14 | Student Notes | 3.00 | 5.67 | 7.43 |
| T15 | Course Interaction | 3.42 | 2.65 | 4.51 |
| T16 | Share Lessons | 7.23 | 6.87 | 7.50 |
| T17 | Homework Correction | 2.43 | 6.71 | 3.05 |
| T18 | Online Q&A | 7.85 | 6.78 | 7.54 |
| T19 | Questionnaire | 5.03 | 3.56 | 2.13 |

| No. | Satisfaction | Importance | No. | Satisfaction | Importance | No. | Satisfaction | Importance |
|---|---|---|---|---|---|---|---|---|
| T1 | 3.24 | 0.013 | T8 | 1.83 | 0.0225 | T15 | 2.11 | 0.0243 |
| T2 | 3.19 | 0.0243 | T9 | 3.65 | 0.0493 | T16 | 4.10 | 0.0212 |
| T3 | 2.52 | 0.0203 | T10 | 3.54 | 0.0316 | T17 | 1.57 | 0.0768 |
| T4 | 1.76 | 0.0031 | T11 | 3.38 | 0.0709 | T18 | 2.16 | 0.089 |
| T5 | 3.43 | 0.2966 | T12 | 2.45 | 0.019 | T19 | 2.65 | 0.0084 |
| T6 | 1.86 | 0.1358 | T13 | 2.14 | 0.0334 | | | |
| T7 | 1.92 | 0.0524 | T14 | 1.98 | 0.0091 | | | |

| Index | Pleasure (1–9) | Arousal (1–9) | Dominance (1–9) | Satisfaction (1–5) | Importance (0–1) | Importance Ranking |
|---|---|---|---|---|---|---|
| T1 Interface Design | 6.14 | 5.00 | 7.00 | 3.24 | 0.013 | 15 |
| T2 Navigation Settings | 5.05 | 5.26 | 6.57 | 3.19 | 0.0243 | 10 |
| T3 Course Reminder | 5.29 | 5.57 | 2.81 | 2.52 | 0.0203 | 13 |
| T4 Ad Recommendation | 2.05 | 3.00 | 2.10 | 1.76 | 0.0031 | 18 |
| T5 Course Videos | 7.24 | 6.86 | 7.05 | 3.43 | 0.2966 | 1 |
| T6 Courseware | 3.52 | 4.62 | 2.95 | 1.86 | 0.1358 | 2 |
| T7 Bibliography | 3.81 | 3.52 | 6.71 | 1.92 | 0.0524 | 6 |
| T8 Extended Reading | 3.10 | 2.82 | 5.76 | 1.83 | 0.0225 | 11 |
| T9 After-school Materials | 5.67 | 6.70 | 7.00 | 3.65 | 0.0493 | 7 |
| T10 Unit Test | 4.54 | 6.83 | 3.51 | 3.54 | 0.0316 | 9 |
| T11 Homework | 6.23 | 6.01 | 4.67 | 3.38 | 0.0709 | 5 |
| T12 Final Examination | 6.87 | 5.45 | 2.10 | 2.45 | 0.019 | 14 |
| T13 Answer Collection | 3.00 | 2.99 | 2.05 | 2.14 | 0.0334 | 8 |
| T14 Student Notes | 3.00 | 5.67 | 7.43 | 1.98 | 0.0091 | 16 |
| T15 Course Interaction | 3.42 | 2.65 | 4.51 | 2.11 | 0.0243 | 10 |
| T16 Share Lessons | 7.23 | 6.87 | 7.50 | 4.10 | 0.0212 | 12 |
| T17 Homework Correction | 2.43 | 6.71 | 3.05 | 1.57 | 0.0768 | 4 |
| T18 Online Q&A | 7.85 | 6.78 | 7.54 | 2.16 | 0.089 | 3 |
| T19 Questionnaire | 5.03 | 3.56 | 2.13 | 2.65 | 0.0084 | 17 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


He, X.; Song, N. Emotional Value in Online Education: A Framework for Service Touchpoint Assessment. Sustainability 2023, 15, 4772. https://doi.org/10.3390/su15064772
