Research on the Multiobjective and Efficient Ore-Blending Scheduling of Open-Pit Mines Based on Multiagent Deep Reinforcement Learning

Abstract

1. Introduction

1.1. Background and Motivation

1.2. Research Status

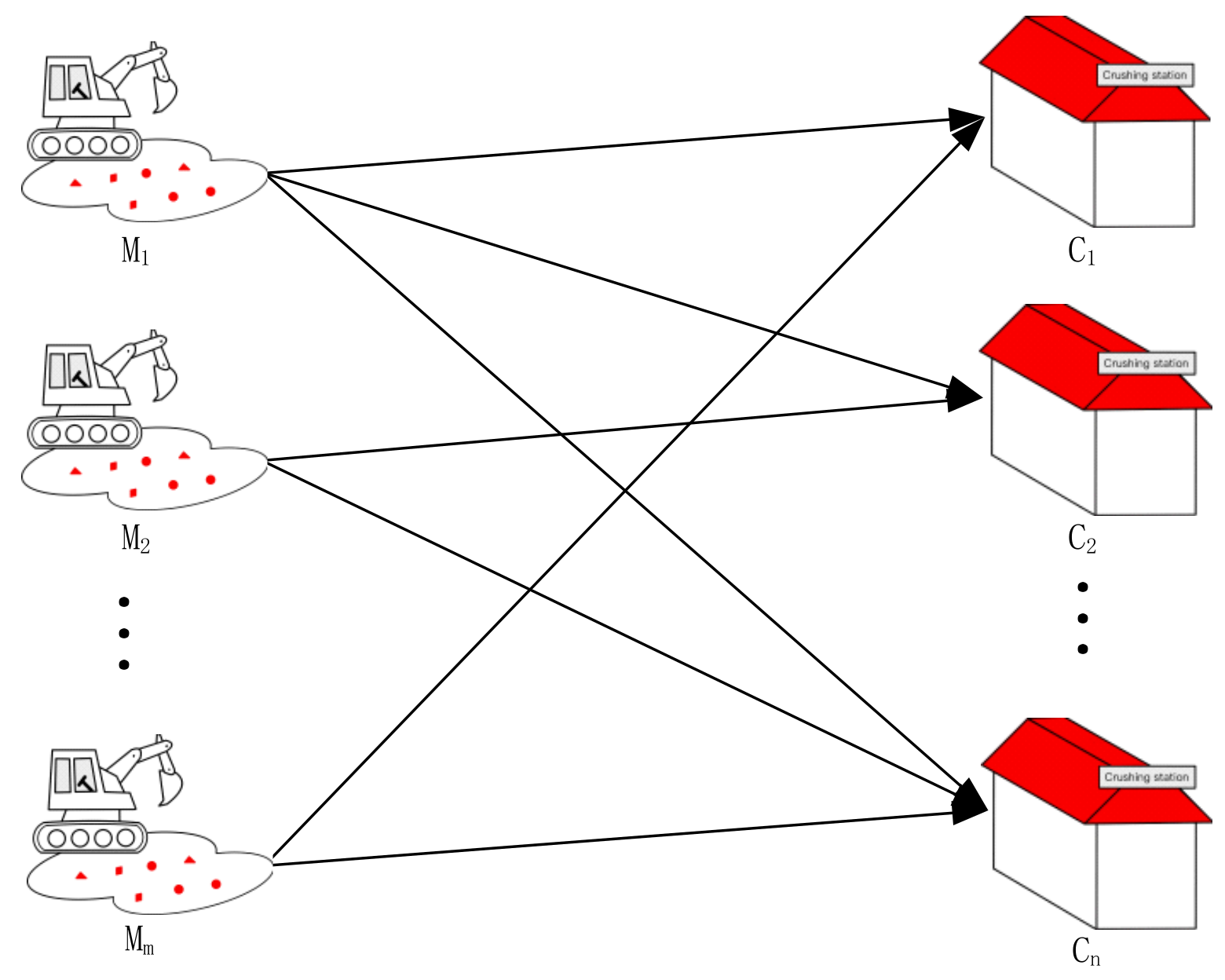

2. Definition and Modeling of the Open-Pit Polymetallic Multiobjective Ore-Blending Problem

2.1. Problem Definition

2.2. Problem Modeling

2.2.1. Objective Function

2.2.2. Constraints

3. Multimetal Multiobjective Ore Allocation Algorithm Design for Open-Pit Mines Based on Multiagent Deep Reinforcement Learning

3.1. Establishment of a Multimetal and Multiobjective Ore-Blending Model for Open-Pit Mines Based on Multiagent Deep Reinforcement Learning

3.2. Multiagent Deep Reinforcement Learning Algorithm for Multimetal Multiobjective Ore Allocation in Open-Pit Mines

| Algorithm 1: Multiobjective efficient ore-blending scheduling algorithm for open-pit mines based on multiagent deep reinforcement learning |
|---|
| Initialize MaxEpisode, MaxTime, MaxAgent, and other parameters |
| for i = 0 to MaxEpisode |
| Initialize the open-pit multimetal and multiobjective ore-blending environment |
| for j = 0 to MaxTime |
| Based on the environment input, an ore-blending scheduling instruction is selected for each agent |
| Each agent executes its instruction, and the environment returns the reward and its new ore-blending state |
| The experience is stored in the experience pool |
| for k = 0 to MaxAgent |
| A mini-batch of samples is randomly drawn from the experience pool |
| The Q value of the Critic network is calculated according to Equation (14) |
| The Critic network parameters are updated by minimizing the loss in Equation (15) |
| The Actor network parameters are updated by maximizing the objective in Equation (16) |
| The Actor target network is soft-updated according to Equation (17) |
| The Critic target network is soft-updated according to Equation (17) |
| end for |
| end for |
| end for |
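Algorithm 1 relies on two generic building blocks of DDPG-style training: an experience pool from which mini-batches are drawn at random, and soft updates of the target networks. The Python sketch below shows both in plain standard-library code. It assumes that Equation (17) takes the standard DDPG/MADDPG soft-update form θ' ← τθ + (1 − τ)θ'; the class and function names, the transition layout, and the value of τ are hypothetical, and network parameters are stood in for by flat lists of floats rather than real network weights.

```python
import random
from collections import deque


class ExperiencePool:
    """Bounded experience pool (replay buffer) shared by all agents.

    Each entry is one joint transition (observations, actions, rewards,
    next observations) for every agent; this layout is an assumption.
    """

    def __init__(self, capacity, seed=None):
        self.buffer = deque(maxlen=capacity)  # oldest transitions drop out first
        self.rng = random.Random(seed)

    def store(self, obs, actions, rewards, next_obs):
        self.buffer.append((obs, actions, rewards, next_obs))

    def sample(self, batch_size):
        # Uniform random mini-batch, drawn without replacement
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def soft_update(target_params, online_params, tau=0.01):
    """Assumed form of Eq. (17): theta_t <- tau * theta + (1 - tau) * theta_t.

    Parameters are flat lists of floats standing in for network weights.
    """
    return [tau * w + (1.0 - tau) * w_t
            for w_t, w in zip(target_params, online_params)]


if __name__ == "__main__":
    pool = ExperiencePool(capacity=1000, seed=0)
    for t in range(64):  # toy transitions for two agents
        pool.store(obs=[t, t], actions=[0.5, 0.5],
                   rewards=[-1.0, -1.0], next_obs=[t + 1, t + 1])
    batch = pool.sample(32)
    actor_target = soft_update([0.0, 0.0], [1.0, 2.0], tau=0.01)
    print(len(batch), actor_target)
```

Sampling uniformly from a shared pool weakens the correlation between consecutive transitions, and a small τ makes each target network track its learned network slowly, which keeps the Critic's training targets stable.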

4. Engineering Application and Result Analysis

4.1. Experimental Data and Parameters

4.2. Experimental Steps

4.3. Result Analysis

4.3.1. Analysis of Ore Blending and Scheduling Results

4.3.2. Comparison of Optimization Methods

5. Conclusions

- (1) To address the multimetal, multiobjective ore-blending optimization problem of open-pit mines, we considered influencing factors that traditional ore-blending models omit. Taking ore grade and lithology indices as the objectives, and oxidation rate, ore quantity, grade deviation, and lithology deviation as constraints, we established a multimetal, multiobjective ore-blending optimization model for open-pit mines. The model reflects actual mine production and operation more closely and improves the comprehensive utilization of open-pit mineral resources.

- (2) A multiagent deep reinforcement learning algorithm based on the actor-critic framework was used to optimize the multimetal, multiobjective ore-blending process in an open-pit mine. The ore-allocation problem was formulated as a partially observable Markov decision process in which the environment scores each agent's action at every time step. Each agent optimizes its policy according to these scores, and the policy sequence of each agent is inferred step by step. Because training is performed offline and decisions are made online, the method can obtain the current ore-blending strategy in real time and feed it directly into production operations.

- (3) The ore-blending optimization model was tested through simulation experiments, which move the ore-blending process beyond the traditional static approach. Changes in ore grade, lithology, oxidation rate, and ore quantity can be observed in real time at each time step of the optimization, which makes the process dynamic and helps researchers understand the logic behind it. The experimental results show that the algorithm significantly reduces waiting time while maintaining calculation accuracy, which gives it strong practical value.
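Conclusion (2) states that the environment scores each agent's action at every time step, and conclusion (1) names grade and lithology as objectives with oxidation rate among the constraints. The paper's own reward is not reproduced in this section, so the Python function below is only a hypothetical illustration of how such a multiobjective score could be formed: grade and lithology deviations are combined as a weighted sum of absolute values, and an oxidation-rate tolerance is enforced with a fixed penalty. The function name, weights, tolerance, and penalty are all assumptions.

```python
def step_reward(grade_dev, litho_dev, oxid_dev,
                w_grade=1.0, w_litho=1.0, oxid_tol=1.0, penalty=10.0):
    """Hypothetical per-step reward for one receiving site.

    grade_dev: target-minus-blended grade deviations, one per metal.
    litho_dev: target-minus-blended lithology-ratio deviations, one per class.
    oxid_dev:  target-minus-blended oxidation-rate deviation (percentage points).
    The two objectives enter as weighted sums of absolute deviations; the
    oxidation-rate constraint adds a fixed penalty when |oxid_dev| > oxid_tol.
    """
    cost = w_grade * sum(abs(d) for d in grade_dev)
    cost += w_litho * sum(abs(d) for d in litho_dev)
    if abs(oxid_dev) > oxid_tol:
        cost += penalty  # constraint violated
    return -cost  # closer to 0 is better


if __name__ == "__main__":
    # Illustrative deviation values for three metals and three lithologies
    print(step_reward([-0.001, 0.002, 0.0], [-0.02, 0.02, 0.0], 0.3))
```

Negating a cost keeps the agent's goal as reward maximization; a soft penalty rather than a hard mask lets the agent still learn from infeasible actions, at the price of tuning the penalty size.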

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Symbol | Explanation |
|---|---|
| | Number of ore-producing sites |
| | Number of ore-receiving sites |
| | Types of metals in the ore |
| | Lithology of the ore |
| | The i-th ore-producing site |
| | The j-th ore-receiving site |
| | Amount of ore transported from the i-th ore-producing site to the j-th ore-receiving site |
| | Grade of metal k in the ore at the i-th ore-producing site |
| | Target grade of metal k at the j-th ore-receiving site |
| | Oxidation rate of the ore at the i-th ore-producing site |
| | Target lithology ratio at the j-th ore-receiving site |
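Because the symbols in the table above did not survive, the formulas below use assumed notation and are a sketch rather than the paper's own equations: $x_{ij}$ is the amount of ore transported from producing site $i$ to receiving site $j$, $g_{ik}$ the grade of metal $k$ at producing site $i$, $G_{jk}$ the target grade at receiving site $j$, $o_i$ and $O_j$ the oxidation rate at producing site $i$ and the target oxidation rate at receiving site $j$, $R_{jl}$ the target ratio of lithology $l$ at site $j$, $S_l$ the set of producing sites whose ore has lithology $l$, and $m$ the number of producing sites. Blended values at a receiving site are quantity-weighted averages, and each deviation is taken as the target minus the blended value, which is consistent with the grade and oxidation-rate deviations reported for the receiving sites.

$$
\begin{aligned}
\bar g_{jk} &= \frac{\sum_{i=1}^{m} x_{ij}\, g_{ik}}{\sum_{i=1}^{m} x_{ij}}, & \Delta g_{jk} &= G_{jk} - \bar g_{jk},\\[4pt]
\bar o_{j} &= \frac{\sum_{i=1}^{m} x_{ij}\, o_{i}}{\sum_{i=1}^{m} x_{ij}}, & \Delta o_{j} &= O_{j} - \bar o_{j},\\[4pt]
\bar r_{jl} &= \frac{\sum_{i \in S_l} x_{ij}}{\sum_{i=1}^{m} x_{ij}}, & \Delta r_{jl} &= R_{jl} - \bar r_{jl}.
\end{aligned}
$$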

| Name | Mo Grade/% | WO3 Grade/% | Cu Grade/% | Oxidation Rate/% | Lithology | Minimum Task/Truck | Maximum Task/Truck |
|---|---|---|---|---|---|---|---|
| M1 | 0.159 | 0.075 | 0.004 | 6.8 | skarn | 2 | 60 |
| M2 | 0.079 | 0.096 | 0.006 | 19.9 | skarn | 2 | 60 |
| M3 | 0.064 | 0.075 | 0.025 | 11.2 | diopside feldspar hornstone | 2 | 60 |
| M4 | 0.06 | 0.055 | 0.023 | 8.6 | skarn | 2 | 60 |
| M5 | 0.127 | 0.1 | 0.01 | 4 | diopside feldspar hornstone | 2 | 60 |
| M6 | 0.134 | 0.123 | 0.04 | 2.7 | skarn | 2 | 60 |
| M7 | 0.06 | 0.095 | 0.016 | 16.4 | skarn | 2 | 60 |
| M8 | 0.077 | 0.134 | 0.012 | 24.5 | diopside feldspar hornstone | 2 | 60 |
| M9 | 0.099 | 0.074 | 0.004 | 7.9 | wollastonite hornstone | 2 | 60 |

| Name | Mo Grade/% | WO3 Grade/% | Cu Grade/% | Oxidation Rate/% | Wollastonite Hornstone | Skarn | Diopside Feldspar Hornstone | Minimum Task/Truck | Maximum Task/Truck |
|---|---|---|---|---|---|---|---|---|---|
| C1 | 0.094 | 0.098 | 0.018 | 12.2 | 0 | 0.569 | 0.431 | 50 | 160 |
| C2 | 0.091 | 0.066 | 0.012 | 8 | 0.429 | 0.571 | 0 | 50 | 160 |
| C3 | 0.103 | 0.108 | 0.015 | 12.7 | 0 | 0.521 | 0.479 | 50 | 160 |

| Ore Production Site | Ore-Blending Quantity to C1/t | Ore-Blending Quantity to C2/t | Ore-Blending Quantity to C3/t |
|---|---|---|---|
| M1 | 900 | 600 | 0 |
| M2 | 950 | 0 | 550 |
| M3 | 1250 | 0 | 250 |
| M4 | 700 | 800 | 0 |
| M5 | 950 | 0 | 550 |
| M6 | 1100 | 0 | 400 |
| M7 | 1000 | 0 | 500 |
| M8 | 1050 | 0 | 450 |
| M9 | 0 | 1500 | 0 |

| Ore Receiving Site | Mo Grade/% | WO3 Grade/% | Cu Grade/% | Oxidation Rate/% | Skarn | Diopside Feldspar Hornstone | Wollastonite Hornstone |
|---|---|---|---|---|---|---|---|
| C1 | −0.001 | 0.002 | 0.000 | 0.309 | −0.020 | 0.020 | 0.000 |
| C2 | −0.010 | −0.003 | 0.003 | 0.134 | 0.088 | 0.000 | −0.088 |
| C3 | 0.011 | 0.003 | −0.001 | −0.726 | −0.016 | −0.016 | 0.000 |
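The entries in the table above can be cross-checked against the site data and the ore-blending quantities. The Python sketch below hard-codes the values from the preceding tables and computes, for each receiving site, the quantity-weighted blended grades, oxidation rate, and lithology ratios, then subtracts them from the targets. It rests on assumptions not stated explicitly in the tables: the middle grade column is WO3 (as in the algorithm comparison table), the allocation rows are sites M1 to M9 and its columns are C1 to C3 in table order, and each tabulated value is the target minus the blended value. Under these assumptions the recomputed grade and oxidation-rate deviations agree with the table to within rounding. The names `SOURCES`, `TARGETS`, `ALLOCATION`, and `deviations` are hypothetical.

```python
LITHOLOGIES = ("skarn", "diopside feldspar hornstone", "wollastonite hornstone")

# Producing sites: (Mo %, WO3 %, Cu %, oxidation rate %, lithology)
SOURCES = {
    "M1": (0.159, 0.075, 0.004, 6.8, "skarn"),
    "M2": (0.079, 0.096, 0.006, 19.9, "skarn"),
    "M3": (0.064, 0.075, 0.025, 11.2, "diopside feldspar hornstone"),
    "M4": (0.060, 0.055, 0.023, 8.6, "skarn"),
    "M5": (0.127, 0.100, 0.010, 4.0, "diopside feldspar hornstone"),
    "M6": (0.134, 0.123, 0.040, 2.7, "skarn"),
    "M7": (0.060, 0.095, 0.016, 16.4, "skarn"),
    "M8": (0.077, 0.134, 0.012, 24.5, "diopside feldspar hornstone"),
    "M9": (0.099, 0.074, 0.004, 7.9, "wollastonite hornstone"),
}

# Receiving sites: ((Mo, WO3, Cu) targets, oxidation target, lithology ratios)
TARGETS = {
    "C1": ((0.094, 0.098, 0.018), 12.2,
           {"skarn": 0.569, "diopside feldspar hornstone": 0.431,
            "wollastonite hornstone": 0.0}),
    "C2": ((0.091, 0.066, 0.012), 8.0,
           {"skarn": 0.571, "diopside feldspar hornstone": 0.0,
            "wollastonite hornstone": 0.429}),
    "C3": ((0.103, 0.108, 0.015), 12.7,
           {"skarn": 0.521, "diopside feldspar hornstone": 0.479,
            "wollastonite hornstone": 0.0}),
}

# Quantities shipped from each producing site to (C1, C2, C3)
ALLOCATION = {
    "M1": (900, 600, 0), "M2": (950, 0, 550), "M3": (1250, 0, 250),
    "M4": (700, 800, 0), "M5": (950, 0, 550), "M6": (1100, 0, 400),
    "M7": (1000, 0, 500), "M8": (1050, 0, 450), "M9": (0, 1500, 0),
}


def deviations(receiver):
    """Return (grade deviations, oxidation deviation, lithology deviations),
    each computed as target minus the quantity-weighted blended value."""
    j = list(TARGETS).index(receiver)  # column index in ALLOCATION
    qty = {m: ALLOCATION[m][j] for m in SOURCES}
    total = sum(qty.values())
    grade_t, oxid_t, litho_t = TARGETS[receiver]
    blended = [sum(q * SOURCES[m][k] for m, q in qty.items()) / total
               for k in range(3)]
    oxid = sum(q * SOURCES[m][3] for m, q in qty.items()) / total
    ratios = {lith: sum(q for m, q in qty.items() if SOURCES[m][4] == lith) / total
              for lith in LITHOLOGIES}
    return ([t - b for t, b in zip(grade_t, blended)],
            oxid_t - oxid,
            {lith: litho_t[lith] - ratios[lith] for lith in LITHOLOGIES})


if __name__ == "__main__":
    for c in TARGETS:
        g, o, r = deviations(c)
        print(c, [round(x, 3) for x in g], round(o, 3),
              {lith: round(v, 3) for lith, v in r.items()})
```

Running the module prints the recomputed deviations for each receiving site, rounded to three decimals for comparison with the table.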

| Algorithm | Mo Grade/% | WO3 Grade/% | Cu Grade/% | Skarn | Diopside Feldspar Hornstone | Wollastonite Hornstone |
|---|---|---|---|---|---|---|
| MADDPG | 0.022 | 0.01 | 0.005 | 0.11 | 0.031 | 0.079 |
| DDPG | 0.01 | 0.019 | 0.006 | 0.202 | 0.296 | 0.159 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Feng, Z.; Liu, G.; Wang, L.; Gu, Q.; Chen, L. Research on the Multiobjective and Efficient Ore-Blending Scheduling of Open-Pit Mines Based on Multiagent Deep Reinforcement Learning. Sustainability 2023, 15, 5279. https://doi.org/10.3390/su15065279


Feng, Zhidong, Ge Liu, Luofeng Wang, Qinghua Gu, and Lu Chen. 2023. "Research on the Multiobjective and Efficient Ore-Blending Scheduling of Open-Pit Mines Based on Multiagent Deep Reinforcement Learning." Sustainability 15, no. 6: 5279. https://doi.org/10.3390/su15065279