Sustainable Road Pothole Detection: A Crowdsourcing Based Multi-Sensors Fusion Approach

Abstract

1. Introduction

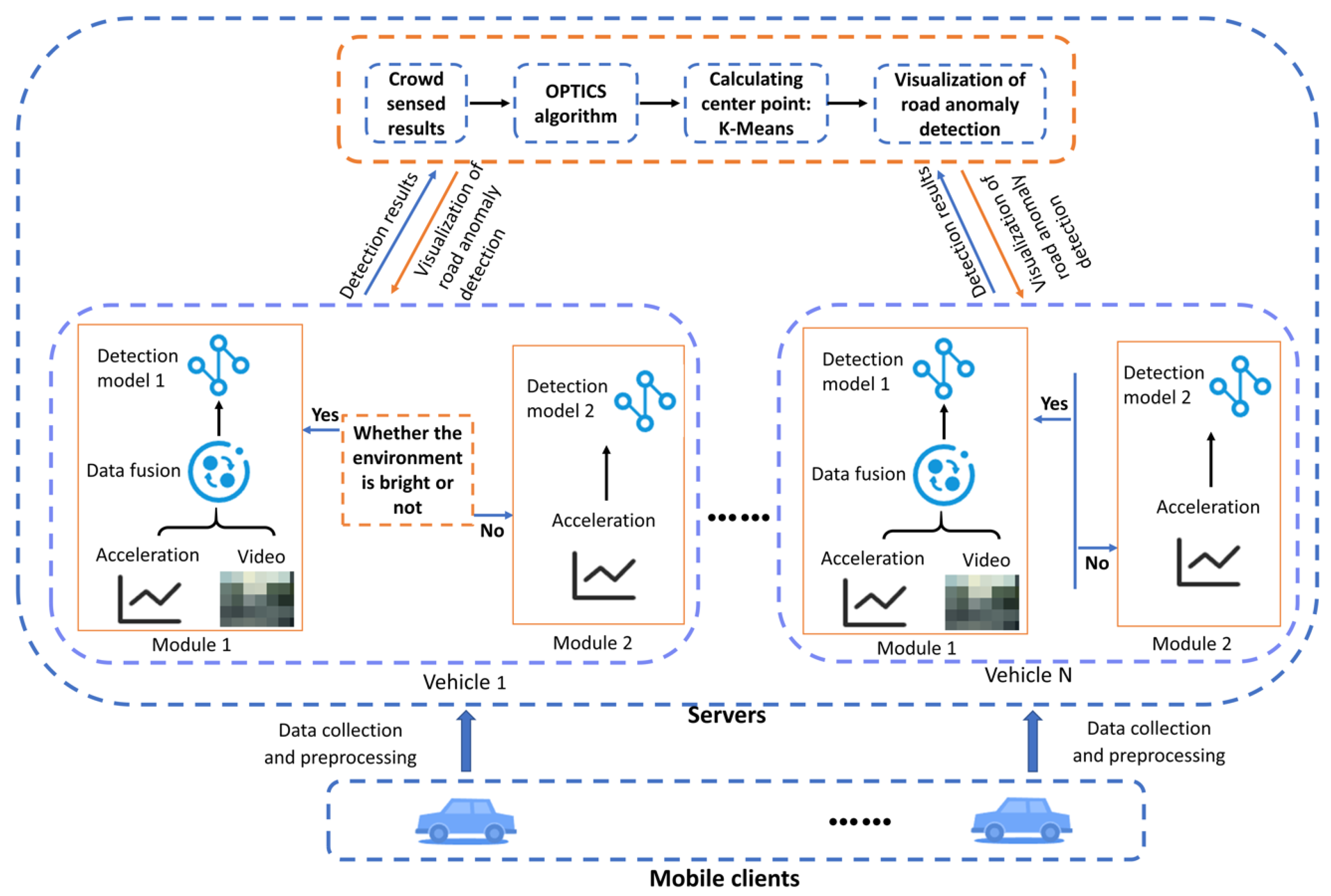

- A data-driven automatic classification model based on a long short-term memory (LSTM) network is used to detect road potholes. The LSTM network builds a nonlinear relationship between the output at the previous time step and the input at the current time step, so that information is carried along the time series with little loss.

- The ordering points to identify the clustering structure (OPTICS) method is used to improve the accuracy of road anomaly detection. Unlike the well-established k-means algorithm, OPTICS does not require the number of clusters to be preset, and it can cluster samples whose distribution has an arbitrary shape. Compared with the increasingly popular density-based spatial clustering of applications with noise (DBSCAN) algorithm, OPTICS can accurately detect each cluster among sample points of different densities. This makes it better suited to integrating crowd-sensing results and further improving detection accuracy.

- A road pothole detection system that fuses acceleration measurements with video frames is developed. The fusion encodes the video and acceleration data into real-valued vectors and projects them into a common space, which makes it easier to apply learning-based approaches.
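The projection of both modalities into a common space can be illustrated with a minimal sketch. It assumes that the video and acceleration encoders have already produced fixed-length feature vectors and that the projection into the shared space is linear. The matrices `W_video` and `W_accel`, the feature values, and all function names are hypothetical and hand-picked here; in a real system the projections would be learned. Cosine similarity in the shared space measures how well the two modalities agree, and the concatenated projections could serve as input to a downstream classifier.

```python
import math

def project(W, v):
    """Linear projection W @ v into the common space (W given as a list of rows)."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def l2_normalize(v):
    """Scale v to unit length (returned unchanged if it is the zero vector)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors of equal length."""
    return sum(x * y for x, y in zip(l2_normalize(a), l2_normalize(b)))

def fuse(video_feat, accel_feat, W_video, W_accel):
    """Project both modalities into the shared space.

    Returns (fused vector, agreement): the fused vector is the concatenation
    of the two normalized projections, and agreement is their cosine similarity.
    """
    zv = l2_normalize(project(W_video, video_feat))
    za = l2_normalize(project(W_accel, accel_feat))
    return zv + za, cosine_similarity(zv, za)

# Hypothetical 3-D video feature and 2-D acceleration feature mapped to a 2-D space.
W_video = [[1.0, 0.0, 0.0],
           [0.0, 0.0, 1.0]]
W_accel = [[2.0, 0.0],
           [0.0, 1.0]]
fused, agreement = fuse([1.0, 0.0, 2.0], [0.5, 2.0], W_video, W_accel)
print(len(fused), round(agreement, 6))  # 4 1.0
```

Here both inputs land on the same direction after projection, so the agreement is 1. Orthogonal projections would give 0.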

2. Related Work

3. Methodology

3.1. Data Collection

3.2. Data Preprocessing

3.2.1. Resampling

3.2.2. Accelerometer Reorientation

3.2.3. Data Smoothing

3.2.4. Labeling

3.2.5. Dataset Construction

3.3. Detection Module Based on Acceleration Data

3.3.1. Feature Extraction

3.3.2. Traditional Machine Learning

3.3.3. Deep Learning Approach
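The LSTM described in the introduction relates the previous output to the current input. The following minimal, untrained sketch of a single LSTM layer in plain Python shows how the standard forget, input, candidate, and output gates update the cell and hidden states over a window of acceleration samples. This text does not specify the paper's architecture. The hidden size, the random weights, the window values, and the logistic head `pothole_score` are therefore all hypothetical, and a practical model would be trained with a deep-learning framework.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

class LSTMCell:
    """Single LSTM layer: input size n_in, hidden size n_h (untrained, random weights)."""

    def __init__(self, n_in, n_h, seed=0):
        rng = random.Random(seed)
        self.n_h = n_h
        cols = n_h + n_in  # every gate sees the concatenation [h_{t-1}, x_t]
        # One weight matrix and bias vector per gate: forget (f), input (i),
        # candidate (g), and output (o).
        self.W = {gate: [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(n_h)]
                  for gate in "figo"}
        self.b = {gate: [0.0] * n_h for gate in "figo"}

    def _affine(self, gate, v):
        return [sum(w * x for w, x in zip(row, v)) + bias
                for row, bias in zip(self.W[gate], self.b[gate])]

    def step(self, x_t, h_prev, c_prev):
        v = h_prev + x_t  # list concatenation [h_{t-1}, x_t]
        f = [sigmoid(z) for z in self._affine("f", v)]    # forget gate
        i = [sigmoid(z) for z in self._affine("i", v)]    # input gate
        g = [math.tanh(z) for z in self._affine("g", v)]  # candidate cell state
        o = [sigmoid(z) for z in self._affine("o", v)]    # output gate
        # The cell state keeps what the forget gate retains plus the gated candidate.
        c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
        h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
        return h, c

    def run(self, sequence):
        """Feed a sequence of input vectors; return the final hidden state."""
        h, c = [0.0] * self.n_h, [0.0] * self.n_h
        for x_t in sequence:
            h, c = self.step(x_t, h, c)
        return h

def pothole_score(cell, w_out, b_out, window):
    """Hypothetical logistic head mapping the last hidden state to P(pothole)."""
    h = cell.run(window)
    return sigmoid(sum(w * hk for w, hk in zip(w_out, h)) + b_out)

# Hypothetical window of vertical acceleration (one value per time step).
window = [[0.02], [0.01], [0.9], [-0.7], [0.05]]
cell = LSTMCell(n_in=1, n_h=4, seed=0)
print(pothole_score(cell, w_out=[0.5] * 4, b_out=0.0, window=window))
```

The additive cell-state update `c = f * c_prev + i * g` is the mechanism that lets information persist across time steps. Because the weights are random, the printed score carries no meaning until the model is trained.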

3.4. Fusion of Acceleration Data with Video Data on the Individual Vehicle

3.4.1. Video Side

3.4.2. Acceleration Side

3.4.3. Detection

3.5. Fusion of Multi-Vehicle Detection Results

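- Core distance: Let $x \in X$. For given $\varepsilon$ and minPts, the minimum neighborhood radius that makes $x$ a core point is called the core distance of $x$. Its mathematical expression is

$$
\mathrm{cd}(x)=\begin{cases}\text{undefined}, & |N_\varepsilon(x)| < \mathit{minPts},\\ d\left(x, N_\varepsilon^{\mathit{minPts}}(x)\right), & |N_\varepsilon(x)| \ge \mathit{minPts},\end{cases}
$$

where $N_\varepsilon(x)=\{x_j \in X \mid d(x, x_j) \le \varepsilon\}$ is the $\varepsilon$-neighborhood of $x$, $d(\cdot,\cdot)$ is the distance between two samples, and $N_\varepsilon^{i}(x)$ represents the node in the set $N_\varepsilon(x)$ that is the $i$-th nearest neighbor to node $x$. For example, $N_\varepsilon^{1}(x)$ denotes the nearest node to node $x$ in the set $N_\varepsilon(x)$.

- Reachability distance: Let $x, y \in X$. For given $\varepsilon$ and minPts, the reachability distance of $y$ with respect to $x$ is defined as

$$
\mathrm{rd}(y, x)=\begin{cases}\text{undefined}, & |N_\varepsilon(x)| < \mathit{minPts},\\ \max\left(\mathrm{cd}(x), d(x, y)\right), & |N_\varepsilon(x)| \ge \mathit{minPts}.\end{cases}
$$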

Algorithm 1. The steps of OPTICS.

Input: sample set $X$, neighborhood parameters ($\varepsilon = \infty$, minPts)
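The core-distance and reachability-distance definitions, together with the ordering procedure that Algorithm 1 summarizes, can be sketched in plain Python. This is a minimal O(n²) sketch of the standard OPTICS ordering, which uses a priority queue of seed points. It is followed by the usual DBSCAN-style extraction of clusters from the reachability plot at a threshold `eps_prime`. The function names, the toy 2-D points, and the convention that a point counts as a member of its own ε-neighborhood are assumptions for illustration, not details taken from this paper. Undefined distances are represented by `math.inf`.

```python
import heapq
import math

def optics(points, min_pts, eps=math.inf):
    """Return (ordering, reachability, core_dist) for a list of coordinate tuples.

    Assumption: the eps-neighborhood of a point includes the point itself.
    """
    n = len(points)

    def d(i, j):
        return math.dist(points[i], points[j])

    def neighborhood(i):
        return [j for j in range(n) if d(i, j) <= eps]

    core_dist = [math.inf] * n
    reach = [math.inf] * n
    processed = [False] * n
    ordering = []

    for start in range(n):
        if processed[start]:
            continue
        seeds = [(math.inf, start)]  # min-heap of (reachability, index)
        while seeds:
            _, p = heapq.heappop(seeds)
            if processed[p]:
                continue  # stale entry left behind by an earlier decrease-key
            processed[p] = True
            ordering.append(p)
            nbrs = neighborhood(p)
            if len(nbrs) < min_pts:
                continue  # not a core point: core distance stays undefined
            core_dist[p] = sorted(d(p, q) for q in nbrs)[min_pts - 1]
            for q in nbrs:
                if not processed[q]:
                    r = max(core_dist[p], d(p, q))  # reachability of q w.r.t. p
                    if r < reach[q]:
                        reach[q] = r
                        heapq.heappush(seeds, (r, q))
    return ordering, reach, core_dist

def extract_clusters(ordering, reach, core_dist, eps_prime):
    """DBSCAN-style cut of the reachability plot; label -1 marks noise."""
    labels = [-1] * len(ordering)
    cluster = -1
    for p in ordering:
        if reach[p] > eps_prime:
            if core_dist[p] <= eps_prime:
                cluster += 1  # p starts a new cluster
                labels[p] = cluster
        else:
            labels[p] = cluster
    return labels

# Two toy clusters with different spacing plus one isolated point.
points = [(0, 0), (0, 1), (1, 0), (1, 1),
          (10, 10), (10, 12), (12, 10), (12, 12),
          (30, 30)]
order, reach, core = optics(points, min_pts=3)
print(extract_clusters(order, reach, core, eps_prime=3.0))  # [0, 0, 0, 0, 1, 1, 1, 1, -1]
```

The ordering itself does not depend on a fixed cluster count. Only the final cut uses a threshold, and the same reachability values can be cut at several thresholds without rerunning the ordering.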

4. Experiments

5. Results and Discussion

5.1. Comparison with State of the Art

5.2. Optimized Detection Results by Mining Crowd-Sensing Data

5.3. Comparison of Accelerations Measured at Different Phone Positions

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Scenario | Speed (km/h) | Vehicle Type |
|---|---|---|
| 1 | 30–45 | Passenger car |
| 2 | 45–65 | Passenger car |
| 3 | 30–45 | Sport utility vehicle |
| 4 | 45–65 | Sport utility vehicle |

| True Value / Predicted Value | Positive | Negative |
|---|---|---|
| Positive | True positive | False negative |
| Negative | False positive | True negative |
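The four cells of the confusion matrix determine the accuracy, precision, recall, and F1 score reported in the result tables. This minimal sketch uses the standard definitions: accuracy = (TP + TN)/(TP + FP + FN + TN), precision = TP/(TP + FP), recall = TP/(TP + FN), and F1 = the harmonic mean of precision and recall. The function names and the example counts are hypothetical.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0 when both are 0)."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0

def confusion_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall, and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall, f1_score(precision, recall)

# Hypothetical counts for a pothole (positive) vs. normal-road (negative) test set.
acc, prec, rec, f1 = confusion_metrics(tp=40, fp=10, fn=20, tn=130)
print(round(acc, 3), round(prec, 3), round(rec, 3), round(f1, 3))  # 0.85 0.8 0.667 0.727
```

F1 depends only on precision and recall, so the reported values can be cross-checked. For example, `f1_score(0.851, 0.734)` is about 0.788, which matches the SVM row of the classifier comparison table.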

| Classifier | Accuracy on Training Set | Accuracy on Testing Set | Precision | Recall | F1 Score |
|---|---|---|---|---|---|
| SVM | 0.829 | 0.818 | 0.851 | 0.734 | 0.788 |
| RF | 0.859 | 0.838 | 0.885 | 0.750 | 0.812 |
| LSTM | 0.961 | 0.957 | 0.897 | 0.813 | 0.853 |
| Joint optimization model | 0.999 | 0.965 | 0.893 | 0.821 | 0.856 |

| Position of the Smartphone | Detection Method | Accuracy on Training Set | Accuracy on Testing Set | Precision | Recall | F1 Score |
|---|---|---|---|---|---|---|
| Smartphone placed in the phone holder | Threshold-based method | 0.744 | 0.734 | 0.470 | 0.306 | 0.371 |
| | SVM | 0.810 | 0.783 | 0.830 | 0.708 | 0.764 |
| | RF | 0.882 | 0.875 | 0.875 | 0.706 | 0.782 |
| | LSTM | 0.999 | 0.821 | 0.833 | 0.797 | 0.815 |
| | Joint optimization model | 0.999 | 0.927 | 0.866 | 0.799 | 0.831 |
| Smartphone placed in the car-door compartment | Threshold-based method | 0.755 | 0.738 | 0.474 | 0.336 | 0.394 |
| | SVM | 0.812 | 0.779 | 0.824 | 0.705 | 0.760 |
| | RF | 0.999 | 0.822 | 0.837 | 0.796 | 0.816 |
| | LSTM | 0.999 | 0.875 | 0.863 | 0.815 | 0.838 |
| | Joint optimization model | 0.999 | 0.886 | 0.865 | 0.808 | 0.836 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xin, H.; Ye, Y.; Na, X.; Hu, H.; Wang, G.; Wu, C.; Hu, S. Sustainable Road Pothole Detection: A Crowdsourcing Based Multi-Sensors Fusion Approach. Sustainability 2023, 15, 6610. https://doi.org/10.3390/su15086610
