A Methodological Literature Review of Acoustic Wildlife Monitoring Using Artificial Intelligence Tools and Techniques

Abstract

1. Introduction

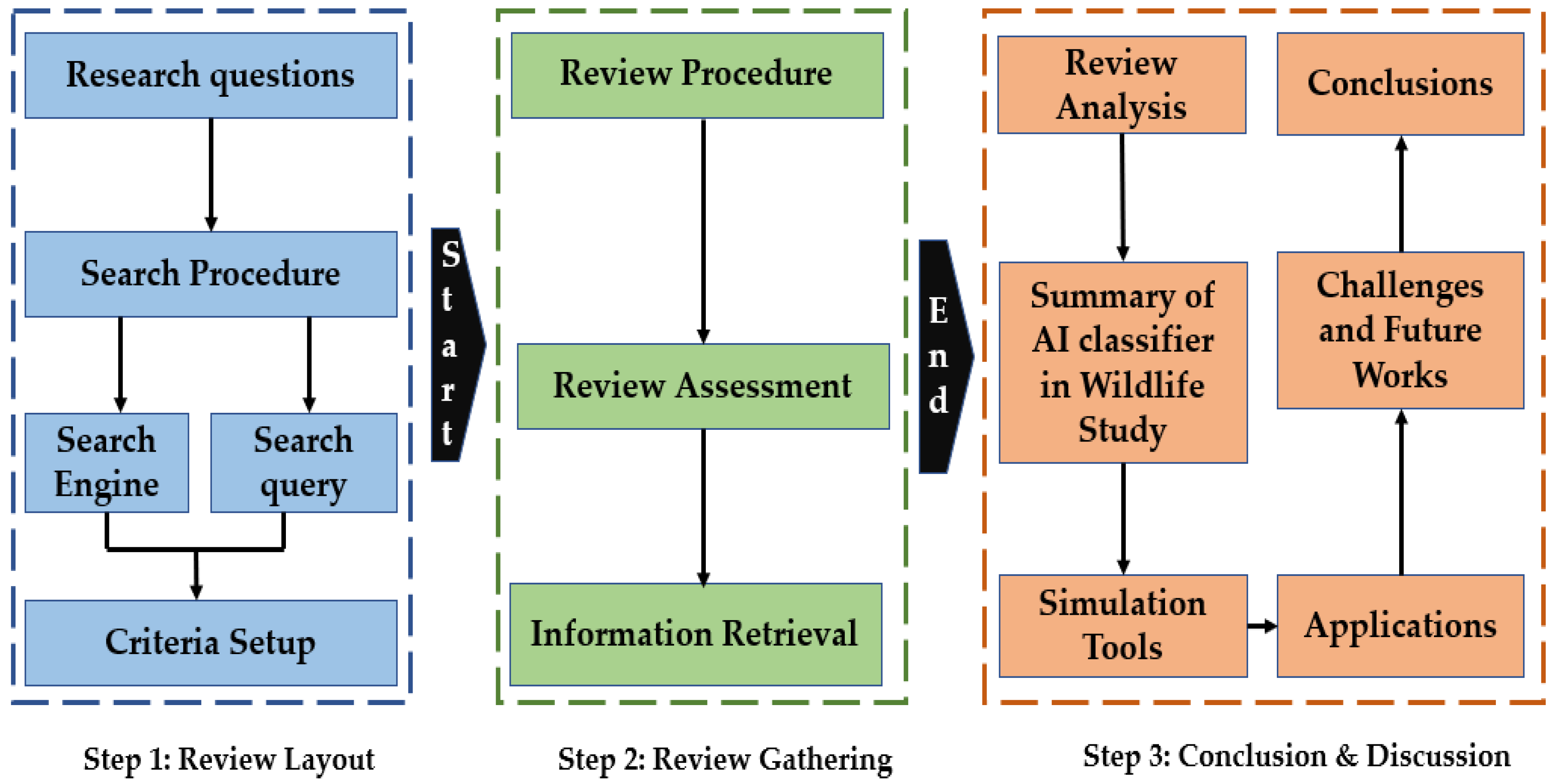

2. Materials and Methods

2.1. Review Procedure

2.2. Research Questions

2.3. Selection Criteria Benchmarking

2.4. Data Gathering Insights

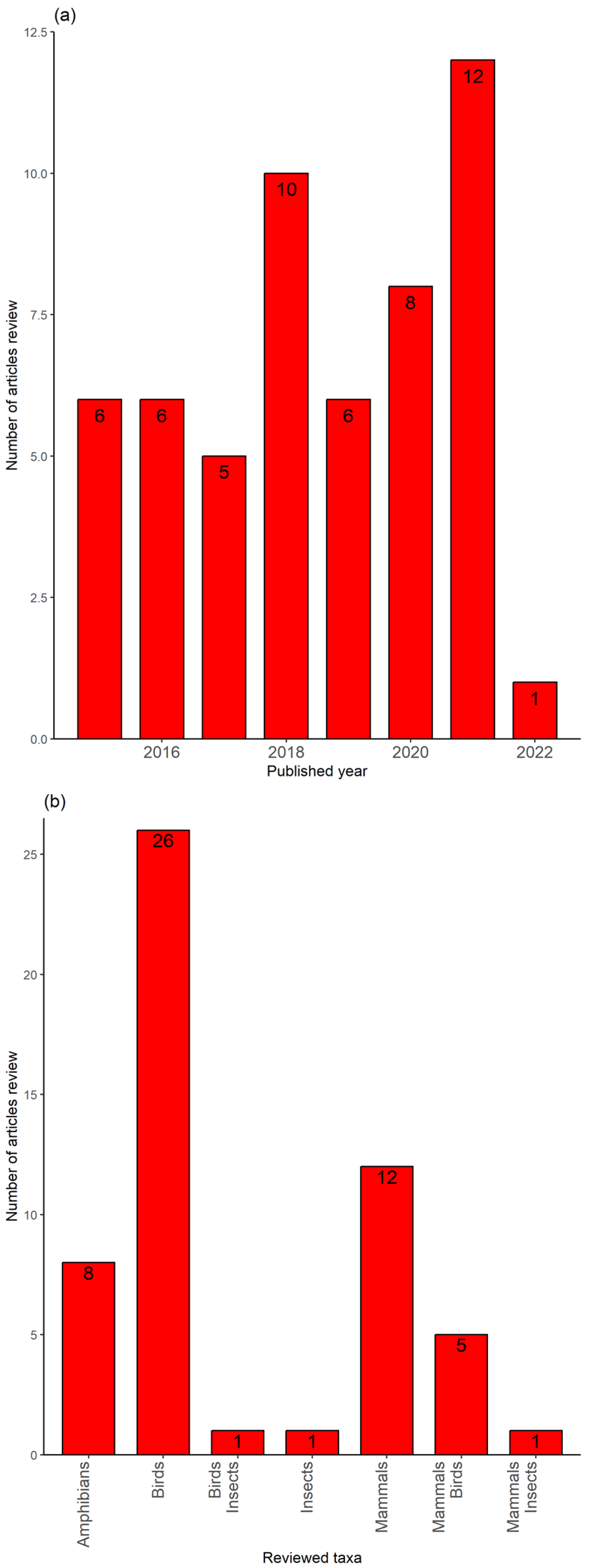

3. Results

3.1. Summary of the Study Field with In-Depth Findings

3.1.1. Summary of Current Work on AI-Assisted Wildlife Acoustic Monitoring in Marine Ecosystems

3.1.2. Summary on Bioacoustics Monitoring of Forest Environments Amidst AI Methods

| Citations | Data | Taxa | AI Features | AI Classifiers |
|---|---|---|---|---|
| Himawan et al. [30] | Koala calls extracted | Mammals | Constant-Q spectrogram | CNN + RNN or CRNN |
| Xie et al. [22] | 24 h call recordings | Amphibians | Multi-view spectrograms | CNN |
| Xie et al. [23] | 1 h recordings | Amphibians | Mel-spectrogram | CNN-LSTM |
| Xie et al. [26] | 24 h frog call recordings | Amphibians | Shannon entropy, spectral peak track, harmonic index, and oscillation | GMM |
| Bergler et al. [19] | 9000 h of vocalization recordings | Mammals | Mel-spectrogram | DNN |
| Adavanne et al. [31] | 500 frames per clip | Birds | Dominant frequency, log Mel-band energy | CNN + RNN |
| Allen et al. [20] | 187,000 h of vocalization data | Mammals | Mel-spectrogram | CNN |
| Ruff et al. [11] | Owl audio collections | Birds | Mel-spectrogram | CNN |
| Znidersic et al. [5] | 30 days of audio recordings | Birds | LDFC spectrogram | RF regression model |
| Prince et al. [32] | Bats: 128,000 audio samples; cicada: 8192 vocalization samples for training; gunshot: 32,768 audio samples for training | Mammals, insects | Mel-spectrogram, MSFB, MFCC | CNN, HMM |
| Zhang et al. [33] | Audio at 44.1 kHz | Birds | Mel-spectrogram, STFT, MFCT, CT | SFIM based on deep CNN |
| Madhavi and Pamnani [34] | Vocalization recordings of 30 bird species | Birds | MFCC | DRNN |
| Stowell et al. [35] | Data from Chernobyl Exclusion Zone (CEZ) repository | Birds | Mel-spectrograms, MFCCs | GMM, RF based on decision tree |
| Yang et al. [27] | 1200 syllables from bird species | Birds | Wavelet packet decomposition | Cubic SVM, RF, RT |
| Castor et al. [28] | Bioacoustic recordings at 48 kHz | Birds | MFCCs | Deep multi-instance learning architecture |
| Zhong et al. [36] | 48 kHz audio recordings | Birds | Mel-spectrogram | Deep CNN |
| Zhao et al. [37] | Xeno-canto acoustic repository for vocalization | Birds | Mel-band-pass filter bank | GMM, event-based model |
| Ventura et al. [38] | Xeno-canto acoustic repository for vocalization | Birds | MFCCs | Audio parameterization, GMM, SBM, ROI model |
| de Oliveira et al. [29] | 48 kHz acoustic recordings | Birds | Mel-spectrogram | HMM/GMM, GMM, SBM |
| Gan et al. [24] | 24 h audio | Amphibians | LDFC spectrogram | SVM |
| Xie et al. [25] | 512 audio samples | Amphibians | Linear predictive coefficients, MFCC, wavelet-based features | Multi-label learning algorithm |
| Ruff et al. [39] | 12 s call recordings | Birds and mammals | Spectrogram | CNN |
| Ramli and Jaafar [40] | 675 call samples | Amphibians | Short-time energy (STE) and short-time average zero-crossing rate (STAZCR) | SBM |
| Cramer et al. [41] | Vocalizations of 14 bird classes | Birds | Mel-spectrogram | TaxoNet: deep CNN |
| Lostanlen et al. [42] | 10 h audio recordings | Birds | Mel-spectrogram | Context-adaptive Neural Network (CN-NN) |
| Nanni et al. [43] | 2814 audio samples | Birds and mammals | Spectrogram, harmonic | CNN |
| Nanni et al. [44] | Xeno-canto archives, online | Birds and mammals | Spectrogram | CNN |
| Nanni et al. [45] | Xeno-canto archives, online data | Birds and mammals | Spectrogram | CNN |
| Pandeya et al. [46] | Online database | Mammals | Mel-spectrogram | CNN, Convolutional Deep Belief Network (CDBN) |
| González-Hernández et al. [47] | Online database | Mammals | Whole spectrogram, spectrogram of thump signal, octave analysis coefficient | Artificial Neural Network (ANN) |
| Incze et al. [48] | Xeno-canto archives for calls | Birds | Spectrogram | CNN |
| Zhang et al. [49] | 2762 call events | Birds | Spectrogram | GMM, SVM |
| Sprengel et al. [50] | More than 33,000 call events | Birds | Spectrogram | CNN |
| Lasseck [51] | Xeno-canto archive for bird vocalizations | Birds | Spectrogram | Deep CNN |
| Zhang and Li [52] | Freesound repository for call recordings | Birds | Mel-scaled wavelet packet decomposition sub-band cepstral coefficients (MWSCC) | SVM |
| Stowell et al. [53] | Audio from 12 species | Birds | Mel-spectrogram | Random Forest, HMM |
| Salamon et al. [54] | 5428 call recordings | Birds | MFCCs | Deep CNN |
| Noda et al. [55] | Audio from 88 insect species | Insects | MFCCs and linear frequency cepstral coefficients (LFCCs) | SVM |
| Ntalampiras [56] | Xeno-canto archives | Birds | Mel-scale filterbank, short-time Fourier transform (STFT) | HMM, Random Forest |
| Szenicer et al. [57] | 8000 h of audio signal | Mammals | Spectrogram | CNN |
| Turesson et al. [12] | Vocalizations recorded by microphone at 44.1 kHz | Mammals | Time–frequency spectrogram | Optimum Path Forest, Multi-layer Artificial Neural Network, SVM, K-Nearest Neighbors, Logistic Regression, AdaBoost |
| Knight et al. [58] | Audio clips from 19 bird species | Birds | Spectrogram | CNN |
| Chalmers et al. [59] | Audio clips of five distinct bird species, 2104 individuals | Birds | Mel-frequency cepstrum (MFC) | Multi-layer Perceptrons |
| Zhang et al. [60] | 1435 one-minute audio clips | Birds and insects | Spectrogram | K-Nearest Neighbor, Decision Tree, Multi-layer Perceptrons |
| Jaafar and Ramli [10] | 675 audio recordings from 15 frog species | Amphibians | Mel-frequency cepstral coefficients (MFCC) | K-Nearest Neighbor with Fuzzy Distance weighting |
| Bedoya et al. [13] | 30 individuals with 54 recorders | Birds | Spectrogram | CNN |
| Brodie et al. [61] | 512 audio samples per frame, 2584 frames in total | Mammals | Spectrogram | SVM |
| Bermant et al. [9] | 650 spectrogram images | Mammals | Spectrogram | CNN, LSTM-RNN |
| Zeppelzauer et al. [62] | 335 min of audio recordings, 635 annotated rumbles | Mammals | Spectrogram | SVM |
| Lopez-Tello et al. [6] | Audio recordings of birds and mammals | Birds and mammals | MFCC | SVM |
| do Nascimento et al. [14] | 24 h vocal recordings from six recorders | Mammals | Spectrogram with fast Fourier transform | Linear Mixed Model |
| Yip et al. [63] | Vocalizations during breeding season between 5:00 and 8:00 a.m. | Birds | Spectrogram | K-Means Clustering |
| Dufourq et al. [64] | Eight Song Meter recorders from 1 March to 20 August 2016 | Mammals | Spectrogram | CNN |
| Mac Aodha et al. [65] | 1024 audio samples between 5 kHz and 13 kHz | Mammals | Spectrogram with fast Fourier transform | CNN |

3.1.3. Performance Metrics: Different Performance Measures Are Frequently Used to Assess Acoustic Wildlife Monitoring Systems
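The heading above refers to performance measures without listing them in this extract. As a minimal sketch, assuming the commonly reported detection measures (precision, recall, F1-score, and accuracy), the following shows how they are derived from confusion-matrix counts. The class and function names are hypothetical and do not come from any of the reviewed studies.

```python
from dataclasses import dataclass


@dataclass
class DetectionCounts:
    """Confusion-matrix counts for a binary call detector (hypothetical helper)."""
    tp: int  # calls correctly detected
    fp: int  # noise or other species flagged as the target call
    fn: int  # target calls that were missed
    tn: int  # non-call segments correctly rejected


def precision(c: DetectionCounts) -> float:
    # Fraction of detections that are real target calls.
    denom = c.tp + c.fp
    return c.tp / denom if denom else 0.0


def recall(c: DetectionCounts) -> float:
    # Fraction of real target calls that were detected.
    denom = c.tp + c.fn
    return c.tp / denom if denom else 0.0


def f1_score(c: DetectionCounts) -> float:
    # Harmonic mean of precision and recall.
    p, r = precision(c), recall(c)
    return 2 * p * r / (p + r) if (p + r) else 0.0


def accuracy(c: DetectionCounts) -> float:
    # Fraction of all segments classified correctly.
    total = c.tp + c.fp + c.fn + c.tn
    return (c.tp + c.tn) / total if total else 0.0


if __name__ == "__main__":
    # Illustrative counts only; these are not results from any cited paper.
    counts = DetectionCounts(tp=80, fp=20, fn=10, tn=890)
    print(precision(counts), recall(counts), f1_score(counts), accuracy(counts))
```

In long field recordings, non-call segments usually far outnumber calls, so accuracy can look high even for a weak detector; precision and recall are typically more informative in that setting.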

3.2. Summary of Wildlife Acoustic Monitoring Features Amidst AI Classifiers

3.2.1. Summary of Strengths and Weaknesses for Bioacoustics Monitoring Amidst Bioacoustics’ Features

3.2.2. Classification Methods’ Comparison and Resources Required

Classifier in Acoustic Monitoring through Acoustics

3.2.3. Resources Required for AI Monitoring of Wildlife through Acoustics

3.2.4. Summary of Strengths and Weaknesses for Acoustic Wildlife Monitoring Using AI Classifier

3.3. Acoustic Wildlife Monitoring Simulation Tools

3.4. Uses of Acoustic Wildlife Monitoring Amidst AI Methods

3.5. Active versus Passive Sensing Methods in Acoustic Monitoring in Wildlife

3.6. Practitioner Consideration

3.7. Challenges and Future Works

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Campos, I.B.; Landers, T.J.; Lee, K.D.; Lee, W.G.; Friesen, M.R.; Gaskett, A.C.; Ranjard, L. Assemblage of Focal Species Recognizers—AFSR: A technique for decreasing false indications of presence from acoustic automatic identification in a multiple species context. PLoS ONE 2019, 14, e0212727.

- Digby, A.; Towsey, M.; Bell, B.D.; Teal, P.D. A practical comparison of manual and autonomous methods for acoustic monitoring. Methods Ecol. Evol. 2013, 4, 675–683.

- Knight, E.C.; Hannah, K.C.; Foley, G.J.; Scott, C.D.; Brigham, R.M.; Bayne, E. Recommendations for acoustic recognizer performance assessment with application to five common automated signal recognition programs. Avian Conserv. Ecol. 2017, 12, 14.

- Jahn, O.; Ganchev, T.; Marques, M.I.; Schuchmann, K.-L. Automated Sound Recognition Provides Insights into the Behavioral Ecology of a Tropical Bird. PLoS ONE 2017, 12, e0169041.

- Znidersic, E.; Towsey, M.; Roy, W.; Darling, S.E.; Truskinger, A.; Roe, P.; Watson, D.M. Using visualization and machine learning methods to monitor low detectability species—The least bittern as a case study. Ecol. Inform. 2019, 55, 101014.

- Lopez-Tello, C.; Muthukumar, V. Classifying Acoustic Signals for Wildlife Monitoring and Poacher Detection on UAVs. In Proceedings of the 21st Euromicro Conference on Digital System Design (DSD), Prague, Czech Republic, 29–31 August 2018; pp. 685–690.

- Steinberg, B.Z.; Beran, M.J.; Chin, S.H.; Howard, J.H., Jr. A neural network approach to source localization. J. Acoust. Soc. Am. 1991, 90, 2081–2090.

- Gibb, R.; Browning, E.; Glover-Kapfer, P.; Jones, K.E. Emerging opportunities and challenges for passive acoustics in ecological assessment and monitoring. Methods Ecol. Evol. 2018, 10, 169–185.

- Bermant, P.C.; Bronstein, M.M.; Wood, R.J.; Gero, S.; Gruber, D.F. Deep Machine Learning Techniques for the Detection and Classification of Sperm Whale Bioacoustics. Sci. Rep. 2019, 9, 12588.

- Jaafar, H.; Ramli, D.A. Effect of Natural Background Noise and Man-Made Noise on Automated Frog Calls Identification System. J. Trop. Resour. Sustain. Sci. (JTRSS) 2015, 3, 208–213.

- Ruff, Z.J.; Lesmeister, D.B.; Duchac, L.S.; Padmaraju, B.K.; Sullivan, C.M. Automated identification of avian vocalizations with deep convolutional neural networks. Remote Sens. Ecol. Conserv. 2019, 6, 79–92.

- Turesson, H.K.; Ribeiro, S.; Pereira, D.R.; Papa, J.P.; de Albuquerque, V.H.C. Machine Learning Algorithms for Automatic Classification of Marmoset Vocalizations. PLoS ONE 2016, 11, e0163041.

- Bedoya, C.L.; Molles, L.E. Acoustic Censusing and Individual Identification of Birds in the Wild. bioRxiv 2021.

- Nascimento, L.A.D.; Pérez-Granados, C.; Beard, K.H. Passive Acoustic Monitoring and Automatic Detection of Diel Patterns and Acoustic Structure of Howler Monkey Roars. Diversity 2021, 13, 566.

- Bao, J.; Xie, Q. Artificial intelligence in animal farming: A systematic literature review. J. Clean. Prod. 2022, 331, 129956.

- Sharma, S.; Sato, K.; Gautam, B.P. Bioacoustics Monitoring of Wildlife using Artificial Intelligence: A Methodological Literature Review. In Proceedings of the 2022 International Conference on Networking and Network Applications (NaNA), Urumqi, China, 3–5 December 2022; pp. 1–9.

- Kitchenham, B.A.; Charters, S.M. Guidelines for Performing Systematic Literature Review in Software Engineering. EBSE Technical Report, EBSE-2007-01. 2007. Available online: https://www.researchgate.net/publication/302924724_Guidelines_for_performing_Systematic_Literature_Reviews_in_Software_Engineering (accessed on 22 April 2023).

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2021.

- Bergler, C.; Schröter, H.; Cheng, R.X.; Barth, V.; Weber, M.; Nöth, E.; Hofer, H.; Maier, A. ORCA-SPOT: An Automatic Killer Whale Sound Detection Toolkit Using Deep Learning. Sci. Rep. 2019, 9, 10997.

- Allen, A.N.; Harvey, M.; Harrell, L.; Jansen, A.; Merkens, K.P.; Wall, C.C.; Cattiau, J.; Oleson, E.M. A Convolutional Neural Network for Automated Detection of Humpback Whale Song in a Diverse, Long-Term Passive Acoustic Dataset. Front. Mar. Sci. 2021, 8, 607321.

- Sugai, L.S.M.; Silva, T.S.F.; Ribeiro, J.W.; Llusia, D. Terrestrial Passive Acoustic Monitoring: Review and Perspectives. Bioscience 2018, 69, 15–25.

- Xie, J.; Zhu, M.; Hu, K.; Zhang, J.; Hines, H.; Guo, Y. Frog calling activity detection using lightweight CNN with multi-view spectrogram: A case study on Kroombit tinker frog. Mach. Learn. Appl. 2021, 7, 100202.

- Xie, J.; Hu, K.; Zhu, M.; Guo, Y. Bioacoustic signal classification in continuous recordings: Syllable-segmentation vs sliding-window. Expert Syst. Appl. 2020, 152, 113390.

- Gan, H.; Zhang, J.; Towsey, M.; Truskinger, A.; Stark, D.; van Rensburg, B.J.; Li, Y.; Roe, P. A novel frog chorusing recognition method with acoustic indices and machine learning. Future Gener. Comput. Syst. 2021, 125, 485–495.

- Xie, J.; Michael, T.; Zhang, J.; Roe, P. Detecting Frog Calling Activity Based on Acoustic Event Detection and Multi-label Learning. Procedia Comput. Sci. 2016, 80, 627–638.

- Xie, J.; Towsey, M.; Yasumiba, K.; Zhang, J.; Roe, P. Detection of anuran calling activity in long field recordings for bio-acoustic monitoring. In Proceedings of the 10th International Conference on Intelligent Sensors, Sensor Networks and Information Processing (ISSNIP), Singapore, 7–9 April 2015.

- Yang, S.; Friew, R.; Shi, Q. Acoustic classification of bird species using wavelets and learning algorithm. In Proceedings of the 13th International Conference on Machine Learning and Computing (ICMLC), New York, NY, USA, 26 February–1 March 2021.

- Castor, J.; Vargas-Masis, R.; Alfaro-Rojan, D.U. Understanding variable performance on deep MIL framework for the acoustic detection of Tropical birds. In Proceedings of the 6th Latin America High Performance Computing Conference (CARLA), Cuenca, Ecuador, 2–4 September 2020.

- de Oliveira, A.G.; Ventura, T.M.; Ganchev, T.D.; de Figueiredo, J.M.; Jahn, O.; Marques, M.I.; Schuchmann, K.-L. Bird acoustic activity detection based on morphological filtering of the spectrogram. Appl. Acoust. 2015, 98, 34–42.

- Himawan, I.; Towsey, M.; Law, B.; Roe, P. Deep learning techniques for koala activity detection. In Proceedings of the 19th Annual Conference of the International Speech Communication Association (INTERSPEECH), Hyderabad, India, 2–6 September 2018.

- Adavanne, S.; Drossos, K.; Cakir, E.; Virtanen, T. Stacked convolutional and recurrent neural networks for bird audio detection. In Proceedings of the 25th European Signal Processing Conference (EUSIPCO), Greek Island, Greece, 28 August–2 September 2017.

- Prince, P.; Hill, A.; Piña Covarrubias, E.; Doncaster, P.; Snaddon, J.L.; Rogers, A. Deploying Acoustic Detection Algorithms on Low-Cost, Open-Source Acoustic Sensors for Environmental Monitoring. Sensors 2019, 19, 553.

- Zhang, F.; Zhang, L.; Chen, H.; Xie, J. Bird Species Identification Using Spectrogram Based on Multi-Channel Fusion of DCNNs. Entropy 2021, 23, 1507.

- Madhavi, A.; Pamnani, R. Deep learning based audio classifier for bird species. Int. J. Sci. Res. 2018, 3, 228–233.

- Stowell, D.; Wood, M.D.; Pamuła, H.; Stylianou, Y.; Glotin, H. Automatic acoustic detection of birds through deep learning: The first Bird Audio Detection challenge. Methods Ecol. Evol. 2018, 10, 368–380.

- Zhong, M.; Taylor, R.; Bates, N.; Christey, D.; Basnet, H.; Flippin, J.; Palkovitz, S.; Dodhia, R.; Ferres, J.L. Acoustic detection of regionally rare bird species through deep convolutional neural networks. Ecol. Inform. 2021, 64, 101333.

- Zhao, Z.; Zhang, S.-H.; Xu, Z.-Y.; Bellisario, K.; Dai, N.-H.; Omrani, H.; Pijanowski, B.C. Automated bird acoustic event detection and robust species classification. Ecol. Inform. 2017, 39, 99–108.

- Ventura, T.M.; de Oliveira, A.G.; Ganchev, T.D.; de Figueiredo, J.M.; Jahn, O.; Marques, M.I.; Schuchmann, K.-L. Audio parameterization with robust frame selection for improved bird identification. Expert Syst. Appl. 2015, 42, 8463–8471.

- Ruff, Z.J.; Lesmeister, D.B.; Appel, C.L.; Sullivan, C.M. Workflow and convolutional neural network for automated identification of animal sounds. Ecol. Indic. 2021, 124, 107419.

- Ramli, D.A.; Jaafar, H. Peak finding algorithm to improve syllable segmentation for noisy bioacoustics sound signal. Procedia Comput. Sci. 2016, 96, 100–109.

- Cramer, A.L.; Lostanlen, V.; Farnsworth, A.; Salamon, J.; Bello, J.P. Chirping up the Right Tree: Incorporating Biological Taxonomies into Deep Bioacoustic Classifiers. In Proceedings of the 45th International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020.

- Lostanlen, V.; Salamon, J.; Farnsworth, A.; Kelling, S.; Bello, J.P. Robust sound event detection in bioacoustic sensor networks. PLoS ONE 2019, 14, e0214168.

- Nanni, L.; Costa, Y.M.G.; Aguiar, R.L.; Mangolin, R.B.; Brahnam, S.; Silla, C.N., Jr. Ensemble of convolutional neural networks to improve animal audio classification. EURASIP J. Audio Speech Music Process. 2020, 2020, 8.

- Nanni, L.; Maguolo, G.; Paci, M. Data augmentation approaches for improving animal audio classification. Ecol. Inform. 2020, 57, 101084.

- Nanni, L.; Maguolo, G.; Brahnam, S.; Paci, M. An Ensemble of Convolutional Neural Networks for Audio Classification. Appl. Sci. 2021, 11, 5796.

- Pandeya, Y.R.; Kim, D.; Lee, J. Domestic Cat Sound Classification Using Learned Features from Deep Neural Nets. Appl. Sci. 2018, 8, 1949.

- González-Hernández, F.R.; Sánchez-Fernández, L.P.; Suárez-Guerra, S.; Sánchez-Pérez, L.A. Marine mammal sound classification based on a parallel recognition model and octave analysis. Appl. Acoust. 2017, 119, 17–28.

- Incze, A.; Jancsa, H.; Szilagyi, Z.; Farkas, A.; Sulyok, C. Bird sound recognition using a convolutional neural network. In Proceedings of the 16th International Symposium on Intelligent Systems and Informatics (SISY), Subotica, Serbia, 13–19 September 2018; pp. 000295–000300.

- Zhang, S.-H.; Zhao, Z.; Xu, Z.-Y.; Bellisario, K.; Pijanowski, B.C. Automatic Bird Vocalization Identification Based on Fusion of Spectral Pattern and Texture Features. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 271–275.

- Sprengel, E.; Jaggi, M.; Kilcher, Y.; Hofmann, T. Audio Based Species Identification Using Deep Learning Techniques; CEUR: Berlin, Germany, 2016.

- Lasseck, M. Audio Based Bird Species Identification Using Deep Convolutional Neural Networks; CEUR: Berlin, Germany, 2018.

- Zhang, X.; Li, Y. Adaptive energy detection for bird sound detection in complex environments. Neurocomputing 2015, 155, 108–116.

- Stowell, D.; Benetos, E.; Gill, L.F. On-Bird Sound Recordings: Automatic Acoustic Recognition of Activities and Contexts. IEEE/ACM Trans. Audio Speech Lang. Process. 2017, 25, 1193–1206.

- Salamon, J.; Bello, J.P.; Farnsworth, A.; Kelling, S. Fusing shallow and deep learning for bioacoustic bird species classification. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 141–145.

- Noda, J.J.; Travieso, C.M.; Sanchez-Rodriguez, D.; Dutta, M.K.; Singh, A. Using bioacoustic signals and Support Vector Machine for automatic classification of insects. In Proceedings of the IEEE International Conference on Signal Processing and Integrated Networks (SPIN), Noida, India, 11–12 February 2016; pp. 656–659.

- Ntalampiras, S. Bird species identification via transfer learning from music genres. Ecol. Inform. 2018, 44, 76–81.

- Szenicer, A.; Reinwald, M.; Moseley, B.; Nissen-Meyer, T.; Muteti, Z.M.; Oduor, S.; McDermott-Roberts, A.; Baydin, A.G.; Mortimer, B. Seismic savanna: Machine learning for classifying wildlife and behaviours using ground-based vibration field recordings. Remote Sens. Ecol. Conserv. 2021, 8, 236–250.

- Knight, E.C.; Hernandez, S.P.; Bayne, E.M.; Bulitko, V.; Tucker, B. Pre-processing spectrogram parameters improve the accuracy of bioacoustic classification using convolutional neural networks. Bioacoustics 2019, 29, 337–355.

- Chalmers, C.; Fergus, P.; Wich, S.; Longmore, S.N. Modelling Animal Biodiversity Using Acoustic Monitoring and Deep Learning. In Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN), Shenzhen, China, 18–22 July 2021; pp. 1–7.

- Zhang, L.; Towsey, M.; Xie, J.; Zhang, J.; Roe, P. Using multi-label classification for acoustic pattern detection and assisting bird species surveys. Appl. Acoust. 2016, 110, 91–98.

- Brodie, S.; Allen-Ankins, S.; Towsey, M.; Roe, P.; Schwarzkopf, L. Automated species identification of frog choruses in environmental recordings using acoustic indices. Ecol. Indic. 2020, 119, 106852.

- Zeppelzauer, M.; Hensman, S.; Stoeger, A.S. Towards an automated acoustic detection system for free-ranging elephants. Bioacoustics 2014, 24, 13–29.

- Yip, D.A.; Mahon, C.L.; MacPhail, A.G.; Bayne, E.M. Automated classification of avian vocal activity using acoustic indices in regional and heterogeneous datasets. Methods Ecol. Evol. 2021, 12, 707–719.

- Dufourq, E.; Durbach, I.; Hansford, J.P.; Hoepfner, A.; Ma, H.; Bryant, J.V.; Stender, C.S.; Li, W.; Liu, Z.; Chen, Q.; et al. Automated detection of Hainan gibbon calls for passive acoustic monitoring. Remote Sens. Ecol. Conserv. 2021, 7, 475–487.

- Mac Aodha, O.; Gibb, R.; Barlow, K.E.; Browning, E.; Firman, M.; Freeman, R.; Harder, B.; Kinsey, L.; Mead, G.R.; Newson, S.E.; et al. Bat detective—Deep learning tools for bat acoustic signal detection. PLoS Comput. Biol. 2018, 14, e1005995.

- Mohammed, R.A.; Ali, A.E.; Hassan, N.F. Advantages and disadvantages of automatic speaker recognition systems. J. Al-Qadisiyah Comput. Sci. Math. 2019, 11, 21–30.

- Melo, I.; Llusia, D.; Bastos, R.P.; Signorelli, L. Active or passive acoustic monitoring? Assessing methods to track anuran communities in tropical savanna wetlands. Ecol. Indic. 2021, 132, 108305.

- Goeau, H.; Glotin, H.; Vellinga, W.P.; Planque, R.; Joly, A. LifeCLEF bird identification task 2016: The arrival of deep learning. In CLEF: Conference and Labs of the Evaluation Forum, Évora, Portugal, 5–8 September 2016; CEUR: Berlin, Germany, 2016; pp. 440–449.

| Features | Strengths | Weaknesses | Research Gap |
|---|---|---|---|
| Multi-view spectrogram | Improved accuracy, better sound differentiation, efficient detection and classification, multi-information capture, improved understanding of audio signals | Reduces species recognition, performs poorly on low-quality audio signals | Recognize calling behavior, categorize species? |
| Mel-spectrogram | Reduces background noise, higher signal-to-noise ratio, improved audio feature extraction | Limited frequency range, computational complexity, lack of interpretability | Applicability to larger databases? |
| Constant-Q spectrogram | Improved acoustic resolution, reduces artifacts and distortions, better for complex sound analysis | Costly computation, lacks inverse transform, difficult data structure | Replicability to other species? |
| MFCC | Simple, effective performance, adaptable, captures major features, weighted data value | Less effective in noisy settings, influenced by the filter | Applicability to larger databases? |
| LDFC spectrogram | Auditory structure details, improved call recognition, boosted species recognition, improved signal details, robust noise reduction, better data understanding | Limits detection accuracy, increased computation time, time-consuming analysis, limited frequency range, complex data interpretation | Multi-species support? |
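The Mel-spectrogram is the most frequently listed feature in the reviewed studies. As a rough sketch of how such a feature can be computed, the block below builds a log Mel-spectrogram with NumPy only: a Hann-windowed short-time Fourier transform followed by triangular Mel filters. The function names, the 2595/700 Mel-scale formula (one common convention), and the default parameters (`n_fft=1024`, `hop=512`, `n_mels=40`) are assumptions for illustration, not settings taken from any cited paper.

```python
import numpy as np


def hz_to_mel(f):
    # One common Mel-scale convention (assumed here).
    return 2595.0 * np.log10(1.0 + np.asarray(f, dtype=float) / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (np.asarray(m, dtype=float) / 2595.0) - 1.0)


def mel_filterbank(sr, n_fft, n_mels):
    """Triangular filters spaced evenly on the Mel scale from 0 Hz to sr/2."""
    edges_hz = mel_to_hz(np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_mels + 2))
    fft_freqs = np.fft.rfftfreq(n_fft, d=1.0 / sr)
    bank = np.zeros((n_mels, fft_freqs.size))
    for i in range(n_mels):
        lo, mid, hi = edges_hz[i], edges_hz[i + 1], edges_hz[i + 2]
        rising = (fft_freqs - lo) / (mid - lo)
        falling = (hi - fft_freqs) / (hi - mid)
        # Triangle: minimum of the two slopes, clipped at zero outside [lo, hi].
        bank[i] = np.clip(np.minimum(rising, falling), 0.0, None)
    return bank


def log_mel_spectrogram(signal, sr, n_fft=1024, hop=512, n_mels=40):
    """Return an array of shape (n_frames, n_mels) in decibels."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop
    frames = np.stack(
        [signal[i * hop: i * hop + n_fft] * window for i in range(n_frames)]
    )
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2  # (n_frames, n_fft // 2 + 1)
    mel_power = power @ mel_filterbank(sr, n_fft, n_mels).T
    return 10.0 * np.log10(mel_power + 1e-10)  # small offset avoids log(0)


if __name__ == "__main__":
    # Synthetic 2 kHz tone standing in for a recorded call (illustrative only).
    sr = 44100
    t = np.arange(sr) / sr
    tone = np.sin(2 * np.pi * 2000.0 * t)
    print(log_mel_spectrogram(tone, sr).shape)
```

The resulting two-dimensional array is what the CNN-based classifiers in the reviewed studies typically take as an image-like input; production pipelines would more likely rely on an established audio library than on this hand-rolled version.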

| Classification Methods | Strengths/Weakness |
|---|---|
| CNN and HMM | |
| CNN and CNN + RNN | |
| CNN-LSTM and SBM | |
| GMM and HMM | |

| Classification Methods | Strengths | Weaknesses | Research Gaps |
|---|---|---|---|
| CNN | Improves noise detection, automated feature learning, open-source software, reduces human error, improved species detection | High computational cost, needs large labeled data, lacks temporal modeling | Reliable for call counting? |
| CNN + RNN or CRNN | Accurate temporal modeling, reduces false positives, effectively filters noise | Computationally demanding, requires labeled data, may miss some signals | Enhancement of evaluation metrics |
| CNN-LSTM | Improved temporal modeling, reduced false positive rate, better background noise handling | Time-consuming labeling, complex network structure, high computational cost | Suitability for unbalanced datasets? |
| HMM | Real-time processing speed, low false positive rate, simple model complexity | Low accuracy rate, vulnerable to noise, simple modeling | Evaluate multi-species performance |
| GMM | Precise feature modeling, real-time processing ability, fewer parameters needed | Complexity in modeling, false positives with noise | Conduct detailed performance investigation |

| Background | Active Acoustic Monitoring | Passive Acoustic Monitoring |
|---|---|---|
| Introduction | Detects the echo of a transmitted acoustic signal reflected off the species of interest | Detects the vocalizations that wildlife emits naturally |
| Strengths | Ability to target particular wildlife species | Non-invasive, less likely to disturb wildlife |
| Weaknesses | Invasive, potential to affect wildlife species’ behavior | Limited to naturally produced vocalizations |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sharma, S.; Sato, K.; Gautam, B.P. A Methodological Literature Review of Acoustic Wildlife Monitoring Using Artificial Intelligence Tools and Techniques. Sustainability 2023, 15, 7128. https://doi.org/10.3390/su15097128