Costly “Greetings” from AI: Effects of Product Recommenders and Self-Disclosure Levels on Transaction Costs

Abstract

1. Introduction

2. Background and Hypothesis Development

2.1. Background

2.2. Hypothesis Development

2.2.1. The Effect of Product Recommender Subject on Transaction Cost

2.2.2. The Mediating Effect of Emotional Support

2.2.3. The Moderating Effect of Self-Disclosure Level

3. Method

3.1. Participant

3.2. Research Design

3.3. Procedure

3.4. Data

3.4.1. Dependent Variable

3.4.2. Independent Variable

3.4.3. Mediators

3.5. Mathematical Model

3.6. Ethical Consideration

4. Results

4.1. Manipulation Check

4.2. Hypothesis Tests

4.2.1. Test of H1

4.2.2. Test of H2

4.2.3. Test of H3

4.2.4. The Moderated Mediation Analysis

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Huang, M.-H.; Rust, R.T. Artificial intelligence in service. J. Serv. Res. 2018, 21, 155–172.

2. Schuetzler, R.M.; Grimes, G.M.; Scott Giboney, J. The impact of chatbot conversational skill on engagement and perceived humanness. J. Manag. Inf. Syst. 2020, 37, 875–900.

3. Bouguezzi, S.S. Milos How Does the Amazon Recommendation System Work? Available online: https://www.baeldung.com/cs/amazon-recommendation-system (accessed on 10 July 2024).

4. Langfelder, N. Generative AI: Revolutionizing Retail through Hyper-Personalization. Available online: https://www.data-axle.com/resources/blog/generative-ai-revolutionizing-retail-through-hyper-personalization/ (accessed on 20 July 2024).

5. Khattar, V. Famous Beauty Brands Using Chatbot Technology. Available online: https://www.skin-match.com/beauty-technology/famous-beauty-brands-using-chatbot-technology (accessed on 10 July 2024).

6. Nussey, S. EXCLUSIVE SoftBank Shrinks Robotics Business, Stops Pepper Production-Sources. Available online: https://www.reuters.com/technology/exclusive-softbank-shrinks-robotics-business-stops-pepper-production-sources-2021-06-28/ (accessed on 10 July 2024).

7. Hoffman, G. Anki, Jibo, and Kuri: What We Can Learn from Social Robots that Didn’t Make It. Available online: https://spectrum.ieee.org/anki-jibo-and-kuri-what-we-can-learn-from-social-robotics-failures (accessed on 10 July 2024).

8. Kaur, D.; Uslu, S.; Rittichier, K.J.; Durresi, A. Trustworthy artificial intelligence: A review. ACM Comput. Surv. CSUR 2022, 55, 1–38.

9. Gray, H.M.; Gray, K.; Wegner, D.M. Dimensions of mind perception. Science 2007, 315, 619.

10. Dietvorst, B.J.; Simmons, J.P.; Massey, C. Overcoming algorithm aversion: People will use imperfect algorithms if they can (even slightly) modify them. Manag. Sci. 2018, 64, 1155–1170.

11. Commerford, B.P.; Dennis, S.A.; Joe, J.R.; Ulla, J.W. Man versus machine: Complex estimates and auditor reliance on artificial intelligence. J. Account. Res. 2022, 60, 171–201.

12. Glikson, E.; Woolley, A.W. Human trust in artificial intelligence: Review of empirical research. Acad. Manag. Ann. 2020, 14, 627–660.

13. Lee, Y.-C.; Yamashita, N.; Huang, Y. Designing a chatbot as a mediator for promoting deep self-disclosure to a real mental health professional. Proc. ACM Hum. Comput. Interact. 2020, 4, 1–27.

14. Tsumura, T.; Yamada, S. Influence of agent’s self-disclosure on human empathy. PLoS ONE 2023, 18, e0283955.

15. Saffarizadeh, K.; Keil, M.; Boodraj, M.; Alashoor, T. “My Name is Alexa. What’s Your Name?” The Impact of Reciprocal Self-Disclosure on Post-Interaction Trust in Conversational Agents. J. Assoc. Inf. Syst. 2024, 25, 528–568.

16. Correll, S.J.; Ridgeway, C.L. Expectation states theory. In Handbook of Social Psychology; Springer: Berlin/Heidelberg, Germany, 2003; pp. 29–51.

17. Al-Natour, S.; Benbasat, I.; Cenfetelli, R. Designing online virtual advisors to encourage customer self-disclosure: A theoretical model and an empirical test. J. Manag. Inf. Syst. 2021, 38, 798–827.

18. Antaki, C.; Barnes, R.; Leudar, I. Self-disclosure as a situated interactional practice. Br. J. Soc. Psychol. 2005, 44, 181–199.

19. Greene, K.; Derlega, V.J.; Mathews, A. Chapter 22: Self-disclosure in personal relationships. In The Cambridge Handbook of Personal Relationships; Cambridge University Press: Cambridge, UK, 2006; pp. 409–427.

20. Bigras, É.; Léger, P.-M.; Sénécal, S. Recommendation agent adoption: How recommendation presentation influences employees’ perceptions, behaviors, and decision quality. Appl. Sci. 2019, 9, 4244.

21. Bouayad, L.; Padmanabhan, B.; Chari, K. Can recommender systems reduce healthcare costs? The role of time pressure and cost transparency in prescription choice. MIS Q. 2020, 44, 1859–1903.

22. Al-Natour, S.; Benbasat, I. The adoption and use of IT artifacts: A new interaction-centric model for the study of user-artifact relationships. J. Assoc. Inf. Syst. 2009, 10, 2.

23. Lee, J.; Lee, D.; Lee, J.-G. Influence of rapport and social presence with an AI psychotherapy chatbot on users’ self-disclosure. Int. J. Hum. Comput. Interact. 2024, 40, 1620–1631.

24. Meng, J.; Dai, Y. Emotional support from AI chatbots: Should a supportive partner self-disclose or not? J. Comput.-Mediat. Commun. 2021, 26, 207–222.

25. Kim, T.W.; Jiang, L.; Duhachek, A.; Lee, H.; Garvey, A. Do you mind if I ask you a personal question? How AI service agents alter consumer self-disclosure. J. Serv. Res. 2022, 25, 649–666.

26. Longoni, C.; Cian, L. When do we trust AI’s recommendations more than people’s? Harv. Bus. Rev. 2020, 23.

27. Belanche, D.; Casaló, L.V.; Flavián, C.; Schepers, J. Service robot implementation: A theoretical framework and research agenda. Serv. Ind. J. 2020, 40, 203–225.

28. Kotler, P.; Kartajaya, H.; Setiawan, I. Marketing 6.0: The Future Is Immersive; John Wiley & Sons: Hoboken, NJ, USA, 2023.

29. Dongbo, M.; Miniaoui, S.; Fen, L.; Althubiti, S.A.; Alsenani, T.R. Intelligent chatbot interaction system capable for sentimental analysis using hybrid machine learning algorithms. Inf. Process. Manag. 2023, 60, 103440.

30. Leo, X.; Huh, Y.E. Who gets the blame for service failures? Attribution of responsibility toward robot versus human service providers and service firms. Comput. Hum. Behav. 2020, 113, 106520.

31. You, S.; Yang, C.L.; Li, X. Algorithmic versus human advice: Does presenting prediction performance matter for algorithm appreciation? J. Manag. Inf. Syst. 2022, 39, 336–365.

32. Devaraj, S.; Fan, M.; Kohli, R. Antecedents of B2C channel satisfaction and preference: Validating e-commerce metrics. Inf. Syst. Res. 2002, 13, 316–333.

33. Filiz, I.; Judek, J.R.; Lorenz, M.; Spiwoks, M. The extent of algorithm aversion in decision-making situations with varying gravity. PLoS ONE 2023, 18, e0278751.

34. Kim, T.; Lee, H.; Kim, M.Y.; Kim, S.; Duhachek, A. AI increases unethical consumer behavior due to reduced anticipatory guilt. J. Acad. Mark. Sci. 2023, 51, 785–801.

35. Huo, W.; Zheng, G.; Yan, J.; Sun, L.; Han, L. Interacting with medical artificial intelligence: Integrating self-responsibility attribution, human–computer trust, and personality. Comput. Hum. Behav. 2022, 132, 107253.

36. Filieri, R.; Lin, Z.; Li, Y.; Lu, X.; Yang, X. Customer emotions in service robot encounters: A hybrid machine-human intelligence approach. J. Serv. Res. 2022, 25, 614–629.

37. Berger, B.; Adam, M.; Rühr, A.; Benlian, A. Watch me improve—Algorithm aversion and demonstrating the ability to learn. Bus. Inf. Syst. Eng. 2021, 63, 55–68.

38. Chen, R.; Sharma, S.K. Self-disclosure at social networking sites: An exploration through relational capitals. Inf. Syst. Front. 2013, 15, 269–278.

39. Lee, J.; Lee, D. User perception and self-disclosure towards an AI psychotherapy chatbot according to the anthropomorphism of its profile picture. Telemat. Inform. 2023, 85, 102052.

40. Ho, A.; Hancock, J.; Miner, A.S. Psychological, relational, and emotional effects of self-disclosure after conversations with a chatbot. J. Commun. 2018, 68, 712–733.

41. Schmalz, S.; Orth, U.R. Brand attachment and consumer emotional response to unethical firm behavior. Psychol. Mark. 2012, 29, 869–884.

42. Feeney, B.C.; Collins, N.L. A new look at social support: A theoretical perspective on thriving through relationships. Personal. Soc. Psychol. Rev. 2015, 19, 113–147.

43. Collins, N.L.; Feeney, B.C. Working models of attachment shape perceptions of social support: Evidence from experimental and observational studies. J. Personal. Soc. Psychol. 2004, 87, 363.

44. Pessoa, L. On the relationship between emotion and cognition. Nat. Rev. Neurosci. 2008, 9, 148–158.

45. Rafaeli, A.; Erez, A.; Ravid, S.; Derfler-Rozin, R.; Treister, D.E.; Scheyer, R. When customers exhibit verbal aggression, employees pay cognitive costs. J. Appl. Psychol. 2012, 97, 931.

46. Burleson, B.R. The experience and effects of emotional support: What the study of cultural and gender differences can tell us about close relationships, emotion, and interpersonal communication. Pers. Relatsh. 2003, 10, 1–23.

47. Lawler, E.J.; Thye, S.R. Social exchange theory of emotions. In Handbooks of Sociology and Social Research; Springer: Boston, MA, USA, 2006; pp. 295–320.

48. Reeves, B.; Nass, C. The Media Equation: How People Treat Computers, Television, and New Media Like Real People; Cambridge University Press: Cambridge, UK, 1996; Volume 10, pp. 19–36.

49. Tong, S.; Jia, N.; Luo, X.; Fang, Z. The Janus face of artificial intelligence feedback: Deployment versus disclosure effects on employee performance. Strateg. Manag. J. 2021, 42, 1600–1631.

50. Ruan, Y.; Mezei, J. When do AI chatbots lead to higher customer satisfaction than human frontline employees in online shopping assistance? Considering product attribute type. J. Retail. Consum. Serv. 2022, 68, 103059.

51. Zhai, M.; Chen, Y. How do relational bonds affect user engagement in e-commerce livestreaming? The mediating role of trust. J. Retail. Consum. Serv. 2023, 71, 103239.

52. Bues, M.; Steiner, M.; Stafflage, M.; Krafft, M. How mobile in-store advertising influences purchase intention: Value drivers and mediating effects from a consumer perspective. Psychol. Mark. 2017, 34, 157–174.

53. Gao, T.T.; Rohm, A.J.; Sultan, F.; Pagani, M. Consumers un-tethered: A three-market empirical study of consumers’ mobile marketing acceptance. J. Bus. Res. 2013, 66, 2536–2544.

54. Troshani, I.; Rao Hill, S.; Sherman, C.; Arthur, D. Do we trust in AI? Role of anthropomorphism and intelligence. J. Comput. Inf. Syst. 2021, 61, 481–491.

55. Grewal, D.; Guha, A.; Satornino, C.B.; Schweiger, E.B. Artificial intelligence: The light and the darkness. J. Bus. Res. 2021, 136, 229–236.

56. Agarwal, U.A.; Narayana, S.A. Impact of relational communication on buyer–supplier relationship satisfaction: Role of trust and commitment. Benchmarking Int. J. 2020, 27, 2459–2496.

57. Nam, K.; Baker, J.; Ahmad, N.; Goo, J. Dissatisfaction, disconfirmation, and distrust: An empirical examination of value co-destruction through negative electronic word-of-mouth (eWOM). Inf. Syst. Front. 2020, 22, 113–130.

58. Cohen, J. Quantitative methods in psychology: A power primer. Psychol. Bull. 1992, 112, 155–159.

59. Wang, C.; Chen, J.; Xie, P. Observation or interaction? Impact mechanisms of gig platform monitoring on gig workers’ cognitive work engagement. Int. J. Inf. Manag. 2022, 67, 102548.

60. Li, H.; Xie, X.; Zou, Y.; Wang, T. “Take action, buddy!”: Self–other differences in passive risk-taking for health and safety. J. Exp. Soc. Psychol. 2024, 110, 104542.

61. Jongepier, F.; Klenk, M. The Philosophy of Online Manipulation; Taylor & Francis: Abingdon, UK, 2022.

62. Coons, C.; Weber, M. Manipulation: Theory and Practice; Oxford University Press: Oxford, UK, 2014.

63. Koohikamali, M.; Peak, D.A.; Prybutok, V.R. Beyond self-disclosure: Disclosure of information about others in social network sites. Comput. Hum. Behav. 2017, 69, 29–42.

64. Li-Barber, K.T. Self-disclosure and student satisfaction with Facebook. Comput. Hum. Behav. 2012, 28, 624–630.

65. Kort-Butler, L. The Encyclopedia of Juvenile Delinquency and Justice; Wiley-Blackwell: Oxford, UK, 2017; pp. 1–4.

66. Kessler, R.C.; Kendler, K.S.; Heath, A.; Neale, M.C.; Eaves, L.J. Kessler Perceived Social Support Scale. J. Personal. Soc. Psychol. 1992.

67. Lakey, B.; Cassady, P.B. Cognitive processes in perceived social support. J. Personal. Soc. Psychol. 1990, 59, 337.

68. Hayes, A.F. Introduction to Mediation, Moderation, and Conditional Process Analysis: A Regression-Based Approach; The Guilford Press: London, UK; New York, NY, USA, 2013.

69. Bobek, D.D.; Hageman, A.M.; Radtke, R.R. The effects of professional role, decision context, and gender on the ethical decision making of public accounting professionals. Behav. Res. Account. 2015, 27, 55–78.

70. Commerford, B.P.; Hatfield, R.C.; Houston, R.W. The effect of real earnings management on auditor scrutiny of management’s other financial reporting decisions. Account. Rev. 2018, 93, 145–163.

71. Kim, M.; Sudhir, K.; Uetake, K.; Canales, R. When salespeople manage customer relationships: Multidimensional incentives and private information. J. Mark. Res. 2019, 56, 749–766.

72. Daugherty, P.R.; Wilson, H.J. Human + Machine: Reimagining Work in the Age of AI; Harvard Business Press: Boston, MA, USA, 2018.

73. Guha, A.; Grewal, D.; Kopalle, P.K.; Haenlein, M.; Schneider, M.J.; Jung, H.; Moustafa, R.; Hegde, D.R.; Hawkins, G. How artificial intelligence will affect the future of retailing. J. Retail. 2021, 97, 28–41.

74. Bigman, Y.E.; Gray, K. People are averse to machines making moral decisions. Cognition 2018, 181, 21–34.

75. Gray, K.; Wegner, D.M. Feeling robots and human zombies: Mind perception and the uncanny valley. Cognition 2012, 125, 125–130.

76. Burton, J.W.; Stein, M.K.; Jensen, T.B. A systematic review of algorithm aversion in augmented decision making. J. Behav. Decis. Mak. 2020, 33, 220–239.

| Author | Method | Main Findings |
|---|---|---|
| Boudorf et al. [3] | Randomized controlled trial | When using digital advice, consumers were willing to pay 14% less for popular brand plans and 37% less for plans with higher star ratings, compared to having only basic product information. |
| Kim et al. [34] | Experiment | Consumers are more likely to engage in unethical behaviors when interacting with AI agents, due to reduced anticipatory feelings of guilt. |
| Leo and Huh [30] | Experiment | When service fails, people attribute less responsibility toward a service provider if it is a robot rather than a human. People attribute more blame toward a service firm when a robot delivers a failed service than when a human does. |
| Huo et al. [35] | Survey | Patients’ self-responsibility attribution is positively related to human–computer trust (HCT) and sequentially enhances the acceptance of medical AI for independent diagnosis and treatment. |
| You et al. [31] | Experiment | Individuals follow algorithmic advice more than identical human advice due to higher trust in algorithms, and this trust remains unchanged even when they are informed of the algorithm’s prediction errors. |
| Filieri et al. [36] | Machine learning | The majority of customer interactions with service robots were positive, and robots that moved triggered more emotional responses than stationary ones. |
| Berger et al. [37] | Experiment | For an objective and non-personal decision task, human decision makers exhibit algorithm aversion if they are familiar with the advisor’s performance and the advisor errs. |
| Commerford et al. [11] | Experiment | Auditors proposed smaller adjustments to management’s complex estimates when receiving contradictory evidence from an AI system rather than a human specialist. This effect was particularly pronounced when the estimates were based on relatively objective inputs. |
| Filiz et al. [33] | Experiment | Algorithm aversion occurs more frequently as the seriousness of the decision’s consequences increases. |

| Condition | | Subjects: AI | Subjects: Human |
|---|---|---|---|
| Self-Disclosure Level | Low | Group A (N = 18) | Group C (N = 19) |
| | High | Group B (N = 22) | Group D (N = 19) |

Dependent Variable: Transaction Cost

Panel A: Descriptives

| Subject | N | Mean | Std. Dev. | Std. Err. | Min | Max |
|---|---|---|---|---|---|---|
| AI | 32 | 4.090 | 1.940 | 0.343 | 1 | 7 |
| Human | 32 | 3.410 | 1.915 | 0.339 | 1 | 7 |
| Total | 64 | 3.750 | 1.944 | 0.243 | 1 | 7 |

Panel B: One-way ANOVA (one-tailed)

| Source | SS | df | MS | F | Sig. |
|---|---|---|---|---|---|
| Between Groups | 7.563 | 1 | 7.563 | 2.035 | 0.080 |
| Within Groups | 230.438 | 62 | 3.717 | | |
| Total | 238.000 | 63 | | | |
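The one-way ANOVA in Panel B can be reproduced from the summary statistics alone. The Python sketch below uses only the standard library. It assumes that the unrounded AI-minus-human mean difference equals the magnitude of the total effect in the mediation table (0.6875), because the rounded means 4.090 and 3.410 lose precision; all variable names are illustrative.

```python
import math

# Panel A: group sizes and standard deviations of transaction cost
n_ai, n_human = 32, 32
sd_ai, sd_human = 1.940, 1.915

# Standard error of each group mean: SD / sqrt(n)
se_ai = sd_ai / math.sqrt(n_ai)
se_human = sd_human / math.sqrt(n_human)

# Assumption: unrounded mean difference taken from the reported total effect (|-0.6875|)
mean_diff = 0.6875

# Between-groups sum of squares for two groups: n1*n2/(n1+n2) * (m1 - m2)^2
ss_between = n_ai * n_human / (n_ai + n_human) * mean_diff ** 2
df_between = 1

# Within-groups sum of squares is taken directly from Panel B
ss_within = 230.438
df_within = n_ai + n_human - 2

ms_between = ss_between / df_between
ms_within = ss_within / df_within
f_stat = ms_between / ms_within

# With two groups, F equals the square of the pooled-variance t statistic
t_stat = math.sqrt(f_stat)

print(f"SE (AI, human): {se_ai:.3f}, {se_human:.3f}")
print(f"SS between = {ss_between:.4f}, MS within = {ms_within:.3f}")
print(f"F(1, {df_within}) = {f_stat:.3f}, |t| = {t_stat:.4f}")
```

The recovered |t| of about 1.4264 equals the t statistic of the total effect in the mediation table. This is expected: regressing transaction cost on a binary recommender indicator is equivalent to a two-group ANOVA, with F = t².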

| | Effect | t | p | LLCI | ULCI |
|---|---|---|---|---|---|
| Total effect | −0.6875 | −1.4264 | 0.1588 | −1.4923 | 0.1173 |
| Direct effect | −0.3348 | −0.7457 | 0.4587 | −1.0849 | 0.4152 |
| Indirect effect | −0.3527 | - | - | −0.7857 | −0.0151 |
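The rows of this table should satisfy the standard linear decomposition total = direct + indirect, and the interval widths reveal which confidence level was used. The check below works only from the reported numbers; the helper `ci_multiplier` is a hypothetical name introduced for illustration, and reading its result as a particular confidence level is an inference, not something the table states.

```python
# Effects reported in the mediation table (coefficient, t)
total_effect, total_t = -0.6875, -1.4264
direct_effect, direct_t = -0.3348, -0.7457
indirect_effect = -0.3527  # bootstrapped; no t or p reported

# In a linear mediation model, total = direct + indirect
decomposition_gap = total_effect - (direct_effect + indirect_effect)


def ci_multiplier(effect, t_value, llci, ulci):
    """Half-width of the CI divided by the standard error implied by effect / t."""
    se = abs(effect / t_value)
    half_width = (ulci - llci) / 2
    return half_width / se


m_total = ci_multiplier(total_effect, total_t, -1.4923, 0.1173)
m_direct = ci_multiplier(direct_effect, direct_t, -1.0849, 0.4152)

# A bootstrap CI excludes zero when both bounds share the same sign
indirect_ci = (-0.7857, -0.0151)
indirect_excludes_zero = indirect_ci[0] * indirect_ci[1] > 0

print(f"total - (direct + indirect) = {decomposition_gap:.4f}")
print(f"CI multipliers: total {m_total:.3f}, direct {m_direct:.3f}")
print(f"indirect CI excludes zero: {indirect_excludes_zero}")
```

Both multipliers come out near 1.67, which is close to the 0.95 quantile of a t distribution with about 60 degrees of freedom; that would make these 90% intervals, though this is an inference from the arithmetic. The decomposition gap is zero up to floating-point error, and the bootstrap interval for the indirect effect lies entirely below zero.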

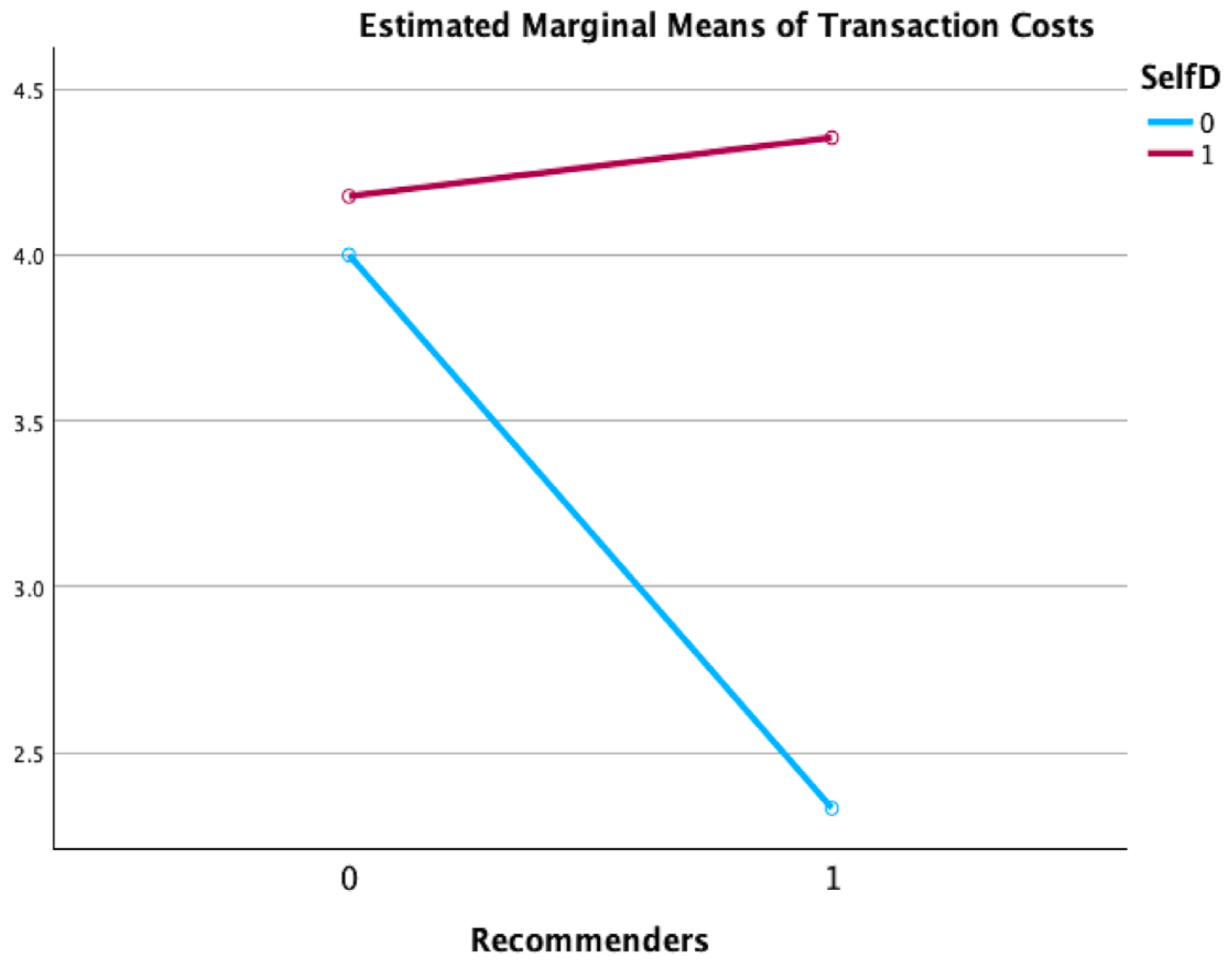

Panel A: Descriptive statistics (each cell shows mean, standard deviation, and cell n)

| Subjects | Self-Disclosure: Low | Self-Disclosure: High | Overall |
|---|---|---|---|
| AI | Group A: 4.00 (SD 1.604, n = 15) | Group C: 4.18 (SD 2.243, n = 17) | 4.09 (SD 1.94, n = 32) |
| Human | Group B: 2.33 (SD 0.976, n = 15) | Group D: 4.35 (SD 2.06, n = 17) | 3.41 (SD 1.915, n = 32) |
| Overall | 3.17 (SD 1.555, n = 30) | 4.26 (SD 2.122, n = 34) | 3.75 (SD 1.944, n = 64) |

Panel B: Conventional ANOVA

| Source | Sum of Squares | df | F | p |
|---|---|---|---|---|
| Subjects | 8.848 | 1 | 2.685 | 0.053 |
| Self-Disclosure | 19.216 | 1 | 5.832 | 0.010 |
| Subjects × Self-Disclosure | 13.536 | 1 | 4.108 | 0.024 |
| Error | 197.686 | 60 | | |

Panel C: Simple Effect

| Contrast | df | MS | F | p |
|---|---|---|---|---|
| Low: AI versus Human | 1, 60 | 20.833 | 6.323 | 0.008 |
| High: AI versus Human | 1, 60 | 0.265 | 0.080 | 0.389 |
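Every effect in Panels B and C has one numerator degree of freedom, so each F is the sum of squares (equivalently, the mean square) divided by the error mean square, 197.686 / 60. The sketch recomputes these F values and rebuilds the low-disclosure simple-effect sum of squares from the rounded Panel A cell means with the two-cell contrast formula n1·n2/(n1 + n2)·(m1 − m2)². The helper name `simple_effect_ss` is hypothetical.

```python
# Error term from Panel B of the two-way ANOVA on transaction cost
ss_error, df_error = 197.686, 60
ms_error = ss_error / df_error

# Each effect has 1 df, so MS = SS and F = SS / MS_error
effects = {
    "Subjects": 8.848,
    "Self-Disclosure": 19.216,
    "Subjects x Self-Disclosure": 13.536,
    "Low: AI vs Human (simple effect)": 20.833,
    "High: AI vs Human (simple effect)": 0.265,
}
f_values = {name: ss / ms_error for name, ss in effects.items()}


def simple_effect_ss(n1, m1, n2, m2):
    """Sum of squares for a two-cell contrast: n1*n2/(n1+n2) * (m1 - m2)^2."""
    return n1 * n2 / (n1 + n2) * (m1 - m2) ** 2


# Low self-disclosure cells from Panel A (means rounded to two decimals)
ss_low = simple_effect_ss(15, 4.00, 15, 2.33)

for name, f in f_values.items():
    print(f"{name}: F(1, {df_error}) = {f:.3f}")
print(f"Low-disclosure SS from rounded means: {ss_low:.3f}")
```

From the rounded means the low-disclosure sum of squares comes out at about 20.917 rather than the reported 20.833. A human low-disclosure mean of 7/3 (about 2.333, displayed as 2.33) would reproduce 20.833 exactly, so the gap is consistent with rounding in Panel A.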

Panel A: Descriptive statistics (each cell shows mean, standard deviation, and cell n)

| Subjects | Self-Disclosure: Low | Self-Disclosure: High | Overall |
|---|---|---|---|
| AI | Group A: 2.33 (SD 1.291, n = 15) | Group C: 3.29 (SD 1.896, n = 17) | 2.84 (SD 1.687, n = 32) |
| Human | Group B: 4.33 (SD 1.447, n = 15) | Group D: 2.94 (SD 1.919, n = 17) | 3.59 (SD 1.829, n = 32) |
| Overall | 3.33 (SD 1.688, n = 30) | 3.12 (SD 1.887, n = 34) | 3.22 (SD 1.786, n = 64) |

Panel B: Direct Path

| Path | Coef | SE | t | p |
|---|---|---|---|---|
| Subjects → Emotional Support | 2.0000 | 0.6131 | 3.2622 | 0.0018 |
| Subjects × Self-Disclosure → Emotional Support | −2.3529 | 0.8411 | −2.7974 | 0.0069 |
| Emotional Support → Transaction Cost | −0.4702 | 0.1267 | −3.7107 | 0.0004 |

Panel C: Conditional Indirect Path by Self-Disclosure Levels (Subjects → Emotional Support → Transaction Cost)

| Assigned Self-Disclosure Level | Effect | BootSE | BootLLCI | BootULCI |
|---|---|---|---|---|
| Low | −0.9404 | 0.3702 | −1.7858 | −0.3401 |
| High | 0.1660 | 0.3081 | −0.4675 | 0.7584 |
| Pairwise Contrast | 1.1064 | 0.4881 | 0.2662 | 2.1809 |
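The point estimates in Panel C follow the usual first-stage moderated mediation arithmetic from the regression-based conditional process approach (Hayes, in the reference list): at moderator value W, the conditional indirect effect is (a1 + a3·W)·b, and the pairwise contrast is the index a3·b. The sketch assumes dummy coding inferred from the signs of the coefficients (AI = 0, human = 1; low self-disclosure = 0, high = 1); the tables do not state this coding. Under that assumption, a1 and a3 can also be recovered from the Panel A cell means of emotional support.

```python
# Path coefficients from Panel B
# Assumed coding (inferred, not stated): human = 1, AI = 0; high self-disclosure = 1, low = 0
a1 = 2.0000    # Subjects -> Emotional Support
a3 = -2.3529   # Subjects x Self-Disclosure -> Emotional Support
b = -0.4702    # Emotional Support -> Transaction Cost


def conditional_indirect(w):
    """Indirect effect of Subjects on transaction cost at moderator value w."""
    return (a1 + a3 * w) * b


effect_low = conditional_indirect(0)   # low self-disclosure
effect_high = conditional_indirect(1)  # high self-disclosure
index_mod_med = a3 * b                 # equals effect_high - effect_low

# Cross-check a1 and a3 against Panel A cell means (rounded to two decimals)
es_means = {("AI", "low"): 2.33, ("AI", "high"): 3.29,
            ("Human", "low"): 4.33, ("Human", "high"): 2.94}
a1_from_means = es_means[("Human", "low")] - es_means[("AI", "low")]
a3_from_means = (es_means[("Human", "high")] - es_means[("AI", "high")]) - a1_from_means

print(f"low: {effect_low:.4f}, high: {effect_high:.4f}, contrast: {index_mod_med:.4f}")
print(f"a1 from means: {a1_from_means:.2f}, a3 from means: {a3_from_means:.2f}")
```

The sketch checks only the point estimates; the bootstrap standard errors and intervals cannot be reproduced without the raw data. Reading Panel C directly, the low-disclosure interval excludes zero, the high-disclosure interval does not, and the pairwise contrast interval (0.2662, 2.1809) also excludes zero.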

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, Y.; Tu, Y.; Zeng, S. Costly “Greetings” from AI: Effects of Product Recommenders and Self-Disclosure Levels on Transaction Costs. Sustainability 2024, 16, 8236. https://doi.org/10.3390/su16188236
