Beyond GIS Layering: Challenging the (Re)use and Fusion of Archaeological Prospection Data Based on Bayesian Neural Networks (BNN)

Abstract

1. Introduction

2. Methodology and Case Study Area

2.1. Case Study Area

2.2. Data Sources

2.3. Methodology

3. Results

3.1. Neural Network Regression Values

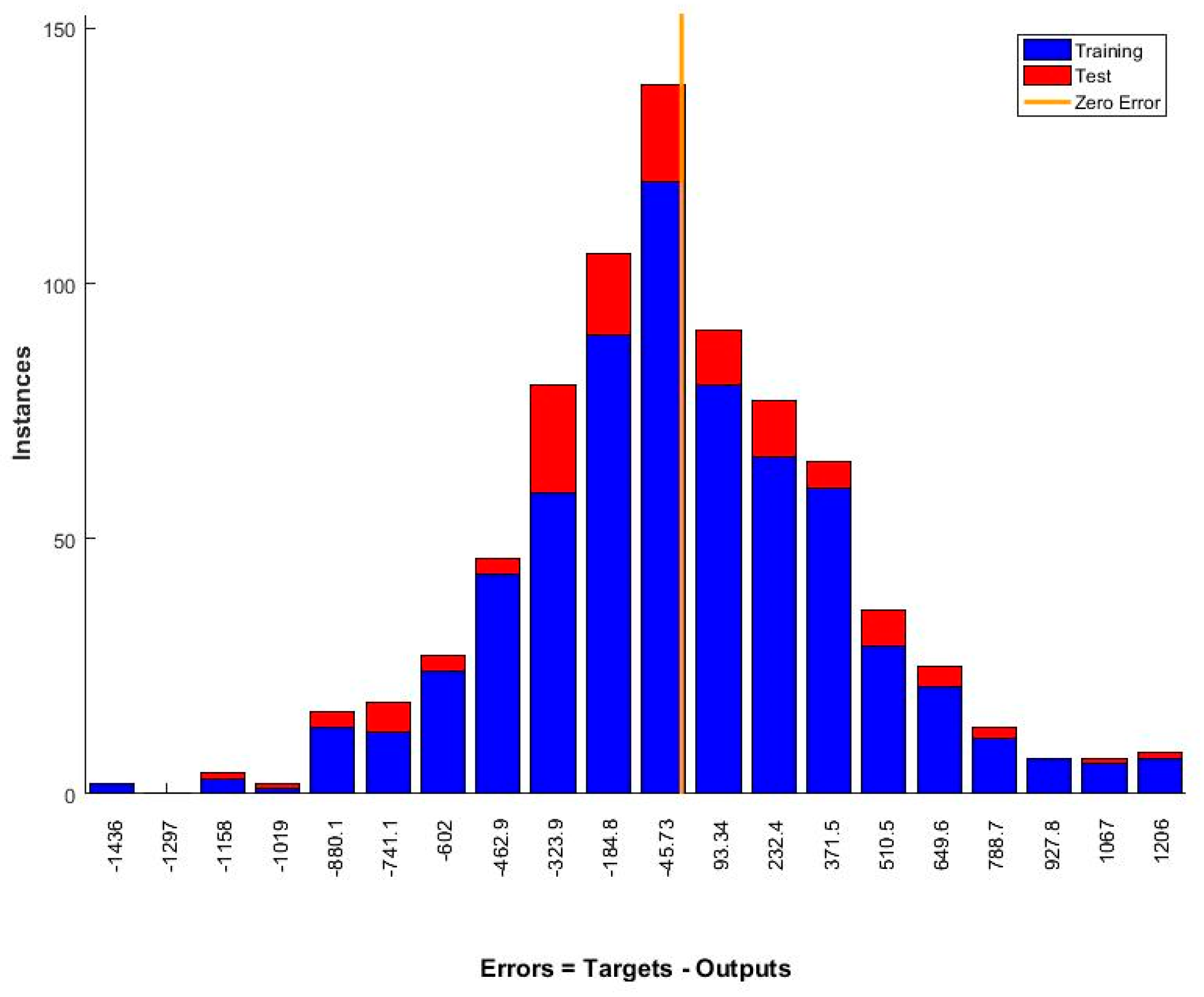

3.2. Errors in Neural Networks

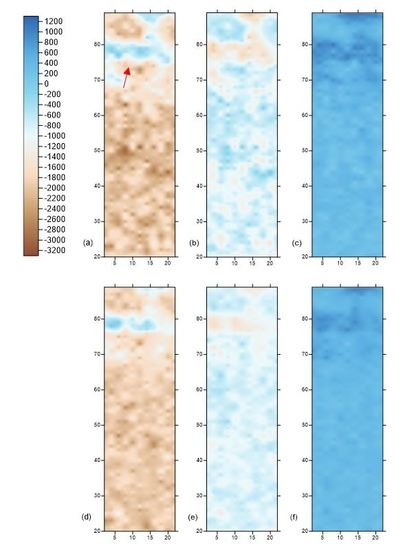

3.3. Comparison with Real GPR Slices

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Banaszek, Ł.; Cowley, D.C.; Middleton, M. Towards National Archaeological Mapping. Assessing Source Data and Methodology—A Case Study from Scotland. Geosciences 2018, 8, 272. [Google Scholar] [CrossRef]

- Opitz, R.; Herrmann, J. Recent Trends and Long-standing Problems in Archaeological Remote Sensing. J. Comput. Appl. Archaeol. 2018, 1, 19–41. [Google Scholar] [CrossRef]

- Leisz, S.J. An overview of the application of remote sensing to archaeology during the twentieth century. In Mapping Archaeological Landscapes from Space; Springer: New York, NY, USA, 2013; pp. 11–19. [Google Scholar]

- Filzwieser, R.; Olesen, L.H.; Verhoeven, G.; Mauritsen, E.S.; Neubauer, W.; Trinks, I.; Nowak, M.; Nowak, R.; Schneidhofer, P.; Nau, E.; et al. Integration of Complementary Archaeological Prospection Data from a Late Iron Age Settlement at Vesterager—Denmark. J. Archaeol. Method Theory 2018, 25, 313–333. [Google Scholar] [CrossRef]

- Agapiou, A.; Lysandrou, V.; Sarris, A.; Papadopoulos, N.; Hadjimitsis, D.G. Fusion of Satellite Multispectral Images Based on Ground-Penetrating Radar (GPR) Data for the Investigation of Buried Concealed Archaeological Remains. Geosciences 2017, 7, 40. [Google Scholar] [CrossRef]

- Gharbia, R.; Hassanien, A.E.; El-Baz, A.H.; Elhoseny, M.; Gunasekaran, M. Multi-spectral and panchromatic image fusion approach using stationary wavelet transform and swarm flower pollination optimization for remote sensing applications. Future Gener. Comput. Syst. 2018, 88, 501–511. [Google Scholar] [CrossRef]

- Alexakis, D.; Sarris, A.; Astaras, T.; Albanakis, K. Detection of Neolithic settlements in Thessaly (Greece) through multispectral and hyperspectral satellite imagery. Sensors 2009, 9, 1167–1187. [Google Scholar] [CrossRef] [PubMed]

- Alexakis, A.; Sarris, A.; Astaras, T.; Albanakis, K. Integrated GIS, remote sensing and geomorphologic approaches for the reconstruction of the landscape habitation of Thessaly during the Neolithic period. J. Archaeol. Sci. 2011, 38, 89–100. [Google Scholar] [CrossRef]

- Traviglia, A.; Cottica, D. Remote sensing applications and archaeological research in the Northern Lagoon of Venice: The case of the lost settlement of Constanciacus. J. Archaeol. Sci. 2011, 38, 2040–2050. [Google Scholar] [CrossRef]

- Gallo, D.; Ciminale, M.; Becker, H.; Masini, N. Remote sensing techniques for reconstructing a vast Neolithic settlement in Southern Italy. J. Archaeol. Sci. 2009, 36, 43–50. [Google Scholar] [CrossRef]

- Yu, L.; Zhang, Y.; Nie, Y.; Zhang, W.; Gao, H.; Bai, X.; Liu, F.; Hategekimana, Y.; Zhu, J. Improved detection of archaeological features using multi-source data in geographically diverse capital city sites. J. Cult. Herit. 2018, 33, 145–158. [Google Scholar] [CrossRef]

- Nsanziyera, F.A.; Lechgar, H.; Fal, S.; Maanan, M.; Saddiqi, O.; Oujaa, A.; Rhinane, H. Remote-sensing data-based Archaeological Predictive Model (APM) for archaeological site mapping in desert area, South Morocco. C. R. Geosci. 2018, 350, 319–330. [Google Scholar] [CrossRef]

- Morehart, T.C.; Millhauser, K.J. Monitoring cultural landscapes from space: Evaluating archaeological sites in the Basin of Mexico using very high resolution satellite imagery. J. Archaeol. Sci. Rep. 2016, 10, 363–376. [Google Scholar] [CrossRef]

- Lasaponara, R.; Masini, N. Beyond modern landscape features: New insights in the archaeological area of Tiwanaku in Bolivia from satellite data. Int. J. Appl. Earth Obs. Geoinform. 2014, 26, 464–471. [Google Scholar] [CrossRef]

- Agapiou, A.; Lysandrou, V.; Hadjimitsis, D.G. Optical Remote Sensing Potentials for Looting Detection. Geosciences 2017, 7, 98. [Google Scholar] [CrossRef]

- Di Maio, R.; La Manna, M.; Piegari, E.; Zara, A.; Bonetto, J. Reconstruction of a Mediterranean coast archaeological site by integration of geophysical and archaeological data: The eastern district of the ancient city of Nora (Sardinia, Italy). J. Archaeol. Sci. Rep. 2018, 20, 230–238. [Google Scholar] [CrossRef]

- Balkaya, Ç.; Kalyoncuoğlu, Ü.Y.; Özhanlı, M.; Merter, G.; Çakmak, O.; Talih Güven, İ. Ground-penetrating radar and electrical resistivity tomography studies in the biblical Pisidian Antioch city, southwest Anatolia. Archaeol. Prospect. 2018, 1–16. [Google Scholar] [CrossRef]

- Küçükdemirci, M.; Özer, E.; Piro, S.; Baydemir, N.; Zamuner, D. An application of integration approaches for archaeo-geophysical data: Case study from Aizanoi. Archaeol. Prospect. 2018, 25, 33–44. [Google Scholar] [CrossRef]

- Gustavsen, L.; Cannell, R.J.S.; Nau, E.; Tonning, C.; Trinks, I.; Kristiansen, M.; Gabler, M.; Paasche, K.; Gansum, T.; Hinterleitner, A.; et al. Archaeological prospection of a specialized cooking-pit site at Lunde in Vestfold, Norway. Archaeol. Prospect. 2018, 25, 17–31. [Google Scholar] [CrossRef]

- Bevan, A. The data deluge. Antiquity 2015, 89, 1473–1484. [Google Scholar] [CrossRef]

- Orengo, H.; Petrie, C. Large-Scale, Multi-Temporal Remote Sensing of Palaeo-River Networks: A Case Study from Northwest India and its Implications for the Indus Civilisation. Remote Sens. 2017, 9, 735. [Google Scholar] [CrossRef]

- Agapiou, A. Remote sensing heritage in a petabyte-scale: Satellite data and heritage Earth Engine© applications. Int. J. Digit. Earth 2017, 10, 85–102. [Google Scholar] [CrossRef]

- Liss, B.; Howland, D.M.; Levy, E.T. Testing Google Earth Engine for the automatic identification and vectorization of archaeological features: A case study from Faynan, Jordan. J. Archaeol. Sci. Rep. 2017, 15, 299–304. [Google Scholar] [CrossRef]

- Gyucha, A.; Yerkes, W.R.; Parkinson, A.W.; Sarris, A.; Papadopoulos, N.; Duffy, R.P.; Salisbury, B.R. Settlement Nucleation in the Neolithic: A Preliminary Report of the Körös Regional Archaeological Project’s Investigations at Szeghalom-Kovácshalom and Vésztő-Mágor. In Neolithic and Copper Age between the Carpathians and the Aegean Sea: Chronologies and Technologies from the 6th to the 4th Millennium BCE; Hansen, S., Raczky, P., Anders, A., Reingruber, A., Eds.; International Workshop Budapest 2012; Dr. Rudolf Habelt: Bonn, Germany, 2012; pp. 129–142. [Google Scholar]

- Hegedűs, K. Vésztő-Mágori-domb. In Magyarország Régészeti Topográfiája VI; Ecsedy, I., Kovács, L., Maráz, B., Torma, I., Eds.; Békés Megye Régészeti Topográfiája: A Szeghalmi Járás 1982 IV/1; Akadémiai Kiadó: Budapest, Hungary, 1982; pp. 184–185. [Google Scholar]

- Hegedűs, K.; Makkay, J. Vésztő-Mágor: A Settlement of the Tisza Culture. In The Late Neolithic of the Tisza Region: A Survey of Recent Excavations and Their Findings; Tálas, L., Raczky, P., Eds.; Szolnok County Museums: Szolnok, Hungary, 1987; pp. 85–104. [Google Scholar]

- Makkay, J. Vésztő–Mágor. In Ásatás a Szülőföldön; Békés Megyei Múzeumok Igazgatósága: Békéscsaba, Hungary, 2004. [Google Scholar]

- Parkinson, W.A. Tribal Boundaries: Stylistic Variability and Social Boundary Maintenance during the Transition to the Copper Age on the Great Hungarian Plain. J. Anthropol. Archaeol. 2006, 25, 33–58. [Google Scholar] [CrossRef]

- Juhász, I. A Csolt nemzetség monostora. In A középkori Dél-Alföld és Szer; Kollár, T., Ed.; Csongrád Megyei Levéltár: Szeged, Hungary, 2000; pp. 281–304. [Google Scholar]

- Sarris, A.; Papadopoulos, N.; Agapiou, A.; Salvi, M.C.; Hadjimitsis, D.G.; Parkinson, A.; Yerkes, R.W.; Gyucha, A.; Duffy, R.P. Integration of geophysical surveys, ground hyperspectral measurements, aerial and satellite imagery for archaeological prospection of prehistoric sites: The case study of Vésztő-Mágor Tell, Hungary. J. Archaeol. Sci. 2013, 40, 1454–1470. [Google Scholar] [CrossRef]

- Agapiou, A.; Sarris, A.; Papadopoulos, N.; Alexakis, D.D.; Hadjimitsis, D.G. 3D pseudo GPR sections based on NDVI values: Fusion of optical and active remote sensing techniques at the Vésztő-Mágor tell, Hungary. In Archaeological Research in the Digital Age, Proceedings of the 1st Conference on Computer Applications and Quantitative Methods in Archaeology Greek Chapter (CAA-GR), Rethymno Crete, Greece, 6–8 March 2014; Papadopoulos, C., Paliou, E., Chrysanthi, A., Kotoula, E., Sarris, A., Eds.; Institute for Mediterranean Studies-Foundation of Research and Technology (IMS-Forth): Rethymno, Greece, 2015. [Google Scholar]

- Agapiou, A. Development of a Novel Methodology for the Detection of Buried Archaeological Remains Using Remote Sensing Techniques. Ph.D. Thesis, Cyprus University of Technology, Limassol, Cyprus, 2012. (In Greek). Available online: http://ktisis.cut.ac.cy/handle/10488/6950 (accessed on 7 November 2018).

- Neal, R.M. Bayesian Learning for Neural Networks; Springer Science & Business Media: New York, NY, USA, 2012; p. 118. [Google Scholar]

- Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W.; Harlan, J.C. Monitoring the Vernal Advancements and Retrogradation (Greenwave Effect) of Natural Vegetation; NASA/GSFC Final Report; NASA: Greenbelt, MD, USA, 1974. [Google Scholar]

- Roujean, J.L.; Breon, F.M. Estimating PAR absorbed by vegetation from bidirectional reflectance measurements. Remote Sens. Environ. 1995, 51, 375–384. [Google Scholar] [CrossRef]

- Gamon, J.A.; Surfus, J.S. Assessing leaf pigment content and activity with a reflectometer. New Phytol. 1999, 143, 105–117. [Google Scholar] [CrossRef]

- Richardson, A.J.; Wiegand, C.L. Distinguishing vegetation from soil background information. Photogramm. Eng. Remote Sens. 1977, 43, 15–41. [Google Scholar]

- Pearson, R.L.; Miller, L.D. Remote Mapping of Standing Crop Biomass and Estimation of the Productivity of the Short Grass Prairie, Pawnee National Grasslands, Colorado. In Proceedings of the 8th International Symposium on Remote Sensing of the Environment, Ann Arbor, MI, USA, 2–6 October 1972; pp. 1357–1381. [Google Scholar]

- Baret, F.; Guyot, G. Potentials and limits of vegetation indices for LAI and APAR assessment. Remote Sens. Environ. 1991, 35, 161–173. [Google Scholar] [CrossRef]

- Qi, J.; Chehbouni, A.; Huete, A.R.; Kerr, Y.H.; Sorooshian, S. A modified soil adjusted vegetation index. Remote Sens. Environ. 1994, 48, 119–126. [Google Scholar] [CrossRef]

- Kaufman, Y.J.; Tanré, D. Atmospherically resistant vegetation index (ARVI) for EOS-MODIS. IEEE Trans. Geosci. Remote Sens. 1992, 30, 261–270. [Google Scholar] [CrossRef]

- Pinty, B.; Verstraete, M.M. GEMI: A non-linear index to monitor global vegetation from satellites. Plant Ecol. 1992, 101, 15–20. [Google Scholar] [CrossRef]

- Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107. [Google Scholar] [CrossRef]

- Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150. [Google Scholar] [CrossRef]

- Gong, P.; Pu, R.; Biging, G.S.; Larrieu, M.R. Estimation of forest leaf area index using vegetation indices derived from Hyperion hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1355–1362. [Google Scholar] [CrossRef]

- Kim, M.S.; Daughtry, C.S.T.; Chappelle, E.W.; McMurtrey, J.E., III; Walthall, C.L. The Use of High Spectral Resolution Bands for Estimating Absorbed Photosynthetically Active Radiation (APAR). In Proceedings of the 6th Symposium on Physical Measurements and Signatures in Remote Sensing, Val D’Isere, France, 17–21 January 1994. [Google Scholar]

- Zarco-Tejada, P.J.; Berjón, A.; López-Lozano, R.; Miller, J.R.; Martín, P.; Cachorro, V.; González, M.R.; de Frutos, A. Assessing vineyard condition with hyperspectral indices: Leaf and canopy reflectance simulation in a row-structured discontinuous canopy. Remote Sens. Environ. 2005, 99, 271–287. [Google Scholar] [CrossRef]

- Gandia, S.; Fernández, G.; García, J.C.; Moreno, J. Retrieval of Vegetation Biophysical Variables from CHRIS/PROBA Data in the SPARC Campaign. In Proceedings of the 4th ESA CHRIS PROBA Workshop, Frascati, Italy, 28–30 April 2004; pp. 40–48. [Google Scholar]

- Daughtry, C.S.T.; Walthall, C.L.; Kim, M.S.; de Colstoun, E.B.; McMurtrey, J.E. Estimating corn leaf chlorophyll concentration from leaf and canopy reflectance. Remote Sens. Environ. 2000, 74, 229–239. [Google Scholar] [CrossRef]

- Haboudane, D.; Miller, J.R.; Pattey, E.; Zarco-Tejada, P.J.; Strachan, I. Hyperspectral vegetation indices and novel algorithms for predicting green LAI of crop canopies: Modeling and validation in the context of precision agriculture. Remote Sens. Environ. 2004, 90, 337–352. [Google Scholar] [CrossRef]

- Sims, D.A.; Gamon, J.A. Relationships between leaf pigment content and spectral reflectance across a wide range of species, leaf structures and developmental stages. Remote Sens. Environ. 2002, 81, 337–354. [Google Scholar] [CrossRef]

- Castro-Esau, K.L.; Sánchez-Azofeifa, G.A.; Rivard, B. Comparison of spectral indices obtained using multiple spectroradiometers. Remote Sens. Environ. 2006, 103, 276–288. [Google Scholar] [CrossRef]

- Chen, J.; Cihlar, J. Retrieving leaf area index of boreal conifer forests using Landsat Thematic Mapper. Remote Sens. Environ. 1996, 55, 153–162. [Google Scholar] [CrossRef]

- Dash, J.; Curran, P.J. The MERIS terrestrial chlorophyll index. Int. J. Remote Sens. 2004, 25, 5403–5413. [Google Scholar] [CrossRef]

- Gitelson, A.; Merzlyak, M.N. Quantitative estimation of chlorophyll-a using reflectance spectra: Experiments with autumn chestnut and maple leaves. J. Photochem. Photobiol. B Biol. 1994, 22, 247–252. [Google Scholar] [CrossRef]

- Guyot, G.; Baret, F.; Major, D.J. High spectral resolution: Determination of spectral shifts between the red and near infrared. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 1988, 11, 750–760. [Google Scholar]

- Peñuelas, J.; Filella, I.; Lloret, P.; Munoz, F.; Vilajeliu, M. Reflectance assessment of mite effects on apple trees. Int. J. Remote Sens. 1995, 16, 2727–2733. [Google Scholar] [CrossRef]

- Peñuelas, J.; Baret, F.; Filella, I. Semi-empirical indices to assess carotenoids/chlorophyll-a ratio from leaf spectral reflectance. Photosynthetica 1995, 31, 221–230. [Google Scholar]

- Vincini, M.; Frazzi, E.; D’Alessio, P. Angular Dependence of Maize and Sugar Beet Vis from Directional CHRIS/PROBA Data. In Proceedings of the 4th ESA CHRIS PROBA Workshop, Frascati, Italy, 19–21 September 2006; pp. 19–21. [Google Scholar]

- Jordan, C.F. Derivation of leaf area index from quality of light on the forest floor. Ecology 1969, 50, 663–666. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Merzlyak, M.N. Remote estimation of chlorophyll content in higher plant leaves. Int. J. Remote Sens. 1997, 18, 2691–2697. [Google Scholar] [CrossRef]

- Datt, B. Remote sensing of chlorophyll a, chlorophyll b, chlorophyll a+b, and total carotenoid content in eucalyptus leaves. Remote Sens. Environ. 1998, 66, 111–121. [Google Scholar] [CrossRef]

- Haboudane, D.; Miller, J.R.; Tremblay, N.; Zarco-Tejada, P.J.; Dextraze, L. Integrated narrow-band vegetation indices for prediction of crop chlorophyll content for application to precision agriculture. Remote Sens. Environ. 2002, 81, 416–426. [Google Scholar] [CrossRef]

- Broge, N.H.; Leblanc, E. Comparing prediction power and stability of broadband and hyperspectral vegetation indices for estimation of green leaf area index and canopy chlorophyll density. Remote Sens. Environ. 2001, 76, 156–172. [Google Scholar] [CrossRef]

- Vogelmann, J.E.; Rock, B.N.; Moss, D.M. Red edge spectral measurements from sugar maple leaves. Int. J. Remote Sens. 1993, 14, 1563–1575. [Google Scholar] [CrossRef]

- Zarco-Tejada, P.J.; Pushnik, J.C.; Dobrowski, S.; Ustin, S.L. Steady-state chlorophyll a fluorescence detection from canopy derivative reflectance and double-peak red-edge effects. Remote Sens. Environ. 2003, 84, 283–294. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Merzlyak, M.N.; Chivkunova, O.B. Optical properties and nondestructive estimation of anthocyanin content in plant leaves. Photochem. Photobiol. 2001, 74, 38–45. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Zur, Y.; Chivkunova, O.B.; Merzlyak, M.N. Assessing carotenoid content in plant leaves with reflectance spectroscopy. Photochem. Photobiol. 2002, 75, 272–281. [Google Scholar] [CrossRef]

- Lichtenthaler, H.K.; Lang, M.; Sowinska, M.; Heisel, F.; Miehe, J.A. Detection of vegetation stress via a new high resolution fluorescence imaging system. J. Plant Physiol. 1996, 148, 599–612. [Google Scholar] [CrossRef]

- Peñuelas, J.; Gamon, J.A.; Fredeen, A.L.; Merino, J.; Field, C.B. Reflectance indices associated with physiological changes in nitrogen- and water-limited sunflower leaves. Remote Sens. Environ. 1994, 48, 135–146. [Google Scholar] [CrossRef]

- Barnes, J.D.; Balaguer, L.; Manrique, E.; Elvira, S.; Davison, A.W. A reappraisal of the use of DMSO for the extraction and determination of chlorophylls a and b in lichens and higher plants. Environ. Exp. Bot. 1992, 32, 85–100. [Google Scholar] [CrossRef]

- Gamon, J.A.; Serrano, L.; Surfus, J.S. The photochemical reflectance index: An optical indicator of photosynthetic radiation use efficiency across species, functional types, and nutrient levels. Oecologia 1997, 112, 492–501. [Google Scholar] [CrossRef] [PubMed]

- Filella, I.; Amaro, T.; Araus, J.L.; Peñuelas, J. Relationship between photosynthetic radiation-use efficiency of barley canopies and the photochemical reflectance index (PRI). Physiol. Plant. 1996, 96, 211–216. [Google Scholar] [CrossRef]

- Merzlyak, M.N.; Gitelson, A.A.; Chivkunova, O.B.; Rakitin, V.Y. Nondestructive optical detection of pigment changes during leaf senescence and fruit ripening. Physiol. Plant. 1999, 106, 135–141. [Google Scholar] [CrossRef]

- White, D.C.; Williams, M.; Barr, S.L. Detecting sub-surface soil disturbance using hyperspectral first derivative band ratios of associated vegetation stress. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, 27, 243–248. [Google Scholar]

- Peñuelas, J.; Filella, I.; Biel, C.; Serrano, L.; Savé, R. The reflectance at the 950–970 nm region as an indicator of plant water status. Int. J. Remote Sens. 1993, 14, 1887–1905. [Google Scholar] [CrossRef]

- Gnana Sheela, K.; Deepa, S.N. Review on Methods to Fix Number of Hidden Neurons in Neural Networks. Math. Probl. Eng. 2013, 2013, 425740. [Google Scholar] [CrossRef]

- Cao, W.; Wang, X.; Ming, Z.; Gao, J. A review on neural networks with random weights. Neurocomputing 2018, 275, 278–287. [Google Scholar] [CrossRef]

- Giovanis, G.D.; Papaioannou, I.; Straub, D.; Papadopoulos, V. Bayesian updating with subset simulation using artificial neural networks. Comput. Methods Appl. Mech. Eng. 2017, 319, 124–145. [Google Scholar] [CrossRef]

- Cerra, D.; Agapiou, A.; Cavalli, R.M.; Sarris, A. An Objective Assessment of Hyperspectral Indicators for the Detection of Buried Archaeological Relics. Remote Sens. 2018, 10, 500. [Google Scholar] [CrossRef]

- Agapiou, A.; Hadjimitsis, D.G.; Alexakis, D.D. Evaluation of Broadband and Narrowband Vegetation Indices for the Identification of Archaeological Crop Marks. Remote Sens. 2012, 4, 3892–3919. [Google Scholar] [CrossRef]

- Ghassemian, H. A review of remote sensing image fusion methods. Inf. Fusion 2016, 32 Pt A, 75–89. [Google Scholar] [CrossRef]

- Vaiopoulos, A.D. Developing Matlab scripts for image analysis and quality assessment. In Proceedings of the SPIE 8181, Earth Resources and Environmental Remote Sensing/GIS Applications II, 81810B, Prague, Czech Republic, 26 October 2011. [Google Scholar] [CrossRef]

- Agapiou, A.; Lysandrou, V. Remote sensing archaeology: Tracking and mapping evolution in European scientific literature from 1999 to 2015. J. Archaeol. Sci. Rep. 2015, 4, 192–200. [Google Scholar] [CrossRef]

- Tapete, D.; Cigna, F. Trends and perspectives of space-borne SAR remote sensing for archaeological landscape and cultural heritage applications. J. Archaeol. Sci. Rep. 2017, 14, 716–726. [Google Scholar] [CrossRef]

- Chen, F.; Lasaponara, R.; Masini, N. An overview of satellite synthetic aperture radar remote sensing in archaeology: From site detection to monitoring. J. Cult. Herit. 2017, 23, 5–11. [Google Scholar] [CrossRef]

| No | Vegetation Index | Equation | Reference |
|---|---|---|---|
| 1 | NDVI (Normalized Difference Vegetation Index) | $(\rho_{NIR} - \rho_{red})/(\rho_{NIR} + \rho_{red})$ | [34] |
| 2 | RDVI (Renormalized Difference Vegetation Index) | $(\rho_{NIR} - \rho_{red})/(\rho_{NIR} + \rho_{red})^{1/2}$ | [35] |
| 3 | IRG (Red Green Ratio Index) | $\rho_{red} - \rho_{green}$ | [36] |
| 4 | PVI (Perpendicular Vegetation Index) | $(\rho_{NIR} - \alpha\rho_{red} - b)/(1 + \alpha^2)$, where $\rho_{NIR,soil} = \alpha\rho_{red,soil} + b$ | [37] |
| 5 | RVI (Ratio Vegetation Index) | $\rho_{red}/\rho_{NIR}$ | [38] |
| 6 | TSAVI (Transformed Soil Adjusted Vegetation Index) | $[\alpha(\rho_{NIR} - \alpha\rho_{red} - b)]/[\rho_{red} + \alpha\rho_{NIR} - \alpha b + 0.08(1 + \alpha^2)]$, where $\rho_{NIR,soil} = \alpha\rho_{red,soil} + b$ | [39] |
| 7 | MSAVI (Modified Soil Adjusted Vegetation Index) | $[2\rho_{NIR} + 1 - [(2\rho_{NIR} + 1)^2 - 8(\rho_{NIR} - \rho_{red})]^{1/2}]/2$ | [40] |
| 8 | ARVI (Atmospherically Resistant Vegetation Index) | $(\rho_{NIR} - \rho_{rb})/(\rho_{NIR} + \rho_{rb})$, where $\rho_{rb} = \rho_{red} - \gamma(\rho_{blue} - \rho_{red})$ | [41] |
| 9 | GEMI (Global Environment Monitoring Index) | $n(1 - 0.25n) - (\rho_{red} - 0.125)/(1 - \rho_{red})$, where $n = [2(\rho_{NIR}^2 - \rho_{red}^2) + 1.5\rho_{NIR} + 0.5\rho_{red}]/(\rho_{NIR} + \rho_{red} + 0.5)$ | [42] |
| 10 | SARVI (Soil and Atmospherically Resistant Vegetation Index) | $(1 + 0.5)(\rho_{NIR} - \rho_{rb})/(\rho_{NIR} + \rho_{rb} + 0.5)$, where $\rho_{rb} = \rho_{red} - \gamma(\rho_{blue} - \rho_{red})$ | [40] |
| 11 | OSAVI (Optimized Soil Adjusted Vegetation Index) | $(\rho_{NIR} - \rho_{red})/(\rho_{NIR} + \rho_{red} + 0.16)$ | [43] |
| 12 | DVI (Difference Vegetation Index) | $\rho_{NIR} - \rho_{red}$ | [44] |
| 13 | SR × NDVI (Simple Ratio × Normalized Difference Vegetation Index) | $(\rho_{NIR}^2 - \rho_{red})/(\rho_{NIR} + \rho_{red}^2)$ | [45] |
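
As an illustration of how the broadband indices in the table above translate into computation, the following minimal Python sketch evaluates five of them (NDVI, RDVI, OSAVI, MSAVI and DVI) for a single pair of reflectance values. The function names and the sample reflectances are assumptions made for this example only; they are not taken from the study, and a real workflow would apply the same formulas per pixel to calibrated reflectance bands.

```python
import math


def ndvi(nir, red):
    """Normalized Difference Vegetation Index (row 1)."""
    return (nir - red) / (nir + red)


def rdvi(nir, red):
    """Renormalized Difference Vegetation Index (row 2)."""
    return (nir - red) / math.sqrt(nir + red)


def msavi(nir, red):
    """Modified Soil Adjusted Vegetation Index (row 7)."""
    root = math.sqrt((2 * nir + 1) ** 2 - 8 * (nir - red))
    return (2 * nir + 1 - root) / 2


def osavi(nir, red):
    """Optimized Soil Adjusted Vegetation Index (row 11)."""
    return (nir - red) / (nir + red + 0.16)


def dvi(nir, red):
    """Difference Vegetation Index (row 12)."""
    return nir - red


if __name__ == "__main__":
    # Hypothetical reflectances (0..1) for a vegetated pixel.
    nir, red = 0.5, 0.1
    for name, func in [("NDVI", ndvi), ("RDVI", rdvi), ("MSAVI", msavi),
                       ("OSAVI", osavi), ("DVI", dvi)]:
        print(f"{name}: {func(nir, red):.4f}")
```

Indices that depend on soil-line coefficients (PVI, TSAVI) or on the blue band (ARVI, SARVI) are left out of the sketch because they need additional inputs that this section does not specify.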

| No | Vegetation Index | Equation | Reference |
|---|---|---|---|
| 1 | CARI (Chlorophyll Absorption Ratio Index) | $\rho_{700}\,\lvert\alpha \cdot 670 + \rho_{670} + b\rvert/[\rho_{670}(\alpha^2 + 1)^{0.5}]$, where $\alpha = (\rho_{700} - \rho_{550})/150$ and $b = \rho_{550} - 550\alpha$ | [46] |
| 2 | GI (Greenness Index) | $\rho_{554}/\rho_{677}$ | [47] |
| 3 | GVI (Greenness Vegetation Index) | $(\rho_{682} - \rho_{553})/(\rho_{682} + \rho_{553})$ | [48] |
| 4 | MCARI (Modified Chlorophyll Absorption Ratio Index) | $[(\rho_{700} - \rho_{670}) - 0.2(\rho_{700} - \rho_{550})](\rho_{700}/\rho_{670})$ | [49] |
| 5 | MCARI2 (Modified Chlorophyll Absorption Ratio Index) | $1.2[2.5(\rho_{800} - \rho_{670}) - 1.3(\rho_{800} - \rho_{550})]$ | [50] |
| 6 | mNDVI (Modified Normalized Difference Vegetation Index) | $(\rho_{800} - \rho_{680})/(\rho_{800} + \rho_{680} - 2\rho_{445})$ | [51] |
| 7 | SR705 (Simple Ratio, Estimation of chlorophyll content) | $\rho_{750}/\rho_{705}$ | [52] |
| 8 | mNDVI2 (Modified Normalized Difference Vegetation Index) | $(\rho_{750} - \rho_{705})/(\rho_{750} + \rho_{705} - 2\rho_{445})$ | [51] |
| 9 | MSAVI (Improved Soil Adjusted Vegetation Index) | $[2\rho_{800} + 1 - [(2\rho_{800} + 1)^2 - 8(\rho_{800} - \rho_{670})]^{1/2}]/2$ | [34] |
| 10 | mSR (Modified Simple Ratio) | $(\rho_{800} - \rho_{445})/(\rho_{680} - \rho_{445})$ | [51] |
| 11 | mSR2 (Modified Simple Ratio) | $(\rho_{800} - \rho_{445})/(\rho_{680} - \rho_{445})$ | [51] |
| 12 | mSR3 (Modified Simple Ratio) | $(\rho_{800}/\rho_{670} - 1)/(\rho_{800}/\rho_{670} + 1)^{0.5}$ | [53] |
| 13 | MTCI (MERIS Terrestrial Chlorophyll Index) | $(\rho_{754} - \rho_{709})/(\rho_{709} - \rho_{681})$ | [54] |
| 14 | mTVI (Modified Triangular Vegetation Index) | $1.2[1.2(\rho_{800} - \rho_{550}) - 2.5(\rho_{670} - \rho_{550})]$ | [28] |
| 15 | NDVI (Normalized Difference Vegetation Index) | $(\rho_{800} - \rho_{670})/(\rho_{800} + \rho_{670})$ | [34] |
| 16 | NDVI2 (Normalized Difference Vegetation Index) | $(\rho_{750} - \rho_{705})/(\rho_{750} + \rho_{705})$ | [55] |
| 17 | OSAVI (Optimized Soil Adjusted Vegetation Index) | $1.16(\rho_{800} - \rho_{670})/(\rho_{800} + \rho_{670} + 0.16)$ | [43] |
| 18 | RDVI (Renormalized Difference Vegetation Index) | $(\rho_{800} - \rho_{670})/(\rho_{800} + \rho_{670})^{0.5}$ | [35] |
| 19 | REP (Red-Edge Position) | $700 + 40[(\rho_{670} + \rho_{780})/2 - \rho_{700}]/(\rho_{740} - \rho_{700})$ | [56] |
| 20 | SIPI (Structure Insensitive Pigment Index) | $(\rho_{800} - \rho_{450})/(\rho_{800} - \rho_{650})$ | [57] |
| 21 | SIPI2 (Structure Insensitive Pigment Index) | $(\rho_{800} - \rho_{440})/(\rho_{800} - \rho_{680})$ | [57] |
| 22 | SIPI3 (Structure Insensitive Pigment Index) | $(\rho_{800} - \rho_{445})/(\rho_{800} - \rho_{680})$ | [58] |
| 23 | SPVI (Spectral Polygon Vegetation Index) | $0.4[3.7(\rho_{800} - \rho_{670}) - 1.2\lvert\rho_{530} - \rho_{670}\rvert]$ | [59] |
| 24 | SR (Simple Ratio) | $\rho_{800}/\rho_{680}$ | [60] |
| 25 | SR1 (Simple Ratio) | $\rho_{750}/\rho_{700}$ | [61] |
| 26 | SR2 (Simple Ratio) | $\rho_{752}/\rho_{690}$ | [61] |
| 27 | SR3 (Simple Ratio) | $\rho_{750}/\rho_{550}$ | [61] |
| 28 | SR4 (Simple Ratio) | $\rho_{672}/\rho_{550}$ | [62] |
| 29 | TCARI (Transformed Chlorophyll Absorption Ratio Index) | $3[(\rho_{700} - \rho_{670}) - 0.2(\rho_{700} - \rho_{550})(\rho_{700}/\rho_{670})]$ | [63] |
| 30 | TSAVI (Transformed Soil Adjusted Vegetation Index) | $[\alpha(\rho_{875} - \alpha\rho_{680} - b)]/[\rho_{680} + \alpha\rho_{875} - \alpha b + 0.08(1 + \alpha^2)]$, where $\alpha = 1.062$ and $b = 0.022$ | [43] |
| 31 | TVI (Triangular Vegetation Index) | $0.5[120(\rho_{750} - \rho_{550}) - 200(\rho_{670} - \rho_{550})]$ | [64] |
| 32 | VOG (Vogelmann Indices) | $\rho_{740}/\rho_{720}$ | [65] |
| 33 | VOG2 (Vogelmann Indices) | $(\rho_{734} - \rho_{747})/(\rho_{715} + \rho_{726})$ | [66] |
| 34 | ARI (Anthocyanin Reflectance Index) | $(1/\rho_{550}) - (1/\rho_{700})$ | [67] |
| 35 | ARI2 (Anthocyanin Reflectance Index 2) | $\rho_{800}[(1/\rho_{550}) - (1/\rho_{700})]$ | [67] |
| 36 | BGI (Blue Green Pigment Index) | $\rho_{450}/\rho_{550}$ | [47] |
| 37 | BRI (Blue Red Pigment Index) | $\rho_{450}/\rho_{690}$ | [47] |
| 38 | CRI (Carotenoid Reflectance Index) | $(1/\rho_{510}) - (1/\rho_{550})$ | [68] |
| 39 | RGI (Red/Green Index) | $\rho_{690}/\rho_{550}$ | [47] |
| 40 | CI (Curvature Index) | $\rho_{675} \cdot \rho_{690}/\rho_{683}^2$ | [47] |
| 41 | LIC (Lichtenthaler Index) | $\rho_{440}/\rho_{690}$ | [69] |
| 42 | NPCI (Normalized Pigment Chlorophyll Index) | $(\rho_{680} - \rho_{430})/(\rho_{680} + \rho_{430})$ | [70] |
| 43 | NPQI (Normalized Phaeophytinization Index) | $(\rho_{415} - \rho_{435})/(\rho_{415} + \rho_{435})$ | [71] |
| 44 | PRI (Photochemical Reflectance Index) | $(\rho_{531} - \rho_{570})/(\rho_{531} + \rho_{570})$ | [72] |
| 45 | PRI2 (Photochemical Reflectance Index) | $(\rho_{570} - \rho_{539})/(\rho_{570} + \rho_{539})$ | [73] |
| 46 | PSRI (Plant Senescence Reflectance Index) | $(\rho_{680} - \rho_{500})/\rho_{750}$ | [74] |
| 47 | SR5 (Simple Ratio) | $\rho_{690}/\rho_{655}$ | [47] |
| 48 | SR6 (Simple Ratio) | $\rho_{685}/\rho_{655}$ | [47] |
| 49 | VS (Vegetation Stress Ratio) | $\rho_{725}/\rho_{702}$ | [75] |
| 50 | MVSR (Modified Vegetation Stress Ratio) | $\rho_{723}/\rho_{700}$ | [75] |
| 51 | fWBI (Floating Water Band Index) | $\rho_{900}/\min(\rho_{920\text{–}980})$ | [76] |
| 52 | WI (Water Index) | $\rho_{900}/\rho_{970}$ | [76] |
| 53 | SG (Sum Green Index) | Mean reflectance across the 500–600 nm range | [36] |
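
The narrowband indices above are defined at specific wavelengths, so evaluating them on measured spectra requires choosing the sampled band closest to each nominal wavelength. The sketch below shows one possible way to do this in Python for NDVI2, PRI, REP and SG. It assumes the spectrum is held as a dictionary mapping wavelength in nm to reflectance; the helper `band`, the other function names and the sample values are hypothetical and serve only to illustrate the formulas in the table.

```python
def band(spectrum, wavelength_nm):
    """Return the reflectance of the sampled band closest to wavelength_nm."""
    nearest = min(spectrum, key=lambda w: abs(w - wavelength_nm))
    return spectrum[nearest]


def ndvi2(spectrum):
    """NDVI2 (row 16): red-edge normalized difference."""
    r750, r705 = band(spectrum, 750), band(spectrum, 705)
    return (r750 - r705) / (r750 + r705)


def pri(spectrum):
    """PRI (row 44): Photochemical Reflectance Index."""
    r531, r570 = band(spectrum, 531), band(spectrum, 570)
    return (r531 - r570) / (r531 + r570)


def rep(spectrum):
    """REP (row 19): Red-Edge Position in nm, by linear interpolation."""
    r670, r700 = band(spectrum, 670), band(spectrum, 700)
    r740, r780 = band(spectrum, 740), band(spectrum, 780)
    return 700 + 40 * ((r670 + r780) / 2 - r700) / (r740 - r700)


def sum_green(spectrum):
    """SG (row 53): mean reflectance of all bands between 500 and 600 nm."""
    green = [value for w, value in spectrum.items() if 500 <= w <= 600]
    return sum(green) / len(green)


if __name__ == "__main__":
    # Hypothetical, coarsely sampled vegetation spectrum (nm -> reflectance).
    example_spectrum = {
        531: 0.08, 550: 0.10, 570: 0.09, 670: 0.05, 700: 0.10,
        705: 0.12, 740: 0.40, 750: 0.45, 780: 0.50,
    }
    print("NDVI2:", round(ndvi2(example_spectrum), 4))
    print("PRI:", round(pri(example_spectrum), 4))
    print("REP (nm):", round(rep(example_spectrum), 2))
    print("SG:", round(sum_green(example_spectrum), 4))
```

Nearest-band lookup is only one option; interpolating or averaging over the sensor's band response may be preferable when the spectral sampling is coarse.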

| Metric | Layer 0.00–0.20 m | Layer 0.20–0.40 m | Layer 0.40–0.60 m |
|---|---|---|---|
| Bias | 0.11 | 0.06 | 0.08 |
| Entropy | 0.70 | 0.59 | 0.59 |
| ERGAS | 2.74 | 1.80 | 2.10 |
| RASE | 11.84 | 7.43 | 8.88 |
| RMSE | 23.30 | 13.75 | 16.22 |
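
For readers unfamiliar with the error measures in the table above, the following Python sketch implements Bias, RMSE, RASE and ERGAS using definitions that are common in the image-fusion literature. This section does not state the exact formulas used in the study, so these definitions are an assumption: in particular, Bias is taken here as the difference of the two means, and `resolution_ratio` (the ratio between the pixel sizes of the two compared images) is a hypothetical parameter name. Entropy is omitted. The sample values are invented for illustration.

```python
import math


def mean(values):
    return sum(values) / len(values)


def bias(reference, predicted):
    """Difference between the mean of the reference and of the prediction."""
    return mean(reference) - mean(predicted)


def rmse(reference, predicted):
    """Root mean square error between two equally sized pixel lists."""
    squared = [(r - p) ** 2 for r, p in zip(reference, predicted)]
    return math.sqrt(mean(squared))


def rase(reference_bands, predicted_bands):
    """Relative average spectral error, in percent, over a list of bands."""
    band_mse = [rmse(r, p) ** 2
                for r, p in zip(reference_bands, predicted_bands)]
    overall_mean = mean([mean(r) for r in reference_bands])
    return 100.0 / overall_mean * math.sqrt(mean(band_mse))


def ergas(reference_bands, predicted_bands, resolution_ratio):
    """ERGAS: per-band relative RMSE, averaged and scaled by the ratio."""
    terms = [(rmse(r, p) / mean(r)) ** 2
             for r, p in zip(reference_bands, predicted_bands)]
    return 100.0 * resolution_ratio * math.sqrt(mean(terms))


if __name__ == "__main__":
    # Invented pixel values for one reference slice and one predicted slice.
    reference = [10.0, 20.0, 30.0, 40.0]
    predicted = [12.0, 18.0, 33.0, 37.0]
    print("Bias:", bias(reference, predicted))
    print("RMSE:", round(rmse(reference, predicted), 4))
    print("RASE:", round(rase([reference], [predicted]), 4))
    print("ERGAS:", round(ergas([reference], [predicted], 0.25), 4))
```

With a single band, as in the example, RASE reduces to the RMSE expressed as a percentage of the reference mean, and ERGAS is that same percentage scaled by the resolution ratio.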

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Agapiou, A.; Sarris, A. Beyond GIS Layering: Challenging the (Re)use and Fusion of Archaeological Prospection Data Based on Bayesian Neural Networks (BNN). Remote Sens. 2018, 10, 1762. https://doi.org/10.3390/rs10111762
