Remote Sensing Images Stripe Noise Removal by Double Sparse Regulation and Region Separation

Abstract

1. Introduction

2. Stripe Noise Properties Analysis

2.1. Stripe Variation Property

2.2. Stripe Structure Property

3. Methodology

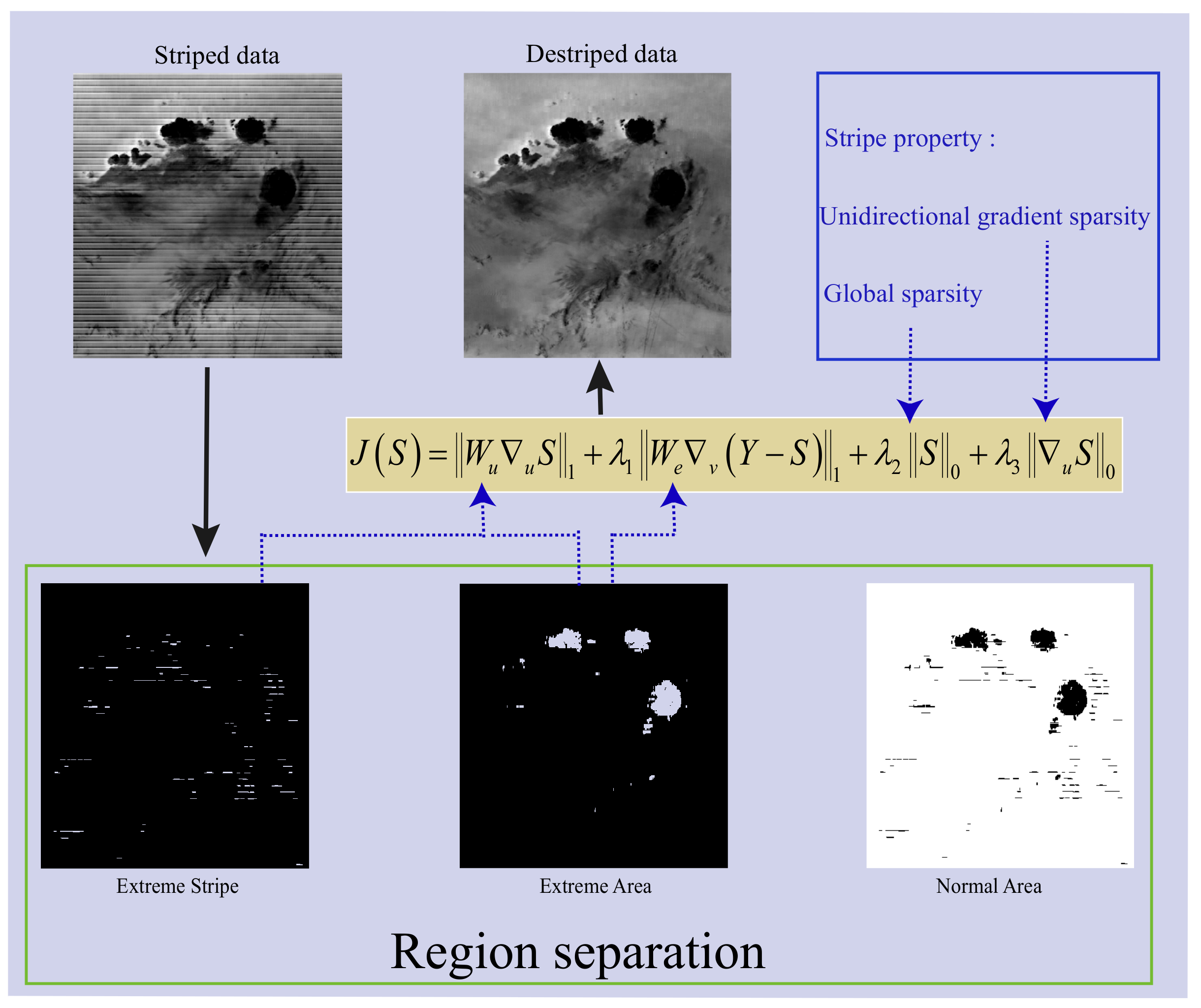

3.1. Region Separation

3.2. Proposed Weighted Double Sparse UV Model

3.3. Model Optimization

| Algorithm 1: The proposed WDSUV algorithm |
|---|
| Input: stripe noise image Y |
| Output: destriped image X |
| Initialize: Set , , , , . |
| Detect the extreme area and the extreme stripe area. |
| Calculate weight matrix and . |
| While and |
| update using (13); |
| update using (16); |
| update using (18); |
| update by solving (21); |
| update , , by (23), (24), (25); |
| k = k + 1. |
| End while |
| Destriped image X = Y − S. |
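Algorithm 1 appears to follow the usual ADMM pattern (ADMM is listed under Abbreviations): split the objective with auxiliary variables, update each block in closed form, then update the multipliers. Equations (13) to (25) are not reproduced in this section, so the sketch below is not WDSUV. It assumes a simpler, unweighted sparse unidirectional-variation model, minimizing ‖∇x(Y − S)‖₁ + λ1‖∇y S‖₁ + λ2‖S‖₁ over the stripe component S, with vertical stripes and periodic boundaries, and it omits the weight matrices and the extreme-area detection. All function names and parameter values (`lam1`, `lam2`, `beta`, `iters`) are hypothetical.

```python
import numpy as np


def soft(v, t):
    """Soft-thresholding: the proximal operator of t * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


# Periodic forward differences and their adjoints. Stripes are assumed vertical:
# dx acts across stripes (along a row), dy acts along stripes (down a column).
def dx(u):
    return np.roll(u, -1, axis=1) - u


def dxT(u):
    return np.roll(u, 1, axis=1) - u


def dy(u):
    return np.roll(u, -1, axis=0) - u


def dyT(u):
    return np.roll(u, 1, axis=0) - u


def destripe_admm(Y, lam1=1.0, lam2=0.1, beta=0.5, iters=500):
    """Hypothetical stand-in model (not the WDSUV objective):
    min_S ||dx(Y - S)||_1 + lam1 * ||dy(S)||_1 + lam2 * ||S||_1.
    Returns (X, S) with X = Y - S, mirroring the last line of Algorithm 1."""
    Y = np.asarray(Y, dtype=float)
    M, N = Y.shape
    # Fourier eigenvalues of dxT dx + dyT dy + I (all circulant under periodic boundaries).
    wx = 2.0 - 2.0 * np.cos(2.0 * np.pi * np.arange(N) / N)
    wy = 2.0 - 2.0 * np.cos(2.0 * np.pi * np.arange(M) / M)
    denom = wy[:, None] + wx[None, :] + 1.0
    dxY = dx(Y)
    S = np.zeros_like(Y)
    # Auxiliary splits D1 = dx(Y - S), D2 = dy(S), D3 = S, and their multipliers.
    D1, D2, D3 = np.zeros_like(Y), np.zeros_like(Y), np.zeros_like(Y)
    P1, P2, P3 = np.zeros_like(Y), np.zeros_like(Y), np.zeros_like(Y)
    for _ in range(iters):
        # S-step: quadratic subproblem, solved exactly by one division in the Fourier domain.
        rhs = dxT(dxY - D1 - P1 / beta) + dyT(D2 + P2 / beta) + D3 + P3 / beta
        S = np.real(np.fft.ifft2(np.fft.fft2(rhs) / denom))
        # D-steps: elementwise shrinkage, one per l1 term.
        g1, g2 = dxY - dx(S), dy(S)  # dx(Y - S) and dy(S)
        D1 = soft(g1 - P1 / beta, 1.0 / beta)
        D2 = soft(g2 - P2 / beta, lam1 / beta)
        D3 = soft(S - P3 / beta, lam2 / beta)
        # Multiplier (dual ascent) updates.
        P1 += beta * (D1 - g1)
        P2 += beta * (D2 - g2)
        P3 += beta * (D3 - S)
    return Y - S, S
```

Using one penalty `beta` for all three splits keeps the S-step a single circulant system, which is why it can be solved with one FFT; the three soft-thresholding steps are what enforce sparsity of the cross-track gradient of the image, the along-track gradient of the stripes, and the stripes themselves.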

4. Experiment Results

4.1. Simulated Experiments

4.2. Real Experiments

4.2.1. Visual Comparison

4.2.2. Quantitative Analysis

5. Discussion

5.1. Analysis of Parameters

5.2. Limitation

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADMM | Alternating direction method of multipliers |
| MODIS | Moderate Resolution Imaging Spectroradiometer |
| AVIRIS | Airborne Visible/Infrared Imaging Spectrometer |
| SSA | Strong stripe area |
| EA | Extreme area |
| SSIM | Structural similarity |
| PSNR | Peak signal-to-noise ratio |
| MICV | Mean of inverse coefficient of variation |
| MMRD | Mean of mean relative deviation |
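The evaluation indices listed above can be computed in a few lines. The sketch below assumes a peak value of 255 for PSNR (8-bit data) and uses the definitions of the no-reference indices that are common in the destriping literature: ICV is the mean divided by the standard deviation of a homogeneous window, and MRD is the mean relative deviation, in percent, between destriped and raw values over stripe-free pixels; MICV and MMRD average these over several windows or regions. The window size, window positions, and region masks are assumptions, not values taken from this paper.

```python
import numpy as np


def psnr(ref, est, peak=255.0):
    """Peak signal-to-noise ratio (dB) between a clean reference and an estimate."""
    mse = np.mean((np.asarray(ref, float) - np.asarray(est, float)) ** 2)
    return float("inf") if mse == 0 else 10.0 * np.log10(peak ** 2 / mse)


def icv(window):
    """Inverse coefficient of variation of a homogeneous window: mean / std."""
    w = np.asarray(window, float)
    return w.mean() / w.std()


def micv(image, corners, size=10):
    """MICV: ICV averaged over size-by-size windows with the given top-left corners."""
    img = np.asarray(image, float)
    return float(np.mean([icv(img[r:r + size, c:c + size]) for r, c in corners]))


def mrd(destriped, raw):
    """Mean relative deviation (%) between destriped and raw values (raw assumed nonzero)."""
    x = np.asarray(destriped, float)
    y = np.asarray(raw, float)
    return 100.0 * np.mean(np.abs(x - y) / y)


def mmrd(destriped, raw, masks):
    """MMRD: MRD averaged over several boolean masks marking stripe-free regions."""
    x = np.asarray(destriped, float)
    y = np.asarray(raw, float)
    return float(np.mean([mrd(x[m], y[m]) for m in masks]))
```

Higher MICV (smoother homogeneous regions) and lower MMRD (less distortion of stripe-free pixels) are better, which is how these indices are typically read for real images without a clean reference.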

References

- Zhang, L.; Zhang, L.; Tao, D.; Huang, X.; Du, B. Hyperspectral Remote Sensing Image Subpixel Target Detection Based on Supervised Metric Learning. IEEE Trans. Geosci. Remote Sens. 2014, 52, 4955–4965.

- Zhu, D.; Wang, B.; Zhang, L. Airport Target Detection in Remote Sensing Images: A New Method Based on Two-Way Saliency. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1096–1100.

- Huang, Z.; Zhang, Y.; Li, Q.; Zhang, T.; Sang, N.; Hong, H. Progressive dual-domain filter for enhancing and denoising optical remote sensing images. IEEE Geosci. Remote Sens. Lett. 2018, 15, 759–763.

- Bian, X.; Chen, C.; Xu, Y.; Du, Q. Robust Hyperspectral Image Classification by Multi-Layer Spatial-Spectral Sparse Representations. Remote Sens. 2016, 8, 985.

- Jiang, J.; Chen, C.; Yu, Y.; Jiang, X.; Ma, J. Spatial-aware collaborative representation for hyperspectral remote sensing image classification. IEEE Geosci. Remote Sens. Lett. 2017, 14, 404–408.

- Li, C.; Ma, Y.; Mei, X.; Liu, C.; Ma, J. Hyperspectral image classification with robust sparse representation. IEEE Geosci. Remote Sens. Lett. 2016, 13, 641–645.

- Han, J.; Zhang, D.; Cheng, G.; Guo, L.; Ren, J. Object detection in optical remote sensing images based on weakly supervised learning and high-level feature learning. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3325–3337.

- Carfantan, H.; Idier, J. Statistical Linear Destriping of Satellite-Based Pushbroom-Type Images. IEEE Trans. Geosci. Remote Sens. 2010, 48, 1860–1871.

- Bouali, M.; Ignatov, A. Estimation of Detector Biases in MODIS thermal emissive bands. IEEE Trans. Geosci. Remote Sens. 2013, 51, 4339–4348.

- Gadallah, F.L.; Csillag, F.; Smith, E.J.M. Destriping multisensor imagery with moment matching. Int. J. Remote Sens. 2000, 21, 2505–2511.

- Shen, H.; Jiang, W.; Zhang, H.; Zhang, L. A piece-wise approach to removing the nonlinear and irregular stripes in MODIS data. Int. J. Remote Sens. 2014, 35, 44–53.

- Tendero, Y.; Landeau, S.; Gilles, J. Non-uniformity Correction of Infrared Images by Midway Equalization. Image Proc. Line 2012, 2, 134–146.

- Chen, J.; Shao, Y.; Guo, H.; Wang, W.; Zhu, B. Destriping CMODIS data by power filtering. IEEE Trans. Geosci. Remote Sens. 2003, 41, 2119–2124.

- Cao, Y.; He, Z.; Yang, J.; Ye, X.; Cao, Y. A multi-scale non-uniformity correction method based on wavelet decomposition and guided filtering for uncooled long wave infrared camera. Signal Proc. Image Commun. 2018, 60, 13–21.

- Münch, B.; Trtik, P.; Marone, F.; Stampanoni, M. Stripe and ring artifact removal with combined wavelet–Fourier filtering. Opt. Exp. 2009, 17, 8567–8591.

- Torres, J.; Infante, S.O. Wavelet analysis for the elimination of striping noise in satellite images. Opt. Eng. 2001, 40, 1309–1315.

- Bouali, M.; Ladjal, S. Toward optimal destriping of MODIS data using a unidirectional variational model. IEEE Trans. Geosci. Remote Sens. 2011, 49, 2924–2935.

- Zhang, Y.; Zhang, T. Structure-guided unidirectional variation de-striping in the infrared bands of MODIS and hyperspectral images. Infrared Phys. Technol. 2016, 77, 132–143.

- Zhou, G.; Fang, H.; Lu, C.; Wang, S.; Zuo, Z.; Hu, J. Robust destriping of MODIS and hyperspectral data using a hybrid unidirectional total variation model. Optik Int. J. Light Electron. Opt. 2015, 126, 838–845.

- Wang, M.; Zheng, X.; Pan, J.; Wang, B. Unidirectional total variation destriping using difference curvature in MODIS emissive bands. Infrared Phys. Technol. 2016, 75, 1–11.

- Chang, Y.; Fang, H.; Yan, L.; Liu, H. Robust destriping method with unidirectional total variation and framelet regularization. Opt. Exp. 2013, 21, 23307–23323.

- Huang, Y.; He, C.; Fang, H.; Wang, X. Iteratively reweighted unidirectional variational model for stripe non-uniformity correction. Infrared Phys. Technol. 2016, 75, 107–116.

- Chen, Y.; Huang, T.Z.; Deng, L.J.; Zhao, X.L.; Wang, M. Group sparsity based regularization model for remote sensing image stripe noise removal. Neurocomputing 2017, 267, 95–106.

- Liu, X.; Lu, X.; Shen, H.; Yuan, Q.; Jiao, Y.; Zhang, L. Stripe Noise Separation and Removal in Remote Sensing Images by Consideration of the Global Sparsity and Local Variational Properties. IEEE Trans. Geosci. Remote Sens. 2016, 54, 3049–3060.

- Chang, Y.; Yan, L.; Wu, T.; Zhong, S. Remote Sensing Image Stripe Noise Removal: From Image Decomposition Perspective. IEEE Trans. Geosci. Remote Sens. 2016, 54, 7018–7031.

- Chen, Y.; Huang, T.Z.; Zhao, X.L.; Deng, L.J.; Huang, J. Stripe noise removal of remote sensing images by total variation regularization and group sparsity constraint. Remote Sens. 2017, 9, 559.

- Dou, H.X.; Huang, T.Z.; Deng, L.J.; Zhao, X.L.; Huang, J. Directional l0 Sparse Modeling for Image Stripe Noise Removal. Remote Sens. 2018, 10, 361.

- Lu, X.; Wang, Y.; Yuan, Y. Graph-Regularized Low-Rank Representation for Destriping of Hyperspectral Images. IEEE Trans. Geosci. Remote Sens. 2013, 51, 4009–4018.

- Zhang, H.; He, W.; Zhang, L.; Shen, H.; Yuan, Q. Hyperspectral image restoration using low-rank matrix recovery. IEEE Trans. Geosci. Remote Sens. 2014, 52, 4729–4743.

- Ma, J.; Li, C.; Ma, Y.; Wang, Z. Hyperspectral Image Denoising Based on Low-Rank Representation and Superpixel Segmentation. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 3086–3090.

- Kuang, X.; Sui, X.; Chen, Q.; Gu, G. Single Infrared Image Stripe Noise Removal Using Deep Convolutional Networks. IEEE Photonics J. 2017, 9, 1–13.

- Wang, S.P. Stripe noise removal for infrared image by minimizing difference between columns. Infrared Phys. Technol. 2016, 77, 58–64.

- Xu, Z.; Sun, J. Image Inpainting by Patch Propagation Using Patch Sparsity. IEEE Trans. Image Proc. 2010, 19, 1153–1165.

- Cheng, Q.; Shen, H.; Zhang, L.; Li, P. Inpainting for remotely sensed images with a multichannel nonlocal total variation model. IEEE Trans. Geosci. Remote Sens. 2014, 52, 175–187.

- Cai, J.F.; Chan, R.H.; Shen, Z. A framelet-based image inpainting algorithm. Appl. Comput. Harmonic Anal. 2008, 24, 131–149.

- Wahlberg, B.; Boyd, S.; Annergren, M.; Wang, Y. An ADMM Algorithm for a Class of Total Variation Regularized Estimation Problems. IFAC Proc. Vol. 2012, 45, 83–88.

- Boyd, S.; Parikh, N.; Chu, E.; Peleato, B.; Eckstein, J. Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 2011, 3, 1–122.

- Bredies, K.; Lorenz, D.A. Linear convergence of iterative soft-thresholding. J. Fourier Anal. Appl. 2008, 14, 813–837.

- Blumensath, T.; Davies, M.E. Iterative thresholding for sparse approximations. J. Fourier Anal. Appl. 2008, 14, 629–654.

- Cao, Y.; Yang, M.Y.; Tisse, C. Effective Strip Noise Removal for Low-Textured Infrared Images Based on 1-D Guided Filtering. IEEE Trans. Circuits Syst. Video Technol. 2016, 26, 2176–2188.

- Image and Video Denoising by Sparse 3D Transform-Domain Collaborative Filtering. Available online: http://www.cs.tut.fi//~foi/GCF-BM3D/ (accessed on 23 March 2018).

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Proc. 2004, 13, 600–612.

- Huang, Z.; Li, Q.; Fang, H.; Zhang, T.; Sang, N. Iterative weighted nuclear norm for X-ray cardiovascular angiogram image denoising. Signal Image Video Proc. 2017, 11, 1445–1452.

- Ghimpeteanu, G.; Batard, T.; Bertalmio, M.; Levine, S. A Decomposition Framework for Image Denoising Algorithms. IEEE Trans. Image Proc. 2016, 25, 388–399.

- Zhang, K.; Zuo, W.; Chen, Y.; Meng, D.; Zhang, L. Beyond a Gaussian Denoiser: Residual Learning of Deep CNN for Image Denoising. IEEE Trans. Image Proc. 2017, 26, 3142–3155.

- MODIS Data. Available online: https://modis.gsfc.nasa.gov/data/ (accessed on 30 January 2018).

- AVIRIS Data. Available online: http://aviris.jpl.nasa.gov/ (accessed on 30 January 2018).

- A Freeware Multispectral Image Data Analysis System. Available online: https://engineering.purdue.edu/~biehl/MultiSpec/hyperspectral.html (accessed on 30 January 2018).

- Global Digital Product Sample. Available online: http://www.digitalglobe.com/product-samples (accessed on 30 January 2018).

- Changyi’s Homepage on Escience.cn. Available online: http://www.escience.cn/people/changyi/index.html (accessed on 23 March 2018).

- Hyperion Data. Available online: https://eo1.usgs.gov/sensors/hyperion (accessed on 30 January 2018).

| Image | Index | Degrade | BM3D | SNRCNN | GF-Based | WAFT | UV | HUTV | SUV | WDSUV |
|---|---|---|---|---|---|---|---|---|---|---|
| Terra MODIS data S1 | PSNR | 26.0840 | 25.5200 | 27.2117 | 32.0181 | 31.0007 | 32.6417 | 31.7010 | 38.3245 | 41.0911 |
| | SSIM | 0.7324 | 0.5870 | 0.7703 | 0.8918 | 0.8890 | 0.8878 | 0.8806 | 0.9786 | 0.9848 |
| Terra MODIS data S2 | PSNR | 23.4022 | 23.3515 | 24.5212 | 39.3852 | 40.8607 | 40.9761 | 36.2624 | 46.7106 | 48.2076 |
| | SSIM | 0.4382 | 0.3484 | 0.4270 | 0.9472 | 0.9498 | 0.9567 | 0.8924 | 0.9842 | 0.9904 |
| AVIRIS hyperspectral data S3 | PSNR | 26.2208 | 25.7951 | 27.3176 | 35.5988 | 33.6703 | 34.2757 | 31.8994 | 39.5019 | 47.1249 |
| | SSIM | 0.6409 | 0.4165 | 0.6849 | 0.9540 | 0.9104 | 0.9077 | 0.8734 | 0.9389 | 0.9856 |
| Washington DC Mall S4 | PSNR | 24.2427 | 24.1440 | 25.6211 | 34.6449 | 32.4722 | 33.9846 | 29.4783 | 37.9291 | 43.9164 |
| | SSIM | 0.6750 | 0.6392 | 0.7008 | 0.9481 | 0.9060 | 0.9237 | 0.8304 | 0.9700 | 0.9883 |
| Aqua MODIS data S5 | PSNR | 22.3573 | 22.3398 | 23.3799 | 30.6260 | 28.4963 | 27.5805 | 28.6585 | 35.2163 | 36.4584 |
| | SSIM | 0.5670 | 0.4939 | 0.5951 | 0.7893 | 0.7902 | 0.8502 | 0.8070 | 0.9681 | 0.9832 |
| Washington DC multispectral S6 | PSNR | 25.0689 | 24.9520 | 26.1792 | 33.0079 | 31.4577 | 31.2565 | 31.8940 | 32.6320 | 36.9800 |
| | SSIM | 0.6135 | 0.4813 | 0.6322 | 0.8858 | 0.8611 | 0.8720 | 0.8587 | 0.9120 | 0.9547 |
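To read the PSNR rows of this table at a glance, the short script below copies them, finds the highest-scoring method on each image, and averages the margin of WDSUV over the strongest competing method. It uses only the numbers printed in the table; the dictionary layout and the function name `summarize` are illustrative.

```python
# PSNR (dB) rows copied from the table, one list per test image, in column order.
methods = ["Degrade", "BM3D", "SNRCNN", "GF-Based", "WAFT", "UV", "HUTV", "SUV", "WDSUV"]
psnr_rows = {
    "S1": [26.0840, 25.5200, 27.2117, 32.0181, 31.0007, 32.6417, 31.7010, 38.3245, 41.0911],
    "S2": [23.4022, 23.3515, 24.5212, 39.3852, 40.8607, 40.9761, 36.2624, 46.7106, 48.2076],
    "S3": [26.2208, 25.7951, 27.3176, 35.5988, 33.6703, 34.2757, 31.8994, 39.5019, 47.1249],
    "S4": [24.2427, 24.1440, 25.6211, 34.6449, 32.4722, 33.9846, 29.4783, 37.9291, 43.9164],
    "S5": [22.3573, 22.3398, 23.3799, 30.6260, 28.4963, 27.5805, 28.6585, 35.2163, 36.4584],
    "S6": [25.0689, 24.9520, 26.1792, 33.0079, 31.4577, 31.2565, 31.8940, 32.6320, 36.9800],
}


def summarize(rows, methods, ours="WDSUV"):
    """Return the best method per image and the mean margin of `ours` over the runner-up."""
    k = methods.index(ours)
    best, margins = {}, []
    for image, vals in rows.items():
        best[image] = methods[max(range(len(vals)), key=vals.__getitem__)]
        # Runner-up among the competing destriping methods (the degraded input is excluded).
        runner_up = max(v for i, v in enumerate(vals) if i != k and methods[i] != "Degrade")
        margins.append(vals[k] - runner_up)
    return best, sum(margins) / len(margins)


best, mean_margin = summarize(psnr_rows, methods)
print(best)
print(round(mean_margin, 2))
```

On these rows WDSUV has the highest PSNR on all six images, and its mean margin over the runner-up (SUV on S1 to S5, GF-Based on S6) is about 3.85 dB.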

PSNR (dB) under different r values and stripe intensities:

| Image | Method | r = 0.1, intensity 10 | r = 0.1, intensity 30 | r = 0.1, intensity 50 | r = 0.1, intensity 80 | r = 0.4, intensity 10 | r = 0.4, intensity 30 | r = 0.4, intensity 50 | r = 0.4, intensity 80 | r = 0.6, intensity 10 | r = 0.6, intensity 30 | r = 0.6, intensity 50 | r = 0.6, intensity 80 | r = 0.8, intensity 10 | r = 0.8, intensity 30 | r = 0.8, intensity 50 | r = 0.8, intensity 80 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| MODIS data S1 periodical stripe | Degrade | 38.3636 | 28.9416 | 24.6571 | 20.9765 | 32.3406 | 22.9182 | 18.6465 | 14.9819 | 30.5788 | 21.1535 | 16.8783 | 13.2156 | 29.3288 | 19.9101 | 15.6230 | 11.9539 |
| | BM3D | 37.9730 | 28.7184 | 24.1826 | 20.5160 | 32.2882 | 22.8704 | 18.5387 | 14.8898 | 30.5463 | 21.1186 | 16.8067 | 13.1609 | 29.3075 | 19.8858 | 15.5710 | 11.9200 |
| | SNRCNN | 39.4908 | 31.8395 | 26.3301 | 21.8917 | 37.0035 | 25.2144 | 19.6972 | 15.4788 | 35.3156 | 23.1266 | 17.6214 | 13.4484 | 34.6232 | 21.3435 | 16.2456 | 12.1758 |
| | GF-based | 39.0138 | 37.9688 | 36.2370 | 30.0010 | 38.3812 | 34.3600 | 30.4248 | 26.1004 | 37.7721 | 32.2284 | 28.8698 | 24.0200 | 37.6521 | 31.7981 | 27.4815 | 22.3266 |
| | WAFT | 42.4278 | 37.0602 | 30.6437 | 30.1540 | 39.4671 | 34.9552 | 29.2543 | 27.0905 | 38.9408 | 33.5375 | 28.1855 | 25.1431 | 38.6607 | 32.7633 | 27.2272 | 23.5700 |
| | UV | 38.4699 | 35.6250 | 34.8370 | 33.8500 | 38.2752 | 33.8801 | 32.3190 | 28.6162 | 37.9590 | 32.3228 | 29.6661 | 24.8966 | 37.9119 | 32.0520 | 28.9193 | 24.1231 |
| | HUTV | 39.5704 | 36.2796 | 34.2204 | 30.4702 | 38.7103 | 33.8595 | 30.5498 | 26.6128 | 37.3328 | 28.6134 | 27.4082 | 23.3219 | 38.0095 | 31.4505 | 26.2719 | 23.0960 |
| | SUV | 48.4850 | 44.9149 | 43.0147 | 38.0228 | 41.0528 | 39.9256 | 35.8973 | 33.3458 | 35.7053 | 37.4478 | 32.9375 | 27.0810 | 34.7791 | 35.5368 | 30.9388 | 26.2138 |
| | WDSUV | 50.2697 | 45.3239 | 44.2248 | 42.2322 | 43.2668 | 40.0832 | 39.4022 | 36.1014 | 39.1392 | 38.9162 | 36.8020 | 32.3301 | 39.7454 | 39.4813 | 35.7192 | 30.3441 |
| MODIS data S1 nonperiodical stripe | Degrade | 38.4219 | 28.9951 | 24.7108 | 21.0400 | 32.3184 | 22.9023 | 18.6161 | 14.9338 | 30.5989 | 21.1907 | 16.9180 | 13.2833 | 29.3416 | 19.9244 | 15.6378 | 11.9716 |
| | BM3D | 37.9733 | 28.7510 | 24.2181 | 20.5611 | 32.2694 | 22.8528 | 18.5077 | 14.8385 | 30.5693 | 21.1577 | 16.8473 | 13.2296 | 29.3240 | 19.8993 | 15.5856 | 11.9358 |
| | SNRCNN | 39.3618 | 31.3672 | 26.0140 | 21.7015 | 36.5788 | 24.9534 | 19.5394 | 15.3081 | 34.7035 | 22.8145 | 17.6132 | 13.5403 | 33.5670 | 21.2727 | 16.1483 | 12.1258 |
| | GF-based | 38.9359 | 36.6797 | 34.0862 | 29.7559 | 38.0441 | 33.5266 | 28.6602 | 25.4274 | 36.6564 | 29.9535 | 26.7176 | 23.0023 | 36.2714 | 29.6554 | 26.1259 | 21.8469 |
| | WAFT | 41.8208 | 35.7550 | 31.5855 | 27.1679 | 40.1222 | 33.6273 | 29.4164 | 25.1599 | 36.2671 | 30.0575 | 26.0868 | 23.7940 | 36.2781 | 29.1690 | 25.5951 | 26.0789 |
| | UV | 38.4710 | 36.5816 | 33.6151 | 30.8673 | 38.0733 | 34.3455 | 30.6966 | 26.8573 | 37.4262 | 31.0819 | 26.7207 | 22.7429 | 37.0838 | 30.4512 | 25.8733 | 21.6048 |
| | HUTV | 39.5271 | 35.3252 | 32.5130 | 30.3248 | 38.4048 | 33.4225 | 29.1997 | 26.6952 | 36.6916 | 29.2569 | 26.8509 | 22.2469 | 36.4255 | 28.3136 | 25.3522 | 20.7944 |
| | SUV | 46.0676 | 44.3400 | 38.9258 | 37.7311 | 38.7371 | 39.6975 | 35.2263 | 31.9187 | 34.2789 | 31.5409 | 27.8705 | 23.3751 | 31.5149 | 29.8870 | 26.1507 | 21.5511 |
| | WDSUV | 47.0051 | 47.0919 | 43.4787 | 41.7685 | 40.9192 | 40.4263 | 38.2084 | 33.3118 | 38.1593 | 34.0324 | 30.7349 | 26.0789 | 34.8083 | 32.5101 | 30.1833 | 24.5578 |
| MODIS data S2 periodical stripe | Degrade | 38.1441 | 28.6469 | 24.2530 | 20.2865 | 32.1235 | 22.6300 | 18.2398 | 14.2714 | 30.3622 | 20.8668 | 16.4764 | 12.5067 | 29.1128 | 19.6175 | 15.2292 | 11.2611 |
| | BM3D | 37.6601 | 28.5117 | 24.1461 | 20.2234 | 32.0130 | 22.5990 | 18.2129 | 14.2588 | 30.2816 | 20.8425 | 16.4587 | 12.4992 | 29.0472 | 19.5990 | 15.2163 | 11.2565 |
| | SNRCNN | 42.9764 | 32.4129 | 26.1812 | 21.2479 | 39.9476 | 25.1809 | 19.3562 | 14.7615 | 37.4029 | 23.0149 | 17.2241 | 12.6765 | 36.7278 | 21.0633 | 15.8601 | 11.4613 |
| | GF-based | 45.6167 | 43.3258 | 41.5718 | 37.6521 | 44.3715 | 40.0237 | 37.8644 | 32.5072 | 43.2628 | 39.3043 | 35.9049 | 29.4985 | 44.6783 | 38.8118 | 34.0477 | 27.3518 |
| | WAFT | 47.2401 | 42.8725 | 37.1036 | 36.8526 | 43.4684 | 41.3821 | 35.8032 | 33.5217 | 43.1177 | 39.9001 | 34.7649 | 31.5304 | 43.4207 | 40.6491 | 34.7274 | 30.5963 |
| | UV | 43.4176 | 43.2263 | 43.0491 | 42.2975 | 43.1667 | 41.5545 | 40.1779 | 36.6793 | 42.9954 | 40.1732 | 37.9465 | 31.1208 | 43.3713 | 40.7350 | 37.9465 | 31.7282 |
| | HUTV | 44.1750 | 39.2730 | 38.8229 | 35.0612 | 43.3081 | 38.6896 | 34.7521 | 30.6133 | 41.4480 | 36.7318 | 32.5494 | 30.1304 | 43.0293 | 37.4793 | 31.6226 | 30.2447 |
| | SUV | 55.2534 | 52.6987 | 51.4506 | 47.8067 | 44.8470 | 44.5794 | 43.9645 | 39.8285 | 40.5483 | 43.1501 | 39.9061 | 34.3684 | 40.0209 | 40.1130 | 39.5030 | 34.7828 |
| | WDSUV | 54.7737 | 53.4641 | 47.4867 | 45.7451 | 46.4718 | 44.6292 | 44.9953 | 41.3835 | 43.2174 | 46.3007 | 41.4233 | 38.3327 | 41.5090 | 41.0232 | 39.9654 | 36.4712 |
| MODIS data S2 nonperiodical stripe | Degrade | 38.1462 | 28.6688 | 24.2906 | 20.3206 | 32.1241 | 22.6317 | 18.2447 | 14.2745 | 30.3607 | 20.8547 | 16.4605 | 12.4907 | 29.1120 | 19.6114 | 15.2223 | 11.2544 |
| | BM3D | 37.6041 | 28.5296 | 24.1831 | 20.2541 | 32.0126 | 22.6000 | 18.2186 | 14.2614 | 30.2843 | 20.8317 | 16.4432 | 12.4833 | 29.0511 | 19.5934 | 15.2093 | 11.2493 |
| | SNRCNN | 42.2972 | 31.7122 | 25.8306 | 21.0435 | 39.3622 | 24.8819 | 19.2232 | 14.6275 | 36.3498 | 22.5742 | 17.1694 | 12.7137 | 35.1416 | 21.0001 | 15.7317 | 11.3596 |
| | GF-based | 44.3271 | 39.5764 | 36.9224 | 34.6063 | 43.7647 | 37.0518 | 34.6596 | 30.4640 | 39.8603 | 33.3486 | 29.5833 | 25.0746 | 39.9661 | 35.2912 | 29.6666 | 25.5680 |
| | WAFT | 45.5348 | 38.9978 | 36.4346 | 34.4136 | 43.1718 | 37.0130 | 34.0479 | 29.3317 | 39.1531 | 30.9575 | 30.5014 | 27.7590 | 39.7103 | 33.6935 | 31.3340 | 28.4894 |
| | UV | 43.1941 | 40.7837 | 38.1597 | 34.5783 | 42.8765 | 38.9708 | 35.5971 | 31.3830 | 40.4867 | 32.9775 | 28.4004 | 23.9095 | 41.4360 | 34.6748 | 29.8098 | 24.6459 |
| | HUTV | 44.4063 | 37.8306 | 35.0888 | 33.3301 | 42.9837 | 37.0956 | 33.5024 | 31.6250 | 39.1709 | 32.5503 | 29.5291 | 24.8175 | 39.5229 | 32.5002 | 28.9732 | 23.8722 |
| | SUV | 53.3067 | 51.7171 | 46.3221 | 46.1404 | 46.5165 | 46.7954 | 42.6519 | 40.8579 | 36.9867 | 35.7377 | 30.4483 | 25.0203 | 33.8652 | 34.7488 | 29.8671 | 25.1922 |
| | WDSUV | 57.7296 | 53.3637 | 48.7888 | 50.0378 | 47.2587 | 46.8928 | 45.6753 | 42.4041 | 41.0117 | 36.4808 | 32.0655 | 26.8727 | 36.9841 | 36.9584 | 34.1676 | 28.5905 |
| Hyperspectral data S3 periodical stripe | Degrade | 38.1319 | 28.5903 | 24.2106 | 20.9388 | 32.1110 | 22.5704 | 18.1923 | 14.9239 | 30.3499 | 20.8081 | 16.4339 | 13.1754 | 29.1004 | 19.5592 | 15.1807 | 11.9043 |
| | BM3D | 37.4299 | 28.3034 | 23.8879 | 20.7112 | 31.9536 | 22.5020 | 18.1119 | 14.8803 | 30.2482 | 20.7593 | 16.3793 | 13.1497 | 29.0179 | 19.5218 | 15.1392 | 11.8848 |
| | SNRCNN | 39.0079 | 31.7377 | 26.0553 | 21.9460 | 37.3156 | 24.9018 | 19.3512 | 15.5074 | 35.7618 | 22.8175 | 17.2138 | 13.4562 | 34.9288 | 21.0031 | 15.9262 | 12.2672 |
| | GF-based | 42.5060 | 41.2574 | 40.8444 | 37.1256 | 42.3063 | 40.7073 | 39.4821 | 31.5140 | 41.7498 | 39.8682 | 38.1542 | 28.8929 | 42.0426 | 40.4338 | 36.8492 | 26.7469 |
| | WAFT | 42.1924 | 39.1589 | 35.2671 | 34.5917 | 39.8006 | 38.6106 | 34.7035 | 30.9176 | 39.6123 | 38.0180 | 34.2849 | 29.3421 | 39.7383 | 39.1095 | 34.7673 | 28.1147 |
| | UV | 40.8155 | 38.3434 | 38.2253 | 36.8282 | 40.6768 | 37.6610 | 36.9930 | 32.0192 | 40.5251 | 36.7676 | 35.2858 | 27.9936 | 40.8458 | 37.9764 | 36.7563 | 27.9281 |
| | HUTV | 39.1680 | 37.5216 | 36.0298 | 33.4295 | 38.7537 | 35.8826 | 34.2408 | 30.6096 | 37.8728 | 32.2482 | 32.0498 | 27.6395 | 38.4658 | 35.4814 | 31.2428 | 27.8749 |
| | SUV | 51.9430 | 46.5639 | 45.6472 | 41.4831 | 42.8934 | 45.0270 | 43.0221 | 36.4940 | 38.3094 | 43.6120 | 40.9821 | 31.5260 | 36.9397 | 42.1857 | 39.1560 | 31.2246 |
| | WDSUV | 52.4407 | 50.1288 | 40.3177 | 36.2103 | 48.1432 | 47.2473 | 39.7532 | 34.9969 | 45.8231 | 45.5917 | 37.2981 | 32.8550 | 44.4537 | 43.5823 | 32.3576 | 29.1048 |
| Hyperspectral data S3 nonperiodical stripe | Degrade | 38.1308 | 28.5897 | 24.2027 | 20.9204 | 32.1107 | 22.5698 | 18.1960 | 14.9065 | 30.3496 | 20.8086 | 16.4317 | 13.1732 | 29.1007 | 19.5598 | 15.1899 | 11.9409 |
| | BM3D | 37.3848 | 28.2907 | 23.8749 | 20.6880 | 31.9603 | 22.5008 | 18.1143 | 14.8589 | 30.2458 | 20.7606 | 16.3766 | 13.1439 | 29.0211 | 19.5228 | 15.1459 | 11.9203 |
| | SNRCNN | 38.7845 | 30.9571 | 25.5719 | 21.6554 | 36.7569 | 24.7794 | 19.2770 | 15.4183 | 35.3986 | 22.5178 | 17.2044 | 13.5205 | 33.5486 | 20.8901 | 15.7624 | 12.1881 |
| | GF-based | 42.2991 | 39.7528 | 37.9501 | 34.7591 | 40.7576 | 36.6630 | 33.6928 | 28.9421 | 40.2180 | 35.3194 | 33.0864 | 26.9983 | 37.7981 | 31.7956 | 29.7875 | 24.2069 |
| | WAFT | 41.7831 | 37.7890 | 35.4026 | 33.4041 | 39.2474 | 34.2986 | 31.8598 | 28.4658 | 38.3109 | 33.0572 | 32.1086 | 28.4944 | 36.4695 | 30.6235 | 30.6457 | 25.6458 |
| | UV | 40.7916 | 39.4278 | 36.1542 | 32.8773 | 39.8204 | 35.5633 | 31.1753 | 27.6026 | 39.5813 | 34.4219 | 30.1753 | 25.8380 | 38.0463 | 30.7917 | 26.3328 | 22.1651 |
| | HUTV | 39.3207 | 36.8817 | 35.1384 | 33.2775 | 37.8767 | 33.4628 | 30.0189 | 28.9225 | 37.9971 | 32.5308 | 30.0652 | 25.8394 | 36.0397 | 29.0336 | 27.1285 | 20.5277 |
| | SUV | 47.6830 | 46.4302 | 40.2209 | 38.8257 | 39.4676 | 39.4614 | 38.3263 | 32.1460 | 36.4566 | 37.3494 | 33.5141 | 26.1144 | 30.6345 | 31.0487 | 26.7216 | 20.4176 |
| | WDSUV | 50.6259 | 50.5892 | 45.9726 | 43.1071 | 44.0476 | 46.2188 | 42.4513 | 35.8632 | 44.3317 | 41.5655 | 33.9083 | 28.0227 | 39.7249 | 35.8832 | 30.5793 | 25.0109 |
| Hyperspectral data S4 periodical stripe | Degrade | 38.3081 | 28.7657 | 24.4384 | 21.0540 | 32.2875 | 22.7453 | 18.4232 | 15.0411 | 30.5267 | 20.9842 | 16.6662 | 13.2752 | 29.2773 | 19.7348 | 15.4126 | 12.0143 |
| | BM3D | 37.7608 | 28.5314 | 24.0485 | 20.6537 | 32.1885 | 22.6851 | 18.3337 | 14.9621 | 30.4644 | 20.9403 | 16.6086 | 13.2282 | 29.2339 | 19.7007 | 15.3722 | 11.9862 |
| | SNRCNN | 38.3191 | 31.2693 | 26.0951 | 21.9826 | 36.1631 | 24.8326 | 19.4969 | 15.5880 | 34.6511 | 22.8072 | 17.4050 | 13.5335 | 33.8092 | 21.0800 | 16.0903 | 12.3224 |
| | GF-based | 35.4574 | 35.4402 | 35.4038 | 31.9845 | 34.5255 | 34.1008 | 33.9158 | 28.8678 | 34.4463 | 33.9952 | 33.2673 | 26.9497 | 34.4388 | 34.0353 | 33.0103 | 25.4585 |
| | WAFT | 35.5012 | 32.4622 | 29.1166 | 28.8143 | 33.0202 | 32.3641 | 28.9810 | 27.5259 | 33.0025 | 32.1794 | 28.8702 | 25.8683 | 33.0308 | 32.4567 | 28.9425 | 25.2356 |
| | UV | 34.6952 | 31.8635 | 31.6717 | 30.9389 | 34.6984 | 31.6092 | 31.3027 | 28.2991 | 34.6677 | 31.2433 | 30.6359 | 25.7582 | 34.6379 | 31.7924 | 30.9559 | 25.7389 |
| | HUTV | 34.8731 | 32.6289 | 32.0435 | 28.6424 | 34.7107 | 31.8426 | 29.7554 | 26.9722 | 34.2293 | 29.5224 | 27.9733 | 25.3840 | 34.7866 | 30.8774 | 27.2127 | 25.2576 |
| | SUV | 45.8325 | 37.9881 | 37.8021 | 32.6007 | 37.4824 | 37.5948 | 36.9134 | 30.5562 | 33.0448 | 36.8791 | 32.5475 | 27.9168 | 32.8909 | 36.4694 | 32.2048 | 27.3382 |
| | WDSUV | 47.4231 | 42.7254 | 38.0800 | 34.0489 | 42.4871 | 41.4536 | 37.1274 | 32.4728 | 40.9252 | 39.6583 | 34.6712 | 30.5732 | 40.7620 | 39.8275 | 33.6095 | 28.3756 |
| Hyperspectral data S4 nonperiodical stripe | Degrade | 38.1308 | 28.5884 | 24.2417 | 20.8247 | 32.1102 | 22.5678 | 18.2484 | 14.8619 | 30.3494 | 20.8070 | 16.4906 | 13.1183 | 29.1000 | 19.5576 | 15.2422 | 11.8480 |
| | BM3D | 37.5847 | 28.3488 | 23.8618 | 20.4240 | 32.0228 | 22.5114 | 18.1630 | 14.7838 | 30.2945 | 20.7653 | 16.4354 | 13.0743 | 29.0606 | 19.5250 | 15.2006 | 11.8110 |
| | SNRCNN | 38.2193 | 30.7263 | 25.5635 | 21.5607 | 35.5068 | 24.5464 | 19.2753 | 15.3704 | 34.3556 | 22.3848 | 17.2245 | 13.4406 | 33.1192 | 20.8728 | 15.7966 | 12.0450 |
| | GF-based | 35.3150 | 34.2430 | 32.9393 | 31.2654 | 35.0389 | 32.4143 | 31.1472 | 27.2521 | 34.1816 | 32.6377 | 31.2622 | 26.0432 | 34.0332 | 32.1975 | 30.1290 | 24.2097 |
| | WAFT | 35.3527 | 31.8039 | 29.8337 | 29.0496 | 34.7215 | 31.2300 | 28.5766 | 25.7183 | 32.7093 | 30.3576 | 28.2436 | 25.9449 | 32.6929 | 30.2041 | 28.0116 | 25.0329 |
| | UV | 34.6023 | 33.2470 | 30.6498 | 28.5669 | 34.3881 | 32.1598 | 29.0409 | 25.8483 | 34.3881 | 31.9343 | 28.5941 | 24.6804 | 34.2568 | 31.3453 | 27.8425 | 23.4028 |
| | HUTV | 34.7922 | 32.3350 | 30.4412 | 28.2654 | 34.1625 | 31.1241 | 27.7662 | 26.0231 | 34.3461 | 29.6436 | 27.7787 | 24.6458 | 34.0478 | 28.0687 | 26.4209 | 22.0203 |
| | SUV | 42.4435 | 38.0209 | 33.3810 | 32.5933 | 36.5716 | 37.4128 | 32.3467 | 28.0938 | 33.8128 | 32.9177 | 31.4965 | 25.5164 | 30.9471 | 31.2761 | 27.7703 | 22.6486 |
| | WDSUV | 46.4176 | 45.4022 | 36.8808 | 36.5418 | 42.7482 | 42.7082 | 32.2437 | 30.0206 | 41.6863 | 39.5000 | 33.5065 | 28.5151 | 39.0474 | 33.6656 | 31.1113 | 25.3288 |
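The simulated experiments vary a parameter r and a stripe intensity, and distinguish periodical from nonperiodical stripes. The exact simulation protocol is not stated in this section, so the sketch below is only one plausible reading: it assumes r is the fraction of striped columns, that each striped column receives a constant offset drawn uniformly from [−intensity, intensity], and that periodical stripes repeat one randomly drawn pattern every `period` columns. The function name `add_stripes` and the `period` and `seed` parameters are hypothetical.

```python
import numpy as np


def add_stripes(img, r, intensity, periodic=True, period=10, seed=0):
    """Add vertical stripe noise to a 2-D image (hypothetical simulation protocol).

    Returns the noisy image and the per-column offset profile."""
    rng = np.random.default_rng(seed)
    h, w = img.shape
    if periodic:
        # Draw one stripe pattern for a block of `period` columns and tile it across the width.
        n = int(round(r * period))
        cols = rng.choice(period, size=n, replace=False)
        block = np.zeros(period)
        block[cols] = rng.uniform(-intensity, intensity, size=n)
        profile = np.tile(block, w // period + 1)[:w]
    else:
        # Pick round(r * w) columns anywhere in the image, each with its own random offset.
        n = int(round(r * w))
        cols = rng.choice(w, size=n, replace=False)
        profile = np.zeros(w)
        profile[cols] = rng.uniform(-intensity, intensity, size=n)
    # Each offset is constant down its column, which is what makes the noise a stripe.
    return img + profile[None, :], profile


clean = np.zeros((20, 100))
noisy, profile = add_stripes(clean, r=0.4, intensity=30, periodic=True)
```

Because every striped column carries a single offset, the stripe component has zero gradient along the stripe direction, which is the structural property destriping models of this kind exploit.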

SSIM under different r values and stripe intensities:

| Image | Method | r = 0.1, intensity 10 | r = 0.1, intensity 30 | r = 0.1, intensity 50 | r = 0.1, intensity 80 | r = 0.4, intensity 10 | r = 0.4, intensity 30 | r = 0.4, intensity 50 | r = 0.4, intensity 80 | r = 0.6, intensity 10 | r = 0.6, intensity 30 | r = 0.6, intensity 50 | r = 0.6, intensity 80 | r = 0.8, intensity 10 | r = 0.8, intensity 30 | r = 0.8, intensity 50 | r = 0.8, intensity 80 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| MODIS data S1 periodical stripe | Degrade | 0.9117 | 0.7689 | 0.6737 | 0.5932 | 0.7748 | 0.4430 | 0.2898 | 0.1745 | 0.7192 | 0.3633 | 0.2067 | 0.1078 | 0.6572 | 0.2800 | 0.1426 | 0.0665 |
| | BM3D | 0.8885 | 0.7157 | 0.5286 | 0.3559 | 0.7571 | 0.4117 | 0.2240 | 0.1027 | 0.7035 | 0.3360 | 0.1580 | 0.0609 | 0.6422 | 0.2585 | 0.1092 | 0.0382 |
| | SNRCNN | 0.9294 | 0.8076 | 0.6857 | 0.5844 | 0.8857 | 0.5505 | 0.3336 | 0.1890 | 0.8674 | 0.4595 | 0.2364 | 0.1148 | 0.8273 | 0.3320 | 0.1574 | 0.0692 |
| | GF-based | 0.9238 | 0.9109 | 0.8939 | 0.8656 | 0.9130 | 0.8733 | 0.8148 | 0.7469 | 0.9075 | 0.8454 | 0.8054 | 0.6668 | 0.8996 | 0.8257 | 0.7678 | 0.5675 |
| | WAFT | 0.9291 | 0.9072 | 0.8805 | 0.8654 | 0.9120 | 0.8827 | 0.8382 | 0.7885 | 0.9090 | 0.8690 | 0.8202 | 0.7479 | 0.9053 | 0.8591 | 0.8026 | 0.7105 |
| | UV | 0.9187 | 0.9020 | 0.8969 | 0.8849 | 0.9109 | 0.8794 | 0.8634 | 0.8059 | 0.9066 | 0.8609 | 0.8271 | 0.7169 | 0.9042 | 0.8536 | 0.8150 | 0.7050 |
| | HUTV | 0.9203 | 0.8988 | 0.8831 | 0.8185 | 0.9107 | 0.8759 | 0.8188 | 0.6720 | 0.9030 | 0.7609 | 0.7531 | 0.6151 | 0.9026 | 0.8460 | 0.6742 | 0.5601 |
| | SUV | 0.9955 | 0.9927 | 0.9892 | 0.9770 | 0.9837 | 0.9737 | 0.9591 | 0.9221 | 0.9542 | 0.9578 | 0.9203 | 0.7652 | 0.9450 | 0.9359 | 0.8502 | 0.7367 |
| | WDSUV | 0.9962 | 0.9941 | 0.9910 | 0.9859 | 0.9845 | 0.9800 | 0.9735 | 0.9485 | 0.9884 | 0.9719 | 0.9728 | 0.9402 | 0.9925 | 0.9687 | 0.9396 | 0.8902 |
| MODIS data S1 nonperiodical stripe | Degrade | 0.9261 | 0.8009 | 0.7181 | 0.6489 | 0.7660 | 0.4441 | 0.2801 | 0.1671 | 0.7252 | 0.3758 | 0.2169 | 0.1169 | 0.6756 | 0.3052 | 0.1596 | 0.0758 |
| | BM3D | 0.9003 | 0.7438 | 0.5654 | 0.4008 | 0.7497 | 0.4120 | 0.2154 | 0.0952 | 0.7094 | 0.3481 | 0.1662 | 0.0670 | 0.6605 | 0.2818 | 0.1216 | 0.0436 |
| | SNRCNN | 0.9325 | 0.8289 | 0.7241 | 0.6392 | 0.8807 | 0.5382 | 0.3183 | 0.1788 | 0.8640 | 0.4564 | 0.2448 | 0.1234 | 0.8294 | 0.3652 | 0.1750 | 0.0782 |
| | GF-based | 0.9245 | 0.8960 | 0.8883 | 0.8667 | 0.9138 | 0.8657 | 0.8292 | 0.7461 | 0.9078 | 0.8518 | 0.7975 | 0.6653 | 0.9024 | 0.8346 | 0.7666 | 0.5769 |
| | WAFT | 0.9292 | 0.9058 | 0.8913 | 0.8458 | 0.9164 | 0.8769 | 0.8410 | 0.7837 | 0.9026 | 0.8592 | 0.8114 | 0.7386 | 0.8994 | 0.8373 | 0.7951 | 0.7020 |
| | UV | 0.9191 | 0.9099 | 0.8942 | 0.8671 | 0.9114 | 0.8841 | 0.8563 | 0.7969 | 0.9067 | 0.8666 | 0.8210 | 0.7247 | 0.9026 | 0.8521 | 0.7848 | 0.6579 |
| | HUTV | 0.9207 | 0.8975 | 0.8724 | 0.8262 | 0.9106 | 0.8751 | 0.8034 | 0.7336 | 0.9039 | 0.8405 | 0.7913 | 0.6378 | 0.8981 | 0.8079 | 0.7519 | 0.4897 |
| | SUV | 0.9961 | 0.9938 | 0.9850 | 0.9770 | 0.9808 | 0.9745 | 0.9557 | 0.9113 | 0.9459 | 0.9246 | 0.8807 | 0.7459 | 0.9171 | 0.8743 | 0.7973 | 0.6599 |
| | WDSUV | 0.9953 | 0.9949 | 0.9920 | 0.9866 | 0.9934 | 0.9782 | 0.9703 | 0.9370 | 0.9890 | 0.9774 | 0.9349 | 0.8657 | 0.9825 | 0.9660 | 0.9100 | 0.8187 |
| MODIS data S2 periodical stripe | Degrade | 0.7780 | 0.5538 | 0.4683 | 0.4096 | 0.4661 | 0.1396 | 0.0651 | 0.0308 | 0.3872 | 0.0970 | 0.0406 | 0.0169 | 0.3035 | 0.0624 | 0.0248 | 0.0098 |
| | BM3D | 0.6868 | 0.4476 | 0.3275 | 0.2438 | 0.4151 | 0.1126 | 0.0428 | 0.0157 | 0.3449 | 0.0780 | 0.0267 | 0.0087 | 0.2702 | 0.0504 | 0.0166 | 0.0051 |
| | SNRCNN | 0.9078 | 0.6096 | 0.4681 | 0.3919 | 0.8217 | 0.2269 | 0.0854 | 0.0374 | 0.7746 | 0.1505 | 0.0500 | 0.0183 | 0.6371 | 0.0805 | 0.0282 | 0.0104 |
| | GF-based | 0.9698 | 0.9550 | 0.9059 | 0.9221 | 0.9588 | 0.9316 | 0.8831 | 0.6338 | 0.9470 | 0.9314 | 0.8148 | 0.4661 | 0.9593 | 0.9110 | 0.7185 | 0.3084 |
| | WAFT | 0.9656 | 0.9530 | 0.9143 | 0.9098 | 0.9595 | 0.9454 | 0.9034 | 0.8843 | 0.9578 | 0.9374 | 0.8950 | 0.8648 | 0.9583 | 0.9389 | 0.8931 | 0.8577 |
| | UV | 0.9637 | 0.9595 | 0.9613 | 0.9580 | 0.9620 | 0.9510 | 0.9488 | 0.9286 | 0.9606 | 0.9427 | 0.9340 | 0.8050 | 0.9618 | 0.9429 | 0.9370 | 0.8797 |
| | HUTV | 0.9531 | 0.9082 | 0.8978 | 0.7903 | 0.9460 | 0.8935 | 0.7997 | 0.5670 | 0.9380 | 0.8630 | 0.7290 | 0.4315 | 0.9407 | 0.8575 | 0.5210 | 0.3616 |
| | SUV | 0.9957 | 0.9947 | 0.9927 | 0.9890 | 0.9882 | 0.9846 | 0.9802 | 0.9679 | 0.9754 | 0.9748 | 0.9645 | 0.9099 | 0.9622 | 0.9578 | 0.9359 | 0.8876 |
| | WDSUV | 0.9986 | 0.9954 | 0.9970 | 0.9925 | 0.9899 | 0.9880 | 0.9865 | 0.9813 | 0.9959 | 0.9841 | 0.9768 | 0.9538 | 0.9947 | 0.9729 | 0.9609 | 0.9329 |
| MODIS data S2 nonperiodical stripe | Degrade | 0.8019 | 0.6048 | 0.5321 | 0.4838 | 0.4669 | 0.1459 | 0.0706 | 0.0347 | 0.3926 | 0.0998 | 0.0419 | 0.0170 | 0.3275 | 0.0714 | 0.0282 | 0.0109 |
| | BM3D | 0.7061 | 0.4880 | 0.3747 | 0.2937 | 0.4163 | 0.1183 | 0.0477 | 0.0192 | 0.3490 | 0.0798 | 0.0272 | 0.0084 | 0.2908 | 0.0569 | 0.0182 | 0.0053 |
| | SNRCNN | 0.9080 | 0.6415 | 0.5233 | 0.4605 | 0.8183 | 0.2150 | 0.0870 | 0.0386 | 0.7499 | 0.1467 | 0.0507 | 0.0183 | 0.6561 | 0.0962 | 0.0319 | 0.0112 |
| | GF-based | 0.9668 | 0.9483 | 0.9409 | 0.9090 | 0.9638 | 0.9231 | 0.8795 | 0.6440 | 0.9514 | 0.8842 | 0.8020 | 0.4692 | 0.9457 | 0.9052 | 0.7217 | 0.3296 |
| | WAFT | 0.9668 | 0.9430 | 0.9252 | 0.9145 | 0.9522 | 0.9313 | 0.9105 | 0.8692 | 0.9491 | 0.8746 | 0.8699 | 0.8550 | 0.9477 | 0.8968 | 0.8651 | 0.8437 |
| | UV | 0.9636 | 0.9582 | 0.9518 | 0.9336 | 0.9633 | 0.9498 | 0.9394 | 0.9042 | 0.9595 | 0.9237 | 0.8725 | 0.7702 | 0.9596 | 0.9219 | 0.8605 | 0.7199 |
| | HUTV | 0.9579 | 0.9059 | 0.8586 | 0.7686 | 0.9503 | 0.8932 | 0.8004 | 0.6863 | 0.9359 | 0.8441 | 0.7152 | 0.5715 | 0.9291 | 0.8178 | 0.7036 | 0.4286 |
| | SUV | 0.9966 | 0.9947 | 0.9893 | 0.9877 | 0.9895 | 0.9848 | 0.9754 | 0.9628 | 0.9423 | 0.9510 | 0.9007 | 0.7473 | 0.9124 | 0.9137 | 0.8696 | 0.6503 |
| | WDSUV | 0.9982 | 0.9965 | 0.9934 | 0.9941 | 0.9972 | 0.9875 | 0.9860 | 0.9772 | 0.9946 | 0.9635 | 0.9843 | 0.9599 | 0.9911 | 0.9534 | 0.9832 | 0.9608 |
| Hyperspectral data S3 periodical stripe | Degrade | 0.8730 | 0.6499 | 0.5345 | 0.4711 | 0.6414 | 0.2375 | 0.1097 | 0.0548 | 0.5652 | 0.1661 | 0.0736 | 0.0304 | 0.4652 | 0.1152 | 0.0388 | 0.0114 |
| | BM3D | 0.8165 | 0.5396 | 0.3183 | 0.1931 | 0.5973 | 0.1898 | 0.0569 | 0.0156 | 0.5250 | 0.1304 | 0.0397 | 0.0105 | 0.4278 | 0.0913 | 0.0177 | 0.0007 |
| | SNRCNN | 0.8876 | 0.6882 | 0.5256 | 0.4439 | 0.8456 | 0.3394 | 0.1397 | 0.0658 | 0.8267 | 0.2410 | 0.0894 | 0.0337 | 0.7293 | 0.1428 | 0.0452 | 0.0128 |
| | GF-based | 0.9591 | 0.9614 | 0.9537 | 0.9066 | 0.9584 | 0.9557 | 0.9260 | 0.7191 | 0.9563 | 0.9420 | 0.8969 | 0.5936 | 0.9563 | 0.9502 | 0.8354 | 0.4289 |
| | WAFT | 0.9500 | 0.9239 | 0.8561 | 0.8305 | 0.9369 | 0.9230 | 0.8470 | 0.7250 | 0.9323 | 0.9226 | 0.8402 | 0.6873 | 0.9323 | 0.9237 | 0.8369 | 0.6312 |
| | UV | 0.9403 | 0.9139 | 0.9128 | 0.8859 | 0.9405 | 0.9104 | 0.8999 | 0.7741 | 0.9404 | 0.9049 | 0.8844 | 0.6396 | 0.9406 | 0.9112 | 0.8868 | 0.6278 |
| | HUTV | 0.9199 | 0.8952 | 0.8750 | 0.7698 | 0.9177 | 0.8779 | 0.7889 | 0.5602 | 0.9119 | 0.8352 | 0.7178 | 0.5032 | 0.9127 | 0.8576 | 0.6043 | 0.4058 |
| | SUV | 0.9954 | 0.9852 | 0.9818 | 0.9191 | 0.9717 | 0.9782 | 0.9638 | 0.8278 | 0.9194 | 0.9706 | 0.9454 | 0.6888 | 0.9051 | 0.9616 | 0.9242 | 0.6142 |
| | WDSUV | 0.9955 | 0.9926 | 0.9872 | 0.9579 | 0.9953 | 0.9855 | 0.9681 | 0.8982 | 0.9933 | 0.9791 | 0.9714 | 0.9119 | 0.9912 | 0.9707 | 0.9587 | 0.8711 |
| Hyperspectral data S3 nonperiodical stripe | Degrade | 0.8845 | 0.6818 | 0.5884 | 0.5363 | 0.6408 | 0.2301 | 0.1068 | 0.0512 | 0.5524 | 0.1599 | 0.0656 | 0.0248 | 0.5172 | 0.1451 | 0.0587 | 0.0205 |
| | BM3D | 0.8272 | 0.5655 | 0.3600 | 0.2327 | 0.5963 | 0.1820 | 0.0548 | 0.0140 | 0.5120 | 0.1251 | 0.0325 | 0.0057 | 0.4791 | 0.1158 | 0.0325 | 0.0078 |
| | SNRCNN | 0.8844 | 0.6945 | 0.5650 | 0.4992 | 0.8478 | 0.3239 | 0.1322 | 0.0578 | 0.7923 | 0.2178 | 0.0784 | 0.0277 | 0.7556 | 0.1871 | 0.0669 | 0.0222 |
| | GF-based | 0.9595 | 0.9591 | 0.9545 | 0.9046 | 0.9573 | 0.9510 | 0.9205 | 0.7174 | 0.9564 | 0.9442 | 0.8859 | 0.5820 | 0.9533 | 0.9319 | 0.8448 | 0.4633 |
| | WAFT | 0.9516 | 0.9221 | 0.8885 | 0.8591 | 0.9386 | 0.9125 | 0.8719 | 0.7416 | 0.9296 | 0.9087 | 0.8378 | 0.6814 | 0.9280 | 0.8465 | 0.8276 | 0.6128 |
| | UV | 0.9408 | 0.9385 | 0.9107 | 0.8558 | 0.9403 | 0.9326 | 0.8834 | 0.7410 | 0.9398 | 0.9303 | 0.8711 | 0.6576 | 0.9376 | 0.9099 | 0.8134 | 0.5254 |

| HUTV | 0.9216 | 0.8947 | 0.8639 | 0.7749 | 0.9153 | 0.8742 | 0.7572 | 0.6200 | 0.9142 | 0.8415 | 0.7490 | 0.4768 | 0.9085 | 0.7947 | 0.7095 | 0.3061 | |

| SUV | 0.9932 | 0.9858 | 0.9434 | 0.9206 | 0.9615 | 0.9331 | 0.9176 | 0.7922 | 0.9378 | 0.9216 | 0.8903 | 0.6224 | 0.8985 | 0.8609 | 0.7900 | 0.4566 | |

| WDSUV | 0.9980 | 0.9936 | 0.9822 | 0.9702 | 0.9920 | 0.9853 | 0.9618 | 0.9432 | 0.9909 | 0.9698 | 0.9663 | 0.8991 | 0.9857 | 0.9829 | 0.9579 | 0.8471 | |

| Hyperspectral data S4 periodical stripe | Degrade | 0.9584 | 0.8199 | 0.7163 | 0.6317 | 0.8601 | 0.5260 | 0.3422 | 0.2195 | 0.8169 | 0.4390 | 0.2551 | 0.1391 | 0.7571 | 0.3476 | 0.1849 | 0.0948 |

| BM3D | 0.9460 | 0.7821 | 0.6095 | 0.4384 | 0.8475 | 0.4969 | 0.2836 | 0.1353 | 0.8044 | 0.4136 | 0.2087 | 0.0809 | 0.7443 | 0.3263 | 0.1495 | 0.0525 | |

| SNRCNN | 0.9559 | 0.8602 | 0.7398 | 0.6365 | 0.9380 | 0.6237 | 0.3919 | 0.2439 | 0.9286 | 0.5309 | 0.2867 | 0.1476 | 0.8921 | 0.3987 | 0.2016 | 0.0986 | |

| GF-based | 0.9480 | 0.9473 | 0.9486 | 0.9181 | 0.9381 | 0.9474 | 0.9369 | 0.8234 | 0.9375 | 0.9462 | 0.9272 | 0.7548 | 0.9380 | 0.9407 | 0.9122 | 0.6662 | |

| WAFT | 0.9394 | 0.9061 | 0.8459 | 0.8293 | 0.9153 | 0.9060 | 0.8401 | 0.7740 | 0.9153 | 0.9055 | 0.8358 | 0.7199 | 0.9154 | 0.9059 | 0.8326 | 0.6822 | |

| UV | 0.9298 | 0.8910 | 0.8887 | 0.8706 | 0.9299 | 0.8888 | 0.8795 | 0.7893 | 0.9298 | 0.8857 | 0.8691 | 0.7197 | 0.9296 | 0.8900 | 0.8696 | 0.6976 | |

| HUTV | 0.9249 | 0.9035 | 0.8971 | 0.8135 | 0.9247 | 0.8984 | 0.8366 | 0.7085 | 0.9221 | 0.8533 | 0.7874 | 0.6494 | 0.9253 | 0.8771 | 0.7096 | 0.5733 | |

| SUV | 0.9935 | 0.9726 | 0.9701 | 0.9085 | 0.9666 | 0.9680 | 0.9567 | 0.8418 | 0.9184 | 0.9635 | 0.9025 | 0.7573 | 0.9140 | 0.9586 | 0.8936 | 0.7114 | |

| WDSUV | 0.9952 | 0.9876 | 0.9803 | 0.9575 | 0.9912 | 0.9831 | 0.9688 | 0.9204 | 0.9893 | 0.9785 | 0.9452 | 0.8773 | 0.9886 | 0.9756 | 0.9337 | 0.8802 | |

| Hyperspectral data S4 nonperiodical stripe | Degrade | 0.9561 | 0.8204 | 0.7227 | 0.6475 | 0.8559 | 0.5061 | 0.3098 | 0.1748 | 0.8040 | 0.4095 | 0.2266 | 0.1113 | 0.7621 | 0.3436 | 0.1737 | 0.0746 |

| BM3D | 0.9440 | 0.7837 | 0.6174 | 0.4525 | 0.8441 | 0.4784 | 0.2568 | 0.1076 | 0.7915 | 0.3855 | 0.1874 | 0.0680 | 0.7494 | 0.3226 | 0.1420 | 0.0428 | |

| SNRCNN | 0.9569 | 0.8559 | 0.7412 | 0.6524 | 0.9377 | 0.6050 | 0.3570 | 0.1925 | 0.9149 | 0.4877 | 0.2546 | 0.1185 | 0.8930 | 0.4047 | 0.1905 | 0.0776 | |

| GF-based | 0.9478 | 0.9458 | 0.9438 | 0.9166 | 0.9474 | 0.9443 | 0.9308 | 0.8142 | 0.9374 | 0.9431 | 0.9228 | 0.7403 | 0.9377 | 0.9446 | 0.9089 | 0.6613 | |

| WAFT | 0.9392 | 0.9047 | 0.8725 | 0.8543 | 0.9366 | 0.9033 | 0.8625 | 0.7609 | 0.9144 | 0.8995 | 0.8334 | 0.7219 | 0.9147 | 0.9003 | 0.8288 | 0.6813 | |

| UV | 0.9296 | 0.9220 | 0.8860 | 0.8407 | 0.9296 | 0.9196 | 0.8727 | 0.7674 | 0.9293 | 0.9191 | 0.8652 | 0.7142 | 0.9292 | 0.9178 | 0.8572 | 0.6605 | |

| HUTV | 0.9253 | 0.9041 | 0.8779 | 0.8052 | 0.9220 | 0.8978 | 0.8183 | 0.7265 | 0.9233 | 0.8708 | 0.8336 | 0.6308 | 0.9207 | 0.8259 | 0.7959 | 0.5198 | |

| SUV | 0.9865 | 0.9714 | 0.9263 | 0.9098 | 0.9598 | 0.9223 | 0.9075 | 0.8129 | 0.9473 | 0.9168 | 0.8966 | 0.7156 | 0.9201 | 0.9072 | 0.8709 | 0.6324 | |

| WDSUV | 0.9955 | 0.9911 | 0.9660 | 0.9611 | 0.9720 | 0.9848 | 0.9392 | 0.9313 | 0.9900 | 0.9812 | 0.9585 | 0.9084 | 0.9881 | 0.9545 | 0.9458 | 0.8550 | |

| Images | Index | BM3D | SNRCNN | GF-based | WAFT | UV | HUTV | SUV | WDSUV |

|---|---|---|---|---|---|---|---|---|---|

| MODIS data band 27 R1 | MICV | 4.3145 | 4.7477 | 30.7270 | 36.5294 | 35.5363 | 40.6438 | 36.4977 | 37.1580 |

| | MMRD | 0.3794 | 0.4570 | 9.8935 | 4.5328 | 2.6238 | 9.6883 | 0.4078 | 0.3357 |

| MODIS data band 27 R2 | MICV | 4.8430 | 4.9733 | 32.7694 | 49.7900 | 44.1401 | 50.0661 | 43.5883 | 52.2439 |

| | MMRD | 0.4644 | 0.1537 | 0.8864 | 0.8394 | 0.9264 | 0.7710 | 0.5938 | 0.1482 |

| MODIS data band 30 R3 | MICV | 16.2057 | 18.7728 | 27.4309 | 27.0622 | 17.0865 | 29.0929 | 28.3908 | 29.1068 |

| | MMRD | 0.0699 | 0.0266 | 0.1017 | 0.1677 | 0.0860 | 0.0786 | 0.0631 | 0.0367 |

| MODIS data band 28 R4 | MICV | 32.7398 | 86.8249 | 68.4269 | 67.2392 | 79.0380 | 82.3611 | 77.5485 | 76.2834 |

| | MMRD | 0.2426 | 0.3595 | 0.5278 | 0.1922 | 0.4178 | 0.2082 | 0.0723 | 0.0631 |

| Hyperion data band 35 R5 | MICV | 67.8933 | 42.0641 | 33.7644 | 29.0568 | 33.2571 | 39.5301 | 33.7672 | 33.7672 |

| | MMRD | 0.0802 | 0.0218 | 0.0274 | 0.0377 | 0.0284 | 0.0296 | 0 | 0 |

| Hyperion data band 135 R6 | MICV | 26.3375 | 26.1986 | 23.0954 | 19.7025 | 23.3781 | 23.1603 | 23.7723 | 23.5074 |

| | MMRD | 0.0474 | 0.0281 | 0.0370 | 0.0330 | 0.0419 | 0.0414 | 0.0245 | 0.0224 |
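The MICV and MMRD rows in the table above are no-reference quality indices (expanded in the Abbreviations list as mean of inverse coefficient of variation and mean of mean relative deviation). The following is a minimal sketch, assuming the formulation commonly used in the destriping literature rather than code from this paper: the ICV of a homogeneous window of the destriped image is its mean divided by its standard deviation, and the MRD of a stripe-free window is the average of |z − x| / x in percent, where x is an original pixel and z the corresponding destriped pixel; each index is then averaged over several windows. The window size, how windows are chosen, and the use of the population standard deviation are assumptions, and the helper names `icv`, `micv`, `mrd`, and `mmrd` are hypothetical.

```python
from statistics import fmean, pstdev
from typing import Iterable, Sequence, Tuple


def icv(window: Sequence[float]) -> float:
    """Inverse coefficient of variation of one homogeneous window: mean / std.

    `window` is a flat list of destriped pixel values. Assumption: population
    standard deviation; a perfectly flat window returns infinity.
    """
    sd = pstdev(window)
    return float("inf") if sd == 0 else fmean(window) / sd


def micv(windows: Iterable[Sequence[float]]) -> float:
    """Mean ICV over several homogeneous windows (higher is smoother)."""
    return fmean(icv(w) for w in windows)


def mrd(original: Sequence[float], destriped: Sequence[float]) -> float:
    """Mean relative deviation, in percent, over one stripe-free window.

    Compares each original pixel x with its destriped value z as |z - x| / x.
    Assumes every original pixel value is nonzero.
    """
    if len(original) != len(destriped):
        raise ValueError("windows must contain the same number of pixels")
    return 100.0 * fmean(abs(z - x) / x for x, z in zip(original, destriped))


def mmrd(window_pairs: Iterable[Tuple[Sequence[float], Sequence[float]]]) -> float:
    """Mean MRD over several (original, destriped) window pairs."""
    return fmean(mrd(o, d) for o, d in window_pairs)


if __name__ == "__main__":
    # Toy 2x2 windows flattened to lists; values are illustrative only.
    print(micv([[10, 12, 10, 12], [5, 5, 7, 7]]))  # windows with ICV 11 and 6
    print(mmrd([([100, 200], [101, 198]), ([50], [50])]))
```

Under these definitions a higher MICV indicates smoother homogeneous regions (less residual striping), and a lower MMRD indicates that stripe-free pixels were altered less by the destriping step.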

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Song, Q.; Wang, Y.; Yan, X.; Gu, H. Remote Sensing Images Stripe Noise Removal by Double Sparse Regulation and Region Separation. Remote Sens. 2018, 10, 998. https://doi.org/10.3390/rs10070998


Chicago/Turabian style: Song, Qiong, Yuehuan Wang, Xiaoyun Yan, and Haiguo Gu. 2018. "Remote Sensing Images Stripe Noise Removal by Double Sparse Regulation and Region Separation." Remote Sensing 10, no. 7: 998. https://doi.org/10.3390/rs10070998