Large-Scale Oil Palm Tree Detection from High-Resolution Satellite Images Using Two-Stage Convolutional Neural Networks

Abstract

1. Introduction

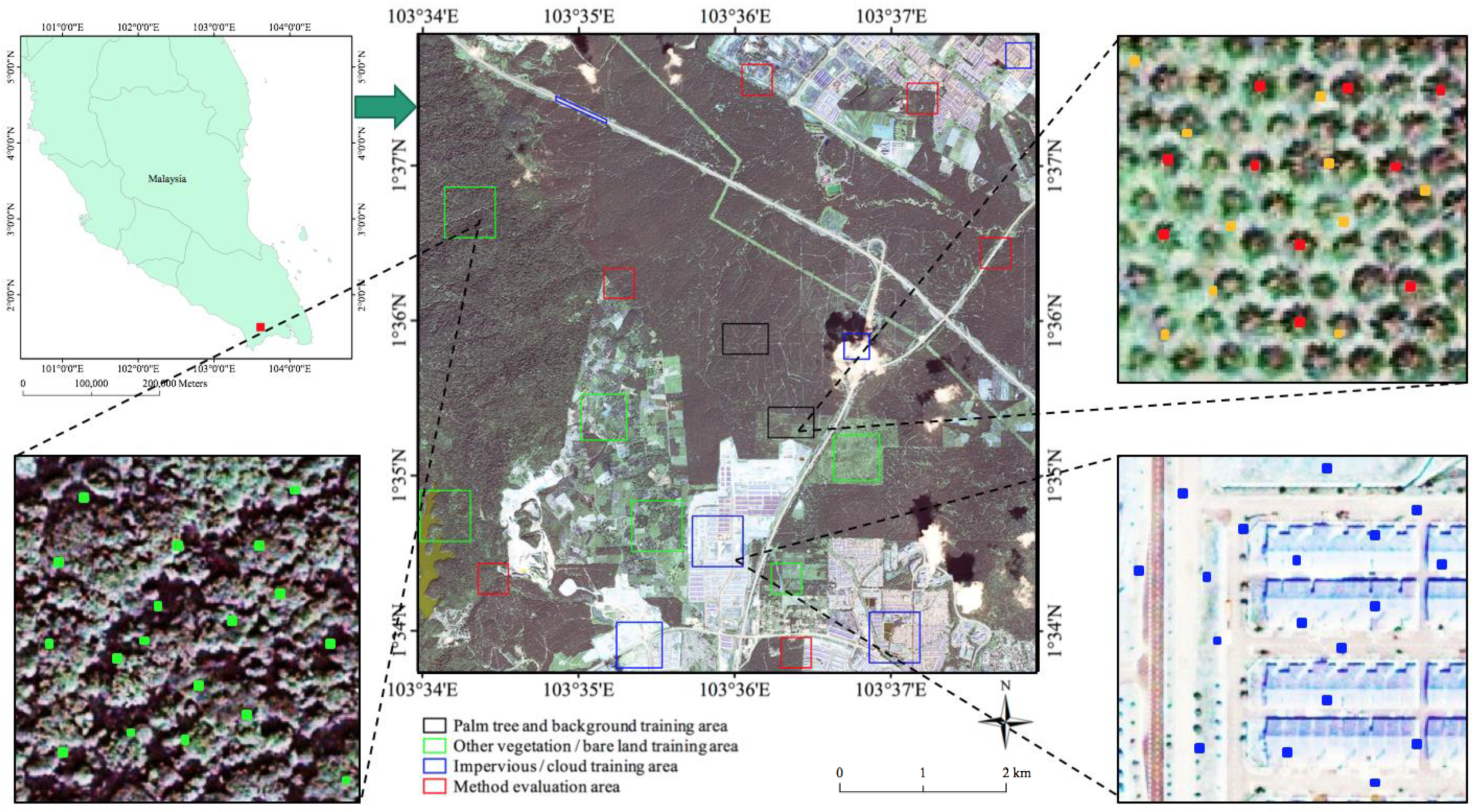

2. Study Area and Datasets

3. Methods

3.1. Motivations and Overview of Our Proposed Method

3.2. Training and Optimization of the TS-CNN

3.2.1. Training and Optimization of the CNN for Land Cover Classification

3.2.2. Training and Optimization of the CNN for Object Classification

3.3. Oil Palm Tree Detection in the Large-Scale Study Area

3.3.1. Overlapping Partitioning Based Large-Scale Image Division

3.3.2. Multi-Scale Sliding Window Based Image Dataset Collection and Label Prediction

3.3.3. Minimum Distance Filter Based Post-Processing

4. Experimental Results Analysis

4.1. Hyper-Parameter Setting and Classification Accuracies

4.2. Evaluation of the Oil Palm Tree Detection Results

4.3. Oil Palm Tree Detection Results in the Whole Study Area

5. Discussion

5.1. Detection Results of Different CNN Architectures

5.2. The Detection Result Comparison of Different Methods

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


Detection results of the proposed two-stage CNN (TS-CNN) method in the six regions:

| Metric | Region 1 | Region 2 | Region 3 | Region 4 | Region 5 | Region 6 |
|---|---|---|---|---|---|---|
| TP | 445 | 1034 | 956 | 1114 | 385 | 1028 |
| FP | 12 | 47 | 35 | 38 | 35 | 29 |
| FN | 33 | 44 | 35 | 78 | 40 | 49 |
| Precision | 97.37% | 95.65% | 96.47% | 96.70% | 91.67% | 97.26% |
| Recall | 93.10% | 95.92% | 96.47% | 93.46% | 90.59% | 95.45% |
| F1-score | 95.19% | 95.79% | 96.47% | 95.05% | 91.12% | 96.34% |
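The Precision, Recall, and F1-score rows are consistent with the standard definitions: precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 as the harmonic mean of the two. As a sanity check, the minimal Python sketch below recomputes those rows from the TP, FP, and FN counts in the table above. `COUNTS` and `detection_metrics` are hypothetical names chosen for illustration, not code from the study.

```python
# Detection counts per region, copied from the table above: (TP, FP, FN).
COUNTS = {
    "Region 1": (445, 12, 33),
    "Region 2": (1034, 47, 44),
    "Region 3": (956, 35, 35),
    "Region 4": (1114, 38, 78),
    "Region 5": (385, 35, 40),
    "Region 6": (1028, 29, 49),
}


def detection_metrics(tp: int, fp: int, fn: int) -> tuple:
    """Return (precision, recall, F1) as percentages."""
    precision = tp / (tp + fp)  # share of detections that are real oil palm trees
    recall = tp / (tp + fn)     # share of real oil palm trees that were detected
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean of the two
    return 100 * precision, 100 * recall, 100 * f1


if __name__ == "__main__":
    for region, (tp, fp, fn) in COUNTS.items():
        p, r, f = detection_metrics(tp, fp, fn)
        print(f"{region}: precision {p:.2f}%, recall {r:.2f}%, F1 {f:.2f}%")
```

Running it prints one line per region; after rounding to two decimals the recomputed values agree with the table.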

F1-scores obtained with different combinations of CNN architectures in the two stages:

| CNN 1 (land cover classification) | CNN 2 (object classification) | Region 1 | Region 2 | Region 3 | Region 4 | Region 5 | Region 6 |
|---|---|---|---|---|---|---|---|
| LeNet | LeNet | 90.79% | 93.69% | 94.22% | 90.78% | 79.73% | 94.86% |
| LeNet | AlexNet | 93.86% | 94.42% | 96.83% | 94.52% | 90.11% | 96.53% |
| LeNet | VGG-19 | 91.12% | 90.73% | 92.57% | 90.06% | 76.72% | 93.92% |
| AlexNet | LeNet | 91.92% | 93.39% | 94.14% | 91.69% | 80.18% | 95.09% |
| AlexNet | AlexNet | 95.19% | 95.79% | 96.47% | 95.05% | 91.12% | 96.34% |
| AlexNet | VGG-19 | 89.53% | 91.07% | 93.45% | 91.70% | 77.30% | 94.14% |
| VGG-19 | LeNet | 88.96% | 86.77% | 92.37% | 89.18% | 72.48% | 93.51% |
| VGG-19 | AlexNet | 91.35% | 87.53% | 95.37% | 93.16% | 84.28% | 95.08% |
| VGG-19 | VGG-19 | 86.22% | 85.81% | 92.58% | 86.91% | 67.20% | 91.63% |
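To compare the nine architecture combinations at a glance, one option is to average each row of the table above over the six regions. The Python sketch below does this with an unweighted mean (an assumption; the table itself reports only per-region values) and also picks the best combination in each region. `F1`, `rank_by_mean`, and `best_per_region` are illustrative names. On these numbers, AlexNet + AlexNet has the highest mean F1-score (about 94.99%) and is best in four of the six regions, while LeNet + AlexNet is marginally higher in Regions 3 and 6.

```python
from statistics import mean

REGIONS = ["Region 1", "Region 2", "Region 3", "Region 4", "Region 5", "Region 6"]

# F1-scores (%) from the table above, keyed by (CNN 1, CNN 2).
F1 = {
    ("LeNet", "LeNet"): [90.79, 93.69, 94.22, 90.78, 79.73, 94.86],
    ("LeNet", "AlexNet"): [93.86, 94.42, 96.83, 94.52, 90.11, 96.53],
    ("LeNet", "VGG-19"): [91.12, 90.73, 92.57, 90.06, 76.72, 93.92],
    ("AlexNet", "LeNet"): [91.92, 93.39, 94.14, 91.69, 80.18, 95.09],
    ("AlexNet", "AlexNet"): [95.19, 95.79, 96.47, 95.05, 91.12, 96.34],
    ("AlexNet", "VGG-19"): [89.53, 91.07, 93.45, 91.70, 77.30, 94.14],
    ("VGG-19", "LeNet"): [88.96, 86.77, 92.37, 89.18, 72.48, 93.51],
    ("VGG-19", "AlexNet"): [91.35, 87.53, 95.37, 93.16, 84.28, 95.08],
    ("VGG-19", "VGG-19"): [86.22, 85.81, 92.58, 86.91, 67.20, 91.63],
}


def rank_by_mean(scores):
    """Return (combination, mean F1) pairs sorted from best to worst."""
    averaged = ((combo, mean(values)) for combo, values in scores.items())
    return sorted(averaged, key=lambda item: item[1], reverse=True)


def best_per_region(scores):
    """Return the highest-scoring (CNN 1, CNN 2) combination for each region."""
    return {
        region: max(scores, key=lambda combo: scores[combo][i])
        for i, region in enumerate(REGIONS)
    }


if __name__ == "__main__":
    for (cnn1, cnn2), avg in rank_by_mean(F1):
        print(f"{cnn1} + {cnn2}: mean F1 {avg:.2f}%")
    for region, (cnn1, cnn2) in best_per_region(F1).items():
        print(f"{region}: best = {cnn1} + {cnn2}")
```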

Detection results of different methods in Region 1:

| Method Type | Method Name | TP | FP | FN | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|---|
| Our proposed method | CNN+CNN | 445 | 12 | 33 | 97.37% | 93.10% | 95.19% |
| Existing methods using a single classifier | CNN | 458 | 147 | 20 | 75.70% | 95.82% | 84.58% |
| | SVM | 448 | 221 | 30 | 66.97% | 93.72% | 78.12% |
| | RF | 457 | 253 | 21 | 64.37% | 95.61% | 76.94% |
| | ANN | 438 | 296 | 40 | 59.67% | 91.63% | 72.28% |
| Two-stage methods using different combinations of classifiers | SVM+SVM | 403 | 76 | 75 | 84.13% | 84.31% | 84.22% |
| | RF+RF | 448 | 37 | 30 | 92.37% | 93.72% | 93.04% |
| | ANN+ANN | 428 | 40 | 50 | 91.45% | 89.54% | 90.49% |
| | CNN+SVM | 434 | 24 | 44 | 94.76% | 90.79% | 92.74% |
| | CNN+RF | 440 | 25 | 38 | 94.62% | 92.05% | 93.32% |
| | CNN+ANN | 427 | 41 | 51 | 91.24% | 89.33% | 90.27% |

Detection results of different methods in Region 2:

| Method Type | Method Name | TP | FP | FN | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|---|
| Our proposed method | CNN+CNN | 1034 | 47 | 44 | 95.65% | 95.92% | 95.79% |
| Existing methods using a single classifier | CNN | 1047 | 121 | 31 | 89.64% | 97.12% | 93.23% |
| | SVM | 1041 | 153 | 37 | 87.19% | 96.57% | 91.64% |
| | RF | 1061 | 166 | 17 | 86.47% | 98.42% | 92.06% |
| | ANN | 1036 | 223 | 42 | 82.29% | 96.10% | 88.66% |
| Two-stage methods using different combinations of classifiers | SVM+SVM | 1030 | 116 | 48 | 89.88% | 95.55% | 92.63% |
| | RF+RF | 1011 | 74 | 67 | 93.18% | 93.78% | 93.48% |
| | ANN+ANN | 984 | 104 | 94 | 90.44% | 91.28% | 90.86% |
| | CNN+SVM | 990 | 59 | 88 | 94.38% | 91.84% | 93.09% |
| | CNN+RF | 1008 | 65 | 70 | 93.94% | 93.51% | 93.72% |
| | CNN+ANN | 986 | 109 | 92 | 90.05% | 91.47% | 90.75% |

Detection results of different methods in Region 3:

| Method Type | Method Name | TP | FP | FN | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|---|
| Our proposed method | CNN+CNN | 956 | 35 | 35 | 96.47% | 96.47% | 96.47% |
| Existing methods using a single classifier | CNN | 976 | 169 | 15 | 85.24% | 98.49% | 91.39% |
| | SVM | 960 | 218 | 31 | 81.49% | 96.87% | 88.52% |
| | RF | 970 | 264 | 21 | 78.61% | 97.88% | 87.19% |
| | ANN | 944 | 277 | 47 | 77.31% | 95.26% | 85.35% |
| Two-stage methods using different combinations of classifiers | SVM+SVM | 938 | 151 | 53 | 86.13% | 94.65% | 90.19% |
| | RF+RF | 957 | 136 | 34 | 87.56% | 96.57% | 91.84% |
| | ANN+ANN | 930 | 147 | 61 | 86.35% | 93.84% | 89.94% |
| | CNN+SVM | 944 | 72 | 47 | 92.91% | 95.26% | 94.07% |
| | CNN+RF | 953 | 71 | 38 | 93.07% | 96.17% | 94.59% |
| | CNN+ANN | 929 | 124 | 62 | 88.22% | 93.74% | 90.90% |

Detection results of different methods in Region 4:

| Method Type | Method Name | TP | FP | FN | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|---|
| Our proposed method | CNN+CNN | 1114 | 38 | 78 | 96.70% | 93.46% | 95.05% |
| Existing methods using a single classifier | CNN | 1137 | 139 | 55 | 89.11% | 95.39% | 92.14% |
| | SVM | 1113 | 218 | 79 | 83.62% | 93.37% | 88.23% |
| | RF | 1136 | 275 | 56 | 80.51% | 95.30% | 87.28% |
| | ANN | 1095 | 287 | 97 | 79.23% | 91.86% | 85.08% |
| Two-stage methods using different combinations of classifiers | SVM+SVM | 1006 | 117 | 186 | 89.58% | 84.40% | 86.91% |
| | RF+RF | 1125 | 200 | 67 | 84.91% | 94.38% | 89.39% |
| | ANN+ANN | 1078 | 213 | 114 | 83.50% | 90.44% | 86.83% |
| | CNN+SVM | 1104 | 94 | 88 | 92.15% | 92.62% | 92.38% |
| | CNN+RF | 1124 | 85 | 68 | 92.97% | 94.30% | 93.63% |
| | CNN+ANN | 1083 | 173 | 109 | 86.23% | 90.86% | 88.48% |

Detection results of different methods in Region 5:

| Method Type | Method Name | TP | FP | FN | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|---|
| Our proposed method | CNN+CNN | 385 | 35 | 40 | 91.67% | 90.59% | 91.12% |
| Existing methods using a single classifier | CNN | 388 | 298 | 37 | 56.56% | 91.29% | 69.85% |
| | SVM | 379 | 737 | 46 | 33.96% | 89.18% | 49.19% |
| | RF | 392 | 932 | 33 | 29.61% | 92.24% | 44.83% |
| | ANN | 365 | 742 | 60 | 32.97% | 85.88% | 47.65% |
| Two-stage methods using different combinations of classifiers | SVM+SVM | 377 | 499 | 48 | 43.04% | 88.71% | 57.96% |
| | RF+RF | 382 | 830 | 43 | 31.52% | 89.88% | 46.67% |
| | ANN+ANN | 356 | 614 | 69 | 36.70% | 83.76% | 51.04% |
| | CNN+SVM | 376 | 125 | 49 | 75.05% | 88.47% | 81.21% |
| | CNN+RF | 389 | 113 | 36 | 77.49% | 91.53% | 83.93% |
| | CNN+ANN | 360 | 153 | 65 | 70.18% | 84.71% | 76.76% |

Detection results of different methods in Region 6:

| Method Type | Method Name | TP | FP | FN | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|---|
| Our proposed method | CNN+CNN | 1028 | 29 | 49 | 97.26% | 95.45% | 96.34% |
| Existing methods using a single classifier | CNN | 1056 | 56 | 21 | 94.96% | 98.05% | 96.48% |
| | SVM | 1055 | 87 | 22 | 92.38% | 97.96% | 95.09% |
| | RF | 1059 | 85 | 18 | 92.57% | 98.33% | 95.36% |
| | ANN | 1032 | 157 | 45 | 86.80% | 95.82% | 91.09% |
| Two-stage methods using different combinations of classifiers | SVM+SVM | 1024 | 56 | 53 | 94.81% | 95.08% | 94.95% |
| | RF+RF | 997 | 45 | 80 | 95.68% | 92.57% | 94.10% |
| | ANN+ANN | 970 | 60 | 107 | 94.17% | 90.06% | 92.07% |
| | CNN+SVM | 1034 | 46 | 43 | 95.74% | 96.01% | 95.87% |
| | CNN+RF | 1036 | 52 | 41 | 95.22% | 96.19% | 95.70% |
| | CNN+ANN | 1009 | 70 | 68 | 93.51% | 93.69% | 93.60% |

F1-scores of different methods in the six regions, with the average over all regions:

| Method | Region 1 | Region 2 | Region 3 | Region 4 | Region 5 | Region 6 | Average |
|---|---|---|---|---|---|---|---|
| CNN+CNN | 95.19% | 95.79% | 96.47% | 95.05% | 91.12% | 96.34% | 94.99% |
| CNN | 84.58% | 93.23% | 91.39% | 92.14% | 69.85% | 96.48% | 87.95% |
| SVM | 78.12% | 91.64% | 88.52% | 88.23% | 49.19% | 95.09% | 81.80% |
| RF | 76.94% | 92.06% | 87.19% | 87.28% | 44.83% | 95.36% | 80.61% |
| ANN | 72.28% | 88.66% | 85.35% | 85.08% | 47.65% | 91.09% | 78.35% |
| SVM+SVM | 84.22% | 92.63% | 90.19% | 86.91% | 57.96% | 94.95% | 84.48% |
| RF+RF | 93.04% | 93.48% | 91.84% | 89.39% | 46.67% | 94.10% | 84.75% |
| ANN+ANN | 90.49% | 90.86% | 89.94% | 86.83% | 51.04% | 92.07% | 83.54% |
| CNN+SVM | 92.74% | 93.09% | 94.07% | 92.38% | 81.21% | 95.87% | 91.56% |
| CNN+RF | 93.32% | 93.72% | 94.59% | 93.63% | 83.93% | 95.70% | 92.48% |
| CNN+ANN | 90.27% | 90.75% | 90.90% | 88.48% | 76.76% | 93.60% | 88.46% |
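The Average column in the table above matches an unweighted arithmetic mean of the six per-region F1-scores, so every region counts equally regardless of how many trees it contains. A short Python check of that reading follows; `ROWS`, `unweighted_average`, and `mismatches` are hypothetical names used only for illustration.

```python
from statistics import mean

# Per-region F1-scores (%) and the reported average, copied from the table above.
ROWS = {
    "CNN+CNN": ([95.19, 95.79, 96.47, 95.05, 91.12, 96.34], 94.99),
    "CNN": ([84.58, 93.23, 91.39, 92.14, 69.85, 96.48], 87.95),
    "SVM": ([78.12, 91.64, 88.52, 88.23, 49.19, 95.09], 81.80),
    "RF": ([76.94, 92.06, 87.19, 87.28, 44.83, 95.36], 80.61),
    "ANN": ([72.28, 88.66, 85.35, 85.08, 47.65, 91.09], 78.35),
    "SVM+SVM": ([84.22, 92.63, 90.19, 86.91, 57.96, 94.95], 84.48),
    "RF+RF": ([93.04, 93.48, 91.84, 89.39, 46.67, 94.10], 84.75),
    "ANN+ANN": ([90.49, 90.86, 89.94, 86.83, 51.04, 92.07], 83.54),
    "CNN+SVM": ([92.74, 93.09, 94.07, 92.38, 81.21, 95.87], 91.56),
    "CNN+RF": ([93.32, 93.72, 94.59, 93.63, 83.93, 95.70], 92.48),
    "CNN+ANN": ([90.27, 90.75, 90.90, 88.48, 76.76, 93.60], 88.46),
}


def unweighted_average(scores):
    """Mean of the per-region F1-scores; every region gets equal weight."""
    return mean(scores)


def mismatches(rows, tol=0.006):
    """Return methods whose recomputed average differs from the reported
    value by more than tol (slack for two-decimal rounding)."""
    return [
        name
        for name, (scores, reported) in rows.items()
        if abs(unweighted_average(scores) - reported) > tol
    ]


if __name__ == "__main__":
    for name, (scores, reported) in ROWS.items():
        print(f"{name}: recomputed {unweighted_average(scores):.3f}%, reported {reported:.2f}%")
    print("mismatches:", mismatches(ROWS))
```

Every recomputed mean agrees with the reported average within two-decimal rounding, so `mismatches(ROWS)` returns an empty list. A tree-count-weighted or pooled F1 would give somewhat different figures, because the regions differ considerably in size (for example, 478 reference trees, TP + FN, in Region 1 versus 1,192 in Region 4).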

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Li, W.; Dong, R.; Fu, H.; Yu, L. Large-Scale Oil Palm Tree Detection from High-Resolution Satellite Images Using Two-Stage Convolutional Neural Networks. Remote Sens. 2019, 11, 11. https://doi.org/10.3390/rs11010011
