Abstract

The last decade has seen an exponential increase in the application of unmanned aerial vehicles (UAVs) to ecological monitoring research, though with little standardisation or comparability in methodological approaches and research aims. We reviewed the international peer-reviewed literature to explore the potential limitations on the feasibility of UAV use in the monitoring of ecological restoration, and examined how they might be mitigated to maximise the quality, reliability and comparability of UAV-generated data. We found little evidence of translational research applying UAV-based approaches to ecological restoration, with fewer than 3% (56) of 2133 published UAV monitoring studies centred on ecological restoration. Of the 48 of these studies that presented experimental data, > 65% had been published in the three years preceding this study. Where studies utilised UAVs for rehabilitation or restoration applications, there was a strong propensity for single-sensor monitoring using commercially available UAVs fitted with modest-resolution RGB sensors. There was a strong positive correlation between the use of complex and expensive sensors (e.g., LiDAR, thermal cameras, hyperspectral sensors) and the complexity of chosen image classification techniques (e.g., machine learning), suggesting that cost remains a primary constraint to the wide application of multiple or complex sensors in UAV-based research.
We propose that if UAV-acquired data are to represent the future of ecological monitoring, research requires a) consistency in the proven application of different platforms and sensors to the monitoring of target landforms, organisms and ecosystems, underpinned by clearly articulated monitoring goals and outcomes; b) optimization of data analysis techniques and the manner in which data are reported, undertaken in cross-disciplinary partnership with fields such as bioinformatics and machine learning; and c) the development of sound, reasonable and multi-laterally homogenous regulatory and policy frameworks supporting the application of UAVs to the large-scale and potentially trans-disciplinary ecological applications of the future.

1. Introduction

Despite the common public perception of unmanned aerial vehicles (UAVs, drones) as a recent innovation used predominantly for military applications [1], photography [2], or surveying and mapping [3], UAVs have been utilised as tools for biological management and environmental monitoring for nearly four decades [4]. Unmanned helicopters were employed for crop spraying in agricultural systems as early as 1990, and this method now accounts for >90% of all crop spraying in Japan [5]. UAVs are commonly used to conduct broad-acre monitoring of crop health and yield in Europe and the United States [6,7,8,9], and they have been used for atmospheric monitoring projects such as the measurement of trace compounds since the early 1990s [10,11]. While cost and availability have broadly constrained the use of UAVs in research projects, the last decade has seen an exponential increase in their application to ecological research.

Rapid advances in technology have produced smaller, cheaper drones capable of mounting a wider variety of sensors that can collect a greater diversity of data more rapidly [12,13,14]. In addition, improved battery technology has greatly increased the endurance of electric models [9,15]. Although highly specialised UAVs require significant financial and infrastructure investment, entry-level drones are commercially available at low cost and are increasingly capable of capturing meaningful data. For example, the cost of a UAV platform and sensor has been estimated to range from 2000 euros for less advanced systems up to 120,000 euros for large UAVs with hyperspectral sensors [13]. While even low-cost UAVs may represent a significant expenditure for smaller projects, the price is still low compared with the cost of obtaining remotely sensed data from manned aircraft or satellites. Correspondingly, the last decade in particular has seen UAVs employed in increasingly novel ways as tools to address complex ecological questions [16,17,18,19]. One field that has particularly benefited from this application is the monitoring of environmental rehabilitation and ecological restoration [20].

Traditional monitoring of ecological restoration is often undertaken manually and can involve transects or quadrats over large areas, frequently on sandy or rocky substrates in remote regions [21,22]. UAVs, on the other hand, are able to traverse large areas in very short periods of time [23,24], are unaffected by the difficulty of the terrain [25], and have negligible impact upon ecologically sensitive areas or species of interest [16,26,27]. UAVs can collect large numbers of high-resolution images even during short flights [28], allowing scientists to conduct virtual site surveys. In addition to the factory-standard digital Red-Green-Blue (RGB) cameras carried by most commercial UAVs, available sensors include multispectral and hyperspectral cameras, thermal imaging cameras, and LiDAR units [9]. Although the accessibility and capability of both UAVs and UAV-mounted sensors continue to improve [13,25], this improvement has not been matched by translational research and development for the effective, replicable and accurate use of UAVs in the monitoring of ecological restoration.

Historically, robots have been employed to undertake ‘the three D’s’: tasks that are considered too dirty, dangerous or dull for humans [29]. Although this also reflects the focus of early UAV application and research [4], we propose that the future direction of UAV work lies in another four D’s: the collection of detailed data over difficult or delicate terrain. However, full realization of the potential of UAVs in ecological monitoring requires more than technological innovation and improvement. The effective implementation of UAV technology by ecologists at the required scales and replicability is likely to be constrained by inconsistency in the application of different sensors to the monitoring of target landforms, organisms and ecosystems; a degree of uncertainty around the optimization of data analysis techniques and reporting to meet project-specific monitoring goals and objectives; and the absence of sound, reasonable and multi-laterally homogenous regulatory and policy frameworks aligned with increasing societal and scientific expectations and aspirations for ecological recovery. We discuss the potential implications of these limitations on the feasibility of UAV use in the monitoring of ecological restoration, and recommend how they may be addressed to maximise the quality, reliability and comparability of UAV-generated data.

2. Materials and Methods

We compiled a database of peer-reviewed literature comprising studies that employed UAVs in the monitoring of ecological recovery. Ecological recovery projects employ a wide range of terminology depending upon the particular goals of individual projects [30]. For the purposes of this review, we use the term ‘restoration’ following the widely accepted terminology of McDonald et al., defined as ‘the process of assisting the recovery of an ecosystem that has been damaged, degraded or destroyed’ [31,32]. Search terms were ‘UAV’, ‘UAS’, ‘RPAS’ or ‘drone’ AND ‘monitoring’ AND ‘restoration’, ‘rehabilitation’, ‘recovery’, ‘revegetation’ or ‘remediation’. Three databases were searched: Google Scholar (1950–2019), Web of Science (all databases, 1950–2019) and Scopus (all documents, all years). The results of the literature searches are presented in a PRISMA 2009 flow diagram (Figure S1).
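Read as Boolean logic, the search terms above form three groups of alternatives joined by AND. The sketch below assembles such a query string, assuming the grouping ("UAV" OR "UAS" OR "RPAS" OR "drone") AND "monitoring" AND (any recovery term). The quoting and operators are generic; Scopus, Web of Science and Google Scholar each apply their own field codes and syntax, so this is not the exact string submitted to each database.

```python
def or_group(terms):
    """Join alternative terms into a parenthesised OR group, quoting each term."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(groups):
    """Combine OR groups with AND: a record must match at least one term per group."""
    return " AND ".join(or_group(g) for g in groups)


platform_terms = ["UAV", "UAS", "RPAS", "drone"]
task_terms = ["monitoring"]
recovery_terms = ["restoration", "rehabilitation", "recovery", "revegetation", "remediation"]

query = build_query([platform_terms, task_terms, recovery_terms])
print(query)
```

Keeping each group as a separate list makes it straightforward to add synonyms (e.g., further platform terms) without rewriting the query by hand.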

Searches returned 121 results on Web of Science, 235 results on Scopus, and 61,553 results on Google Scholar. The large difference between databases appears to reflect how searches were conducted: Google Scholar returned numerous papers that contained the keywords only within the reference section, whereas this did not occur in searches of Web of Science or Scopus. Removal of duplicates and out-of-scope papers (e.g., UAV use in monitoring the restoration of building facades, or articles on the recovery of UAVs that had lost signal) resulted in 2133 articles relating to UAV use in monitoring broadly. These predominantly comprised articles on silvicultural or agricultural monitoring (e.g., 210 articles returned by “UAV forest monitoring”, 206 articles returned by “UAV agriculture monitoring”, and 238 articles returned by “UAV crop monitoring”). In total, 56 papers related to UAV use in ecological recovery monitoring, of which 48 studies presenting experimental data were included in analyses (Table S1).
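Of the screening steps above, duplicate removal across database exports is the one most easily automated; excluding out-of-scope papers remains a manual judgement. The exact de-duplication procedure used in this review is not described, so the following is a minimal sketch assuming each exported record carries a title and, optionally, a DOI, and treating two records as duplicates if they share a DOI or a normalised title. The records and the DOI shown are hypothetical.

```python
import re
import unicodedata


def normalise_title(title):
    """Lower-case, strip accents and punctuation, and collapse whitespace so the
    same article exported by different databases yields the same key."""
    text = unicodedata.normalize("NFKD", title)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = re.sub(r"[^a-z0-9 ]+", " ", text.lower())
    return " ".join(text.split())


def deduplicate(records):
    """Keep the first record seen for each DOI or normalised title."""
    seen_dois, seen_titles = set(), set()
    unique = []
    for rec in records:
        doi = (rec.get("doi") or "").strip().lower()
        title = normalise_title(rec.get("title", ""))
        if (doi and doi in seen_dois) or (title and title in seen_titles):
            continue  # already retained from another database
        unique.append(rec)
        if doi:
            seen_dois.add(doi)
        if title:
            seen_titles.add(title)
    return unique


# Hypothetical exports: the first two records are the same article.
records = [
    {"source": "Scopus", "title": "UAV monitoring of restored mine sites", "doi": "10.0000/example.1"},
    {"source": "Web of Science", "title": "UAV Monitoring of Restored Mine-Sites.", "doi": ""},
    {"source": "Google Scholar", "title": "Drone surveys of revegetation", "doi": None},
]
unique = deduplicate(records)
print([r["source"] for r in unique])
```

Matching on either key catches records where one database supplies a DOI and another does not.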

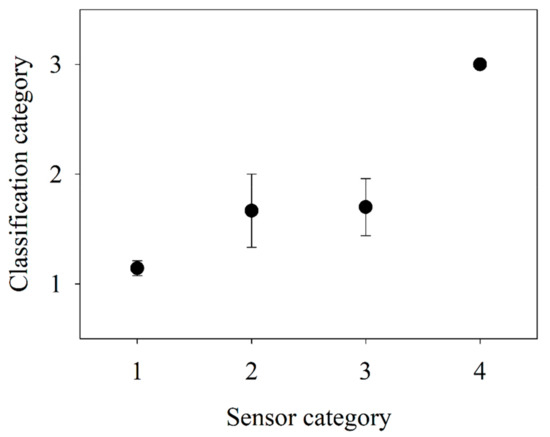

Publications were sorted into categorical variables based on publication type (e.g., studies presenting experimental data, theoretical development, literature reviews); ecological recovery terminology (restoration, rehabilitation, recovery, revegetation or remediation); pre-recovery land use (agricultural, natural); UAV type (e.g., multirotor, helicopter, fixed-wing or not stated); maximum number of sensors employed in a single flight (one, two, three or four); type of sensor(s) employed (RGB; modified-RGB, here referring to commercial RGB cameras that have been modified to detect near-infrared (NIR) light; multispectral; hyperspectral; thermal; LiDAR); and method of analysing captured data (manual, object-based, supervised machine learning). For the purposes of analysis, sensors were classified into four categories of increasing technological complexity, from ‘non-complex’ sensors, comprising RGB alone (category 1) and modified-RGB cameras (category 2), to ‘complex’ sensors, defined here as those designed to capture non-visible light, comprising multispectral sensors (category 3) and other sensors (hyperspectral, thermal, LiDAR; category 4). Similarly, analytical techniques were classified in increasing complexity from manual classification (category 1) to Object-Based Image Analysis (OBIA; category 2) and machine learning (category 3). While automated classification methods can be performed either at the pixel level or on image objects, the reviewed papers commonly used the term OBIA to denote the use of manually generated rulesets to classify images that had been segmented into image objects, most commonly in the program eCognition.
Although OBIA strictly denotes any approach that classifies images on the basis of image objects rather than individual pixels, and can be combined with any means of classification, we adopt this terminology when referring to any instance of user-defined image-object classification, for consistency with the published literature.
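Because a single study may report several sensors and several analysis methods, the category scheme above has to be reduced to one value per study. Consistent with Figure 1's "most advanced sensor type" and with all studies using some manual classification, one plausible reading is that each study is scored by its most complex sensor and its most complex analysis. The mapping below is a sketch under that assumption; the dictionary and function names are illustrative only.

```python
# Ordinal complexity categories as described in the text.
SENSOR_CATEGORY = {
    "RGB": 1,
    "modified-RGB": 2,
    "multispectral": 3,
    "hyperspectral": 4,
    "thermal": 4,
    "LiDAR": 4,
}

ANALYSIS_CATEGORY = {
    "manual": 1,
    "OBIA": 2,
    "machine learning": 3,
}


def study_categories(sensors, analyses):
    """Return (sensor complexity, analysis complexity) for one study, assuming the
    study is scored by the most complex sensor and analysis it reports."""
    sensor_cat = max(SENSOR_CATEGORY[s] for s in sensors)
    analysis_cat = max(ANALYSIS_CATEGORY[a] for a in analyses)
    return sensor_cat, analysis_cat


# Hypothetical study using RGB plus multispectral imagery, classified manually and by OBIA.
print(study_categories(["RGB", "multispectral"], ["manual", "OBIA"]))
```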

Pearson’s chi-square tests were used to test for differences in the frequency of studies among the categories of each categorical variable (SPSS Statistics 25, IBM, USA), with statistical significance determined by p ≤ 0.05. Multinomial logistic regression (SPSS Statistics 25, IBM, USA) was used to assess the relationship between categorised sensor complexity and categorised analytical complexity in published studies, with year of publication included as a control variable.
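The reported chi-square degrees of freedom (number of categories minus one) are consistent with goodness-of-fit tests against equal expected frequencies, although the text does not state this explicitly and the analyses were run in SPSS. Purely as an illustration under that assumption, the sketch below computes the statistic and its p-value in plain Python, using the series expansion of the regularised lower incomplete gamma function for the chi-square tail. The counts are hypothetical.

```python
import math


def chi2_sf(x, k):
    """Upper-tail probability P(X >= x) of a chi-square distribution with k degrees
    of freedom, via the series for the regularised lower incomplete gamma function."""
    if x <= 0:
        return 1.0
    s, z = k / 2.0, x / 2.0
    # P(s, z) = z^s e^(-z) / Gamma(s + 1) * sum_n z^n / ((s + 1)...(s + n))
    term, total, n = 1.0, 1.0, 0
    while term > 1e-16 * total:
        n += 1
        term *= z / (s + n)
        total += term
    lower = math.exp(s * math.log(z) - z - math.lgamma(s + 1.0)) * total
    return max(0.0, 1.0 - lower)


def chi_square_goodness_of_fit(observed):
    """Test whether counts are spread evenly across categories (equal expected counts)."""
    n = sum(observed)
    expected = n / len(observed)
    statistic = sum((o - expected) ** 2 / expected for o in observed)
    df = len(observed) - 1
    return statistic, df, chi2_sf(statistic, df)


# Hypothetical numbers of studies in three categories (illustration only).
stat, df, p = chi_square_goodness_of_fit([30, 12, 6])
print(f"chi2 = {stat:.2f}, d.f. = {df}, p = {p:.2g}")
```

The same `chi2_sf` function can be used to check a reported statistic against its stated significance level; for example, `chi2_sf(34.63, 2)` is of the order of 3 × 10⁻⁸, consistent with p < 0.001.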

3. Results

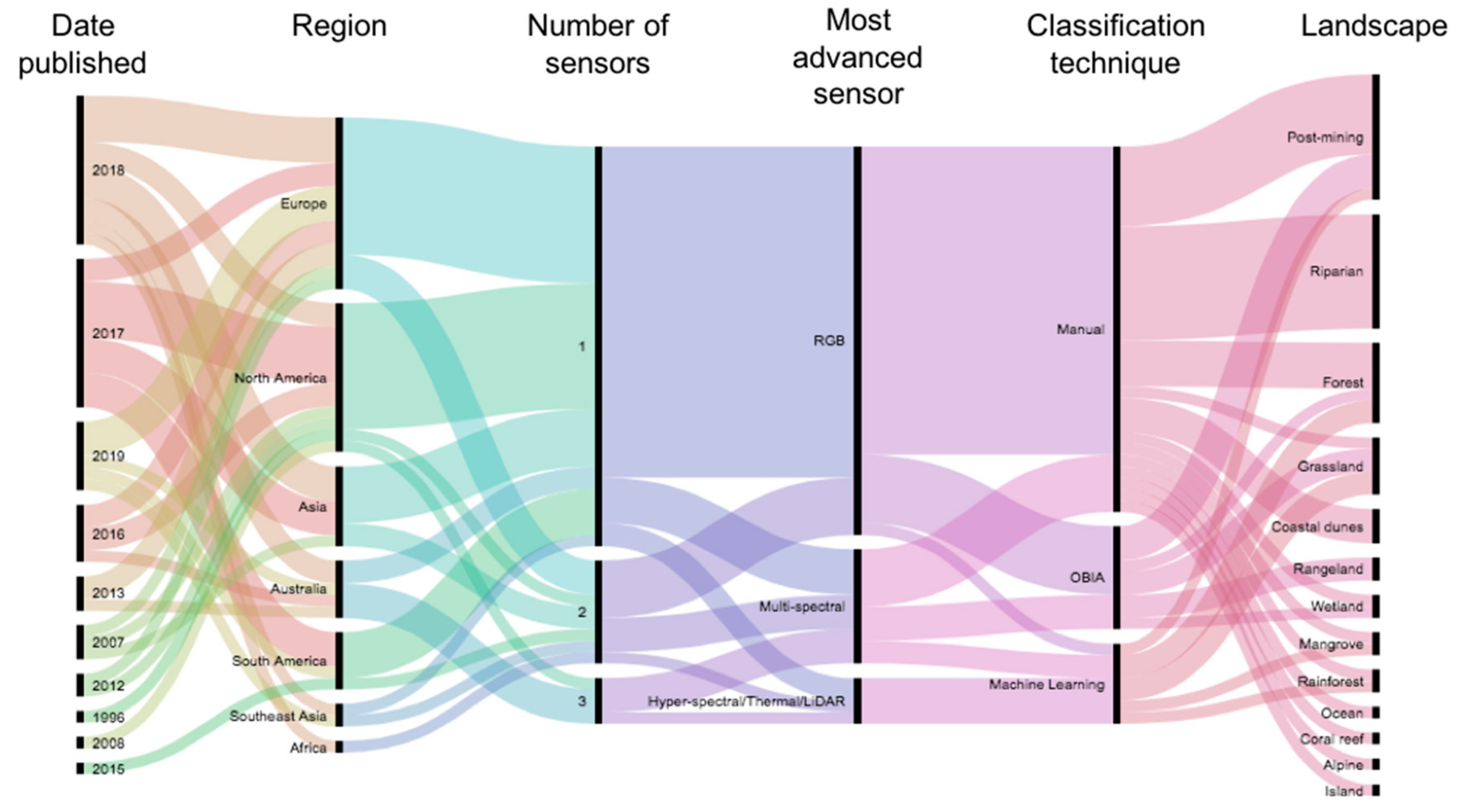

3.1. Date and Origin of Studies

Two thirds of the analysed literature had been published since 2017 (Figure 1), and only one publication predated 2007 (published in 1996). Studies were significantly more likely to originate from Europe (31% of papers) or North America (29%) than from other regions (χ2 = 23.46, d.f. = 5, p = 0.001). Far fewer studies originated from China (13%), Australia and Central and South America (10% each), southeast Asian countries (6%), and Africa (2%).

Figure 1.

Alluvial diagram illustrating the proportion of research publications (1996–2019), identified through a literature search of Scopus, Web of Science and Google Scholar, that provide empirical data on the use of UAVs in the monitoring of ecological recovery, in terms of date of publication, region of study interest, ecological recovery terminology utilised, maximum number of sensors utilised in a single flight, most advanced sensor type utilised during the study, captured imagery classification technique, and landscape of study interest.

3.2. Terminology

‘Restoration’ was the most commonly employed terminology in the literature analysed (> 70% of published studies) followed by ‘recovery’ (ca. 30%), while ‘rehabilitation’, ‘revegetation’, and ‘remediation’ were infrequently used (8–13% in each case; Figure 1). Studies frequently employed multiple terms in relation to the same ecological monitoring project, with a third of studies using two or more terms (most commonly ‘restoration’ and ‘recovery’).

3.3. Platform and Sensors

Fixed-wing (42%) and multirotor (38%) UAVs were used in relatively even proportions (including two articles that used both), and these platforms were significantly more common in monitoring than other platforms (χ2 = 49.38, d.f. = 5, p < 0.001). Other studies employed unmanned helicopters (two studies) or paramotors (one study), while 10% of studies presented no information about the type of UAV utilised (Figure 1).

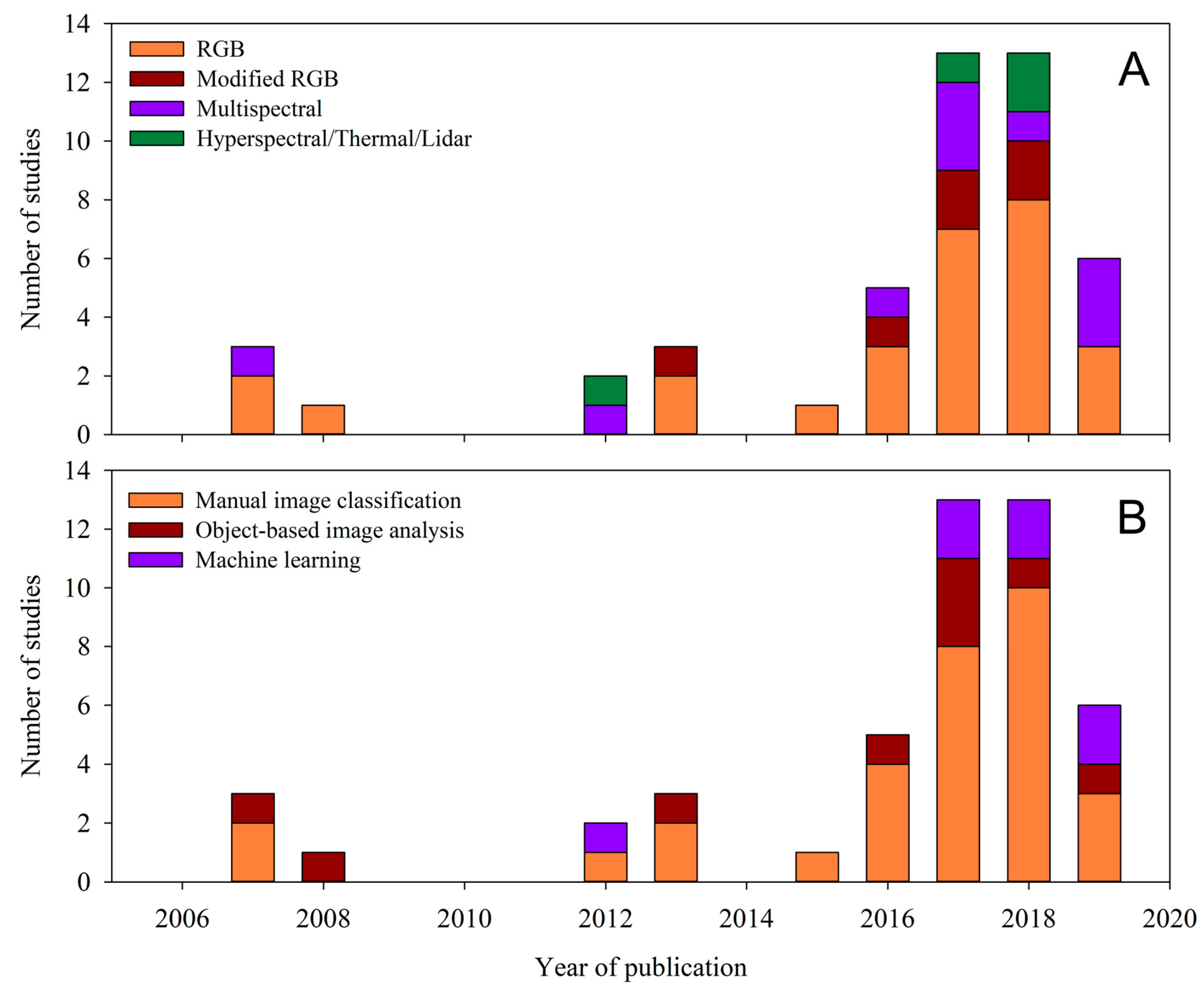

Studies overwhelmingly employed only a single sensor (73%; χ2 = 34.63, d.f. = 2, p < 0.001). Although 13 studies utilised multiple sensors in data capture, only five employed two sensors simultaneously during flights, and only a single study demonstrated the simultaneous use of more than two sensors (however, this comprised two duplicate sensor pairs). RGB cameras were by far the most commonly utilised sensors in the monitoring of ecological recovery (employed in nearly 85% of studies; χ2 = 49.38, d.f. = 5, p < 0.001), while more complex non-RGB sensors (e.g., LiDAR, hyperspectral sensors) were employed in only 29% of studies. Where more complex non-RGB sensors were used, they were often employed in combination with RGB cameras (half of the 14 studies). RGB cameras were the only sensor employed in nearly 60% of the literature, while true multispectral sensors were employed in 11 studies (23% of studies), hyperspectral sensors in three studies, and thermal cameras and LiDAR sensors in one study each (Figure 2).

Figure 2.

Number of analysed studies employing sensors of varying complexity (A) and captured-image classification techniques of varying complexity (B), by year of publication, for UAV-based studies monitoring ecological recovery. * Data were collected up until March 2019; values for 2019 therefore cover only the first quarter of that year.

3.4. Classification and Processing of Captured Data

While all studies utilised manual image classification, the majority (67% of studies; χ2 = 24.12, d.f. = 3, p < 0.001) used manual classification alone, without any automated methods. More complex methods of image classification comprised OBIA (19%) and machine learning (15%), in both cases with accuracy assessments undertaken manually. Almost all studies utilising OBIA undertook classification in the eCognition environment (89%; Figure 2), while studies utilising supervised machine learning employed eight different algorithms with little comparability in methodology. No studies compared the results of classification by machine learning with those of OBIA.

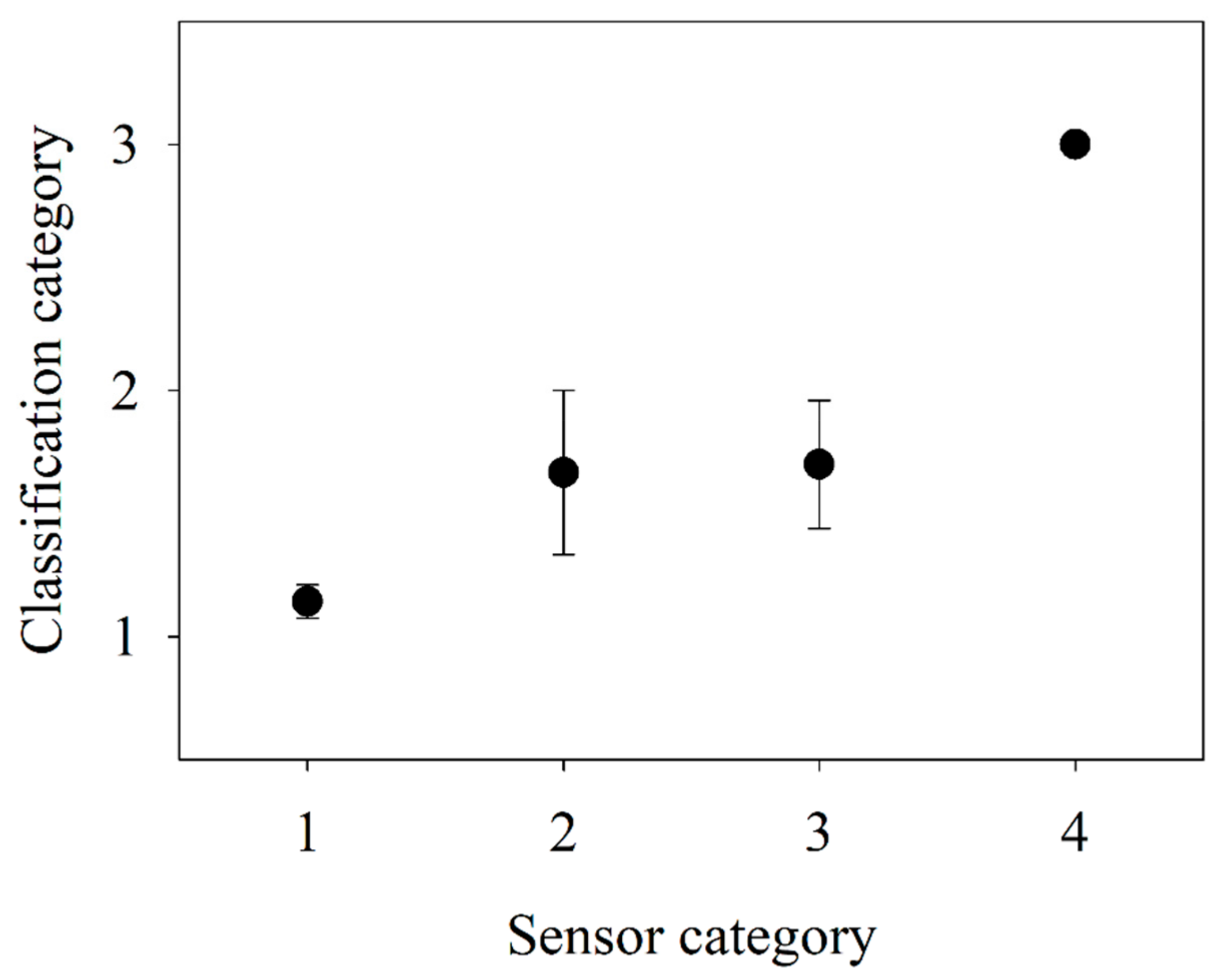

Multinomial logistic regression indicated a statistically significant relationship between sensor complexity and the complexity of classification, with year as a control variable (χ2 = 43.68, d.f. = 24, p = 0.008). Likelihood ratio tests indicated that the effect of sensor complexity on the complexity of analytics was significant (χ2 = 30.11, d.f. = 6, p < 0.001), while the effect of year was not (χ2 = 16.34, d.f. = 18, p = 0.569), suggesting that this trend was unlikely to reflect the application of more technically complex platforms by more recent studies (Figure 3).
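The likelihood ratio tests above compare the fit of a model with and without a given predictor: the statistic is twice the difference in log-likelihoods, referred to a chi-square distribution whose degrees of freedom equal the number of parameters dropped. All three tests reported here have even degrees of freedom, for which the chi-square tail has the closed form exp(−x/2) Σ_{i<k/2} (x/2)^i / i!, so the reported p-values can be re-derived from the reported statistics without a statistics package. This is a checking sketch, not a reproduction of the SPSS model fit; the log-likelihood arguments in the test are hypothetical.

```python
import math


def chi2_sf_even_df(x, k):
    """Upper-tail chi-square probability for an even number of degrees of freedom k,
    using the closed form exp(-x/2) * sum_{i < k/2} (x/2)^i / i!."""
    if k <= 0 or k % 2:
        raise ValueError("k must be a positive even integer")
    half = x / 2.0
    term, total = 1.0, 1.0
    for i in range(1, k // 2):
        term *= half / i  # (x/2)^i / i!, built up incrementally
        total += term
    return math.exp(-half) * total


def likelihood_ratio_test(loglik_reduced, loglik_full, df):
    """LR statistic 2 * (logL_full - logL_reduced) and its p-value (even df only)."""
    statistic = 2.0 * (loglik_full - loglik_reduced)
    return statistic, chi2_sf_even_df(statistic, df)


# Re-derive p-values from the statistics reported in the text.
for label, stat, df in [("model", 43.68, 24), ("sensor complexity", 30.11, 6), ("year", 16.34, 18)]:
    print(f"{label}: p = {chi2_sf_even_df(stat, df):.3g}")
```

Run on the reported statistics, this gives p of approximately 0.008 for the overall model, well below 0.001 for sensor complexity, and approximately 0.57 for year, matching the values given above.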

Figure 3.

Association between categorised sensor complexity and categorised classification technique complexity in analysed studies of UAV-based monitoring of ecological recovery. Letters denote the results of pairwise tests among sensor complexity categories; categories followed by the same letter are not significantly different at p = 0.05.

4. Discussion

The application of UAVs in plant health monitoring is well established for agriculture [9,33,34,35,36] and forestry [14,37,38]. However, there is little evidence of translational research applying these approaches to ecological restoration, with studies of UAV use in the monitoring of ecological recovery comprising less than 3% (56 out of 2133 studies) of the UAV monitoring literature. Importantly, we found no evidence that UAV-assisted analysis of plant condition has been validated, despite claims to this effect by many in the UAV vegetation assessment industry. This low representation may reflect the nascent state of UAV use for ecological monitoring, as > 65% of all literature analysed was published in the three years preceding this study. However, trends observed in the growing body of literature in this field suggest a degree of regional bias, poor reporting of project-specific monitoring goals and outcomes, inconsistency in the application of different sensors to achieving monitoring goals, and low comparability between image processing techniques. We propose that if UAVs are to represent the future of ecological monitoring, research requires a) greater consistency in the application of different platforms and sensors to the monitoring of target landforms, organisms and ecosystems, underpinned by clearly articulated monitoring goals and outcomes; b) optimization of data analysis techniques and the manner in which data are reported, undertaken in cross-disciplinary partnership with fields such as bioinformatics and machine learning; c) the development of sound, reasonable and multi-laterally homogenous regulatory and policy frameworks supporting the application of UAVs to the large-scale and potentially trans-boundary ecological applications of the future; and d) links with plant physiologists to validate UAV-based interpretations of plant health and vegetation condition.

4.1. Regional Bias in UAV Application

Despite the declining costs of UAVs and their sensor payloads, the use of UAVs in restoration monitoring is still geographically limited. Much of the literature analysed (ca. 58% of studies) originated from Europe and North America, with most of the remaining studies from China, Australia, and Central and South America (Figure 1). However, given the relatively low level of skill required for UAV use [39], UAVs are a viable prospect for monitoring restoration and conservation projects in developing countries [40]. The coming years are likely to see a far greater global application of UAV monitoring research, particularly as developing countries begin to take advantage of the increasing availability and affordability of UAVs.

4.2. A Need to Realise the Full Potential of UAV Platforms and Sensors in Monitoring Ecological Recovery

Despite the increasing complexity, affordability and reliability of commercially available UAVs, their use in ecological recovery monitoring lags behind their rapidly growing, novel application in other ecological contexts such as fauna monitoring [40,41,42]. Where studies have utilised UAVs for rehabilitation or restoration applications, there continues to be a propensity for single-sensor monitoring using commercially available drones fitted with modest-resolution RGB sensors (Figure 1). Fixed-wing and multirotor UAVs were used in similar numbers, perhaps reflecting the different scales of operation to which each is suited. While multirotor UAVs have several advantages over fixed-wing platforms, such as reduced vibration and a smaller area required for take-off and landing, the higher cruise altitude and greater endurance of fixed-wing UAVs make them a superior choice when monitoring large areas that do not require sub-centimetre resolution [13]. Relatively few of the articles sourced (29% of studies) employed complex sensors or multiple-sensor assemblies (Figure 1). The strong correlation between the application of these complex sensors and increasingly detailed image analytical techniques (Figure 3), both of which are comparatively expensive technologies, suggests that cost may be a primary constraint to their wide application in research.
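The altitude/resolution trade-off between platforms follows from the ground sampling distance (GSD), which for a nadir-pointing frame camera over flat terrain scales linearly with flight altitude. The sketch below applies the standard GSD calculation; the camera parameters are hypothetical (roughly those of a 1-inch RGB sensor) and are not taken from the reviewed studies.

```python
def ground_sampling_distance_cm(sensor_width_mm, focal_length_mm, image_width_px, altitude_m):
    """GSD in cm per pixel for a nadir-pointing frame camera over flat ground."""
    return (sensor_width_mm * altitude_m * 100.0) / (focal_length_mm * image_width_px)


def altitude_for_gsd_m(target_gsd_cm, sensor_width_mm, focal_length_mm, image_width_px):
    """Flight altitude (m) at which the camera achieves the target GSD."""
    return (target_gsd_cm * focal_length_mm * image_width_px) / (sensor_width_mm * 100.0)


# Hypothetical camera: 13.2 mm sensor width, 8.8 mm focal length, 5472 px image width.
SENSOR_W_MM, FOCAL_MM, IMAGE_W_PX = 13.2, 8.8, 5472

for altitude in (30, 60, 120):
    gsd = ground_sampling_distance_cm(SENSOR_W_MM, FOCAL_MM, IMAGE_W_PX, altitude)
    footprint_m = gsd * IMAGE_W_PX / 100.0  # across-track width of one image on the ground
    print(f"{altitude:>4} m: GSD {gsd:.2f} cm/px, footprint {footprint_m:.0f} m wide")

print(f"1 cm GSD requires flying at or below {altitude_for_gsd_m(1.0, SENSOR_W_MM, FOCAL_MM, IMAGE_W_PX):.1f} m")
```

With these values, sub-centimetre GSD requires flying below about 36 m, whereas at 120 m the GSD is about 3.3 cm but each image covers a swath about 180 m wide, which is the regime in which the endurance of fixed-wing platforms pays off.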

RGB imagery represents the most rudimentary remote sensing option for UAV use, but the near-universal application of RGB sensors in the literature analysed highlights their significant utility (and accessibility) for restoration monitoring. For example, low-altitude high-resolution RGB imagery has been used to accurately estimate tree height, crown diameter and biomass in forested areas [14,43,44,45], and has been employed to determine the presence or absence of target plant species [46,47,48,49]. One particularly attractive feature of RGB imagery is its capacity to be stitched into large orthomosaics using, for example, Structure from Motion (SfM) techniques [50]. Orthomosaics retain the ground sampling distance (GSD) of initial images, and high-resolution imagery provides a high level of detail facilitating visual recognition and classification of plant and animal species or individuals [23]. SfM technology also allows for the creation of digital elevation models (DEMs) from RGB images. DEMs created in this manner can approach the accuracy of more expensive and complex sensors such as LiDAR, and have been employed for measurements of tree height and biomass estimation [51,52]. Studies have used DEMs generated from UAV imagery for the creation of slope maps [53], and for the monitoring of the stability of tailings stockpiles [54]. Given the accuracy of these DEMs and the cost of LiDAR surveys, geomorphological mapping from RGB imagery obtained from UAVs is a viable alternative [3].
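Slope maps such as those mentioned above are derived from a DEM by estimating the elevation gradient at each cell. The sketch below uses simple central differences on a regular grid; GIS packages commonly use Horn's 3 × 3 weighted method instead, and the method used in [53] is not specified here. The DEM values are hypothetical.

```python
import math


def slope_degrees(dem, cell_size_m):
    """Slope in degrees for each interior cell of a regular DEM grid, using central
    differences in x (columns) and y (rows). Edge cells are left as None."""
    rows, cols = len(dem), len(dem[0])
    slope = [[None] * cols for _ in range(rows)]
    for i in range(1, rows - 1):
        for j in range(1, cols - 1):
            dz_dx = (dem[i][j + 1] - dem[i][j - 1]) / (2.0 * cell_size_m)
            dz_dy = (dem[i + 1][j] - dem[i - 1][j]) / (2.0 * cell_size_m)
            # The slope angle is the arctangent of the gradient magnitude.
            slope[i][j] = math.degrees(math.atan(math.hypot(dz_dx, dz_dy)))
    return slope


# Hypothetical 3 x 3 DEM (elevations in m) on a 0.5 m grid, rising 0.5 m per column.
dem = [
    [10.0, 10.5, 11.0],
    [10.0, 10.5, 11.0],
    [10.0, 10.5, 11.0],
]
print(round(slope_degrees(dem, 0.5)[1][1], 1))
```

Because UAV-derived DEMs can have very fine cell sizes, small elevation errors can produce noisy slopes; resampling or smoothing the DEM before computing slope is a common practical choice.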

We found few examples of fauna monitoring using UAVs in a restoration context, potentially reflecting the global paucity of literature relating to fauna recovery following disturbance [55]. However, RGB-equipped UAVs have been employed to assess the distribution and density of fauna that are challenging to survey on foot, such as orangutans (Pongo abelii) [56] and seabird colonies [57,58]. Their application appears most suitable for the covert observation and recording of ecological, behavioural and demographic data from fauna communities in poorly accessible habitats [59,60,61], and UAV-derived count data can be up to twice as accurate as ground-based counts [62,63]. Although the ontogenetic and species-specific responses of fauna to UAVs remain unknown [64,65], particularly with regard to behavioural responses following interactions with UAVs [66,67,68], UAV use in fauna monitoring seems likely to expand rapidly as technology improves and costs decline [42]. For example, many GPS units attached to animals for research are accessed by remote download, and UAVs may facilitate both locating the target via its VHF signal and remotely downloading GPS data without the need to approach, or disturb, the animal.

Multi- and hyperspectral imagery has been employed to successfully discriminate between horticultural and agricultural crops on the basis of spectral signature [18,69,70,71], to estimate leaf carotenoid content in vineyards [72], to determine plant water stress in commercial citrus orchards [73], to assess the health of pest-afflicted crops [74], and to estimate the leaf area index of wheat [7]. However, there are few examples of multi- or hyperspectral sensor application in native species ecological recovery monitoring (only 23% of studies used a multispectral sensor, and only 6% used a hyperspectral sensor), possibly due to the markedly higher cost of these sensors compared with RGB sensors. Notably, commercial RGB cameras can be modified to detect red-edge and near-infrared light in a similar fashion to multispectral sensors, and this technique has been used effectively, for example, to map the distribution and health of different species in vegetation surveys of forested areas and peat bogs [18,20]. Combinations of RGB and multispectral imagery may allow high levels of accuracy in species-level discrimination, which would be of significant utility for the identification and potential classification of small plants (e.g., seedlings), the estimation of invasive plant cover, or the targeted mapping of rare or keystone species in restoration projects.
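One widely used way of turning red and near-infrared bands (from multispectral or NIR-modified RGB cameras) into a vegetation measure is the Normalised Difference Vegetation Index, NDVI = (NIR − Red) / (NIR + Red). The text does not state which indices the cited studies used, and the 0.3 vegetation threshold and the reflectances below are illustrative assumptions that would need calibrating against ground truth, for example when estimating plant cover.

```python
def ndvi(nir, red):
    """Normalised Difference Vegetation Index for one pixel's reflectances."""
    denominator = nir + red
    if denominator == 0:
        return 0.0  # no signal in either band; treat as non-vegetated
    return (nir - red) / denominator


def vegetation_cover_fraction(nir_band, red_band, threshold=0.3):
    """Fraction of pixels whose NDVI exceeds a (hypothetical) vegetation threshold."""
    values = [ndvi(n, r) for n, r in zip(nir_band, red_band)]
    return sum(v > threshold for v in values) / len(values)


# Hypothetical reflectances for four pixels: two vegetated, two bare ground.
nir = [0.45, 0.50, 0.25, 0.22]
red = [0.05, 0.08, 0.20, 0.21]
print(vegetation_cover_fraction(nir, red))
```

Healthy vegetation reflects strongly in the NIR and absorbs red light, so it yields high NDVI, while bare ground yields values near zero.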

Where multiple sensors were employed, studies often required multiple flights, with sensor payloads exchanged between flights [75,76,77]. Thermal imagery was used by Iizuka et al. [76] to monitor thermal trends in peat forest, and LiDAR/hyperspectral fusion has been shown to be more effective in vegetation classification than either sensor alone [77]. UAVs equipped with thermal sensors have been used to locate and monitor fauna species such as deer, kangaroos and koalas [78,79], including large mammals of conservation concern such as white rhinos (Ceratotherium simum) [80]. Thermal sensors have also been applied to detecting water stress in citrus orchards [73], olive orchards [6] and barley fields [81], although only at small scales (<32 ha). More advanced sensors such as multispectral and thermal cameras may also have utility in monitoring disturbance-related environmental issues important for some rehabilitation and restoration scenarios, such as methane seepage, acid mine drainage and collapse hazards in near-surface underground mining [82]. However, further work is required to translate thermal imagery studies conducted in agricultural or forestry settings to a restoration context.
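One common way in which thermal imagery is converted into a water-stress measure is the empirical Crop Water Stress Index (CWSI), which scales canopy temperature between a wet (fully transpiring) and a dry (non-transpiring) reference temperature. The cited studies may have used other indices; the reference and canopy temperatures below are hypothetical.

```python
def crop_water_stress_index(canopy_temp_c, wet_ref_c, dry_ref_c):
    """Empirical CWSI: 0 = unstressed (as cool as a wet reference),
    1 = fully stressed (as hot as a non-transpiring dry reference)."""
    if dry_ref_c <= wet_ref_c:
        raise ValueError("dry reference must be warmer than wet reference")
    cwsi = (canopy_temp_c - wet_ref_c) / (dry_ref_c - wet_ref_c)
    return min(1.0, max(0.0, cwsi))  # clamp sensor noise outside the physical range


# Hypothetical canopy temperatures (deg C) with wet and dry references of 26 and 36 deg C.
temps = [27.0, 30.0, 33.5]
print([round(crop_water_stress_index(t, 26.0, 36.0), 2) for t in temps])
```

Translating such an index to restoration sites would require reference temperatures appropriate to native species and site conditions, which is part of the further work noted above.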

4.3. Analysis and Reporting of Restoration Monitoring Data

Comparison of the data gathered by UAVs is limited by considerable disparity in the analytical and processing methods used by different studies. While manual classification of imagery is generally undertaken comparably across studies, it can be slow and time-consuming and is prone to operator error, offsetting the time benefits of UAV-based monitoring [28]. Automated image classification is therefore a clear direction for the technology, maximising the utility, efficiency and cost-effectiveness inherent in UAV monitoring. Importantly, techniques such as OBIA and machine learning have already proven to be of significant utility in UAV monitoring [35].

In OBIA, images are split into small “objects” on the basis of spectral similarity, and these objects are then classified using a defined set of rules [35]. The advantage of this method is that objects can be classified on the basis of contextual information such as shape, size and texture, which supplements their spectral characteristics [83]. These factors make object-based image analysis an extremely useful tool for restoration monitoring, as species-level classification can be undertaken on imagery of sufficiently high resolution. The first OBIA software on the market was eCognition [83], and it continues to be employed almost ubiquitously (89% of studies using OBIA). eCognition has been employed in a diverse range of applications, for example to identify and count individual plants of species of interest within a study area from multispectral UAV imagery [84], to map weed abundance in commercial crops [85,86], and to identify infestations of oak splendour beetles in oak trees [38]. Studies employing eCognition for automated imagery classification in the context of ecological recovery generally undertook relatively simple tasks such as vegetation classification and counting [20,46,47], generating maps of vegetation cover over time [87], and identifying terraces on a plateau [88]. However, some recent studies have broadened the scope of their classification processes and assessed additional information to provide a greater level of detail. For example, while many studies identified areas of bare ground, Johansen et al. [89] further classified bare ground based on topographical position. While most studies on species discrimination identified target species only, Baena et al. [18] further separated the keystone species Prosopis pallida (Algarrobo) into distinct classes representing tree health, supported by ground truthing.
Even without the additional spectral bands offered by multispectral imagery, object-based image analysis is a powerful classification tool in some applications, such as the classification of coastal fish nursery grounds by seabed cover type [90]. Notably, this study compared OBIA with non-object-based classification and two different machine learning methods, and found OBIA to be the most accurate. Given the ability of OBIA to combine the outputs of multiple sensors into one project for classification, the technique is likely to become increasingly useful as more sensors become practical for UAV-based deployment. OBIA therefore represents a powerful tool for reducing the workload required for repeated monitoring of restoration areas.
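The two OBIA stages described above, segmentation into spectrally similar objects followed by rule-based classification of those objects, can be illustrated with a toy pure-Python sketch. eCognition's segmentation algorithms and rulesets are proprietary and far more sophisticated; the simple region-growing segmentation, the NDVI values and the thresholds below are all hypothetical and are not eCognition's method.

```python
from collections import deque


def segment(grid, tolerance):
    """Very simple region-growing segmentation: 4-connected pixels whose value lies
    within `tolerance` of the object's seed pixel are merged into one image object."""
    rows, cols = len(grid), len(grid[0])
    labels = [[-1] * cols for _ in range(rows)]
    objects = []
    for r in range(rows):
        for c in range(cols):
            if labels[r][c] != -1:
                continue  # pixel already belongs to an object
            seed, obj_id = grid[r][c], len(objects)
            labels[r][c] = obj_id
            queue, pixels = deque([(r, c)]), []
            while queue:
                i, j = queue.popleft()
                pixels.append((i, j))
                for ni, nj in ((i + 1, j), (i - 1, j), (i, j + 1), (i, j - 1)):
                    if (0 <= ni < rows and 0 <= nj < cols and labels[ni][nj] == -1
                            and abs(grid[ni][nj] - seed) <= tolerance):
                        labels[ni][nj] = obj_id
                        queue.append((ni, nj))
            values = [grid[i][j] for i, j in pixels]
            objects.append({"id": obj_id, "size": len(pixels),
                            "mean": sum(values) / len(values)})
    return labels, objects


def classify(obj, bare_threshold=0.2, min_canopy_px=3):
    """Hypothetical ruleset: a spectral rule (mean NDVI) followed by a size rule,
    the kind of object-level context a per-pixel classifier does not use."""
    if obj["mean"] < bare_threshold:
        return "bare ground"
    if obj["size"] < min_canopy_px:
        return "small plant"
    return "canopy"


# Hypothetical NDVI values for a tiny 3 x 4 image.
ndvi_grid = [
    [0.80, 0.82, 0.10, 0.12],
    [0.79, 0.81, 0.11, 0.60],
    [0.10, 0.12, 0.09, 0.10],
]
labels, objects = segment(ndvi_grid, tolerance=0.1)
print([(o["id"], o["size"], classify(o)) for o in objects])
```

The isolated high-NDVI pixel is labelled a small plant rather than canopy purely because of its object size, which a per-pixel spectral rule could not distinguish.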

Machine learning can be separated into supervised and unsupervised methods [17], both of which have been applied to UAV surveys to good effect. Unsupervised machine learning relies on user input to define how many classes an image should be separated into, and then assigns pixels to classes such that pixels within a class are as similar as possible and the classes are as distinct from one another as possible [17]. This technique has been applied successfully to UAV imagery to classify vernal pool habitats [17], to classify vegetation in mountain landscapes [91], and to detect deer in forested areas [78]. Supervised classification relies on the input of selected examples as training data, but provides a higher level of accuracy [17]. However, supervised learning requires sufficient training data to capture the variance of the targets, and training samples should not be spatially dependent [92]. For example, one study that assessed the automated identification of cars from UAV imagery required 1.2 million vehicle images of a common size as training data to minimise false negatives [93]. Negative examples must also be provided. Despite these requirements, machine learning is a powerful tool for automated classification and has been used in UAV-based studies to accurately classify tree crowns in orchards [6,71], to identify different species of trees and weeds [94,95], to detect algae in river systems [96], to estimate the biomass of wheat and barley crops [34], and to identify and map riparian invasive species [84]. Several of the reviewed studies (15%) applied machine learning techniques to ecological recovery projects, reporting accuracies of over 90% in creating classified vegetation maps of forests [76,97] and restored quarries [98]. Overall, supervised classification returns superior levels of accuracy compared with unsupervised classification, particularly in cases with minimal spectral differentiation [35].
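k-means clustering (Lloyd's algorithm) is one common example of the unsupervised approach described above: the user chooses the number of classes k, and pixels are iteratively assigned to the nearest cluster mean, which minimises within-class squared distances. Whether the cited studies used k-means specifically is not stated; the sketch below is pure Python, and the (red, NIR) pixel values are hypothetical.

```python
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two band-value tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def assign(pixels, centres):
    """Label each pixel with the index of its nearest cluster centre."""
    return [min(range(len(centres)), key=lambda c: squared_distance(p, centres[c]))
            for p in pixels]


def kmeans(pixels, k, max_iterations=100, seed=0):
    """Lloyd's k-means clustering of pixels (tuples of band values) into k classes."""
    rng = random.Random(seed)
    centres = rng.sample(pixels, k)  # initial centres drawn from the data
    for _ in range(max_iterations):
        labels = assign(pixels, centres)
        new_centres = []
        for c in range(k):
            members = [p for p, label in zip(pixels, labels) if label == c]
            if members:
                # Move the centre to the per-band mean of its member pixels.
                new_centres.append(tuple(sum(band) / len(members) for band in zip(*members)))
            else:
                new_centres.append(centres[c])  # keep the centre of an empty cluster
        if new_centres == centres:
            break  # converged: assignments no longer change the centres
        centres = new_centres
    return assign(pixels, centres), centres


# Hypothetical (red, NIR) reflectances: three vegetated and three bare-ground pixels.
pixels = [(0.05, 0.45), (0.06, 0.50), (0.04, 0.48), (0.20, 0.25), (0.22, 0.24), (0.21, 0.26)]
labels, centres = kmeans(pixels, k=2)
print(labels)
```

The resulting clusters are unlabelled: the analyst must still decide which cluster corresponds to vegetation, which is one reason supervised methods tend to be preferred where training data are available.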

While UAVs can rapidly and effectively map restoration areas with multiple different sensors, the increasingly large volumes of data gathered make image classification a complex task. Although machine learning and other automated methods of image classification can overcome this limitation, there appears to be little consistency in the approaches that have been employed to automate image classification. We found that studies used a relatively even mix of machine learning and OBIA approaches, with little comparability or uniformity in the specific software or methodology used. Only three studies provided a comparison of different methods of automated classification. While eCognition was by far the most common software package employed in automated image classification, it was generally used without reference to the methodological approaches of previous studies, and few articles considered other potential options for classification. We propose that the apparent lack of clarity surrounding the most appropriate methodological approaches to image classification in ecological monitoring could be addressed through greater collaboration between restoration ecologists and specialised data analysts, remote sensing experts and computer scientists, to ensure that the most accurate and efficient methods are used. Additionally, consideration should be given to whether UAV-based remote sensing is the most appropriate method of data acquisition for the specific requirements of each project.
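Comparing classification methods, as recommended above, requires common accuracy measures computed on the same independent validation data. The text does not prescribe particular metrics; overall accuracy and Cohen's kappa from a confusion matrix are shown here as commonly reported examples. The two confusion matrices, standing in for an OBIA and a machine learning classification of the same validation points, are hypothetical.

```python
def overall_accuracy(matrix):
    """Proportion of validation samples on the diagonal of a confusion matrix
    (rows = reference class, columns = predicted class)."""
    total = sum(sum(row) for row in matrix)
    return sum(matrix[i][i] for i in range(len(matrix))) / total


def cohens_kappa(matrix):
    """Agreement beyond that expected by chance, given the row and column totals."""
    total = sum(sum(row) for row in matrix)
    observed = overall_accuracy(matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(col) for col in zip(*matrix)]
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    return (observed - expected) / (1 - expected)


# Hypothetical two-class (vegetation, bare ground) results on the same 100 validation points.
obia_matrix = [[45, 5], [5, 45]]
ml_matrix = [[48, 2], [10, 40]]
for name, m in (("OBIA", obia_matrix), ("machine learning", ml_matrix)):
    print(f"{name}: OA = {overall_accuracy(m):.2f}, kappa = {cohens_kappa(m):.2f}")
```

Reporting the full confusion matrix alongside summary statistics allows readers to recompute whichever measures they need for cross-study comparison.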

The increasing use of UAV-based LiDAR is also noteworthy: it is rapidly becoming a practical alternative to UAV-based imagery where penetration of water or the plant canopy is needed to image the underlying topography [99]. Although laser scanning (i.e., LiDAR) is currently more commonly carried by non-UAV remote sensing platforms (e.g., manned aircraft and satellites), continued development of these sensors will improve the accessibility of drone laser scanning as a surveying option, and thus greatly improve its utility for UAV applications in the future [99].
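Because some laser pulses pass through gaps in the canopy and return from the ground, a single LiDAR survey can separate terrain elevation from vegetation height. A canopy height model is commonly derived as a digital surface model (DSM) minus a digital terrain model (DTM). The sketch below is a deliberately crude illustration under a loud assumption: it treats the lowest return in each grid cell as ground and the highest as the surface. Real workflows use dedicated ground-filtering algorithms and interpolate cells that receive no ground return. The function names and point coordinates are hypothetical.

```python
import math
from collections import defaultdict


def grid_returns(points, cell_size):
    """Bin LiDAR returns (x, y, z in metres) into square cells keyed by (column, row)."""
    cells = defaultdict(list)
    for x, y, z in points:
        cells[(math.floor(x / cell_size), math.floor(y / cell_size))].append(z)
    return cells


def canopy_height_model(points, cell_size):
    """Per-cell canopy height as surface elevation minus ground elevation (DSM - DTM)."""
    chm = {}
    for cell, elevations in grid_returns(points, cell_size).items():
        dtm = min(elevations)  # crude ground estimate: lowest return in the cell
        dsm = max(elevations)  # surface estimate: highest return (canopy top if vegetated)
        chm[cell] = dsm - dtm
    return chm


# Hypothetical returns: a vegetated cell where some pulses reach the ground
# beneath the canopy, and an adjacent bare cell.
points = [
    (0.5, 0.5, 100.0),  # ground return under canopy
    (1.2, 0.8, 106.0),  # mid-canopy return
    (1.5, 1.5, 112.5),  # canopy-top return
    (2.5, 0.5, 100.2),  # bare ground
    (3.1, 1.9, 100.4),  # bare ground
]
heights = canopy_height_model(points, cell_size=2.0)
print({cell: round(h, 2) for cell, h in heights.items()})
```

Photogrammetric point clouds from RGB imagery generally describe only the visible canopy surface, so under dense vegetation the ground returns this calculation relies on are what LiDAR adds.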

4.4. Regulatory and Community Expectations of Restoration Monitoring

The regulatory and community expectations of restoration monitoring continue to increase as the international community aspires towards better ecological recovery outcomes at larger scales [30,32]. Monitoring must be tied to specific targets and to measurable goals and objectives identified at the start of the project; establishing baseline data, and collecting data at appropriate intervals after restoration works, is fundamental to obtaining appropriate measures of success [32]. Additionally, sampling units must be of an appropriate size for the attributes measured, and must be replicated sufficiently within the site to allow meaningful interpretation [32]. These requirements align with the possibilities offered by UAV-based remote sensing, as highly detailed data can be gathered quickly and easily over small to medium areas (up to ca. 250 hectares with current technology) [100].
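Because a UAV survey maps the whole site, replicated sampling units can be laid over a classified map after the flight and compared between the baseline and later surveys. The sketch below is a minimal illustration, assuming a classified map stored as rows of single-character class codes. The codes, map size, unit size, unit positions and function names are all hypothetical, and a real design would size units to the attribute being measured [32].

```python
import math
import statistics


def percent_cover(class_map, top, left, size, target):
    """Percentage of pixels in one square sampling unit that carry the target class."""
    window = [row[left:left + size] for row in class_map[top:top + size]]
    hits = sum(cell == target for row in window for cell in row)
    return 100 * hits / (size * size)


def summarise(class_map, origins, size, target):
    """Mean cover and standard error across replicate sampling units (needs >= 2 units)."""
    covers = [percent_cover(class_map, top, left, size, target) for top, left in origins]
    mean = statistics.mean(covers)
    standard_error = statistics.stdev(covers) / math.sqrt(len(covers))
    return mean, standard_error


# Hypothetical classified maps ("V" = vegetation, "S" = bare soil) of the same
# site at baseline and at a later survey, with four replicate 2 x 2-pixel units
# given as (top row, left column).
baseline = ["SSVS", "SSSS", "VSSS", "SSSV"]
follow_up = ["VSVV", "SVVS", "VVSS", "SVSV"]
units = [(0, 0), (0, 2), (2, 0), (2, 2)]

for name, class_map in [("baseline", baseline), ("follow-up", follow_up)]:
    mean, se = summarise(class_map, units, size=2, target="V")
    print(f"{name}: {mean:.1f}% vegetation cover (SE {se:.1f})")
```

Keeping the same unit positions across surveys gives a like-for-like comparison against the baseline, while the standard error reflects the replication within the site.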

The International Standards for the Practice of Ecological Restoration [32] state that restoration science and practice are synergistic, particularly in the field of monitoring. Restoration practice is often determined by legislative requirements, and those requirements should therefore be supported by scientific knowledge. While UAVs are increasingly being integrated into aviation legislation worldwide, and compliance with local aviation law is undeniably important, legislation covering the use of UAVs in restoration monitoring will also be needed to provide guidelines for practitioners. However, current inconsistency in how UAVs are used, from the choice of platform and sensor to the choice of classification method, is such that enacting legislative requirements now would be of very little value. Until there is a strong scientific consensus on best practice, no legislative guidelines should be introduced; instead, effort should be focused on conducting further studies to build that consensus.

5. Conclusions

Monitoring of ecological restoration efforts is required to ensure goals are met, to determine trajectories and to avert potential failures. However, despite technological advances, ecological monitoring generally continues to be conducted with a ‘boots on the ground’ philosophy. Although UAV-mounted sensors are increasingly being used in novel ways to monitor a wide variety of indicators of ecosystem health, the technology is consistently applied to examine only highly specific aims or questions, and fails to consider the wide and increasing potential for capturing associated ecological data. The ongoing miniaturization, affordability and accessibility of UAV-mounted sensors present an increasing opportunity for their application to broad-scale restoration monitoring. Although the wide application and low cost of RGB sensors mean they are likely to remain a staple of the UAV-based monitoring toolbox, sensing in the visible spectrum alone may represent a missed opportunity to gather significantly more complex and meaningful data where project goals and finances allow. We propose that the default collection of RGB imagery is self-limiting, and that the future of monitoring lies in UAVs equipped with multiple sensors that can provide a comprehensive understanding of restoration efforts in a single flight, supported by robust computer-aided analytical technologies that reduce the burden of time-intensive image classification. However, few studies currently use more than one or two sensors, and even those that use three or more require multiple flights with different drones because of weight and power limitations.
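To illustrate what visible-only sensing can miss, the sketch compares a simple RGB index with the Normalised Difference Vegetation Index (NDVI), which requires a near-infrared band from a multispectral sensor. This is a minimal illustration, not a method drawn from the reviewed studies. The reflectance values are hypothetical, chosen so that two canopies look alike in colour but differ in near-infrared reflectance (a pattern that can accompany plant stress), and the Excess Green index is shown in its simplest, unnormalised form.

```python
def excess_green(red, green, blue):
    """Excess Green index (2G - R - B): computable from a standard RGB camera."""
    return 2 * green - red - blue


def ndvi(nir, red):
    """Normalised Difference Vegetation Index: requires a near-infrared band."""
    if nir + red == 0:
        raise ValueError("NIR + red reflectance must be non-zero")
    return (nir - red) / (nir + red)


# Hypothetical surface reflectances (0-1) for two canopies with near-identical
# colour but different near-infrared reflectance.
canopies = {
    "healthy": {"red": 0.05, "green": 0.10, "blue": 0.04, "nir": 0.50},
    "stressed": {"red": 0.06, "green": 0.11, "blue": 0.05, "nir": 0.30},
}

for name, b in canopies.items():
    exg = excess_green(b["red"], b["green"], b["blue"])
    print(f"{name}: ExG {exg:.2f}, NDVI {ndvi(b['nir'], b['red']):.2f}")
```

In this constructed case the RGB index cannot separate the two canopies, while NDVI can, because the difference lies outside the visible spectrum.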

The ecological monitoring industry requires more resilient and reliable UAVs capable of carrying multiple sensor payloads, enabling more effective data acquisition. Future UAV research should also adopt a more consistent and replicable approach to ecological research, incorporating a broader range of complementary sensors into UAV-based monitoring projects and performing image analysis and classification with comparable methodological approaches. Although we are rapidly approaching an era in which UAVs are likely to represent the most cost-efficient and effective monitoring technology for ecological recovery projects, the full potential of UAVs as ecological monitoring tools is unlikely to be realized without greater trans-disciplinary collaboration between industry, practitioners and academia to create evidence-based frameworks.

Supplementary Materials

The following are available online at https://www.mdpi.com/2072-4292/11/10/1180/s1, Figure S1: “The PRISMA flow diagram for Buters et al. (2019), detailing the databases searched, the number of non-duplicate articles found, and the final number of relevant articles retrieved, after discarding papers that did not present experimental results.”, Table S1: “All analysed unmanned aerial vehicle (UAV) studies providing empirical data on the use of UAVs in the monitoring of ecological recovery by Buters et al. 2019, including year of publication, region of study interest, landscape of study interest, ecological recovery terminology utilised, UAV platform employed, captured imagery classification technique, maximum number of sensors utilised in a single flight, and most advanced sensor type utilised during the study.”

Author Contributions

Conceptualization, T.M.B., A.T.C., & D.B.; methodology, T.M.B. & A.T.C.; formal analysis, A.T.C., T.M.B.; investigation, T.M.B.; data curation, T.M.B.; writing—original draft preparation, T.M.B., P.W.B., & A.T.C.; writing—review and editing, T.M.B., A.T.C., P.W.B., D.B., T.R., & K.W.D.; visualization, A.T.C., T.M.B., & K.W.D.; supervision, A.T.C., D.B., P.W.B., T.R., & K.W.D.; project administration, A.T.C., P.W.B., D.B., T.R., & K.W.D.; funding acquisition, K.W.D.

Funding

T.M.B. received a scholarship from the Centre for Mine Site Restoration, Curtin University. This research was supported by the Australian Government through the Australian Research Council Industrial Transformation Training Centre for Mine Site Restoration (Project Number ICI150100041).

Acknowledgments

T.M.B. thanks Renee Young and Vanessa MacDonald for logistical assistance in undertaking fieldwork, and for support throughout the project.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Shaw, I.G.R. Predator Empire: The Geopolitics of US Drone Warfare. Geopolitics 2013, 18, 536–559.

2. Schmidt, H. From a bird’s eye perspective: Aerial drone photography and political protest. A case study of the Bulgarian #resign movement 2013. Digit. Icons Stud. Russ. Eur. Cent. Eur. New Med. 2015, 13, 1–27.

3. Hugenholtz, C.H.; Whitehead, K.; Brown, O.W.; Barchyn, T.E.; Moorman, B.J.; LeClair, A.; Riddell, K.; Hamilton, T. Geomorphological mapping with a small unmanned aircraft system (sUAS): Feature detection and accuracy assessment of a photogrammetrically-derived digital terrain model. Geomorphology 2013, 194, 16–24.

4. Watts, A.C.; Ambrosia, V.G.; Hinkley, E.A. Unmanned Aircraft Systems in Remote Sensing and Scientific Research: Classification and Considerations of Use. Remote Sens. 2012, 4, 1671–1692.

5. Warwick, G. AUVSI—Precision Agriculture will Lead Civil UAS. 2014. Available online: http://aviationweek.com/blog/auvsi-precision-agriculture-will-lead-civil-uas (accessed on 15 March 2019).

6. Berni, J.; Zarco-Tejada, P.J.; Suarez, L.; Fereres, E. Thermal and Narrowband Multispectral Remote Sensing for Vegetation Monitoring from an Unmanned Aerial Vehicle. IEEE Trans. Geosci. Remote Sens. 2009, 47, 722–738.

7. Hunt, E.R.; Hively, W.D.; Fujikawa, S.; Linden, D.; Daughtry, C.S.; McCarty, G. Acquisition of NIR-Green-Blue Digital Photographs from Unmanned Aircraft for Crop Monitoring. Remote Sens. 2010, 2, 290–305.

8. Zhang, C.; Kovacs, J.M. The application of small unmanned aerial systems for precision agriculture: A review. Precis. Agric. 2012, 13, 693–712.

9. Sankaran, S.; Khot, L.R.; Espinoza, C.Z.; Jarolmasjed, S.; Sathuvalli, V.R.; Vandemark, G.J.; Miklas, P.N.; Carter, A.H.; Pumphrey, M.O.; Knowles, N.R.; et al. Low-altitude, high-resolution aerial imaging systems for row and field crop phenotyping: A review. Eur. J. Agron. 2015, 70, 112–123.

10. Langford, J.S. New aircraft platforms for earth system science: an opportunity for the 1990s. In Proceedings of the 17th Congress of the International Council of the Aeronautical Sciences, Stockholm, Sweden, 9–14 September 1990.

11. Conniff, R. Drones are Ready for Takeoff. 2012. Available online: http://www.smithsonianmag.com/science-nature/drones-are-ready-for-takeoff-160062162/ (accessed on 17 March 2019).

12. Colomina, I.; Molina, P. Unmanned aerial systems for photogrammetry and remote sensing: A review. ISPRS J. Photogram. Remote Sens. 2014, 92, 79–97.

13. Pádua, L.; Vanko, J.; Hruška, J.; Adão, T.; Sousa, J.J.; Peres, E.; Morais, R. UAS, sensors, and data processing in agroforestry: A review towards practical applications. Int. J. Remote Sens. 2017, 38, 2349–2391.

14. Torresan, C.; Berton, A.; Carotenuto, F.; Di Gennaro, S.F.; Gioli, B.; Matese, A.; Miglietta, F.; Vagnoli, C.; Zaldei, A.; Wallace, L. Forestry applications of UAVs in Europe: A review. Int. J. Remote Sens. 2017, 38, 2427–2447.

15. Dudek, M.; Tomczyk, P.; Wygonik, P.; Korkosz, M.; Bogusz, P.; Lis, B. Hybrid fuel cell—Battery system as a main power unit for small unmanned aerial vehicles (UAV). Int. J. Electrochem. Sci. 2013, 8, 8442–8463.

16. Bennett, A.; Preston, V.; Woo, J.; Chandra, S.; Diggins, D.; Chapman, R.; Wang, Z.; Rush, M.; Lye, L.; Tieu, M.; et al. Autonomous vehicles for remote sample collection in difficult conditions: Enabling remote sample collection by marine biologists. In Proceedings of the IEEE International Conference on Technologies for Practical Robot Applications, Woburn, MA, USA, 11–12 May 2015.

17. Cruzan, M.B.; Weinstein, B.G.; Grasty, M.R.; Kohrn, B.F.; Hendrickson, E.C.; Arredondo, T.M.; Thompson, P.G. Small unmanned aerial vehicles (micro-UAVs, drones) in plant ecology. Appl. Plant. Sci. 2016, 4, 160004.

18. Baena, S.; Moat, J.; Whaley, O.; Boyd, D.S. Identifying species from the air: UAVs and the very high resolution challenge for plant conservation. PLoS ONE 2017, 12, e0188714.

19. Suduwella, C.; Amarasinghe, A.; Niroshan, L.; Elvitigala, C.; De Zoysa, K.; Keppetiyagama, C. Identifying Mosquito Breeding Sites via Drone Images. In Proceedings of the 3rd Workshop on Micro Aerial Vehicle Networks, Systems, and Applications—DroNet ’17, Niagara Falls, NY, USA, 23 June 2017; pp. 27–30.

20. Knoth, C.; Klein, B.; Prinz, T.; Kleinebecker, T. Unmanned aerial vehicles as innovative remote sensing platforms for high-resolution infrared imagery to support restoration monitoring in cut-over bogs. Appl. Veg. Sci. 2013, 16, 509–517.

21. Anderson, K.; Gaston, K.J. Lightweight unmanned aerial vehicles will revolutionize spatial ecology. Front. Ecol. Environ. 2013, 11, 138–146.

22. Ludwig, J.A.; Hindley, N.; Barnett, G. Indicators for monitoring minesite rehabilitation: Trends on waste-rock dumps, northern Australia. Ecol. Indic. 2003, 3, 143–153.

23. Ishihama, F.; Watabe, Y.; Oguma, H.; Moody, A. Validation of a high-resolution, remotely operated aerial remote-sensing system for the identification of herbaceous plant species. Appl. Veg. Sci. 2012, 15, 383–389.

24. Woodget, A.S.; Austrums, R.; Maddock, I.P.; Habit, E. Drones and digital photogrammetry: From classifications to continuums for monitoring river habitat and hydromorphology. Wiley Interdiscip. Rev. Water 2017, 4, e1222.

25. Cao, J.; Leng, W.; Liu, K.; Liu, L.; He, Z.; Zhu, Y. Object-Based Mangrove Species Classification Using Unmanned Aerial Vehicle Hyperspectral Images and Digital Surface Models. Remote Sens. 2018, 10, 89.

26. Zmarz, A.; Korczak-Abshire, M.; Storvold, R.; Rodzewicz, M.; Kędzierska, I. Indicator Species Population Monitoring in Antarctica with UAV. ISPRS Int. Arch. Photogram. Remote Sens. Spat. Inf. Sci. 2015, 40, 189–193.

27. D’Oleire-Oltmanns, S.; Marzolff, I.; Peter, K.; Ries, J. Unmanned aerial vehicle (UAV) for monitoring soil erosion in Morocco. Remote Sens. 2012, 4, 3390–3416.

28. Vasuki, Y.; Holden, E.-J.; Kovesi, P.; Micklethwaite, S. Semi-automatic mapping of geological structures using UAV-based photogrammetric data: An image analysis approach. Comput. Geosci. 2014, 69, 22–32.

29. Takayama, L.; Ju, W.; Nass, C. Beyond dirty, dangerous and dull: what everyday people think robots should do. In Proceedings of the 3rd ACM/IEEE International Conference on Human-Robot Interaction (HRI), Amsterdam, The Netherlands, 12–15 March 2008; pp. 25–32.

30. Cross, A.T.; Young, R.; Nevill, P.; McDonald, T.; Prach, K.; Aronson, J.; Wardell-Johnson, G.W.; Dixon, K.W. Appropriate aspirations for effective post-mining restoration and rehabilitation: A response to Kaźmierczak et al. Environ. Earth Sci. 2018, 77, 256.

31. Clewell, A.; Aronson, J.; Winterhalder, K. The SER International Primer on Ecological Restoration; Science & Policy Working Group: Washington, DC, USA, 2004.

32. McDonald, T.; Gann, G.D.; Jonson, J.; Dixon, K.W. International Standards for the Practice of Ecological Restoration—Including Principles and Key Concepts; Society for Ecological Restoration: Washington, DC, USA, 2016.

33. Huang, Y.; Thomson, S.J.; Hoffman, W.C.; Lan, Y.; Fritz, B.K. Development and prospect of unmanned aerial vehicle technologies for agricultural production management. Int. J. Agric. Biol. Eng. 2013, 6, 1–10.

34. Honkavaara, E.; Saari, H.; Kaivosoja, J.; Pölönen, I.; Hakala, T.; Litkey, P.; Mäkynen, J.; Pesonen, L. Processing and Assessment of Spectrometric, Stereoscopic Imagery Collected Using a Lightweight UAV Spectral Camera for Precision Agriculture. Remote Sens. 2013, 5, 5006–5039.

35. Shahbazi, M.; Théau, J.; Ménard, P. Recent applications of unmanned aerial imagery in natural resource management. GISci. Remote Sens. 2014, 51, 339–365.

36. Tripicchio, P.; Satler, M.; Dabisias, G.; Ruffaldi, E.; Avizzano, C.A. Towards Smart Farming and Sustainable Agriculture with Drones. In Proceedings of the 2015 International Conference on Intelligent Environments, Prague, Czech Republic, 15–17 July 2015; pp. 140–143.

37. Tang, L.; Shao, G. Drone remote sensing for forestry research and practices. J. For. Res. 2015, 26, 791–797.

38. Lehmann, J.; Nieberding, F.; Prinz, T.; Knoth, C. Analysis of Unmanned Aerial System-Based CIR Images in Forestry—A New Perspective to Monitor Pest Infestation Levels. Forests 2015, 6, 594–612.

39. Paneque-Gálvez, J.; McCall, M.; Napoletano, B.; Wich, S.; Koh, L. Small Drones for Community-Based Forest Monitoring: An Assessment of Their Feasibility and Potential in Tropical Areas. Forests 2014, 5, 1481–1507.

40. Koh, L.P.; Wich, S.A. Dawn of drone ecology: Low-cost autonomous aerial vehicles for conservation. Trop. Conserv. Sci. 2012, 5, 121–132.

41. Van Gemert, J.C.; Verschoor, C.R.; Mettes, P.; Epema, K.; Koh, L.P.; Wich, S. Nature Conservation Drones for Automatic Localization and Counting of Animals; Springer International Publishing: Cham, Switzerland, 2015; pp. 255–270.

42. Linchant, J.; Lisein, J.; Semeki, J.; Lejeune, P.; Vermeulen, C. Are unmanned aircraft systems (UASs) the future of wildlife monitoring? A review of accomplishments and challenges. Mamm. Rev. 2015, 45, 239–252.

43. Arnon, A.I.; Ungar, E.D.; Svoray, T.; Shachak, M.; Blankman, J.; Perevolotsky, A. The Application of Remote Sensing to Study Shrub-Herbaceous Relations at a High Spatial Resolution. Israel J. Plant Sci. 2007, 55, 73–82.

44. Chen, S.; McDermid, G.; Castilla, G.; Linke, J. Measuring Vegetation Height in Linear Disturbances in the Boreal Forest with UAV Photogrammetry. Remote Sens. 2017, 9, 1257.

45. Panagiotidis, D.; Abdollahnejad, A.; Surový, P.; Chiteculo, V. Determining tree height and crown diameter from high-resolution UAV imagery. Int. J. Remote Sens. 2017, 38, 2392–2410.

46. Laliberte, A.S.; Rango, A.; Fredrickson, E.L. Unmanned aerial vehicles for rangeland mapping and monitoring: a comparison of two systems. In Proceedings of the American Society for Photogrammetry and Remote Sensing Annual Conference, Tampa, FL, USA, 7–11 May 2007.

47. Laliberte, A.S.; Rango, A. Incorporation of texture, intensity, hue, and saturation for rangeland monitoring with unmanned aircraft imagery. In GEOBIA Proceedings; Hay, G.J., Blaschke, T., Marceau, D., Eds.; GEOBIA/ISPRS: Calgary, AB, Canada, 2008.

48. Hird, J.; Montaghi, A.; McDermid, G.; Kariyeva, J.; Moorman, B.; Nielsen, S.; McIntosh, A. Use of Unmanned Aerial Vehicles for Monitoring Recovery of Forest Vegetation on Petroleum Well Sites. Remote Sens. 2017, 9, 413.

49. Waite, C.E.; van der Heijden, G.M.F.; Field, R.; Boyd, D.S.; Magrach, A. A view from above: Unmanned aerial vehicles (UAVs) provide a new tool for assessing liana infestation in tropical forest canopies. J. Appl. Ecol. 2019.

50. Turner, D.; Lucieer, A.; Watson, C. An Automated Technique for Generating Georectified Mosaics from Ultra-High Resolution Unmanned Aerial Vehicle (UAV) Imagery, Based on Structure from Motion (SfM) Point Clouds. Remote Sens. 2012, 4, 1392–1410.

51. Dandois, J.P.; Ellis, E.C. High spatial resolution three-dimensional mapping of vegetation spectral dynamics using computer vision. Remote Sens. Environ. 2013, 136, 259–276.

52. Zahawi, R.A.; Dandois, J.P.; Holl, K.D.; Nadwodny, D.; Reid, J.L.; Ellis, E.C. Using lightweight unmanned aerial vehicles to monitor tropical forest recovery. Biol. Conserv. 2015, 186, 287–295.

53. Tahar, K.; Ahmad, A.; Akib, W.; Mohd, W. A New Approach on Production of Slope Map Using Autonomous Unmanned Aerial Vehicle. Int. J. Phys. Sci. 2012, 7, 5678–5686.

54. Rauhala, A.; Tuomela, A.; Davids, C.; Rossi, P. UAV Remote Sensing Surveillance of a Mine Tailings Impoundment in Sub-Arctic Conditions. Remote Sens. 2017, 9, 1318.

55. Cross, S.L.; Tomlinson, S.; Craig, M.D.; Dixon, K.W.; Bateman, P.W. Overlooked and undervalued: The neglected role of fauna and a global bias in ecological restoration assessments. Pac. Conserv. Biol. 2019.

56. Wich, S.; Dellatore, D.; Houghton, M.; Ardi, R.; Koh, L.P. A preliminary assessment of using conservation drones for Sumatran orang-utan (Pongo abelii) distribution and density. J. Unmanned Veh. Syst. 2015, 4, 45–52.

57. McClelland, G.T.W.; Bond, A.L.; Sardana, A.; Glass, T. Rapid population estimate of a surface-nesting seabird on a remote island using a low-cost unmanned aerial vehicle. Mar. Ornithol. 2016, 44, 215–220.

58. Ratcliffe, N.; Guihen, D.; Robst, J.; Crofts, S.; Stanworth, A.; Enderlein, P. A protocol for the aerial survey of penguin colonies using UAVs. J. Unmanned Veh. Syst. 2015, 3, 95–101.

59. Koski, W.R.; Gamage, G.; Davis, A.R.; Mathews, T.; LeBlanc, B.; Ferguson, S.H. Evaluation of UAS for photographic re-identification of bowhead whales, Balaena mysticetus. J. Unmanned Veh. Syst. 2015, 3, 22–29.

60. Durban, J.W.; Fearnbach, H.; Barrett-Lennard, L.G.; Perryman, W.L.; Leroi, D.J. Photogrammetry of killer whales using a small hexacopter launched at sea. J. Unmanned Veh. Syst. 2015, 3, 131–135.

61. Schofield, G.; Katselidis, K.A.; Lilley, M.K.S.; Reina, R.D.; Hays, G.C.; Gremillet, D. Detecting elusive aspects of wildlife ecology using drones: New insights on the mating dynamics and operational sex ratios of sea turtles. Funct. Ecol. 2017, 31, 2310–2319.

62. Martin, J.; Edwards, H.H.; Burgess, M.A.; Percival, H.F.; Fagan, D.E.; Gardner, B.E.; Ortega-Ortiz, J.G.; Ifju, P.G.; Evers, B.S.; Rambo, T.J. Estimating distribution of hidden objects with drones: From tennis balls to manatees. PLoS ONE 2012, 7, e38882.

63. Hodgson, J.C.; Mott, R.; Baylis, S.M.; Pham, T.T.; Wotherspoon, S.; Kilpatrick, A.D.; Raja Segaran, R.; Reid, I.; Terauds, A.; Koh, L.P.; et al. Drones count wildlife more accurately and precisely than humans. Methods Ecol. Evol. 2018, 9, 1160–1167.

64. Weimerskirch, H.; Prudor, A.; Schull, Q. Flights of drones over sub-Antarctic seabirds show species- and status-specific behavioural and physiological responses. Polar Biol. 2017, 41, 259–266.

65. Vas, E.; Lescroel, A.; Duriez, O.; Boguszewski, G.; Gremillet, D. Approaching birds with drones: First experiments and ethical guidelines. Biol. Lett. 2015, 11, 20140754.

66. Ditmer, M.A.; Vincent, J.B.; Werden, L.K.; Tanner, J.C.; Laske, T.G.; Iaizzo, P.A.; Garshelis, D.L.; Fieberg, J.R. Bears Show a Physiological but Limited Behavioral Response to Unmanned Aerial Vehicles. Curr. Biol. 2015, 25, 2278–2283.

67. Lyons, M.; Brandis, K.; Callaghan, C.; McCann, J.; Mills, C.; Ryall, S.; Kingsford, R. Bird interactions with drones, from individuals to large colonies. Aust. Field Ornithol. 2018, 35, 51–56.

68. Borrelle, S.; Fletcher, A. Will drones reduce investigator disturbance to surface-nesting seabirds? Mar. Ornithol. 2017, 45, 89–94.

69. Richardson, A.J.; Menges, R.M.; Nixon, P.R. Distinguishing weed from crop plants using video remote sensing. Photogram. Eng. Remote Sens. 1985, 51, 1785–1790.

70. Lacar, F.M.; Lewis, M.M.; Grierson, I.T. Use of hyperspectral imagery for mapping grape varieties in the Barossa Valley, South Australia. In Proceedings of the Scanning the Present and Resolving the Future, IEEE 2001 International Geoscience and Remote Sensing Symposium (Cat. No.01CH37217), Sydney, NSW, Australia, 9–13 July 2001; Volume 6, pp. 2875–2877.

71. Ishida, T.; Kurihara, J.; Viray, F.A.; Namuco, S.B.; Paringit, E.C.; Perez, G.J.; Takahashi, Y.; Marciano, J.J. A novel approach for vegetation classification using UAV-based hyperspectral imaging. Comput. Electron. Agric. 2018, 144, 80–85.

72. Zarco-Tejada, P.J.; Guillén-Climent, M.L.; Hernández-Clemente, R.; Catalina, A.; González, M.R.; Martín, P. Estimating leaf carotenoid content in vineyards using high resolution hyperspectral imagery acquired from an unmanned aerial vehicle (UAV). Agric. For. Meteorol. 2013, 171–172, 281–294.

73. Zarco-Tejada, P.J.; González-Dugo, V.; Berni, J.A.J. Fluorescence, temperature and narrow-band indices acquired from a UAV platform for water stress detection using a micro-hyperspectral imager and a thermal camera. Remote Sens. Environ. 2012, 117, 322–337.

74. Yue, J.; Lei, T.; Li, C.; Zhu, J. The Application of Unmanned Aerial Vehicle Remote Sensing in Quickly Monitoring Crop Pests. Intell. Autom. Soft Comput. 2012, 18, 1043–1052.

75. Calderón, R.; Navas-Cortés, J.A.; Lucena, C.; Zarco-Tejada, P.J. High-resolution airborne hyperspectral and thermal imagery for early detection of Verticillium wilt of olive using fluorescence, temperature and narrow-band spectral indices. Remote Sens. Environ. 2013, 139, 231–245.

76. Iizuka, K.; Watanabe, K.; Kato, T.; Putri, N.; Silsigia, S.; Kameoka, T.; Kozan, O. Visualizing the Spatiotemporal Trends of Thermal Characteristics in a Peatland Plantation Forest in Indonesia: Pilot Test Using Unmanned Aerial Systems (UASs). Remote Sens. 2018, 10.

77. Sankey, T.; Donager, J.; McVay, J.; Sankey, J.B. UAV lidar and hyperspectral fusion for forest monitoring in the southwestern USA. Remote Sens. Environ. 2017, 195, 30–43.

78. Chrétien, L.-P.; Théau, J.; Ménard, P. Visible and thermal infrared remote sensing for the detection of white-tailed deer using an unmanned aerial system. Wildl. Soc. Bull. 2016, 40, 181–191.

79. Gonzalez, L.F.; Montes, G.A.; Puig, E.; Johnson, S.; Mengersen, K.; Gaston, K.J. Unmanned Aerial Vehicles (UAVs) and Artificial Intelligence Revolutionizing Wildlife Monitoring and Conservation. Sensors 2016, 16, 97.

80. Mulero-Pazmany, M.; Stolper, R.; van Essen, L.D.; Negro, J.J.; Sassen, T. Remotely piloted aircraft systems as a rhinoceros anti-poaching tool in Africa. PLoS ONE 2014, 9, e83873.

81. Hoffmann, H.; Jensen, R.; Thomsen, A.; Nieto, H.; Rasmussen, J.; Friborg, T. Crop water stress maps for an entire growing season from visible and thermal UAV imagery. Biogeosciences 2016, 13, 6545–6563.

82. Lamb, A.D. Earth observation technology applied to mining-related environmental issues. Min. Technol. 2013, 109, 153–156.

83. Benz, U.C.; Hofmann, P.; Willhauck, G.; Lingenfelder, I.; Heynen, M. Multi-resolution, object-oriented fuzzy analysis of remote sensing data for GIS-ready information. ISPRS J. Photogram. Remote Sens. 2004, 58, 239–258.

84. Michez, A.; Piégay, H.; Jonathan, L.; Claessens, H.; Lejeune, P. Mapping of riparian invasive species with supervised classification of Unmanned Aerial System (UAS) imagery. Int. J. Appl. Earth Observ. Geoinf. 2016, 44, 88–94.

85. Ye, X.; Sakai, K.; Asada, S.-I.; Sasao, A. Use of airborne multispectral imagery to discriminate and map weed infestations in a citrus orchard. Weed Biol. Manag. 2007, 7, 23–30.

86. Pena, J.M.; Torres-Sanchez, J.; de Castro, A.I.; Kelly, M.; Lopez-Granados, F. Weed mapping in early-season maize fields using object-based analysis of unmanned aerial vehicle (UAV) images. PLoS ONE 2013, 8, e77151.

87. Whiteside, T.G.; Bartolo, R.E. A robust object-based woody cover extraction technique for monitoring mine site revegetation at scale in the monsoonal tropics using multispectral RPAS imagery from different sensors. Int. J. Appl. Earth Observ. Geoinf. 2018, 73, 300–312.

88. Zhao, H.; Fang, X.; Ding, H.; Josef, S.; Xiong, L.; Na, J.; Tang, G. Extraction of Terraces on the Loess Plateau from High-Resolution DEMs and Imagery Utilizing Object-Based Image Analysis. ISPRS Int. J. Geo-Inf. 2017, 6, 157.

89. Johansen, K.; Erskine, P.D.; McCabe, M.F. Using Unmanned Aerial Vehicles to assess the rehabilitation performance of open cut coal mines. J. Clean. Prod. 2019, 209, 819–833.

90. Ventura, D.; Bruno, M.; Jona Lasinio, G.; Belluscio, A.; Ardizzone, G. A low-cost drone based application for identifying and mapping of coastal fish nursery grounds. Estuar. Coast. Shelf Sci. 2016, 171, 85–98.

91. Wundram, D.; Löffler, J. High-resolution spatial analysis of mountain landscapes using a low-altitude remote sensing approach. Int. J. Remote Sens. 2008, 29, 961–974.

92. Green, K.; Congalton, R. Assessing the Accuracy of Remotely Sensed Data: Principles and Practices; CRC Press: Boca Raton, FL, USA, 2008.

93. Ammour, N.; Alhichri, H.; Bazi, Y.; Benjdira, B.; Alajlan, N.; Zuair, M. Deep Learning Approach for Car Detection in UAV Imagery. Remote Sens. 2017, 9, 312.

94. Bryson, M.; Reid, A.; Ramos, F.; Sukkarieh, S. Airborne vision-based mapping and classification of large farmland environments. J. Field Robot. 2010, 27, 632–655.

95. Bryson, M.; Reid, A.; Hung, C.; Ramos, F.T.; Sukkarieh, S. Cost-Effective Mapping Using Unmanned Aerial Vehicles in Ecology Monitoring Applications. In Experimental Robotics; Springer: Berlin/Heidelberg, Germany, 2014; pp. 509–523.

96. Flynn, K.; Chapra, S. Remote Sensing of Submerged Aquatic Vegetation in a Shallow Non-Turbid River Using an Unmanned Aerial Vehicle. Remote Sens. 2014, 6, 12815–12836.

97. Reis, B.P.; Martins, S.V.; Fernandes Filho, E.I.; Sarcinelli, T.S.; Gleriani, J.M.; Leite, H.G.; Halassy, M. Forest restoration monitoring through digital processing of high resolution images. Ecol. Eng. 2019, 127, 178–186.

98. Padro, J.C.; Carabassa, V.; Balague, J.; Brotons, L.; Alcaniz, J.M.; Pons, X. Monitoring opencast mine restorations using Unmanned Aerial System (UAS) imagery. Sci. Total Environ. 2019, 657, 1602–1614.

99. Resop, J.P.; Lehmann, L.; Hession, W.C. Drone Laser Scanning for Modeling Riverscape Topography and Vegetation: Comparison with Traditional Aerial Lidar. Drones 2019, 3, 35–49.

100. Felderhof, L.; Gillieson, D. Near-infrared imagery from unmanned aerial systems and satellites can be used to specify fertilizer application rates in tree crops. Can. J. Remote Sens. 2014, 37, 376–386.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).