Non-Rigid Vehicle-Borne LiDAR-Assisted Aerotriangulation

Abstract

1. Introduction

2. Related Work

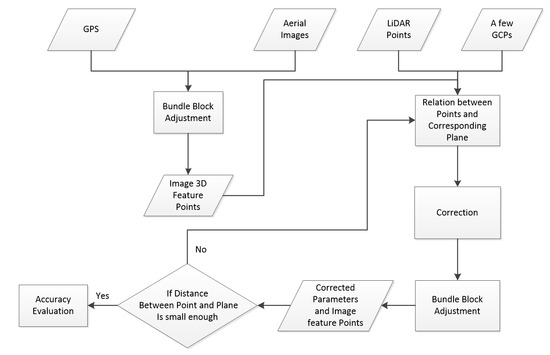

3. Methodology

3.1. UAV Image Aerotriangulation

3.2. Non-Rigid Vehicle-Borne LiDAR Point-Assisted Aerotriangulation

4. Experiment and Result Discussion

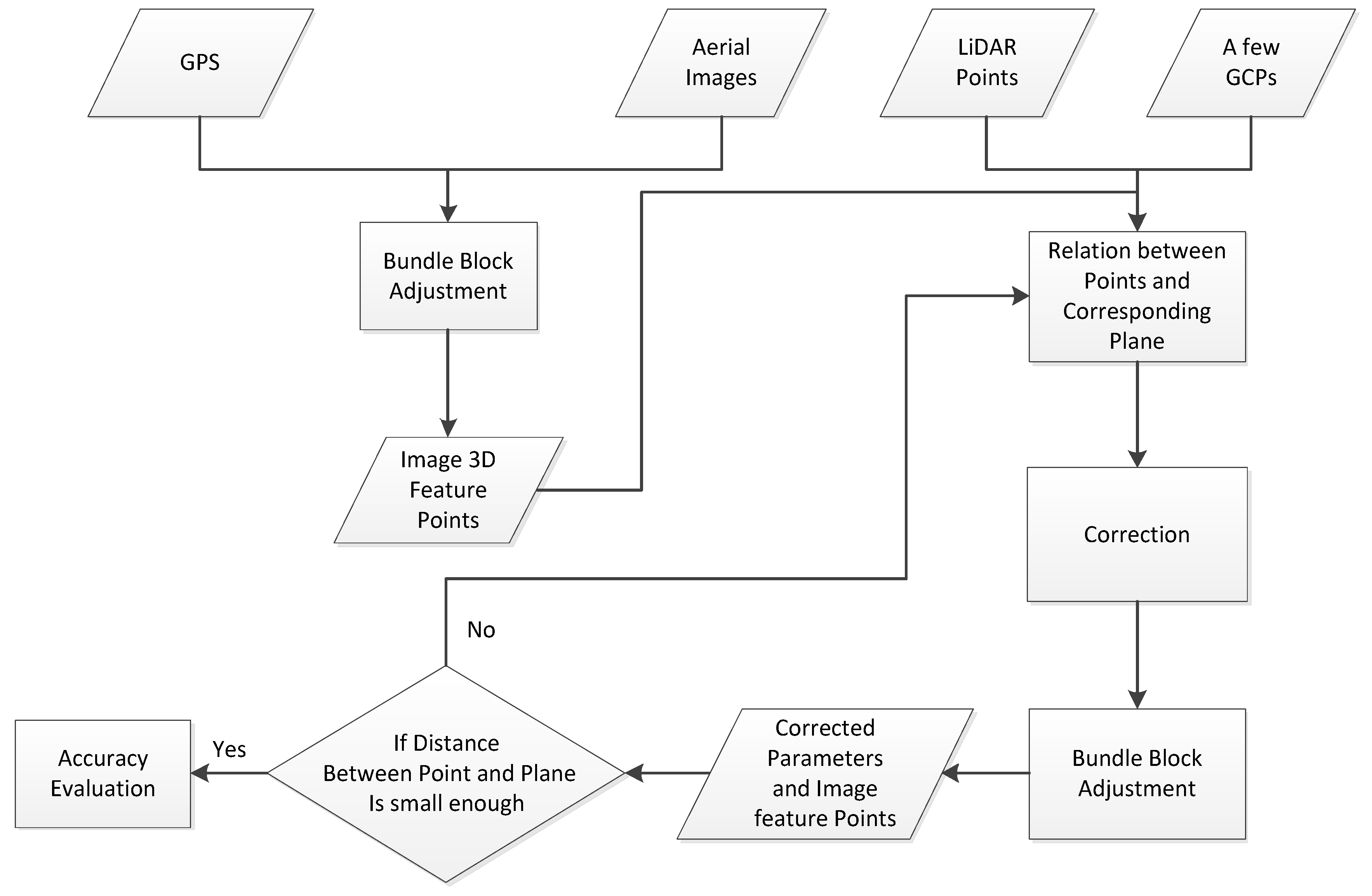

4.1. Experimental Data

4.2. Quantitative Evaluation

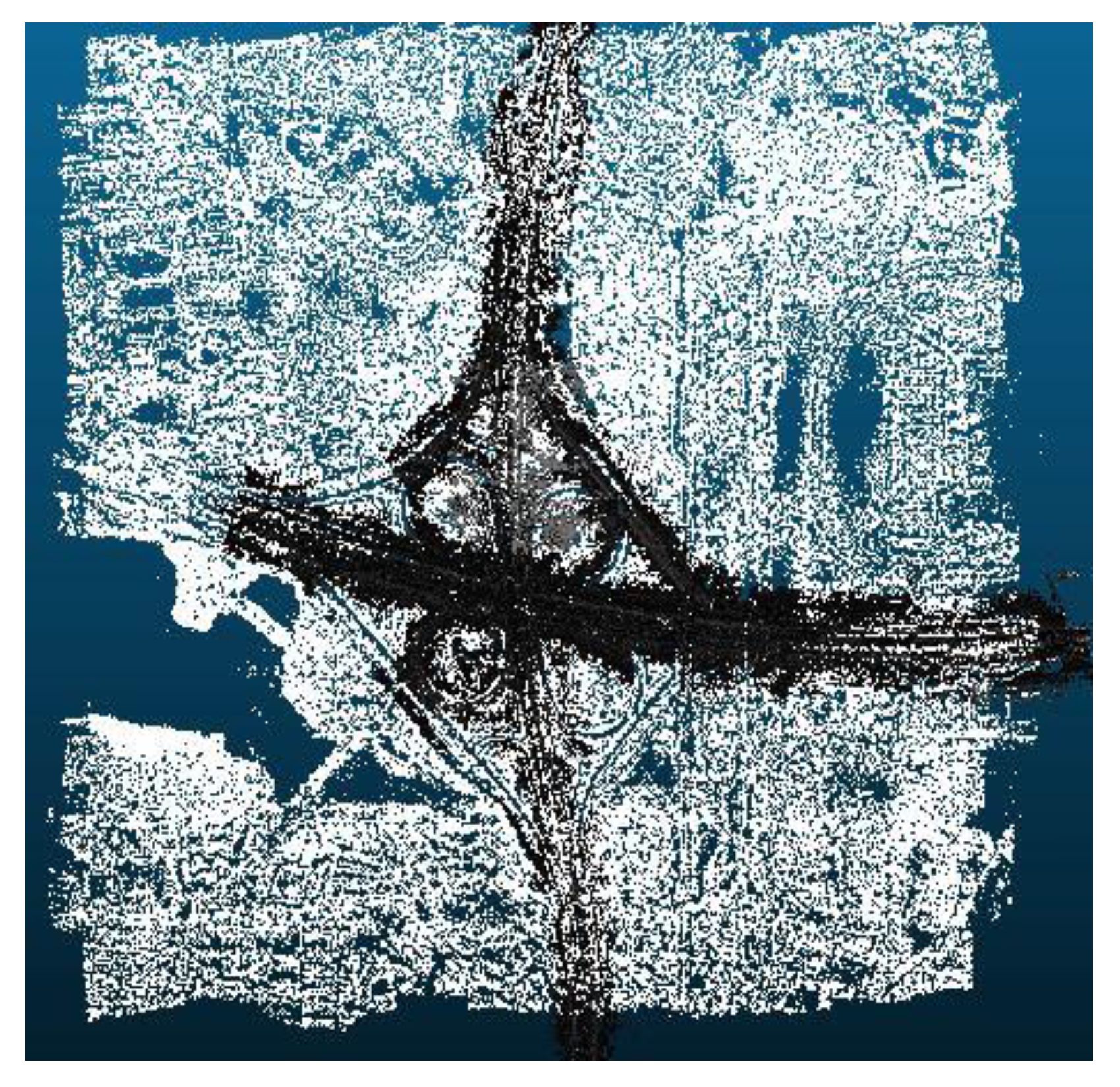

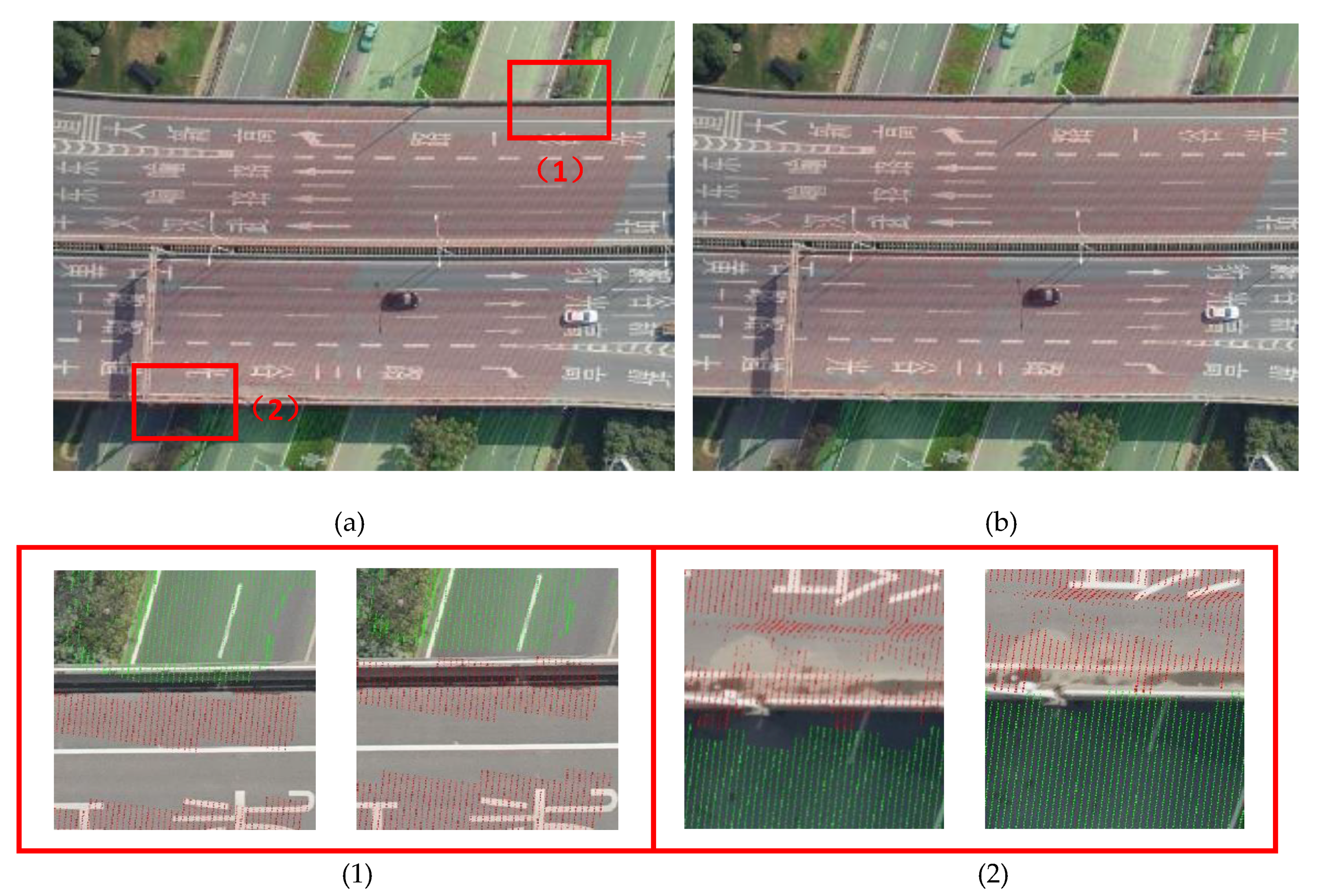

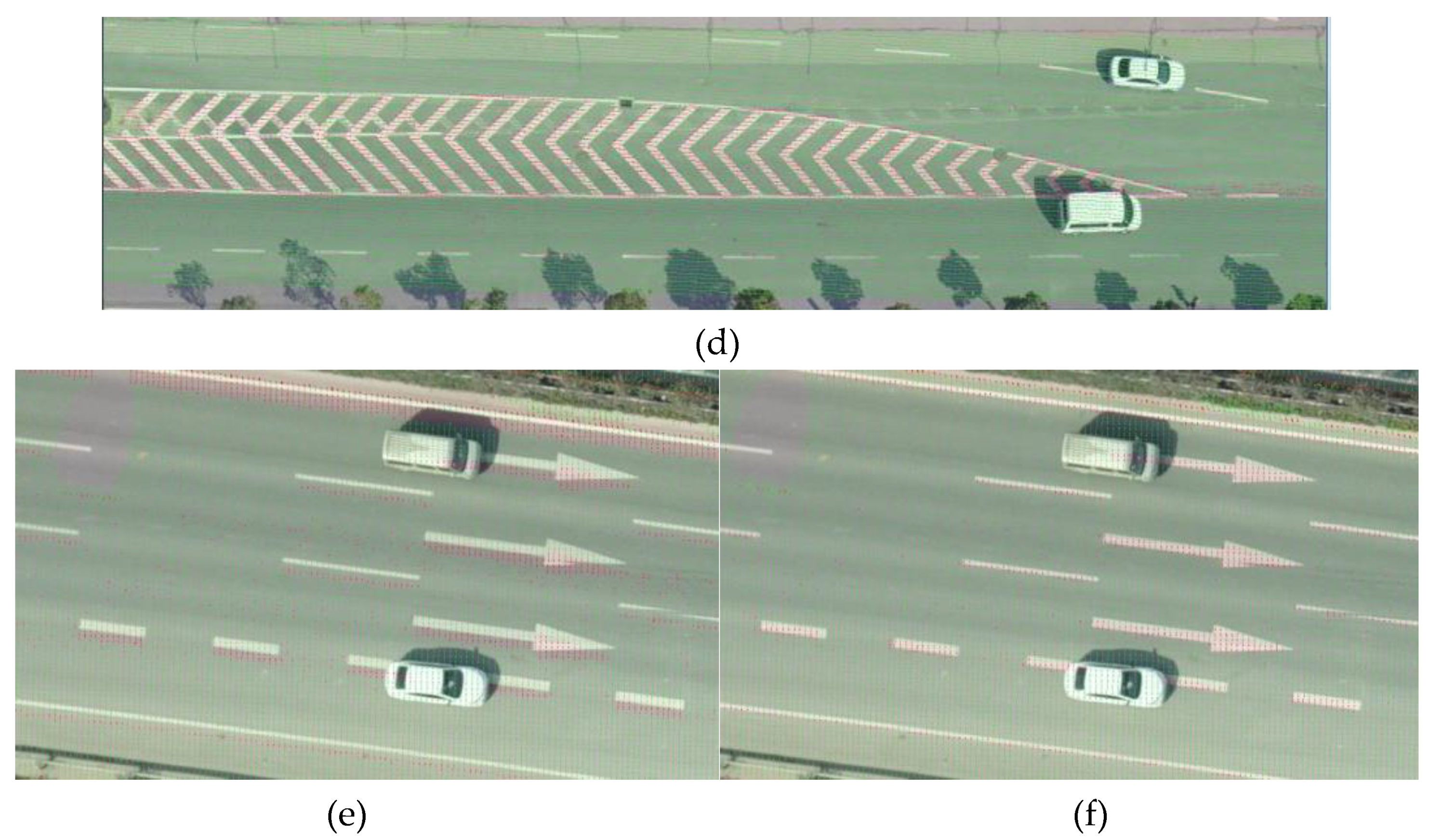

4.3. Visual Quality Evaluation

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Data | Sensor | Image Size (Pixels) | Focal Length (mm) | Pixel Size (mm) | Ground Resolution (m) |
|---|---|---|---|---|---|
| Images | SONY ILCE-7R | 7360 × 4912 | 35.8 | 0.0048 | 0.04 |

| Data | Sensor | Point Spacing (m) | Number of Points |
|---|---|---|---|
| LiDAR points | Leica ALS50-II | 0.13 | 0.5 billion |

| Checkpoint | Before LiDAR Constraint: X | Before LiDAR Constraint: Y | Before LiDAR Constraint: Z | After LiDAR Constraint: X | After LiDAR Constraint: Y | After LiDAR Constraint: Z |
|---|---|---|---|---|---|---|
| 1 | 0.237 | -0.214 | 1.765 | 0.201 | -0.181 | -0.170 |
| 2 | 0.073 | -0.125 | 1.998 | -0.058 | -0.118 | -0.007 |
| 3 | 0.017 | -0.022 | 1.670 | -0.001 | -0.010 | -0.053 |
| 4 | -0.184 | -0.235 | -1.090 | -0.099 | -0.238 | -0.059 |
| 5 | -0.063 | -0.046 | 0.861 | -0.062 | -0.039 | 0.072 |
| 6 | -0.036 | 0.127 | 0.230 | -0.041 | 0.131 | 0.008 |
| 7 | -0.075 | -0.247 | 0.103 | -0.079 | -0.243 | -0.100 |
| 8 | 0.090 | -0.282 | 0.804 | 0.074 | -0.252 | -0.022 |
| 9 | 0.077 | 0.322 | 0.983 | 0.056 | -0.140 | 0.030 |
| 10 | 0.159 | -0.355 | 1.037 | 0.133 | -0.293 | -0.005 |
| 11 | 0.159 | -0.050 | 1.736 | 0.122 | -0.014 | -0.123 |
| 12 | -0.095 | -0.220 | 1.788 | -0.132 | -0.190 | -0.088 |
| 13 | 0.304 | -0.033 | 1.808 | 0.267 | -0.009 | -0.059 |
| 14 | 0.016 | -0.076 | 1.768 | -0.021 | -0.051 | -0.042 |
| 15 | 0.134 | -0.204 | 1.746 | 0.099 | -0.182 | -0.054 |
| 16 | 0.156 | 0.157 | 0.556 | 0.141 | 0.163 | -0.038 |
| 17 | 0.120 | 0.028 | 0.208 | 0.109 | 0.043 | -0.189 |
| Maximum error | 0.304 | 0.355 | 1.998 | 0.267 | 0.293 | 0.189 |
| Minimum error | 0.016 | 0.022 | 0.103 | 0.001 | 0.009 | 0.005 |
| Mean error | 0.140 | 0.193 | 1.341 | 0.118 | 0.163 | 0.084 |
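The summary rows of the checkpoint table can be checked against the per-checkpoint values. The following is a minimal sketch, not the authors' code: it takes the third error column of each group (before and after the LiDAR constraint) and assumes that the maximum and minimum rows are taken over absolute values and that "mean error" denotes the root mean square of the signed errors. That interpretation is an assumption, not something the table states; the function name `summarize` is hypothetical.

```python
import math

# Third error column of each group, copied from the checkpoint table (checkpoints 1-17).
before = [1.765, 1.998, 1.670, -1.090, 0.861, 0.230, 0.103, 0.804, 0.983,
          1.037, 1.736, 1.788, 1.808, 1.768, 1.746, 0.556, 0.208]
after = [-0.170, -0.007, -0.053, -0.059, 0.072, 0.008, -0.100, -0.022, 0.030,
         -0.005, -0.123, -0.088, -0.059, -0.042, -0.054, -0.038, -0.189]


def summarize(errors):
    """Return (max |e|, min |e|, root mean square of e) for signed errors."""
    magnitudes = [abs(e) for e in errors]
    # Assumption: "mean error" in the table is the root mean square.
    rms = math.sqrt(sum(e * e for e in errors) / len(errors))
    return max(magnitudes), min(magnitudes), rms


for label, column in (("before", before), ("after", after)):
    largest, smallest, rms = summarize(column)
    print(f"{label}: max={largest:.3f} min={smallest:.3f} rms={rms:.3f}")
```

Under these assumptions the sketch reproduces the table's summary entries for this column: 1.998, 0.103 and 1.341 before the constraint, and 0.189, 0.005 and 0.084 after it. A plain mean of absolute values would give about 1.185 for the "before" column, which does not match the reported 1.341.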

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zheng, L.; Li, Y.; Sun, M.; Ji, Z.; Yu, M.; Shu, Q. Non-Rigid Vehicle-Borne LiDAR-Assisted Aerotriangulation. Remote Sens. 2019, 11, 1188. https://doi.org/10.3390/rs11101188