Suppressing False Alarm in VideoSAR via Gradient-Weighted Edge Information

Abstract

1. Introduction

2. Difference-Based Shadow Detection Algorithm

- Background Difference: A difference-based algorithm that extracts motion regions by thresholding the difference between the current frame and a background template. The background template can be modeled in many ways, such as a per-pixel median, a per-pixel mean, or a Gaussian mixture model [12]. Background difference generally performs well when the image sequence has a stationary, smooth background and clear, large targets.

- Inter-Frame Difference: A difference-based algorithm that takes the difference between the current frame and an adjacent frame. It rests on the assumption that adjacent frames differ only slightly except where objects are moving. For slow-moving objects, the difference can instead be taken between two frames separated by a longer interval, or among more than two frames.
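The two difference schemes above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: it assumes a per-pixel median as the background template, an absolute difference, and a fixed scalar threshold. The function names, the `interval` parameter, and the synthetic test sequence (a dark two-by-two patch drifting over a bright background) are all hypothetical.

```python
import numpy as np


def median_background(frames):
    """Per-pixel median over a frame stack of shape (T, H, W).

    Assumption: the median is only one of the background models
    mentioned in the text (mean and Gaussian mixture are others).
    """
    return np.median(frames, axis=0)


def background_difference(frame, background, threshold):
    """Boolean mask of pixels whose absolute difference from the
    background template exceeds `threshold`."""
    diff = np.abs(frame.astype(np.float64) - background.astype(np.float64))
    return diff > threshold


def inter_frame_difference(frames, index, interval=1, threshold=0.0):
    """Boolean mask from differencing frame `index` against the frame
    `interval` steps earlier. A larger interval helps with slow movers."""
    if index - interval < 0:
        raise ValueError("index - interval must be a valid frame index")
    current = frames[index].astype(np.float64)
    earlier = frames[index - interval].astype(np.float64)
    return np.abs(current - earlier) > threshold


def make_demo_frames(num_frames=5, size=8):
    """Hypothetical synthetic sequence: bright background (100) with a
    dark 2x2 patch (10) moving one pixel to the right per frame."""
    frames = np.full((num_frames, size, size), 100.0)
    for t in range(num_frames):
        frames[t, 2:4, t:t + 2] = 10.0
    return frames


if __name__ == "__main__":
    frames = make_demo_frames()
    background = median_background(frames)
    print(background_difference(frames[2], background, 50.0).sum())
    print(inter_frame_difference(frames, 2, interval=1, threshold=50.0).sum())
    print(inter_frame_difference(frames, 2, interval=2, threshold=50.0).sum())
```

In this toy sequence the patch overlaps itself between adjacent frames, so a one-frame interval flags only its leading and trailing columns, while a two-frame interval flags both the old and the new position in full. That is the motivation the text gives for using a longer interval with slow-moving objects.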

3. Analysis of Gradient Difference between False Alarm and Real Target in Edge Region

3.1. Shadow Region Analysis

3.2. Gradient Distinction between False Alarm and Real Target in Edge Region

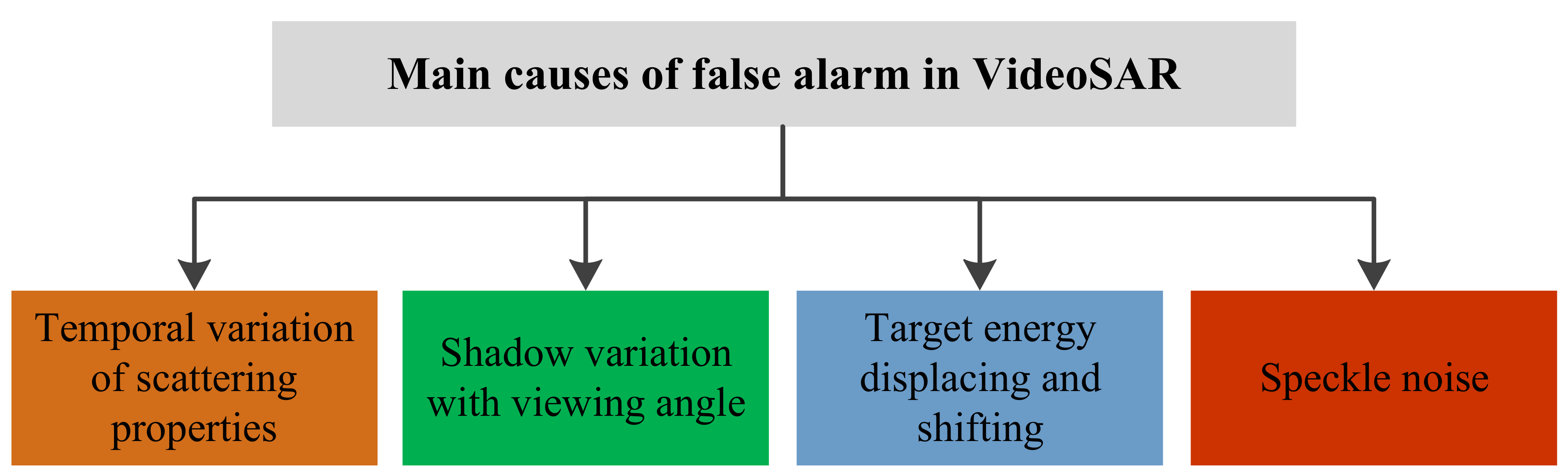

- FA 1: False alarm introduced by variation of the scattering characteristics. Because these characteristics change slowly over the sequence, the VideoSAR image sequence inevitably jitters, which causes false alarms.

- FA 2: False alarm introduced by the change of the viewing angle. The shadow of a stationary target (e.g., trees alongside the road) also changes with the viewing angle, producing additional false alarms.

- FA 3: False alarm introduced by defocused energy returned from the target, which appears as bright spots (or lines) shifting across the video.

4. False Alarm Reduction Method via Gradient-Weighted Edge Information

5. Experiments and Analysis

6. Conclusions

Funding

Acknowledgments

Conflicts of Interest

References

- Fan, Q.; Chen, F.; Cheng, M.; Lou, S.; Xiao, R.; Zhang, B.; Wang, C.; Li, J. Ship Detection Using a Fully Convolutional Network with Compact Polarimetric SAR Images. Remote Sens. 2019, 11, 2171.

- Xu, L.; Zhang, H.; Wang, C. Compact polarimetric SAR ship detection with m-δ decomposition using visual attention model. Remote Sens. 2016, 8, 751.

- Xiang, D.; Wang, W.; Tang, T.; Su, Y. Multiple-component polarimetric decomposition with new volume scattering models for PolSAR urban areas. IET Radar Sonar Navig. 2017, 11, 410–419.

- William, H.D.; Lloyd, B.D.; Ana, M. A Velocity Independent Continuous Tracking Radar Concept; Sandia National Laboratory (SNL-NM): Albuquerque, NM, USA, 2011.

- Jahangir, M. Moving target detection for synthetic aperture radar via shadow detection. In Proceedings of the 2007 IET International Conference on Radar System, Edinburgh, UK, 15–18 October 2007; pp. 16–18.

- Zhang, Y.; Mao, X.; Yan, H.; Zhu, D.; Hu, X. A novel approach to moving targets shadow detection in VideoSAR imagery sequence. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS 2017), Fort Worth, TX, USA, 23–28 July 2017; pp. 606–609.

- Jahangir, M.; Blacknell, D.; Moate, C.P.; Hill, R.D. Extracting information from shadows in SAR imagery. In Proceedings of the 2007 IEEE International Conference on Machine Vision (ICMV 2007), Islamabad, Pakistan, 28–29 December 2007; pp. 107–112.

- Wang, H.; Chen, Z.; Zheng, S. Preliminary research of low-RCS moving target detection based on Ka-band video SAR. IEEE Geosci. Remote Sens. Lett. 2017, 14, 811–815.

- Yuan, Y.; He, G.; Wang, G. A background subtraction and frame subtraction combined method for moving vehicle detection in satellite video data. J. Univ. Chin. Acad. Sci. 2018, 35, 50–58.

- Xu, H.; Yang, Z.; Chen, G. A ground moving target detection approach based on shadow feature with multichannel high-resolution synthetic aperture radar. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1572–1576.

- Xu, H.; Yang, Z.; Tian, M. An Extended Moving Target Detection Approach for High-Resolution Multichannel SAR-GMTI Systems Based on Enhanced Shadow-Aided Decision. IEEE Trans. Geosci. Remote Sens. 2018, 56, 715–729.

- Culibrk, D.; Marques, O.; Socek, D. Neural network approach to background modeling for video object segmentation. IEEE Trans. Neural Netw. 2007, 18, 1614–1627.

- Song, X.; Yu, W. Processing video-SAR data with the fast backprojection method. IEEE Trans. Aerosp. Electron. Syst. 2016, 52, 2838–2848.

- Liu, B.; Zhang, X.; Tang, K. Spaceborne Video-SAR moving target surveillance system. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS 2016), Beijing, China, 10–15 July 2016; pp. 2348–2351.

- Soumekh, M. Synthetic Aperture Radar Signal Processing; Wiley: New York, NY, USA, 1999.

- Vincent, O.R.; Folorunso, O. A descriptive algorithm for sobel image edge detection. In Proceedings of the 2009 Informing Science & IT Education Conference (InSITE 2009), Macon, GA, USA, 12–15 June 2009.

- Zimmerman, J.B.; Pizer, S.M.; Staab, E.V. An evaluation of the effectiveness of adaptive histogram equalization for contrast enhancement. IEEE Trans. Med. Imaging 1988, 7, 304–312.

- Attar, A.; Rad, R.M.; Atani, R.E. A survey of image spamming and filtering techniques. Artif. Intell. Rev. 2013, 40, 71–105.

| Frame Number | False Alarm Amount (Median) | False Alarm Amount (Unweighted) | False Alarm Amount (Gradient) | Detection Rate (Median) | Detection Rate (Unweighted) | Detection Rate (Gradient) |
|---|---|---|---|---|---|---|
| 10 | 1 | 1 | 1 | 0.57 | 0.57 | 0.57 |
| 70 | 2 | 2 | 1 | 0.86 | 0.86 | 0.71 |
| 130 | 1 | 1 | 0 | 1 | 1 | 1 |
| 190 | 1 | 1 | 0 | 0.5 | 1 | 1 |
| 250 | 0 | 0 | 0 | 0.5 | 0.5 | 0.5 |
| 310 | 2 | 2 | 1 | 1 | 1 | 1 |
| 370 | 1 | 1 | 1 | 0.75 | 0.75 | 0.75 |
| 430 | 4 | 3 | 2 | 0.75 | 0.75 | 0.75 |
| 500 | 1 | 0 | 0 | 0.83 | 0.83 | 0.83 |
| 570 | 4 | 5 | 2 | 0.67 | 0.67 | 0.67 |
| 630 | 3 | 1 | 0 | 1 | 1 | 0.8 |
| 690 | 3 | 1 | 0 | 1 | 1 | 1 |
| 750 | 5 | 4 | 2 | 0.5 | 0.5 | 0.5 |
| 810 | 6 | 6 | 3 | 0.8 | 0.8 | 0.8 |
| 870 | 3 | 2 | 1 | 0.67 | 0.67 | 0.67 |
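To compare the three columns across the sampled frames, the rows listed above can be tallied with a short script. The sketch below only re-enters the tabulated values and aggregates them. The names `ROWS`, `METHODS`, `total_false_alarms`, and `mean_detection_rate` are hypothetical, and summing over these fifteen sampled frames is an illustrative choice rather than a metric taken from the paper.

```python
# Each entry: (frame number, false alarms per method, detection rate per method),
# with methods ordered as in the table: Median, Unweighted, Gradient.
METHODS = ("Median", "Unweighted", "Gradient")

ROWS = [
    (10, (1, 1, 1), (0.57, 0.57, 0.57)),
    (70, (2, 2, 1), (0.86, 0.86, 0.71)),
    (130, (1, 1, 0), (1.0, 1.0, 1.0)),
    (190, (1, 1, 0), (0.5, 1.0, 1.0)),
    (250, (0, 0, 0), (0.5, 0.5, 0.5)),
    (310, (2, 2, 1), (1.0, 1.0, 1.0)),
    (370, (1, 1, 1), (0.75, 0.75, 0.75)),
    (430, (4, 3, 2), (0.75, 0.75, 0.75)),
    (500, (1, 0, 0), (0.83, 0.83, 0.83)),
    (570, (4, 5, 2), (0.67, 0.67, 0.67)),
    (630, (3, 1, 0), (1.0, 1.0, 0.8)),
    (690, (3, 1, 0), (1.0, 1.0, 1.0)),
    (750, (5, 4, 2), (0.5, 0.5, 0.5)),
    (810, (6, 6, 3), (0.8, 0.8, 0.8)),
    (870, (3, 2, 1), (0.67, 0.67, 0.67)),
]


def total_false_alarms(rows):
    """Sum the false alarm counts per method over all listed frames."""
    return {
        method: sum(row[1][i] for row in rows)
        for i, method in enumerate(METHODS)
    }


def mean_detection_rate(rows):
    """Average the detection rate per method over all listed frames."""
    return {
        method: sum(row[2][i] for row in rows) / len(rows)
        for i, method in enumerate(METHODS)
    }


if __name__ == "__main__":
    print(total_false_alarms(ROWS))
    print(mean_detection_rate(ROWS))
```

Over these sampled frames the false alarm totals are 37 (Median), 30 (Unweighted), and 14 (Gradient).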

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, Z.; Yu, A.; Dong, Z.; He, Z.; Yi, T. Suppressing False Alarm in VideoSAR via Gradient-Weighted Edge Information. Remote Sens. 2019, 11, 2677. https://doi.org/10.3390/rs11222677
