High-Resolution Optical Remote Sensing Image Registration via Reweighted Random Walk Based Hyper-Graph Matching

Abstract

1. Introduction

- (1) Improving the robustness and success rate of image matching without incurring an excessive computational cost. Image matching is an ill-posed problem, and area-based matching (ABM), feature-based matching (FBM), and graph matching methods each have their pros and cons. Currently, most graph matching methods have a high computational cost and require a large amount of memory, and many of them are unsuitable for remote sensing image registration, which needs to match a large number of feature points. This paper describes an image matching framework that integrates ABM, FBM, and graph matching to improve matching robustness and success rate without excessive computational cost;

- (2) Simultaneously utilizing high-order structural information and first-order intensity similarity in the matching process in an efficient way. Taking the construction of the third-order similarity tensor as an example, most graph matching algorithms randomly sample a certain number of triangles for each point in the reference image, and all possible triangles are then selected. In this paper, the candidates for each feature point are first searched by the ABM method, and the candidate relationships of the feature points are then used to build the hyper-edge tensor, which can significantly improve the sparseness of the association graph and the computational efficiency.
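The candidate-driven construction described in contribution (2) can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the `candidates` structure (a list of ABM candidate indices per reference point), the angle-based triangle descriptor, and the Gaussian kernel width `sigma` are all assumptions chosen for illustration. The point it shows is that a hyper-edge is only created when all three assignments come from the candidate lists, so the tensor stays sparse.

```python
import itertools
import numpy as np


def triangle_angles(tri):
    """Interior angles (radians) of a triangle given as a 3x2 array of distinct points."""
    angles = []
    for a in range(3):
        u = tri[(a + 1) % 3] - tri[a]
        v = tri[(a + 2) % 3] - tri[a]
        cos_a = np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v))
        angles.append(np.arccos(np.clip(cos_a, -1.0, 1.0)))
    return np.array(angles)


def build_sparse_hyperedges(ref_pts, sen_pts, candidates, triangles, sigma=0.5):
    """Build a sparse third-order similarity tensor as a dictionary.

    candidates[i] lists the sensed-image point indices proposed for
    reference point i (hypothetically, the output of the ABM stage).
    Keys are triples of assignments ((i, a), (j, b), (k, c)).
    """
    ref_pts = np.asarray(ref_pts, dtype=float)
    sen_pts = np.asarray(sen_pts, dtype=float)
    tensor = {}
    for i, j, k in triangles:
        f_ref = triangle_angles(ref_pts[[i, j, k]])
        # Only combinations drawn from the candidate lists are enumerated.
        for a, b, c in itertools.product(candidates[i], candidates[j], candidates[k]):
            if len({a, b, c}) < 3:
                continue  # enforce one-to-one assignments within a hyper-edge
            f_sen = triangle_angles(sen_pts[[a, b, c]])
            diff = f_ref - f_sen
            # Assumed similarity: Gaussian kernel on the angle difference.
            tensor[((i, a), (j, b), (k, c))] = float(np.exp(-np.dot(diff, diff) / sigma ** 2))
    return tensor


# Toy data: the sensed points are the reference points scaled by 2 and shifted.
ref = [[0, 0], [4, 0], [0, 3], [5, 5]]
sen = [[10, 20], [18, 20], [10, 26], [20, 30]]
cands = [[0, 3], [1, 0], [2, 1], [3]]  # two candidates for each of the first three points
T = build_sparse_hyperedges(ref, sen, cands, triangles=[(0, 1, 2)])
best = max(T, key=T.get)
print(len(T), best)
```

With two candidates per point, a triangle contributes at most 2 × 2 × 2 entries instead of one entry per possible triangle in the sensed image, which is the source of the sparsity gain the text refers to.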

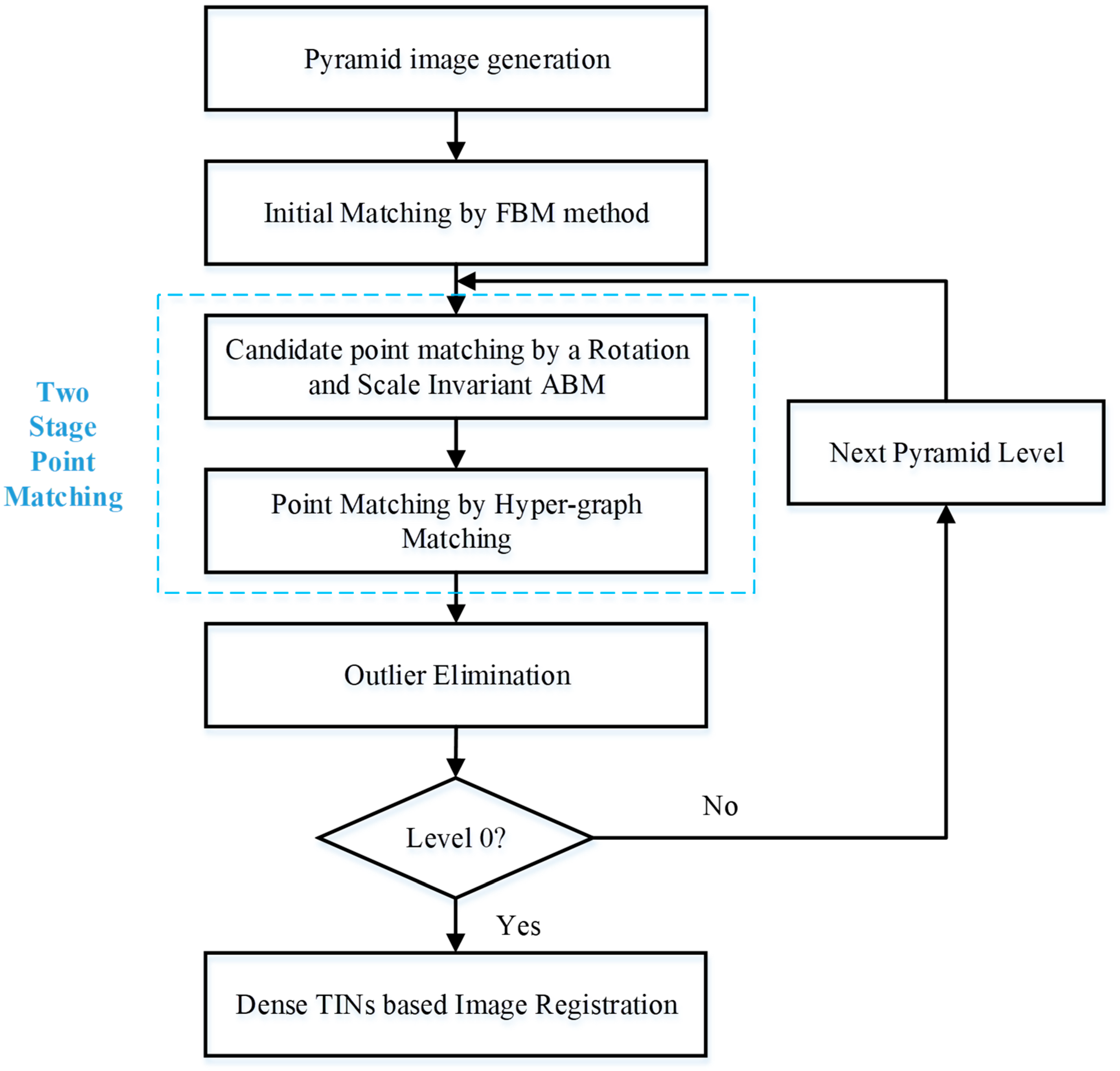

2. Methodology

2.1. Matching Feature and Process

2.1.1. Matching Feature

2.1.2. Matching Process

2.2. Initial Matching by FBM Method

2.3. Two-Stage Point Matching

2.3.1. Candidate Point Matching by a Rotation and Scale Invariant ABM

2.3.2. Point Matching by Hyper-Graph Matching

2.4. Outlier Elimination and Image Resampling

- (1) Adopt a kd-tree to store the image coordinates of the matching points.

- (2) Traverse and judge each matching point. For the point currently being judged, its nearest neighboring points are collected using the K-NN strategy on the basis of image coordinate distance, and a quadratic polynomial is estimated from them. Because a quadratic polynomial is used, we recommend using more than 10 nearest neighbors. The estimated quadratic polynomial is used to calculate the coordinate residual of the judged point. When the coordinate residual is greater than twice the RMSE, the point is regarded as an outlier and flagged.

- (3) After all matching points have been traversed, return to step (1) and rebuild the kd-tree using the matching points that were not labeled as outliers.

- (4) Iterate the above process until no matching point is labeled as an outlier.
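The four steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: the RMSE is taken to be the root-mean-square residual of the local quadratic fit over the neighbors, the floor `min_rmse` (in pixels) is added here so that a near-perfect local fit does not reject every point, and the names `eliminate_outliers`, `quad_design`, `k`, and `max_iter` are hypothetical. Coordinates are centered on the judged point and scaled before fitting, purely for numerical conditioning.

```python
import numpy as np
from scipy.spatial import cKDTree


def quad_design(d):
    """Design matrix of a full 2-D quadratic polynomial for offsets d (n x 2)."""
    x, y = d[:, 0], d[:, 1]
    return np.column_stack([np.ones_like(x), x, y, x * x, x * y, y * y])


def eliminate_outliers(ref_xy, sen_xy, k=12, min_rmse=0.5, max_iter=50):
    """Return indices of matches kept after iterative local outlier rejection."""
    ref_xy = np.asarray(ref_xy, dtype=float)
    sen_xy = np.asarray(sen_xy, dtype=float)
    keep = np.arange(len(ref_xy))
    for _ in range(max_iter):
        if len(keep) <= k:
            break  # too few points left to fit a local model
        pts, tgt = ref_xy[keep], sen_xy[keep]
        tree = cKDTree(pts)                       # step (1): kd-tree on image coordinates
        dists, nn = tree.query(pts, k=k + 1)      # k neighbors plus the point itself
        outlier = np.zeros(len(keep), dtype=bool)
        for i in range(len(keep)):                # step (2): judge every point
            idx = nn[i][nn[i] != i][:k]           # exclude the judged point from the fit
            scale = dists[i].max() or 1.0
            A = quad_design((pts[idx] - pts[i]) / scale)
            coef, *_ = np.linalg.lstsq(A, tgt[idx], rcond=None)
            fit_res = A @ coef - tgt[idx]
            rmse = max(np.sqrt(np.mean(np.sum(fit_res ** 2, axis=1))), min_rmse)
            # At zero offset the polynomial reduces to its constant term.
            residual = np.linalg.norm(coef[0] - tgt[i])
            outlier[i] = residual > 2.0 * rmse
        if not outlier.any():                     # step (4): stop when nothing is flagged
            break
        keep = keep[~outlier]                     # step (3): rebuild from the survivors
    return keep


# Synthetic check: a smooth quadratic mapping with two gross errors injected.
g = np.arange(13) * 100.0
X, Y = np.meshgrid(g, g)
ref = np.column_stack([X.ravel(), Y.ravel()])
sen = np.column_stack([ref[:, 0] + 5 + 1e-5 * ref[:, 0] * ref[:, 1],
                       ref[:, 1] - 3 + 2e-5 * ref[:, 0] ** 2])
sen[[56, 112]] += [40.0, -30.0]
kept = eliminate_outliers(ref, sen)
print(len(ref) - len(kept), "matches rejected")
```

The default `k=12` follows the recommendation of more than 10 neighbors; a quadratic polynomial in two variables has six coefficients per coordinate, so a neighborhood of this size leaves some redundancy for estimating the residual spread.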

3. Experiments and Analysis

3.1. Description of Test Data

3.2. Matching Results and Analysis

3.3. Comparison with Other Methods

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| No. | Platform | Acquisition Time | Land Type | Image Size (Pixels) | Pixel Size (m/Pixel) |
|---|---|---|---|---|---|
| 1 | WorldView-1 | 2009 | Mountain | 35,180 × 11,028 | 0.5 |
| 2 | WorldView-1 | 2010 | Suburb | 35,154 × 13,045 | 0.5 |
| 3 | GeoEye | 2013 | Urban | 27,552 × 25,132 | 0.5 |
| 4 | SPOT-5 | 2008 | Flat | 12,000 × 12,000 | 5.0 |
| 5 | GF-1 | 2017 | Flat | 2000 × 2000 | 16 |
| 6 | WorldView-3 | 2016 | Urban | 4000 × 4000 | 0.5 |

| Dataset | Number of Checkpoints | RMS x (Pixels) | RMS y (Pixels) |
|---|---|---|---|
| 1 | 60 | 0.41 | 0.63 |
| 2 | 60 | 0.63 | 0.58 |
| 3 | 85 | 0.42 | 0.22 |
| 4 | 85 | 0.41 | 0.33 |
| 5 | 100 | 0.36 | 0.27 |
| 6 | 100 | 0.52 | 0.75 |

| Image Pair | Indicator | SURF | AKAZE | ORB | BRISK | FAST | Our Method |
|---|---|---|---|---|---|---|---|
| 1 | C | 162 | 52 | 246 | 131 | 63 | 1428 |
| 1 | CM | 53 | 21 | 84 | 59 | 51 | 1108 |
| 1 | Recall | 32.72% | 40.38% | 34.15% | 45.04% | 80.95% | 77.59% |
| 1 | Precision | 4.35% | 9.06% | 4.53% | 7.81% | 0.77% | 31.21% |
| 1 | Time (s) | 0.54 | 1.57 | 3.73 | 3.30 | 1.02 | 22.64 |
| 2 | C | 295 | 81 | 223 | 60 | 119 | 2221 |
| 2 | CM | 99 | 59 | 90 | 29 | 76 | 1246 |
| 2 | Recall | 33.56% | 72.84% | 40.36% | 48.33% | 63.87% | 56.10% |
| 2 | Precision | 10.07% | 15.76% | 6.45% | 8.17% | 3.20% | 46.90% |
| 2 | Time (s) | 0.47 | 1.31 | 2.83 | 1.70 | 0.50 | 38.33 |
| 3 | C | 116 | 59 | 96 | 81 | 16 | 1234 |
| 3 | CM | 0 | 6 | 24 | 31 | 11 | 474 |
| 3 | Recall | 0.00% | 10.17% | 25.00% | 38.27% | 68.75% | 38.41% |
| 3 | Precision | 0.00% | 3.20% | 1.50% | 1.02% | 0.12% | 28.34% |
| 3 | Time (s) | 0.87 | 1.97 | 4.89 | 0.51 | 1.98 | 20.58 |
| 4 | C | 99 | 78 | 128 | 108 | 23 | 2546 |
| 4 | CM | 8 | 38 | 31 | 37 | 17 | 1432 |
| 4 | Recall | 8.08% | 48.72% | 24.22% | 34.26% | 73.91% | 56.25% |
| 4 | Precision | 1.78% | 2.74% | 1.97% | 1.18% | 0.17% | 68.00% |
| 4 | Time (s) | 0.67 | 1.55 | 4.06 | 0.98 | 1.54 | 47.64 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wu, Y.; Di, L.; Ming, Y.; Lv, H.; Tan, H. High-Resolution Optical Remote Sensing Image Registration via Reweighted Random Walk Based Hyper-Graph Matching. Remote Sens. 2019, 11, 2841. https://doi.org/10.3390/rs11232841