Color Calibration of Proximal Sensing RGB Images of Oilseed Rape Canopy via Deep Learning Combined with K-Means Algorithm

Abstract

1. Introduction

2. Materials and Methods

2.1. Image Acquisition and Camera Parameters

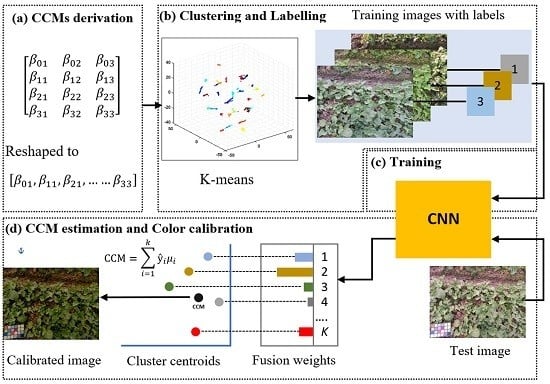

2.2. Overview of the Proposed Method

2.2.1. Color Calibration Matrices Derivation

2.2.2. Clustering and Image Labeling

2.2.3. Network Architecture and Training Strategy

2.2.4. The Network Testing and Image Color Calibration

2.3. Performance Evaluation of the Proposed Framework

3. Results and Discussion

3.1. The CCMs Derivation

3.2. Performance of the K-Means Clustering

3.3. Performance Evaluation of the CNN Classification

3.4. Performance of Image Color Calibration

3.5. Comparison of Different Color Calibration Methods

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Training No. of Images | Validation No. of Images | Training Time (min) | Classification Time (s/Image) | Training Accuracy (%) | Validation Accuracy (%) |
|---|---|---|---|---|---|
| 2100 | 900 | 2345 | 0.12 | 98.00 | 98.53 |

| Method | Mean (µ) | Median | Trimean | Best-25% (µ) | Worst-25% (µ) | Time (s) |
|---|---|---|---|---|---|---|
| No calibration | 41.94 | 40.39 | 42.58 | 38.25 | 46.28 | N/A |
| Proposed method | 16.23 | 13.27 | 14.85 | 10.92 | 23.37 | 0.150 |
| GW | 35.09 | 33.72 | 34.18 | 27.93 | 42.98 | 0.132 |
| WP | 38.97 | 36.09 | 37.94 | 34.39 | 44.47 | 0.112 |
| PCA | 35.46 | 33.85 | 34.72 | 29.11 | 42.23 | 0.146 |
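The column headers in the table above (mean, median, trimean, best-25%, worst-25%) are summary statistics of a per-image error distribution. The following is a minimal sketch of how such statistics could be computed, under stated assumptions: the trimean is taken to be Tukey's trimean, (Q1 + 2·median + Q3)/4, and the best/worst-25% columns are taken to be the means of the lowest and highest quarter of the errors. The text here does not define these terms, so both readings are assumptions. The function name `summarize_errors` and the sample values are hypothetical and are not data from the study.

```python
import numpy as np


def summarize_errors(errors):
    """Summarize a 1-D collection of per-image errors.

    Assumed definitions (not confirmed by the source text):
      trimean  = (Q1 + 2 * median + Q3) / 4
      best25   = mean of the lowest 25% of errors
      worst25  = mean of the highest 25% of errors
    """
    e = np.sort(np.asarray(errors, dtype=float))
    n = e.size
    # Number of samples in one quarter, at least one.
    k = max(1, int(round(0.25 * n)))
    # Quartiles with NumPy's default linear interpolation.
    q1, med, q3 = np.percentile(e, [25, 50, 75])
    return {
        "mean": float(e.mean()),
        "median": float(med),
        "trimean": float((q1 + 2.0 * med + q3) / 4.0),
        "best25": float(e[:k].mean()),
        "worst25": float(e[-k:].mean()),
    }


# Hypothetical per-image errors, for illustration only.
example_errors = [10.0, 12.0, 14.0, 16.0, 18.0, 20.0, 22.0, 24.0]
stats = summarize_errors(example_errors)
for name, value in stats.items():
    print(f"{name}: {value:.2f}")
```

Reporting the best and worst quarters alongside the mean shows how a method behaves on easy and hard images, which a single average would hide.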

| Method | PCA | WP | GW | Proposed |
|---|---|---|---|---|
| Proposed | 1 | 1 | 1 | 0 |
| GW | 1 | 1 | 0 | −1 |
| WP | −1 | 0 | −1 | −1 |
| PCA | 0 | 1 | −1 | −1 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Abdalla, A.; Cen, H.; Abdel-Rahman, E.; Wan, L.; He, Y. Color Calibration of Proximal Sensing RGB Images of Oilseed Rape Canopy via Deep Learning Combined with K-Means Algorithm. Remote Sens. 2019, 11, 3001. https://doi.org/10.3390/rs11243001