Correlation Filter-Based Visual Tracking for UAV with Online Multi-Feature Learning

Abstract

1. Introduction

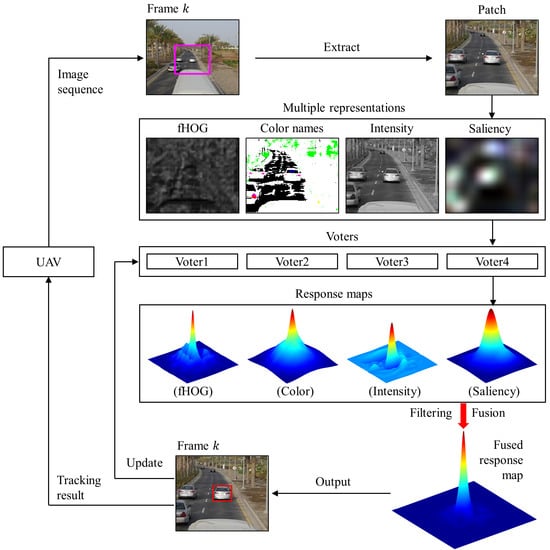

- Novel visual features for the background-aware correlation filter framework, i.e., intensity, color names (CN) [31], and saliency [32]: The original background-aware correlation filter framework uses HOG as its only feature for object tracking. A single feature can hardly capture comprehensive information about the object and the background, especially in aerial tracking.

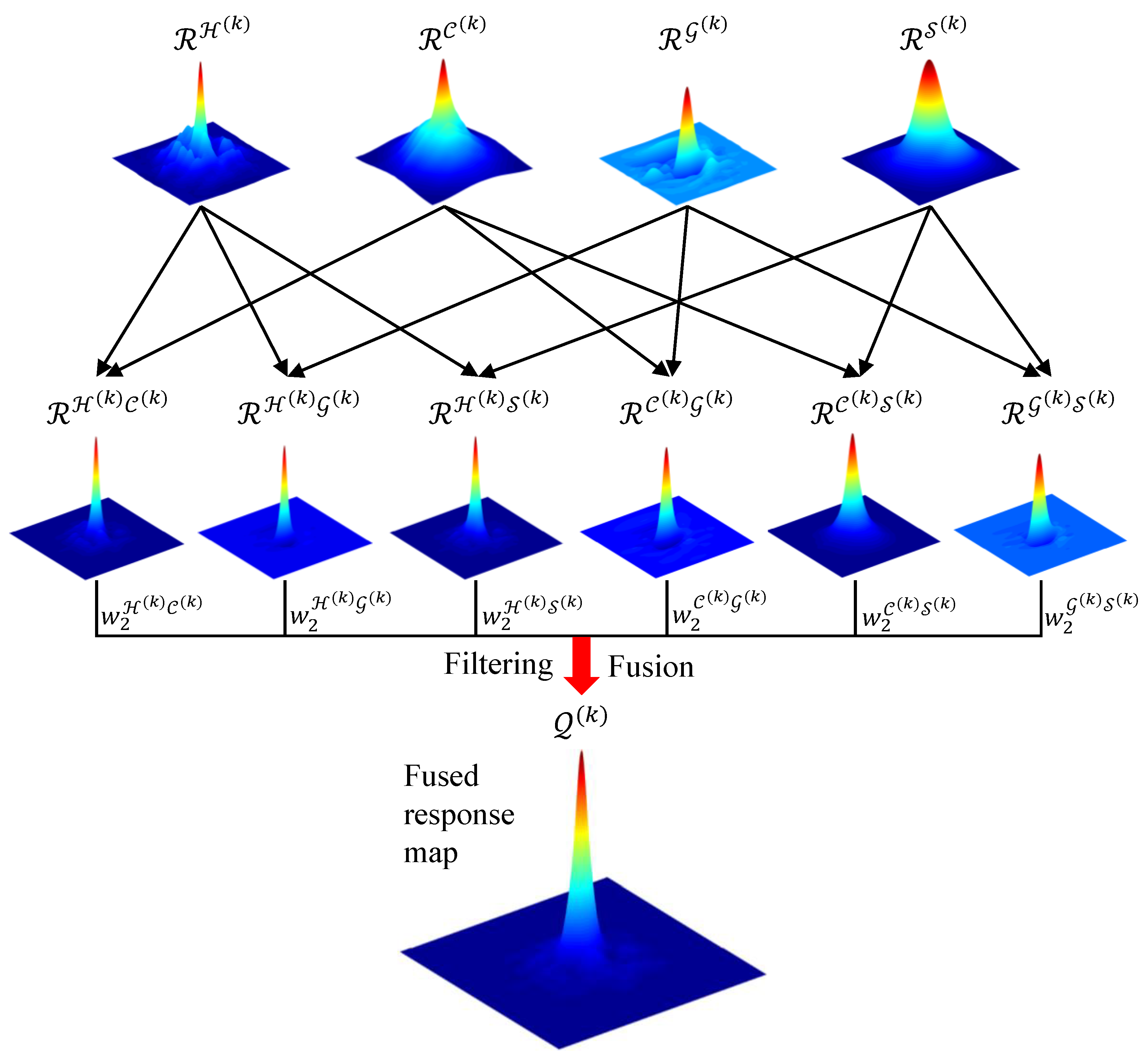

- A new strategy for fusing the response maps learned from multiple related features: A simple yet effective fusion strategy is designed to combine the different response maps. In challenging tracking sequences with various appearance changes, the response from a single feature may contain interference, which should be filtered out before fusion. To make this filtering more accurate and adaptive, the peak-to-sidelobe ratio (PSR) is used to measure the peak strength of each response and to weight the corresponding response map. In addition, the weighting approach gradually enhances the response level of the final fused map.

- Qualitative and quantitative experiments on the 123 challenging UAV image sequences of UAV123 [17] show that the presented approach, the OMFL tracker, achieves real-time performance on an Intel i7 processor (3.07 GHz) with 32 GB RAM and performs favorably against 13 state-of-the-art trackers in terms of robustness, accuracy, and efficiency.
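The PSR weighting described in the second contribution can be illustrated with a short NumPy sketch. It computes the PSR as the peak minus the sidelobe mean, divided by the sidelobe standard deviation, where the sidelobe excludes an 11 × 11 window around the peak (the window used by MOSSE [22]; assumed here). The maps are then fused with weights proportional to their PSR. The function names `psr` and `fuse_responses` and the proportional weighting rule are hypothetical; the exact OMFL fusion rule, including how it gradually enhances the response level, is not reproduced.

```python
import numpy as np


def psr(response: np.ndarray, exclude: int = 11) -> float:
    """Peak-to-sidelobe ratio of a 2D response map.

    The sidelobe is every pixel outside an `exclude` x `exclude` window
    around the peak (11 x 11 as in MOSSE; an assumption in this sketch).
    """
    r0, c0 = np.unravel_index(np.argmax(response), response.shape)
    half = exclude // 2
    mask = np.ones(response.shape, dtype=bool)
    mask[max(r0 - half, 0):r0 + half + 1, max(c0 - half, 0):c0 + half + 1] = False
    sidelobe = response[mask]
    peak = response[r0, c0]
    return float((peak - sidelobe.mean()) / (sidelobe.std() + 1e-12))


def fuse_responses(responses):
    """Hypothetical fusion: weight each map by its (non-negative) PSR and sum."""
    scores = np.array([max(psr(r), 0.0) for r in responses])
    weights = scores / (scores.sum() + 1e-12)
    fused = sum(w * r for w, r in zip(weights, responses))
    return fused, weights
```

A sharp, unimodal response gets a large PSR and dominates the fused map, while a flat or multi-peaked response (for example, one disturbed by background clutter) is down-weighted.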

2. Related Works

2.1. Tracking with Discriminative Method

2.2. Tracking with Correlation Filter

2.3. Tracking for Reducing Boundary Effect

3. Proposed Tracking Approach

3.1. Overview

3.2. Tracking with Background-Aware Correlation Filter

3.3. Object Representation with Multiple Features

3.3.1. Object Representation with fHOG

Algorithm 1: fHOG feature extraction

Input: image patch

1. Convert the input to a grayscale intensity image.
2. Calculate the gradient of each pixel to obtain a pixel-level feature map.
3. Aggregate the pixel-level feature map into a dense grid of rectangular cells to obtain a cell-based feature map.
4. Truncate and normalize the 27-dimensional histogram over nine contrast-insensitive and 18 contrast-sensitive orientations using four different normalization factors.
5. Sum each orientation channel over the different normalization factors.
6. For each normalization factor, sum the values of the nine contrast-insensitive orientations.

Output: fHOG feature
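The steps of Algorithm 1 can be sketched in NumPy as below. This is a simplified illustration, not the implementation used in the paper: it assumes luminance weights for the grayscale conversion, hard (non-interpolated) orientation binning, and edge padding at the borders, and it uses the 0.2 truncation threshold of Felzenszwalb et al. [33]. The default `cell=4` is only illustrative, and the scaling constants of the reference fHOG code are omitted. Each cell ends up with 18 + 9 + 4 = 31 channels.

```python
import numpy as np


def fhog(image: np.ndarray, cell: int = 4, clip: float = 0.2) -> np.ndarray:
    """Simplified fHOG sketch: returns an (H // cell, W // cell, 31) feature map."""
    img = np.asarray(image, dtype=np.float64)
    # Step 1: grayscale intensity image (luminance weights assumed).
    gray = img @ np.array([0.299, 0.587, 0.114]) if img.ndim == 3 else img
    # Step 2: pixel-level gradients, magnitude, and orientation in [0, 2*pi).
    gy, gx = np.gradient(gray)
    mag = np.hypot(gx, gy)
    ang = np.mod(np.arctan2(gy, gx), 2 * np.pi)
    sbin = np.minimum((ang * 18 / (2 * np.pi)).astype(int), 17)  # 18 sensitive bins
    # Step 3: accumulate magnitudes into per-cell histograms (hard binning).
    hc, wc = gray.shape[0] // cell, gray.shape[1] // cell
    rows, cols = np.meshgrid(np.arange(hc * cell) // cell,
                             np.arange(wc * cell) // cell, indexing="ij")
    hist = np.zeros((hc, wc, 18))
    np.add.at(hist, (rows, cols, sbin[:hc * cell, :wc * cell]),
              mag[:hc * cell, :wc * cell])
    insens = hist[..., :9] + hist[..., 9:]          # 9 contrast-insensitive bins
    feats = np.concatenate([hist, insens], axis=2)   # 27 channels per cell
    # Step 4: four normalization factors from the 2 x 2 cell blocks around each cell.
    energy = np.pad((insens ** 2).sum(axis=2), 1, mode="edge")
    center = energy[1:-1, 1:-1]
    out = np.zeros((hc, wc, 31))
    for k, (dy, dx) in enumerate([(-1, -1), (-1, 1), (1, -1), (1, 1)]):
        vert = energy[1 + dy:hc + 1 + dy, 1:-1]
        horz = energy[1:-1, 1 + dx:wc + 1 + dx]
        diag = energy[1 + dy:hc + 1 + dy, 1 + dx:wc + 1 + dx]
        norm = np.sqrt(center + vert + horz + diag + 1e-12)[..., None]
        normed = np.minimum(feats / norm, clip)          # normalize, then truncate
        out[..., :27] += normed                          # Step 5: sum over factors
        out[..., 27 + k] = normed[..., 18:].sum(axis=2)  # Step 6: 9 insensitive bins
    return out
```

Because every channel is divided by a block energy computed from the same gradients, the result is largely invariant to a global change of image contrast, which is one reason fHOG is robust to illumination variation.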

3.3.2. Object Representation with Color Names

3.3.3. Object Representation with Pixel Intensity

3.3.4. Object Representation with Saliency
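The saliency feature is attributed to the spectral residual approach of Hou and Zhang [32]. A minimal NumPy sketch of that method, assuming a single-channel input, is given below. The log amplitude spectrum minus its 3 × 3 local average gives the spectral residual. This residual is recombined with the original phase, inverse-transformed, squared, and smoothed with a Gaussian. The `sigma` default and the edge-padding choices are assumptions, the downsampling step of [32] is omitted, and how OMFL turns this map into a tracking feature is not shown.

```python
import numpy as np


def _box3(a: np.ndarray) -> np.ndarray:
    """3 x 3 mean filter with edge replication (the local average of [32])."""
    p = np.pad(a, 1, mode="edge")
    h, w = a.shape
    return sum(p[i:i + h, j:j + w] for i in range(3) for j in range(3)) / 9.0


def _gaussian_blur(a: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian smoothing with edge replication."""
    radius = max(int(3 * sigma), 1)
    x = np.arange(-radius, radius + 1)
    k = np.exp(-x ** 2 / (2.0 * sigma ** 2))
    k /= k.sum()

    def smooth(v: np.ndarray) -> np.ndarray:
        return np.convolve(np.pad(v, radius, mode="edge"), k, mode="valid")

    a = np.apply_along_axis(smooth, 0, a)     # smooth along columns
    return np.apply_along_axis(smooth, 1, a)  # smooth along rows


def spectral_residual_saliency(gray: np.ndarray, sigma: float = 2.5) -> np.ndarray:
    """Saliency map scaled to [0, 1], following the spectral residual idea."""
    spectrum = np.fft.fft2(np.asarray(gray, dtype=np.float64))
    log_amp = np.log(np.abs(spectrum) + 1e-12)  # log amplitude spectrum
    phase = np.angle(spectrum)                  # phase spectrum
    residual = log_amp - _box3(log_amp)         # spectral residual
    sal = np.abs(np.fft.ifft2(np.exp(residual + 1j * phase))) ** 2
    sal = _gaussian_blur(sal, sigma)
    return sal / (sal.max() + 1e-12)
```

The method keeps the phase and removes the smooth, "expected" part of the log amplitude spectrum, so regions that deviate from the image's statistical background stand out in the reconstruction.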

3.4. Fusion of Multi-Feature Learning

Algorithm 2: OMFL tracker

4. Evaluation of Tracking Performance

4.1. Evaluation Criteria

4.2. Overall Performance

4.2.1. Evaluation with Different Voters

4.2.2. Evaluation with Other State-Of-The-Art Trackers

4.3. Limitations of Presented Tracking Approach

4.3.1. Limitations in Attributes

4.3.2. Limitations in Speed

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Mueller, M.; Sharma, G.; Smith, N.; Ghanem, B. Persistent Aerial Tracking System for UAVs. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016; pp. 1562–1569.

2. Xue, X.; Li, Y.; Shen, Q. Unmanned Aerial Vehicle Object Tracking by Correlation Filter with Adaptive Appearance Model. Sensors 2018, 18, 2751.

3. Fu, C.; Carrio, A.; Olivares-Mendez, M.A.; Suarez-Fernandez, R.; Campoy, P. Robust Real-Time Vision-Based Aircraft Tracking from Unmanned Aerial Vehicles. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 5441–5446.

4. Martinez, C.; Sampedro, C.; Chauhan, A.; Campoy, P. Towards Autonomous Detection and Tracking of Electric Towers for Aerial Power Line Inspection. In Proceedings of the International Conference on Unmanned Aircraft Systems (ICUAS), Orlando, FL, USA, 27–30 May 2014; pp. 284–295.

5. Olivares-Mendez, M.A.; Fu, C.; Ludivig, P.; Bissyande, T.F.; Kannan, S.; Zurad, M.; Annaiyan, A.; Voos, H.; Campoy, P. Towards an Autonomous Vision-Based Unmanned Aerial System against Wildlife Poachers. Sensors 2015, 15, 31362–31391.

6. Lin, S.; Garratt, M.A.; Lambert, A.J. Monocular Vision-Based Real-Time Target Recognition and Tracking for Autonomously Landing an UAV in a Cluttered Shipboard Environment. Auton. Robots 2017, 41, 881–901.

7. Garimella, G.; Kobilarov, M. Towards Model-Predictive Control for Aerial Pick-and-Place. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 4692–4697.

8. Yin, Y.; Wang, X.; Xu, D.; Liu, F.; Wang, Y.; Wu, W. Robust Visual Detection–Learning–Tracking Framework for Autonomous Aerial Refueling of UAVs. IEEE Trans. Instrum. Meas. 2016, 65, 510–521.

9. Xu, W.; Zhong, S.; Yan, L.; Wu, F.; Zhang, W. Moving Object Detection in Aerial Infrared Images with Registration Accuracy Prediction and Feature Points Selection. Infrared Phys. Technol. 2018, 92, 318–326.

10. Opromolla, R.; Fasano, G.; Accardo, D. A Vision-Based Approach to UAV Detection and Tracking in Cooperative Applications. Sensors 2018, 18, 3391.

11. Cheng, H.; Lin, L.; Zheng, Z.; Guan, Y.; Liu, Z. An Autonomous Vision-Based Target Tracking System for Rotorcraft Unmanned Aerial Vehicles. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 1732–1738.

12. Chakrabarty, A.; Morris, R.; Bouyssounouse, X.; Hunt, R. Autonomous Indoor Object Tracking with the Parrot AR.Drone. In Proceedings of the International Conference on Unmanned Aircraft Systems (ICUAS), Arlington, VA, USA, 7–10 June 2016; pp. 25–30.

13. Qu, Z.; Lv, X.; Liu, J.; Jiang, L.; Liang, L.; Xie, W. Long-term Reliable Visual Tracking with UAVs. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; pp. 2000–2005.

14. Häger, G.; Bhat, G.; Danelljan, M.; Khan, F.S.; Felsberg, M.; Rudl, P.; Doherty, P. Combining Visual Tracking and Person Detection for Long Term Tracking on a UAV. In Advances in Visual Computing; Springer: New York, NY, USA, 2016; pp. 557–568.

15. Fu, C.; Duan, R.; Kircali, D.; Kayacan, E. Onboard Robust Visual Tracking for UAVs Using a Reliable Global-Local Object Model. Sensors 2016, 16, 1406.

16. Carrio, A.; Fu, C.; Collumeau, J.F.; Campoy, P. SIGS: Synthetic Imagery Generating Software for the Development and Evaluation of Vision-based Sense-And-Avoid Systems. J. Intell. Robot. Syst. 2016, 84, 559–574.

17. Mueller, M.; Smith, N.; Ghanem, B. A Benchmark and Simulator for UAV Tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 445–461.

18. Hare, S.; Golodetz, S.; Saffari, A.; Vineet, V.; Cheng, M.; Hicks, S.L.; Torr, P.H.S. Struck: Structured Output Tracking with Kernels. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 2096–2109.

19. Kalal, Z.; Mikolajczyk, K.; Matas, J. Tracking-Learning-Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 1409–1422.

20. Babenko, B.; Yang, M.H.; Belongie, S.J. Robust Object Tracking with Online Multiple Instance Learning. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 1619–1632.

21. Zhang, K.; Zhang, L.; Yang, M. Fast Compressive Tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 2002–2015.

22. Bolme, D.S.; Beveridge, J.R.; Draper, B.A.; Lui, Y.M. Visual Object Tracking Using Adaptive Correlation Filters. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 2544–2550.

23. Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J.P. Exploiting the Circulant Structure of Tracking-by-Detection with Kernels. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 702–715.

24. Danelljan, M.; Khan, F.S.; Felsberg, M.; van de Weijer, J. Adaptive Color Attributes for Real-Time Visual Tracking. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 1090–1097.

25. Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-Speed Tracking with Kernelized Correlation Filters. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 583–596.

26. Li, Y.; Zhu, J. A Scale Adaptive Kernel Correlation Filter Tracker with Feature Integration. In Proceedings of the ECCV Workshops, Zurich, Switzerland, 6–7 September 2014; pp. 254–265.

27. Danelljan, M.; Häger, G.; Khan, F.S.; Felsberg, M. Learning Spatially Regularized Correlation Filters for Visual Tracking. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 4310–4318.

28. Galoogahi, H.K.; Sim, T.; Lucey, S. Correlation Filters with Limited Boundaries. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 4630–4638.

29. Galoogahi, H.K.; Fagg, A.; Lucey, S. Learning Background-Aware Correlation Filters for Visual Tracking. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 1144–1152.

30. Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 886–893.

31. van de Weijer, J.; Schmid, C.; Verbeek, J.J.; Larlus, D. Learning Color Names for Real-World Applications. IEEE Trans. Image Process. 2009, 18, 1512–1523.

32. Hou, X.; Zhang, L. Saliency Detection: A Spectral Residual Approach. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

33. Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D.; Ramanan, D. Object Detection with Discriminatively Trained Part-Based Models. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1627–1645.

34. Boyd, S.; Parikh, N.; Chu, E.; Peleato, B.; Eckstein, J. Distributed Optimization and Statistical Learning via the Alternating Direction Method of Multipliers. Found. Trends Mach. Learn. 2011, 3, 1–122.

35. Berlin, B.; Kay, P. Basic Color Terms: Their Universality and Evolution; University of California Press: Berkeley, CA, USA, 1991.

36. Wu, Y.; Lim, J.; Yang, M.H. Object Tracking Benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1834–1848.

37. Wang, N.; Zhou, W.; Tian, Q.; Hong, R.; Wang, M.; Li, H. Multi-Cue Correlation Filters for Robust Visual Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 4844–4853.

38. Zhang, J.; Ma, S.; Sclaroff, S. MEEM: Robust Tracking via Multiple Experts Using Entropy Minimization. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 188–203.

39. Hong, Z.; Chen, Z.; Wang, C.; Mei, X.; Prokhorov, D.; Tao, D. MUlti-Store Tracker (MUSTer): A Cognitive Psychology Inspired Approach to Object Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 749–758.

40. Danelljan, M.; Häger, G.; Khan, F.S.; Felsberg, M. Accurate Scale Estimation for Robust Visual Tracking. In Proceedings of the British Machine Vision Conference, Bristol, UK, 9–13 September 2014; pp. 1–11.

41. Jia, X.; Lu, H.; Yang, M. Visual Tracking via Adaptive Structural Local Sparse Appearance Model. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 1822–1829.

42. Ross, D.A.; Lim, J.; Lin, R.S.; Yang, M.H. Incremental Learning for Robust Visual Tracking. Int. J. Comput. Vis. 2008, 77, 125–141.

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| Cell size | | Regularization parameter | |
| Number of scales | 5 | Penalty factor | 1 |
| Scale step | | | 10,000 |
| Number of iterations of ADMM | 2 | | 10 |
| Bandwidth of the 2D Gaussian function (object size) | | Online adaptation rate | |
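Two of the parameters above, the bandwidth of the 2D Gaussian function and the online adaptation rate, correspond to operations common to correlation filter trackers: building the desired Gaussian-shaped response and linearly interpolating the model from frame to frame. The sketch below assumes that common form, with the label peak circularly shifted to index (0, 0) and the update `(1 - rate) * model + rate * new`. The function names are hypothetical, and any values passed to them are illustrative rather than the settings used in the paper.

```python
import numpy as np


def gaussian_label(height: int, width: int, sigma: float) -> np.ndarray:
    """Desired correlation output: a 2D Gaussian with peak value 1.

    The peak is circularly shifted to index (0, 0), as is common when the
    filter is learned in the Fourier domain.
    """
    ys = np.arange(height) - height // 2
    xs = np.arange(width) - width // 2
    yy, xx = np.meshgrid(ys, xs, indexing="ij")
    g = np.exp(-(yy ** 2 + xx ** 2) / (2.0 * sigma ** 2))
    return np.roll(g, (-(height // 2), -(width // 2)), axis=(0, 1))


def update_model(model: np.ndarray, new: np.ndarray, rate: float) -> np.ndarray:
    """Linear-interpolation model update with online adaptation rate `rate`."""
    return (1.0 - rate) * model + rate * new
```

A larger bandwidth gives a wider target response that tolerates localization noise, while a larger adaptation rate lets the model follow appearance changes faster at the cost of drifting more easily after occlusion.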

Attribute abbreviations (UAV123 [17]): ARC, aspect ratio change; BC, background clutter; CM, camera motion; FM, fast motion; FOC, full occlusion; IV, illumination variation; LR, low resolution; OV, out-of-view; POC, partial occlusion; SV, scale variation; SOB, similar object; VC, viewpoint change.

| Tracker | ARC | BC | CM | FM | FOC | IV | LR | OV | POC | SV | SOB | VC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| MCCT | 49.3 | 46.9 | 54.4 | 36.1 | 42.1 | 47.7 | 45.5 | 49.3 | 54.2 | 54.7 | 62.7 | 48.4 |
| MEEM | 51.1 | 51.0 | 53.3 | 34.0 | 41.0 | 48.1 | 48.4 | 46.9 | 51.6 | 53.2 | 62.4 | 53.3 |
| SRDCF | 47.2 | 38.9 | 52.7 | 42.7 | 41.8 | 43.6 | 43.1 | 49.2 | 50.4 | 53.1 | 58.5 | 47.4 |
| BACF | 47.8 | 42.5 | 53.2 | 40.7 | 33.6 | 43.0 | 43.1 | 42.1 | 46.7 | 52.5 | 60.5 | 49.1 |
| MUSTER | 43.5 | 37.5 | 47.1 | 30.2 | 43.9 | 38.4 | 45.2 | 40.7 | 44.9 | 49.1 | 56.0 | 43.6 |
| Struck | 39.9 | 52.2 | 43.9 | 22.2 | 38.3 | 41.5 | 47.8 | 38.2 | 44.6 | 46.0 | 52.7 | 42.8 |
| SAMF | 39.0 | 27.4 | 37.8 | 33.2 | 37.9 | 33.9 | 32.2 | 40.9 | 41.9 | 44.1 | 50.3 | 35.8 |
| DSST | 36.1 | 22.2 | 34.0 | 27.7 | 31.3 | 33.3 | 34.5 | 35.6 | 38.4 | 42.4 | 50.9 | 35.8 |
| TLD | 34.9 | 28.5 | 35.4 | 22.6 | 28.3 | 23.3 | 44.4 | 28.1 | 34.5 | 39.1 | 48.0 | 35.1 |
| KCF | 30.3 | 22.3 | 30.5 | 21.7 | 28.1 | 26.9 | 30.5 | 30.9 | 34.4 | 37.3 | 45.3 | 31.6 |
| CSK | 29.3 | 22.7 | 30.6 | 24.8 | 29.2 | 27.2 | 30.9 | 30.6 | 32.8 | 37.5 | 43.2 | 29.4 |
| ASLA | 29.3 | 23.8 | 23.2 | 17.1 | 29.3 | 23.1 | 33.8 | 26.3 | 31.8 | 35.3 | 44.4 | 27.3 |
| IVT | 23.2 | 18.1 | 18.6 | 15.0 | 24.0 | 19.6 | 25.5 | 23.6 | 26.3 | 29.3 | 35.6 | 24.0 |
| OMFL | 57.9 | 48.4 | 62.4 | 54.2 | 39.7 | 54.1 | 50.8 | 55.2 | 57.0 | 61.1 | 66.4 | 59.9 |

| Tracker | ARC | BC | CM | FM | FOC | IV | LR | OV | POC | SV | SOB | VC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| MCCT | 35.7 | 30.5 | 40.7 | 26.0 | 23.6 | 34.2 | 25.7 | 36.5 | 37.6 | 39.6 | 45.1 | 36.1 |
| MEEM | 32.7 | 31.1 | 36.1 | 23.1 | 21.1 | 32.2 | 24.1 | 32.5 | 33.7 | 34.0 | 40.3 | 34.8 |
| SRDCF | 34.6 | 26.3 | 39.9 | 31.1 | 22.9 | 33.3 | 23.7 | 36.8 | 35.5 | 39.0 | 42.1 | 35.6 |
| BACF | 33.4 | 27.5 | 39.7 | 27.5 | 17.3 | 31.0 | 24.8 | 32.1 | 32.7 | 37.4 | 42.4 | 35.3 |
| MUSTER | 29.9 | 22.5 | 33.3 | 20.3 | 22.9 | 28.1 | 23.5 | 29.5 | 30.2 | 34.3 | 38.5 | 31.6 |
| Struck | 27.4 | 33.1 | 30.4 | 15.5 | 19.8 | 29.0 | 24.6 | 28.0 | 30.0 | 30.7 | 34.0 | 29.1 |
| SAMF | 27.4 | 16.0 | 27.1 | 22.0 | 19.7 | 23.8 | 16.6 | 28.9 | 28.2 | 30.6 | 34.8 | 26.4 |
| DSST | 23.5 | 13.7 | 22.5 | 15.7 | 15.6 | 21.0 | 17.1 | 24.5 | 24.6 | 26.2 | 31.5 | 23.1 |
| TLD | 23.9 | 16.3 | 25.2 | 14.8 | 13.5 | 16.0 | 24.2 | 20.2 | 21.9 | 26.8 | 30.1 | 25.1 |
| KCF | 20.2 | 12.5 | 20.9 | 14.5 | 13.5 | 18.3 | 14.7 | 22.2 | 22.3 | 23.8 | 27.9 | 21.0 |
| CSK | 20.5 | 13.4 | 21.4 | 15.1 | 15.0 | 18.9 | 14.9 | 22.7 | 22.0 | 24.7 | 28.5 | 20.3 |
| ASLA | 19.4 | 14.8 | 15.9 | 9.9 | 13.4 | 18.0 | 18.6 | 16.2 | 20.2 | 23.8 | 30.6 | 19.9 |
| IVT | 16.5 | 10.3 | 13.3 | 9.1 | 11.5 | 16.2 | 13.7 | 15.2 | 17.0 | 21.4 | 25.6 | 18.4 |
| OMFL | 38.9 | 31.2 | 44.9 | 33.9 | 20.8 | 35.6 | 27.8 | 39.5 | 38.3 | 42.2 | 46.6 | 41.0 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Fu, C.; Lin, F.; Li, Y.; Chen, G. Correlation Filter-Based Visual Tracking for UAV with Online Multi-Feature Learning. Remote Sens. 2019, 11, 549. https://doi.org/10.3390/rs11050549
