Monitoring of Snow Cover Ablation Using Very High Spatial Resolution Remote Sensing Datasets

Abstract

1. Introduction

2. Materials and Methods

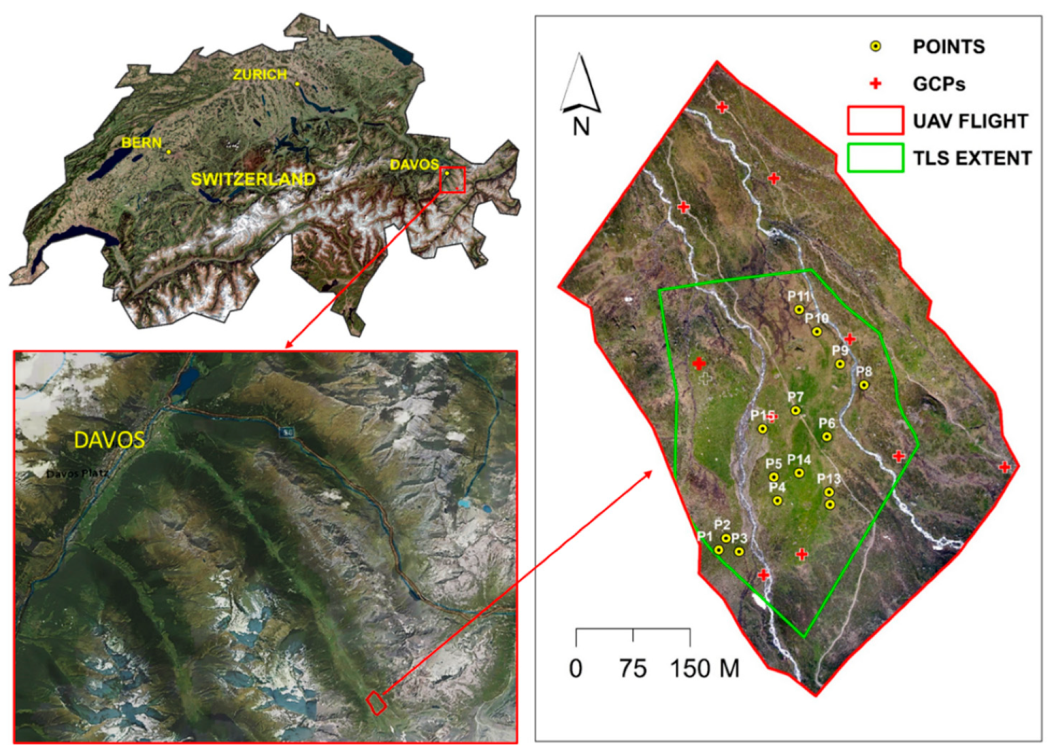

2.1. Study Area

2.2. UAS-Based Image Acquisition and Data Processing

2.3. Terrestrial Laser Scanning

2.4. Monitoring Snow Cover Ablation

3. Results and Discussion

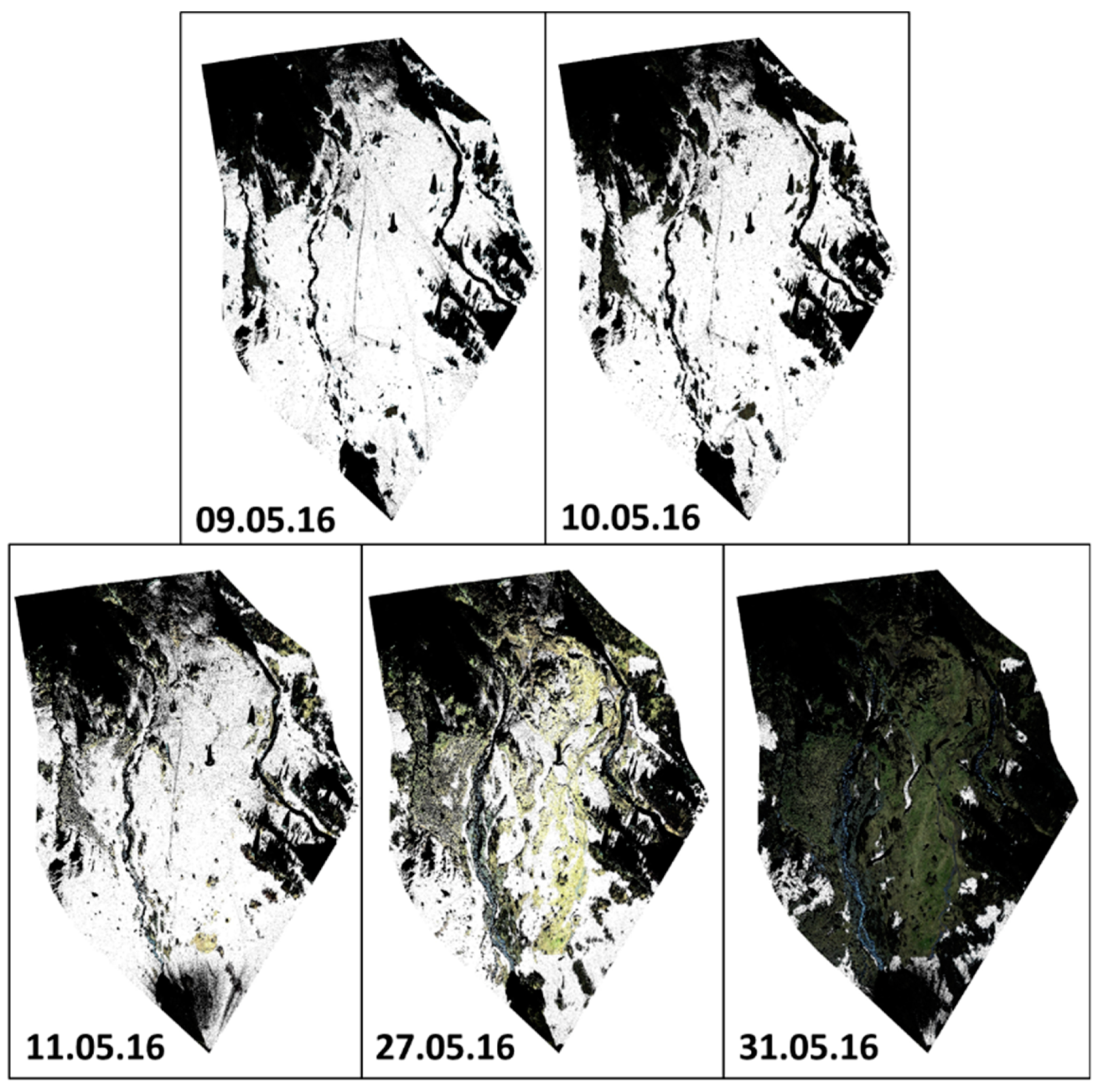

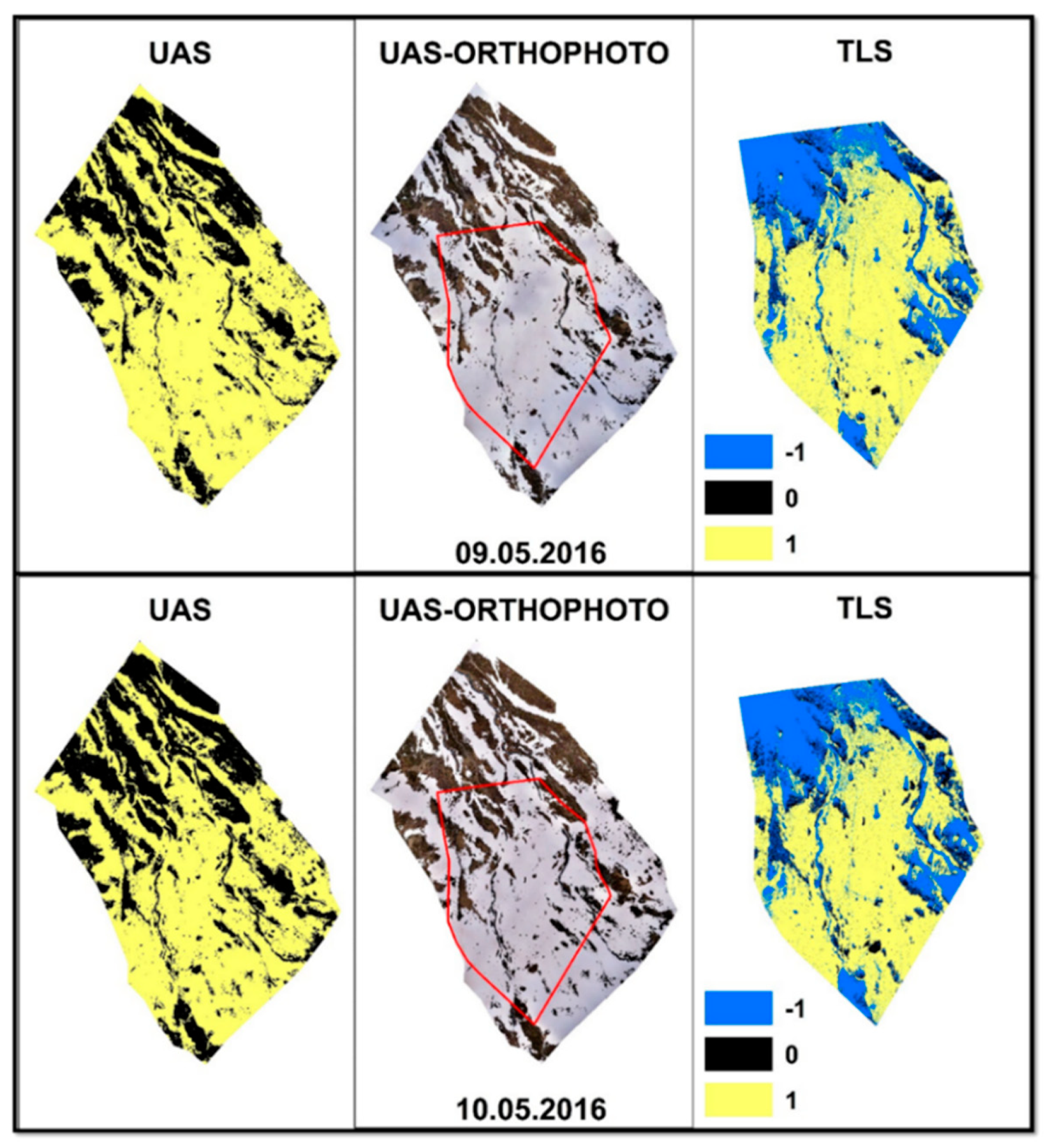

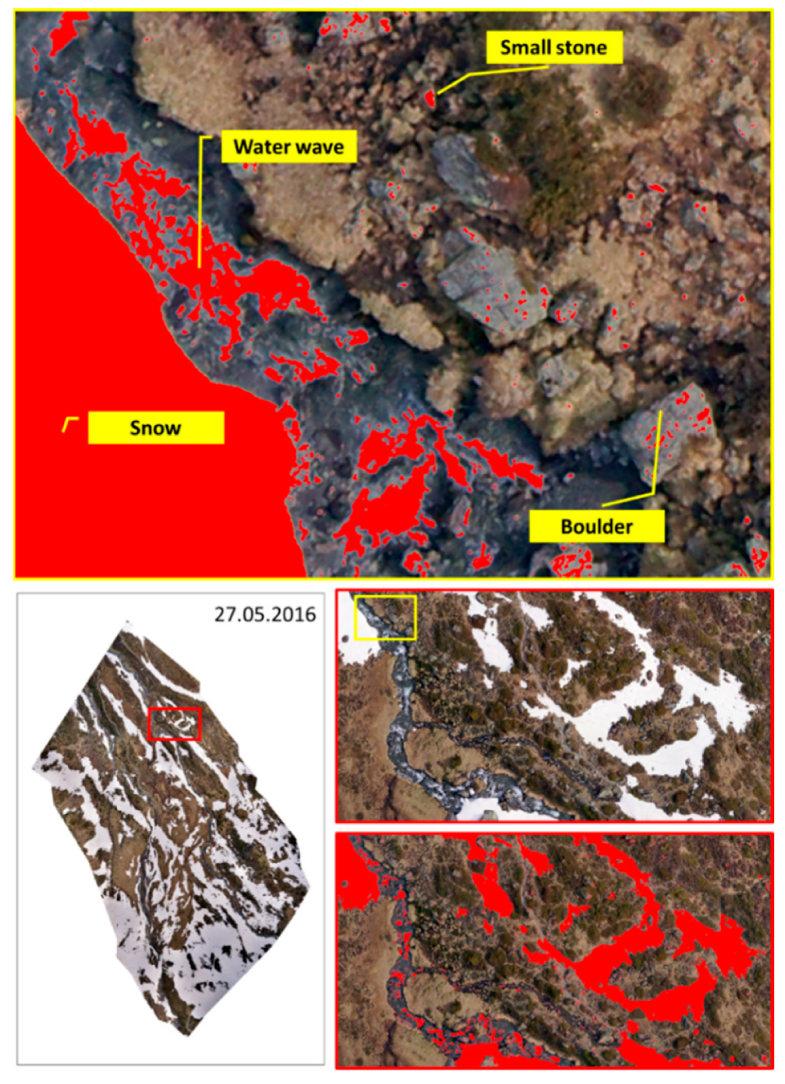

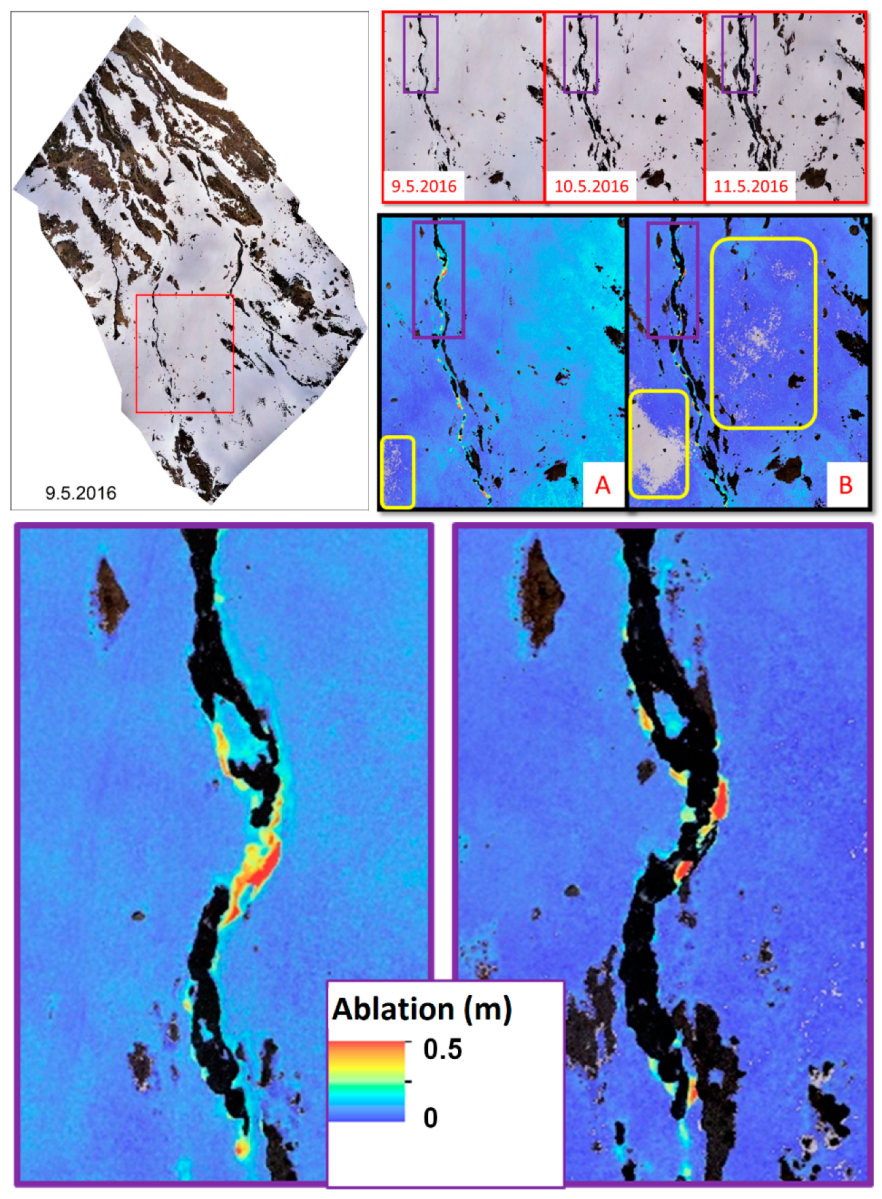

3.1. Representation of Snow-Covered Areas via UAS and TLS Orthophoto Measurements

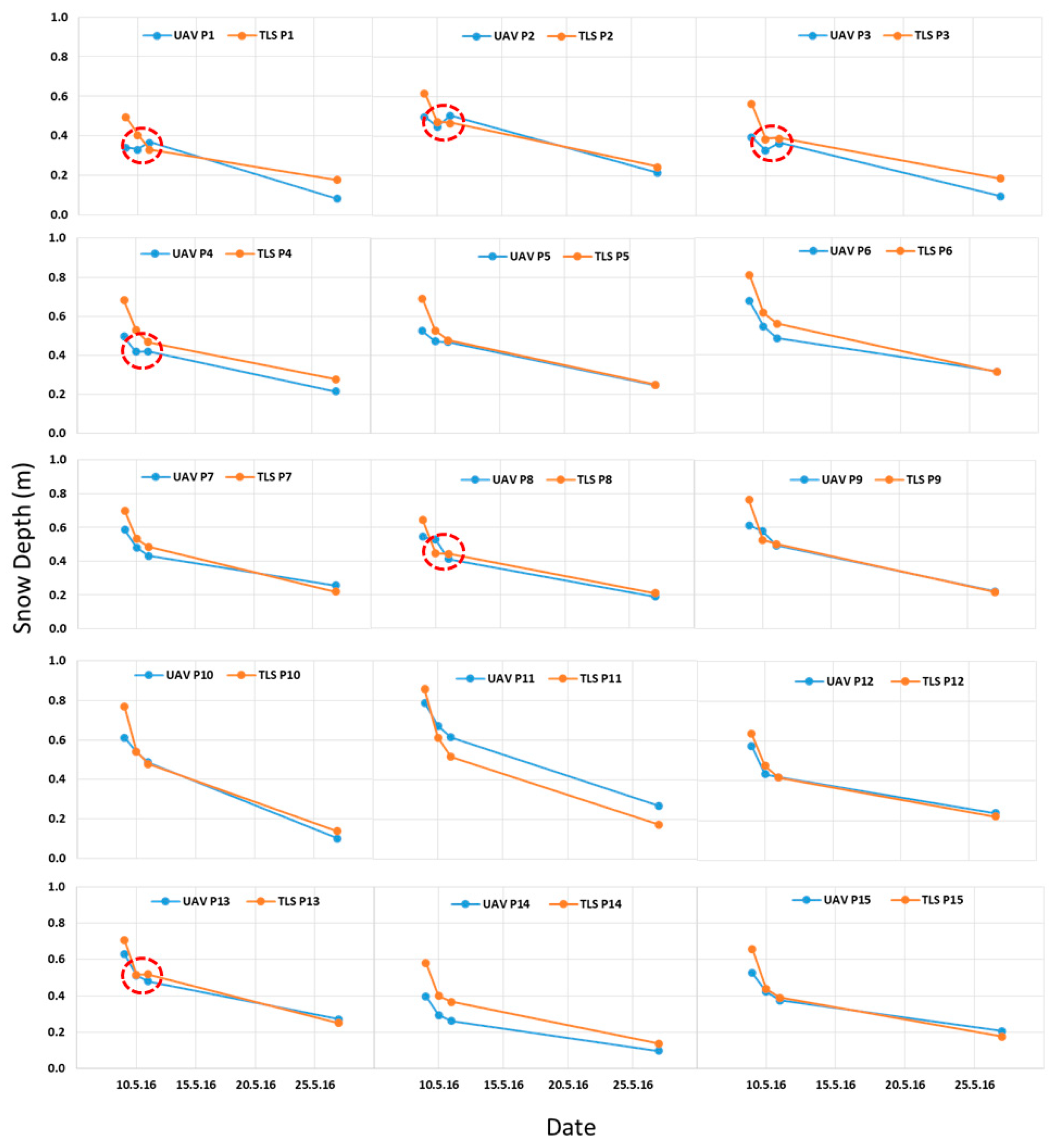

3.2. Representation of Snow Ablation Change in HS

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Date | Number of Images | Average Flight Height (m AGL) | Focal Length (mm) | ISO | Shutter Speed (s) | GSD (cm/px) | Area Covered (m²) | Number of GCPs |
|---|---|---|---|---|---|---|---|---|
| 09.05.2016 | 235 | 121 | 20 | 100 | 1/800–1/1000 | 2.24 | 305,457.9 | 9 |
| 10.05.2016 | 238 | 123 | 20 | 100 | 1/800 | 2.27 | 303,577.2 | 9 |
| 11.05.2016 | 234 | 122 | 20 | 100 | 1/800 | 2.24 | 302,012.0 | 9 |
| 27.05.2016 | 244 | 124 | 20 | 100 | 1/1000 | 2.29 | 311,802.7 | 9 |
| 24.06.2016 | 216 | 129 | 20 | 100 | 1/1000–1/1250 | 2.38 | 315,327.5 | 9 |

| Date of Scans | Number of Scans | Point Numbers in Raw Clouds | Point Numbers in Noise Class | Point Numbers in Non-Noise Class | Noise Class % | Non-Noise Class % |
|---|---|---|---|---|---|---|
| 09.05.2016 | 1 | 2,724,596 | 17,238 | 2,707,358 | 0.6 | 99.4 |
| 10.05.2016 | 1 | 2,909,957 | 16,275 | 2,893,682 | 0.6 | 99.4 |
| 11.05.2016 | 1 | 3,095,311 | 262,941 | 2,832,370 | 8.5 | 91.5 |
| 27.05.2016 | 1 | 3,944,456 | 243,243 | 3,701,213 | 6.2 | 93.8 |
| 31.05.2016 | 1 | 3,277,353 | 562,438 | 2,714,915 | 17.2 | 82.8 |
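
The noise and non-noise percentages in the table above follow directly from the point counts. The sketch below recomputes them as a consistency check; the names `SCANS` and `class_shares` are illustrative and do not come from the original workflow.

```python
# Point counts per TLS scan date, copied from the table above:
# date -> (points in raw cloud, points in noise class)
SCANS = {
    "09.05.2016": (2_724_596, 17_238),
    "10.05.2016": (2_909_957, 16_275),
    "11.05.2016": (3_095_311, 262_941),
    "27.05.2016": (3_944_456, 243_243),
    "31.05.2016": (3_277_353, 562_438),
}


def class_shares(raw: int, noise: int) -> tuple[int, float, float]:
    """Return (non-noise count, noise %, non-noise %) for one scan."""
    non_noise = raw - noise
    noise_pct = 100.0 * noise / raw
    return non_noise, noise_pct, 100.0 - noise_pct


for date, (raw, noise) in SCANS.items():
    non_noise, noise_pct, non_noise_pct = class_shares(raw, noise)
    print(f"{date}: non-noise={non_noise:,} "
          f"noise={noise_pct:.1f}% non-noise={non_noise_pct:.1f}%")
```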

| Measure | Class | 09.05.2016 UAS | 09.05.2016 TLS | 10.05.2016 UAS | 10.05.2016 TLS | 11.05.2016 UAS | 11.05.2016 TLS | 27.05.2016 UAS | 27.05.2016 TLS |
|---|---|---|---|---|---|---|---|---|---|
| User Accuracy | 1 | 1.00 | 0.98 | 1.00 | 0.96 | 1.00 | 0.95 | 1.00 | 0.87 |
| User Accuracy | 0 | 0.97 | 0.74 | 0.98 | 0.88 | 0.94 | 0.88 | 0.99 | 0.95 |
| Producer Accuracy | 1 | 0.97 | 0.79 | 0.98 | 0.89 | 0.94 | 0.89 | 0.99 | 0.95 |
| Producer Accuracy | 0 | 1.00 | 0.97 | 1.00 | 0.96 | 1.00 | 0.95 | 1.00 | 0.88 |
| Overall Accuracy | | 0.98 | 0.86 | 0.99 | 0.92 | 0.97 | 0.92 | 0.99 | 0.91 |
| Kappa Index Value | | 0.97 | 0.72 | 0.98 | 0.84 | 0.94 | 0.83 | 0.99 | 0.82 |
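
User accuracy, producer accuracy, overall accuracy, and the kappa index reported above are standard measures derived from a confusion matrix. The following is a minimal sketch of how they are commonly computed. The function name `accuracy_metrics` and the 2×2 counts are hypothetical and serve only as an illustration; they are not the study's validation data.

```python
def accuracy_metrics(cm):
    """Compute accuracy measures from a square confusion matrix.

    cm[i][j] is the number of samples mapped as class i whose
    reference (ground-truth) class is j.
    """
    k = len(cm)
    n = sum(sum(row) for row in cm)
    row_totals = [sum(cm[i]) for i in range(k)]                        # mapped totals
    col_totals = [sum(cm[i][j] for i in range(k)) for j in range(k)]   # reference totals
    diagonal = [cm[i][i] for i in range(k)]

    user = [diagonal[i] / row_totals[i] for i in range(k)]       # 1 - commission error
    producer = [diagonal[j] / col_totals[j] for j in range(k)]   # 1 - omission error
    overall = sum(diagonal) / n
    # Agreement expected by chance, from the marginal totals.
    expected = sum(row_totals[i] * col_totals[i] for i in range(k)) / n ** 2
    kappa = (overall - expected) / (1 - expected)
    return {"user": user, "producer": producer,
            "overall": overall, "kappa": kappa}


# Hypothetical counts for two classes (index 0 and index 1).
example = [[48, 2],
           [5, 45]]
result = accuracy_metrics(example)
print(result)
```

With equally sized mapped classes, as in this hypothetical matrix, the chance agreement is 0.5 and kappa reduces to twice the overall accuracy minus one.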

| Date | UAS Number of Snow-Covered Pixels | UAS Number of Snow-Free Pixels | TLS Number of Snow-Covered Pixels | TLS Number of Snow-Free Pixels | TLS Number of NoData Pixels | UAS Snow-Covered Area (%) | TLS Snow-Covered Area (%) | TLS NoData Pixels (%) |
|---|---|---|---|---|---|---|---|---|
| 09.05.2016 | 18,580,602 | 8,122,602 | 1,681,822 | 180,374 | 852,549 | 69.6 | 61.9 | 31.4 |
| 10.05.2016 | 17,170,161 | 9,533,453 | 1,554,396 | 250,833 | 909,473 | 64.3 | 57.3 | 33.5 |
| 11.05.2016 | 15,944,740 | 10,758,035 | 1,443,553 | 220,441 | 1,050,810 | 52.6 | 53.2 | 38.7 |
| 27.05.2016 | 10,623,759 | 16,079,909 | 805,836 | 697,481 | 1,211,432 | 39.8 | 29.6 | 44.6 |
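
The area percentages in the table above can be reproduced from the pixel counts. The sketch below uses the 09.05.2016 row and assumes, as the numbers suggest, that the UAS percentage is taken over snow-covered plus snow-free pixels, while the TLS percentages are taken over all TLS pixels including NoData. The helper name `percent` is illustrative.

```python
def percent(part: int, total: int) -> float:
    """Return part as a percentage of total."""
    return 100.0 * part / total


# Pixel counts copied from the 09.05.2016 row of the table above.
uas_snow, uas_free = 18_580_602, 8_122_602
tls_snow, tls_free, tls_nodata = 1_681_822, 180_374, 852_549

# Assumption: UAS orthophotos have no NoData class in this table.
uas_sca = percent(uas_snow, uas_snow + uas_free)

# Assumption: TLS shares are relative to all TLS pixels, NoData included.
tls_total = tls_snow + tls_free + tls_nodata
tls_sca = percent(tls_snow, tls_total)
tls_nodata_pct = percent(tls_nodata, tls_total)

print(f"UAS snow-covered area: {uas_sca:.2f} %")
print(f"TLS snow-covered area: {tls_sca:.2f} %")
print(f"TLS NoData share:      {tls_nodata_pct:.2f} %")
```

For this row the ratios agree with the tabulated 69.6, 61.9, and 31.4 to within rounding. Applying the same ratio to the 11.05.2016 UAS counts gives roughly 59.7 % rather than the 52.6 % printed in the table, so one of those entries may contain an error that cannot be resolved from the table alone.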

| Date | ME | MAE | SD | RMSE |
|---|---|---|---|---|
| 09.05.2016 | 0.08 | 0.12 | 0.10 | 0.14 |
| 10.05.2016 | 0.01 | 0.07 | 0.09 | 0.09 |
| 11.05.2016 | 0.01 | 0.06 | 0.08 | 0.08 |
| 27.05.2016 | -0.01 | 0.04 | 0.06 | 0.07 |
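
Assuming the column headers above stand for mean error (ME), mean absolute error (MAE), standard deviation of the errors (SD), and root-mean-square error (RMSE), the measures can be sketched as follows. The sign convention (estimate minus reference), the use of the sample standard deviation, the function name `error_metrics`, and the four value pairs are all assumptions made for illustration; they are not the study's measurements.

```python
import math
import statistics


def error_metrics(estimated, reference):
    """Return ME, MAE, SD and RMSE of estimated values against reference values."""
    # Assumed sign convention: positive error means overestimation.
    errors = [e - r for e, r in zip(estimated, reference)]
    n = len(errors)
    me = sum(errors) / n
    mae = sum(abs(x) for x in errors) / n
    sd = statistics.stdev(errors)  # sample standard deviation (n - 1)
    rmse = math.sqrt(sum(x * x for x in errors) / n)
    return {"ME": me, "MAE": mae, "SD": sd, "RMSE": rmse}


# Hypothetical paired values, for illustration only.
estimated = [1.10, 0.85, 0.60, 0.42]
reference = [1.00, 0.90, 0.55, 0.40]

for name, value in error_metrics(estimated, reference).items():
    print(f"{name}: {value:.3f}")
```

ME captures systematic bias and can be near zero even when individual errors are large, which is why it is usually reported together with MAE and RMSE.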

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Eker, R.; Bühler, Y.; Schlögl, S.; Stoffel, A.; Aydın, A. Monitoring of Snow Cover Ablation Using Very High Spatial Resolution Remote Sensing Datasets. Remote Sens. 2019, 11, 699. https://doi.org/10.3390/rs11060699