Why Not a Single Image? Combining Visualizations to Facilitate Fieldwork and On-Screen Mapping

Abstract

1. Introduction

2. Background

2.1. The Logic Behind Using Two-Dimensional Terrain Representations

2.2. Enhancing Visibility of Small-Scale Features With Visualizations

2.3. Combining Information From Different Visualizations With Blend Modes

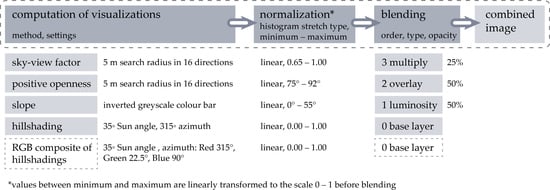

3. Data and Methods

3.1. Study Areas, Data, and Data Processing

3.2. Visualization Methods

- Small-scale features must be clearly visible;

- The visualization must be intuitive and easy to interpret, i.e., users should have a clear understanding of what the visualization is showing them;

- The visualization must not depend on the orientation or shape of small topographic features;

- The visualization should ‘work well’ in all terrain types;

- Calculation of the visualization should not create artifacts;

- It must be possible to calculate the visualization from a raster elevation model;

- It must be possible to study the result across the entire range of values (saturation of extreme values must be minimal, even in extremely rugged terrain);

- The color or grayscale bar should be effective with a linear histogram stretch (manual saturation of extreme values is allowed); and

- Calculation should not slow down the interpretation process.
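The last requirements concern how values are mapped to gray levels. Below is a minimal sketch of a linear histogram stretch with saturation of the extremes, assuming the values of a visualization are given as a flat list of floats and that the stretch bounds come either from manual input or from percentiles. The 2nd/98th percentile defaults and the helper names `percentile`, `clip_bounds`, `linear_stretch` and `saturated_fraction` are illustrative assumptions, not settings or functions from this article. `saturated_fraction` reports the share of cells clipped to pure black or white, which is one way to check that saturation stays minimal.

```python
from typing import List, Sequence, Tuple


def percentile(values: Sequence[float], pct: float) -> float:
    """Percentile (pct in 0..100) with linear interpolation between ranks."""
    ordered = sorted(values)
    if not ordered:
        raise ValueError("values must not be empty")
    rank = (len(ordered) - 1) * pct / 100.0
    lower = int(rank)
    upper = min(lower + 1, len(ordered) - 1)
    return ordered[lower] + (ordered[upper] - ordered[lower]) * (rank - lower)


def clip_bounds(values: Sequence[float], low_pct: float = 2.0,
                high_pct: float = 98.0) -> Tuple[float, float]:
    """Stretch bounds taken from percentiles (assumed defaults: 2% / 98%)."""
    return percentile(values, low_pct), percentile(values, high_pct)


def linear_stretch(values: Sequence[float], vmin: float, vmax: float,
                   invert: bool = False) -> List[float]:
    """Map [vmin, vmax] linearly to [0, 1]; values outside are saturated.

    invert=True gives an inverted grayscale bar (e.g. for slope, so that
    steep terrain appears dark and level terrain appears bright)."""
    if vmax <= vmin:
        raise ValueError("vmax must be greater than vmin")
    span = vmax - vmin
    out = []
    for v in values:
        g = min(1.0, max(0.0, (v - vmin) / span))
        out.append(1.0 - g if invert else g)
    return out


def saturated_fraction(values: Sequence[float], vmin: float, vmax: float) -> float:
    """Share of values clipped to pure black or pure white by the stretch."""
    if not values:
        return 0.0
    clipped = sum(1 for v in values if v < vmin or v > vmax)
    return clipped / len(values)
```

Keeping the bound selection (`clip_bounds`) separate from the stretch itself allows the same function to serve both automatic percentile clipping and manually chosen bounds.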

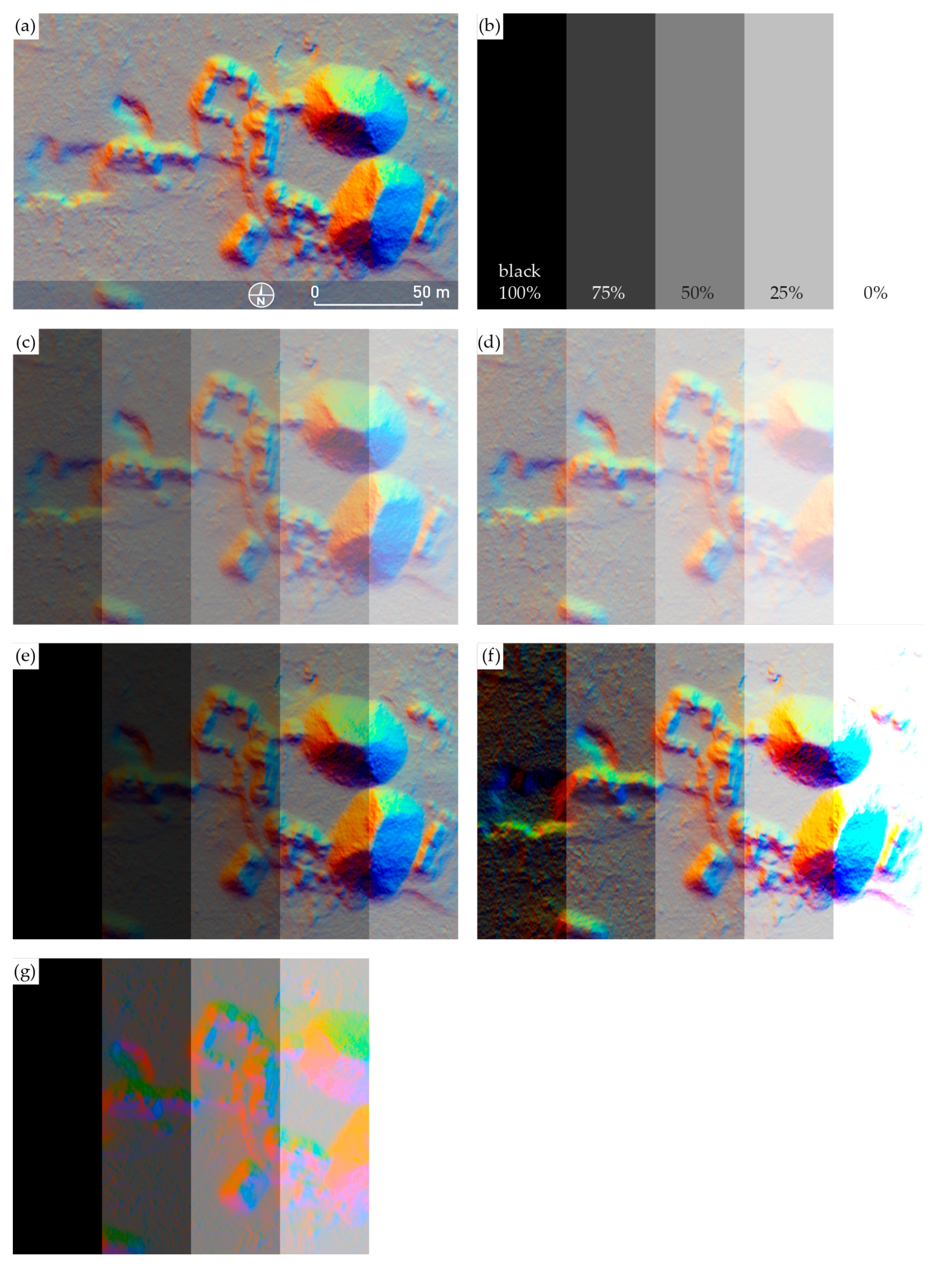

3.3. Blend Modes

- Normal: Only affects opacity;

- Lighten: Produces a lighter image;

- Darken: Produces a darker image;

- Contrast: Increases contrast; mid-gray tones become less visible;

- Comparative: Calculates the difference between images;

- Color: Combines different color qualities (hue, saturation, luminosity).
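The blend-mode groups above can be made concrete with per-pixel formulas. The sketch below assumes both layers are grayscale and already stretched to [0, 1], with `cb` the lower (backdrop) layer and `cs` the upper (blend) layer. The separable formulas follow the definitions in the PDF specification (ISO 32000-1), and opacity is applied as a linear mix between the backdrop and the blended result, assuming an opaque backdrop. The function names, and the placement of Screen in the Lighten group and Multiply in the Darken group, follow common image-editing practice and are assumptions here; the Color-group modes (hue, saturation, luminosity) need RGB input and are omitted.

```python
from typing import Callable, List, Sequence, Tuple

# B(cb, cs): cb = backdrop (lower layer), cs = source (upper layer), both in [0, 1]
BlendFn = Callable[[float, float], float]


def normal(cb: float, cs: float) -> float:
    return cs


# Lighten group: the result is never darker than the backdrop
def lighten(cb: float, cs: float) -> float:
    return max(cb, cs)


def screen(cb: float, cs: float) -> float:
    return cb + cs - cb * cs


# Darken group: the result is never lighter than the backdrop
def darken(cb: float, cs: float) -> float:
    return min(cb, cs)


def multiply(cb: float, cs: float) -> float:
    return cb * cs


# Contrast group: a mid-gray (0.5) source leaves the backdrop unchanged
def hard_light(cb: float, cs: float) -> float:
    if cs <= 0.5:
        return multiply(cb, 2.0 * cs)
    return screen(cb, 2.0 * cs - 1.0)


def overlay(cb: float, cs: float) -> float:
    return hard_light(cs, cb)  # overlay is hard light with the layers swapped


# Comparative group
def difference(cb: float, cs: float) -> float:
    return abs(cb - cs)


def composite(cb: float, cs: float, mode: BlendFn, opacity: float = 1.0) -> float:
    """Blend one pixel, then mix with the backdrop according to opacity."""
    return (1.0 - opacity) * cb + opacity * mode(cb, cs)


def blend_stack(base: Sequence[float],
                layers: Sequence[Tuple[Sequence[float], BlendFn, float]]) -> List[float]:
    """Apply (pixels, mode, opacity) layers bottom to top over a base layer."""
    result = list(base)
    for pixels, mode, opacity in layers:
        result = [composite(b, s, mode, opacity) for b, s in zip(result, pixels)]
    return result
```

Because each layer is composited onto the result of all layers below it, reordering the layers in `blend_stack` generally changes the final image, even with the same modes and opacities.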

4. Results

4.1. Effect of Blend Modes on Visualizations

4.2. Visualization for Archaeological Topography

5. Discussion

- Deep learning requires a database of a large number of known and verified archaeological sites as training samples, and in the first instance these still have to come from visual interpretation and field observation;

- Large-scale results of deep learning have to be verified, and the most straightforward ‘first check’ is visual inspection.

6. Conclusions

- It highlights small-scale structures and is intuitive to read;

- It does not introduce artifacts;

- It preserves the colors of the base layer well;

- The visual extent and shape of recorded features are not altered;

- It shows small topographic features in the same way irrespective of their orientation or shape, allowing us to judge their height and amplitude;

- Results from different areas are comparable; and

- The calculation is fast and does not slow down the mapping process.

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Zhuge, Y.; Udupa, J.K. Intensity standardization simplifies brain MR image segmentation. Comput. Vis. Image Underst. 2009, 113, 1095–1103.

- Fujiki, Y.; Yokota, S.; Okada, Y.; Oku, Y.; Tamura, Y.; Ishiguro, M.; Miwakeichi, F. Standardization of Size, Shape and Internal Structure of Spinal Cord Images: Comparison of Three Transformation Methods. PLoS ONE 2013, 8, e76415.

- Borland, D.; Russell, M.T.I. Rainbow Color Map (Still) Considered Harmful. IEEE Comput. Graph. Appl. 2007, 27, 14–17.

- Schuetz, M. Potree: Rendering Large Point Clouds in Web Browsers. Bachelor’s Thesis, Vienna University of Technology, Vienna, Austria, 2016.

- Borkin, M.; Gajos, K.; Peters, A.; Mitsouras, D.; Melchionna, S.; Rybicki, F.; Feldman, C.; Pfister, H. Evaluation of Artery Visualizations for Heart Disease Diagnosis. IEEE Trans. Vis. Comput. Graph. 2011, 17, 2479–2488.

- Kjellin, A.; Pettersson, L.W.; Seipel, S.; Lind, M. Evaluating 2D and 3D Visualizations of Spatiotemporal Information. ACM Trans. Appl. Percept. 2008, 7, 19:1–19:23.

- Kaya, E.; Eren, M.T.; Doger, C.; Balcisoy, S. Do 3D Visualizations Fail? An Empirical Discussion on 2D and 3D Representations of the Spatio-temporal Data. In Proceedings of the EURASIA GRAPHICS 2014; Paper 05; Hacettepe University Press: Ankara, Turkey, 2014; p. 12.

- Szafir, D.A. The Good, the Bad, and the Biased: Five Ways Visualizations Can Mislead (and How to Fix Them). Interactions 2018, 25, 26–33.

- Gehlenborg, N.; Wong, B. Points of view: Into the third dimension. Nat. Methods 2012, 9, 851.

- Moreland, K. Why We Use Bad Color Maps and What You Can Do About It. Electron. Imaging 2016, 2016, 1–6.

- Cockburn, A.; McKenzie, B. Evaluating the Effectiveness of Spatial Memory in 2D and 3D Physical and Virtual Environments. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Minneapolis, MN, USA, 20–25 April 2002; ACM: New York, NY, USA, 2002; pp. 203–210.

- Tory, M.; Sprague, D.; Wu, F.; So, W.Y.; Munzner, T. Spatialization Design: Comparing Points and Landscapes. IEEE Trans. Vis. Comput. Graph. 2007, 13, 1262–1269.

- Zakšek, K.; Oštir, K.; Kokalj, Ž. Sky-view factor as a relief visualization technique. Remote Sens. 2011, 3, 398–415.

- Bennett, R.; Welham, K.; Hill, R.A.; Ford, A. A Comparison of Visualization Techniques for Models Created from Airborne Laser Scanned Data. Archaeol. Prospect. 2012, 19, 41–48.

- Štular, B.; Kokalj, Ž.; Oštir, K.; Nuninger, L. Visualization of lidar-derived relief models for detection of archaeological features. J. Archaeol. Sci. 2012, 39, 3354–3360.

- Kokalj, Ž.; Zakšek, K.; Oštir, K. Visualizations of lidar derived relief models. In Interpreting Archaeological Topography—Airborne Laser Scanning, Aerial Photographs and Ground Observation; Opitz, R., Cowley, C.D., Eds.; Oxbow Books: Oxford, UK, 2013; pp. 100–114. ISBN 978-1-84217-516-3.

- Inomata, T.; Pinzón, F.; Ranchos, J.L.; Haraguchi, T.; Nasu, H.; Fernandez-Diaz, J.C.; Aoyama, K.; Yonenobu, H. Archaeological Application of Airborne LiDAR with Object-Based Vegetation Classification and Visualization Techniques at the Lowland Maya Site of Ceibal, Guatemala. Remote Sens. 2017, 9, 563.

- Kokalj, Ž.; Hesse, R. Airborne Laser Scanning Raster Data Visualization: A Guide to Good Practice; Prostor, kraj, čas; Založba ZRC: Ljubljana, Slovenia, 2017; ISBN 978-961-254-984-8.

- Hesse, R. LiDAR-derived Local Relief Models—A new tool for archaeological prospection. Archaeol. Prospect. 2010, 17, 67–72.

- Chiba, T.; Kaneta, S.; Suzuki, Y. Red Relief Image Map: New Visualization Method for Three Dimension Data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, 37, 1071–1076.

- Podobnikar, T. Multidirectional Visibility Index for Analytical Shading Enhancement. Cartogr. J. 2012, 49, 195–207.

- Mara, H.; Krömker, S.; Jakob, S.; Breuckmann, B. GigaMesh and gilgamesh: 3D multiscale integral invariant cuneiform character extraction. In Proceedings of the 11th International conference on Virtual Reality, Archaeology and Cultural Heritage, Paris, France, 21–24 September 2010; Eurographics Association: Paris, France, 2010; pp. 131–138.

- Orengo, H.A.; Petrie, C.A. Multi-scale relief model (MSRM): A new algorithm for the visualization of subtle topographic change of variable size in digital elevation models. Earth Surf. Process. Landf. 2018, 43, 1361–1369.

- Kennelly, P.J. Terrain maps displaying hill-shading with curvature. Geomorphology 2008, 102, 567–577.

- Mayoral Pascual, A.; Toumazet, J.-P.; François-Xavier, S.; Vautier, F.; Peiry, J.-L. The Highest Gradient Model: A New Method for Analytical Assessment of the Efficiency of LiDAR-Derived Visualization Techniques for Landform Detection and Mapping. Remote Sens. 2017, 9, 120.

- Hesse, R. Visualisierung hochauflösender Digitaler Geländemodelle mit LiVT. In Computeranwendungen und Quantitative Methoden in der Archäologie. 4. Workshop der AG CAA 2013; Lieberwirth, U., Herzog, I., Eds.; Berlin Studies of the Ancient World; Topoi: Berlin, Germany, 2016; pp. 109–128.

- Kokalj, Ž.; Zakšek, K.; Oštir, K.; Pehani, P.; Čotar, K.; Somrak, M. Relief Visualization Toolbox; ZRC SAZU: Ljubljana, Slovenia, 2018.

- Alcalde, J.; Bond, C.; Randle, C.H. Framing bias: The effect of figure presentation on seismic interpretation. Interpretation 2017, 5, 591–605.

- Canuto, M.A.; Estrada-Belli, F.; Garrison, T.G.; Houston, S.D.; Acuña, M.J.; Kováč, M.; Marken, D.; Nondédéo, P.; Auld-Thomas, L.; Castanet, C.; et al. Ancient lowland Maya complexity as revealed by airborne laser scanning of northern Guatemala. Science 2018, 361, eaau0137.

- Mitchell, H.B. Image Fusion: Theories, Techniques and Applications; Springer-Verlag: Berlin/Heidelberg, Germany, 2010; ISBN 978-3-642-11215-7.

- Verhoeven, G.; Nowak, M.; Nowak, R. Pixel-Level Image Fusion for Archaeological Interpretative Mapping; Lerma, J.L., Cabrelles, M., Eds.; Editorial Universitat Politècnica de València: Valencia, Spain, 2016; pp. 404–407.

- Filzwieser, R.; Olesen, L.H.; Verhoeven, G.; Mauritsen, E.S.; Neubauer, W.; Trinks, I.; Nowak, M.; Nowak, R.; Schneidhofer, P.; Nau, E.; et al. Integration of Complementary Archaeological Prospection Data from a Late Iron Age Settlement at Vesterager-Denmark. J. Archaeol. Method Theory 2018, 25, 313–333.

- Mark, R.; Billo, E. Application of Digital Image Enhancement in Rock Art Recording. Am. Indian Rock Art 2002, 28, 121–128.

- Tzvetkov, J. Relief visualization techniques using free and open source GIS tools. Pol. Cartogr. Rev. 2018, 50, 61–71.

- Wang, S.; Lee, W.; Provost, J.; Luo, J.; Konofagou, E.E. A composite high-frame-rate system for clinical cardiovascular imaging. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2008, 55, 2221–2233.

- Howell, G.; Owen, M.J.; Salazar, L.; Haberlah, D.; Goergen, E. Blend Modes for Mineralogy Images. U.S. Patent US9495786B2, 15 November 2016.

- Krishnamoorthy, S.; Soman, K.P. Implementation and Comparative Study of Image Fusion Algorithms. Int. J. Comput. Appl. 2010, 9, 24–28.

- Ma, Y. The mathematic magic of Photoshop blend modes for image processing. In Proceedings of the 2011 International Conference on Multimedia Technology, Hangzhou, China, 26–28 July 2011; pp. 5159–5161.

- Giesel, F.L.; Mehndiratta, A.; Locklin, J.; McAuliffe, M.J.; White, S.; Choyke, P.L.; Knopp, M.V.; Wood, B.J.; Haberkorn, U.; von Tengg-Kobligk, H. Image fusion using CT, MRI and PET for treatment planning, navigation and follow up in percutaneous RFA. Exp. Oncol. 2009, 31, 106–114.

- Faulhaber, P.; Nelson, A.; Mehta, L.; O’Donnell, J. The Fusion of Anatomic and Physiologic Tomographic Images to Enhance Accurate Interpretation. Clin. Positron Imaging Off. J. Inst. Clin. PET 2000, 3, 178.

- Förster, G.J.; Laumann, C.; Nickel, O.; Kann, P.; Rieker, O.; Bartenstein, P. SPET/CT image co-registration in the abdomen with a simple and cost-effective tool. Eur. J. Nucl. Med. Mol. Imaging 2003, 30, 32–39.

- Antoch, G.; Kanja, J.; Bauer, S.; Kuehl, H.; Renzing-Koehler, K.; Schuette, J.; Bockisch, A.; Debatin, J.F.; Freudenberg, L.S. Comparison of PET, CT, and dual-modality PET/CT imaging for monitoring of imatinib (STI571) therapy in patients with gastrointestinal stromal tumors. J. Nucl. Med. Off. Publ. Soc. Nucl. Med. 2004, 45, 357–365.

- Sale, D.; Joshi, D.M.; Patil, V.; Sonare, P.; Jadhav, C. Image Fusion for Medical Image Retrieval. Int. J. Comput. Eng. Res. 2013, 3, 1–9.

- Schöder, H.; Yeung, H.W.D.; Gonen, M.; Kraus, D.; Larson, S.M. Head and neck cancer: Clinical usefulness and accuracy of PET/CT image fusion. Radiology 2004, 231, 65–72.

- Keidar, Z.; Israel, O.; Krausz, Y. SPECT/CT in tumor imaging: Technical aspects and clinical applications. Semin. Nucl. Med. 2003, 33, 205–218.

- James, A.P.; Dasarathy, B.V. Medical image fusion: A survey of the state of the art. Inf. Fusion 2014, 19, 4–19.

- Šprajc, I. Introducción. In Exploraciones arqueológicas en Chactún, Campeche, México; Šprajc, I., Ed.; Prostor, kraj, čas; Založba ZRC: Ljubljana, Slovenia, 2015; pp. 1–3. ISBN 978-961-254-780-6.

- Šprajc, I.; Flores Esquivel, A.; Marsetič, A. Descripción del sitio. In Exploraciones Arqueológicas en Chactún, Campeche, México; Šprajc, I., Ed.; Prostor, kraj, čas; Založba ZRC: Ljubljana, Slovenia, 2015; pp. 5–24. ISBN 978-961-254-780-6.

- Fernandez-Diaz, J.C.; Carter, W.E.; Shrestha, R.L.; Glennie, C.L. Now You See It… Now You Don’t: Understanding Airborne Mapping LiDAR Collection and Data Product Generation for Archaeological Research in Mesoamerica. Remote Sens. 2014, 6, 9951–10001.

- Fernandez-Diaz, J.; Carter, W.; Glennie, C.; Shrestha, R.; Pan, Z.; Ekhtari, N.; Singhania, A.; Hauser, D.; Sartori, M.; Fernandez-Diaz, J.C.; et al. Capability Assessment and Performance Metrics for the Titan Multispectral Mapping Lidar. Remote Sens. 2016, 8, 936.

- Berendsen, H.J.A. De Vorming van Het Land; Van Gorcum: Assen, The Netherlands, 2004.

- van der Sande, C.; Soudarissanane, S.; Khoshelham, K. Assessment of Relative Accuracy of AHN-2 Laser Scanning Data Using Planar Features. Sensors 2010, 10, 8198–8214.

- Kenzler, H.; Lambers, K. Challenges and perspectives of woodland archaeology across Europe. In Proceedings of the 42nd Annual Conference on Computer Applications and Quantitative Methods in Archaeology; Giligny, F., Djindjian, F., Costa, L., Moscati, P., Robert, S., Eds.; Archaeopress: Oxford, UK, 2015; pp. 73–80.

- Arnoldussen, S. The Fields that Outlived the Celts: The Use-histories of Later Prehistoric Field Systems (Celtic Fields or Raatakkers) in the Netherlands. Proc. Prehist. Soc. 2018, 84, 303–327.

- Planina, J. Soča (Slovenia). A monograph of a village and its surroundings (in Slovenian). Acta Geogr. 1954, 2, 187–250.

- Triglav-Čekada, M.; Bric, V. The project of laser scanning of Slovenia is completed. Geoderski Vestn. 2015, 59, 586–592.

- Yoëli, P. Analytische Schattierung. Ein kartographischer Entwurf. Kartographische Nachrichten 1965, 15, 141–148.

- Yokoyama, R.; Shirasawa, M.; Pike, R.J. Visualizing topography by openness: A new application of image processing to digital elevation models. Photogramm. Eng. Remote Sens. 2002, 68, 251–266.

- Doneus, M.; Briese, C. Full-waveform airborne laser scanning as a tool for archaeological reconnaissance. In From Space to Place: 2nd International Conference on Remote Sensing in Archaeology: Proceedings of the 2nd International Workshop, Rome, Italy, 4–7 December 2006; Campana, S., Forte, M., Eds.; Archaeopress: Oxford, UK, 2006; pp. 99–105.

- Doneus, M. Openness as visualization technique for interpretative mapping of airborne LiDAR derived digital terrain models. Remote Sens. 2013, 5, 6427–6442.

- Moore, B. Lidar Visualisation of the Archaeological Landscape of Sand Point and Middle Hope, Kewstoke, North Somerset. Master’s Thesis, University of Oxford, Oxford, UK, 2015.

- Schneider, A.; Takla, M.; Nicolay, A.; Raab, A.; Raab, T. A Template-matching Approach Combining Morphometric Variables for Automated Mapping of Charcoal Kiln Sites. Archaeol. Prospect. 2015, 22, 45–62.

- de Matos Machado, R.; Amat, J.-P.; Arnaud-Fassetta, G.; Bétard, F. Potentialités de l’outil LiDAR pour cartographier les vestiges de la Grande Guerre en milieu intra-forestier (bois des Caures, forêt domaniale de Verdun, Meuse). EchoGéo 2016, 1–22.

- Krasinski, K.E.; Wygal, B.T.; Wells, J.; Martin, R.L.; Seager-Boss, F. Detecting Late Holocene cultural landscape modifications using LiDAR imagery in the Boreal Forest, Susitna Valley, Southcentral Alaska. J. Field Archaeol. 2016, 41, 255–270.

- Roman, A.; Ursu, T.-M.; Lăzărescu, V.-A.; Opreanu, C.H.; Fărcaş, S. Visualization techniques for an airborne laser scanning-derived digital terrain model in forested steep terrain: Detecting archaeological remains in the subsurface. Geoarchaeology 2017, 32, 549–562.

- Holata, L.; Plzák, J.; Světlík, R.; Fonte, J. Integration of Low-Resolution ALS and Ground-Based SfM Photogrammetry Data. A Cost-Effective Approach Providing an ‘Enhanced 3D Model’ of the Hound Tor Archaeological Landscapes (Dartmoor, South-West England). Remote Sens. 2018, 10, 1357.

- Stott, D.; Kristiansen, S.M.; Lichtenberger, A.; Raja, R. Mapping an ancient city with a century of remotely sensed data. Proc. Natl. Acad. Sci. USA 2018, 201721509.

- Williams, R.; Byer, S.; Howe, D.; Lincoln-Owyang, J.; Yap, M.; Bice, M.; Ault, J.; Aygun, B.; Balakrishnan, V.; Brereton, F.; et al. Photoshop CS5; Adobe Systems Incorporated: San Jose, CA, USA, 2010.

- Valentine, S. The Hidden Power of Blend Modes in Adobe Photoshop; Adobe Press: Berkeley, CA, USA, 2012.

- ISO 32000-1:2008. Document Management—Portable Document Format—Part 1: PDF 1.7; ISO: Geneva, Switzerland, 2008; p. 747.

- Lidar Data Acquired by Plate Boundary Observatory by NCALM, USA. PBO Is Operated by UNAVCO for EarthScope and Supported by the National Science Foundation (No. EAR-0350028 and EAR-0732947). 2008. Available online: http://opentopo.sdsc.edu/datasetMetadata?otCollectionID=OT.052008.32610.1 (accessed on 22 March 2019).

- Lidar Data Acquired by the NCALM at the University of Houston and the University of California, Berkeley, on behalf of David E. Haddad (Arizona State University) as Part of an NCALM Graduate Student Seed Grant: Geologic and Geomorphic Characterization of Precariously Balanced Rocks. 2009. Available online: http://opentopo.sdsc.edu/datasetMetadata?otCollectionID=OT.102010.26912.1 (accessed on 22 March 2019).

- Grohmann, C.H.; Sawakuchi, A.O. Influence of cell size on volume calculation using digital terrain models: A case of coastal dune fields. Geomorphology 2013, 180–181, 130–136.

- Wong, B. Points of view: Color coding. Nat. Methods 2010, 7, 573.

- Lindsay, J.B.; Cockburn, J.M.H.; Russell, H.A.J. An integral image approach to performing multi-scale topographic position analysis. Geomorphology 2015, 245, 51–61.

- Guyot, A.; Hubert-Moy, L.; Lorho, T. Detecting Neolithic Burial Mounds from LiDAR-Derived Elevation Data Using a Multi-Scale Approach and Machine Learning Techniques. Remote Sens. 2018, 10, 225.

- Niccoli, M. Geophysical tutorial: How to evaluate and compare color maps. Lead Edge 2014, 33, 910–912.

- Zhou, L.; Hansen, C.D. A Survey of Colormaps in Visualization. IEEE Trans. Vis. Comput. Graph. 2015, 22, 2051–2069.

- Ware, C. Color sequences for univariate maps: Theory, experiments and principles. IEEE Comput. Graph. Appl. 1988, 8, 41–49.

- Banaszek, Ł.; Cowley, D.C.; Middleton, M. Towards National Archaeological Mapping. Assessing Source Data and Methodology—A Case Study from Scotland. Geosciences 2018, 8, 272.

- Cowley, D.C. In with the new, out with the old? Auto-extraction for remote sensing archaeology. In Proceedings of the SPIE 8532; Bostater, C.R., Mertikas, S.P., Neyt, X., Nichol, C., Cowley, D., Bruyant, J.-P., Eds.; SPIE: Edinburgh, UK, 2012; p. 853206.

- Bennett, R.; Cowley, D.; Laet, V.D. The data explosion: Tackling the taboo of automatic feature recognition in airborne survey data. Antiquity 2014, 88, 896–905.

- Kramer, I.C. An Archaeological Reaction to the Remote Sensing Data Explosion. Reviewing the Research on Semi-Automated Pattern Recognition and Assessing the Potential to Integrate Artificial Intelligence. Master’s Thesis, University of Southampton, Southampton, UK, 2015.

- Trier, Ø.D.; Zortea, M.; Tonning, C. Automatic detection of mound structures in airborne laser scanning data. J. Archaeol. Sci. Rep. 2015, 2, 69–79.

- Traviglia, A.; Cowley, D.; Lambers, K. Finding common ground: Human and computer vision in archaeological prospection. AARGnews 2016, 53, 11–24.

- Grammer, B.; Draganits, E.; Gretscher, M.; Muss, U. LiDAR-guided Archaeological Survey of a Mediterranean Landscape: Lessons from the Ancient Greek Polis of Kolophon (Ionia, Western Anatolia). Archaeol. Prospect. 2017, 24, 311–333.

| Visualization | Visible Microrelief [18] (p. 35) | Highlighted Topography (Appears Bright) | Shadowed Topography (Appears Dark) |
|---|---|---|---|
| hillshading | o | non-level terrain facing towards illumination | non-level terrain facing away from illumination |
| slope (inverted grayscale color bar) | + | level terrain | steep terrain |
| simple local relief model | ++ | local high elevation | local depressions |
| positive openness | ++ | convexities | concavities |
| negative openness (inverted grayscale color bar) | ++ | convexities | lowest parts of concavities |
| sky-view factor | ++ | planes, ridges, peaks | concavities |

Visible microrelief: o indistinct; + suitable; ++ very suitable.
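The table above summarizes which terrain each visualization renders bright or dark. As a minimal sketch of three of them, the code below assumes a DEM given as a list of rows with square cells, row 0 as the northern edge, and central differences (one-sided at the edges) for the surface gradient. Hillshading is computed as the cosine of the angle between the surface normal and the illumination direction (Lambertian shading), slope in degrees, and the simple local relief model as the DEM minus a mean-filtered DEM. The default sun position (azimuth 315°, elevation 35°), the square averaging window and all function names are assumptions for illustration; Hesse's full local relief model, openness and the sky-view factor involve more elaborate computations that are not shown.

```python
import math
from typing import List, Tuple

Grid = List[List[float]]


def gradient(dem: Grid, cell: float) -> Tuple[Grid, Grid]:
    """dz/dx (towards east) and dz/dy (towards north); needs at least 2x2 cells.

    Central differences inside the grid, one-sided differences at the edges."""
    rows, cols = len(dem), len(dem[0])
    gx = [[0.0] * cols for _ in range(rows)]
    gy = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            c0, c1 = max(c - 1, 0), min(c + 1, cols - 1)
            r0, r1 = max(r - 1, 0), min(r + 1, rows - 1)
            gx[r][c] = (dem[r][c1] - dem[r][c0]) / ((c1 - c0) * cell)
            # rows increase southwards, so "north minus south" gives dz/dy
            gy[r][c] = (dem[r0][c] - dem[r1][c]) / ((r1 - r0) * cell)
    return gx, gy


def slope_deg(dem: Grid, cell: float) -> Grid:
    """Slope angle in degrees."""
    gx, gy = gradient(dem, cell)
    return [[math.degrees(math.atan(math.hypot(x, y))) for x, y in zip(rx, ry)]
            for rx, ry in zip(gx, gy)]


def hillshade(dem: Grid, cell: float, azimuth: float = 315.0,
              elevation: float = 35.0) -> Grid:
    """Lambertian shading in [0, 1]; azimuth in degrees clockwise from north."""
    az, el = math.radians(azimuth), math.radians(elevation)
    # unit vector pointing towards the light source (east, north, up)
    lx, ly, lz = math.sin(az) * math.cos(el), math.cos(az) * math.cos(el), math.sin(el)
    gx, gy = gradient(dem, cell)
    out = []
    for rx, ry in zip(gx, gy):
        row = []
        for x, y in zip(rx, ry):
            # dot product with the normalized surface normal (-dz/dx, -dz/dy, 1)
            shade = (-x * lx - y * ly + lz) / math.sqrt(x * x + y * y + 1.0)
            row.append(max(0.0, shade))  # faces turned away from the light are black
        out.append(row)
    return out


def simple_local_relief(dem: Grid, radius: int) -> Grid:
    """DEM minus its mean over a (2*radius+1)^2 window, clipped at the edges."""
    rows, cols = len(dem), len(dem[0])
    out = []
    for r in range(rows):
        row = []
        for c in range(cols):
            window = [dem[i][j]
                      for i in range(max(r - radius, 0), min(r + radius + 1, rows))
                      for j in range(max(c - radius, 0), min(c + radius + 1, cols))]
            row.append(dem[r][c] - sum(window) / len(window))
        out.append(row)
    return out
```

Because shading depends on the angle between the sun and each face, a west-facing slope is bright under a western sun but black under a low eastern one; this orientation dependence is what the slope and local relief values avoid.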

| DEM Size [px] (0.5 m Resolution) | Size on Disk [MB] | Settings | Visualization Time [min:s] | Blending Time [min:s] | Combined Time [min:s] |
|---|---|---|---|---|---|
| 1000 × 1000 | 4 | same as in Figure 1 | 0:02 | 0:01 | 0:03 |
| 2000 × 2000 | 16 | same as in Figure 1 | 0:09 | 0:03 | 0:12 |
| 10,000 × 10,000 | 390 | same as in Figure 1 | 3:37 | 1:07 | 4:44 |
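The timings in the table above can be checked with simple arithmetic. A minimal sketch that converts the "min:s" entries to seconds, verifies that the combined time is the sum of visualization and blending time, and derives throughput in megapixels per second; the values are copied from the table, and the throughput is only indicative of the setup on which they were measured.

```python
from typing import List, Tuple

# (DEM side length [px], visualization, blending, combined) copied from the table
TIMINGS: List[Tuple[int, str, str, str]] = [
    (1000, "0:02", "0:01", "0:03"),
    (2000, "0:09", "0:03", "0:12"),
    (10_000, "3:37", "1:07", "4:44"),
]


def to_seconds(min_s: str) -> int:
    """Convert a 'min:s' string such as '4:44' to seconds."""
    minutes, seconds = min_s.split(":")
    return int(minutes) * 60 + int(seconds)


def throughput_mpx_per_s(side_px: int, combined: str) -> float:
    """Megapixels processed per second for a square DEM."""
    return side_px * side_px / 1e6 / to_seconds(combined)


for side, vis, blend, total in TIMINGS:
    # the combined time should equal visualization time plus blending time
    assert to_seconds(vis) + to_seconds(blend) == to_seconds(total)
    print(f"{side} x {side}: {throughput_mpx_per_s(side, total):.2f} Mpx/s")
```

All three sizes run at roughly 0.33 to 0.35 Mpx/s, i.e., processing time grows approximately linearly with the number of cells.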

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kokalj, Ž.; Somrak, M. Why Not a Single Image? Combining Visualizations to Facilitate Fieldwork and On-Screen Mapping. Remote Sens. 2019, 11, 747. https://doi.org/10.3390/rs11070747

Kokalj Ž, Somrak M. Why Not a Single Image? Combining Visualizations to Facilitate Fieldwork and On-Screen Mapping. Remote Sensing. 2019; 11(7):747. https://doi.org/10.3390/rs11070747

Chicago/Turabian Style: Kokalj, Žiga, and Maja Somrak. 2019. "Why Not a Single Image? Combining Visualizations to Facilitate Fieldwork and On-Screen Mapping" Remote Sensing 11, no. 7: 747. https://doi.org/10.3390/rs11070747

APA Style: Kokalj, Ž., & Somrak, M. (2019). Why Not a Single Image? Combining Visualizations to Facilitate Fieldwork and On-Screen Mapping. Remote Sensing, 11(7), 747. https://doi.org/10.3390/rs11070747