Two New Ways of Documenting Miniature Incisions Using a Combination of Image-Based Modelling and Reflectance Transformation Imaging

Abstract

1. Introduction

2. Materials and Methods

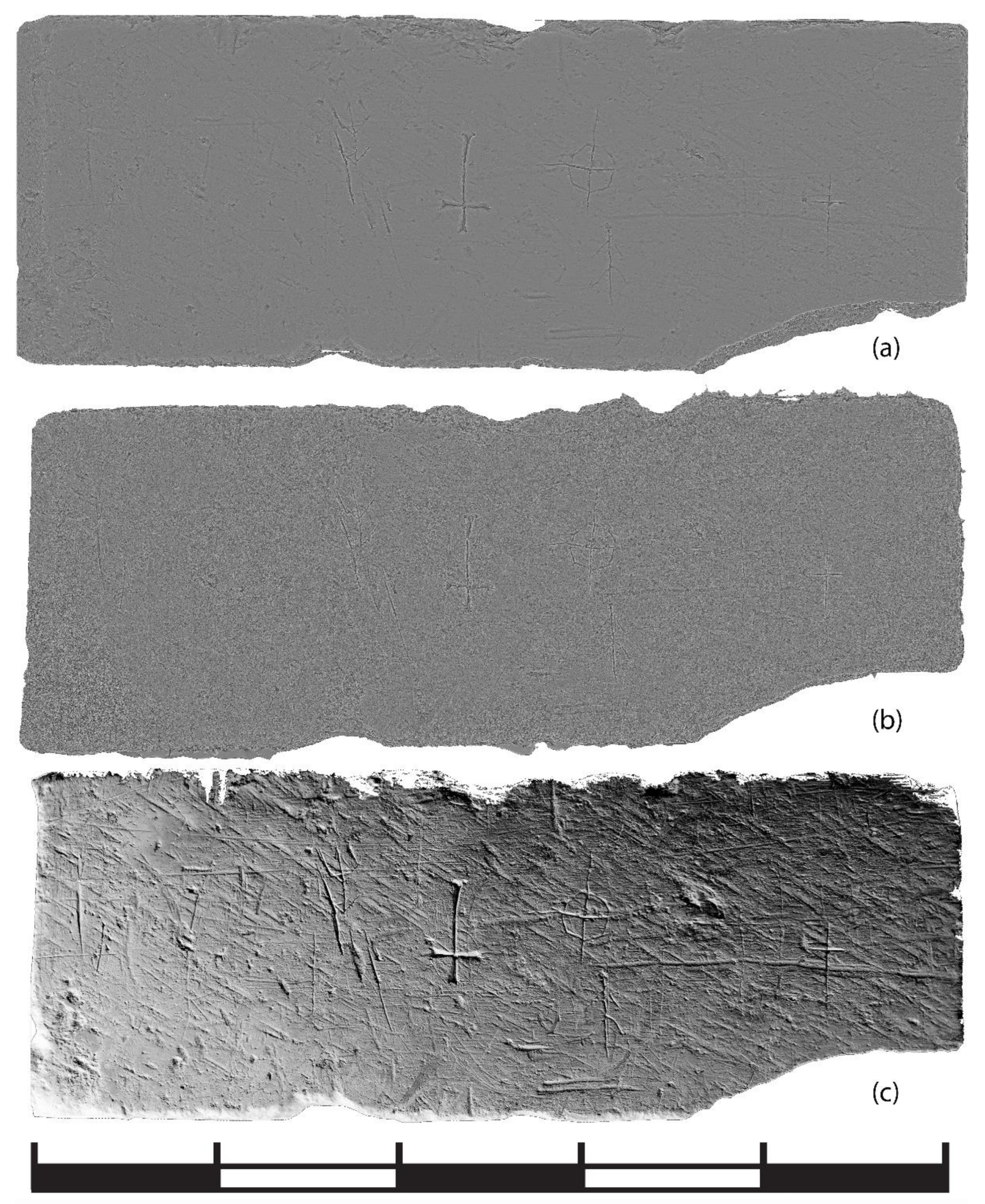

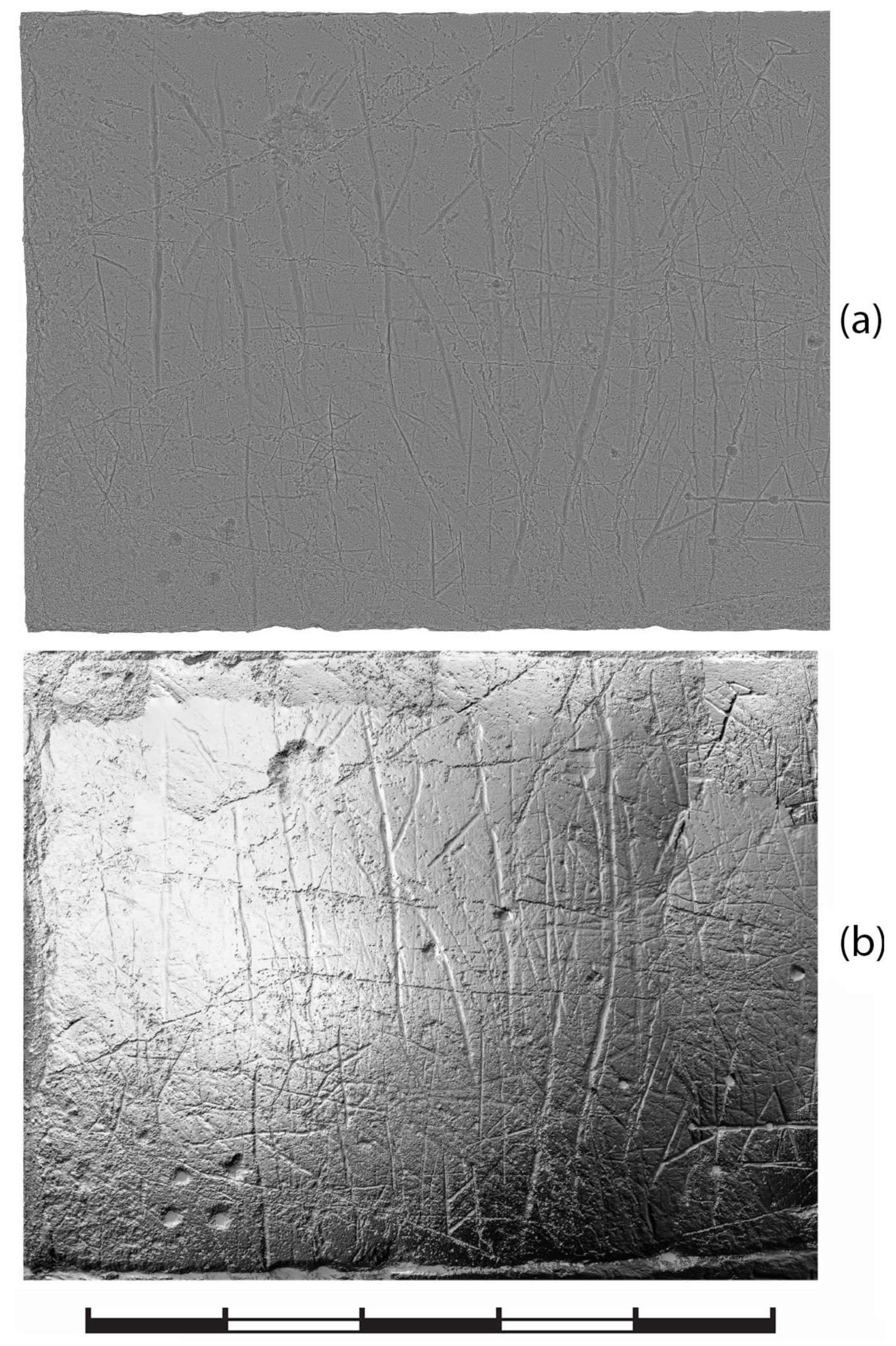

2.1. The Documented Surfaces

2.2. Methods

2.2.1. Image-Based Modelling

- Alignment: The position and orientation of the camera are calculated for each picture from homologous points in the areas where the pictures overlap.

- 3D point cloud generation: The matching points are used to compute a sparse 3D point cloud of X, Y and Z coordinates by Structure from Motion (SfM). Dense image matching algorithms are then used to densify the point cloud.

- 3D mesh reconstruction: The relevant points in the dense point cloud are connected by triangles, creating a 3D mesh.

- Texture projection: The 3D mesh is textured by projecting colors from the source images onto it.
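
MetaShape and RealityCapture carry out these steps internally, and their algorithms are not described here. As a minimal sketch of the core geometric idea only, the code below recovers one 3D point from a pair of homologous image points by midpoint triangulation, and projects a 3D point back into an image, which is how a texture color would be looked up. It assumes an ideal pinhole camera with no rotation or lens distortion and known camera positions; real SfM pipelines also estimate the camera poses and refine everything jointly (bundle adjustment). All function names and values are hypothetical.

```python
# Vector helpers on plain 3-tuples (no external dependencies).
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def add(a, b):
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

def scale(a, s):
    return (a[0] * s, a[1] * s, a[2] * s)

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def project(point, centre, f, cx=0.0, cy=0.0):
    """Pinhole projection for a camera at `centre` looking along +Z (no rotation)."""
    x, y, z = sub(point, centre)
    if z <= 0:
        raise ValueError("point lies behind the camera")
    return (cx + f * x / z, cy + f * y / z)

def ray_direction(uv, f, cx=0.0, cy=0.0):
    """Direction of the viewing ray through pixel `uv` for the same camera model."""
    u, v = uv
    return ((u - cx) / f, (v - cy) / f, 1.0)

def triangulate_midpoint(c1, d1, c2, d2):
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2."""
    w0 = sub(c1, c2)
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; no unique 3D point")
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    return scale(add(add(c1, scale(d1, s)), add(c2, scale(d2, t))), 0.5)

# Usage: two hypothetical camera positions 100 mm apart see the same point.
f = 1000.0  # hypothetical focal length in pixels
cam1, cam2 = (0.0, 0.0, 0.0), (100.0, 0.0, 0.0)
true_point = (30.0, 20.0, 500.0)
uv1, uv2 = project(true_point, cam1, f), project(true_point, cam2, f)
estimate = triangulate_midpoint(cam1, ray_direction(uv1, f), cam2, ray_direction(uv2, f))
```

With noise-free image coordinates the two rays intersect and the midpoint equals the original point; with real, noisy matches the rays miss each other slightly and the midpoint is a compromise between them.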

2.2.2. Reflectance Transformation Imaging

2.2.3. IBM and RTI Combined

- The 28 and 25 sets of 50 pictures taken of Surfaces A and B, respectively, were processed into 28 and 25 RTI models using RTI Processor.

- Two JPGs were exported from each RTI model in RTIViewer, using two of the rendering modes available in this software: Normals Visualization and Dynamic Multi Light. This step is elaborated below.

- The resulting 56 and 50 JPG exports were processed by IBM software.
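
The fitting model used inside RTI Processor and the exact rendering done by RTIViewer are not specified in this section. As a minimal sketch, assuming the original biquadratic Polynomial Texture Map (PTM) model, the code below fits six coefficients per pixel to the luminance observed under the 50 light positions of one dome capture, then estimates a surface normal from the light direction that maximises the fitted luminance. That per-pixel normal is the kind of information a normals rendering displays. The light layout, the synthetic pixel, and the RGB encoding of the normal (a common normal-map convention) are assumptions, not documented RTIViewer behavior.

```python
import math

def ptm_basis(lu, lv):
    """Biquadratic PTM basis for a light direction projected to (lu, lv)."""
    return [lu * lu, lv * lv, lu * lv, lu, lv, 1.0]

def solve(matrix, rhs):
    """Solve a small dense linear system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [value] for row, value in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular system: light directions do not constrain the fit")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= factor * aug[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][k] * x[k] for k in range(r + 1, n))) / aug[r][r]
    return x

def fit_ptm(light_dirs, luminances):
    """Least-squares PTM coefficients for one pixel via the normal equations."""
    ata = [[0.0] * 6 for _ in range(6)]
    atb = [0.0] * 6
    for (lu, lv), lum in zip(light_dirs, luminances):
        row = ptm_basis(lu, lv)
        for i in range(6):
            atb[i] += row[i] * lum
            for j in range(6):
                ata[i][j] += row[i] * row[j]
    return solve(ata, atb)

def ptm_normal(a):
    """Normal from the light direction (lu0, lv0) where the fitted luminance peaks."""
    a0, a1, a2, a3, a4, _ = a
    det = 4 * a0 * a1 - a2 * a2
    if abs(det) < 1e-12:
        return (0.0, 0.0, 1.0)  # no unique maximum: fall back to facing the camera
    lu0 = (a2 * a4 - 2 * a1 * a3) / det
    lv0 = (a2 * a3 - 2 * a0 * a4) / det
    nz = math.sqrt(max(0.0, 1.0 - lu0 * lu0 - lv0 * lv0))
    length = math.sqrt(lu0 * lu0 + lv0 * lv0 + nz * nz)
    return (lu0 / length, lv0 / length, nz / length)

def normal_to_rgb(n):
    """Common normal-map encoding: map each component from [-1, 1] to [0, 255]."""
    return tuple(round((c + 1.0) / 2.0 * 255.0) for c in n)

# Usage: 50 hypothetical dome lights (5 elevations x 10 azimuths), one synthetic pixel.
lights = []
for elevation_deg in (15, 30, 45, 60, 75):
    for k in range(10):
        el, az = math.radians(elevation_deg), math.radians(36 * k)
        lights.append((math.cos(el) * math.cos(az), math.cos(el) * math.sin(az)))

TRUE_COEFFS = [-0.5, -0.5, 0.0, 0.3, 0.2, 0.6]
samples = [sum(c * b for c, b in zip(TRUE_COEFFS, ptm_basis(lu, lv))) for lu, lv in lights]
coeffs = fit_ptm(lights, samples)
normal = ptm_normal(coeffs)
```

Several elevations are used because, on a single ring of lights, lu² + lv² is constant and the system cannot separate the quadratic terms from the constant term.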

3. Results

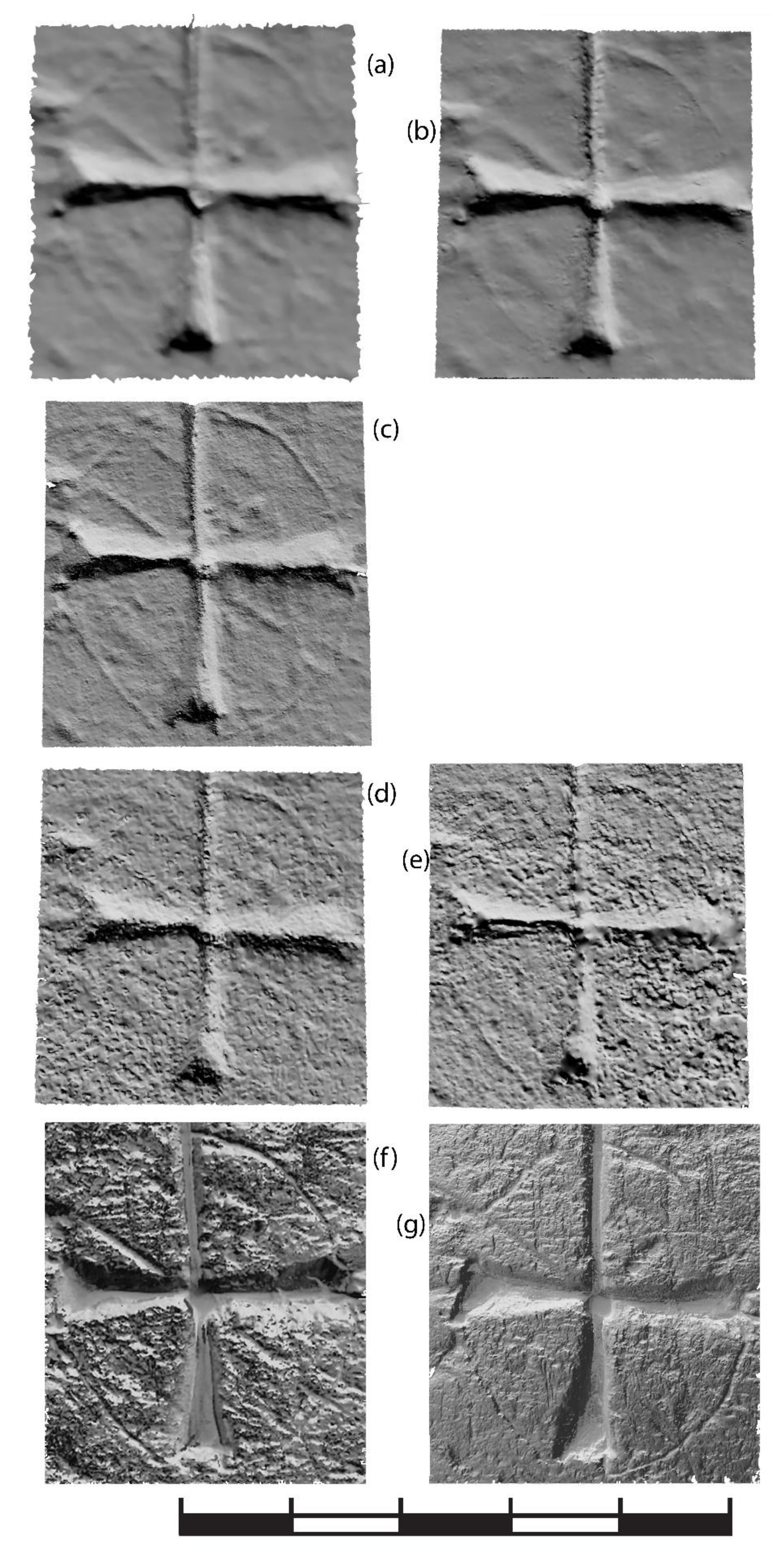

3.1. Usability

3.2. Time-Efficiency

3.3. Cost-Efficiency

3.4. Accuracy

4. Discussion

5. Conclusions

- None of the methods tested were optimal in all regards. Consequently, the choice of method must be based upon a prioritization of the reviewed factors.

- The two techniques that combine IBM and RTI gave positive results. Combo 2 (image acquisition with an RTI dome, followed by post-processing first in RTI software and then in IBM software) appears to be the best overall method based on the reviewed factors. It generates both RTI and 3D models and does so efficiently in terms of both time and cost. Although its 3D models are less accurate than those produced by the other methods, they can be fitted with highly detailed surface texture maps derived from the RTI processing. Alternatively, the numerous original pictures can be post-processed directly with more expensive IBM software (Combo 1). Although this method is neither cost- nor time-efficient, it generates the most accurate 3D models of the methods tested.

- The more expensive IBM software package, RealityCapture, was considerably more time-efficient than MetaShape. However, where the same pictures could be processed by both packages, the accuracy of the resulting 3D models differed only slightly. The evaluation of the IBM software packages' ability to produce high detail quality remains inconclusive.

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A—Specification and Settings of Hardware Used

Appendix B—Specification and Settings of Software Used

Appendix C—Specifications and Cost of Equipment Used

- Canon EOS 6D: $2000

- Nikon D90: $1462

- Canon EF 24 mm f/1.8: $2000

- Canon EF 50 mm f/1.4: $415

- Nikon AF-S Micro-Nikkor 60 mm f/1.4: $1189

- Zeiss Distagon T* 28 mm f/2: $1233

- Leofoto LT mount: $300

- Canon RS-80N3: $100

- Phottix Mitros Remote Flash: $500

- RTI Starter Kit: $650


| Method | Surface A: Pictures Taken | Surface A: Positions | Surface A: Time Used | Surface B: Pictures Taken | Surface B: Positions | Surface B: Time Used |
|---|---|---|---|---|---|---|
| IBM | 109 | 109 | 1 h 10 min | 171 | 171 | 45 min |
| H-RTI | 71 | 1 | 14 min | Not tested | - | - |
| RL-RTI, Combo 1, Combo 2 | 1400 | 28 | 105 min | 1250 | 25 | 100 min |

| Method | Software | Surface A: Pictures | Surface A: Time Used | Surface B: Pictures | Surface B: Time Used |
|---|---|---|---|---|---|
| IBM | MetaShape | 109 | 16 h 20 min | 171 | 16 h 50 min |
| IBM | RealityCapture | 109 | 1 h 28 min | 171 | 1 h 31 min |
| H-RTI | RTIBuilder | 71 | 18 min | Not tested | - |
| RL-RTI | RTI Processor | 1400 | 2 h | 1250 | 2 h 5 min |
| Combo 1 | MetaShape | 1400 | Failed | 1250 | Failed |
| Combo 1 | RealityCapture | 1400 | 18 h 46 min | 1250 | 8 h |
| Combo 2 | RTI Processor (preprocessing and exports) | 1400 | 3 h | 1250 | 2 h 45 min |
| Combo 2 | MetaShape | 56 | 8 h 12 min | 50 | 6 h 52 min |
| Combo 2 | RealityCapture | 56 | 34 min | 50 | 19 min |
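
The post-processing times above can be turned into a speed-up factor of RealityCapture over MetaShape for the runs where both packages succeeded. A minimal sketch that uses only the values in the table; the helper names are illustrative.

```python
def minutes(h=0, m=0):
    """Convert hours and minutes to minutes."""
    return 60 * h + m

# (MetaShape, RealityCapture) post-processing times from the table, in minutes.
times = {
    ("IBM", "A"): (minutes(16, 20), minutes(1, 28)),
    ("IBM", "B"): (minutes(16, 50), minutes(1, 31)),
    ("Combo 2", "A"): (minutes(8, 12), minutes(0, 34)),
    ("Combo 2", "B"): (minutes(6, 52), minutes(0, 19)),
}

# Speed-up = MetaShape time divided by RealityCapture time for the same pictures.
speedups = {key: ms / rc for key, (ms, rc) in times.items()}
for (method, surface), factor in speedups.items():
    print(f"{method}, Surface {surface}: RealityCapture {factor:.1f}x faster")
```

Combo 1 is left out because MetaShape failed on the full sets of 1400 and 1250 pictures.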

| Method | Equipment Cost | Software | Software Cost | Sum | Cost Evaluation |
|---|---|---|---|---|---|
| IBM | $4400 | MetaShape Professional | $3500 | $7900 | Moderate |
| IBM | $4400 | RealityCapture | $15,000 | $19,400 | High |
| Combo 1 | $5873 | RealityCapture + RTI Processor | $15,000 + Free | $20,873 | High |
| Combo 2 | $5873 | MetaShape Professional + RTI Processor | $3500 + Free | $9373 | Moderate |
| Combo 2 | $5873 | RealityCapture + RTI Processor | $15,000 + Free | $20,873 | High |
| H-RTI | $4350 | RTIBuilder | Free | $4352 | Low |
| RL-RTI | $5873 | RTI Processor | Free | $5873 | Low |
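
Each Sum in the cost table is the equipment cost plus the cost of every software licence in the workflow. A minimal sketch of that arithmetic, with the costs copied from the table and free software entered as 0; the `Setup` class is hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Setup:
    method: str
    equipment_usd: int
    software_usd: dict[str, int] = field(default_factory=dict)  # 0 = free software

    def total_usd(self) -> int:
        # Total cost = equipment + all software licences used in the workflow.
        return self.equipment_usd + sum(self.software_usd.values())

SETUPS = [
    Setup("IBM", 4400, {"MetaShape Professional": 3500}),
    Setup("IBM", 4400, {"RealityCapture": 15000}),
    Setup("Combo 1", 5873, {"RealityCapture": 15000, "RTI Processor": 0}),
    Setup("Combo 2", 5873, {"MetaShape Professional": 3500, "RTI Processor": 0}),
    Setup("Combo 2", 5873, {"RealityCapture": 15000, "RTI Processor": 0}),
    Setup("H-RTI", 4350, {"RTIBuilder": 0}),
    Setup("RL-RTI", 5873, {"RTI Processor": 0}),
]

for s in SETUPS:
    print(f"{s.method:8} {' + '.join(s.software_usd):40} ${s.total_usd():,}")
```

Keeping software as a per-package mapping makes it easy to swap one IBM package for another and see how much of the total is driven by the licence rather than the hardware.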

| Surface | Method | GSD (ground sampling distance) | MetaShape | RealityCapture |
|---|---|---|---|---|
| A | IBM | 0.1 mm | 1.7 M | 6.7 M |
| A | Combo 1 | 0.07 mm | Failed | 30.7 M |
| A | Combo 2 | 0.07 mm | 5.9 M | 18.2 M |
| B | IBM | 0.04 mm | 23.5 M | 68.6 M |
| B | Combo 1 | 0.07 mm | Failed | 25 M |
| B | Combo 2 | 0.07 mm | 5.9 M | 12.7 M |
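
The GSD column gives the size of one image pixel projected onto the documented surface. Under a simple pinhole model (an assumption that ignores lens distortion and surface tilt), GSD equals the sensor's pixel pitch times the camera-to-surface distance divided by the focal length. A minimal sketch; the 6.5 µm pixel pitch is a hypothetical value, not the authors' setup, and the 24 mm focal length is simply one of the lenses listed in Appendix C.

```python
def ground_sampling_distance_mm(pixel_pitch_um, focal_length_mm, distance_mm):
    """GSD = pixel pitch * camera-to-surface distance / focal length (pinhole model)."""
    return (pixel_pitch_um / 1000.0) * distance_mm / focal_length_mm

def distance_for_gsd_mm(target_gsd_mm, pixel_pitch_um, focal_length_mm):
    """Camera-to-surface distance at which one pixel covers `target_gsd_mm`."""
    return target_gsd_mm * focal_length_mm / (pixel_pitch_um / 1000.0)

# Usage with hypothetical values: 6.5 µm pixels behind a 24 mm lens.
pitch_um, focal_mm = 6.5, 24.0
for target in (0.1, 0.07, 0.04):
    d = distance_for_gsd_mm(target, pitch_um, focal_mm)
    print(f"GSD {target} mm -> about {d:.0f} mm from the surface")
```

Halving the distance or doubling the focal length halves the GSD, which is why finer GSDs require working closer to the surface or with longer lenses.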

| Method | Usability | Time-efficiency | Cost-efficiency | Accuracy |
|---|---|---|---|---|
| IBM-MS: IBM image acquisition and IBM post-processing (MetaShape) | Good + Can record large and uneven surfaces + Results in 3D models, high usability ÷ Well- and even-lit surfaces needed ÷ Ample workspace needed for uneven surfaces ÷ Photographic texture maps only | Poor ÷ Low time-efficiency off-site | Good | Good |
| IBM-RC: IBM image acquisition and IBM post-processing (RealityCapture) | As for IBM-MS | Excellent + Higher time-efficiency off-site | Poor | As for IBM-MS |
| H-RTI: Highlight RTI image acquisition and RTI post-processing | Fair + Can record larger and more uneven surfaces than RL-RTI ÷ Best suited for flat and small to medium surfaces ÷ Dark surroundings required ÷ Ample workspace required for larger surfaces ÷ Results in RTI models, low usability | Fair | Excellent | Excellent + Highly detailed RTI models |
| RL-RTI: RTI dome image acquisition and RTI post-processing | Good + Provides its own illumination + Not much workspace needed ÷ Best suited for flat and small surfaces ÷ Results in RTI models, low usability | Excellent | Good | Excellent + Highly detailed RTI models |
| Combo 1: RTI dome image acquisition and IBM post-processing | Good + Provides its own illumination + Not much workspace needed + Results in both 3D and RTI models ÷ Best suited for flat and small to medium surfaces ÷ Photographic texture maps only | Fair ÷ Large number of pictures results in low time-efficiency off-site | Poor ÷ Large number of pictures requires more expensive IBM software | Excellent + The most detailed 3D models of the techniques tested |
| Combo 2: RTI dome image acquisition and both RTI and IBM post-processing | Excellent + Provides its own illumination + Not much workspace needed + Results in both 3D and RTI models + Both photographic and surface texture maps available ÷ Best suited for flat and small to medium surfaces | Good + Low number of pictures results in higher time-efficiency off-site | Good + Low number of pictures allows use of low-cost software | Fair + Highly detailed RTI models ÷ The least detailed 3D models of the techniques tested |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Solem, D.-Ø.E.; Nau, E. Two New Ways of Documenting Miniature Incisions Using a Combination of Image-Based Modelling and Reflectance Transformation Imaging. Remote Sens. 2020, 12, 1626. https://doi.org/10.3390/rs12101626