Autonomous Underwater Monitoring System for Detecting Life on the Seabed by Means of Computer Vision Cloud Services

Abstract

1. Introduction

2. Related Work

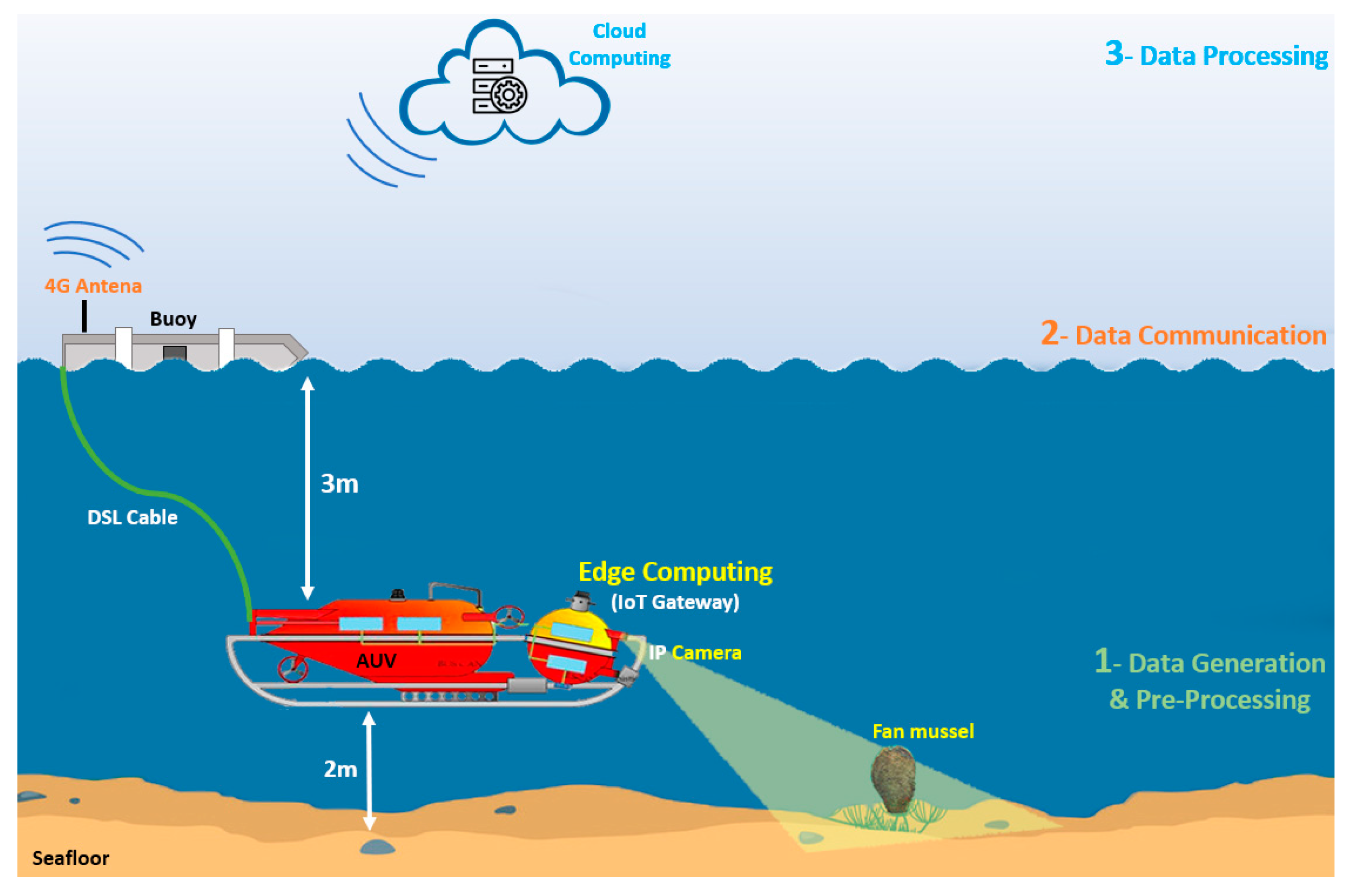

3. Proposed AUV-IoT Platform

- Node 1 (in the head of the vehicle) manages its movement, lighting, camera power, tilt reading (pitch and roll) and the acquisition of inertial unit variables.

- Node 2 (DVL: Doppler velocity logger) manages data acquisition and body tilt reading (pitch and roll).

- Node 3 governs GPS reading, engine management and control (propulsion, rudder and dive).

- Node 4 monitors marine instrumentation sensors (side-scan sonar, image sonar, microUSBL) and their energy management.

- The master node consists of a National Instruments single-board Remote Input/Output (NI sbRIO) 9606, the main vehicle controller. Its function in this network is to collect process information from each of the nodes and to send commands. It is also the link with the higher-level Ethernet network.

3.1. The AUV-IoT Architecture Development

| Algorithm 1. Specimen tracking algorithm |
|---|
| Start () |
| Step 1: |
| While (mission has not started) {} |
| Step 2: |
| If (mission has ended) |
| {End()} |
| Else |
| {Acquire frame and send to cloud} |
| {Get the answer} |
| If (accuracy > 20%) |
| {Go to step 3} |
| Else |
| {Go to step 2} |
| Step 3: |
| {Calculate the bounding box centre of the detected object} |
| {Calculate the distance between the centre of the detected nacre bounding box (C1) and the centre of the captured frame (C2)} |
| {Convert the distance (C = C2 − C1) into degrees (new heading and tilt setpoint)} |
| {Send command to sbRIO with new heading and tilt setpoint} |
| If (C == 0) |
| {Go to step 4} |
| Else |
| {Go to step 3} |
| Step 4: |
| {Send the command to sbRIO to set the speed (fixed speed setpoint)} |
| {Take images I1 and I2 in two different positions, where P1 and P2 are the pixel widths of the objects detected in both images} |
| {Calculate the distance D to the detected object from P1 and P2} |
| If (the calculated distance D > 2 m) |
| {Go to step 4} |
| Else |
| {Go to step 5} |
| Step 5: |
| {Get accuracy of the specimen image} |
| If (accuracy ≥ 80%) |
| {Save point, save picture and resume mission} |
| {Send command to sbRIO to save specimen’s position} |
| Else |
| {Go back to the main mission without saving. It is not a specimen} |
| {Go to step 2} |
| End () |
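Steps 2 to 5 of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: the `BoundingBox` type, the function names and the linear pixel-to-degree conversion based on a camera field of view are assumptions introduced here. Only the 20% and 80% accuracy thresholds, the 2 m distance and the offset C = C2 − C1 come from the algorithm.

```python
from dataclasses import dataclass
from typing import Tuple

# Thresholds taken from Algorithm 1.
DETECT_THRESHOLD = 0.20     # Step 2: accuracy needed to start tracking
CONFIRM_THRESHOLD = 0.80    # Step 5: accuracy needed to save the specimen
APPROACH_DISTANCE_M = 2.0   # Step 4: keep approaching while D exceeds this


@dataclass
class BoundingBox:
    """Hypothetical detection box in pixels (top-left corner plus size)."""
    left: float
    top: float
    width: float
    height: float

    def centre(self) -> Tuple[float, float]:
        return (self.left + self.width / 2, self.top + self.height / 2)


def offset_to_degrees(box: BoundingBox, frame_w: int, frame_h: int,
                      hfov_deg: float, vfov_deg: float) -> Tuple[float, float]:
    """Step 3: convert C = C2 - C1 from pixels into degrees.

    Assumes degrees scale linearly with pixels across the field of view
    (hfov_deg, vfov_deg are assumed camera parameters). The sign convention
    for heading and tilt depends on the vehicle and is not specified here.
    """
    c1x, c1y = box.centre()               # C1: centre of the detected box
    c2x, c2y = frame_w / 2, frame_h / 2   # C2: centre of the captured frame
    dx, dy = c2x - c1x, c2y - c1y
    return (dx * hfov_deg / frame_w, dy * vfov_deg / frame_h)


def next_step(step: int, accuracy: float = 0.0, centred: bool = False,
              distance_m: float = float("inf")) -> int:
    """Return the step that follows `step` according to Algorithm 1."""
    if step == 2:
        return 3 if accuracy > DETECT_THRESHOLD else 2
    if step == 3:
        return 4 if centred else 3
    if step == 4:
        return 4 if distance_m > APPROACH_DISTANCE_M else 5
    if step == 5:
        return 2  # back to the main mission, whether or not a point was saved
    raise ValueError(f"unknown step: {step}")


def is_specimen(accuracy: float) -> bool:
    """Step 5: the point and picture are saved only at 80% accuracy or more."""
    return accuracy >= CONFIRM_THRESHOLD
```

In a real control loop, the exact test C == 0 would probably be replaced by a small tolerance band, since a pixel offset rarely reaches exactly zero.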

3.2. IoT Gateway: The Edge Node and Connection to the Cloud

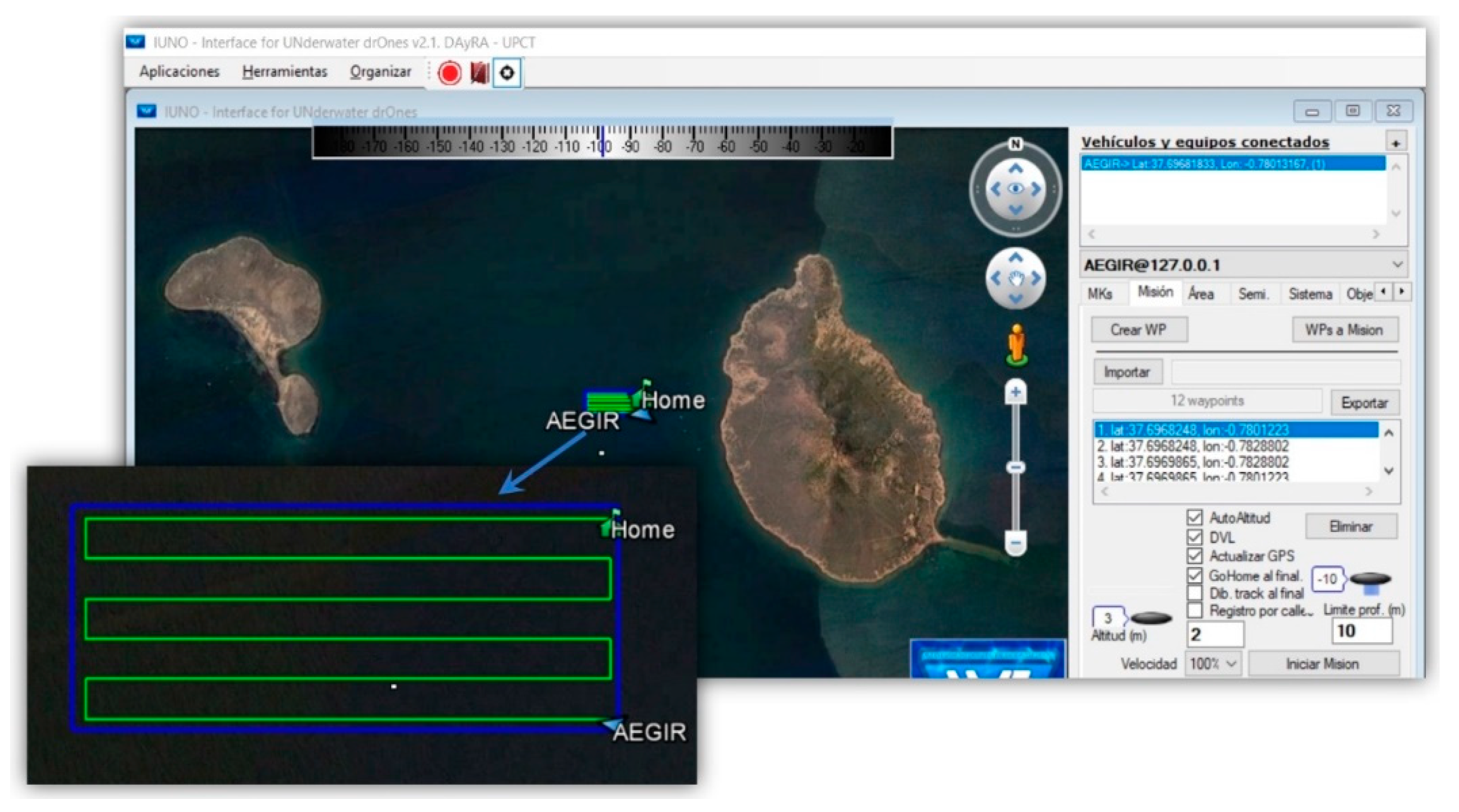

3.3. AUV Control

- CAN bus (reading and writing interface): There are a number of nodes connected to the vehicle’s CAN bus, whose network master is the sbRIO. Each of the nodes has a series of sensors and actuators connected. The function of these blocks is to receive information and send commands to the different nodes through the CANopen protocol. The type of data received or sent will depend on the function of the node.

- TCP/IP (reading and writing interface): This manages TCP/IP communications for receiving commands from IUNO and the IoT gateway, as well as sending navigation information from the vehicle to the rest of the equipment on the Ethernet network.

- Data manipulation: This is responsible for adapting the data formats from the different sources (CAN, inertial unit, IUNO) to a common format within the program and vice versa: e.g., conversion of latitude received through the CAN network interface (UINT8 array type, extracted from a buffer) to I32 data type.

- Data saving: This saves the process and navigation information in the sbRIO in TDMS (Technical Data Management Streaming) format files. TDMS is a binary measurement file format, focused on storing information in the smallest possible space. It can be exported to several formats (csv, xls, txt, etc.).

- Heading control/depth control/velocity control/head tilt control: Management of the different control loops for heading, depth, velocity and head tilt. These take on special importance in automatic or semi-automatic navigation modes.

- Thruster control: The previous timed loops yield a heading, depth or position setpoint. This module processes those setpoints to obtain the PWM (Pulse-Width Modulation) value to be applied to each of the vehicle’s engines.

- Automatic (IUNO)/manual mode navigation: An AEGIR vehicle, developed at the Division of Automation and Autonomous Robotics (DAyRA) of the Polytechnic University of Cartagena, can be handled in both modes: manual and automatic (IUNO). This timed loop is in charge of selecting the appropriate navigation source. Only the automatic mode is considered in this paper.

- Mission management: Once the mission created in IUNO is downloaded, this module manages each of the waypoints to which the vehicle must navigate, dispatching the different navigation commands for the heading control/depth control/position control timed loops. This module also handles the main navigation mode in normal operations and the specimen tracking navigation mode, as described in Section 7.
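The data manipulation step above mentions converting a latitude received as a UINT8 array into an I32 value. A minimal Python sketch of that conversion is given below. The function names and the buffer layout are hypothetical, and little-endian byte order is assumed because CANopen transmits multi-byte values least significant byte first; the scaling of the integer to degrees is not stated in the text and is left out.

```python
import struct


def can_bytes_to_i32(buffer: bytes, offset: int = 0) -> int:
    """Read a signed 32-bit integer from 4 bytes of a CAN payload buffer.

    Assumes little-endian byte order ("<i").
    """
    (value,) = struct.unpack_from("<i", buffer, offset)
    return value


def i32_to_can_bytes(value: int) -> bytes:
    """Inverse conversion: pack a signed 32-bit integer into 4 bytes."""
    return struct.pack("<i", value)


# Example payload: two bytes of hypothetical header followed by the value.
payload = bytes([0x00, 0x00]) + i32_to_can_bytes(-7801210)
raw_value = can_bytes_to_i32(payload, offset=2)
```

The same pair of helpers covers the "vice versa" direction, where a value from the program's common format has to be written back into a CAN frame.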

4. Artificial Intelligence and Vision-Based Object Recognition

4.1. Deep Learning for Object Detection

4.2. Convolutional Neural Network for Object Recognition

4.3. Object Detection Training in the Cloud

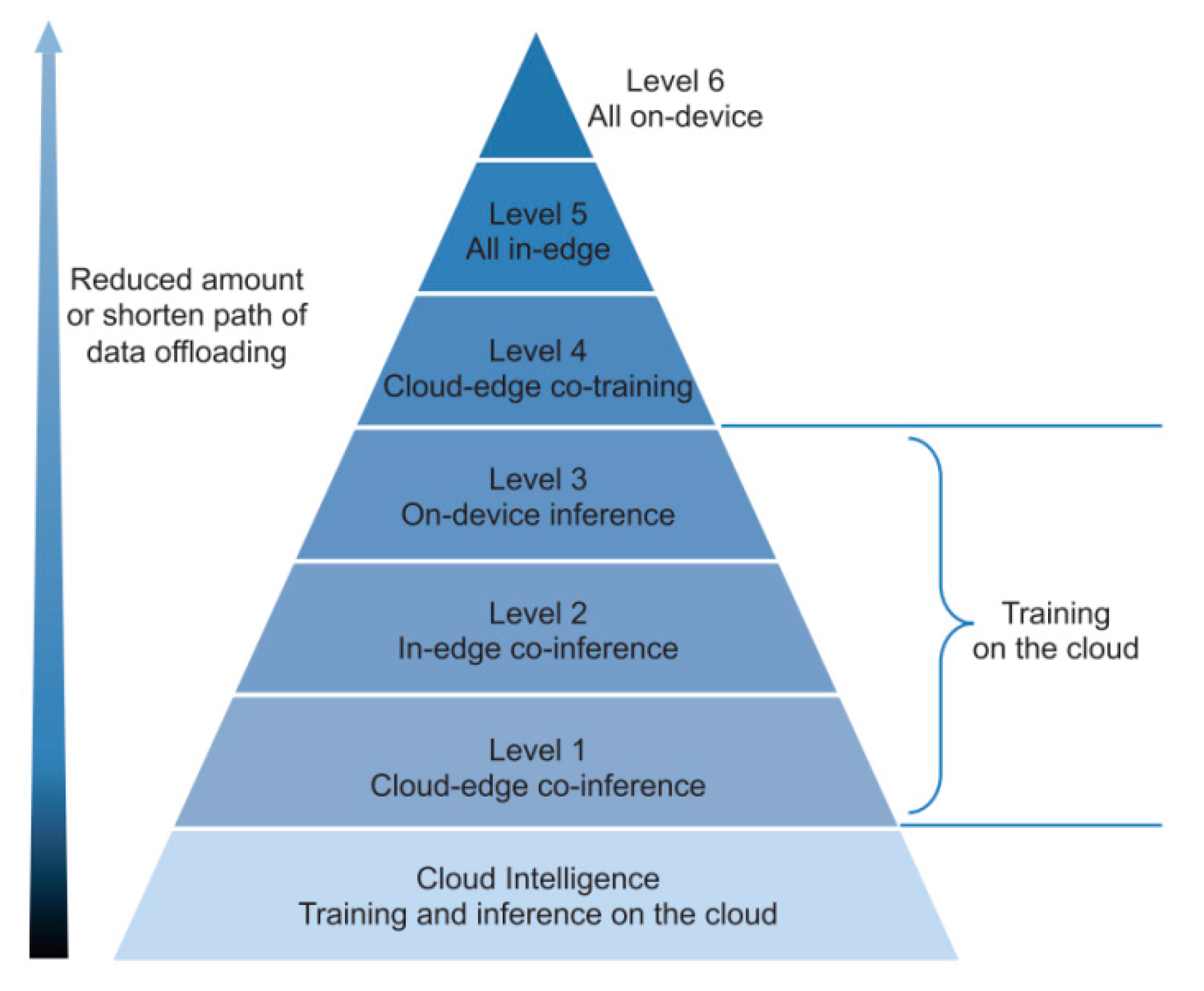

4.4. The Cloud AI at the Edge

5. Visual Servo Control and Distance Estimation

Servo Control Latency

6. Performance

6.1. Delay Assessment in the Proposed Platforms

6.1.1. Cloud Architecture

- (1) Tnav is the navigation sensor time,
- (2) Tsb1 is the acquisition time of the sensor data in sbRIO,
- (3) Tgt1 is the processing time of the first and second threads in the IoT gateway presented,
- (4) Tby1 is the transmission time from the AUV to the buoy,
- (5) Tcloud is the time needed to send photos to the cloud and receive the response results,
- (6) Tby2 is the transmission time of cloud results to the AUV,
- (7) Tgt2 is the processing time of the first, second, and third threads in the IoT gateway presented,
- (8) Tsb2 is the IoT gateway data acquisition time in sbRIO, and
- (9) Tact is the actuation time.
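As an illustration of how these nine components could be combined, the sketch below adds them into a single end-to-end delay. It assumes the stages run strictly one after another, so that the total is their plain sum; the class and field names are hypothetical and the numbers passed in are placeholders, not measurements.

```python
from dataclasses import dataclass, fields


@dataclass
class CloudDelays:
    """The nine delay components of the cloud architecture, in milliseconds."""
    t_nav: float    # (1) navigation sensor time
    t_sb1: float    # (2) sensor data acquisition in sbRIO
    t_gt1: float    # (3) IoT gateway processing, first and second threads
    t_by1: float    # (4) transmission from the AUV to the buoy
    t_cloud: float  # (5) sending photos to the cloud and receiving results
    t_by2: float    # (6) transmission of cloud results to the AUV
    t_gt2: float    # (7) IoT gateway processing, first to third threads
    t_sb2: float    # (8) IoT gateway data acquisition in sbRIO
    t_act: float    # (9) actuation time

    def total(self) -> float:
        """End-to-end delay, assuming the stages are strictly sequential."""
        return sum(getattr(self, f.name) for f in fields(self))


# Placeholder values only, chosen to make the arithmetic easy to follow.
example = CloudDelays(1, 2, 3, 4, 5, 6, 7, 8, 9)
example_total = example.total()
```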

6.1.2. Edge Architecture

6.2. Metrics

6.3. Latency Evaluation

7. Exploration Case Study

8. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Platform | TP | FP | FN | Precision | Recall | IoU |
|---|---|---|---|---|---|---|
| IBM | 28 | 2 | 8 | 0.933333 | 0.777778 | 0.82506 |
| | 22 | 3 | 13 | 0.916666 | 0.611111 | 0.83364 |
| Azure cloud | 33 | 4 | 3 | 0.891892 | 0.916667 | 0.86601 |
| Azure edge | 24 | 3 | 11 | 0.888889 | 0.666667 | 0.678634 |
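The precision, recall and IoU columns follow the standard object-detection definitions, which can be sketched as below. The function names and the `(x1, y1, x2, y2)` box format are assumptions made for this example; how the per-image IoU values were aggregated into the single figure reported per platform is not stated here.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), with x2 > x1 and y2 > y1


def precision(tp: int, fp: int) -> float:
    """Fraction of detections that are correct: TP / (TP + FP)."""
    return tp / (tp + fp)


def recall(tp: int, fn: int) -> float:
    """Fraction of ground-truth objects that are found: TP / (TP + FN)."""
    return tp / (tp + fn)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# IBM row of the table: TP = 28, FP = 2, FN = 8.
ibm_precision = precision(28, 2)
ibm_recall = recall(28, 8)
```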

| | Total Response Time (ms) | | | Cloud Response Time (ms) | | | IoT Computing Time (ms) | | | Capturing and Writing Time (ms) | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Platform | Min | Max | Mean | Min | Max | Mean | Min | Max | Mean | Min | Max | Mean |
| IBM | 1407 | 4060 | 2064 | 1280 | 3896 | 1935 | 0 | 0 | 0 | 93 | 192 | 129 |
| | 1291 | 4384 | 1696 | 1160 | 4071 | 1520 | 0 | 0 | 0 | 92 | 196 | 130 |
| Azure | 1298 | 4572 | 1703 | 1171 | 4435 | 1571 | 0 | 0 | 0 | 92 | 196 | 131 |
| Azure edge | 623 | 687 | 634 | 0 | 0 | 0 | 523 | 595 | 532 | 93 | 194 | 130 |

| Corner | Latitude | Longitude |
|---|---|---|
| North east | 37.697635° | −0.780121° |
| North west | 37.697635° | −0.782876° |
| South west | 37.696825° | −0.782876° |
| South east | 37.696825° | −0.780121° |
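To give a sense of scale for the rectangle defined by these four corners, its side lengths can be estimated with the haversine formula. The sketch below is an illustration only: it assumes a spherical Earth with a mean radius of 6371 km, and the function and variable names are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, spherical approximation


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


# Corner coordinates copied from the table (latitude, longitude).
CORNERS = {
    "north east": (37.697635, -0.780121),
    "north west": (37.697635, -0.782876),
    "south west": (37.696825, -0.782876),
    "south east": (37.696825, -0.780121),
}

north_south_m = haversine_m(*CORNERS["north east"], *CORNERS["south east"])
east_west_m = haversine_m(*CORNERS["north east"], *CORNERS["north west"])
```

Under these assumptions the rectangle is roughly 90 m from north to south and about 240 m from east to west.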

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Salhaoui, M.; Molina-Molina, J.C.; Guerrero-González, A.; Arioua, M.; Ortiz, F.J. Autonomous Underwater Monitoring System for Detecting Life on the Seabed by Means of Computer Vision Cloud Services. Remote Sens. 2020, 12, 1981. https://doi.org/10.3390/rs12121981