Remote Crop Mapping at Scale: Using Satellite Imagery and UAV-Acquired Data as Ground Truth

Abstract

1. Introduction

2. Materials and Methods

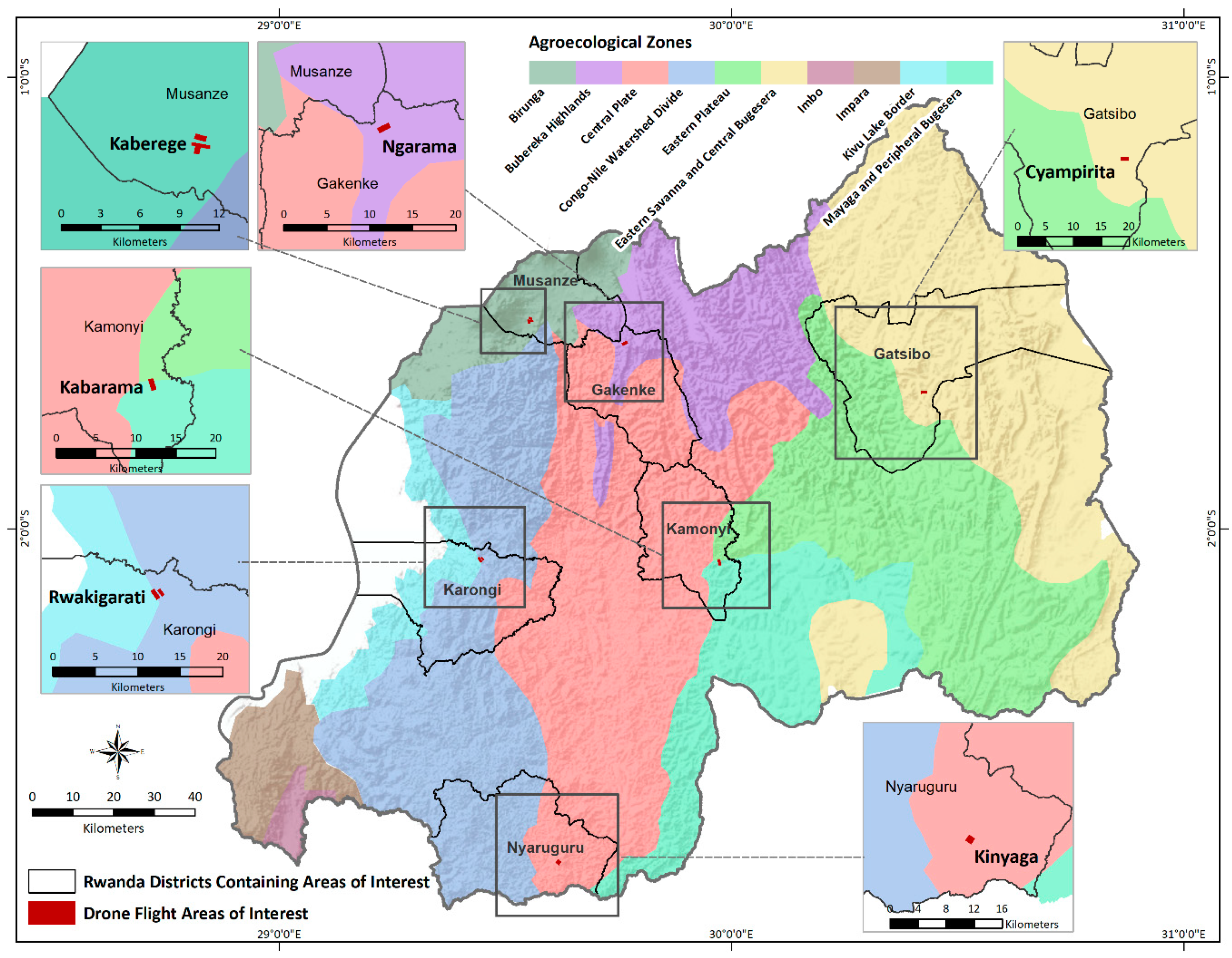

2.1. Study Site

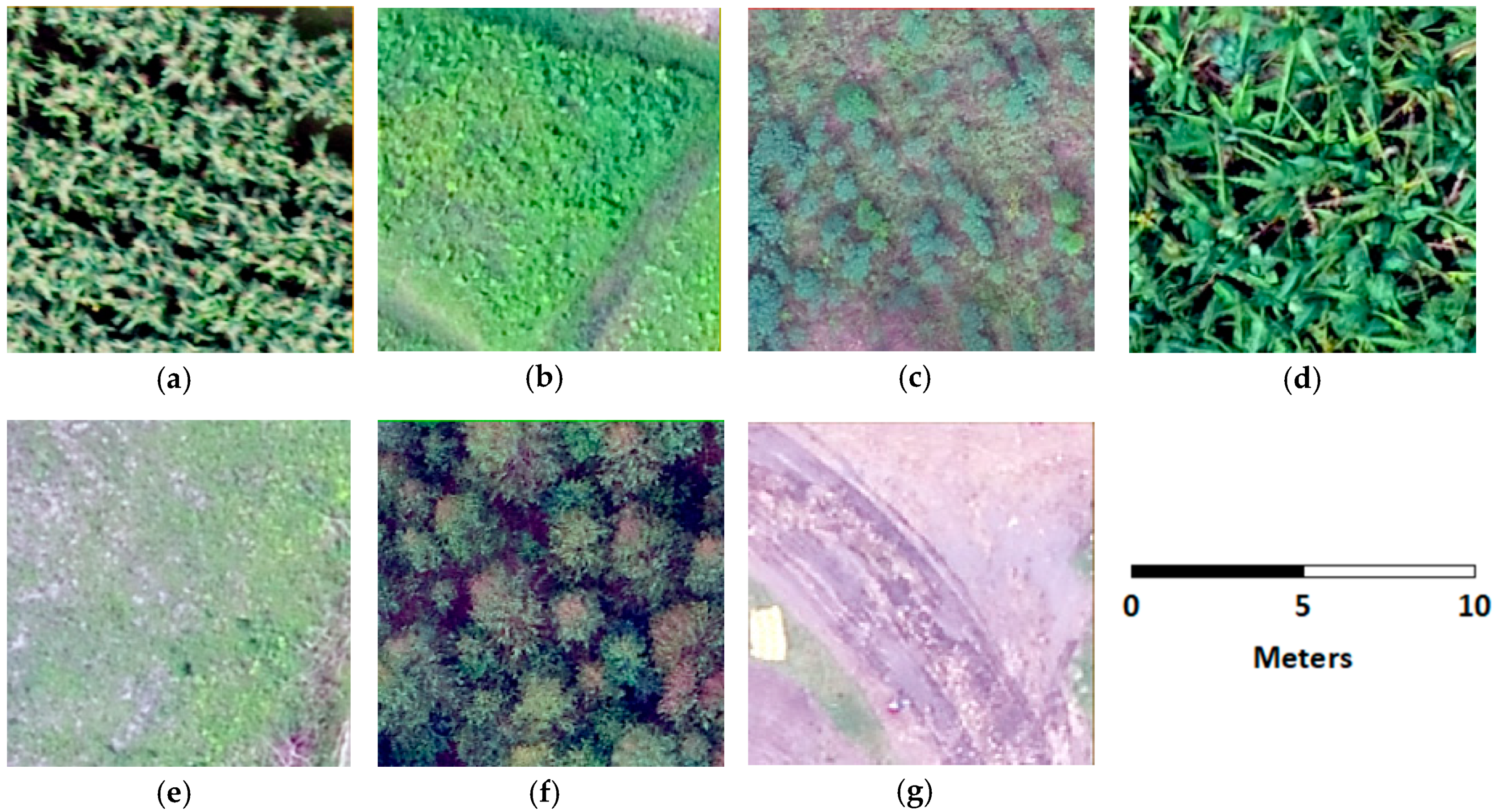

2.2. Ground-Truth Data

1. Maize;

2. Beans (bush beans and climbing beans);

3. Cassava;

4. Bananas (all varieties);

5. Other vegetation (OtherVeg), representing grassland and crops not in classes 1 through 4;

6. Trees, representing small tree stands, forest, and woodlands;

7. Non-vegetative (NonVeg) land cover, representing bare ground, buildings, structures, and roads.

2.3. Satellite Data Processing

2.4. Cropped Land Modeling

3. Results

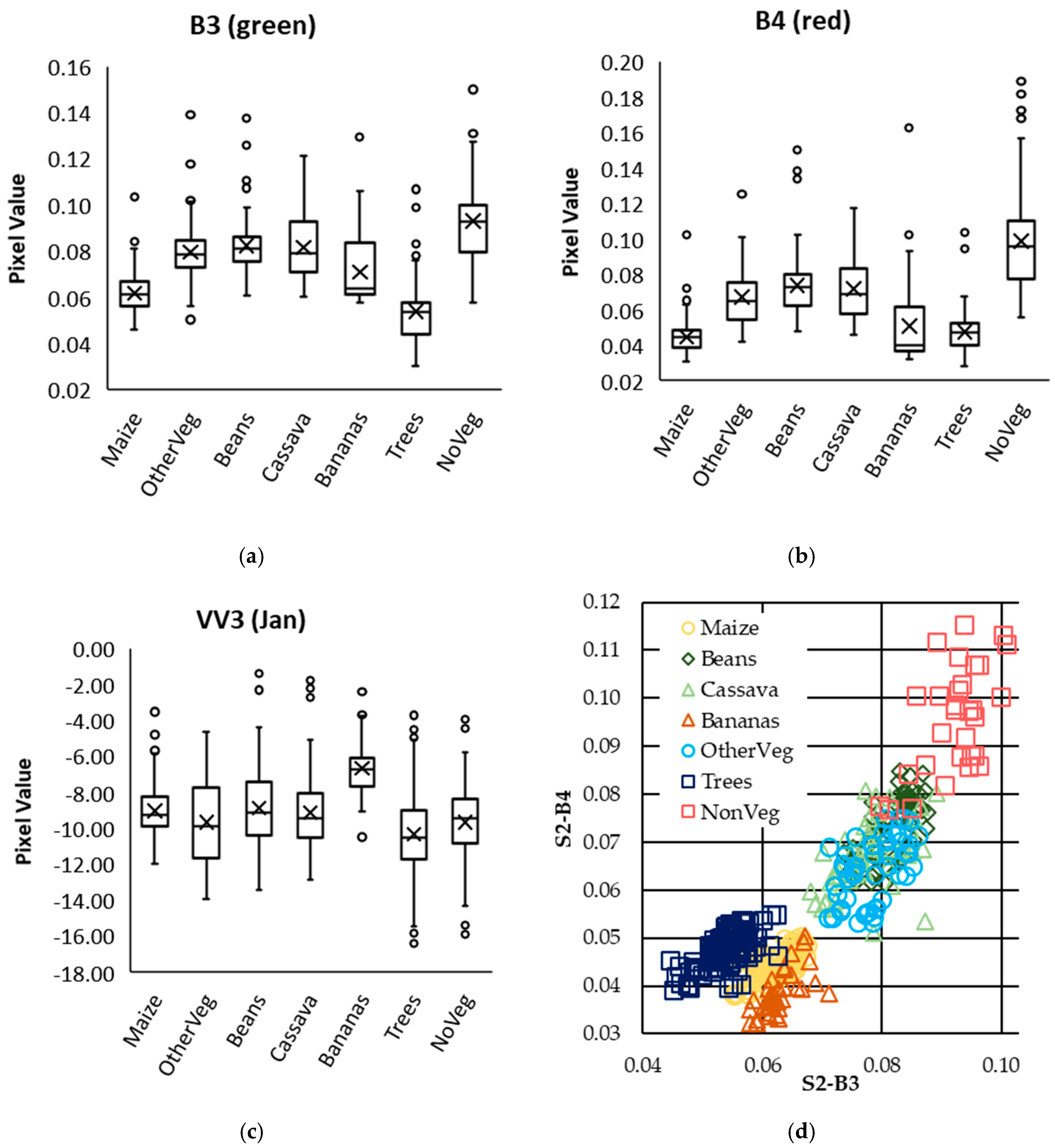

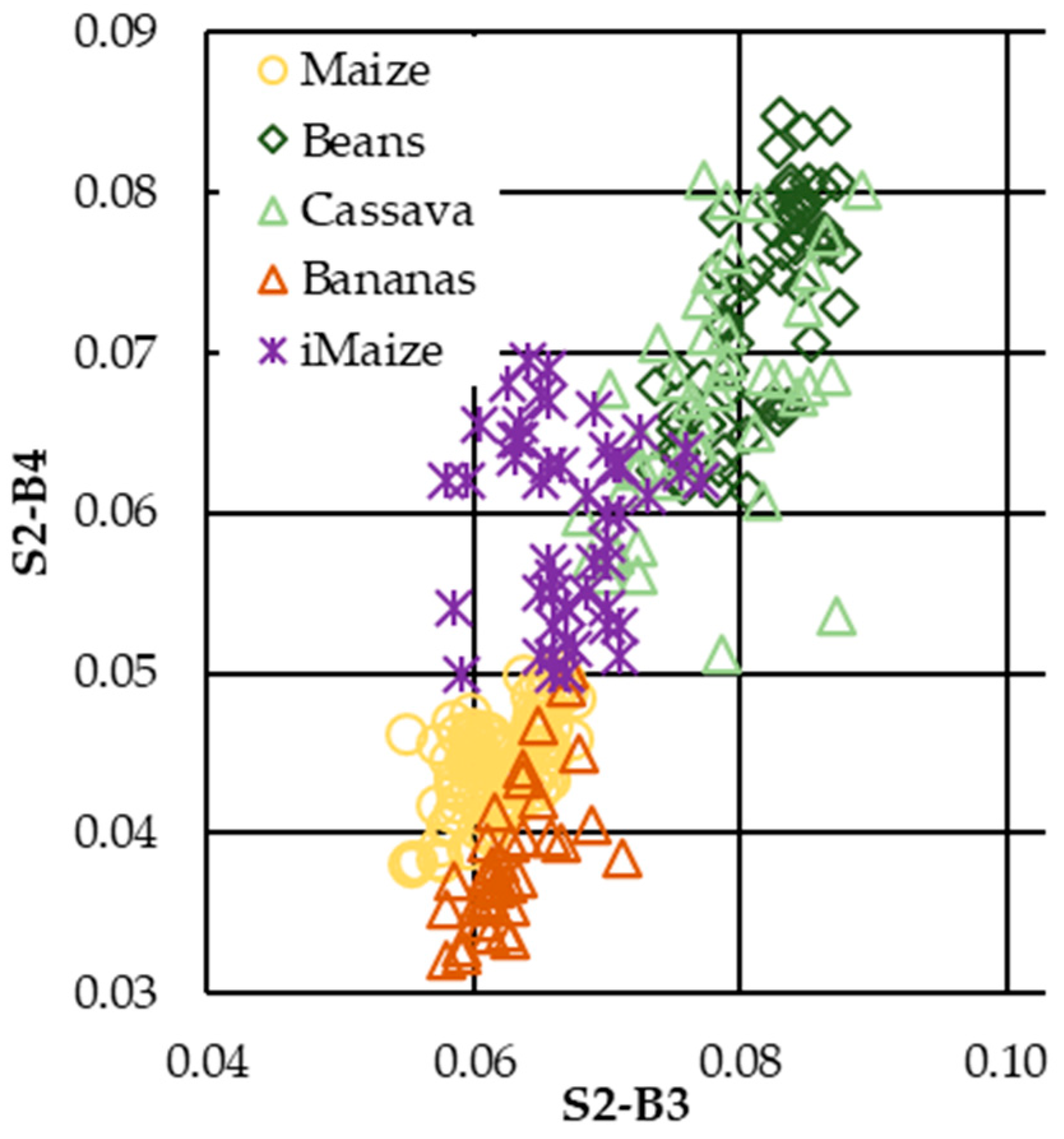

3.1. Discrimination of Labeled Categories

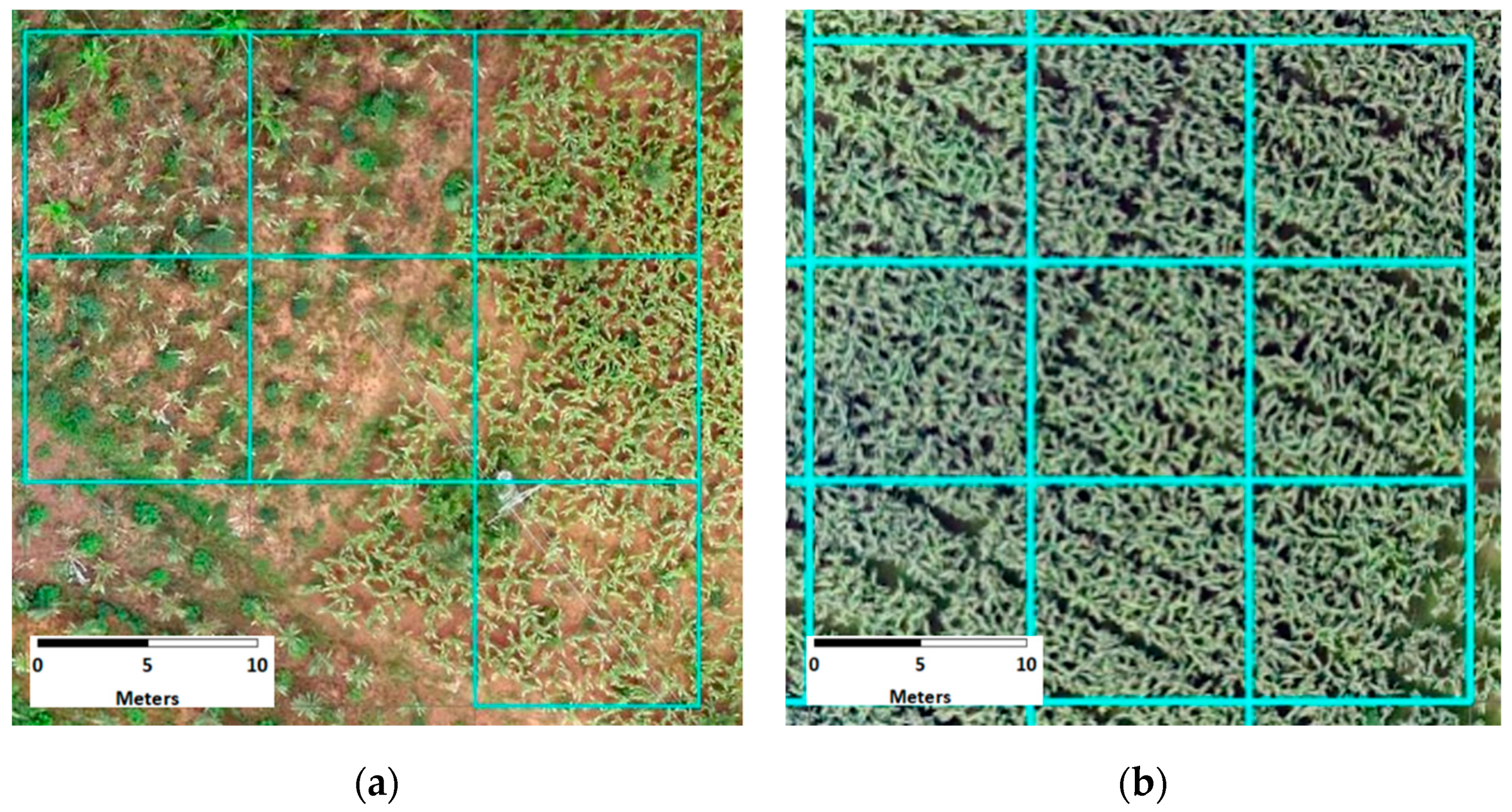

3.2. Intercropped Maize (iMaize)

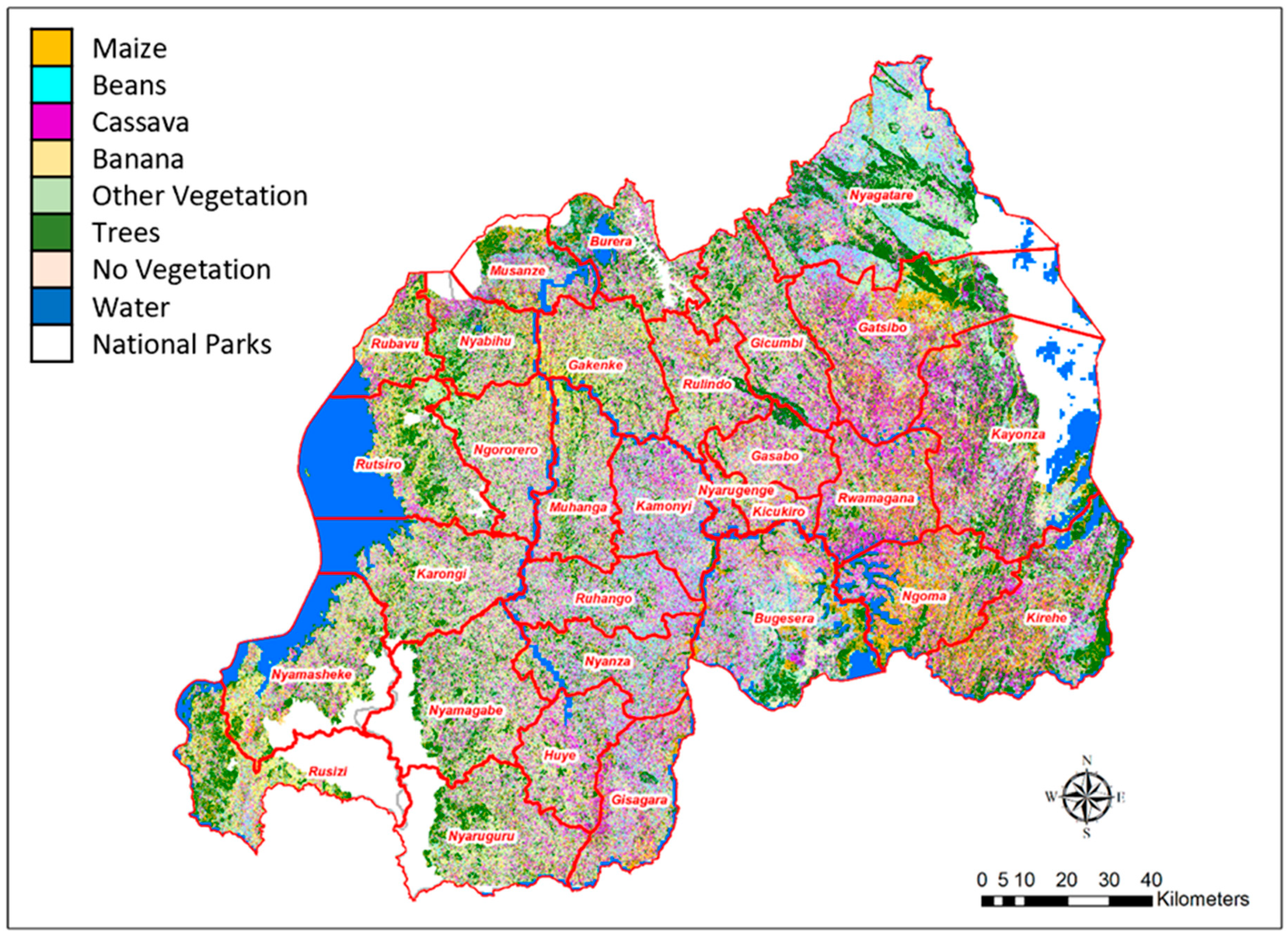

3.3. Cropped Land Model Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Location | Agro-Ecological Zone | Flight #1 | Flight #2 | Flight #3 |
|---|---|---|---|---|
| Kaberege | Birunga | 27 December 2018 | 29 January 2019 | 19 February 2019 |
| Kinyaga | Central Plate/Eastern Plateau | 12 December 2018 | 24 January 2019 | 21 February 2019 |
| Kabarama | Mayaga and Peripheral Bugesera | 10 December 2018 | 21 January 2019 | 16 February 2019 |
| Cyampirita | Eastern Savanna and Central Bugesera | 17 December 2018 | 25 January 2019 | 18 February 2019 |
| Ngarama | Buberuka Highlands | -- | 30 January 2019 | 20 February 2019 |
| Rwakigarati | Congo-Nile Watershed Divide/Kivu Lake Border | 27 December 2018 | 31 January 2019 | 22 February 2019 |

| Class | Kbg | Kin | Kbm | Cym | Nga | Rwa | Total |
|---|---|---|---|---|---|---|---|
| Maize | 130 | 37 | 11 | 101 | 8 | 2 | 289 |
| Beans | 33 | 8 | 69 | 20 | 7 | 7 | 144 |
| Cassava | 0 | 17 | 53 | 47 | 6 | 12 | 135 |
| Bananas | 4 | 12 | 42 | 14 | 9 | 4 | 85 |
| OtherVeg | 12 | 46 | 29 | 47 | 0 | 9 | 143 |
| Trees | 105 | 30 | 56 | 104 | 0 | 0 | 295 |
| NonVeg | 37 | 38 | 29 | 26 | 5 | 25 | 160 |
| Total | 321 | 188 | 289 | 359 | 35 | 59 | 1251 |

| S2 Flight Date | 35MQU | 35MRU | 35MQT | 35MRT |
|---|---|---|---|---|
| 2018-12-04 | 77% | 98% | 93% | 65% |
| 2018-12-09 | 100% | 100% | 100% | 100% |
| 2018-12-14 | 44% | 39% | 19% | 51% |
| 2018-12-24 | 79% | 87% | 90% | 76% |
| 2018-12-29 | 39% | 52% | 19% | 18% |
| 2019-01-03 | 49% | 29% | 33% | 21% |
| 2019-01-08 | 37% | 34% | 36% | 63% |
| 2019-01-13 | 25% | 12% | 7% | 14% |
| 2019-01-18 | 81% | 100% | 100% | 89% |
| 2019-01-23 | 6% | 12% | 26% | 7% |
| 2019-01-28 | 31% | 22% | 13% | 4% |
| 2019-02-02 | 40% | 40% | 54% | 35% |
| 2019-02-07 | 91% | 100% | 82% | 45% |
| 2019-02-12 | 36% | 77% | 37% | 66% |
| 2019-02-17 | 99% | 97% | 98% | 97% |
| 2019-02-22 | 100% | 100% | 98% | 99% |
| 2019-02-27 | 35% | 12% | 12% | 3% |

| Class | Maize | Beans | Cassava | Bananas | OtherVeg | Trees | NonVeg | % Accuracy |
|---|---|---|---|---|---|---|---|---|
| Maize | 52 | 1 | 1 | 2 | 0 | 1 | 0 | 91 ± 4 |
| Beans | 1 | 16 | 2 | 0 | 0 | 0 | 0 | 84 ± 8 |
| Cassava | 1 | 1 | 12 | 1 | 1 | 0 | 2 | 67 ± 11 |
| Bananas | 2 | 0 | 1 | 11 | 0 | 1 | 0 | 73 ± 11 |
| OtherVeg | 1 | 4 | 2 | 1 | 21 | 1 | 0 | 72 ± 8 |
| Trees | 4 | 0 | 1 | 0 | 1 | 53 | 0 | 90 ± 4 |
| NonVeg | 0 | 5 | 1 | 0 | 0 | 0 | 16 | 73 ± 9 |
| Total Pts | 61 | 27 | 20 | 15 | 23 | 56 | 18 | -- |
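The "% Accuracy" column in the confusion matrix above can be read as the diagonal count divided by the row total, with the ± term as a binomial standard error. Both readings are assumptions, since the table does not state how the column was derived. The sketch below applies them to the tabulated counts; the names `MATRIX`, `row_accuracy`, and `ACCURACY` are illustrative only. Under these assumptions the computed values round to the tabulated figures for six of the seven classes; for OtherVeg the row total gives 70 rather than 72.

```python
import math

CLASSES = ["Maize", "Beans", "Cassava", "Bananas", "OtherVeg", "Trees", "NonVeg"]

# Counts copied from the confusion matrix above, one list per table row.
MATRIX = [
    [52, 1, 1, 2, 0, 1, 0],
    [1, 16, 2, 0, 0, 0, 0],
    [1, 1, 12, 1, 1, 0, 2],
    [2, 0, 1, 11, 0, 1, 0],
    [1, 4, 2, 1, 21, 1, 0],
    [4, 0, 1, 0, 1, 53, 0],
    [0, 5, 1, 0, 0, 0, 16],
]


def row_accuracy(matrix):
    """Return (accuracy %, standard error %) for each row of a square matrix.

    Assumption: accuracy = diagonal count / row total, and the uncertainty
    is the binomial standard error sqrt(p * (1 - p) / n).
    """
    results = []
    for i, row in enumerate(matrix):
        n = sum(row)
        p = row[i] / n
        se = math.sqrt(p * (1 - p) / n)
        results.append((100 * p, 100 * se))
    return results


ACCURACY = dict(zip(CLASSES, row_accuracy(MATRIX)))

for name, (acc, se) in ACCURACY.items():
    print(f"{name:9s} {acc:5.1f} ± {se:4.1f}")
```

Summing the columns of `MATRIX` reproduces the "Total Pts" row, which is a quick way to check that the counts were transcribed correctly.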

| Model Class | Model Area (ha) | SAS Category | 2019A SAS (ha) | Difference |
|---|---|---|---|---|
| 1. Maize | 222,570 | Maize | 215,159 | 3% |
| 2. Beans | 319,548 | Beans | 299,443 | 7% |
| 3. Cassava | 322,060 | Cassava | 195,135 | 65% |
| 4. Bananas | 137,784 | Bananas | 253,996 | −46% |
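The "Difference" column above is consistent with a relative difference of the modeled area against the 2019A SAS area, that is, (model − SAS) / SAS. This formula is inferred from the numbers rather than stated in the table, so the following is a minimal sketch under that assumption. The names `AREAS_HA` and `percent_difference` are illustrative.

```python
# Areas in hectares copied from the table above: (model area, 2019A SAS area).
AREAS_HA = {
    "Maize": (222_570, 215_159),
    "Beans": (319_548, 299_443),
    "Cassava": (322_060, 195_135),
    "Bananas": (137_784, 253_996),
}


def percent_difference(model_ha, survey_ha):
    """Relative difference of the model estimate from the survey, in percent.

    Assumption: the survey (SAS) area is the reference in the denominator.
    """
    return 100.0 * (model_ha - survey_ha) / survey_ha


DIFFERENCES = {
    crop: percent_difference(model_ha, survey_ha)
    for crop, (model_ha, survey_ha) in AREAS_HA.items()
}

for crop, diff in DIFFERENCES.items():
    print(f"{crop:8s} {diff:+.0f}%")
```

A positive value means the model area exceeds the survey area (as for cassava), and a negative value means the model area falls short of it (as for bananas).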

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Hegarty-Craver, M.; Polly, J.; O’Neil, M.; Ujeneza, N.; Rineer, J.; Beach, R.H.; Lapidus, D.; Temple, D.S. Remote Crop Mapping at Scale: Using Satellite Imagery and UAV-Acquired Data as Ground Truth. Remote Sens. 2020, 12, 1984. https://doi.org/10.3390/rs12121984
