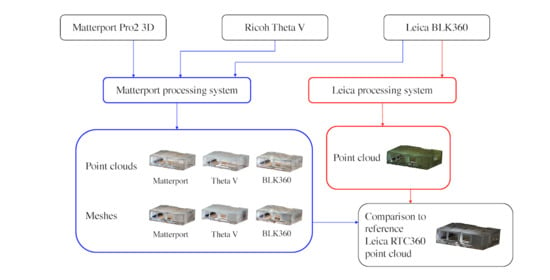

4.1. Analysis of Data Acquisition and Processing Times

In terms of data acquisition times, longer-range instruments such as the BLK360 become advantageous in larger open spaces, as they require fewer scans to cover the entire space. For the spaces used in this study, however, this advantage is offset by the shorter scan durations of the Theta V and Matterport, which also enable them to cover occluded areas more quickly than the BLK360. While the intention of the comparison was to study the capabilities of the sensor systems as is, in the state in which they are delivered to a user, calibrating the sensor systems could potentially improve the results. The Matterport can be calibrated using a set of TLS-scanned targets and a comparison of their coordinates, which has been shown to improve performance and eliminate the possibility of systematic errors in the sensor system [3]. As the Theta V is a dual fisheye lens camera, its calibration is challenging [60], but its predecessor, the Theta S, has been successfully calibrated [61]. The self-calibration of the TLS can be conducted using either point or planar targets to ensure adequate data quality, though correlation between the calibration parameters, particularly between the scanner rangefinder offset and the position of the scanner, must be accounted for. Using an asymmetric target field and measuring tilt angle observations has been found to reduce this correlation, thus improving the calibration [62].
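The target-based coordinate comparison mentioned above can be illustrated with a generic least-squares similarity transform (the Umeyama method) between target coordinates measured by the sensor under test and by a TLS. This is a minimal sketch under our own assumptions, not the calibration procedure of [3]: the function names are hypothetical, at least three non-collinear targets with known correspondences are assumed, and a fitted scale factor departing from 1 is only one possible indicator of a systematic range error.

```python
import numpy as np


def similarity_transform(src, dst):
    """Least-squares similarity transform (Umeyama) mapping src onto dst.

    src, dst: (n, 3) arrays of corresponding target coordinates, e.g. targets
    measured by the sensor under test (src) and by a TLS (dst). Hypothetical
    helper for illustration. Returns scale c, rotation r (3x3) and translation
    t such that dst is approximately c * r @ p + t for each src point p.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    n = src.shape[0]
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    x, y = src - mu_src, dst - mu_dst          # centred coordinates
    cov = y.T @ x / n                          # cross-covariance matrix
    u, d, vt = np.linalg.svd(cov)
    s = np.eye(3)
    if np.linalg.det(u) * np.linalg.det(vt) < 0:
        s[2, 2] = -1.0                         # prevent a reflection
    r = u @ s @ vt
    var_src = (x ** 2).sum() / n               # variance of the source targets
    c = np.trace(np.diag(d) @ s) / var_src
    t = mu_dst - c * r @ mu_src
    return c, r, t


def target_residuals(src, dst):
    """Per-target residual vectors after the best-fit similarity transform."""
    c, r, t = similarity_transform(src, dst)
    fitted = c * np.asarray(src, dtype=float) @ r.T + t
    return np.asarray(dst, dtype=float) - fitted, c
```

Residual vectors that remain large after the fit would point to errors that a single rotation, translation and scale cannot absorb.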

The processing times differed notably between the sensor systems, as shown in Table 3 and Table 4. The processing time may even influence the choice of sensor system; for example, the processing of the Theta V data of the Tetra hall initially failed after 36 h and required more than ten hours to complete on a second attempt. Processing times in excess of 12 h may already complicate the daily operation of the systems. The Theta V data also took the longest to process among the hall 101 data sets, but the difference was considerably smaller, and at slightly under two hours, the processing is comparatively quick. For smaller spaces, the Theta V can be a viable option in terms of the required time resources, though the 173.5 m² Tetra hall proved difficult to process, to the point of limiting the feasibility of the Theta V in large spaces.

The geometric accuracy of the tested sensor systems with automatic processing needs to be adequate for the measurements conducted on the resulting point clouds and models. In addition, sufficiently dense point clouds or mesh models are required for accurate reconstruction and detailed visualization, though a high density does not necessarily reflect the quality of the point cloud or model.

4.2. Point Cloud Density and Mesh Triangle Counts

As seen in Table 3 and Table 4, the difference in point counts between the Matterport-processed point clouds is smaller than the difference between the Matterport- and Leica-processed BLK360 point clouds, as the Matterport processing limits the point clouds to a 1-cm grid by default unless the algorithm detects details smaller than 1 cm. This detection differs depending on the sensor system used and can also produce false positives, where the processing detects details in the Matterport point cloud that do not exist in reality, as shown in Figure 4. Conversely, small objects may be missing from the Theta V point cloud.
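To illustrate how a 1-cm grid limit caps point counts, the sketch below applies a generic voxel-grid filter that replaces all points in each 1-cm cell with their centroid. Matterport's actual decimation algorithm is not described here, so this is an assumed stand-in: it ignores the exception for details smaller than 1 cm, and `voxel_downsample` is a hypothetical helper.

```python
import numpy as np


def voxel_downsample(points, voxel=0.01):
    """Replace all points falling into the same cubic cell by their centroid.

    points: (n, 3) array in metres; voxel: cell edge length (0.01 m = 1 cm).
    Generic voxel-grid filter, not Matterport's proprietary processing.
    """
    points = np.asarray(points, dtype=float)
    keys = np.floor(points / voxel).astype(np.int64)    # integer cell index per point
    _, inverse, counts = np.unique(keys, axis=0, return_inverse=True, return_counts=True)
    inverse = inverse.reshape(-1)                       # keep 1-D across NumPy versions
    sums = np.zeros((counts.size, 3))
    np.add.at(sums, inverse, points)                    # accumulate coordinates per cell
    return sums / counts[:, None]                       # one centroid per occupied cell
```

Because at most one point survives per occupied cell, the output size is bounded by the number of occupied 1-cm cells, regardless of how densely the raw data sample each surface.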

The Leica processing does not limit the point cloud to a perceptible grid, resulting in a large difference in the point counts of the BLK360 point clouds depending on the processing system. The Leica processing produces a dense point cloud, containing 2175% and 742% of the points of the Matterport-processed BLK360 data for the Tetra hall and hall 101, respectively. The markedly lower density of the Matterport-processed point clouds may limit their suitability for applications requiring the visualization of point clouds, as the textures of the space or small details may not be visible in a sparse point cloud.

Comparing the mesh triangle counts to the point counts of the point clouds, the triangle counts vary less with room size. This is likely a result of decimation in the Matterport processing. Most notably, the BLK360 triangle count is lower for the larger Tetra hall than for the smaller hall 101, which is remarkable given that the curtains lining the walls of the Tetra hall contain complex shapes to reconstruct. The Theta V is an outlier in both cases, however, with a triangle count several times that of the other systems despite its point clouds having lower point counts.

4.3. Distance Analysis of Indoor Space Geometries

As shown in Table 5 and Table 7, the C2C distances of the Matterport and BLK360 point clouds of the room geometries are similar, with an improvement in accuracy for the BLK360 when Leica processing is used.
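The C2C distances compared in Table 5 and Table 7 are nearest-neighbour distances between point clouds. A minimal sketch of that computation follows. It assumes distances are measured from each point of the compared cloud to its nearest neighbour in the reference cloud (the direction is an assumption), and it uses a chunked brute-force search in NumPy for self-containment, whereas clouds with millions of points would normally be handled with a spatial index such as a KD-tree. `c2c_distances` is a hypothetical helper.

```python
import numpy as np


def c2c_distances(compared, reference, chunk=256):
    """Distance from each point in `compared` to its nearest neighbour in `reference`.

    Brute force, processed in chunks of `chunk` query points to bound memory
    (each chunk builds a chunk x len(reference) x 3 difference array).
    """
    compared = np.asarray(compared, dtype=float)
    reference = np.asarray(reference, dtype=float)
    out = np.empty(len(compared))
    for start in range(0, len(compared), chunk):
        q = compared[start:start + chunk]
        diff = q[:, None, :] - reference[None, :, :]     # pairwise coordinate differences
        out[start:start + chunk] = np.linalg.norm(diff, axis=2).min(axis=1)
    return out
```

The resulting array can be passed directly to whatever summary statistics are reported, such as the mean or the share of points within 1 cm.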

Figure 2 shows the difference between the sensor systems’ capabilities in a large space: the Matterport point cloud shows moderate deviations in the ceiling and the curtain along the walls, while the Theta V point cloud is increasingly skewed towards the left part of the image and also has areas of clearly higher deviation along the curtain.

Figure 5 shows the deviation of the Theta V point clouds along the walls: for hall 101, the shape is skewed, though it follows the general shape of the wall, while in the Tetra hall, the shape of the curtain has not been captured.

The deviations of the Matterport point cloud are consistent with the findings of [4], where the errors in an indoor setting generally fell within the one-percent deviation stated by the manufacturer. While [9] present a TLS–Matterport comparison in which the ceiling height forces the Matterport to exceed its recommended range, our test sites have ceiling heights well within the range of the Matterport. The deviations in the areas within its range appear similar to those in [9], and similar for both of our test sites. The Matterport-processed BLK360 point cloud and the Matterport point cloud exhibit similar characteristics in all metrics, while the Theta V point cloud has larger deviations with a broader spread. With 53 percent of its points within 1 cm of the hall 101 room geometry reference point cloud, as noted in Table 7, the Theta V falls well below the Matterport, which performs worst of the remaining sensors.

Figure 6 shows the distribution of points in the hall 101 room geometry, displaying the 90th and 99th percentile deviations as well as the mean deviations. Used together with the 90th and 99th percentile deviations, the mean indicates the spread of deviations, as a mean close to the 90th percentile deviation may point to outliers skewing the mean upwards. For the meshes, the deviation is calculated as the distance from a point in the reference point cloud to the closest point on the mesh. Both distributions measure the ability of the sensor system to reconstruct geometry: a high 99th percentile deviation indicates inconsistencies in the presence of elements compared with the reference, affecting completeness and correctness, while the 90th percentile deviation measures accuracy and, when high, also indicates severe deficiencies in completeness and correctness. The Theta V shows such high values, while the Matterport and the BLK360 with Matterport processing provide better and similar results for point clouds and meshes alike. The Leica-processed BLK360 point cloud, in turn, is superior to all other data sets, including the Matterport-processed BLK360 data.
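The summary metrics discussed above (mean, 90th and 99th percentile deviation, and share of points within 1 cm) can be computed from any array of C2C or C2M distances. The sketch below assumes distances in metres and treats "within 1 cm" as strictly below 0.01 m. The `mean_over_p90` ratio is a hypothetical addition expressing the comparison between the mean and the 90th percentile described in the text; a value approaching 1 would suggest outliers pulling the mean upwards.

```python
import numpy as np


def deviation_summary(distances, threshold=0.01):
    """Summary statistics of C2C or C2M distances given in metres.

    Returns the mean, 90th and 99th percentile deviations, the share of
    points strictly below `threshold` (assumed 1 cm), and the hypothetical
    mean-to-90th-percentile ratio.
    """
    d = np.asarray(distances, dtype=float)
    mean = float(d.mean())
    p90, p99 = (float(v) for v in np.percentile(d, [90, 99]))
    return {
        "mean": mean,
        "p90": p90,
        "p99": p99,
        "share_within": float((d < threshold).mean()),
        "mean_over_p90": mean / p90 if p90 > 0 else float("nan"),
    }
```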

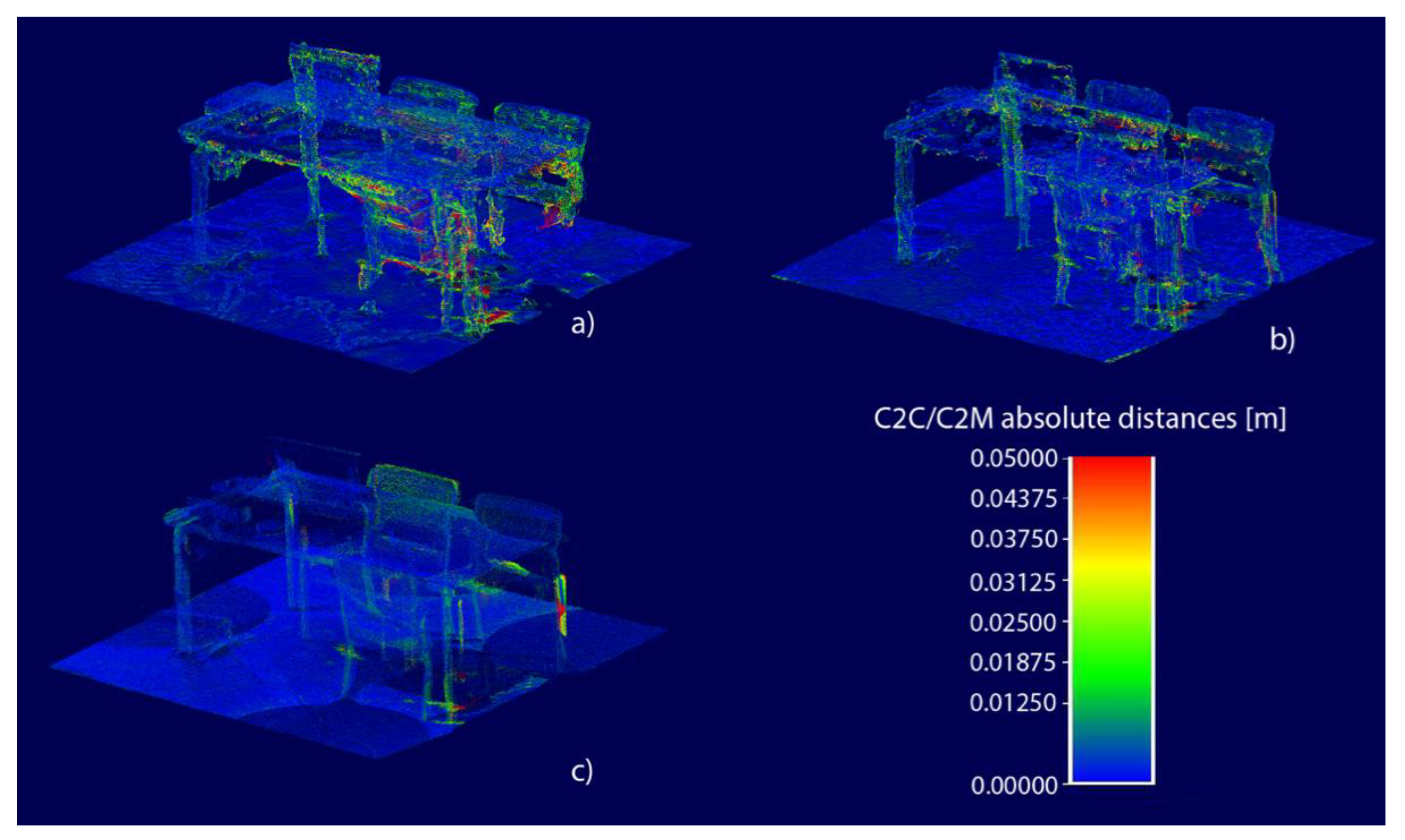

In the meshes, the Theta V was an even greater outlier than in the point clouds, with only 17 percent of the points in the Tetra room geometry reference cloud at a distance below 1 cm from the mesh. Although the Theta V mesh contains more triangles than the other two meshes combined, this does not translate into smaller C2M distances, as seen in Figure 2 and Figure 3. Unlike for the point clouds, the Matterport-processed BLK360 clearly outperforms the Matterport.
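The C2M deviation used here, the distance from a reference point to the closest point on the mesh, can be sketched with the standard closest-point-on-triangle test described in Ericson's Real-Time Collision Detection. This is a swapped-in textbook technique, not necessarily what the processing software in this study implements; the helper names are hypothetical, the distances are unsigned, and the loop over every face only illustrates the definition, as meshes with millions of triangles would need a spatial index.

```python
import numpy as np


def closest_point_on_triangle(p, a, b, c):
    """Closest point to p on triangle abc, via Voronoi-region tests (Ericson)."""
    ab, ac, ap = b - a, c - a, p - a
    d1, d2 = ab @ ap, ac @ ap
    if d1 <= 0 and d2 <= 0:
        return a                                    # vertex region A
    bp = p - b
    d3, d4 = ab @ bp, ac @ bp
    if d3 >= 0 and d4 <= d3:
        return b                                    # vertex region B
    vc = d1 * d4 - d3 * d2
    if vc <= 0 and d1 >= 0 and d3 <= 0:
        return a + (d1 / (d1 - d3)) * ab            # edge region AB
    cp = p - c
    d5, d6 = ab @ cp, ac @ cp
    if d6 >= 0 and d5 <= d6:
        return c                                    # vertex region C
    vb = d5 * d2 - d1 * d6
    if vb <= 0 and d2 >= 0 and d6 <= 0:
        return a + (d2 / (d2 - d6)) * ac            # edge region AC
    va = d3 * d6 - d5 * d4
    if va <= 0 and (d4 - d3) >= 0 and (d5 - d6) >= 0:
        w = (d4 - d3) / ((d4 - d3) + (d5 - d6))
        return b + w * (c - b)                      # edge region BC
    denom = 1.0 / (va + vb + vc)                    # inside the face: barycentric
    return a + ab * (vb * denom) + ac * (vc * denom)


def c2m_distances(points, vertices, faces):
    """Unsigned distance from each reference point to the closest mesh point."""
    points = np.asarray(points, dtype=float)
    vertices = np.asarray(vertices, dtype=float)
    out = np.empty(len(points))
    for i, p in enumerate(points):
        best = np.inf
        for f in faces:                             # brute force over all triangles
            q = closest_point_on_triangle(p, vertices[f[0]], vertices[f[1]], vertices[f[2]])
            best = min(best, float(np.linalg.norm(p - q)))
        out[i] = best
    return out
```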

Figure 7 displays the two point clouds, showing the relative consistency of the BLK360 between the point clouds and the meshes in comparison to the Matterport.

The mean deviations do not differ significantly, though the Matterport exhibited a higher variance. The Theta V remains the outlier of the three tested sensor systems, showing larger C2M distances than the BLK360 and Matterport in every metric. In [10], panoramic photogrammetry is used to automatically generate a model from Samsung Gear360 images. With the default projection, the errors are larger than those we obtained with the Theta V, though by using a custom projection, the authors of [10] were able to achieve a higher degree of accuracy in photogrammetric modeling.

In a detailed segment, the results in Table 6 and Table 8 show a clearer difference between the Matterport and the Matterport-processed BLK360 data than is the case for the room geometry. As in the room geometry, the Theta V remains clearly the worst-performing sensor system. The choice of processing system for the BLK360 data clearly impacts the quality of the point cloud, with the Leica processing providing more accurate results, though the Matterport-processed BLK360 point cloud remains superior to the Matterport point cloud.

Figure 8 displays the difference between the Matterport point cloud and the two BLK360 point clouds of the hall 101 segment.

The authors of [9] show a TLS–Matterport comparison in which the Matterport cannot accurately represent detailed areas. The choice of processing method makes a larger difference for the BLK360 in the detailed segment than for the room geometry, as the Leica-processed BLK360 point cloud exhibits the best results in every metric. A benefit of the Matterport processing for the BLK360, however, is the option of an online walkthrough, and the measurement data captured by operating the scanner through Matterport’s Capture app can also be processed with Leica’s proprietary processing system.

In the meshes of the segments, the Matterport outperforms the BLK360 in both spaces, with a larger share of points within 1 cm of the reference point cloud. The Theta V still exhibits the largest deviations, though it performs slightly better in the segment mesh than in the point cloud, as shown in Figure 9. The chairs by the desk have not been reconstructed in either Theta V data set, whereas the other sensor systems are capable of reconstructing them.

The results of the hall 101 segment follow those of the Tetra segment, with similar differences between the sensor systems. Among the segment point clouds, the BLK360 outperforms the Matterport regardless of the processing system, as shown in Figure 8. The opposite is true for the meshes, where the Matterport is ahead of the BLK360 in every metric. This may indicate that Matterport’s processing system is best suited to Matterport’s own sensor, despite also supporting the Leica BLK360, which can provide more accurate point clouds, particularly when processed with Leica’s processing system. If a higher-quality mesh is desired, Leica-processed point clouds must be meshed with third-party software. The Theta V performs at a level similar to that in the Tetra segment in the point cloud, but its accuracy drops further in the mesh processing. The meshes of the hall 101 segment are presented in Figure 10.

In both segments, the Theta V performs poorly in reconstructing details and even larger objects, causing significant completeness deficiencies in the data. Comparing the RGB-colored hall 101 segment point clouds of the RTC360 and Theta V in Figure 11a,b, significant differences are immediately apparent. The computer on the table is missing entirely, and the furniture is poorly defined.

Figure 11c shows the points of the RTC360 point cloud at a distance larger than the 90th percentile deviation from the Theta V point cloud; as the RTC360 point cloud contains more details, the areas not captured by the Theta V can easily be distinguished. The points above the 99th percentile deviation in Figure 11d show the areas with the most significant completeness issues.

Figure 11e shows the points with a deviation larger than 1 cm, which account for 43 percent of all points. These points are found throughout the point cloud, though less so on the surface of the table.
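The selections in Figure 11c–e amount to thresholding the distances from each RTC360 point to the Theta V point cloud. A minimal sketch, assuming those distances are already computed (for example with a nearest-neighbour search) and given in metres; `deviation_masks` is a hypothetical helper, and the returned boolean masks can be used to index the point array for display.

```python
import numpy as np


def deviation_masks(distances, threshold=0.01):
    """Boolean masks for points whose deviation exceeds the 90th percentile,
    the 99th percentile, or a fixed threshold (assumed 1 cm)."""
    d = np.asarray(distances, dtype=float)
    p90, p99 = np.percentile(d, [90, 99])
    return {
        "above_p90": d > p90,          # cf. Figure 11c-style selection
        "above_p99": d > p99,          # cf. Figure 11d-style selection
        "above_threshold": d > threshold,  # cf. Figure 11e-style selection
    }
```

Averaging a mask, e.g. `deviation_masks(d)["above_threshold"].mean()`, gives the share of points above the threshold, which is how a figure such as the 43 percent above 1 cm would be expressed.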

In all tested scenarios, the Matterport performs reasonably well, with its share of points below 1 cm within at most eight percentage points of the BLK360 with Matterport processing, and with the Matterport ahead in the Tetra segment at 73 versus 64 percent. While the BLK360 mostly provided the best results among the Matterport-processed data, the Leica-processed BLK360 point cloud showed the lowest C2C distances up to the 90th percentile in every case. The room geometry mean deviations of the Leica-processed BLK360 point cloud were 8 mm in the Tetra conference hall and 2 mm in the hall 101 test site, supporting the accuracy described in [3,40], though the deviations of the Matterport-processed point clouds and meshes were larger, and the difference was more pronounced in the detailed segments. The BLK360 uses a dedicated tripod and is incompatible with most tripods used for other instruments; the tripod sets the scanner base at a height of 110 cm, which barely allows the scanner to capture the surface of a table of average height. In the Matterport-processed mesh of the Tetra segment displayed in Figure 2d, the top of the desk is mostly absent, causing large discrepancies between the mesh and the reference point cloud. Should the BLK360 be used from a greater height than that of the proprietary tripod, the results would be expected to improve, given its high accuracy in the areas in full view of the instrument. The other sensor systems can be set up on multiple types of tripods and used at any height.

The Theta V is the clear outlier in every scenario, consistently showing the weakest performance of the tested sensor systems. While featureless areas are difficult for image-based sensors to model because they lack points with which to connect the images, the processing extracted sufficient geometry for the Theta V to create a full model of the room geometries. The largest deviations are found in the details of the segments, as the Theta V is incapable of modeling small objects; the objects on the tables are not present in either model. Beyond missing small objects entirely, the Theta V also struggles to model furniture, with objects reduced to horizontal or vertical surfaces, e.g., the chairs by the table in the hall 101 segment, as seen in Figure 3d. Thus, the Theta V is unsuited for projects in which the shapes of objects within the space must be reconstructed with a reasonable degree of accuracy. It nevertheless remains a feasible alternative for obtaining the general geometry of a space.