Abstract

Ship detection in synthetic aperture radar (SAR) images is becoming a research hotspot. In recent years, with the rise of artificial intelligence, deep learning has almost dominated the SAR ship detection community owing to its higher accuracy, faster speed, less human intervention, etc. However, there is still no reliable deep learning SAR ship detection dataset that can meet the practical migration application of ship detection in large-scene space-borne SAR images. To solve this problem, this paper releases the Large-Scale SAR Ship Detection Dataset-v1.0 (LS-SSDD-v1.0) from Sentinel-1, for small ship detection under large-scale backgrounds. LS-SSDD-v1.0 contains 15 large-scale SAR images whose ground truths are correctly labeled by SAR experts with support from the Automatic Identification System (AIS) and Google Earth. To facilitate network training, the large-scale images are directly cut into 9000 sub-images without bells and whistles, which also makes it convenient to present the subsequent detection results in the large-scale SAR images. Notably, LS-SSDD-v1.0 has five advantages: (1) large-scale backgrounds, (2) small ship detection, (3) abundant pure backgrounds, (4) fully automatic detection flow, and (5) numerous and standardized research baselines. Last but not least, building on the abundant pure backgrounds, we also propose a Pure Background Hybrid Training mechanism (PBHT-mechanism) to suppress false alarms on land in large-scale SAR images. The experimental results of an ablation study verify the effectiveness of the PBHT-mechanism. LS-SSDD-v1.0 can inspire related scholars to conduct extensive research into SAR ship detection methods with engineering application value, which is conducive to the progress of SAR intelligent interpretation technology.

1. Introduction

Synthetic aperture radar (SAR) is an active microwave imaging sensor whose all-day and all-weather working capacity gives it an important place in marine exploration [1,2,3,4,5,6,7]. Since the United States launched the first SAR satellite, SAR has received much attention in marine remote sensing, e.g., geological exploration, topographic mapping, disaster forecast, traffic monitoring, etc. As a valuable ocean mission, SAR ship detection plays a critical role in shipwreck rescue, fishery and traffic management, etc., so it is becoming a research hotspot [1,2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70].

So far, many traditional SAR ship detection methods have been proposed, e.g., global threshold-based [36,37,38], constant false alarm rate (CFAR)-based [39,40,41], generalized likelihood ratio test (GLRT)-based [42,43,44], transformation domain-based [45,46,47], visual saliency-based [48,49,50], super-pixel segmentation-based [51,52,53], and auxiliary feature-based (e.g., ship-wake) [54,55,56] methods. All of them obtained modest results in specific backgrounds, but they extract ship features by hand-designed means, which leads to computational complexity, weak generalization, and laborious manual feature extraction [1,4]. Moreover, because ship wakes are not always present and their features are less obvious than those of ship targets, research on ship wake detection is not extensive [13].

With the rise of AI, deep learning [71] is providing much power for SAR ship detection. Based on our survey [8,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,61,62,70], deep learning has almost dominated the SAR ship detection community owing to its higher accuracy, faster speed, less human intervention, etc., so deep learning-based ship detection has increasingly become an important research direction. In the early stage, deep learning was applied in various parts of SAR ship detection, e.g., land masking [28], region of interest (ROI) extraction, and ship discrimination [28,72] (i.e., ship or background binary classification of a single chip). In the present stage, however, deep-learning-based SAR ship detection methods use an end-to-end mode: SAR images are sent into networks and detection results are output directly [13], without the support of auxiliary tools (e.g., traditional preprocessing tools, geo information, etc.) and without manual involvement. The sea–land segmentation step [13], which may need geo information, is therefore removed, which greatly improves detection efficiency. In addition, the coastline of the earth is constantly changing, so the use of fixed coastline data from geo information will inevitably result in some deviations [28]. Today, deep-learning-based SAR ship detectors simultaneously locate many ships in large SAR images, instead of only performing ship–background binary classification on small single chips, so the ship discrimination process is integrated into the end-to-end mode, further improving detection efficiency.

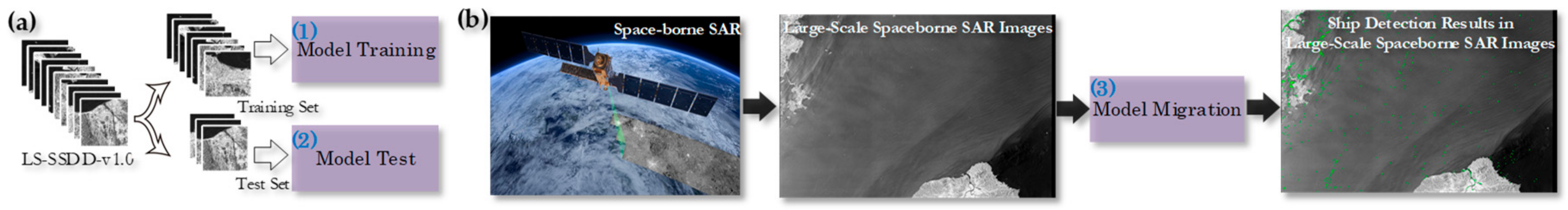

However, the lack of SAR ship learning data is the greatest weakness and is bound to limit the above advantages, because deep learning always needs much labeled data to enrich the learning experience [10]. Thus, some famous scholars, e.g., Li et al. [12], Wang et al. [10], Sun et al. [22] and Wei et al. [7], released their SAR ship detection datasets, i.e., SAR ship detection dataset (SSDD) [12], SAR-Ship-Dataset [10], AIR-SARShip-1.0 [22], and high-resolution SAR images dataset (HRSID) [7], but these datasets still have five defects: (1) they do not consider the practical migration application of ship detection in large-scene space-borne SAR images, (2) they lack pure backgrounds, (3) they do not consider small ships that are relatively hard to detect, (4) the detection process is insufficiently automated in practical migration applications, and (5) their research baselines are insufficient in number and non-standardized. Thus, there is still no reliable deep learning SAR ship detection dataset that can meet the practical migration application of ship detection in large-scene space-borne SAR images. Moreover, deep learning for SAR ship detection involves three basic processes (see Figure 1): (1) model training on the training set of open datasets to learn ship features (see Figure 1a), (2) model test on the test set (see Figure 1a), and (3) model practical applications or model migration capability verification on actual large-scene space-borne SAR images [1,2,3,4,6,7,10,14,15] (see Figure 1b). The last process is the most critical because one always needs to focus on the actual migration application capability, rather than on good detection performance on the test set alone. Thus, “practical engineering applications” refers to the actual migration applications, from the acquisition of raw large-scene space-borne SAR images to the final presentation of ship detection results in the raw space-borne SAR images, using deep learning, without any human involvement. Briefly, the existing datasets still hinder the smooth implementation of the last practical application process.

Figure 1.

Deep learning-based synthetic aperture radar (SAR) ship detection. (a) Model establishment; (b) model application. (1) Model training on the training set; (2) model test on the test set; (3) model migration.

Thus, we release LS-SSDD-v1.0 from Sentinel-1 [73] for small ship detection under large-scale backgrounds, which contains 15 large-scale images with 24,000 × 16,000 pixels (the first 10 images are selected as a training set, and the remaining are selected as a test set). To facilitate network training, the 15 large-scale images are directly cut into 9000 sub-images with 800 × 800 pixels without bells and whistles.

In contrast to the existing datasets, LS-SSDD-v1.0 has the following five advantages:

- (i) Large-scale background: Except for AIR-SARShip-1.0, the other datasets did not consider the large-scale practical engineering application of space-borne SAR. One possible reason is that small-size ship chips are beneficial to ship classification [7], but they contain less scattering information from land. Thus, models trained on ship chips will have trouble locating ships near highly reflective objects in large-scale backgrounds [7]. Although images in AIR-SARShip-1.0 have a relatively large size (3000 × 3000 pixels), their coverage area is only about 9 km wide, which is still not consistent with the large-scale and wide-swath characteristics of space-borne SAR, which often covers hundreds of kilometers, e.g., 240–400 km for Sentinel-1. In fact, ship detection in large-scale SAR images is closer to practical application in terms of global ship surveillance. Thus, we collect 15 large-scale SAR images with 250 km swath width to construct LS-SSDD-v1.0. See Section 4.1 for more details.

- (ii) Small ship detection: The existing datasets all consider multi-scale ship detection [13,17], so they contain ships of many different sizes. One possible reason is that multi-scale ship detection is an important research topic and one of the important factors in evaluating detectors’ performance. Using these datasets, many scholars have achieved great success in multi-scale SAR ship detection [13,17]. However, small ship detection is another important research topic that has received much attention from other scholars [19,24,66], because small target detection is still a challenging issue in the deep learning community. It should be noted that “small ship” in the deep learning community refers to a ship occupying few pixels in the whole image, according to the definition of the Common Objects in COntext (COCO) dataset [74], rather than to the actual physical size of the ship, which is also recognized by other scholars [19,24,66]. At present, there is still a lack of deep learning datasets for SAR small ship detection, so to fill this gap, we collect large-scene SAR images with small ships to construct LS-SSDD-v1.0. Most importantly, ships in large-scene SAR images are always small in terms of pixel proportion, so the small ship detection task is more in line with the practical engineering application of space-borne SAR. See Section 4.2 for more details.

- (iii) Abundant pure backgrounds: In the existing datasets, all image samples contain at least one ship, meaning that pure background image samples (i.e., images without ships) are discarded artificially. Such practice is controversial in application, because (1) human intervention destroys a real property of the raw SAR images (i.e., there are indeed many sub-samples without ships in large-scale space-borne SAR images); and (2) detection models may not be able to effectively learn features of pure backgrounds, causing more false alarms. Although models may learn some background features from the difference between ship ground truths and ship backgrounds in the same SAR image, false alarms on bright spots will still emerge in pure backgrounds, e.g., urban areas, agricultural regions, etc. Thus, we include all sub-images in LS-SSDD-v1.0, regardless of whether they contain ships or not. See Section 4.3 for more details.

- (iv) Fully automatic detection flow: In previous studies [1,2,3,4,6,10,34], many scholars trained detection models on the training set of open datasets and then tested the models on the test set. Finally, they verified the migration capability of the models on other wide-region SAR images. However, their process of verifying model migration capability lacks sufficient automation because of the domain mismatch between datasets and practical wide-region images. As a result, manual intervention is needed to select ship candidate regions from the raw large-scale images, which is troublesome and insufficiently intelligent. With LS-SSDD-v1.0, one can directly evaluate models’ migration capability because we keep the original status of the large-scale space-borne SAR images (i.e., the pure background samples without ships are not discarded manually and the ship candidate regions are not selected by humans). The final detection results can also be presented by simple sub-image stitching without complex coordinate transformation or other post-processing. LS-SSDD-v1.0 therefore enables a fully automatic detection flow without any human involvement, which is closer to the engineering applications of deep learning. See Section 4.4 for more details.

- (v) Numerous and standardized research baselines: The existing datasets all provide research baselines (e.g., Faster R-CNN [75], SSD [76], RetinaNet [77], Cascade R-CNN [78], etc.), but (1) the numbers of baselines they provide are all relatively small and cannot support adequate comparison by other scholars, i.e., two baselines for SSDD, six for SAR-Ship-Dataset, nine for AIR-SARShip-1.0, and eight for HRSID; and (2) their baselines are non-standardized because they are run under different deep learning frameworks, different training strategies, different image preprocessing means, different hyper-parameter configurations, different hardware environments, different programming languages, etc., bringing possible uncertainties in accuracy and speed. Although the research baselines of HRSID are standardized, they follow the COCO evaluation criteria [74], which are rarely used in the SAR ship detection community. Thus, we provide numerous and standardized research baselines with the PASCAL VOC evaluation criteria [79]: (1) 30 research baselines and (2) under the same detection framework, the same training strategies, the same image preprocessing means, almost the same hyper-parameter configuration, the same development environment, the same programming language, etc. See Section 4.5 for more details.
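The pixel-based notion of a "small ship" in advantage (ii) can be made concrete with the standard COCO size buckets, which classify an object by its pixel area: small below 32² pixels, medium between 32² and 96², and large above 96². The following is a minimal sketch under stated assumptions: the function name is hypothetical, the area is taken from the bounding box (COCO itself uses the segmentation mask area when one is available), and the size statistics of LS-SSDD-v1.0 in Section 4.2 may use a different normalization.

```python
def coco_size_category(xmin, ymin, xmax, ymax):
    """Classify a box as 'small', 'medium' or 'large' using COCO area thresholds."""
    # Box area in pixels; degenerate boxes are treated as zero area.
    area = max(0, xmax - xmin) * max(0, ymax - ymin)
    if area < 32 ** 2:
        return "small"
    if area < 96 ** 2:
        return "medium"
    return "large"


# A 20 x 10 pixel box (made-up coordinates) has an area of 200 pixels.
print(coco_size_category(100, 100, 120, 110))
```

Under this definition, a ship is "small" because of how few pixels it occupies in the image, independent of its physical length.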

Moreover, based on the abundant pure backgrounds, we also propose a PBHT-mechanism to suppress false alarms on land, and the experimental results verify its effectiveness. LS-SSDD-v1.0 is a dataset in accordance with the practical engineering application of space-borne SAR, and is helpful for the progress of SAR intelligent interpretation technology. LS-SSDD-v1.0 is also a challenging dataset because small ship detection in practical large-scale space-borne SAR images is a difficult task. LS-SSDD-v1.0 may seem small because it contains only 15 images. In fact, it is still a relatively big dataset because it contains 9000 sub-images. Although the number of images in the SAR-Ship-Dataset seems to be much bigger than ours (43,819 >> 9000), its image size is far smaller than ours (256 << 800 pixels) and its image samples also have a 50% overlap, causing (1) a decline in detection speed due to duplicate detection in overlapping regions and (2) inconvenience in the subsequent presentation of results in large-scale images. To be clear, some datasets, e.g., OpenSARShip [80], FUSAR [81], etc., were released for ship classification, e.g., cargo, tanker, etc., but this is not our focus, because this paper only addresses ship location without the follow-up classification.
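The comparison between image counts and image sizes can be checked with simple arithmetic. The sketch below counts total pixels from the figures quoted above (43,819 chips of 256 × 256 pixels versus 9000 sub-images of 800 × 800 pixels); the function name is hypothetical, and the count for the SAR-Ship-Dataset includes overlapping pixels, so its number of unique pixels is lower still.

```python
def total_pixels(num_images, side):
    """Total pixel count of num_images square images with the given side length."""
    return num_images * side * side


sar_ship_dataset = total_pixels(43_819, 256)  # includes the 50% overlap
ls_ssdd = total_pixels(9_000, 800)            # non-overlapping sub-images
ratio = ls_ssdd / sar_ship_dataset

print(sar_ship_dataset, ls_ssdd, round(ratio, 2))
```

By this measure, LS-SSDD-v1.0 holds roughly twice as many pixels as the SAR-Ship-Dataset despite having far fewer image files.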

In particular, LS-SSDD-v1.0 is significant because (1) it can encourage scholars to conduct extensive research into large-scale SAR ship detection methods with more engineering application value, (2) it can provide a data source for scholars who focus on the detection of small ships or densely clustered ships [19], and (3) it can motivate the emergence of higher-quality SAR ship datasets that are closer to engineering application and more in line with space-borne SAR characteristics in the future. LS-SSDD-v1.0 is available at https://github.com/TianwenZhang0825/LS-SSDD-v1.0-OPEN.

The main contributions of our work are as follows:

- To our knowledge, LS-SSDD-v1.0 is the first open large-scale ship detection dataset that considers migration applications of space-borne SAR, and also the first one for small ship detection;

- LS-SSDD-v1.0 is provided with five advantages that can solve five defects of existing datasets.

2. Related Work

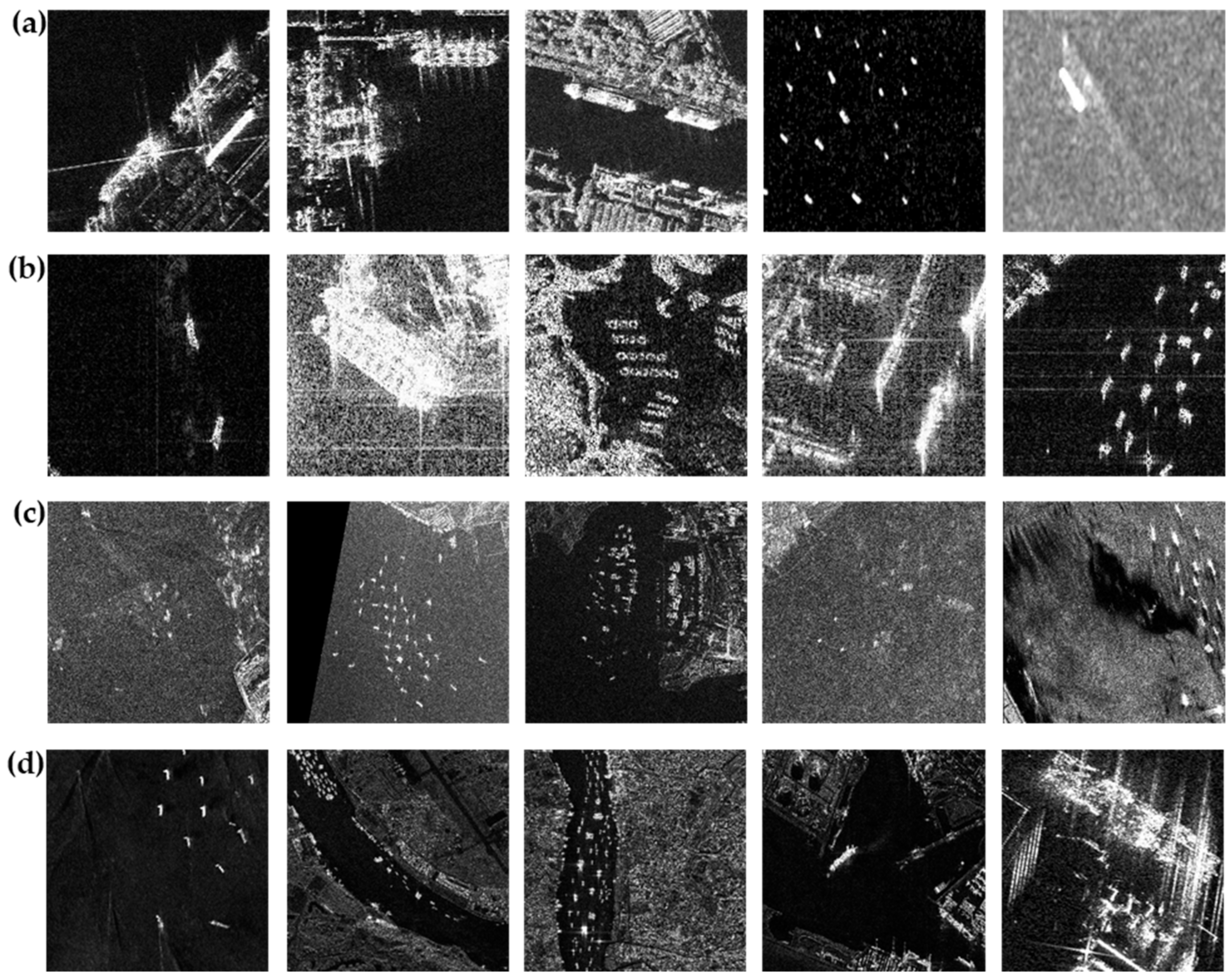

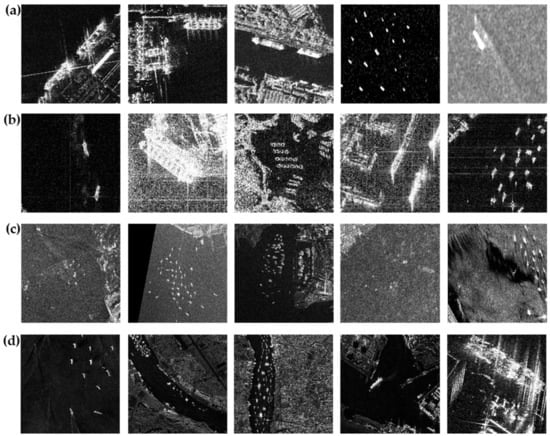

Table 1 shows the descriptions of the existing four datasets and our LS-SSDD-v1.0, as provided in their original papers [7,10,12,22]. Figure 2 shows some SAR image samples in them.

Table 1.

Descriptions of the four existing datasets provided in references [7,10,12,22] and our LS-SSDD-v1.0. Resolution refers to R.×A., where R. is range and A. is azimuth (note: SSDD, AIR-SARShip-1.0, and HRSID only provided a single optimal resolution, and SSDD only provided a rough resolution range from 1 m to 15 m). Image size refers to W.×H., where W. is width and H. is height. Moreover, SAR-Ship-Dataset and AIR-SARShip-1.0 only provide polarization in the form of single, dual, and full, instead of the specific vertical or horizontal mode. “--” means not provided in their original papers.

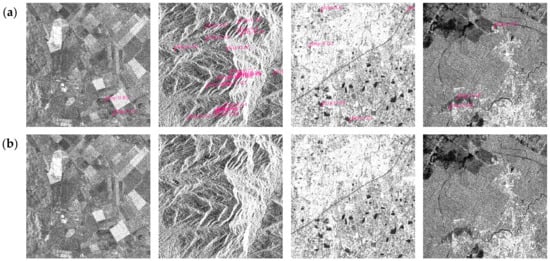

Figure 2.

Image samples. (a) SAR ship detection dataset (SSDD); (b) SAR-Ship-Dataset; (c) AIR-SARShip-1.0; (d) high-resolution SAR images dataset (HRSID).

2.1. SSDD

In 2017, Li et al. [12] released SSDD from RadarSat-2, TerraSAR-X and Sentinel-1 with mixed HH, HV, VV, and VH polarizations, consisting of 1160 SAR images with 500 × 500 pixels containing 2358 ships, with various resolutions from 1 m to 15 m. SSDD was established in a format similar to PASCAL VOC [79], with ground truths labeled using LabelImg [3]. Each image corresponds to one extensible markup language (XML) label file. Regions with > 3 pixels are regarded as ships without reference to AIS or Google Earth, so label errors may occur, reducing dataset authenticity. So far, scholars have proposed many SAR ship detection methods [1,2,3,4,5,6,8,13,14,15,16,17,18,19,20,21,29,32] on SSDD, but SSDD suffers from careless annotation, repeated scenes, an insufficient number of samples, etc., which hinders the further progress of research.

SSDD anticipated that deep learning would prevail, and this initial work laid an important foundation. Later, as deep learning object detectors were updated, scholars realized that more training data is needed to enhance the learning representation capability and further improve accuracy. As a result, the SAR-Ship-Dataset emerged.

2.2. SAR-Ship-Dataset

In March 2019, Wang et al. [30] released the SAR-Ship-Dataset from Gaofen-3 and Sentinel-1, consisting of 43,819 small ship chips of 256 × 256 pixels, containing 59,535 ships, with various resolutions (3 m, 5 m, 8 m, 10 m, 25 m, etc.) and with single, dual and full polarizations. The ground truths are labeled by SAR experts. They did not draw support from AIS or Google Earth, so the annotation process of their dataset relies heavily on expert experience, probably reducing dataset authenticity. Moreover, the SAR-Ship-Dataset discarded the samples without ships, but such practice (1) destroys a property of large-scale space-borne SAR images, i.e., there are indeed many samples without ships, and (2) may prevent models from effectively learning features of pure backgrounds. Although detectors can learn background features from the difference between ground truths and backgrounds in the same image, false alarms on bright spots will still emerge in pure backgrounds.

The SAR-Ship-Dataset’s large number of images allows models to learn rich ship features. So far, scholars have reported research results on it, e.g., Cui et al. [14], Gao et al. [26], etc., but its image size is too small to satisfy the practical migration requirements, so AIR-SARShip-1.0 emerged.

2.3. AIR-SARShip-1.0

In December 2019, Sun et al. [22] released AIR-SARShip-1.0 from Gaofen-3 with 1-m and 3-m resolution and single polarization, consisting of 31 images with 3000 × 3000 pixels in harbors, islands, reefs, and the sea surface under different conditions. They also labeled the ship ground truths based on expert experience, not drawing support from AIS or Google Earth. AIR-SARShip-1.0 provides the labels of the raw SAR images with 3000 × 3000 pixels, instead of those of their training sub-images. Although they cut the raw SAR images into 500 × 500 pixel sub-images for network training, the final detection results are shown in the raw 3000 × 3000 pixel images, reflecting an end-to-end mode of the practical migration applications. That is, no matter what kind of image division mechanism is used (e.g., direct cutting, sliding window, visual saliency, etc.), the final ship detection results should be provided on the raw images, instead of on small sub-images. However, their 3000 × 3000 pixel image size (only 9 km of swath width) still does not fully reflect the wide-swath characteristic of SAR images. Moreover, the total quantity of images for network training is relatively small (1116 sub-images), inevitably bringing about inadequate learning, and even leading to over-fitting, which reduces the models’ generalization capability. Although they used data augmentation, e.g., dense rotation, image flipping, contrast enhancement, random scaling, etc., to alleviate this problem, data augmentation can only provide limited ship features from limited data, so it is not necessarily a better choice than expanding the size of the dataset. In addition, according to our observation, SAR images in AIR-SARShip-1.0 seem to be of relatively low quality compared with SSDD and SAR-Ship-Dataset, e.g., severe speckle noise, intense image defocus, etc., possibly resulting from modest imaging algorithms applied to the raw SAR data, which probably degrades the feature extraction of real ships.

AIR-SARShip-1.0 inspired our work (i.e., designing a dataset in line with the actual migration application). So far, Fu et al. [35] and Gao et al. [27] have reported their research results based on AIR-SARShip-1.0. However, the SAR image quality of AIR-SARShip-1.0 is modest, possibly hindering other scholars’ further studies. As a result, HRSID emerged.

2.4. HRSID

In July 2020, Wei et al. [7] released HRSID from Sentinel-1 and TerraSAR-X, for ship instance segmentation including ship detection. HRSID contains 5604 SAR images with 0.5 m, 1 m, and 3 m resolutions; 800 × 800 pixels; and HH, HV, and VV polarization, containing 16,951 ships. It is worth noting that they labeled the ship ground truths in SAR images with the help of Google Earth, which is an improvement over SSDD, SAR-Ship-Dataset, and AIR-SARShip-1.0, but AIS is still not considered in their work. Moreover, HRSID also discarded the pure background samples by manual intervention, which leads to many false alarms on bright spots in some pure background SAR images, e.g., urban areas, agricultural regions, etc.

HRSID reminds us that small ship chips are beneficial to ship classification [7] because they contain less scattering from land, but that models trained on ship chips may consequently have trouble locating ships near highly reflective objects. This inspired us to consider large-scale SAR ship detection.

3. Establishment Process

3.1. Step 1: Raw Data Acquisition

We download the 15 raw Sentinel-1 SAR data from the Copernicus Open Access Hub [82], covering busy ports, straits, river areas, etc. Table 2 shows the detailed information of the 15 raw Sentinel-1 SAR data. As shown in Table 2, LS-SSDD-v1.0 is composed of Sentinel-1 images in the interferometric wide swath (IW) mode, which is the main Sentinel-1 mode for acquiring data in areas of maritime surveillance (MS) interest [9]. We choose ground range multi-look detected (GRD) data, intended for most intensity-based applications, instead of single-look complex (SLC) data, intended for interferometric applications [83]. The default polarimetric combination for Sentinel-1 images acquired over areas of maritime interest is VV-VH [83]. For incidence angles in the range of 30° to 45°, which are characteristic of Sentinel-1 IW, vessels generally exhibit higher backscattering values in the co-polarization channel (VV), while in the cross-polarization channel (VH), the backscattering values are lower [9,83]. In addition, we note that there is a special type of instrumental artifact in Sentinel-1 VH polarization images [84], probably caused by radio frequency interference (RFI) [84]. The intensity of RFI can be as strong as that of ships, making the two difficult to distinguish using methods based on intensity information. Given the above, we choose VV co-polarization to perform the actual ship annotation. Nevertheless, for cross-polarization, the ship RCS is smaller, but the clutter is below the noise floor, i.e., the cross-polarization case is noise limited [83]. There are significant benefits to using cross-polarization, especially for acquisitions at smaller incidence angles [83]. Furthermore, cross-polarization provides more uniform ship detection performance across the image swath since the noise floor is somewhat independent of incidence angle [83]. Therefore, we also provide the 15 raw large-scale SAR images with VH polarization in the LS-SSDD-v1.0 source documents for possible further research in the future; they share the same ship ground truth labels as the VV polarization images.

Table 2.

Detailed descriptions of Large-Scale SAR Ship Detection Dataset-v1.0 (LS-SSDD-v1.0).

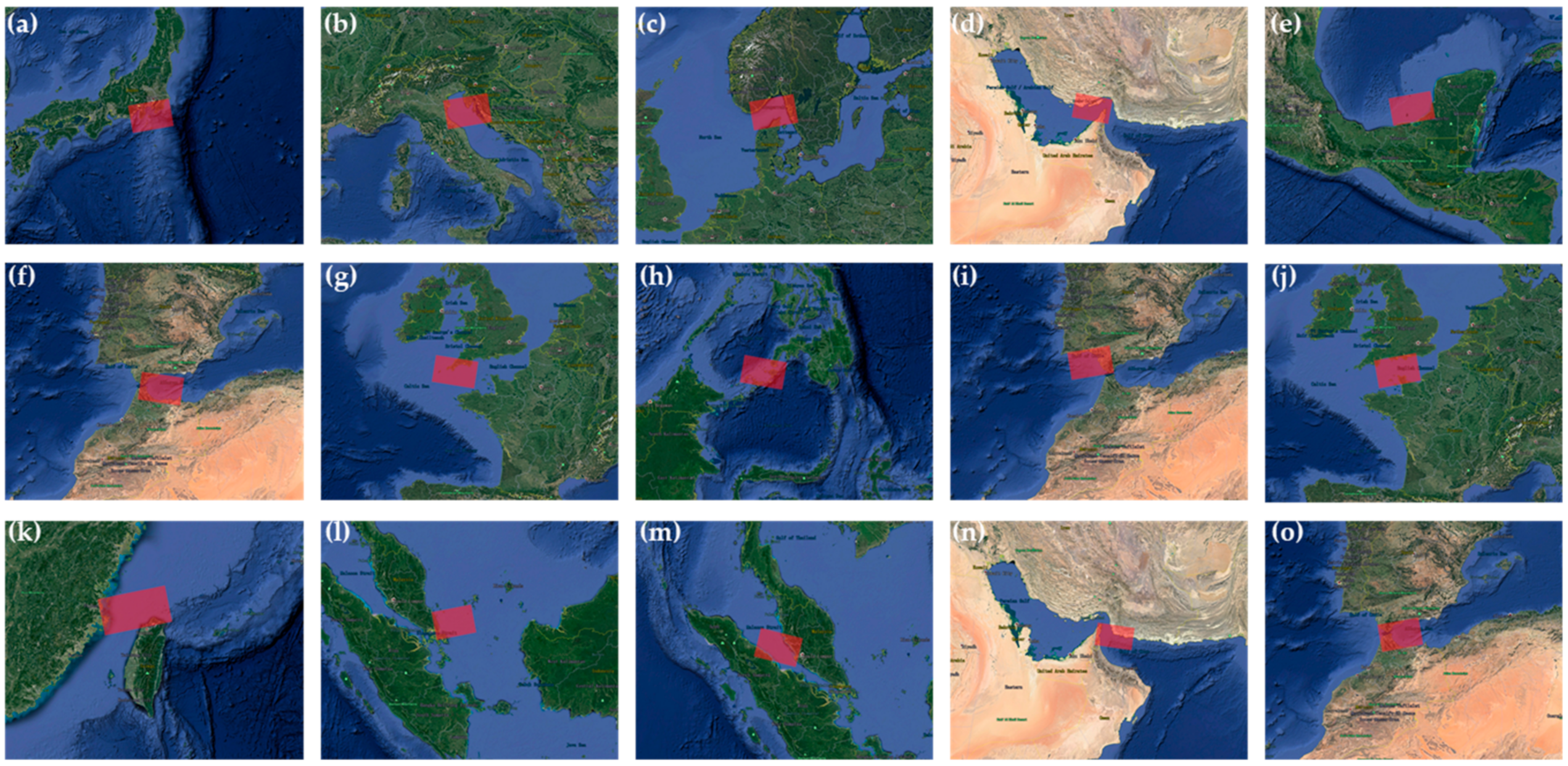

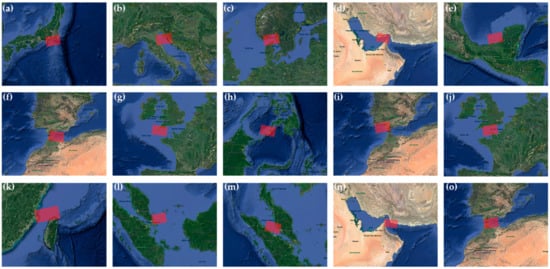

Figure 3 shows their coverage areas. As shown in Figure 3, the 15 raw Sentinel-1 SAR data have large-scale and wide-swath characteristics (250 km > 9 km of AIR-SARShip-1.0 > 4 km of HRSID > 0.4 km of SAR-Ship-Dataset), covering a large area of sea, straits, ports, shipping channels, etc. Moreover, their image sizes are all relatively large compared to other datasets, about 26,000 × 16,000 pixels on average.

Figure 3.

Coverage areas. (a) Tokyo Port; (b) Adriatic Sea; (c) Skagerrak; (d) Qushm Islands; (e) Campeche; (f) Alboran Sea; (g) Plymouth; (h) Basilan Islands; (i) Gulf of Cadiz; (j) English Channel; (k) Taiwan Strait; (l) Singapore Strait; (m) Malacca Strait; (n) Gulf of Cadiz; (o) Gibraltarian Strait.

We used the Sentinel-1 toolbox [85] for imaging, obtaining 15 tag image file format (TIFF) files with 16-bit physical brightness or grey levels, where all images are processed by geometrical rectification, radiometric calibration and de-speckling. Moreover, as demonstrated in the scientific literature [83], the minimum detectable ship length for the groups of single beam modes is about 25–34 m for 30° to 45° incidence angle [80,83] (~1.46 dB intensity contrast [83] similar to ice), and it decreases with increasing incidence angle, which illustrates the importance of incidence angle in reducing the background ocean clutter level. More details of Sentinel-1 can be found in Torres et al. [83].

Finally, the European Space Agency (ESA) provided the specific shooting time of Sentinel-1 satellite in their annotation files, and moreover, with the help of the Sentinel-1 toolbox, we can also obtain the latitude and longitude information of the surveyed areas. Thus, the above exact time and accurate geographical location will be convenient for the follow-up AIS consultation and Google Earth correction. Thus far, the raw 15 large-scale SAR images with the TIFF file format have been obtained.

3.2. Step 2: Image Format Conversion

To keep the same image format as PASCAL VOC [79], we convert the 15 raw TIFF files into Joint Photographic Experts Group (JPG) files, using the Geospatial Data Abstraction Library (GDAL) [86], a translator library for raster and vector geospatial data formats, to accomplish the format conversion. Thus far, the raw 15 large-scale SAR images with the .jpg file format have been obtained.
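JPG files normally store 8 bits per sample, so converting 16-bit TIFF data implies some mapping of the value range. The text does not state which mapping was used. The sketch below shows one common choice, a linear stretch with clipping, purely as an assumption; the function name and the `lo`/`hi` limits are hypothetical and the sample values are made up.

```python
def stretch_to_uint8(values, lo, hi):
    """Linearly map values from [lo, hi] to [0, 255], clipping anything outside."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    scale = 255.0 / (hi - lo)
    out = []
    for v in values:
        clipped = min(max(v, lo), hi)  # clip to the chosen input range
        out.append(int(round((clipped - lo) * scale)))
    return out


# One made-up row of 16-bit grey levels; values above hi saturate at 255.
row = [0, 102, 510, 65535]
print(stretch_to_uint8(row, lo=0, hi=510))
```

With GDAL, a conversion of this kind can be requested through `gdal_translate` with the `-of JPEG -ot Byte -scale` options; whether the dataset was produced with these options is not stated.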

3.3. Step 3: Image Resizing

As shown in Table 2 in Section 3.1, the raw 15 large-scale SAR images have different sizes, about 26,000 × 16,000 pixels on average. To generate more uniform training samples and to facilitate the image cutting in Section 3.4, we resize these images to a uniform size of 24,000 × 16,000 pixels by resampling, where 24,000 and 16,000 are both divisible by 800, which is a moderate size for most deep learning detection models. This practice is similar to that of HRSID [7]. Thus far, the raw 15 large-scale SAR images with 24,000 × 16,000 pixels have been obtained.
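The choice of 24,000 × 16,000 pixels can be checked directly: both sides are multiples of 800, which gives a grid of 30 columns by 20 rows. The sketch below verifies this and computes the per-axis resampling factors for one raw image. The function names are hypothetical, and the raw size used is an illustrative value near the stated average, not an entry from Table 2.

```python
TILE = 800
TARGET_W, TARGET_H = 24_000, 16_000


def grid_shape(width, height, tile=TILE):
    """Return (columns, rows) of the tile grid, requiring exact divisibility."""
    if width % tile or height % tile:
        raise ValueError("image size must be a multiple of the tile size")
    return width // tile, height // tile


def resample_factors(raw_w, raw_h, target_w=TARGET_W, target_h=TARGET_H):
    """Scale factors applied to each axis when resizing to the target size."""
    return target_w / raw_w, target_h / raw_h


cols, rows = grid_shape(TARGET_W, TARGET_H)
print(cols, rows, cols * rows)            # sub-images per large-scale image
print(resample_factors(26_000, 16_500))   # hypothetical raw size
```

A raw size such as 26,000 × 16,000 would fail the divisibility check (26,000 is not a multiple of 800), which is why resizing precedes cutting.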

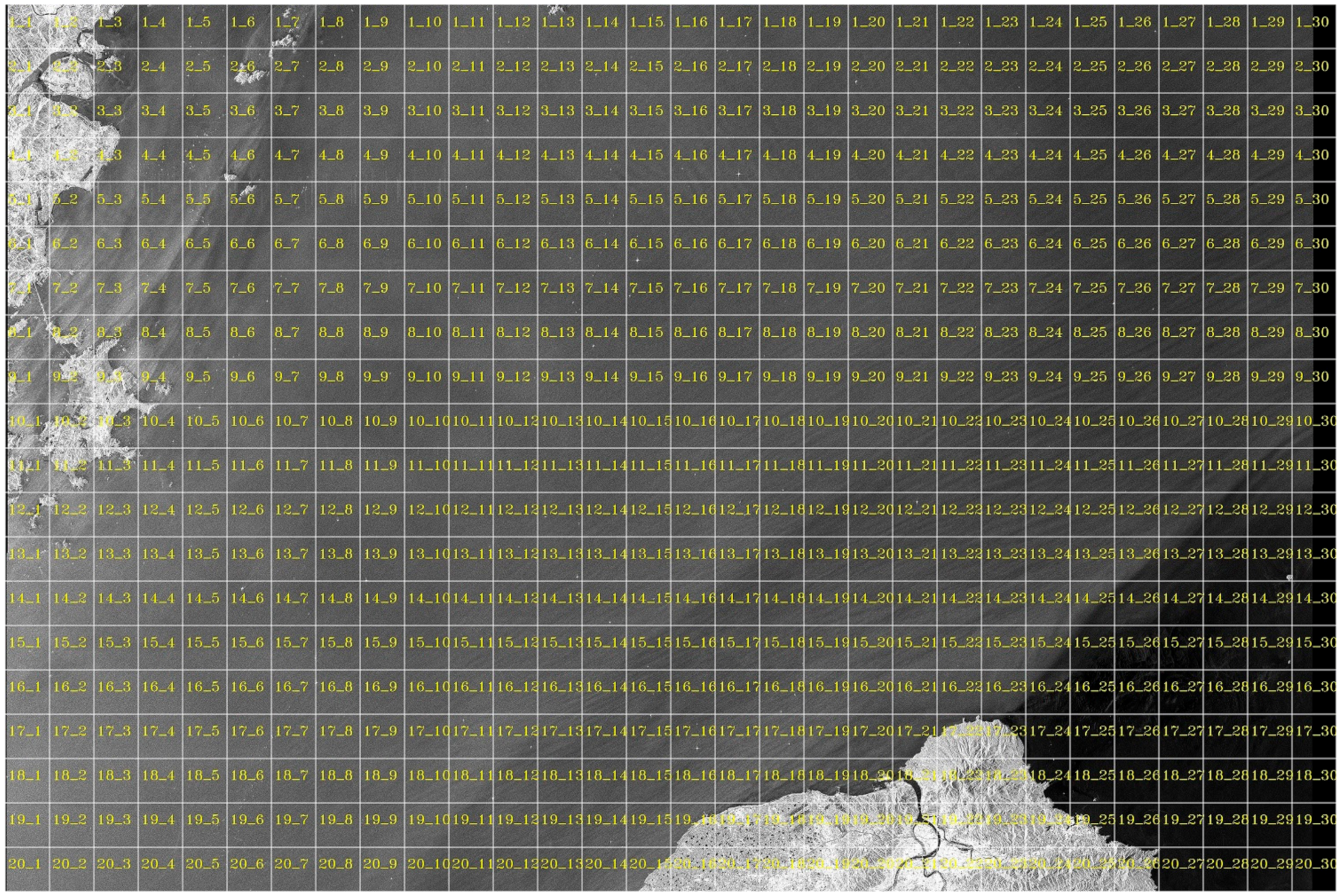

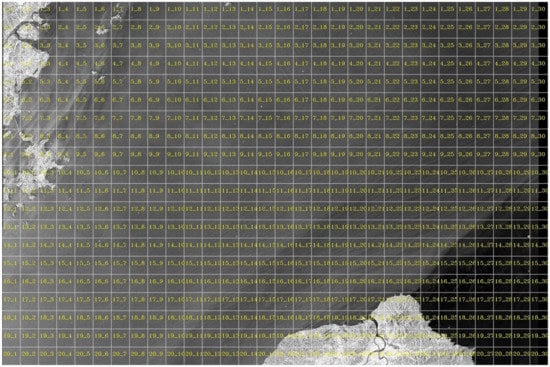

3.4. Step 4: Image Cutting

Figure 4 shows the process of image cutting. To facilitate network training, the 15 large-scale SAR images with 24,000 × 16,000 pixels are directly cut into 9000 sub-images with 800 × 800 pixels without bells and whistles (marked by white lines). As a result, 600 sub-images are obtained from each large-scale image. In Figure 4, the 600 sub-images from each large-scale image are numbered as N_R_C.jpg (marked by yellow numbers), where N denotes the serial number of the large-scale image, R denotes the row of the sub-image, and C denotes the column. For instance, as shown in Figure 4 and taking 11.jpg as an example, the size of the original large-scale SAR image is 24,000 × 16,000 pixels, and after cutting, 600 sub-images with 800 × 800 pixels are generated that are numbered from 1_1.jpg to 1_30.jpg, from 2_1.jpg to 2_30.jpg, …, and from 20_1.jpg to 20_30.jpg, which corresponds to a division into 20 rows and 30 columns.
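The cutting and naming scheme can be sketched as follows. The code enumerates the crop boxes of one large-scale image in row-major order and builds names in the N_R_C.jpg pattern with 1-based row and column indices. The function name, the (left, upper, right, lower) box convention, and passing N as a string are assumptions for illustration; no image library is used, so the actual cropping call is left out.

```python
TILE = 800


def tile_boxes(image_id, width=24_000, height=16_000, tile=TILE):
    """Yield (name, (left, upper, right, lower)) for every non-overlapping tile."""
    rows, cols = height // tile, width // tile
    for r in range(rows):
        for c in range(cols):
            box = (c * tile, r * tile, (c + 1) * tile, (r + 1) * tile)
            # Rows and columns are numbered from 1 in the file name.
            yield f"{image_id}_{r + 1}_{c + 1}.jpg", box


tiles = list(tile_boxes("11"))
print(len(tiles))   # sub-images from one large-scale image
print(tiles[0])     # first row, first column
print(tiles[-1])    # last row, last column
```

Because the tiles do not overlap and cover the image exactly, every pixel of the large-scale image belongs to exactly one sub-image.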

Figure 4.

Image cutting process, taking 11.jpg as an example.

Finally, a total of 9000 sub-images are generated for network training and test in the LS-SSDD-v1.0 dataset. In LS-SSDD-v1.0, we select the first 10 large-scale images as the training set and the remaining five images as the test set. In other words, the first 6000 sub-images coming from 01.jpg to 10.jpg form the training set, and the remaining 3000 sub-images coming from 11.jpg to 15.jpg form the test set. Different from the sliding window mechanism used in SAR-Ship-Dataset, we adopt a direct and simple cutting mechanism, similar to that of AIR-SARShip-1.0, to produce sub-images, for two reasons. (1) Direct cutting is more efficient and concise, and it avoids repeated detection of the same ship in multiple sub-images (e.g., in the work of Wang et al. [30], the sliding window is shifted by 128 pixels over both columns and lines, leading to a 50% overlap of adjacent ship chips). As a result, the total detection speed can be improved. (2) Our practice is also convenient for the subsequent presentation of large-scene SAR ship detection results, i.e., only a simple image stitching operation is needed, without more complicated post-processing to transform ship coordinates from small sub-images to large-scale images.
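The effect of overlap, the train/test split, and the stitching position of a sub-image can be illustrated with a short sketch. All function names are hypothetical. The overlap comparison applies a 50% overlap to 800-pixel windows on a 24,000 × 16,000 image as a hypothetical illustration (the SAR-Ship-Dataset itself uses 256-pixel chips with a 128-pixel shift), and the paste offset assumes the N_R_C.jpg naming with 1-based indices.

```python
def window_count(length, window, stride):
    """Number of window positions along one axis (no padding)."""
    return (length - window) // stride + 1


def is_training(image_id):
    """Images 01-10 form the training set, 11-15 the test set."""
    return int(image_id) <= 10


def paste_offset(name, tile=800):
    """Top-left pixel (x, y) of a sub-image inside its large-scale image."""
    _, r, c = name.rsplit(".", 1)[0].split("_")
    return (int(c) - 1) * tile, (int(r) - 1) * tile


W, H, T = 24_000, 16_000, 800
direct = window_count(W, T, T) * window_count(H, T, T)
half_overlap = window_count(W, T, T // 2) * window_count(H, T, T // 2)

print(direct, half_overlap)                  # windows per large-scale image
print(is_training("07"), is_training("11"))
print(paste_offset("11_20_30.jpg"))
```

In this illustration, a 50% overlap would require nearly four times as many detector passes per large-scale image as direct cutting.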

Regardless of whether the sub-images contain ships or not, we add all of them to our LS-SSDD-v1.0 dataset for network training and test, because (1) this practice preserves a property of the raw SAR images, as there are indeed many samples without ships in the practical migration applications of large-scale space-borne SAR images, and (2) detection models can effectively learn features of many pure backgrounds to suppress some false alarms, e.g., bright spots in urban areas, agricultural regions, mountain areas, etc., according to our findings in Section 7. Thus far, these 9000 sub-images have been obtained.

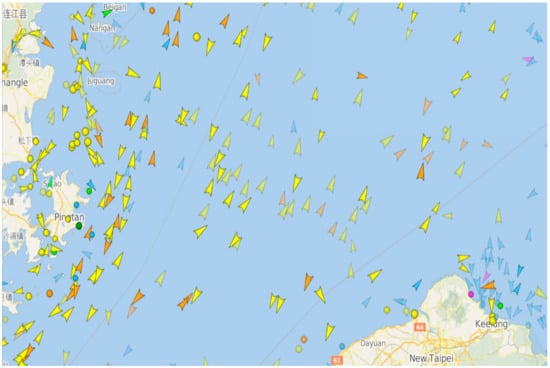

3.5. Step 5: AIS Support

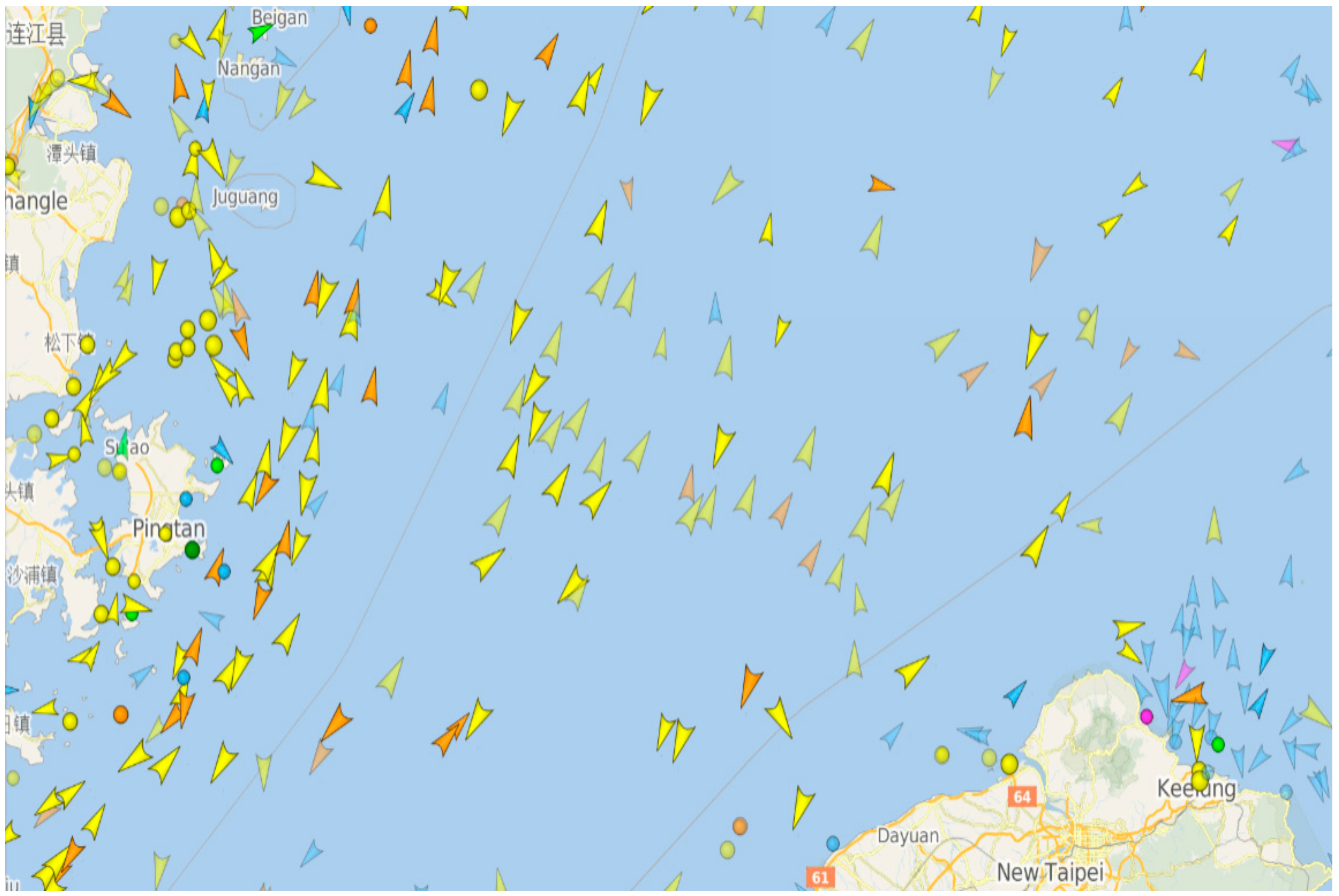

Before labeling the ship ground truths, SAR experts first draw support from AIS to roughly determine the possible positions of ships in the large-scale SAR images, according to the exact time and the accurate geographical location of the raw SAR data introduced in Section 3.1. Figure 5 shows the AIS messages. As shown in Figure 5, both inshore AIS systems and satellite AIS systems are employed to obtain more comprehensive ship information.

Figure 5.

Automatic Identification System (AIS) messages in Taiwan Strait taking the 11.jpg as an example. Marks of different shapes represent different types of ships, and the depth of colors represents the time delay.

In addition, it should be noted that AIS automatically broadcasts information from the vessel to an AIS ship receiver or an inshore receiver by very high frequency (VHF), but such receivers can only work in a limited geographical space [87] (about 200 nautical miles) owing to the limited operating distance of their base stations (BS). In other words, some ships far away from land cannot be found by inshore VHF receivers; moreover, although the satellite AIS systems can find these ships, there is still a long time delay due to the periodic motion of the satellite around the Earth. Therefore, it is difficult to fully match the SAR images with very accurate AIS information at the corresponding time and locations, but we have tried our best to narrow this gap. Moreover, it is not fully feasible to mark ships using AIS information alone, because many small ships of <300 tons rarely have AIS installed, according to our investigation [88]. Therefore, we also draw support from Google Earth for our annotation correction, which will be introduced in Section 3.7. Thus far, SAR experts have obtained some prior information from AIS.

3.6. Step 6: Expert Annotation

The ship ground truths of the 9000 sub-images are annotated by SAR experts using LabelImg. In the expert annotation process, AIS and Google Earth both provide suggestions for some controversial areas, e.g., some islands, port facilities similar to ships, etc. In most cases, prominent marine SAR reflectors can be distinguished easily from real ships by their different shape features. If some prominent marine SAR reflectors are so similar to ships that we cannot discriminate them based on significant geometric features, we draw support from Google Earth to determine their real conditions. For example, if visual observation of the Google Earth optical images shows a prominent marine SAR reflector, we remove it from the annotations of the SAR images.

During the annotation process, if an obvious V-shaped wake or scattering point defocusing is present, we regard the strong scattering points as moving ships; the others are regarded as stationary or moored ships. In other words, as long as the speed of a ship is not large enough to produce either of these two phenomena, we consider it stationary. Although this practice is rough, it is in line with the actual situation to a certain extent, because in such wide-region space-borne Sentinel-1 SAR images, the speed of ships is far lower than that of the fast-moving satellite, so in most cases they can be regarded as stationary targets, which is clearly different from air-borne SAR according to our experience. For ships with obvious wakes or scattering point defocusing, we do not include the wake pixels or defocusing pixels in the ship ground truth rectangular box. In other words, similar to the other existing datasets, we only use the geometrical properties of ships or the areas around them as the salient features of ships, based on a visual enhancement mechanism. Moreover, for ships with different headings or courses, we use the maximum and minimum pixel coordinates of the ship hull to draw the ship ground truth rectangular box. In other words, similar to the other datasets, we only use a rectangular box to locate the center point of the ship, without estimating the direction with rotatable boxes. Therefore, a ship ground truth box may have ships in different headings or courses, which means that as long as ships with different courses have similar center coordinates, they are measured by the same rectangular box.
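The rule of drawing the box from the maximum and minimum hull pixel coordinates can be written as a short sketch. The function name `hull_to_box` and the sample hull pixels are hypothetical; in the dataset itself the boxes are drawn by experts in LabelImg rather than computed.

```python
from typing import Iterable, Tuple


def hull_to_box(hull_pixels: Iterable[Tuple[int, int]]) -> Tuple[int, int, int, int]:
    """Return (xmin, ymin, xmax, ymax) enclosing the given (x, y) hull pixels."""
    xs, ys = zip(*hull_pixels)
    return min(xs), min(ys), max(xs), max(ys)


# Hypothetical hull pixels of a ship heading diagonally across the image;
# wake and defocusing pixels are assumed to be excluded beforehand.
hull = [(412, 230), (418, 236), (425, 243), (431, 249), (420, 233)]
print(hull_to_box(hull))  # (412, 230, 431, 249)
```

Because only the extreme coordinates matter, the resulting axis-aligned box is independent of the ship heading.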

Moreover, in SAR images, ships and icebergs typically have a stronger backscatter response than the surrounding open water, so icebergs, which occur in a large variety of sizes and shapes, pose an additional challenge to our ship annotation process. First, according to the geographical locations and shooting time (which determines the season of the area) of the original SAR images, and drawing support from the World Glacier Inventory (WGI) [89], we determine whether the covered area is an open sea area where icebergs rarely exist or a complex sea-ice area where icebergs often exist. For the former, we do not consider the effects of icebergs, which hardly exist there. For the latter, we use intensity and shape features to discriminate ships from icebergs or ice floes, inspired by Bentes et al. [90], because they generally differ clearly in their dominant scattering mechanism [90].

According to the shooting time and geographical positions of SAR images, one can also consult the satellite images of the corresponding weather conditions, including precipitation, on the website of the World Weather Information Service of the World Meteorological Organization (WMO) [91]. In addition, we do not pay much attention to the different water surface phenomena that come from different weather conditions, because the gray level change of image backgrounds, possibly caused by different sea clutter distributions, is still not obvious in contrast to high-intensity ships. Certainly, if there is extreme weather in a sea area (e.g., a typhoon), we pay more attention to inshore ships, which are less likely to go to sea in such conditions and therefore often park densely side by side at ports.

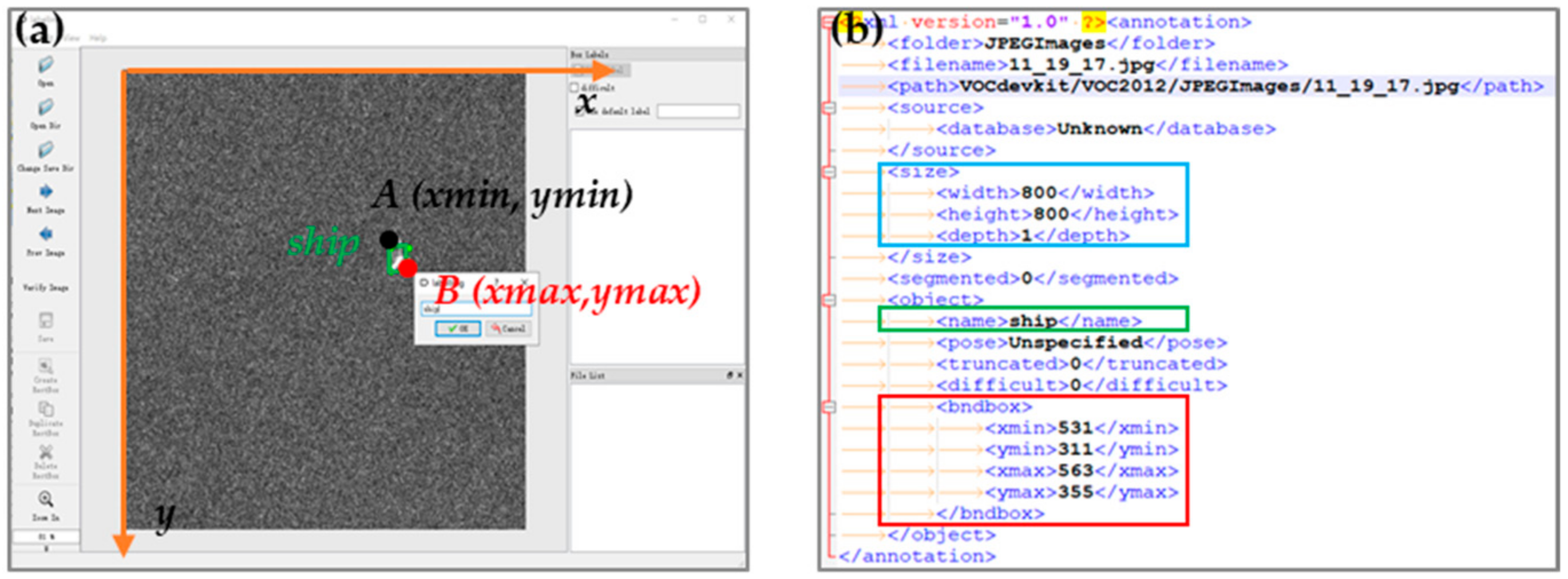

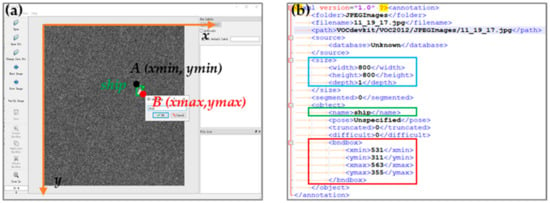

Figure 6 shows the expert annotation process. In Figure 6a, the image axes x and y are marked in orange. From Figure 6a, in LS-SSDD-v1.0, we employ a rectangular box to represent a ship (marked in green), where its top-left vertex A (xmin, ymin) (marked in black) and bottom-right vertex B (xmax, ymax) (marked in red) are used to locate the real ship. After the rectangular box has been drawn, the LabelImg annotation tool pops up a dialog box to prompt for the category information (i.e., ship in LS-SSDD-v1.0). After the category information is input successfully, the xml label file, as shown in Figure 6b, is generated automatically. In Figure 6b, the blue rectangle mark refers to the image information, including the width, height, and depth; the green rectangle mark refers to the category information (i.e., ship in LS-SSDD-v1.0); and the red rectangle mark refers to the ship ground truth box, which is denoted by xmin, ymin, xmax, and ymax.

Figure 6.

Expert annotation. (a) LabelImg annotation tool; (b) xml label file.
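As a minimal sketch of how such a label file could be read, the following example parses the image size, the category, and the box coordinates with the Python standard library. The sample xml content, including the file name and all numeric values, is made up for illustration and only assumes the usual PASCAL VOC layout written by LabelImg.

```python
import xml.etree.ElementTree as ET

# Hypothetical label content in the PASCAL VOC layout; all values are made up.
SAMPLE_XML = """
<annotation>
  <filename>11_1_1.jpg</filename>
  <size><width>800</width><height>800</height><depth>3</depth></size>
  <object>
    <name>ship</name>
    <bndbox><xmin>120</xmin><ymin>340</ymin><xmax>141</xmax><ymax>358</ymax></bndbox>
  </object>
</annotation>
"""


def read_boxes(xml_text):
    """Return ((width, height, depth), [(category, (xmin, ymin, xmax, ymax)), ...])."""
    root = ET.fromstring(xml_text.strip())
    size = root.find("size")
    image_shape = tuple(int(size.find(tag).text) for tag in ("width", "height", "depth"))
    boxes = []
    for obj in root.iter("object"):
        bnd = obj.find("bndbox")
        box = tuple(int(bnd.find(tag).text) for tag in ("xmin", "ymin", "xmax", "ymax"))
        boxes.append((obj.find("name").text, box))
    return image_shape, boxes


shape, boxes = read_boxes(SAMPLE_XML)
print(shape)  # image width, height, depth
print(boxes)  # one (category, box) pair per annotated ship
```

A label file without any `object` element yields an empty list, which corresponds to a pure background sub-image.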

Unlike HRSID, which employs polygons to describe ships because it also addresses the ship segmentation task, we use rectangular boxes, because in the deep learning community the ship detection task is generally described by rectangular boxes. Finally, to ensure the correctness of ship labels as much as possible, we also invite more experts to provide technical guidance. Thus far, 9000 label files in the xml format have been obtained.

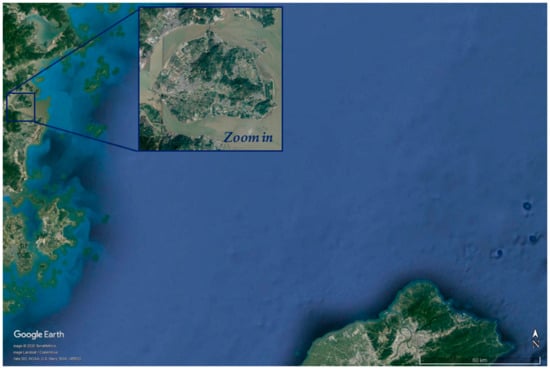

3.7. Step 7: Google Earth Correction

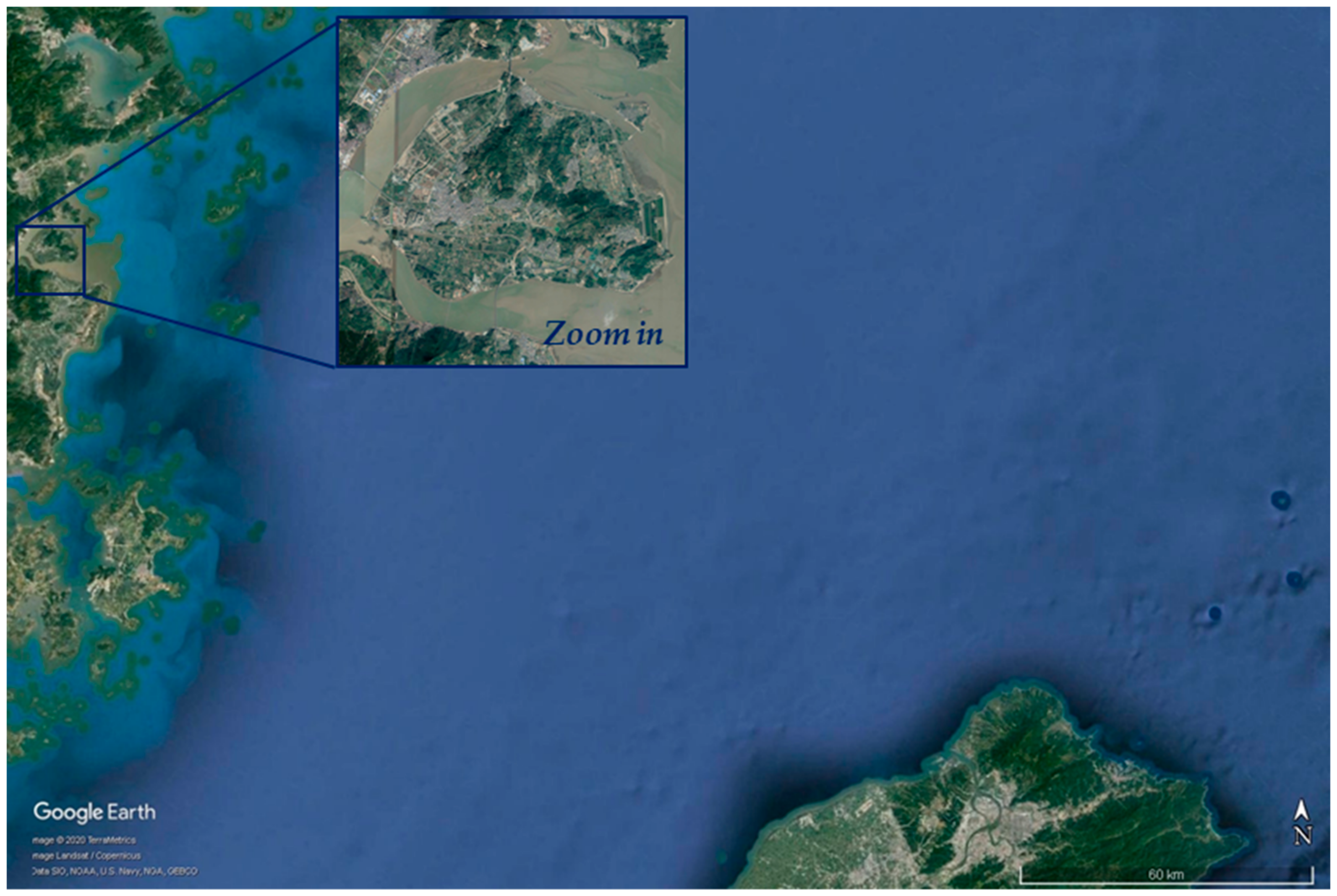

To ensure the authenticity of the dataset as much as possible, we also utilize Google Earth for more careful inspection. In Google Earth, we choose the closest time to approximately match the shooting time of SAR images. Figure 7 shows a Google Earth optical image of 11.jpg SAR image in the same coverage areas. In our correction process, appropriate enlargement of images, i.e., zoom in (marked in blue box in Figure 7) is needed to obtain more detailed geomorphological information and ocean conditions. Moreover, we find that some islands and reefs are labeled as ships by mistake, so we performed their correction, which shows Google Earth correction plays an important role.

Figure 7.

A Google Earth image corresponding to the coverage areas of 11.jpg in Taiwan Strait. In the Google Earth software, more image details can be obtained by the operation of zooming in.

It needs to be clarified that Google Earth is merely used for annotation correction, and in fact, it cannot provide fully accurate ship information because there is still a time gap between its optical images shooting time and SAR images. Moreover, such time gap is objective and cannot be overcome unless Google Corporation updates maps in time. Yet, LS-SSDD-v1.0 is still superior to other datasets because they rarely considered Google Earth correction. Thus, finally, we draw support from Google Earth to identify islands or docks whose locations may not change with time based on visual observation, so as to (1) check some areas that are easy to label incorrectly and (2) correct wrong labels.

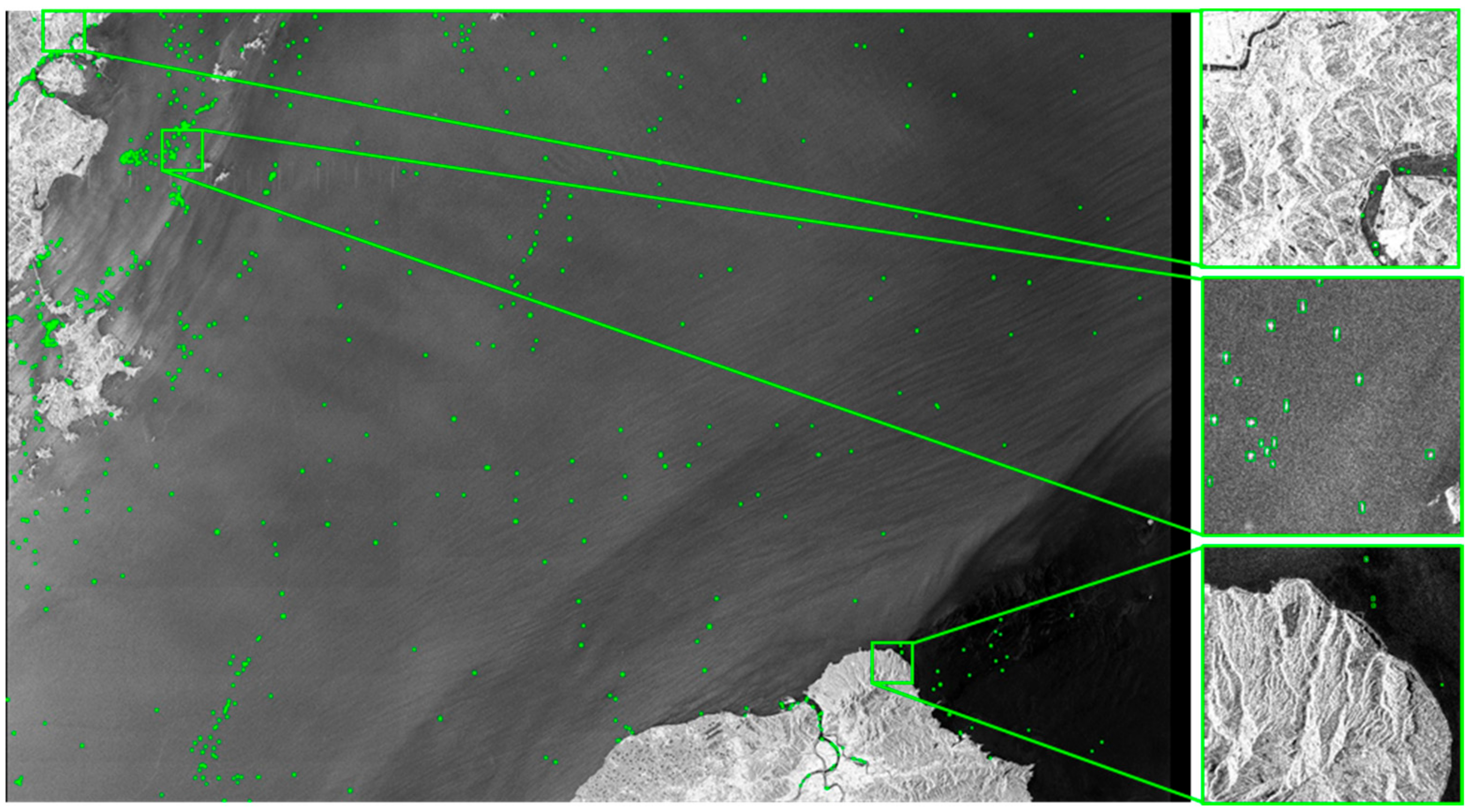

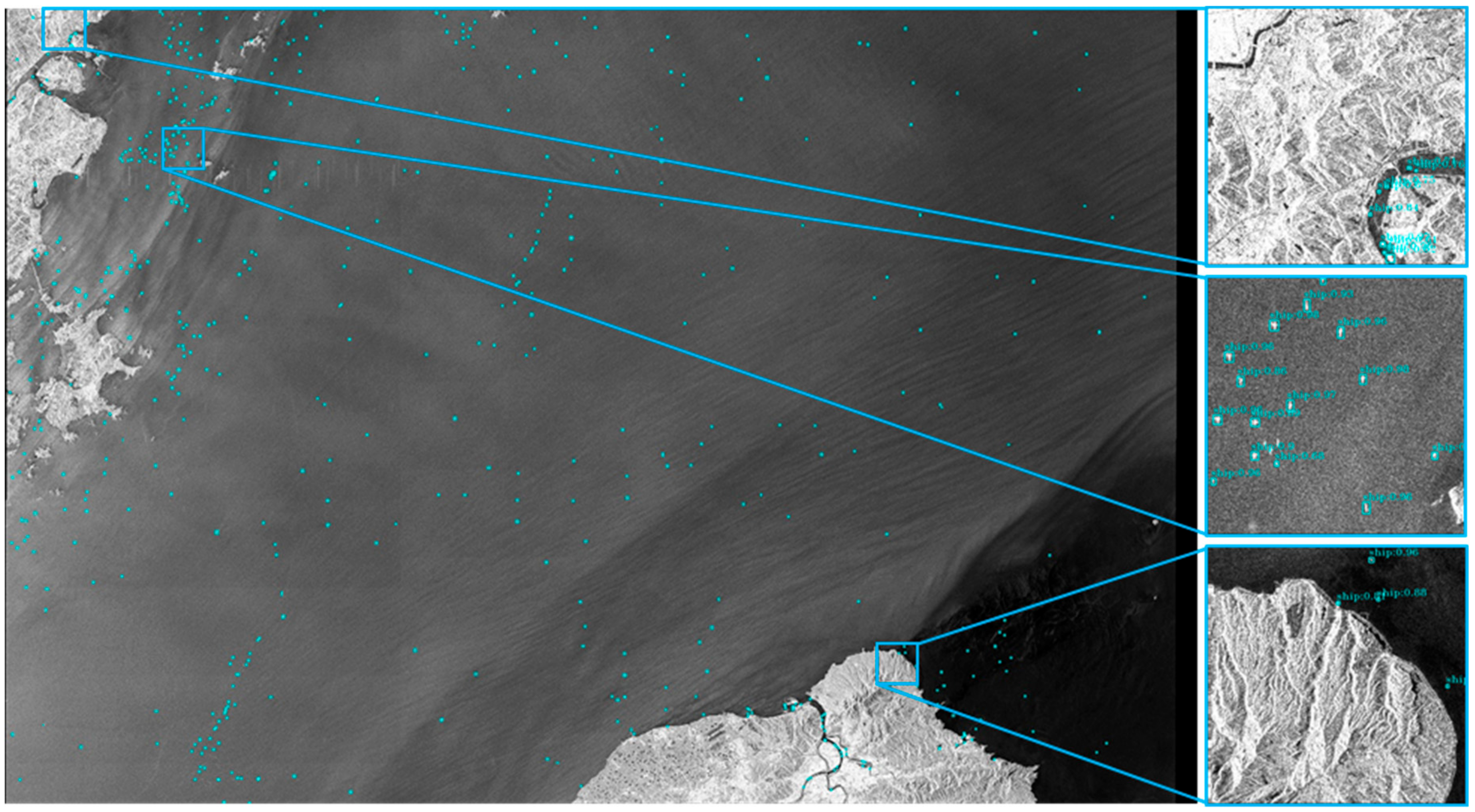

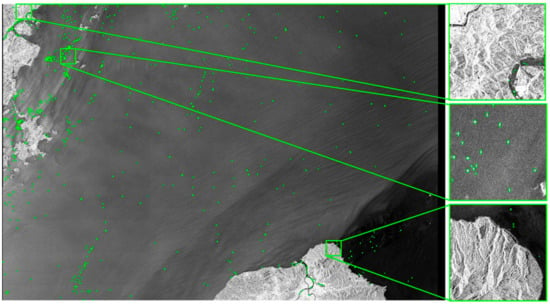

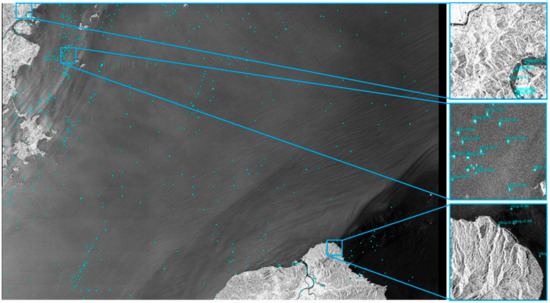

At this point, the dataset establishment process is complete. Figure 8 shows the ground truths of 11.jpg (marked in green). Compared with other datasets, it is obvious from Figure 8 that LS-SSDD-v1.0 is characterized by large-scale backgrounds and small ships.

Figure 8.

Ship ground truth labels of the 11.jpg. Real ships are marked in green boxes.

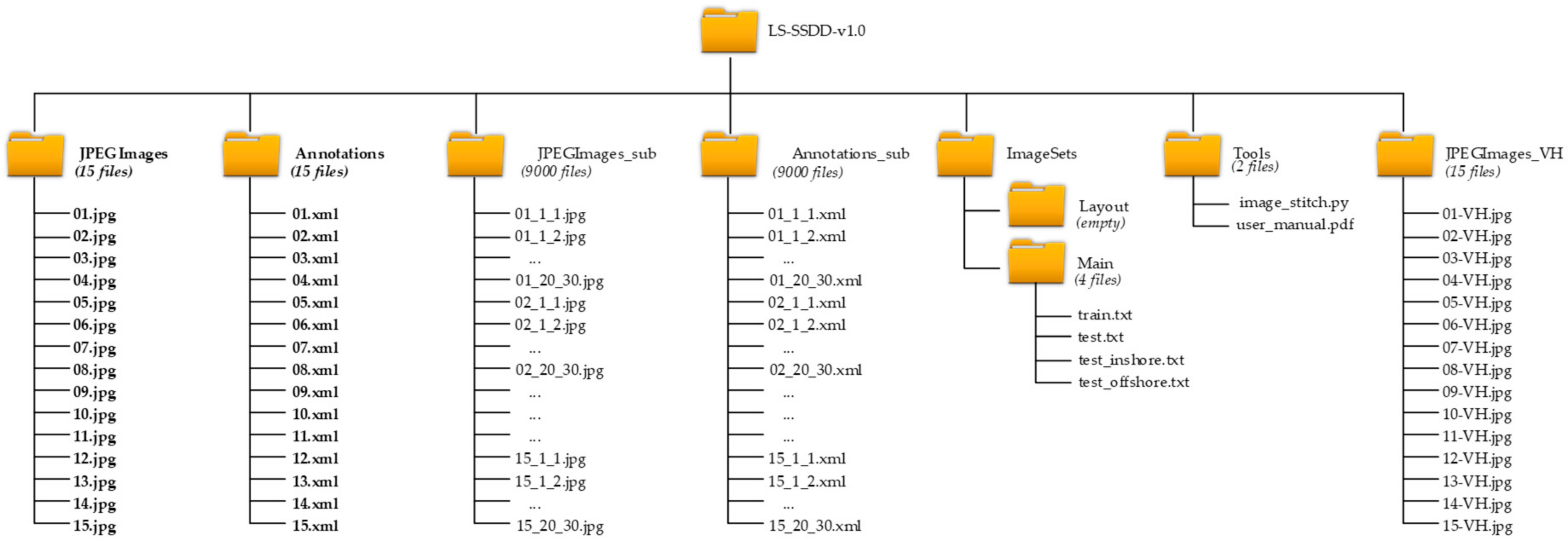

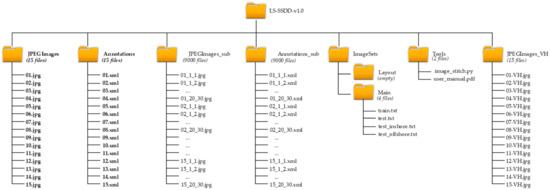

Finally, in order to facilitate scholars to use LS-SSDD-v1.0, we provide a file preview as is shown in Figure 9. In Figure 9, there are seven file folders in the root directory of LS-SSDD-v1.0 (a compressed package file with zip format): (1) JPEGImages, (2) Annotations, (3) JPEGImages_sub, (4) Annotations_sub, (5) ImageSets, (6) Tools, and (7) JPEGImages_VH.

Figure 9.

File content preview of LS-SSDD-v1.0.

(1) JPEGImages in Figure 9 has 15 files, which contain the 15 raw large-scale space-borne SAR images that are numbered as from 01.jpg to 15.jpg. The size of these SAR images is 24,000 × 16,000 pixels.

(2) Annotations in Figure 9 has 15 files, which contain the 15 ground truth label files of real ships that are numbered as from 01.xml to 15.xml. These labels files are in line with PASCAL VOC standard shown in Figure 6b.

(3) JPEGImages_sub in Figure 9 has 9000 files, which contain the 9000 sub-images of 800 × 800 pixels that are cut from the 15 raw large-scale SAR images, numbered from 1_1.jpg to 1_30.jpg, from 2_1.jpg to 2_30.jpg, …, and from 20_1.jpg to 20_30.jpg, as introduced in Section 3.4. These sub-images are named N_R_C.jpg, where N denotes the serial number of the large-scale image, R denotes the row of the sub-image, and C denotes its column. It should be noted that these sub-images, rather than the large-scale images, are actually used for network training and testing in this paper, owing to the limitation of GPU memory. If distributed high performance computing (HPC) [8] GPU servers are available, one can directly train on the raw 24,000 × 16,000 pixels large-scale SAR images.

(4) Annotations_sub in Figure 9 contains 9000 label files of ship ground truths numbered as 1_1.xml to 1_30.xml, 2_1.xml to 2_30.xml, …, and 20_1.xml to 20_30.xml, respectively, corresponding to 9000 sub-images with the same file number.

(5) ImageSets in Figure 9 contains two file folders (Layout and Main). Layout is used to place ship segmentation labels for future version updates, and Main is used to place the training set and test set division files (train.txt and test.txt). HRSID [7] regards images containing land as inshore samples; following this approach, we divide the test set into a test inshore set and a test offshore set (test_inshore.txt and test_offshore.txt) to facilitate future studies by other scholars [48,49] who focus on inshore ship detection, because it is more difficult to detect inshore ships than offshore ships.

(6) Tools in Figure 9 contains a Python tool file named as image_stitch.py to stitch sub-images’ detection results into the original large-scale SAR image. We also provide a user manual for other scholars’ easier use, named as user_manual.pdf. In addition, in the user_manual.pdf, one can also link to and obtain additional information about the images by searching the specific product IDs of these 15 raw SAR images in the Copernicus Open Access Hub website [82].

(7) JPEGImages_VH in Figure 9 has 15 files, which contain the 15 raw large-scale space-borne SAR images with VH cross-polarization, numbered from 01_VH.jpg to 15_VH.jpg, which are provided for future dual-polarization research. These 15 VH cross-polarization SAR images share the same ground truth labels as the VV co-polarization images. In this paper, we only provide the research baselines for the VV co-polarization.
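The N_R_C naming in item (3) makes the stitching step in item (6) a simple offset. The sketch below is not the released image_stitch.py; it is a minimal illustration that assumes R and C are 1-based and counted from the top-left corner of the large-scale image, and the function names `parse_name` and `to_large_image` are hypothetical.

```python
import re

TILE = 800  # sub-image side length in pixels


def parse_name(filename):
    """Split 'N_R_C.jpg' (or 'N_R_C.xml') into the integers (N, R, C)."""
    match = re.fullmatch(r"(\d+)_(\d+)_(\d+)\.(?:jpg|xml)", filename)
    if match is None:
        raise ValueError(f"unexpected sub-image name: {filename}")
    n, r, c = map(int, match.groups())
    return n, r, c


def to_large_image(filename, box):
    """Shift a sub-image box (xmin, ymin, xmax, ymax) into large-image coordinates."""
    _, r, c = parse_name(filename)
    dx, dy = (c - 1) * TILE, (r - 1) * TILE  # assumed 1-based, top-left origin
    xmin, ymin, xmax, ymax = box
    return xmin + dx, ymin + dy, xmax + dx, ymax + dy


# A box detected in row 2, column 3 of large-scale image 11.
print(to_large_image("11_2_3.jpg", (10, 20, 30, 40)))  # (1610, 820, 1630, 840)
```

Because the sub-images do not overlap, no merging of duplicate detections is needed after this shift.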

4. Advantages

4.1. Advantage 1: Large-Scale Backgrounds

The capability to cover a wide area is a significant advantage of space-borne SAR, but previous datasets only provide limited detection backgrounds, which may not be the best choice for practical migration applications in engineering. In general, small ship slices are relatively appropriate for ship classification [7] due to their obvious shapes, outlines, sizes, textures, etc., but they contain less scattering information from land, islands, artificial facilities, etc., which negatively affects practical detection [7]. For the practical space-borne SAR ship detection task, one usually needs to detect ships in large-scene SAR images so as to provide wide-range and all-round ship monitoring over the Earth, which is also recognized by Cui et al. [14] and Hwang et al. [58], so we collect SAR images with large-scale backgrounds to establish LS-SSDD-v1.0.

Table 3 shows the background comparison with other existing datasets. From Table 3, LS-SSDD-v1.0's image size is the largest. Moreover, from Figure 2 and Figure 8, LS-SSDD-v1.0's coverage areas are also the largest. Thus, LS-SSDD-v1.0 is closer to the practical migration application of wide-region global ship detection in space-borne SAR images, compared to the other four existing datasets. Finally, in Table 3, it should be noted that “image size” refers to the size of image samples in the dataset files that are provided by their publishers.

Table 3.

Background comparison.

4.2. Advantage 2: Small Ship Detection

LS-SSDD-v1.0 can also be used for SAR small ship detection, and there are also two types of benefits: (1) in fact, ships in large-scale Sentinel-1 SAR images are always rather small from the perspective of occupied pixels (not real physical size), so LS-SSDD-v1.0 is closer to the practical engineering migration application; and (2) today, SAR small ship detection is also an important research topic that has received significant attention from many scholars [19,24,66], but there is still a lack of datasets that are used specifically to address this problem among open reports, so LS-SSDD-v1.0 can also compensate for such vacancy.

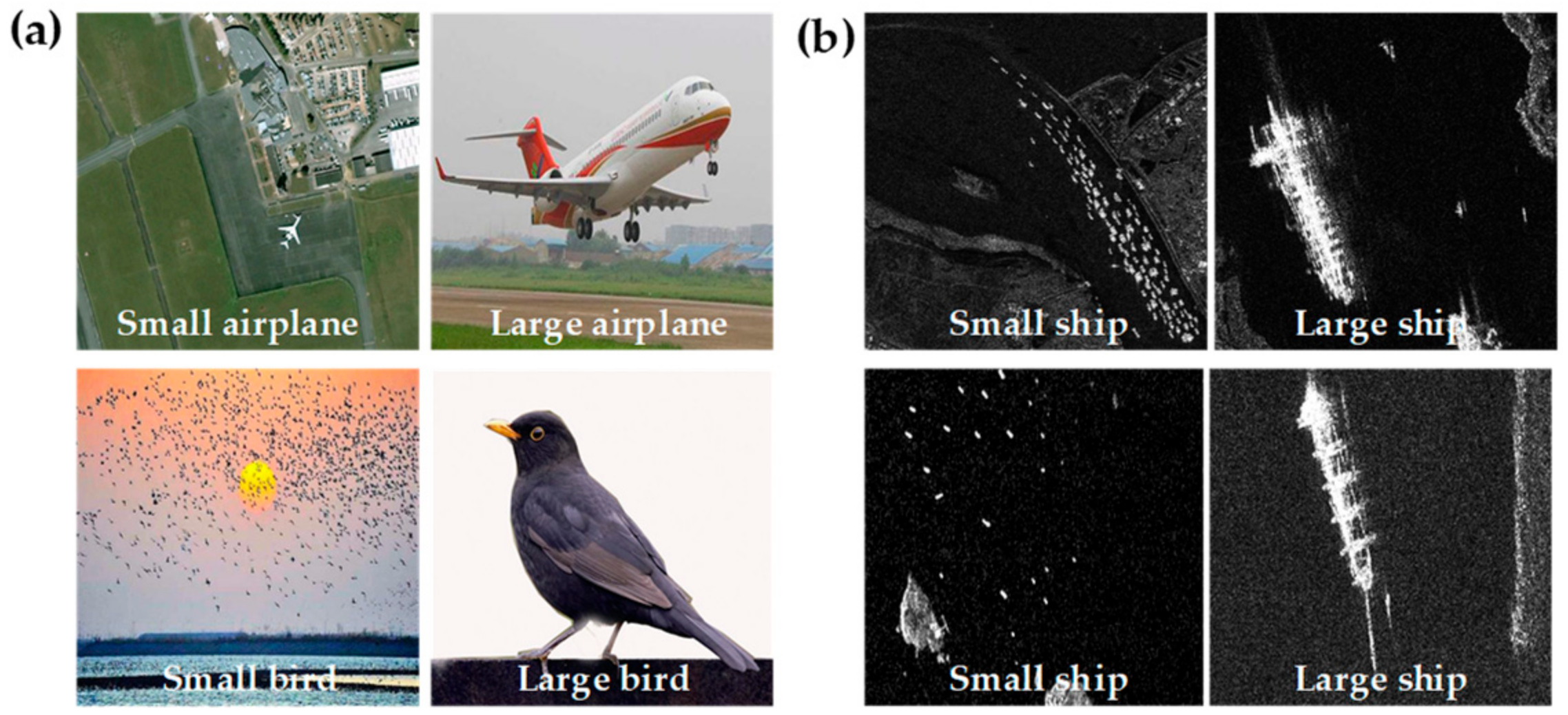

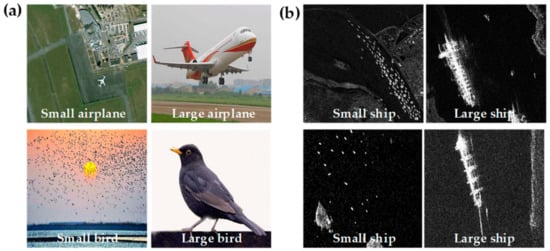

It should be emphasized that in the deep learning community, “small ship” refers to a ship occupying few pixels in the whole image, based on the definition of the COCO dataset [74], rather than to the real physical size of the ship (i.e., ship length and ship breadth). Figure 10 is an intuitive schematic diagram of small ship detection. For example, in nature, an airplane is rather large, yet in Figure 10a, the airplane in the first image is regarded as a small one, while the airplane in the second image is regarded as a large one. Likewise, in nature, a bird is rather small, yet in Figure 10a, the birds in the third image are regarded as small ones, while the bird in the fourth image is regarded as a large one. Therefore, for SAR ship detection, in Figure 10b, the ships in the first and the third images are regarded as small ships, while the ships in the second and the fourth images are regarded as large ships. To sum up, the ratio of ship pixels to the whole image is used to measure the ship size, instead of the physical size in meters. Many other scholars [19,24,66] have adopted this definition, and the other existing datasets are also based on it, so in this paper we follow the usual practice. In other words, for deep learning object detection, we do not pay special attention to the specific resolution of images in meters or centimeters; we focus only on the pixel proportion of the real object in the whole image, i.e., the relative pixel size, instead of the physical size.

Figure 10.

Small targets and large targets in the deep learning community. (a) Small objects and large objects in the nature; (b) small ships and large ships in the SAR images.

Table 4 shows the ship pixel size comparison with the other existing datasets. From Table 4, the average ship area of LS-SSDD-v1.0 is only 381 pixels², which is far smaller than the others (i.e., 381 << 1134 < 1808 < 1882 < 4027), and the largest ship area is only 5822 pixels², which is also far smaller than the others (i.e., 5822 << 26,703 < 62,878 < 72,297 < 522,400). Moreover, if we calculate the proportion of ship pixels among the whole image pixels based on the average pixels², ships in LS-SSDD-v1.0 are far smaller than those in the other datasets: 381/(24,000 × 16,000) = 0.0001% for LS-SSDD-v1.0 << 4027/(3000 × 3000) = 0.0447% for AIR-SARShip-1.0 < 1808/(800 × 800) = 0.28% for HRSID < 1882/(500 × 500) = 0.75% for SSDD < 1134/(256 × 256) = 1.73% for SAR-Ship-Dataset. Therefore, LS-SSDD-v1.0 is also dedicated to SAR small ship detection.
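The proportions quoted above can be reproduced with a few lines of arithmetic. The sketch below takes the average ship areas and image sizes directly from the text; the dictionary layout and the function name `proportion_percent` are illustrative choices.

```python
# (average ship area in pixels², image width, image height), as quoted in the text.
datasets = {
    "LS-SSDD-v1.0": (381, 24_000, 16_000),
    "AIR-SARShip-1.0": (4027, 3000, 3000),
    "HRSID": (1808, 800, 800),
    "SSDD": (1882, 500, 500),
    "SAR-Ship-Dataset": (1134, 256, 256),
}


def proportion_percent(avg_area, width, height):
    """Share of the whole image occupied by an average ship box, in percent."""
    return 100.0 * avg_area / (width * height)


# List the datasets from the smallest to the largest relative ship size.
ordered = sorted(datasets, key=lambda name: proportion_percent(*datasets[name]))
for name in ordered:
    print(f"{name}: {proportion_percent(*datasets[name]):.4f}%")
```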

Table 4.

Ship pixel size. “Pixels²” refers to the area of the ship rectangular box. “Proportion” refers to the proportion of the whole image occupied by ship pixels, computed from the average pixels².

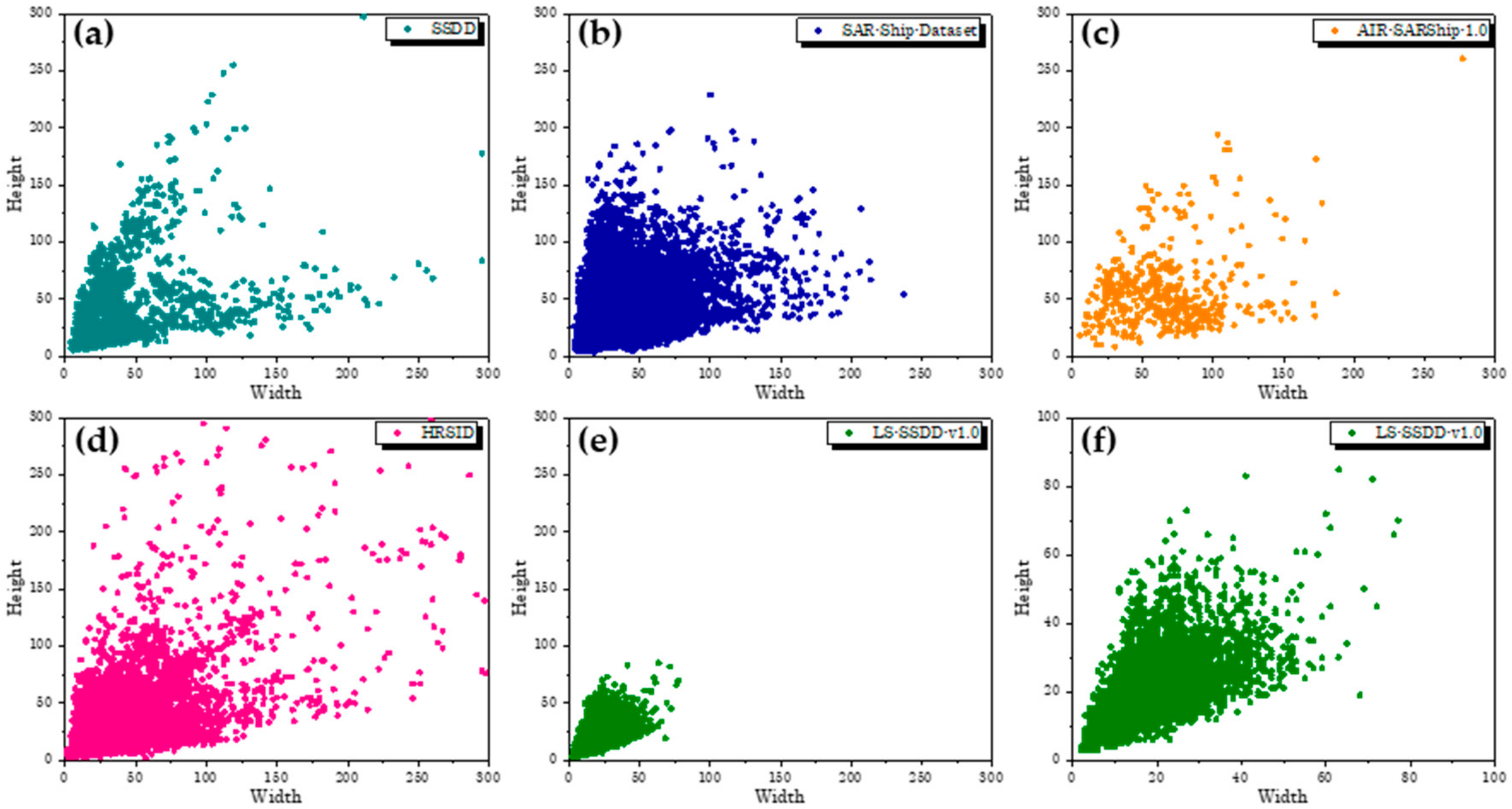

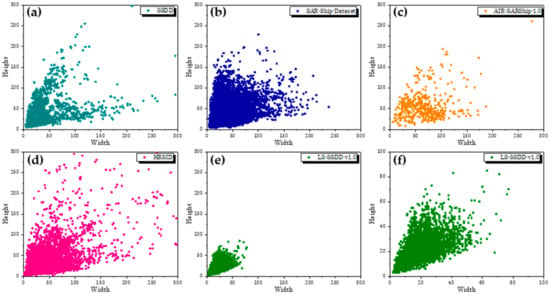

Figure 11 shows the ship pixel size distribution of different datasets. In Figure 11, we measure the ship size at the pixel level (i.e., the number of pixels and the pixel proportion among the whole image), instead of at the physical size level, because (1) SAR images in different datasets have inconsistent resolutions, and these datasets' publishers only provided a rough resolution range, so we cannot perform a strict comparison using the physical size; (2) the specific resolution of each image in the other datasets was not provided in their original papers; (3) the physical size of real ships in the other datasets was also not provided in their original papers; and (4) in the deep learning community, it is common practice to measure the object size in pixels, as a relative proportion of the whole image [78].

Figure 11.

Ship pixel size distribution of different datasets. (a) SSDD; (b) SAR-Ship-Dataset; (c) AIR-SARShip-1.0; (d) HRSID; (e) LS-SSDD-v1.0; (f) coordinate axis enlarged display of (e).

In addition, in Figure 11, similar to the other existing datasets, we use the width and height of the ship ground truth rectangular box to represent the ship pixel size, instead of the ship length L and the ship breadth B (always B < L), for the following reasons. (1) This facilitates dataset comparison, because other datasets' publishers did not provide the ship length L and the ship breadth B in their original papers, and they all adopt the width and height [7,10,12,22] to plot the figures in Figure 11. More cases can be found in references [3,4,6,10,15]. (2) The ship length L and the ship breadth B are related to image resolution, but other datasets' publishers did not provide the specific resolution of each image in their datasets, just a rough resolution range. (3) For deep-learning-based ship detection, a ship is generally represented as a rectangular box whose width and height are its two core parameters, and the final detection results are also generally represented by a series of rectangular boxes, instead of the ship length L and the ship breadth B. (4) It is still difficult to obtain the physical size of the ship (i.e., the ship length L and the ship breadth B), because this information cannot be obtained accurately and comprehensively from the limited AIS data (i.e., there are still some “dark” ships [9,92] that fish illegally, smuggle, etc., which cannot be monitored by AIS and whose ship length L and ship breadth B cannot be obtained; see more detail in references [9,92]).

Given the above, not using the ship length L and ship breadth B does not affect the core work of this paper, because (1) the width and height of a ship ground truth rectangular box already describe the ship pixel size well, although they carry less information than the ship length L and ship breadth B; (2) unlike the ship recognition or classification task in OpenSARShip [80], which may need the specific ship length L and ship breadth B to represent ship features and enhance recognition accuracy, LS-SSDD-v1.0 only focuses on ship location, which means simply using a rectangular box to frame the ship; and (3) similar to the other datasets, we only use a vertical or horizontal rectangular box to locate the center point of the ship, without considering the direction estimation of rotatable boxes in references [11,93], which may involve the ship length L and ship breadth B.

From Figure 11, ships in LS-SSDD-v1.0 (Figure 11e,f) are concentrated in the area where both width < 100 pixels and height < 100 pixels, whereas ships in other datasets show multi-scale characteristics, especially in HRSID (Figure 11d). Although detecting ships of different sizes is an important research topic that has attracted many scholars [13,17], detecting hard-to-detect small ships is also important for evaluating the minimum recognition capability of detectors. Therefore, SAR small ship detection has also received much attention from other scholars [19,24,66]. However, there is still a lack of datasets for small ship detection among open reports, so LS-SSDD-v1.0 can fill this gap.

According to the COCO dataset regulation, we also count the number of small ships, medium ships and large ships in different datasets in detail, as is shown in Table 5. It needs to be noted that, in Table 5, different from traditional understanding that ships with < 50 pixels in Sentinel-1 SAR images are regarded as small ones, in our LS-SSDD-v1.0 dataset of this paper, we use the definition standard from the COCO dataset regulation [74] to determine small ships, medium ships and large ships, because in the deep learning community, most scholars (e.g., Cui et al. [14], Wei et al. [6,7], Mao et al. [34], etc.) always adopt such size definition standard. First, the average size of images for training in the COCO dataset is 484 × 578 = 279,752 pixels in total. As a result, in the COCO dataset regulation, the targets with their rectangular box areas < 1024 pixels (0.37% proportion among the total 279,752 pixels) are regarded as small ones; 1024 pixels < area < 9216 pixels as medium ones (from 0.37% proportion among the total 279,752 pixels to 3.29% proportion among the total 279,752 pixels); and area > 9216 pixels as large ones (3.29% proportion among the total 279,752 pixels). Therefore, the pixel proportions of 0.37% and 3.29% are used to determine the target size, instead of the simple pixel number of targets. More intuitive observation can be found in Figure 10.

Table 5.

The definition standard of ship size in different datasets.

Applying the above pixel proportion rule to LS-SSDD-v1.0, whose training images are 800 × 800 pixels (640,000 pixels in total), targets with rectangular box areas < 2342 pixels are regarded as small ones (0.37% of the 640,000 pixels); 2342 pixels < area < 21,056 pixels as medium ones (from 0.37% to 3.29% of the 640,000 pixels); and area > 21,056 pixels as large ones (3.29% of the 640,000 pixels).
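The size rule can be summarized in a short sketch that uses the thresholds exactly as reported above. The text does not state which class an area equal to a threshold belongs to, so assigning such boundary values to the medium class is an assumption made here; the function names are likewise illustrative.

```python
COCO_THRESHOLDS = (1024, 9216)       # reported for an average 484 x 578 training image
LS_SSDD_THRESHOLDS = (2342, 21_056)  # reported for the 800 x 800 sub-images


def proportion_percent(area, width, height):
    """Share of the whole image occupied by a box of the given area, in percent."""
    return 100.0 * area / (width * height)


def size_category(area, thresholds):
    """Classify a box area (in pixels) as 'small', 'medium', or 'large'."""
    small_max, medium_max = thresholds
    if area < small_max:
        return "small"
    if area <= medium_max:  # assumption: boundary values count as medium
        return "medium"
    return "large"


# The COCO thresholds expressed as proportions of the average COCO training image.
print(round(proportion_percent(1024, 484, 578), 2))  # 0.37
print(round(proportion_percent(9216, 484, 578), 2))  # 3.29

# Average and largest ship areas of LS-SSDD-v1.0 quoted from Table 4.
print(size_category(381, LS_SSDD_THRESHOLDS))   # small
print(size_category(5822, LS_SSDD_THRESHOLDS))  # medium
```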

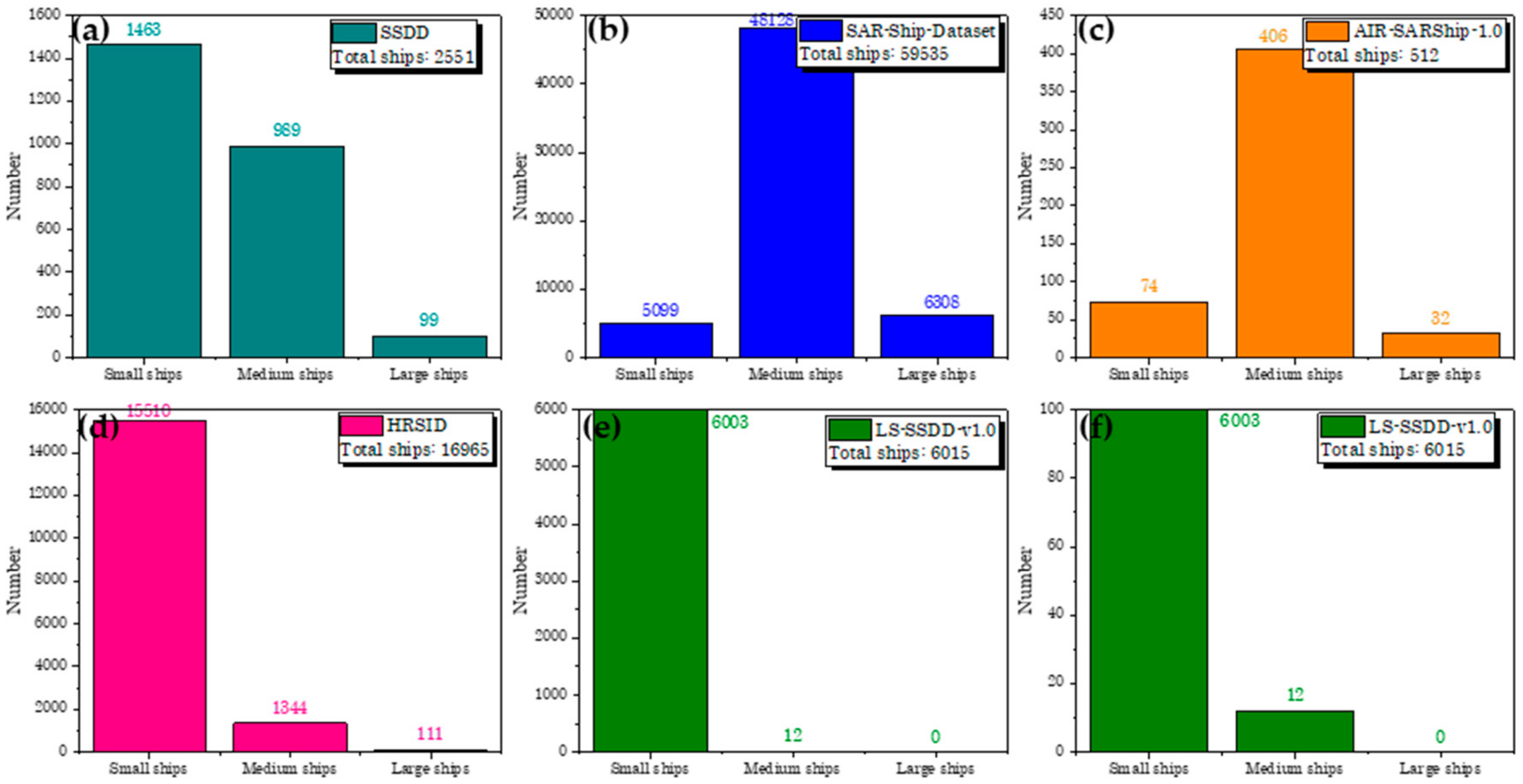

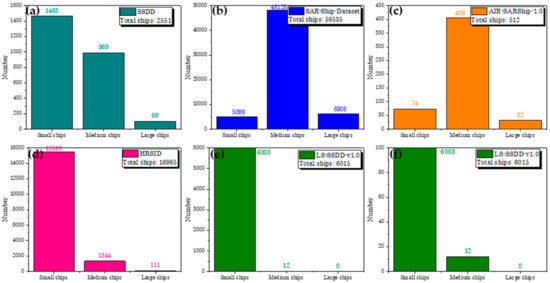

Finally, the comparison results of the number of different size ships in different datasets are shown in Figure 12. From Figure 12, we can draw the following conclusions: (i) There are 2551 ships in total in SSDD. Among them, there are 1463 small ships (57.35% of the total), 989 medium ships (38.77% of the total), and 99 large ships (3.88% of the total). (ii) There are 59,535 ships in total in SAR-Ship-Dataset. Among them, there are 5099 small ships (8.56% of the total), 48,128 medium ships (80.84% of the total), and 6308 large ships (10.60% of the total). (iii) There are 512 ships in total in AIR-SARShip-1.0. Among them, there are 74 small ships (14.45% of the total), 406 medium ships (79.30% of the total), and 32 large ships (6.25% of the total). (iv) There are 16,965 ships in total in HRSID. Among them, there are 15,510 small ships (91.42% of the total), 1344 medium ships (7.92% of the total), and 111 large ships (0.66% of the total). (v) There are 6015 ships in total in LS-SSDD-v1.0. Among them, there are 6003 small ships (99.80% of the total), 12 medium ships (0.20% of the total), and 0 large ships (0.00% of the total).

Figure 12.

The number of different size ships in different datasets. (a) SSDD; (b) SAR-Ship-Dataset; (c) AIR-SARShip-1.0; (d) HRSID; (e) LS-SSDD-v1.0; (f) coordinate axis enlarged display of (e).

To sum up, LS-SSDD-v1.0 is also a dataset dedicated to SAR small ship detection, because its proportion of small ships is far higher than others (99.80% of LS-SSDD-v1.0 > 91.42% of HRSID > 57.35% of SSDD > 14.45% of AIR-SARShip-1.0 > 8.56% of SAR-Ship-Dataset).
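The ranking above follows directly from the ship counts reported for Figure 12, as the following sketch shows. The counts are copied from the text; the dictionary layout and the function name `small_share` are illustrative.

```python
# (small, medium, large) ship counts per dataset, as reported for Figure 12.
counts = {
    "SSDD": (1463, 989, 99),
    "SAR-Ship-Dataset": (5099, 48_128, 6308),
    "AIR-SARShip-1.0": (74, 406, 32),
    "HRSID": (15_510, 1344, 111),
    "LS-SSDD-v1.0": (6003, 12, 0),
}


def small_share(small, medium, large):
    """Percentage of small ships among all ships of a dataset."""
    return 100.0 * small / (small + medium + large)


# Rank the datasets by their share of small ships, highest first.
ranking = sorted(counts, key=lambda name: small_share(*counts[name]), reverse=True)
for name in ranking:
    print(f"{name}: {sum(counts[name])} ships, {small_share(*counts[name]):.2f}% small")
```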

4.3. Advantage 3: Abundant Pure Backgrounds

In the existing four datasets, the image samples with pure backgrounds (i.e., images that contain no ships) are all discarded artificially. There may be two possible reasons for this: (1) detection models seem to be able to simultaneously learn the features of ships (i.e., positive samples) and those of backgrounds (i.e., negative samples) from the images containing ships, so previous scholars possibly think that it is unnecessary to add so many pure background samples; and (2) if too many pure background samples are added, the training time of models is bound to increase.

However, we find that if pure background samples are fully discarded in training, many false alarm cases of brightened dots will emerge in urban areas, agricultural regions, mountain areas, etc., which will be shown in Section 7. In addition, such phenomenon also really appeared in the work of Cui et al. [14] and our previous work [4]. Obviously, it is impossible for ships to exist in these areas.

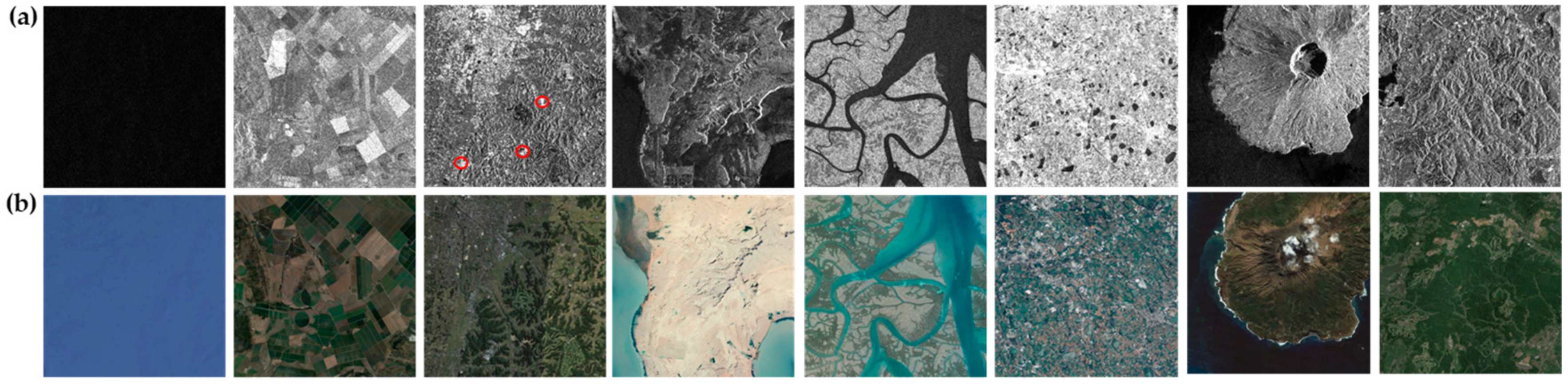

In addition, it is indeed time-consuming for training to add many pure backgrounds samples, but we hold the view that it is cost-effective to obtain better detection performance, because, in fact, such practice does not slow down the final detection speed for the test process (or inference process).

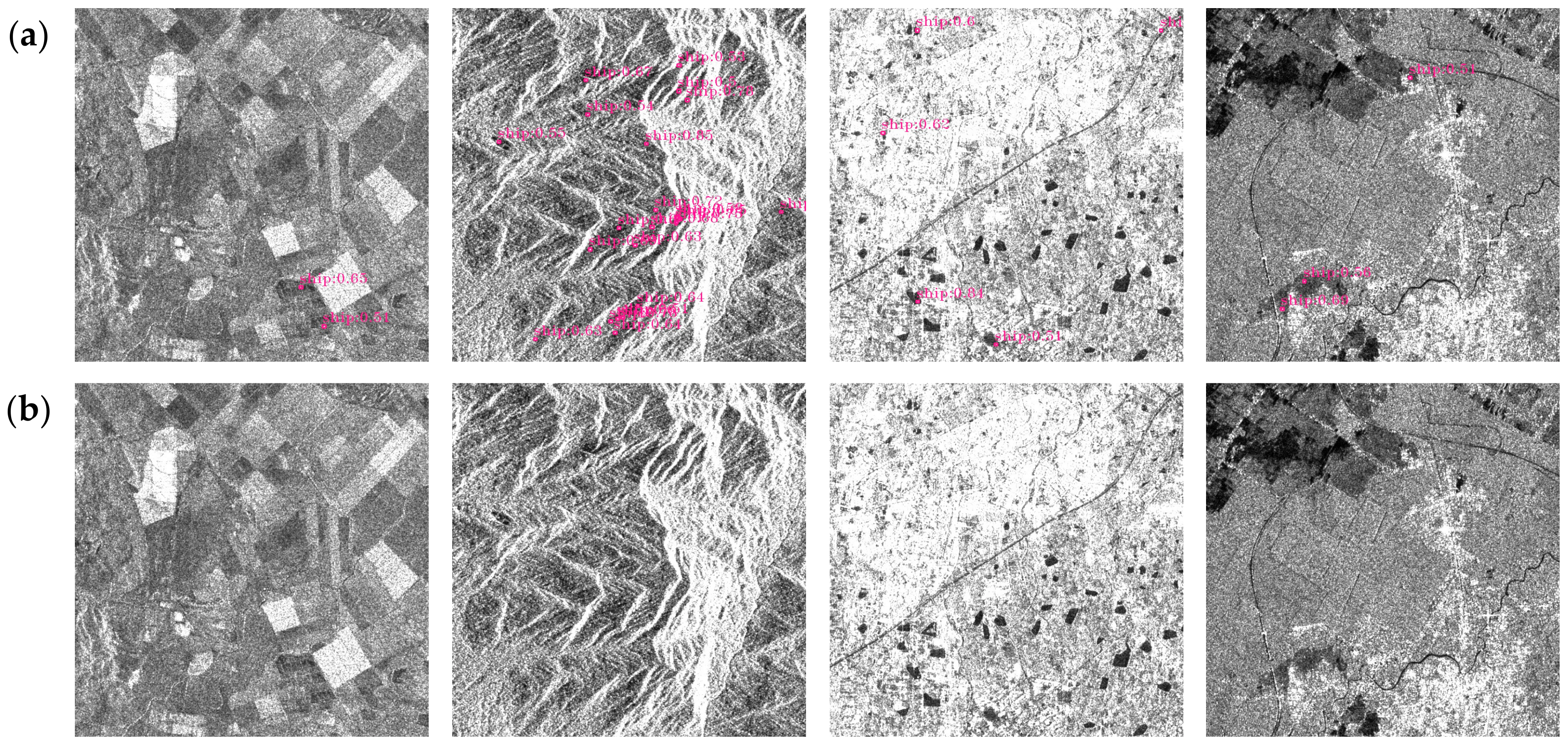

Table 6 shows the abundant pure background comparison with other datasets. From Table 6, only LS-SSDD-v1.0 has abundant pure backgrounds. Figure 13 shows some typical SAR images with pure backgrounds in LS-SSDD-v1.0 and their optical images of corresponding areas. In Figure 13, we show eight types of areas that generally do not contain ships, i.e., pure ocean surface, farmlands, urban areas, Gobi, remote rivers, villages, volcanos and forests. Moreover, it should be noted that these typical pure background samples are all from the original 15 large-scale SAR images, not a deliberate addition.

Table 6.

Abundant pure backgrounds comparison.

Figure 13.

Abundant pure backgrounds of SAR images in LS-SSDD-v1.0. (a) SAR images; (b) optical images. Sea surface; farmlands; urban areas; Gobi; remote rivers; villages; volcanos; forests.

In particular, making use of the abundant pure backgrounds, we propose the PBHT-mechanism to suppress false alarms on land in large-scale SAR images. In other words, the pure background samples in Figure 13 are mixed with the non-pure background samples, and both are jointly input into networks for the actual training. In this way, for example, for the urban areas in Figure 13a, the false alarms of some bright spots marked in red circles can be effectively suppressed. The influence of the PBHT-mechanism on the overall detection performance will be discussed in detail in Section 7.
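The mixing of pure background samples with ship-containing samples can be sketched as follows. This is only one plausible reading of the description above, not the training code used in this paper; the function name `build_training_list`, its parameters, and the sample labels are all hypothetical.

```python
import random


def build_training_list(labels, use_pure_background=True, seed=0):
    """Return a shuffled list of sub-image names for training.

    labels maps a sub-image name to its list of ship boxes; an empty list
    marks a pure background sample.
    """
    with_ships = [name for name, boxes in labels.items() if boxes]
    pure_background = [name for name, boxes in labels.items() if not boxes]
    selected = with_ships + (pure_background if use_pure_background else [])
    random.Random(seed).shuffle(selected)  # mix both kinds of samples
    return selected


# Hypothetical labels: two sub-images with ships and two pure backgrounds.
labels = {
    "1_1_1.jpg": [(120, 340, 141, 358)],
    "1_1_2.jpg": [],
    "1_1_3.jpg": [(15, 22, 40, 51), (300, 410, 322, 436)],
    "1_1_4.jpg": [],
}
print(len(build_training_list(labels, use_pure_background=True)))   # 4
print(len(build_training_list(labels, use_pure_background=False)))  # 2
```

Setting `use_pure_background=False` reproduces the practice of the other datasets, where samples without ships are discarded before training.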

Finally, it needs to be noted that the theme of our work is “ship detection,” as shown in this paper's title, but we still include the land areas with pure backgrounds in Figure 13 in our LS-SSDD-v1.0 dataset, for the following reasons. (1) Over time, the use of deep learning for SAR ship detection can be divided into two stages, i.e., the early stage and the present stage. In the early stage, deep learning was applied in various parts of SAR ship detection, e.g., land masking [28], region of interest (ROI) extraction, and ship discrimination [28,72] (i.e., ship or background binary classification of a single chip). In the present stage, however, deep learning-based SAR ship detection methods use an end-to-end mode that sends SAR images into networks and outputs detection results directly [13], without the support of auxiliary tools (e.g., traditional preprocessing tools, geo information, etc.) and without manual involvement. In other words, the operation of sea-land segmentation [13], which may need geo information, is now removed, so the detection efficiency is greatly improved, which reflects the biggest advantage of AI, i.e., a thoroughly intelligent process. Although this approach may seem forcible, it is highly efficient, as long as abundant image samples containing land are used for network training and learning. (2) In addition, the coastlines of the Earth are constantly changing, so the use of fixed coastline data from geo information to perform sea-land segmentation will inevitably result in some deviations [28]; moreover, it is also challenging to obtain an accurate sea-land segmentation result, a problem that is under extensive research by many other scholars [94,95,96]. (3) Today, deep learning-based SAR ship detectors simultaneously locate many ships in large SAR images, instead of performing ship-background binary classification on small single chips, so the ship discrimination process is integrated into the end-to-end mode, improving detection efficiency. (4) To avoid the trouble of sea-land segmentation, networks have to be trained on these land areas to learn their features and discriminate land, sea, ships, etc., which is a compromise alternative to sea-land segmentation; this practice is also the same as that of the other existing datasets, where some land areas are included as well (these existing datasets merely do not include the pure background land areas without ships). (5) As described before, this practice can suppress the false alarms of brightened dots in urban areas, agricultural regions, mountain areas, etc.

4.4. Advantage 4: Fully Automatic Detection Flow

Fully automatic detection flow means that there is no human involvement when detecting ships in large-scale SAR images in the practical engineering migration application. According to our investigation, many scholars [1,2,3,4,6,10,34] needed to verify their detection models on some open datasets and then performed practical ship detection in several other large-scale SAR images to confirm the migration capability of models. However, their practical operation process needs some preprocessing means, e.g., sea-land separation, visual selection, etc., to obtain ship candidate areas, because there is a huge domain gap between the existing datasets and the practical large-scale space-borne SAR images (small ship chips vs. large detection regions), according to intuitive observation. Thus, they often needed to abandon the regions without ships (i.e., pure backgrounds) based on human observation experience or some sophisticated traditional algorithms. Moreover, their detection results from ship candidate areas also need to be integrated into the original large-scale SAR image via some specific post-processing means (i.e., coordinate transformation).

Obviously, the above practice is not a fully automated and adequately intelligent process. One possible reason is that the datasets used for model training were not designed specifically for practical large-scale space-borne SAR images, which leads to insufficient automation and intelligence in the model migration capability verification process (the last process in Figure 1b of Section 1). In contrast, if LS-SSDD-v1.0 is used for model training, one can verify a detection model’s migration capability on large-scale SAR images fully automatically, without any human involvement or any use of traditional algorithms, which is also the most appropriate embodiment of the advantages of deep learning.

Table 7 shows the automatic detection flow comparison with other existing datasets. From Table 7, only LS-SSDD-v1.0 can achieve an automatic detection flow, while others are not fully automatic in practical engineering applications.

Table 7.

Fully automatic detection flow comparison.

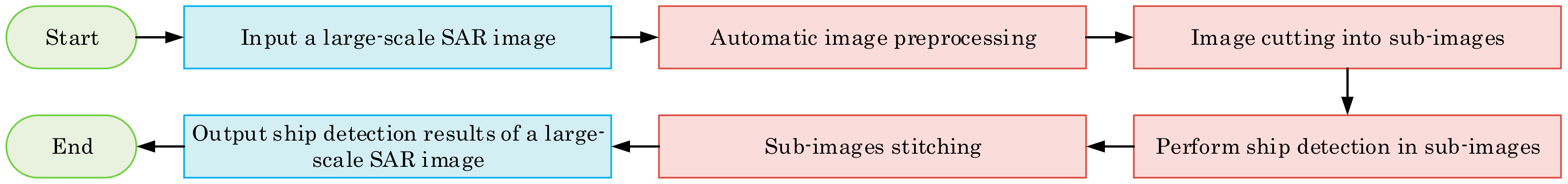

Figure 14 shows the fully-automatic ship detection process in a large-scale space-borne SAR image when using our LS-SSDD-v1.0 dataset to train network models.

Figure 14.

Fully automatic detection flow based on LS-SSDD-v1.0 to train network models.

From Figure 14, the fully-automatic detection flow is as follows.

Step 1: Input a large-scale SAR image.

That is, input a raw large-scale Sentinel-1 SAR image with the raw .tiff file format.

Step 2: Automatic image preprocessing.

That is, convert the raw SAR image with the .tiff file format into the .jpg file format. Afterward, resize the image into 24,000 × 16,000 pixels.

Step 3: Image cutting into sub-images.

That is, cut the 24,000 × 16,000 pixel SAR image directly into 600 sub-images of 800 × 800 pixels, without bells and whistles. It should be noted that these sub-images are the ones actually used for network training and testing in this paper. This is due to limited GPU memory, because most deep learning network models are so large (hundreds of MB) that training them requires a large amount of GPU memory. Such image cutting is also common practice in the deep learning community; the AIR-SARShip-1.0 dataset adopts the same practice, cutting the original large SAR images of 3000 × 3000 pixels into small sub-images of 500 × 500 pixels for the actual network training. Of course, if distributed high-performance computing (HPC) GPU servers [8] with enough GPU memory are available, one can also train directly on the raw 24,000 × 16,000 pixel large-scale SAR images, similar to traditional CFAR-based methods that may not need image cutting, i.e., a one-step procedure that clips single ships from the start is straightforward.

Step 4: Perform ship detection in sub-images.

That is, use trained models on LS-SSDD-v1.0 to perform ship detection in sub-images, and obtain the detection results of 600 sub-images.

Step 5: Sub-images stitching.

That is, stitch the detection results of 600 sub-images.

Step 6: Output ship detection results of the large-scale SAR image.

That is, ship detection results are presented in the raw large-scale space-borne SAR image.

To sum up, from Figure 14, if LS-SSDD-v1.0 is used to train detection models, one can directly input a raw large-scene SAR image into the models and obtain the final large-scene SAR ship detection results as output, which means that the models achieve a fully end-to-end large-scene detection flow, as shown in Figure 1b of Section 1. In other words, the above steps are all completed automatically by machine, without any manual involvement, so LS-SSDD-v1.0 enables a fully automatic detection flow in the last process in Figure 1b of Section 1.
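Steps 3 to 6 above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the sub-images are taken as a non-overlapping 30 × 20 grid, and `detect_tile` is a hypothetical stand-in for a model trained on LS-SSDD-v1.0 that returns boxes in sub-image pixel coordinates.

```python
from typing import Callable, List, Tuple

# (x1, y1, x2, y2, score) in pixels
Box = Tuple[float, float, float, float, float]

IMAGE_W, IMAGE_H = 24000, 16000  # resized large-scale image (Step 2)
TILE = 800                       # sub-image side length (Step 3)


def tile_origins(width: int = IMAGE_W, height: int = IMAGE_H,
                 tile: int = TILE) -> List[Tuple[int, int]]:
    """Top-left corners of the non-overlapping sub-images, row by row."""
    return [(x, y) for y in range(0, height, tile) for x in range(0, width, tile)]


def detect_large_image(detect_tile: Callable[[int, int], List[Box]]) -> List[Box]:
    """Run a per-sub-image detector and stitch results into full-image coordinates.

    `detect_tile(x0, y0)` is a hypothetical callable: it returns the boxes found
    in the sub-image whose top-left corner is (x0, y0), in sub-image coordinates.
    """
    stitched: List[Box] = []
    for x0, y0 in tile_origins():
        for x1, y1, x2, y2, score in detect_tile(x0, y0):
            # Stitching (Step 5) is a simple translation by the tile origin.
            stitched.append((x1 + x0, y1 + y0, x2 + x0, y2 + y0, score))
    return stitched
```

With the default sizes, `len(tile_origins())` is 600, matching the sub-image count in Step 3. Because the tiles do not overlap, stitching needs no merging of duplicate detections, only the coordinate offset.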

4.5. Advantage 5: Numerous and Standardized Research Baselines

The existing datasets have all provided some research baselines for future scholars, but their research baselines still have two shortcomings: (1) Apart from HRSID, the research baselines of the other three existing datasets are non-standardized, because most of their detection methods are run under different deep learning frameworks (e.g., Caffe, TensorFlow, PyTorch, Keras, etc.), different training strategies (e.g., different training optimizers, different learning rate adjustment mechanisms, etc.), different image preprocessing means (e.g., different image resizing, different data augmentation methods, etc.), different hyper-parameter configurations (e.g., different batch sizes, different detection thresholds, etc.), different experimental development environments (e.g., CPU and GPU), different programming languages (e.g., C++, Python, Matlab, etc.), etc., which may introduce many uncertainties in both detection accuracy and detection speed, according to our experience [5]. (2) The numbers of their research baselines are generally insufficient, which makes it harder for other scholars to carry out an adequate comparison of detection performance in the future.

Thus, we provide standardized and numerous baselines of LS-SSDD-v1.0, as shown in Table 8:

Table 8.

Research baselines comparison with other existing datasets.

- Standardized. In Table 8, the research baselines of LS-SSDD-v1.0 are run under the same detection framework (MMDetection with PyTorch), the same training strategies, the same image preprocessing method, almost the same hyper-parameter configuration (it is impossible for different models to have exactly the same hyper-parameters, but we try to keep them basically the same), the same experimental environment, the same programming language (Python), etc. HRSID also provided standardized baselines, but its detection accuracy evaluation indices followed the COCO evaluation protocol [74], which is scarcely used by other scholars. One possible reason is that the COCO evaluation protocol can reflect only the detection probability but not the false alarm probability, so many scholars generally abandon it. Thus, LS-SSDD-v1.0 uses the PASCAL VOC evaluation protocol [79], i.e., Recall, Precision, mAP and F1 as accuracy criteria.

- Numerous. From Table 8, the number of research baselines of LS-SSDD-v1.0 is far greater than that of the other datasets, i.e., 30 of LS-SSDD-v1.0 >> 9 of AIR-SARShip-1.0 > 8 of HRSID > 6 of SAR-Ship-Dataset > 2 of SSDD. Therefore, in the future, other scholars can conduct further research on the basis of these 30 research baselines.

To be clear, we do not provide research baselines for traditional methods, because (1) traditional methods generally run in the Matlab development environment instead of Python, and they scarcely use GPUs to accelerate training and testing; and (2) the detection accuracy of traditional methods is far inferior to that of deep learning methods (see Cui et al. [13] and Sun et al. [22]), so a comparison with traditional methods has little significance. Moreover, why deep learning methods are generally superior to traditional methods is still an unsolved problem; it is probably because deep networks can extract more representative and abstract hierarchical features [71].

5. Experiments

Our experiments are run on a personal computer with RTX2080Ti GPU and i9-9900k CPU, using Python and MMDetection [97] based on PyTorch. CUDA is used to call GPU to accelerate training.

5.1. Experimental Details

We train 30 deep learning detectors on LS-SSDD-v1.0 by using the MMDetection toolbox [97]. We input the sub-images with 800 × 800 pixels into networks for training. SSD-300's input image size is set as 300 × 300 pixels and SSD-512's as 512 × 512 pixels. We train the following 30 detectors by using stochastic gradient descent (SGD) [98] for 12 epochs. Given limited GPU memory, the batch size is set as 1. The learning rate is set as 0.01 with momentum as 0.9 and weight decay as 0.0001. To further reduce the training loss, the learning rate is also reduced by a factor of 10 per epoch from epoch 8 to epoch 11. We also adopt the linear scaling rule (LSR) [99] to adjust the learning rate proportionally. These 30 detectors are (1) Faster R-CNN [75] without FPN [100], (2) Faster R-CNN [75], (3) OHEM Faster R-CNN [101], (4) CARAFE Faster R-CNN [102], (5) SA Faster R-CNN [103], (6) SE Faster R-CNN [104], (7) CBAM Faster R-CNN [105], (8) PANET [106], (9) Cascade R-CNN [78], (10) OHEM Cascade R-CNN [101], (11) CARAFE Cascade R-CNN [102], (12) SA Cascade R-CNN [103], (13) SE Cascade R-CNN [104], (14) CBAM Cascade R-CNN [105], (15) Libra R-CNN [107], (16) Double-Head R-CNN [108], (17) Grid R-CNN [109], (18) DCN [110], (19) EfficientDet [111], (20) Guided Anchoring [112], (21) HR-SDNet [6], (22) SSD-300 [76], (23) SSD-512 [76], (24) YOLOv3 [113], (25) RetinaNet [77], (26) GHM [114], (27) FCOS [115], (28) ATSS [116], (29) FreeAnchor [117], and (30) FoveaBox [118].
We utilize (1) ResNet-50 [119] as the backbone networks of Faster R-CNN without FPN, Faster R-CNN, OHEM Faster R-CNN, CARAFE Faster R-CNN, SA Faster R-CNN, SE Faster R-CNN, CBAM Faster R-CNN, PANET, Cascade R-CNN, OHEM Cascade R-CNN, CARAFE Cascade R-CNN, SA Cascade R-CNN, SE Cascade R-CNN, CBAM Cascade R-CNN, Libra R-CNN, Double-Head R-CNN, Grid R-CNN, DCN, EfficientDet, Guided Anchoring, RetinaNet, GHM, FCOS, ATSS, FreeAnchor and FoveaBox; (2) HRNetV2p-w40 [6] as the backbone network of HR-SDNet; (3) VGG-16 [120] as the backbone network of SSD; and (4) DarkNet-53 as the backbone network of YOLOv3. These backbones are all pre-trained on ImageNet [121] and their pre-training weights are transferred into networks for fine-tuning [121].
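The training hyper-parameters above can be collected in a short Python sketch, written as plain dictionaries in the style of MMDetection configuration files. The optimizer values come from the text; the decay schedule is an assumption, reading the learning rate reduction as the common step policy with a 10× decay at epochs 8 and 11 (0-indexed). The names `lr_decay_epochs`, `lr_decay_factor`, and `lr_at_epoch` are illustrative only.

```python
# Optimizer settings stated in the text (SGD, lr 0.01, momentum 0.9, weight decay 1e-4).
optimizer = dict(type="SGD", lr=0.01, momentum=0.9, weight_decay=0.0001)
total_epochs = 12
batch_size = 1

# ASSUMPTION: step decay by 10x at epochs 8 and 11; the text may intend a
# different schedule, so treat these two values as illustrative.
lr_decay_epochs = (8, 11)
lr_decay_factor = 0.1


def lr_at_epoch(epoch: int) -> float:
    """Learning rate for a 0-indexed epoch under the assumed step schedule."""
    n_decays = sum(1 for step in lr_decay_epochs if epoch >= step)
    return optimizer["lr"] * lr_decay_factor ** n_decays


if __name__ == "__main__":
    for epoch in range(total_epochs):
        print(epoch, lr_at_epoch(epoch))
```

Under this reading, epochs 0 to 7 train at 0.01, epochs 8 to 10 at about 0.001, and epoch 11 at about 0.0001.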

Moreover, the loss functions of the different methods are basically the same as in their original work. When performing ship detection in the test process, we set the score threshold as 0.5 and also set the intersection over union (IOU) threshold of detections as 0.5, which means that if the IOU between a predicted box and a ground truth box is greater than or equal to 50%, the ship in this box is counted as successfully detected [1]. IOU is defined by (BG∩BD)/(BG∪BD), where BG denotes ground truth boxes and BD denotes detection boxes. Non-maximum suppression (NMS) [122], with its threshold set as 0.5, is used to suppress repeatedly detected boxes. We do not use Soft-NMS [123], as adopted by Wei et al. [6], for this operation, because (1) Soft-NMS is insensitive to a high score threshold, i.e., 0.5 of ours >> 0.05 of Wei et al. [6]; and (2) Soft-NMS adds extra computational cost, which reduces detection speed.
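For readers less familiar with these two thresholds, the following is a minimal pure-Python sketch of IOU and greedy NMS with the values quoted above (score threshold 0.5, NMS threshold 0.5). It is a generic textbook version for illustration, not the MMDetection implementation used in the experiments; the function names are our own.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float],
        score_thr: float = 0.5, iou_thr: float = 0.5) -> List[int]:
    """Greedy NMS: return indices of kept boxes, highest score first."""
    # Drop low-confidence boxes, then visit the rest in descending score order.
    order = sorted((i for i, s in enumerate(scores) if s >= score_thr),
                   key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap a kept box by more than iou_thr.
        if all(iou(boxes[i], boxes[j]) <= iou_thr for j in keep):
            keep.append(i)
    return keep
```

For example, two boxes offset by one pixel in a 10 × 10 region have an IOU of 81/119, which is above 0.5, so only the higher-scoring one is kept.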

5.2. Evaluation Indices

Detection probability Pd, false alarm probability Pf and missed detection probability Pm are defined by [5]

where TP is true positive, GT is ground truth, FP is false positive, and FN is false negative.

Recall, precision, mean average precision (mAP), and F1 are defined by [5,16]

where P denotes precision, R denotes recall, and P(R) denotes the precision–recall curve.
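In terms of the symbols named above, these indices are commonly written as follows. This is a sketch under the usual definitions, not a quotation of [5,16]; in particular, the denominator TP + FP for the false alarm probability and the integral form of mAP are assumptions consistent with the symbols TP, FP, FN, GT and P(R).

$$
P_d = \frac{TP}{GT}, \qquad P_f = \frac{FP}{TP + FP}, \qquad P_m = \frac{FN}{GT}
$$

$$
\mathrm{Recall} = \frac{TP}{TP + FN}, \qquad \mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{mAP} = \int_0^1 P(R)\,dR, \qquad F1 = \frac{2 \cdot P \cdot R}{P + R}
$$

Under these definitions, with GT = TP + FN, recall equals Pd and precision equals 1 − Pf.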

Frames per second (FPS) is defined by 1/t, where t denotes the time to detect a small sub-image. As a result, the total time T to detect a raw large-scale space-borne SAR image equals 600 t.
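The indices in this subsection can be computed from raw counts as in the sketch below. It assumes the common definitions (GT = TP + FN, Pf = FP/(TP + FP)) and approximates the area under the precision–recall curve with the trapezoidal rule, which is simpler than the interpolated PASCAL VOC procedure; all function names are illustrative.

```python
from typing import Dict, List, Tuple


def detection_metrics(tp: int, fp: int, fn: int) -> Dict[str, float]:
    """Pd, Pf, Pm, recall, precision and F1 from detection counts.

    ASSUMPTION: GT = TP + FN and Pf = FP / (TP + FP).
    """
    gt = tp + fn
    recall = tp / gt if gt else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    denom = precision + recall
    return {
        "Pd": recall,
        "Pf": fp / (tp + fp) if (tp + fp) else 0.0,
        "Pm": fn / gt if gt else 0.0,
        "recall": recall,
        "precision": precision,
        "F1": 2 * precision * recall / denom if denom else 0.0,
    }


def average_precision(pr_points: List[Tuple[float, float]]) -> float:
    """Trapezoidal area under a curve given as (recall, precision) points."""
    pts = sorted(pr_points)
    return sum((r1 - r0) * (p0 + p1) / 2.0
               for (r0, p0), (r1, p1) in zip(pts, pts[1:]))


def total_detection_time(fps: float, n_sub_images: int = 600) -> float:
    """Seconds to process one large-scale image: T = n_sub_images * t, t = 1/FPS."""
    return n_sub_images / fps
```

As a usage example, a detector running at 10 FPS on sub-images would need `total_detection_time(10.0)`, i.e., 60 s, for one large-scale image.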

6. Results

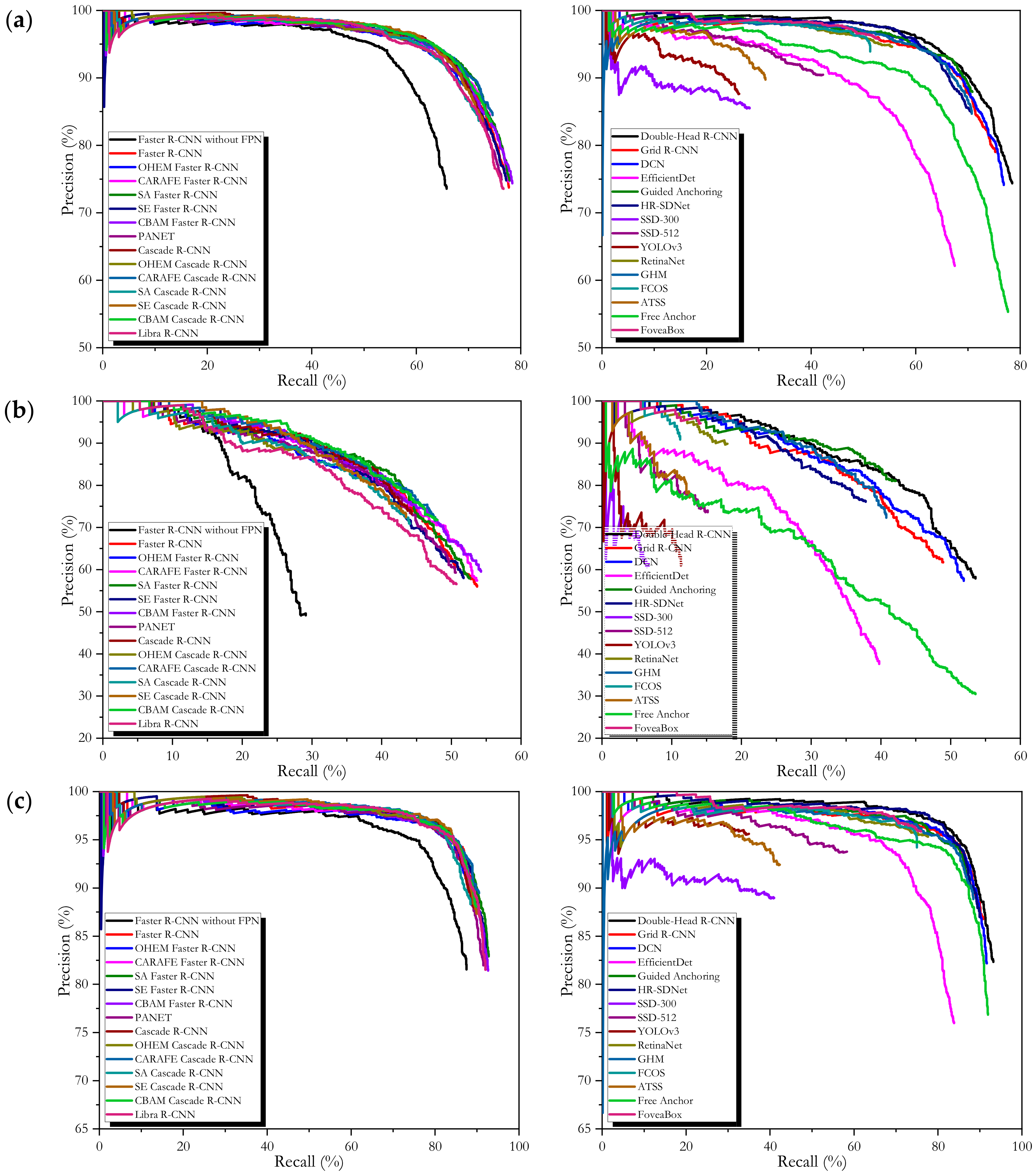

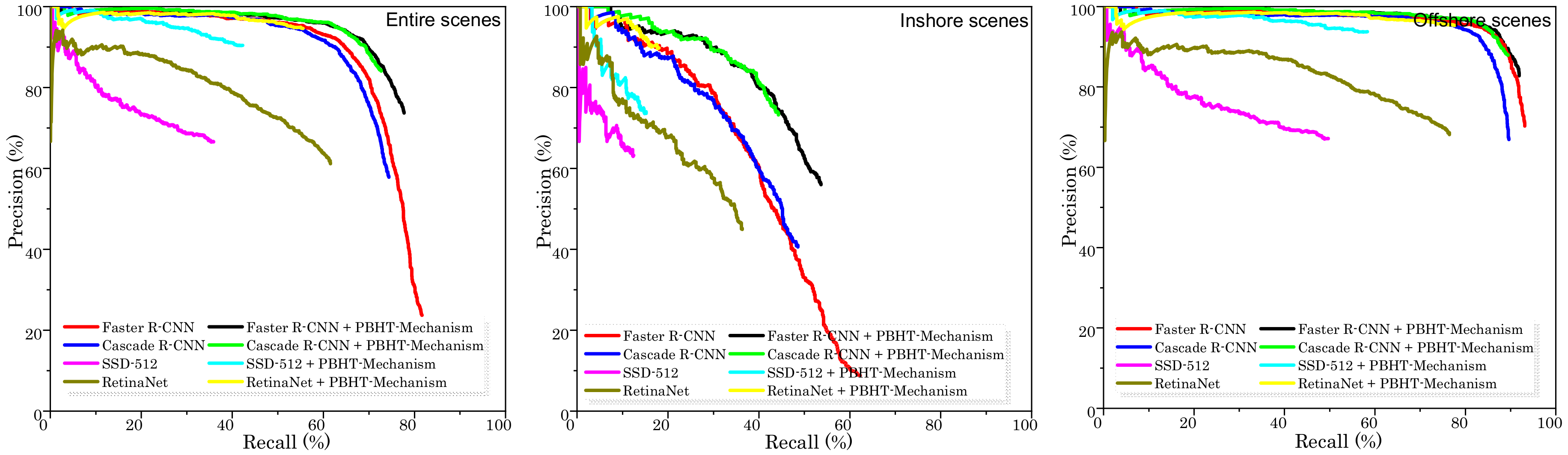

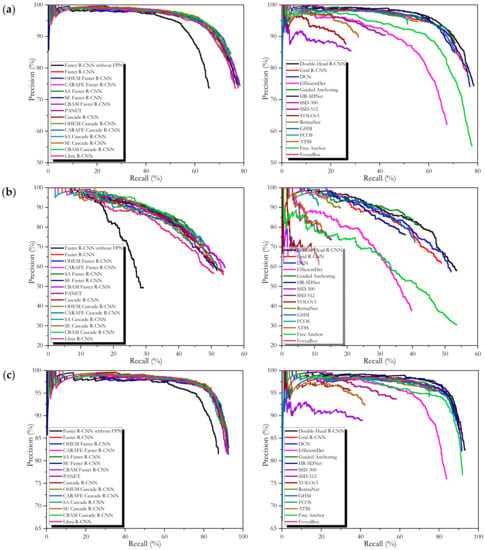

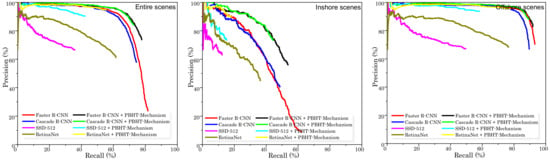

Table 9, Table 10 and Table 11 show the research baselines on the entire scenes, the inshore scenes and the offshore scenes, respectively. Figure 15 shows the corresponding precision–recall curves on the entire scenes, the inshore scenes and the offshore scenes.

Table 9.

Research baselines of the entire scenes. Pd: Detection probability; Pf: False alarm probability; Pm: Missed detection probability; mAP: mean Average Precision.

Table 10.

Research baselines of the inshore scenes.

Table 11.

Research baselines of the offshore scenes.

Figure 15.

Precision–recall curves. (a) Entire scenes; (b) inshore scenes; (c) offshore scenes.

- If using mAP as the accuracy criterion, among the 30 detectors, the best on the entire scenes is 75.74% of Double-Head R-CNN, that on the inshore scenes is 47.70% of CBAM Faster R-CNN, and that on the offshore scenes is 91.34% of Double-Head R-CNN. If using F1 as the accuracy criterion, the best on the entire scenes is 0.79 of CARAFE Cascade R-CNN and CBAM Faster R-CNN, that on the inshore scenes is 0.59 of Faster R-CNN, and that on the offshore scenes is 0.90 of CARAFE Cascade R-CNN and CBAM Faster R-CNN. If using Pd as the accuracy criterion, the best on the entire scenes is 78.47% of Double-Head R-CNN, that on the inshore scenes is 54.25% of CBAM Faster R-CNN, and that on the offshore scenes is 93.18% of Double-Head R-CNN.

- There is a huge accuracy gap between the inshore scenes and the offshore ones, e.g., the inshore accuracy of Faster R-CNN is far inferior to its offshore accuracy (46.76% mAP << 89.99% mAP and 0.59 F1 << 0.87 F1). This phenomenon is consistent with common sense, because ships in the inshore scenes are harder to detect than those in the offshore scenes, due to the severe interference of land, harbor facilities, etc. Thus, one can use LS-SSDD-v1.0 to focus on studying inshore ship detection.

- On the entire dataset, the optimal accuracies are 75.74% mAP of Double-Head R-CNN, 0.79 F1 of CARAFE Cascade R-CNN and CBAM Faster R-CNN, and 78.47% Pd of Double-Head R-CNN. Therefore, there is still considerable room for future research. In contrast, accuracy on the existing four datasets has almost reached a satisfactory level (about 90% mAP), e.g., for SSDD, the existing open reports [1,2,4,5,6,15] have reached >95% mAP, so related scholars may find it difficult to achieve further notable improvements on them. In particular, it needs to be clarified that the accuracies on LS-SSDD-v1.0 are universally lower than those on the other datasets when the same detectors are used. This is because small ship detection in large-scale space-borne SAR images is a challenging task, not because of problems in our annotation process. In fact, the seven steps in Section 3 have been able to guarantee the authenticity of the dataset annotation. In short, LS-SSDD-v1.0 can promote a new round of SAR ship detection research.