Detection of Irrigated and Rainfed Crops in Temperate Areas Using Sentinel-1 and Sentinel-2 Time Series

Abstract

1. Introduction

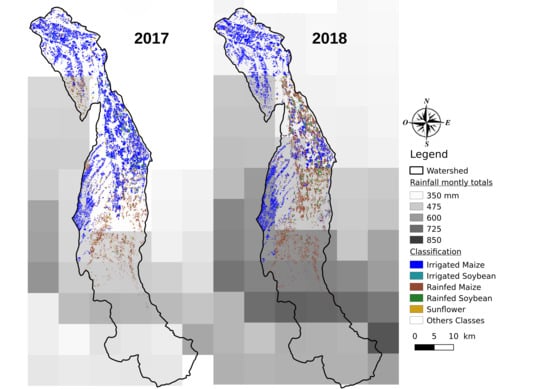

2. Study Site and Dataset

2.1. The Reference Dataset

2.2. Sentinel-2

2.3. Sentinel-1

2.4. Meteorological Data

3. Methods

3.1. Feature Computation

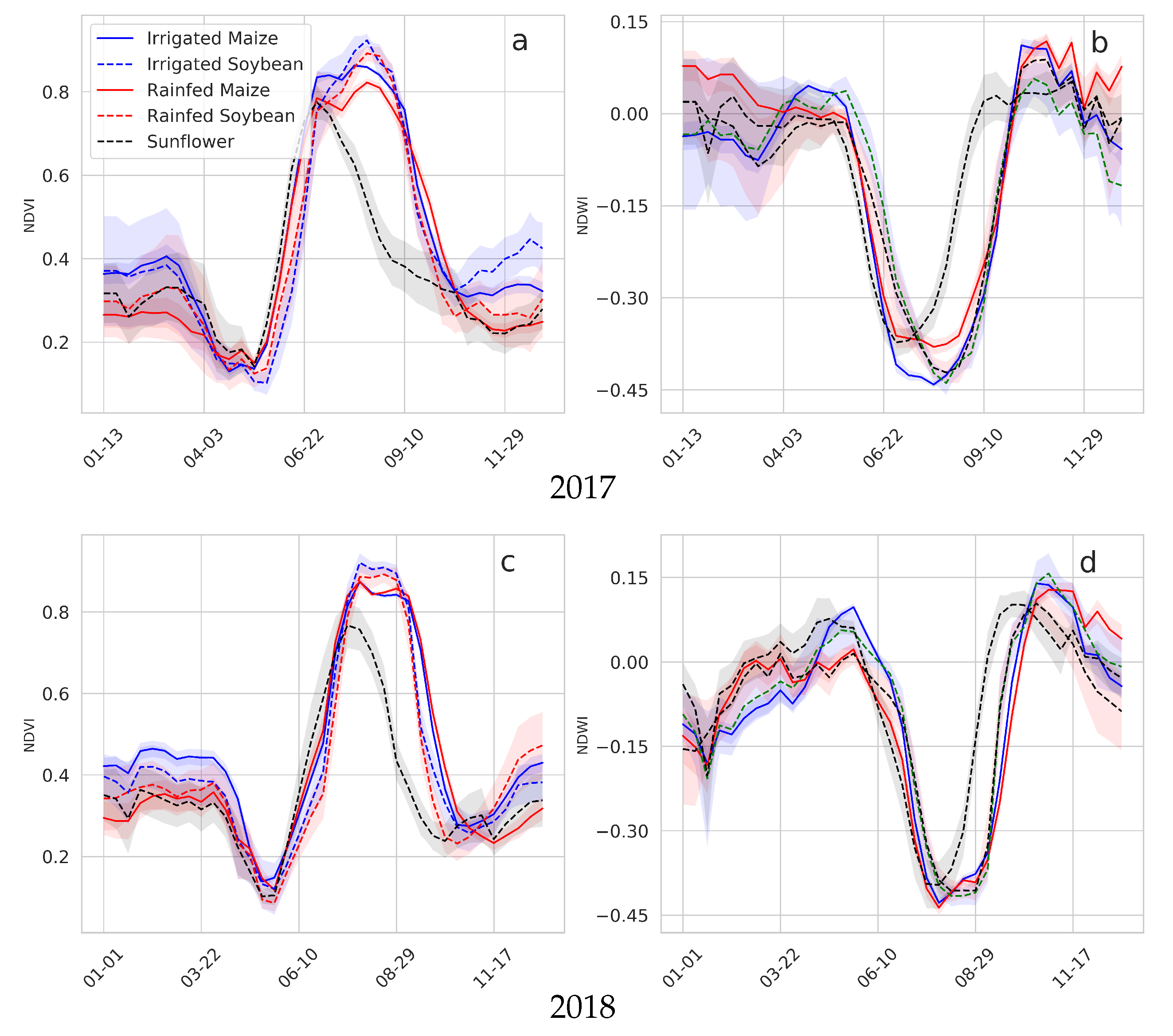

3.1.1. Optical Features

3.1.2. Radar Features

3.1.3. Cumulative Indices

3.2. Classification

3.3. Scenarios

- Scenario 1: monthly cumulative SAR features only (VH, VV, VH/VV), referred to as “SAR only” in Figure 2;

- Scenario 2: monthly cumulative Sentinel-2 features only (NDVI, NDRE, NDWI), referred to as “Optical only” in Figure 2;

- Scenario 3: monthly cumulative optical and SAR features, referred to as “Optical and SAR” in Figure 2;

- Scenario 4: Scenario 3 plus cumulative rainfall, referred to as “Optical, SAR and Rainfall” in Figure 2;

- Scenario 5: 10-day optical and SAR features (not cumulative), referred to as “10-day Optical and SAR” in Figure 2.
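The cumulative scenarios differ only in which feature groups are stacked before classification. The following is a minimal sketch of that assembly, not the authors' implementation. It assumes eight monthly periods (chosen only because 3 features × 8 months reproduces the 24, 24, 48 and 56 feature counts reported for Scenarios 1 to 4) and assumes that a "cumulative" feature is the running sum of the monthly values. The function names and the random inputs are hypothetical.

```python
import numpy as np

N_MONTHS = 8  # assumption: 3 features x 8 months = 24 features per sensor


def cumulative(monthly):
    """Running sum along the time axis (assumed meaning of 'cumulative')."""
    return np.cumsum(monthly, axis=-1)


def build_scenarios(optical, sar, rainfall):
    """Stack feature groups for Scenarios 1 to 4.

    optical, sar: arrays of shape (n_pixels, 3, N_MONTHS)
    rainfall:     array of shape (n_pixels, N_MONTHS)
    """
    n = optical.shape[0]
    opt = cumulative(optical).reshape(n, -1)   # NDVI, NDRE, NDWI
    rad = cumulative(sar).reshape(n, -1)       # VV, VH, VH/VV
    rain = cumulative(rainfall)
    return {
        1: rad,                                # SAR only
        2: opt,                                # Optical only
        3: np.hstack([opt, rad]),              # Optical and SAR
        4: np.hstack([opt, rad, rain]),        # Optical, SAR and Rainfall
    }


# Hypothetical random inputs, used only to check the feature counts.
rng = np.random.default_rng(0)
n_pixels = 5
scenarios = build_scenarios(
    rng.random((n_pixels, 3, N_MONTHS)),
    rng.random((n_pixels, 3, N_MONTHS)),
    rng.random((n_pixels, N_MONTHS)),
)
feature_counts = {k: v.shape[1] for k, v in scenarios.items()}
print(feature_counts)
```

Scenario 5 is left out of the sketch because it uses the 10-day features directly rather than cumulative ones.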

3.4. Validation

- Accuracy (the per-class precision, also known as user's accuracy) is the ratio between the correctly classified pixels of a class and all pixels classified as that class, and

- Recall is the ratio between the correctly classified pixels of a class and the total number of reference pixels of that class.
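The two ratios above can be read directly off a confusion matrix. The sketch below computes them per class and combines them into an F-score, assuming the standard harmonic-mean definition, F = 2 · Accuracy · Recall / (Accuracy + Recall). The function name and the 2-class matrix are hypothetical, and classes with no predicted or no reference pixels are not handled.

```python
import numpy as np


def per_class_scores(confusion):
    """Per-class accuracy (precision), recall and F-score.

    confusion[i, j] = number of pixels of reference class i predicted as class j.
    """
    confusion = np.asarray(confusion, dtype=float)
    correct = np.diag(confusion)
    predicted = confusion.sum(axis=0)   # all pixels classified as each class
    reference = confusion.sum(axis=1)   # all reference pixels of each class
    accuracy = correct / predicted      # "Accuracy" as defined above
    recall = correct / reference
    fscore = 2 * accuracy * recall / (accuracy + recall)
    return accuracy, recall, fscore


# Hypothetical 2-class confusion matrix (rows: reference, columns: prediction).
cm = [[80, 20],
      [10, 90]]
accuracy, recall, fscore = per_class_scores(cm)
print(accuracy, recall, fscore)
```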

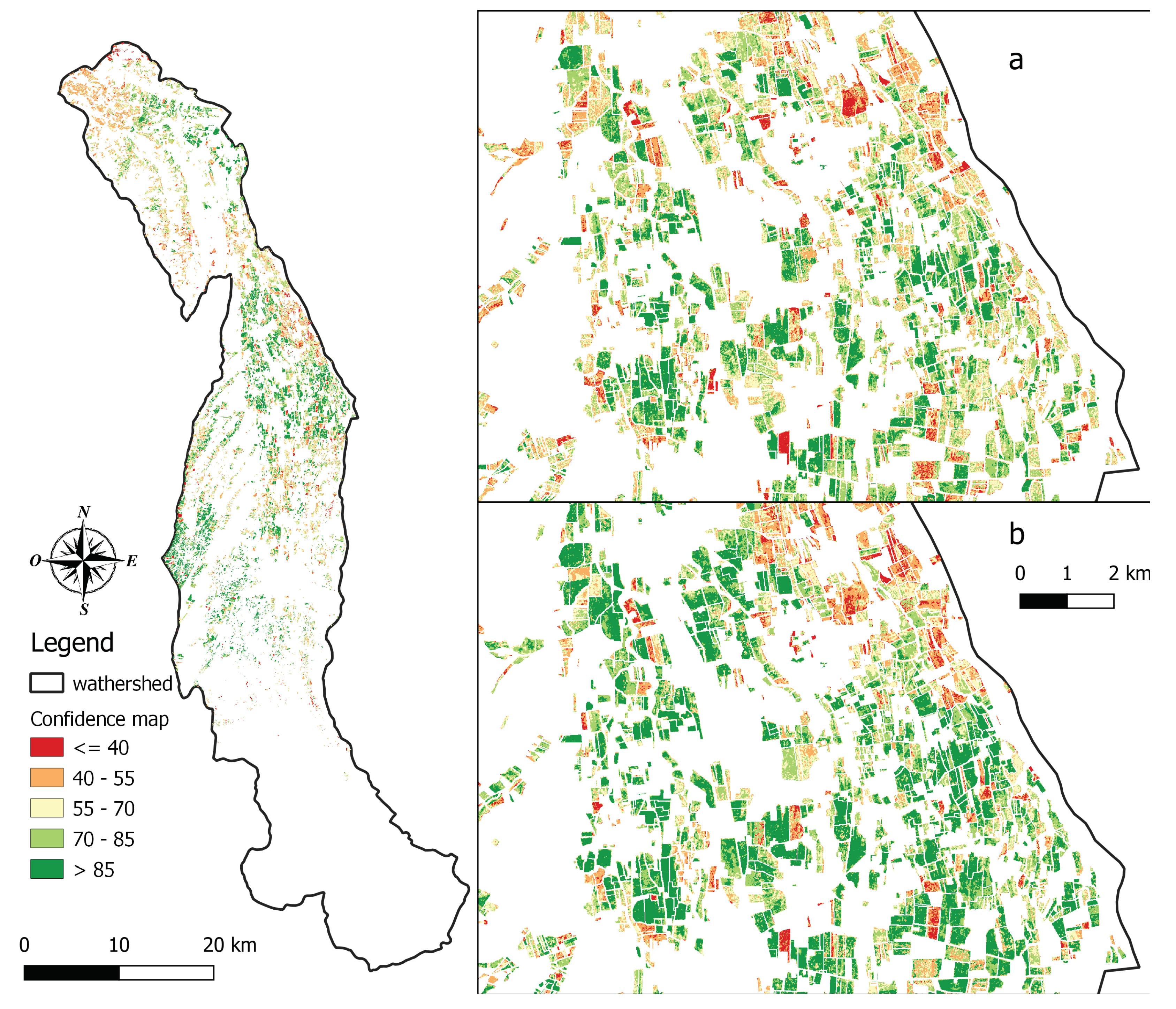

3.5. Confidence Map

3.6. Postprocessing

4. Results

4.1. Performance of Each Scenario

4.2. F-score Results

4.3. Analysis of Confusion Between Classes for Irrigated Crops

4.4. Confidence Map

4.5. Regional Statistics

5. Discussion

5.1. Optical and/or Radar Features

5.2. Impact of Cumulative Indices

5.3. Contribution of Rainfall Features

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Growing Year | Jan. | Feb. | Mar. | April | May | June | July | Aug. | Sept. | Oct. | Nov. | Dec. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Maize | | | | | | | | | | | | |
| Soybean | | | | | | | | | | | | |
| Sunflower | | | | | | | | | | | | |

| Class Label | Number of Plots, 2017 | Number of Plots, 2018 | Total Area Sampled (ha), 2017 | Total Area Sampled (ha), 2018 | Distribution (%), 2017 | Distribution (%), 2018 | RPG (%), 2017 | RPG (%), 2018 |
|---|---|---|---|---|---|---|---|---|
| Maize irrigated | 526 | 639 | 943 | 727 | 60 | 48.1 | 82.8 | 84 |
| Maize rainfed | 198 | 175 | 302 | 500 | 19.2 | 33.1 | | |
| Soybean irrigated | 31 | 41 | 54 | 85 | 3.4 | 5.6 | 8.2 | 9.6 |
| Soybean rainfed | 27 | 38 | 77 | 151 | 4.9 | 10 | | |
| Sunflower | 50 | 49 | 120 | 40 | 7.6 | 2.7 | 8.6 | 5.5 |

| Name | Description | Equation |
|---|---|---|
| NDVI | Normalized Difference Vegetation Index | (NIR − Red)/(NIR + Red) |
| NDRE | Normalized Difference Red-Edge | (NIR − Red-Edge)/(NIR + Red-Edge) |
| NDWI | Normalized Difference Water Index | (NIR − SWIR)/(NIR + SWIR) |
| VV | Vertical-Vertical Polarisation | - |
| VH | Vertical-Horizontal Polarisation | - |
| VH/VV | Ratio | - |
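The three optical indices in the table share the same normalized-difference form, so they can be sketched with one helper. This is an illustration under assumptions: the mapping of NIR, Red, Red-Edge and SWIR to specific Sentinel-2 bands is not given here and is left to the caller, the VH/VV ratio is assumed to be taken on linear-scale backscatter rather than dB, and all function names and sample values are hypothetical.

```python
import numpy as np


def normalized_difference(a, b):
    """(a - b) / (a + b), element-wise."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return (a - b) / (a + b)


def optical_indices(nir, red, red_edge, swir):
    """NDVI, NDRE and NDWI as written in the table above."""
    return {
        "NDVI": normalized_difference(nir, red),
        "NDRE": normalized_difference(nir, red_edge),
        "NDWI": normalized_difference(nir, swir),
    }


def vh_vv_ratio(vh, vv):
    """VH/VV ratio (assumption: inputs are linear-scale, not dB)."""
    return np.asarray(vh, dtype=float) / np.asarray(vv, dtype=float)


# Hypothetical reflectance and backscatter values for a single pixel.
indices = optical_indices(nir=[0.40], red=[0.10], red_edge=[0.20], swir=[0.15])
ratio = vh_vv_ratio(vh=[0.02], vv=[0.10])
print(indices, ratio)
```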

| Feature Type | ID | Scenario | Number of Features |
|---|---|---|---|
| Cumulative | 1 | SAR only | 24 |
| Cumulative | 2 | Optical only | 24 |
| Cumulative | 3 | Optical and SAR | 48 |
| Cumulative | 4 | Optical, SAR and rainfall data | 56 |
| Not cumulative | 5 | 10-day Optical and SAR | 385 |

| Class Label | 2017, Training | 2017, Validation | 2018, Training | 2018, Validation |
|---|---|---|---|---|
| Irrigated Maize | 10,000 | 51,731 | 10,000 | 33,651 |
| Rainfed Maize | 10,000 | 12,606 | 10,000 | 24,899 |
| Irrigated Soybean | 3388 | 2173 | 3844 | 4973 |
| Rainfed Soybean | 3461 | 4437 | 7319 | 7464 |
| Sunflower | 6502 | 4853 | 2173 | 1662 |

| Scenario | ID | Nb. of Features | CPU Time (h), Model Learning | CPU Time (h), Classification | RAM (GB), Model Learning | RAM (GB), Classification |
|---|---|---|---|---|---|---|
| SAR | 1 | 24 | 4.5 | 176 | 0.21 | 19 |
| Optical | 2 | 24 | 2.2 | 150 | 0.14 | 19 |
| Optical & SAR | 3 | 48 | 4.5 | 181 | 0.23 | 21 |
| Optical, SAR and Rainfall | 4 | 54 | 3.5 | 164 | 0.22 | 21 |
| 10-day Optical & SAR | 5 | 385 | 6.6 | 739 | 0.57 | 22 |

| Class Label | 2017, Scenario 3 | 2017, Scenario 4 | 2018, Scenario 3 | 2018, Scenario 4 |
|---|---|---|---|---|
| Irrigated maize | | | | |
| Rainfed maize | | | | |
| Irrigated soybean | | | | |
| Rainfed soybean | | | | |
| Sunflower | | | | |

| Class Label | 2017, RPG | 2017, Scenario 4 | 2017, Diff. | 2017, Scenario 5 | 2017, Diff. | 2018, RPG | 2018, Scenario 4 | 2018, Diff. | 2018, Scenario 5 | 2018, Diff. |
|---|---|---|---|---|---|---|---|---|---|---|
| Maize | 20,987 | 21,479 | +2% | 20,601 | −2% | 20,242 | 20,695 | +2% | 20,149 | −1% |
| Sunflower | 2210 | 1973 | −11% | 2183 | −1% | 1242 | 1131 | −9% | 1339 | +8% |
| Soybean | 2301 | 1402 | −39% | 2445 | +6% | 2326 | 2001 | +2% | 2339 | 0% |
| Total | 25,498 | 24,854 | −3% | 25,229 | −1% | 23,811 | 23,827 | 0% | 23,827 | 0% |
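The "Diff." columns appear to be the relative difference between a scenario's estimate and the RPG value, rounded to the nearest percent. The sketch below works under that assumption, which the table does not state explicitly; the function name is hypothetical, and the inputs are the 2017 maize and sunflower values for Scenario 4.

```python
def relative_difference(estimate, reference):
    """Relative difference of an estimate with respect to a reference, in percent."""
    return 100.0 * (estimate - reference) / reference


# 2017 values for Scenario 4 versus RPG, taken from the table above.
maize_2017 = relative_difference(21479, 20987)
sunflower_2017 = relative_difference(1973, 2210)
print(f"maize: {maize_2017:+.0f}%  sunflower: {sunflower_2017:+.0f}%")
```

Rounded to the nearest percent, these two values match the +2% and −11% reported for 2017.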

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pageot, Y.; Baup, F.; Inglada, J.; Baghdadi, N.; Demarez, V. Detection of Irrigated and Rainfed Crops in Temperate Areas Using Sentinel-1 and Sentinel-2 Time Series. Remote Sens. 2020, 12, 3044. https://doi.org/10.3390/rs12183044
