Recent Applications of Landsat 8/OLI and Sentinel-2/MSI for Land Use and Land Cover Mapping: A Systematic Review

Abstract

1. Introduction

2. Landsat 8/OLI and Sentinel-2/MSI Characteristics

3. Background

4. Material and Methods

5. Results and Discussion

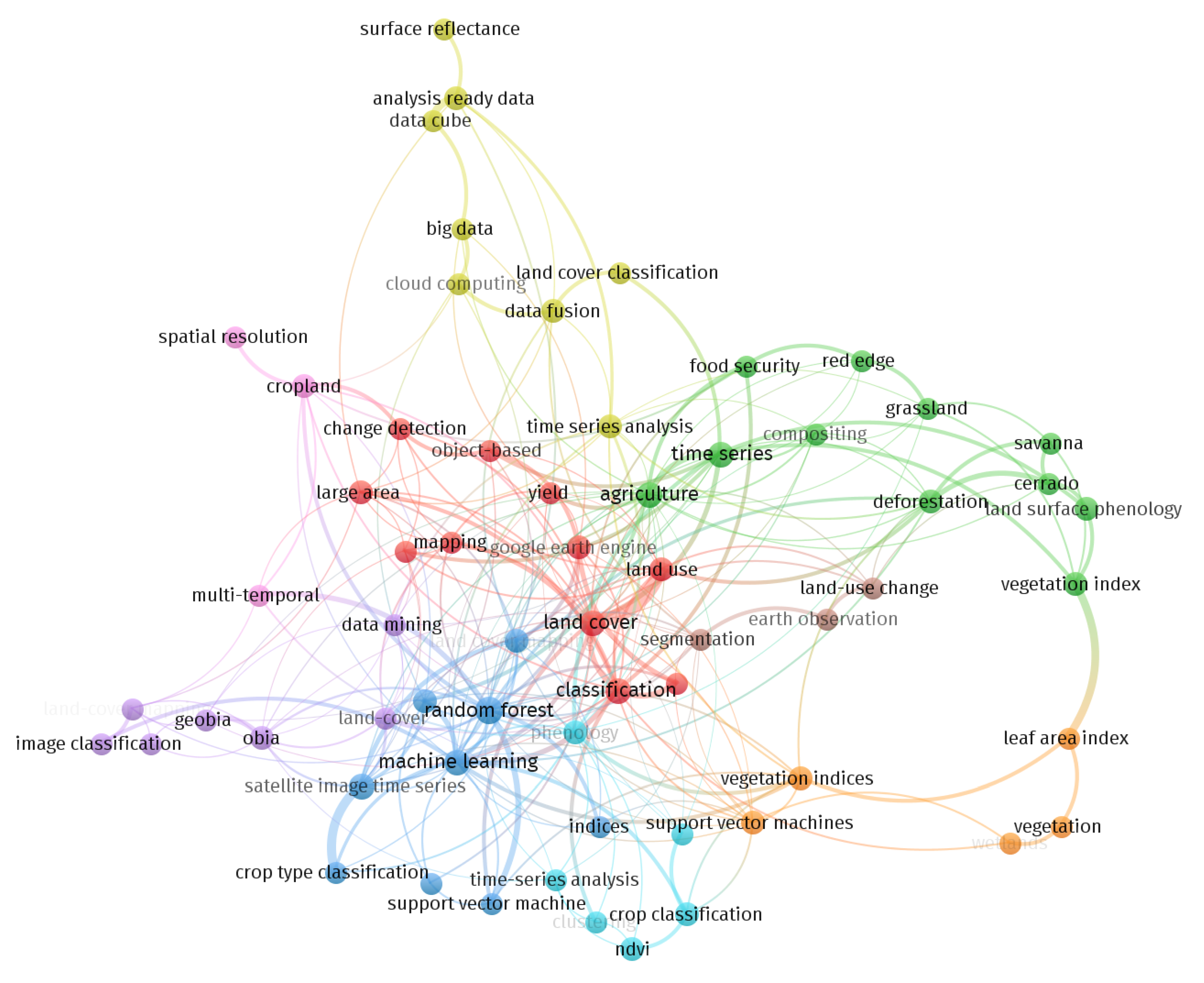

5.1. Consolidated Trends

5.1.1. SITS and Pixel-Based Approaches

5.1.2. SITS and Geographical Object-Based Approaches

5.1.3. Conventional Spectral Bands and VIs

5.1.4. Landsat 8 and Sentinel-2 Data Integration

5.2. Gaps and Challenges

5.2.1. The Gap of Representative Samples

5.2.2. Challenges to Deal with Big Data and Landsat-Sentinel’s Data Integration

5.3. Trends to Be Further Explored

5.3.1. Less Frequently Used Spectral Bands and VIs

5.3.2. Phenological Metrics

5.3.3. Data Hierarchy and Ancillary Data

5.3.4. Data Cubes and ARD

5.3.5. Incorporating Radar Data

5.4. Summary of Applications

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Gómez, C.; White, J.C.; Wulder, M.A. Optical remotely sensed time series data for land cover classification: A review. ISPRS J. Photogramm. Remote Sens. 2016, 116, 55–72. [Google Scholar] [CrossRef]

- Lambin, E.; Rounsevell, M.; Geist, H. Are agricultural land-use models able to predict changes in land-use intensity? Agric. Ecosyst. Environ. 2000, 82, 321–331. [Google Scholar] [CrossRef]

- Steinhausen, M.J.; Wagner, P.D.; Narasimhan, B.; Waske, B. Combining Sentinel-1 and Sentinel-2 data for improved land use and land cover mapping of monsoon regions. Int. J. Appl. Earth Obs. Geoinf. 2018, 73, 595–604. [Google Scholar] [CrossRef]

- Becker-Reshef, I.; Justice, C.; Barker, B.; Humber, M.; Rembold, F.; Bonifacio, R.; Zappacosta, M.; Budde, M.; Magadzire, T.; Shitote, C.; et al. Strengthening agricultural decisions in countries at risk of food insecurity: The GEOGLAM Crop Monitor for Early Warning. Remote Sens. Environ. 2020, 237, 111553. [Google Scholar] [CrossRef]

- Xu, Y.; Yu, L.; Zhao, F.R.; Cai, X.; Zhao, J.; Lu, H.; Gong, P. Tracking annual cropland changes from 1984 to 2016 using time-series Landsat images with a change-detection and post-classification approach: Experiments from three sites in Africa. Remote Sens. Environ. 2018, 218, 13–31. [Google Scholar] [CrossRef]

- Feng, Q.; Gong, J.; Liu, J.; Li, Y. Monitoring Cropland Dynamics of the Yellow River Delta based on Multi-Temporal Landsat Imagery over 1986 to 2015. Sustainability 2015, 7, 14834–14858. [Google Scholar] [CrossRef]

- Szantoi, Z.; Geller, G.N.; Tsendbazar, N.E.; See, L.; Griffiths, P.; Fritz, S.; Gong, P.; Herold, M.; Mora, B.; Obregón, A. Addressing the need for improved land cover map products for policy support. Environ. Sci. Policy 2020, 112, 28–35. [Google Scholar] [CrossRef]

- Whitcraft, A.K.; Becker-Reshef, I.; Justice, C.O.; Gifford, L.; Kavvada, A.; Jarvis, I. No pixel left behind: Toward integrating Earth Observations for agriculture into the United Nations Sustainable Development Goals framework. Remote Sens. Environ. 2019, 235, 111470. [Google Scholar] [CrossRef]

- Maus, V.; Câmara, G.; Appel, M.; Pebesma, E. dtwSat: Time-Weighted Dynamic Time Warping for Satellite Image Time Series Analysis in R. J. Stat. Softw. 2019, 88, 1–3. [Google Scholar] [CrossRef]

- Petitjean, F.; Kurtz, C.; Passat, N.; Gançarski, P. Spatio-temporal reasoning for the classification of satellite image time series. Pattern Recognit. Lett. 2012, 33, 1805–1815. [Google Scholar] [CrossRef]

- Khatami, R.; Mountrakis, G.; Stehman, S.V. A meta-analysis of remote sensing research on supervised pixel-based land-cover image classification processes: General guidelines for practitioners and future research. Remote Sens. Environ. 2016, 177, 89–100. [Google Scholar] [CrossRef]

- Bolton, D.K.; Gray, J.M.; Melaas, E.K.; Moon, M.; Eklundh, L.; Friedl, M.A. Continental-scale land surface phenology from harmonized Landsat 8 and Sentinel-2 imagery. Remote Sens. Environ. 2020, 240, 111685. [Google Scholar] [CrossRef]

- Wang, L.; Qi, F.; Shen, X.; Huang, J. Monitoring Multiple Cropping Index of Henan Province, China Based on MODIS-EVI Time Series Data and Savitzky-Golay Filtering Algorithm. Comput. Model. Eng. Sci. 2019, 119, 331–348. [Google Scholar] [CrossRef]

- Chaves, M.; Alves, M.; Oliveira, M.; Sáfadi, T. A Geostatistical Approach for Modeling Soybean Crop Area and Yield Based on Census and Remote Sensing Data. Remote Sens. 2018, 10, 680. [Google Scholar] [CrossRef]

- Picoli, M.C.A.; Camara, G.; Sanches, I.; Simões, R.; Carvalho, A.; Maciel, A.; Coutinho, A.; Esquerdo, J.; Antunes, J.; Begotti, R.A.; et al. Big earth observation time series analysis for monitoring Brazilian agriculture. ISPRS J. Photogramm. Remote Sens. 2018, 145, 1–12. [Google Scholar] [CrossRef]

- Simoes, R.; Picoli, M.C.A.; Camara, G.; Maciel, A.; Santos, L.; Andrade, P.R.; Sánchez, A.; Ferreira, K.; Carvalho, A. Land use and cover maps for Mato Grosso State in Brazil from 2001 to 2017. Sci. Data 2020, 7, 1–10. [Google Scholar] [CrossRef] [PubMed]

- Chen, J.; Chen, J.; Liao, A.; Cao, X.; Chen, L.; Chen, X.; He, C.; Han, G.; Peng, S.; Lu, M.; et al. Global land cover mapping at 30 m resolution: A POK-based operational approach. ISPRS J. Photogramm. Remote Sens. 2015, 103, 7–27. [Google Scholar] [CrossRef]

- Huang, C.; Zhang, C.; He, Y.; Liu, Q.; Li, H.; Su, F.; Liu, G.; Bridhikitti, A. Land Cover Mapping in Cloud-Prone Tropical Areas Using Sentinel-2 Data: Integrating Spectral Features with Ndvi Temporal Dynamics. Remote Sens. 2020, 12, 1163. [Google Scholar] [CrossRef]

- Bégué, A.; Arvor, D.; Bellon, B.; Betbeder, J.; de Abelleyra, D.; Ferraz, R.P.; Lebourgeois, V.; Lelong, C.; Simões, M.; Verón, S.R. Remote sensing and cropping practices: A review. Remote Sens. 2018, 10, 99. [Google Scholar] [CrossRef]

- Kussul, N.; Lavreniuk, M.; Skakun, S.; Shelestov, A. Deep Learning Classification of Land Cover and Crop Types Using Remote Sensing Data. IEEE Geosci. Remote Sens. Lett. 2017, 14, 778–782. [Google Scholar] [CrossRef]

- Wang, Q.; Blackburn, G.A.; Onojeghuo, A.O.; Dash, J.; Zhou, L.; Zhang, Y.; Atkinson, P.M. Fusion of Landsat 8 OLI and Sentinel-2 MSI Data. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3885–3899. [Google Scholar] [CrossRef]

- Claverie, M.; Ju, J.; Masek, J.G.; Dungan, J.L.; Vermote, E.F.; Roger, J.C.; Skakun, S.V.; Justice, C. The Harmonized Landsat and Sentinel-2 surface reflectance data set. Remote Sens. Environ. 2018, 219, 145–161. [Google Scholar] [CrossRef]

- Wolanin, A.; Camps-valls, G.; Gómez-chova, L.; Mateo-garcía, G.; Tol, C.V.D.; Zhang, Y.; Guanter, L. Estimating crop primary productivity with Sentinel-2 and Landsat 8 using machine learning methods trained with radiative transfer simulations. Remote Sens. Environ. 2019, 225, 441–457. [Google Scholar] [CrossRef]

- Inglada, J.; Vincent, A.; Arias, M.; Marais-Sicre, C. Improved early crop type identification by joint use of high temporal resolution sar and optical image time series. Remote Sens. 2016, 8, 362. [Google Scholar] [CrossRef]

- Woodcock, C.E.; Loveland, T.R.; Herold, M. Preface: Time series analysis imagery special issue. Remote Sens. Environ. 2020, 238, 111613. [Google Scholar] [CrossRef]

- Ma, L.; Li, M.; Ma, X.; Cheng, L.; Du, P.; Liu, Y. A review of supervised object-based land-cover image classification. ISPRS J. Photogramm. Remote Sens. 2017, 130, 277–293. [Google Scholar] [CrossRef]

- Chirici, G.; Mura, M.; McInerney, D.; Py, N.; Tomppo, E.O.; Waser, L.T.; Travaglini, D.; McRoberts, R.E. A meta-analysis and review of the literature on the k-Nearest Neighbors technique for forestry applications that use remotely sensed data. Remote Sens. Environ. 2016, 176, 282–294. [Google Scholar] [CrossRef]

- Zeng, L.; Wardlow, B.D.; Xiang, D.; Hu, S.; Li, D. A review of vegetation phenological metrics extraction using time-series, multispectral satellite data. Remote Sens. Environ. 2020, 237, 111511. [Google Scholar] [CrossRef]

- Wulder, M.A.; Hilker, T.; White, J.C.; Coops, N.C.; Masek, J.G.; Pflugmacher, D.; Crevier, Y. Virtual constellations for global terrestrial monitoring. Remote Sens. Environ. 2015, 170, 62–76. [Google Scholar] [CrossRef]

- Piedelobo, L.; Hernández-López, D.; Ballesteros, R.; Chakhar, A.; Del Pozo, S.; González-Aguilera, D.; Moreno, M.A. Scalable pixel-based crop classification combining Sentinel-2 and Landsat-8 data time series: Case study of the Duero river basin. Agric. Syst. 2019, 171, 36–50. [Google Scholar] [CrossRef]

- European Space Agency. Sentinel-2: Resolution and Swath, 2020. Available online: https://sentinel.esa.int/web/sentinel/missions/sentinel-2/instrument-payload/resolution-and-swath (accessed on 18 August 2020).

- National Aeronautics and Space Administration. Spectral Response of the Operational Land Imager In-Band, Band-Average Relative Spectral Response, 2020. Available online: https://landsat.gsfc.nasa.gov/preliminary-spectral-response-of-the-operational-land-imager-in-band-band-average-relative-spectral-response/ (accessed on 18 August 2020).

- Defourny, P.; Bontemps, S.; Bellemans, N.; Cara, C.; Dedieu, G.; Guzzonato, E.; Hagolle, O.; Inglada, J.; Nicola, L.; Rabaute, T.; et al. Near real-time agriculture monitoring at national scale at parcel resolution: Performance assessment of the Sen2-Agri automated system in various cropping systems around the world. Remote Sens. Environ. 2019, 221, 551–568. [Google Scholar] [CrossRef]

- Maponya, M.G.; van Niekerk, A.; Mashimbye, Z.E. Pre-harvest classification of crop types using a Sentinel-2 time-series and machine learning. Comput. Electron. Agric. 2020, 169, 105164. [Google Scholar] [CrossRef]

- Xie, Q.; Dash, J.; Huete, A.; Jiang, A.; Yin, G.; Ding, Y.; Peng, D.; Hall, C.C.; Brown, L.; Shi, Y.; et al. Retrieva of crop biophysical parameters from Sentinel-2 remote sensing imagery. Int. J. Appl. Earth Obs. Geoinf. 2019, 80, 187–195. [Google Scholar] [CrossRef]

- Sánchez-Espinosa, A.; Schröder, C. Land use and land cover mapping in wetlands one step closer to the ground: Sentinel-2 versus Landsat 8. J. Environ. Manag. 2019, 247, 484–498. [Google Scholar] [CrossRef] [PubMed]

- Xie, Q.; Dash, J.; Huang, W.; Peng, D.; Qin, Q.; Mortimer, H.; Casa, R.; Pignatti, S.; Laneve, G.; Pascucci, S.; et al. Vegetation Indices Combining the Red and Red-Edge Spectral Information for Leaf Area Index Retrieval. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1482–1492. [Google Scholar] [CrossRef]

- Fernández-Manso, A.; Fernández-Manso, O.; Quintano, C. SENTINEL-2A red-edge spectral indices suitability for discriminating burn severity. Int. J. Appl. Earth Obs. Geoinf. 2016, 50, 170–175. [Google Scholar] [CrossRef]

- Munyati, C.; Balzter, H.; Economon, E. Correlating Sentinel-2 MSI-derived vegetation indices with in-situ reflectance and tissue macronutrients in savannah grass. Int. J. Remote Sens. 2020, 41, 3820–3844. [Google Scholar] [CrossRef]

- Sonobe, R.; Yamaya, Y.; Tani, H.; Wang, X.; Kobayashi, N.; Mochizuki, K.I. Crop classification from Sentinel-2-derived vegetation indices using ensemble learning. J. Appl. Remote Sens. 2018, 12, 1. [Google Scholar] [CrossRef]

- Li, J.; Roy, D.P. A Global Analysis of Sentinel-2A, Sentinel-2B and Landsat-8 Data Revisit Intervals and Implications for Terrestrial Monitoring. Remote Sens. 2017, 9, 902. [Google Scholar] [CrossRef]

- Skakun, S.; Vermote, E.; Roger, J.C.; Franch, B. Combined Use of Landsat-8 and Sentinel-2A Images for Winter Crop Mapping and Winter Wheat Yield Assessment at Regional Scale. AIMS Geosci. 2017, 3, 163–186. [Google Scholar] [CrossRef]

- Wulder, M.A.; Loveland, T.R.; Roy, D.P.; Crawford, C.J.; Masek, J.G.; Woodcock, C.E.; Allen, R.G.; Anderson, M.C.; Belward, A.S.; Cohen, W.B.; et al. Current status of Landsat program, science, and applications. Remote Sens. Environ. 2019, 225, 127–147. [Google Scholar] [CrossRef]

- See, L.; Fritz, S.; You, L.; Ramankutty, N.; Herrero, M.; Justice, C.; Becker-Reshef, I.; Thornton, P.; Erb, K.; Gong, P.; et al. Improved global cropland data as an essential ingredient for food security. Glob. Food Secur. 2015, 4, 37–45. [Google Scholar] [CrossRef]

- Belgiu, M.; Drăgut, L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31. [Google Scholar] [CrossRef]

- Maxwell, A.E.; Warner, T.A.; Fang, F. Implementation of machine-learning classification in remote sensing: An applied review. Int. J. Remote Sens. 2018, 39, 2784–2817. [Google Scholar] [CrossRef]

- Wulder, M.A.; Coops, N.C.; Roy, D.P.; White, J.C.; Hermosilla, T. Land cover 2.0. Int. J. Remote Sens. 2018, 39, 4254–4284. [Google Scholar] [CrossRef]

- Pandey, P.C.; Koutsias, N.; Petropoulos, G.P.; Srivastava, P.K.; Dor, E.B. Land use/land cover in view of earth observation: Data sources, input dimensions, and classifiers—a review of the state of the art. Geocarto Int. 2019, 1–32. [Google Scholar] [CrossRef]

- Sudmanns, M.; Tiede, D.; Lang, S.; Bergstedt, H.; Trost, G.; Augustin, H.; Baraldi, A.; Blaschke, T. Big Earth data: Disruptive changes in Earth observation data management and analysis? Int. J. Digital Earth 2019, 1–19. [Google Scholar] [CrossRef]

- Weiss, M.; Jacob, F.; Duveiller, G. Remote sensing for agricultural applications: A meta-review. Remote Sens. Environ. 2020, 236, 111402. [Google Scholar] [CrossRef]

- Yu, M.; Bambacus, M.; Cervone, G.; Clarke, K.; Duffy, D.; Huang, Q.; Li, J.; Li, W.; Li, Z.; Liu, Q.; et al. Spatiotemporal event detection: A review. Int. J. Digital Earth 2020, 1–27. [Google Scholar] [CrossRef]

- Phiri, D.; Simwanda, M.; Salekin, S.; Nyirenda, V.R.; Murayama, Y.; Ranagalage, M. Sentinel-2 Data for Land Cover/Use Mapping: A Review. Remote Sens. 2020, 12, 2291. [Google Scholar] [CrossRef]

- Moher, D.; Liberati, A.; Tetzlaff, J.; and, D.G.A. Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. PLoS Med. 2009, 6, e1000097. [Google Scholar] [CrossRef] [PubMed]

- Mengist, W.; Soromessa, T.; Legese, G. Method for conducting systematic literature review and meta-analysis for environmental science research. MethodsX 2020, 7, 100777. [Google Scholar] [CrossRef] [PubMed]

- Bajocco, S.; Raparelli, E.; Teofili, T.; Bascietto, M.; Ricotta, C. Text Mining in Remotely Sensed Phenology Studies: A Review on Research Development, Main Topics, and Emerging Issues. Remote Sens. 2019, 11, 2751. [Google Scholar] [CrossRef]

- Doxani, G.; Vermote, E.; Roger, J.C.; Gascon, F.; Adriaensen, S.; Frantz, D.; Hagolle, O.; Hollstein, A.; Kirches, G.; Li, F.; et al. Atmospheric Correction Inter-Comparison Exercise. Remote Sens. 2018, 10, 352. [Google Scholar] [CrossRef]

- Giuliani, G.; Chatenoux, B.; De Bono, A.; Rodila, D.; Richard, J.P.; Allenbach, K.; Dao, H.; Peduzzi, P. Building an Earth Observations Data Cube: Lessons learned from the Swiss Data Cube (SDC) on generating Analysis Ready Data (ARD). Big Earth Data 2017, 1, 100–117. [Google Scholar] [CrossRef]

- Wu, Z.; Snyder, G.; Vadnais, C.; Arora, R.; Babcock, M.; Stensaas, G.; Doucette, P.; Newman, T. User needs for future Landsat missions. Remote Sens. Environ. 2019, 231, 111214. [Google Scholar] [CrossRef]

- Tian, F.; Wu, B.; Zeng, H.; Zhang, X.; Xu, J. Efficient Identification of Corn Cultivation Area with Multitemporal Synthetic Aperture Radar and Optical Images in the Google Earth Engine Cloud Platform. Remote Sens. 2019, 11, 629. [Google Scholar] [CrossRef]

- Ye, S.; Pontius, R.G.; Rakshit, R. A review of accuracy assessment for object-based image analysis: From per-pixel to per-polygon approaches. ISPRS J. Photogramm. Remote Sens. 2018, 141, 137–147. [Google Scholar] [CrossRef]

- Csillik, O.; Belgiu, M.; Asner, G.P.; Kelly, M. Object-Based Time-Constrained Dynamic Time Warping Classification of Crops Using Sentinel-2. Remote Sens. 2019, 11, 1257. [Google Scholar] [CrossRef]

- Peña, M.A.; Liao, R.; Brenning, A. Using spectrotemporal indices to improve the fruit-tree crop classification accuracy. ISPRS J. Photogramm. Remote Sens. 2017, 128, 158–169. [Google Scholar] [CrossRef]

- Niazmardi, S.; Homayouni, S.; Safari, A.; McNairn, H.; Shang, J.; Beckett, K. Histogram-based spatio-temporal feature classification of vegetation indices time-series for crop mapping. Int. J. Appl. Earth Obs. Geoinf. 2018, 72, 34–41. [Google Scholar] [CrossRef]

- Puletti, N.; Chianucci, F.; Castaldi, C.; Puletti, N.; Chianucci, F.; Castaldi, C. Use of Sentinel-2 for forest classification in Mediterranean environments. Ann. Silvic. Res. 2017, 42, 1–7. [Google Scholar] [CrossRef]

- Belgiu, M.; Csillik, O. Sentinel-2 cropland mapping using pixel-based and object-based time-weighted dynamic time warping analysis. Remote Sens. Environ. 2018, 204, 509–523. [Google Scholar] [CrossRef]

- Liu, L.; Xiao, X.; Qin, Y.; Wang, J.; Xu, X.; Hu, Y.; Qiao, Z. Mapping cropping intensity in China using time series Landsat and Sentinel-2 images and Google Earth Engine. Remote Sens. Environ. 2020, 239, 111624. [Google Scholar] [CrossRef]

- Xiong, J.; Thenkabail, P.S.; Tilton, J.C.; Gumma, M.K.; Teluguntla, P.; Oliphant, A.; Congalton, R.G.; Yadav, K.; Gorelick, N. Nominal 30-m cropland extent map of continental Africa by integrating pixel-based and object-based algorithms using Sentinel-2 and Landsat-8 data on Google Earth Engine. Remote Sens. 2017, 9, 1065. [Google Scholar] [CrossRef]

- Bendini, H.N.; Fonseca, L.M.G.; Schwieder, M.; Körting, T.S.; Rufin, P.; Sanches, I.D.; Leitão, P.J.; Hostert, P. Detailed agricultural land classification in the Brazilian cerrado based on phenological information from dense satellite image time series. Int. J. Appl. Earth Obs. Geoinf. 2019, 82, 101872. [Google Scholar] [CrossRef]

- Sharma, A.; Liu, X.; Yang, X.; Shi, D. A patch-based convolutional neural network for remote sensing image classification. Neural Netw. 2017, 95, 19–28. [Google Scholar] [CrossRef]

- Nasrallah, A.; Baghdadi, N.; Mhawej, M.; Faour, G.; Darwish, T.; Belhouchette, H.; Darwich, S. A Novel Approach for Mapping Wheat Areas Using High Resolution Sentinel-2 Images. Sensors 2018, 18, 2089. [Google Scholar] [CrossRef]

- Pelletier, C.; Valero, S.; Inglada, J.; Champion, N.; Dedieu, G. Assessing the robustness of Random Forests to map land cover with high resolution satellite image time series over large areas. Remote Sens. Environ. 2016, 187, 156–168. [Google Scholar] [CrossRef]

- Teluguntla, P.; Thenkabail, P.; Oliphant, A.; Xiong, J.; Gumma, M.K.; Congalton, R.G.; Yadav, K.; Huete, A. A 30-m landsat-derived cropland extent product of Australia and China using random forest machine learning algorithm on Google Earth Engine cloud computing platform. ISPRS J. Photogramm. Remote Sens. 2018, 144, 325–340. [Google Scholar] [CrossRef]

- Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16. [Google Scholar] [CrossRef]

- Toure, S.I.; Stow, D.A.; chien Shih, H.; Weeks, J.; Lopez-Carr, D. Land cover and land use change analysis using multi-spatial resolution data and object-based image analysis. Remote Sens. Environ. 2018, 210, 259–268. [Google Scholar] [CrossRef]

- Guttler, F.; Ienco, D.; Nin, J.; Teisseire, M.; Poncelet, P. A graph-based approach to detect spatiotemporal dynamics in satellite image time series. ISPRS J. Photogramm. Remote Sens. 2017, 130, 92–107. [Google Scholar] [CrossRef]

- Goodin, D.G.; Anibas, K.L.; Bezymennyi, M. Mapping land cover and land use from object-based classification: An example from a complex agricultural landscape. Int. J. Remote Sens. 2015, 36, 4702–4723. [Google Scholar] [CrossRef]

- Yan, L.; Roy, D.P. Conterminous United States crop field size quantification from multi-temporal Landsat data. Remote Sens. Environ. 2016, 172, 67–86. [Google Scholar] [CrossRef]

- Khiali, L.; Ienco, D.; Teisseire, M. Object-oriented satellite image time series analysis using a graph-based representation. Ecol. Informatics 2018, 211, 52–64. [Google Scholar] [CrossRef]

- Watkins, B.; van Niekerk, A. A comparison of object-based image analysis approaches for field boundary delineation using multi-temporal Sentinel-2 imagery. Comput. Electron. Agric. 2019, 158, 294–302. [Google Scholar] [CrossRef]

- Graesser, J.; Ramankutty, N. Detection of cropland field parcels from Landsat imagery. Remote Sens. Environ. 2017, 201, 165–180. [Google Scholar] [CrossRef]

- Yin, H.; Pflugmacher, D.; Li, A.; Li, Z.; Hostert, P. Land use and land cover change in Inner Mongolia—Understanding the effects of China’s re-vegetation programs. Remote Sens. Environ. 2018, 204, 918–930. [Google Scholar] [CrossRef]

- Shang, M.; Wang, S.X.; Zhou, Y.; Du, C. Effects of Training Samples and Classifiers on Classification of Landsat-8 Imagery. J. Indian Soc. Remote Sens. 2018, 46, 1333–1340. [Google Scholar] [CrossRef]

- Clark, M.L. Comparison of multi-seasonal Landsat 8, Sentinel-2 and hyperspectral images for mapping forest alliances in Northern California. ISPRS J. Photogramm. Remote Sens. 2020, 159, 26–40. [Google Scholar] [CrossRef]

- Meyer, L.H.; Heurich, M.; Beudert, B.; Premier, J.; Pflugmacher, D. Comparison of Landsat-8 and Sentinel-2 Data for Estimation of Leaf Area Index in Temperate Forests. Remote Sens. 2019, 11, 1160. [Google Scholar] [CrossRef]

- Sun, L.; Chen, J.; Guo, S.; Deng, X.; Han, Y. Integration of Time Series Sentinel-1 and Sentinel-2 Imagery for Crop Type Mapping over Oasis Agricultural Areas. Remote Sens. 2020, 12, 158. [Google Scholar] [CrossRef]

- Corti, M.; Cavalli, D.; Cabassi, G.; Marino Gallina, P.; Bechini, L. Does remote and proximal optical sensing successfully estimate maize variables? A review. Eur. J. Agron. 2018, 99, 37–50. [Google Scholar] [CrossRef]

- Suess, S.; van der Linden, S.; Okujeni, A.; Griffiths, P.; Leitão, P.J.; Schwieder, M.; Hostert, P. Characterizing 32 years of shrub cover dynamics in southern Portugal using annual Landsat composites and machine learning regression modeling. Remote Sens. Environ. 2018, 219, 353–364. [Google Scholar] [CrossRef]

- Rouse, R.W.H.; Haas, J.A.W.; Deering, D.W. Monitoring Vegetation Systems in the Great Plains with ERTS. In Third Earth Resources Technology Satellite-1 Symposium—Volume I: Technical Presentations; NASA SP-351; NASA: Washington, DC, USA, 1974; pp. 309–317. [Google Scholar]

- Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 2002, 83, 195–213. [Google Scholar] [CrossRef]

- Huete, A. A soil-adjusted vegetation index (SAVI). Remote Sens. Environ. 1988, 25, 295–309. [Google Scholar] [CrossRef]

- Schultz, M.; Clevers, J.G.; Carter, S.; Verbesselt, J.; Avitabile, V.; Quang, H.V.; Herold, M. Performance of vegetation indices from Landsat time series in deforestation monitoring. Int. J. Appl. Earth Obs. Geoinf. 2016, 52, 318–327. [Google Scholar] [CrossRef]

- Gao, B.C. NDWI A Normalized Difference Water Index for Remote Sensing of Vegetation Liquid Water From Space. Remote Sens. Environ. 1996, 266, 257–266. [Google Scholar] [CrossRef]

- Ferrant, S.; Selles, A.; Le Page, M.; Herrault, P.A.; Pelletier, C.; Al-Bitar, A.; Mermoz, S.; Gascoin, S.; Bouvet, A.; Saqalli, M.; et al. Detection of irrigated crops from Sentinel-1 and Sentinel-2 data to estimate seasonal groundwater use in South India. Remote Sens. 2017, 9, 1119. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS- MODIS. Remote Sens. Environ. 1996, 58, 289–298. [Google Scholar] [CrossRef]

- Frampton, W.J.; Dash, J.; Watmough, G.; Milton, E.J. Evaluating the capabilities of Sentinel-2 for quantitative estimation of biophysical variables in vegetation. ISPRS J. Photogramm. Remote Sens. 2013, 82, 83–92. [Google Scholar] [CrossRef]

- Qi, J.; Chehbouni, A.; Huete, A.R.; Kerr, Y.H.; Sorooshian, S. A modified soil adjusted vegetation index. Remote Sens. Environ. 1994, 48, 119–126. [Google Scholar] [CrossRef]

- Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107. [Google Scholar] [CrossRef]

- Jiang, Z.; Huete, A.; Didan, K.; Miura, T. Development of a two-band enhanced vegetation index without a blue band. Remote Sens. Environ. 2008, 112, 3833–3845. [Google Scholar] [CrossRef]

- McFeeters, S.K. The use of the Normalized Difference Water Index (NDWI) in the delineation of open water features. Int. J. Remote Sens. 1996, 17, 1425–1432. [Google Scholar] [CrossRef]

- Xu, H. Modification of normalised difference water index (NDWI) to enhance open water features in remotely sensed imagery. Int. J. Remote Sens. 2006, 27, 3025–3033. [Google Scholar] [CrossRef]

- Parente, L.; Ferreira, L.; Faria, A.; Nogueira, S.; Araújo, F.; Teixeira, L.; Hagen, S. Monitoring the brazilian pasturelands: A new mapping approach based on the landsat 8 spectral and temporal domains. Int. J. Appl. Earth Obs. Geoinf. 2017, 62, 135–143. [Google Scholar] [CrossRef]

- Palchowdhuri, Y.; Valcarce-Diñeiro, R.; King, P.; Sanabria-Soto, M. Classification of multi-temporal spectral indices for crop type mapping: A case study in Coalville, UK. J. Agric. Sci. 2018, 156, 24–36. [Google Scholar] [CrossRef]

- Sonobe, R.; Yamaya, Y.; Tani, H.; Wang, X.; Kobayashi, N.; Mochizuki, K.I. Mapping crop cover using multi-temporal Landsat 8 OLI imagery. Int. J. Remote Sens. 2017, 38, 4348–4361. [Google Scholar] [CrossRef]

- Zhong, L.; Hu, L.; Zhou, H. Deep learning based multi-temporal crop classification. Remote Sens. Environ. 2019, 221, 430–443. [Google Scholar] [CrossRef]

- Pastick, N.J.; Wylie, B.K.; Wu, Z. Spatiotemporal analysis of Landsat-8 and Sentinel-2 data to support monitoring of dryland ecosystems. Remote Sens. 2018, 10, 791. [Google Scholar] [CrossRef]

- Franch, B.; Vermote, E.; Skakun, S.; Roger, J.C.; Masek, J.; Ju, J.; Villaescusa-Nadal, J.; Santamaria-Artigas, A. A Method for Landsat and Sentinel 2 (HLS) BRDF Normalization. Remote Sens. 2019, 11, 632. [Google Scholar] [CrossRef]

- Griffiths, P.; Nendel, C.; Hostert, P. Intra-annual reflectance composites from Sentinel-2 and Landsat for national-scale crop and land cover mapping. Remote Sens. Environ. 2019, 220, 135–151. [Google Scholar] [CrossRef]

- Roy, D.P.; Yan, L. Robust Landsat-based crop time series modelling. Remote Sens. Environ. 2018, 238, 110810. [Google Scholar] [CrossRef]

- Schwieder, M.; Leitão, P.J.; da Cunha Bustamante, M.M.; Ferreira, L.G.; Rabe, A.; Hostert, P. Mapping Brazilian savanna vegetation gradients with Landsat time series. Int. J. Appl. Earth Obs. Geoinf. 2016, 52, 361–370. [Google Scholar] [CrossRef]

- Roy, D.P.; Li, J.; Zhang, H.K.; Yan, L.; Huang, H.; Li, Z. Examination of Sentinel-2A multi-spectral instrument (MSI) reflectance anisotropy and the suitability of a general method to normalize MSI reflectance to nadir BRDF adjusted reflectance. Remote Sens. Environ. 2017, 199, 25–38. [Google Scholar] [CrossRef]

- Flood, N. Comparing Sentinel-2A and Landsat 7 and 8 Using Surface Reflectance over Australia. Remote Sens. 2017, 9, 659. [Google Scholar] [CrossRef]

- Griffiths, P.; Nendel, C.; Pickert, J.; Hostert, P. Towards national-scale characterization of grassland use intensity from integrated Sentinel-2 and Landsat time series. Remote Sens. Environ. 2019, 238, 111124. [Google Scholar] [CrossRef]

- Zhou, Q.; Rover, J.; Brown, J.; Worstell, B.; Howard, D.; Wu, Z.; Gallant, A.L.; Rundquist, B.; Burke, M. Monitoring landscape dynamics in central U.S. grasslands with harmonized Landsat-8 and Sentinel-2 time series data. Remote Sens. 2019, 11, 328. [Google Scholar] [CrossRef]

- Torbick, N.; Huang, X.; Ziniti, B.; Johnson, D.; Masek, J.; Reba, M. Fusion of Moderate Resolution Earth Observations for Operational Crop Type Mapping. Remote Sens. 2018, 10, 1058. [Google Scholar] [CrossRef]

- Hao, P.; Tang, H.; Chen, Z.; Yu, L.; Wu, M.q. High resolution crop intensity mapping using harmonized Landsat-8 and Sentinel-2 data. J. Integr. Agric. 2019, 18, 2883–2897. [Google Scholar] [CrossRef]

- Frantz, D. FORCE—Landsat + Sentinel-2 Analysis Ready Data and Beyond. Remote Sens. 2019, 11, 1124. [Google Scholar] [CrossRef]

- Saunier, S.; Louis, J.; Debaecker, V.; Beaton, T.; Cadau, E.G.; Boccia, V.; Gascon, F. Sen2like, A Tool To Generate Sentinel-2 Harmonised Surface Reflectance Products—First, Results with Landsat-8. In Proceedings of the IGARSS 2019—2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 5650–5653. [Google Scholar] [CrossRef]

- Bontemps, S.; Arias, M.; Cara, C.; Dedieu, G.; Guzzonato, E.; Hagolle, O.; Inglada, J.; Matton, N.; Morin, D.; Popescu, R.; et al. Building a data set over 12 globally distributed sites to support the development of agriculture monitoring applications with Sentinel-2. Remote Sens. 2015, 7, 16062–16090. [Google Scholar] [CrossRef]

- Whitcraft, A.K.; Becker-Reshef, I.; Justice, C.O. A framework for defining spatially explicit earth observation requirements for a global agricultural monitoring initiative (GEOGLAM). Remote Sens. 2015, 7, 1461–1481. [Google Scholar] [CrossRef]

- Tian, H.; Huang, N.; Niu, Z.; Qin, Y.; Pei, J.; Wang, J. Mapping Winter Crops in China with Multi-Source Satellite Imagery and Phenology-Based Algorithm. Remote Sens. 2019, 11, 820. [Google Scholar] [CrossRef]

- Feng, Q.; Yang, J.; Zhu, D.; Liu, J.; Guo, H.; Bayartungalag, B.; Li, B. Integrating Multitemporal Sentinel-1/2 Data for Coastal Land Cover Classification Using a Multibranch Convolutional Neural Network: A Case of the Yellow River Delta. Remote Sens. 2019, 11, 1006. [Google Scholar] [CrossRef]

- Frantz, D.; Haß, E.; Uhl, A.; Stoffels, J.; Hill, J. Improvement of the Fmask algorithm for Sentinel-2 images: Separating clouds from bright surfaces based on parallax effects. Remote Sens. Environ. 2018, 215, 471–481. [Google Scholar] [CrossRef]

- Zhang, H.K.; Roy, D.P.; Yan, L.; Li, Z.; Huang, H.; Vermote, E.; Skakun, S.; Roger, J.C. Characterization of Sentinel-2A and Landsat-8 top of atmosphere, surface, and nadir BRDF adjusted reflectance and NDVI differences. Remote Sens. Environ. 2018, 215, 482–494. [Google Scholar] [CrossRef]

- Heydari, S.S.; Mountrakis, G. Effect of classifier selection, reference sample size, reference class distribution and scene heterogeneity in per-pixel classification accuracy using 26 Landsat sites. Remote Sens. Environ. 2018, 204, 648–658. [Google Scholar] [CrossRef]

- Viana, C.M.; Girão, I.; Rocha, J. Long-Term Satellite Image Time-Series for Land Use/Land Cover Change Detection Using Refined Open Source Data in a Rural Region. Remote Sens. 2019, 11, 1104. [Google Scholar] [CrossRef]

- Noi, P.T.; Kappas, M. Comparison of Random Forest, k-Nearest Neighbor, and Support Vector Machine Classifiers for Land Cover Classification Using Sentinel-2 Imagery. Sensors 2018, 18, 18. [Google Scholar] [CrossRef]

- Griffiths, P.; Jakimow, B.; Hostert, P. Reconstructing long term annual deforestation dynamics in Pará and Mato Grosso using the Landsat archive. Remote Sens. Environ. 2018, 216, 497–513. [Google Scholar] [CrossRef]

- Waldner, F.; De Abelleyra, D.; Verón, S.R.; Zhang, M.; Wu, B.; Plotnikov, D.; Bartalev, S.; Lavreniuk, M.; Skakun, S.; Kussul, N.; et al. Towards a set of agrosystem-specific cropland mapping methods to address the global cropland diversity. Int. J. Remote Sens. 2016, 37, 3196–3231. [Google Scholar] [CrossRef]

- Leinenkugel, P.; Deck, R.; Huth, J.; Ottinger, M.; Mack, B. The Potential of Open Geodata for Automated Large-Scale Land Use and Land Cover Classification. Remote Sens. 2019, 11, 2249. [Google Scholar] [CrossRef]

- Sanches, I.D.; Feitosa, R.Q.; Achanccaray, P.; Montibeller, B.; Luiz, A.J.; Soares, M.D.; Prudente, V.H.; Vieira, D.C.; Maurano, L.E. LEM benchmark database for tropical agricultural remote sensing application. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci.—ISPRS Arch. 2018, 42, 387–392. [Google Scholar] [CrossRef]

- Sun, C.; Bian, Y.; Zhou, T.; Pan, J. Using of Multi-Source and Multi-Temporal Remote Sensing Data Improves Crop-Type Mapping in the Subtropical Agriculture Region. Sensors 2019, 19, 2401. [Google Scholar] [CrossRef]

- Fowler, J.; Waldner, F.; Hochman, Z. All pixels are useful, but some are more useful: Efficient in situ data collection for crop-type mapping using sequential exploration methods. Int. J. Appl. Earth Obs. Geoinf. 2020, 91, 102114. [Google Scholar] [CrossRef]

- Ma, L.; Liu, Y.; Zhang, X.; Ye, Y.; Yin, G.; Johnson, B.A. Deep learning in remote sensing applications: A meta-analysis and review. ISPRS J. Photogramm. Remote Sens. 2019, 152, 166–177. [Google Scholar] [CrossRef]

- Mack, B.; Leinenkugel, P.; Kuenzer, C.; Dech, S. A semi-automated approach for the generation of a new land use and land cover product for Germany based on Landsat time-series and LUCAS in-situ data. Remote Sens. Lett. 2016, 8, 244–253. [Google Scholar] [CrossRef]

- Zhang, H.K.; Roy, D.P. Using the 500 m MODIS land cover product to derive a consistent continental scale 30 m Landsat land cover classification. Remote Sens. Environ. 2017, 197, 15–34. [Google Scholar] [CrossRef]

- Giuliani, G.; Camara, G.; Killough, B.; Minchin, S. Earth Observation Open Science: Enhancing Reproducible Science Using Data Cubes. Data 2019, 4, 147. [Google Scholar] [CrossRef]

- Lewis, A.; Oliver, S.; Lymburner, L.; Evans, B.; Wyborn, L.; Mueller, N.; Raevksi, G.; Hooke, J.; Woodcock, R.; Sixsmith, J.; et al. The Australian Geoscience Data Cube—Foundations and lessons learned. Remote Sens. Environ. 2017, 202, 276–292. [Google Scholar] [CrossRef]

- Mercier, A.; Betbeder, J.; Rumiano, F.; Baudry, J.; Gond, V.; Blanc, L.; Bourgoin, C.; Cornu, G.; Ciudad, C.; Marchamalo, M.; et al. Evaluation of Sentinel-1 and 2 Time Series for Land Cover Classification of Forest—Agriculture Mosaics in Temperate and Tropical Landscapes. Remote Sens. 2019, 11, 979. [Google Scholar] [CrossRef]

- Inglada, J.; Arias, M.; Tardy, B.; Hagolle, O.; Valero, S.; Morin, D.; Dedieu, G.; Sepulcre, G.; Bontemps, S.; Defourny, P.; et al. Assessment of an operational system for crop type map production using high temporal and spatial resolution satellite optical imagery. Remote Sens. 2015, 7, 12356–12379. [Google Scholar] [CrossRef]

- Scheffler, D.; Frantz, D.; Segl, K. Spectral harmonization and red edge prediction of Landsat-8 to Sentinel-2 using land cover optimized multivariate regressors. Remote Sens. Environ. 2020, 241, 111723. [Google Scholar] [CrossRef]

- Ienco, D.; Interdonato, R.; Gaetano, R.; Minh, D.H.T. Combining Sentinel-1 and Sentinel-2 Satellite Image Time Series for land cover mapping via a multi-source deep learning architecture. ISPRS J. Photogramm. Remote Sens. 2019, 158, 11–22. [Google Scholar] [CrossRef]

- Roy, D.P.; Kovalskyy, V.; Zhang, H.K.; Vermote, E.F.; Yan, L.; Kumar, S.S.; Egorov, A. Characterization of Landsat-7 to Landsat-8 reflective wavelength and normalized difference vegetation index continuity. Remote Sens. Environ. 2016, 185, 57–70. [Google Scholar] [CrossRef]

- Nativi, S.; Mazzetti, P.; Craglia, M. A view-based model of data-cube to support big earth data systems interoperability. Big Earth Data 2017, 1, 75–99. [Google Scholar] [CrossRef]

- Forkuor, G.; Dimobe, K.; Serme, I.; Tondoh, J.E. Landsat-8 vs. Sentinel-2: Examining the added value of Sentinel-2’s red-edge bands to land-use and land-cover mapping in Burkina Faso. GIScience Remote Sens. 2018, 55, 331–354. [Google Scholar] [CrossRef]

- Vuolo, F.; Neuwirth, M.; Immitzer, M.; Atzberger, C.; Ng, W.T. How much does multi-temporal Sentinel-2 data improve crop type classification? Int. J. Appl. Earth Obs. Geoinf. 2018, 72, 122–130. [Google Scholar] [CrossRef]

- Immitzer, M.; Vuolo, F.; Atzberger, C. First experience with Sentinel-2 data for crop and tree species classifications in central Europe. Remote Sens. 2016, 8, 166. [Google Scholar] [CrossRef]

- Ramoelo, A.; Cho, M.; Mathieu, R.; Skidmore, A.K. Potential of Sentinel-2 spectral configuration to assess rangeland quality. J. Appl. Remote Sens. 2015, 9, 094096. [Google Scholar] [CrossRef]

- Macintyre, P.; van Niekerk, A.; Mucina, L. Efficacy of multi-season Sentinel-2 imagery for compositional vegetation classification. Int. J. Appl. Earth Obs. Geoinf. 2020, 85, 101980. [Google Scholar] [CrossRef]

- Persson, M.; Lindberg, E.; Reese, H. Tree Species Classification with Multi-Temporal Sentinel-2 Data. Remote Sens. 2018, 10, 1794. [Google Scholar] [CrossRef]

- Sothe, C.; de Almeida, C.M.; Liesenberg, V.; Schimalski, M.B. Evaluating Sentinel-2 and Landsat-8 data to map sucessional forest stages in a subtropical forest in Southern Brazil. Remote Sens. 2017, 9, 838. [Google Scholar] [CrossRef]

- Abdi, A.M. Land cover and land use classification performance of machine learning algorithms in a boreal landscape using Sentinel-2 data. GIScience Remote Sens. 2019, 57, 1–20. [Google Scholar] [CrossRef]

- Kobayashi, N.; Tani, H.; Wang, X.; Sonobe, R. Crop classification using spectral indices derived from Sentinel-2A imagery. J. Inf. Telecommun. 2019, 4, 67–90. [Google Scholar] [CrossRef]

- Jakimow, B.; Griffiths, P.; van der Linden, S.; Hostert, P. Mapping pasture management in the Brazilian Amazon from dense Landsat time series. Remote Sens. Environ. 2018, 205, 453–468. [Google Scholar] [CrossRef]

- Wilson, E.H.; Sader, S.A. Detection of forest harvest type using multiple dates of Landsat TM imagery. Remote Sens. Environ. 2002, 80, 385–396. [Google Scholar] [CrossRef]

- Garcia, M.J.L.; Caselles, V. Mapping burns and natural reforestation using thematic mapper data. Geocarto Int. 1991, 6, 31–37. [Google Scholar] [CrossRef]

- Müller, H.; Rufin, P.; Griffiths, P.; Barros Siqueira, A.J.; Hostert, P. Mining dense Landsat time series for separating cropland and pasture in a heterogeneous Brazilian savanna landscape. Remote Sens. Environ. 2015, 156, 490–499. [Google Scholar] [CrossRef]

- Radoux, J.; Chomé, G.; Jacques, D.C.; Waldner, F.; Bellemans, N.; Matton, N.; Lamarche, C.; D’Andrimont, R.; Defourny, P. Sentinel-2’s potential for sub-pixel landscape feature detection. Remote Sens. 2016, 8, 488. [Google Scholar] [CrossRef]

- Lambert, M.J.; Sibiry, P.C.; Blaes, X.; Baret, P.; Defourny, P. Estimating smallholder crops production at village level from Sentinel-2 time series in Mali’s cotton belt. Remote Sens. Environ. 2018, 216, 647–657. [Google Scholar] [CrossRef]

- Cai, Y.; Guan, K.; Peng, J.; Wang, S.; Seifert, C.; Wardlow, B.; Li, Z. A high-performance and in-season classification system of field-level crop types using time-series Landsat data and a machine learning approach. Remote Sens. Environ. 2018, 210, 35–47. [Google Scholar] [CrossRef]

- Xiao, X.; Boles, S.; Liu, J.; Zhuang, D.; Liu, M. Characterization of forest types in Northeastern China, using multi-temporal SPOT-4 VEGETATION sensor data. Remote Sens. Environ. 2002, 82, 335–348. [Google Scholar] [CrossRef]

- Meng, S.; Zhong, Y.; Luo, C.; Hu, X.; Wang, X.; Huang, S. Optimal Temporal Window Selection for Winter Wheat and Rapeseed Mapping with Sentinel-2 Images: A Case Study of Zhongxiang in China. Remote Sens. 2020, 12, 226. [Google Scholar] [CrossRef]

- Immitzer, M.; Neuwirth, M.; Böck, S.; Brenner, H.; Vuolo, F.; Atzberger, C. Optimal Input Features for Tree Species Classification in Central Europe Based on Multi-Temporal Sentinel-2 Data. Remote Sens. 2019, 11, 2599. [Google Scholar] [CrossRef]

- Piyoosh, A.K.; Ghosh, S.K. Development of a modified bare soil and urban index for Landsat 8 satellite data. Geocarto Int. 2018, 33, 423–442. [Google Scholar] [CrossRef]

- Rasul, A.; Balzter, H.; Ibrahim, G.; Hameed, H.; Wheeler, J.; Adamu, B.; Ibrahim, S.; Najmaddin, P. Applying Built-Up and Bare-Soil Indices from Landsat 8 to Cities in Dry Climates. Land 2018, 7, 81. [Google Scholar] [CrossRef]

- Filella, I.; Peñuelas, J. The red edge position and shape as indicators of plant chlorophyll content, biomass and hydric status. Int. J. Remote Sens. 1994, 15, 1459–1470. [Google Scholar] [CrossRef]

- Clerici, N.; Valbuena Calderón, C.A.; Posada, J.M. Fusion of Sentinel-1A and Sentinel-2A data for land cover mapping: A case study in the lower Magdalena region, Colombia. J. Maps 2017, 13, 718–726. [Google Scholar] [CrossRef]

- Cao, Z.; Yao, X.; Liu, H.; Liu, B.; Cheng, T.; Tian, Y.; Cao, W.; Zhu, Y. Comparison of the abilities of vegetation indices and photosynthetic parameters to detect heat stress in wheat. Agric. For. Meteorol. 2019, 265, 121–136. [Google Scholar] [CrossRef]

- Dong, T.; Liu, J.; Shang, J.; Qian, B.; Ma, B.; Kovacs, J.M.; Walters, D.; Jiao, X.; Geng, X.; Shi, Y. Assessment of red-edge vegetation indices for crop leaf area index estimation. Remote Sens. Environ. 2019, 222, 133–143. [Google Scholar] [CrossRef]

- Lin, S.; Li, J.; Liu, Q.; Li, L.; Zhao, J.; Yu, W. Evaluating the Effectiveness of Using Vegetation Indices Based on Red-Edge Reflectance from Sentinel-2 to Estimate Gross Primary Productivity. Remote Sens. 2019, 11, 1303. [Google Scholar] [CrossRef]

- Gitelson, A.; Merzlyak, M.N. Spectral Reflectance Changes Associated with Autumn Senescence of Aesculus hippocastanum L. and Acer platanoides L. Leaves. Spectral Features and Relation to Chlorophyll Estimation. J. Plant Physiol. 1994, 143, 286–292. [Google Scholar] [CrossRef]

- Shoko, C.; Mutanga, O. Examining the strength of the newly-launched Sentinel 2 MSI sensor in detecting and discriminating subtle differences between C3 and C4 grass species. ISPRS J. Photogramm. Remote Sens. 2017, 129, 32–40. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Gritz, Y.; Merzlyak, M.N. Relationships between leaf chlorophyll content and spectral reflectance and algorithms for non-destructive chlorophyll assessment in higher plant leaves. J. Plant Physiol. 2003, 160, 271–282. [Google Scholar] [CrossRef]

- Herrmann, I.; Pimstein, A.; Karnieli, A.; Cohen, Y.; Alchanatis, V.; Bonfil, D. LAI assessment of wheat and potato crops by VENμS and Sentinel-2 bands. Remote Sens. Environ. 2011, 115, 2141–2151. [Google Scholar] [CrossRef]

- Osgouei, P.E.; Kaya, S.; Sertel, E.; Alganci, U. Separating built-up areas from bare land in Mediterranean cities using Sentinel-2A imagery. Remote Sens. 2019, 11, 345. [Google Scholar] [CrossRef]

- Vincent, A.; Kumar, A.; Upadhyay, P. Effect of Red-Edge Region in Fuzzy Classification: A Case Study of Sunflower Crop. J. Indian Soc. Remote Sens. 2020, 48, 645–657. [Google Scholar] [CrossRef]

- Hardisky, M.; Klemas, V.; Smart, M. The influence of soil salinity, growth form, and leaf moisture on the spectral radiance of Spartina Alterniflora Canopies. Photogramm. Eng. Remote Sens. 1983, 49, 77–83. [Google Scholar]

- Son, N.T.; Chen, C.F.; Chen, C.R.; Guo, H.Y. Classification of multitemporal Sentinel-2 data for field-level monitoring of rice cropping practices in Taiwan. Adv. Space Res. 2020, 65, 1910–1921. [Google Scholar] [CrossRef]

- Carrasco, L.; O’Neil, A.; Morton, R.; Rowland, C. Evaluating Combinations of Temporally Aggregated Sentinel-1, Sentinel-2 and Landsat 8 for Land Cover Mapping with Google Earth Engine. Remote Sens. 2019, 11, 288. [Google Scholar] [CrossRef]

- Gerard, F.; Plummer, S.; Wadsworth, R.; Sanfeliu, A.F.; Iliffe, L.; Balzter, H.; Wyatt, B. Forest Fire Scar Detection in the Boreal Forest with Multitemporal SPOT-VEGETATION Data. IEEE Trans. Geosci. Remote Sens. 2003, 41, 2575–2585. [Google Scholar] [CrossRef]

- Van Deventer, A.; Ward, A.; Gowda, P.; Lyon, J. Using thematic mapper data to identify contrasting soil plains and tillage practices. Photogramm. Eng. Remote Sens. 1997, 63, 87–93. [Google Scholar]

- Gitelson, A.A.; Viña, A.; Arkebauer, T.J.; Rundquist, D.C.; Keydan, G.; Leavitt, B. Remote estimation of leaf area index and green leaf biomass in maize canopies. Geophys. Res. Lett. 2003, 30. [Google Scholar] [CrossRef]

- Cai, Y.; Lin, H.; Zhang, M. Mapping paddy rice by the object-based random forest method using time series Sentinel-1/Sentinel-2 data. Adv. Space Res. 2019, 64, 2233–2244. [Google Scholar] [CrossRef]

- Chatziantoniou, A.; Psomiadis, E.; Petropoulos, G. Co-Orbital Sentinel 1 and 2 for LULC Mapping with Emphasis on Wetlands in a Mediterranean Setting Based on Machine Learning. Remote Sens. 2017, 9, 1259. [Google Scholar] [CrossRef]

- Azzari, G.; Lobell, D. Landsat-based classification in the cloud: An opportunity for a paradigm shift in land cover monitoring. Remote Sens. Environ. 2017, 202, 64–74. [Google Scholar] [CrossRef]

- Zhong, L.; Hawkins, T.; Biging, G.; Gong, P. A phenology-based approach to map crop types in the San Joaquin Valley, California. Int. J. Remote Sens. 2011, 32, 7777–7804. [Google Scholar] [CrossRef]

- Yan, L.; Roy, D.P. Improved time series land cover classification by missing-observation-adaptive nonlinear dimensionality reduction. Remote Sens. Environ. 2015, 158, 478–491. [Google Scholar] [CrossRef]

- Misra, G.; Cawkwell, F.; Wingler, A. Status of Phenological Research Using Sentinel-2 Data: A Review. Remote Sens. 2020, 12, 2760. [Google Scholar] [CrossRef]

- Nguyen, L.H.; Joshi, D.R.; Clay, D.E.; Henebry, G.M. Characterizing land cover/land use from multiple years of Landsat and MODIS time series: A novel approach using land surface phenology modeling and random forest classifier. Remote Sens. Environ. 2020, 238, 111017. [Google Scholar] [CrossRef]

- Pastick, N.J.; Dahal, D.; Wylie, B.K.; Parajuli, S.; Boyte, S.P.; Wu, Z. Characterizing Land Surface Phenology and Exotic Annual Grasses in Dryland Ecosystems Using Landsat and Sentinel-2 Data in Harmony. Remote Sens. 2020, 12, 725. [Google Scholar] [CrossRef]

- Schmidt, M.; Pringle, M.; Devadas, R.; Denham, R.; Tindall, D. A Framework for Large-Area Mapping of Past and Present Cropping Activity Using Seasonal Landsat Images and Time Series Metrics. Remote Sens. 2016, 8, 312. [Google Scholar] [CrossRef]

- Peña, M.A.; Brenning, A. Assessing fruit-tree crop classification from Landsat-8 time series for the Maipo Valley, Chile. Remote Sens. Environ. 2015, 171, 234–244. [Google Scholar] [CrossRef]

- Htitiou, A.; Boudhar, A.; Lebrini, Y.; Hadria, R.; Lionboui, H.; Elmansouri, L.; Tychon, B.; Benabdelouahab, T. The Performance of Random Forest Classification Based on Phenological Metrics Derived from Sentinel-2 and Landsat 8 to Map Crop Cover in an Irrigated Semi-arid Region. Remote Sens. Earth Syst. Sci. 2019, 2, 208–224. [Google Scholar] [CrossRef]

- Zhang, X.; Xiong, Q.; Di, L.; Tang, J.; Yang, J.; Wu, H.; Qin, Y.; Su, R.; Zhou, W. Phenological metrics-based crop classification using HJ-1 CCD images and Landsat 8 imagery. Int. J. Digit. Earth 2017, 11, 1219–1240. [Google Scholar] [CrossRef]

- Parente, L.; Mesquita, V.; Miziara, F.; Baumann, L.; Ferreira, L. Assessing the pasturelands and livestock dynamics in Brazil, from 1985 to 2017: A novel approach based on high spatial resolution imagery and Google Earth Engine cloud computing. Remote Sens. Environ. 2019, 232, 111301. [Google Scholar] [CrossRef]

- Santos, C.L.M.d.O.; Lamparelli, R.A.C.; Figueiredo, G.K.D.A.; Dupuy, S.; Boury, J.; Luciano, A.C.d.S.; Torres, R.d.S.; le Maire, G. Classification of crops, pastures, and tree plantations along the season with multi-sensor image time series in a subtropical agricultural region. Remote Sens. 2019, 11, 334. [Google Scholar] [CrossRef]

- Zeferino, L.B.; de Souza, L.F.T.; do Amaral, C.H.; Filho, E.I.F.; de Oliveira, T.S. Does environmental data increase the accuracy of land use and land cover classification? Int. J. Appl. Earth Obs. Geoinf. 2020, 91, 102128. [Google Scholar] [CrossRef]

- Zhao, Y.; Feng, D.; Yu, L.; Wang, X.; Chen, Y.; Bai, Y.; Hernández, H.J.; Galleguillos, M.; Estades, C.; Biging, G.S.; et al. Detailed dynamic land cover mapping of Chile: Accuracy improvement by integrating multi-temporal data. Remote Sens. Environ. 2016, 183, 170–185. [Google Scholar] [CrossRef]

- Zhu, Z.; Gallant, A.L.; Woodcock, C.E.; Pengra, B.; Olofsson, P.; Loveland, T.R.; Jin, S.; Dahal, D.; Yang, L.; Auch, R.F. Optimizing selection of training and auxiliary data for operational land cover classification for the LCMAP initiative. ISPRS J. Photogramm. Remote Sens. 2016, 122, 206–221. [Google Scholar] [CrossRef]

- Gilbertson, J.K.; van Niekerk, A. Value of dimensionality reduction for crop differentiation with multi-temporal imagery and machine learning. Comput. Electron. Agric. 2017, 142, 50–58. [Google Scholar] [CrossRef]

- Kopp, S.; Becker, P.; Doshi, A.; Wright, D.J.; Zhang, K.; Xu, H. Achieving the Full Vision of Earth Observation Data Cubes. Data 2019, 4, 94. [Google Scholar] [CrossRef]

- Appel, M.; Pebesma, E. On-Demand Processing of Data Cubes from Satellite Image Collections with the Gdalcubes Library. Data 2019, 4, 92. [Google Scholar] [CrossRef]

- Giuliani, G.; Masó, J.; Mazzetti, P.; Nativi, S.; Zabala, A. Paving the Way to Increased Interoperability of Earth Observations Data Cubes. Data 2019, 4, 113. [Google Scholar] [CrossRef]

- Dwyer, J.L.; Roy, D.P.; Sauer, B.; Jenkerson, C.B.; Zhang, H.K.; Lymburner, L. Analysis Ready Data: Enabling Analysis of the Landsat Archive. Remote Sens. 2018, 10, 1363. [Google Scholar] [CrossRef]

- Camara, G.; Assis, L.F.; Ribeiro, G.; Ferreira, K.R.; Llapa, E.; Vinhas, L. Big Earth Observation Data Analytics: Matching Requirements to System Architectures. In Proceedings of the BigSpatial ’16, 5th ACM SIGSPATIAL International Workshop on Analytics for Big Geospatial Data, San Francisco, CA, USA, 31 October 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 1–6. [Google Scholar] [CrossRef]

- Gorelick, N.; Hancher, M.; Dixon, M.; Ilyushchenko, S.; Thau, D.; Moore, R. Google Earth Engine: Planetary-scale geospatial analysis for everyone. Remote Sens. Environ. 2017, 202. [Google Scholar] [CrossRef]

- Dhu, T.; Dunn, B.; Lewis, B.; Lymburner, L.; Mueller, N.; Telfer, E.; Lewis, A.; McIntyre, A.; Minchin, S.; Phillips, C. Digital Earth Australia—Unlocking new value from earth observation data. Big Earth Data 2017, 1, 64–74. [Google Scholar] [CrossRef]

- Augustin, H.; Sudmanns, M.; Tiede, D.; Lang, S.; Baraldi, A. Semantic Earth Observation Data Cubes. Data 2019, 4, 102. [Google Scholar] [CrossRef]

- Mahecha, M.D.; Gans, F.; Brandt, G.; Christiansen, R.; Cornell, S.E.; Fomferra, N.; Kraemer, G.; Peters, J.; Bodesheim, P.; Camps-Valls, G.; et al. Earth system data cubes unravel global multivariate dynamics. Earth Syst. Dyn. 2020, 11, 201–234. [Google Scholar] [CrossRef]

- Potapov, P.; Hansen, M.C.; Kommareddy, I.; Kommareddy, A.; Turubanova, S.; Pickens, A.; Adusei, B.; Tyukavina, A.; Ying, Q. Landsat Analysis Ready Data for Global Land Cover and Land Cover Change Mapping. Remote Sens. 2020, 12, 426. [Google Scholar] [CrossRef]

- Qiu, S.; Lin, Y.; Shang, R.; Zhang, J.; Ma, L.; Zhu, Z. Making Landsat Time Series Consistent: Evaluating and Improving Landsat Analysis Ready Data. Remote Sens. 2019, 11, 51. [Google Scholar] [CrossRef]

- Poortinga, A.; Tenneson, K.; Shapiro, A.; Nquyen, Q.; Aung, K.S.; Chishtie, F.; Saah, D. Mapping plantations in Myanmar by fusing Landsat-8, Sentinel-2 and Sentinel-1 data along with systematic error quantification. Remote Sens. 2019, 11, 831. [Google Scholar] [CrossRef]

- Kaplan, G.; Avdan, U. Evaluating the utilization of the red-edge and radar bands from Sentinel sensors for wetland classification. CATENA 2019, 178, 109–119. [Google Scholar] [CrossRef]

- Chen, N. Mapping mangrove in Dongzhaigang, China using Sentinel-2 imagery. J. Appl. Remote Sens. 2020, 14, 1. [Google Scholar] [CrossRef]

- Diniz, C.; Cortinhas, L.; Nerino, G.; Rodrigues, J.; Sadeck, L.; Adami, M.; Souza-Filho, P. Brazilian Mangrove Status: Three Decades of Satellite Data Analysis. Remote Sens. 2019, 11, 808. [Google Scholar] [CrossRef]

- Manna, S.; Raychaudhuri, B. Mapping distribution of Sundarban mangroves using Sentinel-2 data and new spectral metric for detecting their health condition. Geocarto Int. 2018, 35, 434–452. [Google Scholar] [CrossRef]

- Crabbe, R.A.; Lamb, D.; Edwards, C. Discrimination of species composition types of a grazed pasture landscape using Sentinel-1 and Sentinel-2 data. Int. J. Appl. Earth Obs. Geoinf. 2020, 84, 101978. [Google Scholar] [CrossRef]

- Sonobe, R.; Yamaya, Y.; Tani, H.; Wang, X.; Kobayashi, N.; Mochizuki, K.I. Assessing the suitability of data from Sentinel-1A and 2A for crop classification. GIScience Remote Sens. 2017, 54, 918–938. [Google Scholar] [CrossRef]

- Mansaray, L.R.; Wang, F.; Huang, J.; Yang, L.; Kanu, A.S. Accuracies of support vector machine and random forest in rice mapping with Sentinel-1A, Landsat-8 and Sentinel-2A datasets. Geocarto Int. 2020, 35, 1088–1108. [Google Scholar] [CrossRef]

- Mansaray, L.R.; Yang, L.; Kabba, V.T.S.; Kanu, A.S.; Huang, J.; Wang, F. Optimising rice mapping in cloud-prone environments by combining quad-source optical with Sentinel-1A microwave satellite imagery. GIScience Remote Sens. 2019, 56, 1333–1354. [Google Scholar] [CrossRef]

- Onojeghuo, A.O.; Blackburn, G.A.; Wang, Q.; Atkinson, P.M.; Kindred, D.; Miao, Y. Mapping paddy rice fields by applying machine learning algorithms to multi-temporal Sentinel-1A and Landsat data. Int. J. Remote Sens. 2017, 39, 1042–1067. [Google Scholar] [CrossRef]

- Waldner, F.; Duveiller, G.; Defourny, P. Local adjustments of image spatial resolution to optimize large-area mapping in the era of big data. Int. J. Appl. Earth Obs. Geoinf. 2018, 73, 374–385. [Google Scholar] [CrossRef]

- Capolupo, A.; Monterisi, C.; Tarantino, E. Landsat Images Classification Algorithm (LICA) to Automatically Extract Land Cover Information in Google Earth Engine Environment. Remote Sens. 2020, 12, 1201. [Google Scholar] [CrossRef]

- García-Berná, J.A.; Ouhbi, S.; Benmouna, B.; García-Mateos, G.; Fernández-Alemán, J.L.; Molina-Martínez, J.M. Systematic Mapping Study on Remote Sensing in Agriculture. Appl. Sci. 2020, 10, 3456. [Google Scholar] [CrossRef]

| Spectral Band | L8/OLI Central Wavelength (Spatial Resolution) | S2/MSI Central Wavelength (Spatial Resolution): 2A, 2B |
|---|---|---|
| Coastal/Aerosol | 442.9 nm (30 m) | 442.7 nm (60 m), 442.2 nm (60 m) |
| Blue | 482 nm (30 m) | 492.4 nm (10 m), 492.1 nm (10 m) |
| Green | 561.4 nm (30 m) | 559.8 nm (10 m), 559 nm (10 m) |
| Red | 654.6 nm (30 m) | 664.6 nm (10 m), 664.9 nm (10 m) |
| Red-edge | - | 704.1 nm (20 m), 703.8 nm (20 m); 740.5 nm (20 m), 739.1 nm (20 m); 782.8 nm (20 m), 779.7 nm (20 m) |
| NIR | 864.7 nm (30 m) | 832.8 nm (10 m), 832.9 nm (10 m); 864.7 nm (20 m), 864.0 nm (20 m) |
| SWIR | 1608.9 nm (30 m); 2200.7 nm (30 m) | 1613.7 nm (20 m), 1610.4 nm (20 m); 2202.4 nm (20 m), 2185.7 nm (20 m) |
| Panchromatic | 589.5 nm (15 m) | - |
| Cirrus | 1373.4 nm (30 m) | 1373.5 nm (60 m), 1376.9 nm (60 m) |
| Water vapor | - | 945.1 nm (60 m), 943.2 nm (60 m) |
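
As a rough illustration of why the two sensors are usually harmonized before joint use, the sketch below takes the central wavelengths from the table above and reports the offset between nominally equivalent bands. It is only a sketch: the band keys `SWIR1` and `SWIR2`, the helper name `wavelength_offsets`, and the choice of Sentinel-2A's narrow NIR band as the counterpart of the OLI NIR band are assumptions made for this example.

```python
# Central wavelengths in nm, copied from the table above (Sentinel-2A values only).
# Band keys such as "SWIR1"/"SWIR2" are labels chosen for this sketch.
L8_OLI = {
    "Coastal/Aerosol": 442.9,
    "Blue": 482.0,
    "Green": 561.4,
    "Red": 654.6,
    "NIR": 864.7,
    "SWIR1": 1608.9,
    "SWIR2": 2200.7,
    "Cirrus": 1373.4,
}
S2A_MSI = {
    "Coastal/Aerosol": 442.7,
    "Blue": 492.4,
    "Green": 559.8,
    "Red": 664.6,
    "NIR": 864.7,  # assumption: narrow NIR (20 m) paired with the OLI NIR band
    "SWIR1": 1613.7,
    "SWIR2": 2202.4,
    "Cirrus": 1373.5,
}


def wavelength_offsets(a, b):
    """Return {band: b - a} in nm for every band present in both sensors."""
    return {band: round(b[band] - a[band], 1) for band in a if band in b}


offsets = wavelength_offsets(L8_OLI, S2A_MSI)

# List bands from the largest to the smallest absolute offset.
for band, delta in sorted(offsets.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{band:16s} {delta:+.1f} nm")
```

With these values, the blue and red bands show the largest offsets (about 10 nm each), while the paired NIR bands coincide.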

| Fields | Definition | Categories |
|---|---|---|
| Year | Year of publication | Year |
| Source title | Journal | Journal name |
| Institution | Name | Institution name |
| Area | Mapped area | Country, area of interest |
| Sensor type | Sensor used | S2/MSI, L8/OLI, both |
| Sampling strategy | Sampling adopted | Stratified random sampling, simple random sampling, others |
| Site type | Study area | Agricultural lands, natural vegetation |
| Classification method | Method adopted | RF, SVM, others |
| Accuracy measure | Accuracy index and values | OA, Kappa, F-score, and % of accuracy |

| Class/Parameter | Spectral Vegetation Indices | Attested by |
|---|---|---|
| Natural vegetation | NDVIre, MNDWI, SRI, ARI, CIre, GEMI, NDBI, MIRBI, NDFI, NDMI | Feng et al. (2015), Pelletier et al. (2016), Puletti et al. (2017), Lin et al. (2019), Forkuor et al. (2018), Schultz et al. (2016), Carrasco et al. (2019) |
| Soybean | NDVIre, LSWI, GCVI, CIre, MSRre, SWIRIndex, PSRI, S2REP | Cai et al. (2018), Torbick et al. (2018), Müller et al. (2015), Liu et al. (2020), Csillik et al. (2019), Defourny et al. (2019), Niazmardi et al. (2018) |
| Maize | LSWI, RedSWIR, TVI, NDTI, NDVIre, GCVI, CRI700, IRECI, S2REP, CIre, CIred&re, MSR, MSRre, MSRredre, NDVIre2n, SIPI, REIP, PPR, NGRDI | Radoux et al. (2016), Sun et al. (2019, 2020), Cai et al. (2018), Xie et al. (2018), Frampton et al. (2013), Kobayashi et al. (2019), Vincent et al. (2020) |
| Cotton | NDVIre, LSWI, CIgreen, TVI, STI, NDTI | Torbick et al. (2018), Lambert et al. (2018) |
| Beetroot | AFRI1.6, SIWSI, NDII, PVR, mNDVI | Sonobe et al. (2018), Kobayashi et al. (2019) |
| Potato | REIP, CVI, Maccioni, Datt1 | Sonobe et al. (2018), Kobayashi et al. (2019) |
| Wheat | NDVIre, NDVIre2, MSR, MSRre, MSRredre, CIre, CIred&re, PSRI, S2REP, REIP, AVI, SIPI, PVR, mNDVI, GARI, VARIgreen | Csillik and Belgiu (2016), Xie et al. (2018), Defourny et al. (2019), Kobayashi et al. (2019), Vincent et al. (2020), Sonobe et al. (2018) |
| Barley | CIred&re, NDVIre, MSR, MSRredre, PSRI, S2REP | Xie et al. (2018), Defourny et al. (2019), Vincent et al. (2020) |
| Alfalfa | CIred&re, NDVIre, CIre, MSR, MSRre, MSRredre, LSWI | Csillik et al. (2019), Xie et al. (2018), Torbick et al. (2018), Vincent et al. (2020) |
| Millet | NDVIre, CIre, CIgreen, STI | Lambert et al. (2018), Forkuor et al. (2018) |
| Sorghum | NDVIre, MNDWI, CIgreen, TVI, CIre | Forkuor et al. (2018), Lambert et al. (2018) |
| Sunflower | NDVIre, PSRI, S2REP, CIR&RE, SAVIr&re | Niazmardi et al. (2018), Defourny et al. (2019), Vincent et al. (2020) |
| Rice | LSWI, NDVIre, RERVI, CIre, MNDWI | Torbick et al. (2018), Cao et al. (2019), Mansaray et al. (2019), Son et al. (2020) |
| Sugar beet | RedSWIR, NDVIre | Radoux et al. (2016), Csillik and Belgiu (2016) |
| Beans and groundnuts | NDVIre, MNDWI, PVR, REIP, GEMI, Datt3, mNDVI, VARIgreen | Forkuor et al. (2018), Kobayashi et al. (2019) |
| Tomato/Chili pepper | CIre, NDVIre1n, NDVIre2n, MSRre, MSRren | Sun et al. (2020) |
| Bare soil | MNDWI, NDBaI, NDBI, NDTI, WDVI, GEMI, DBI, MNDSI, DBSI, SRNIRnarrowRed, SRNIRnarrowGreen, RTVIcore, NRUI | Osgouei et al. (2019), Radoux et al. (2016), Piyoosh and Ghosh (2018), Rasul et al. (2018), Liu et al. (2020) |
| Grasslands, shrublands, rangelands and pastures | SWIRindex, IRECI, RedSWIR, NDSWIR, NBR, NBR-2, BAI, MNDWI, NDMI, REIP, MNSI, AFRI1.6 | Müller et al. (2015), Radoux et al. (2016), Forkuor et al. (2018), Jakimow et al. (2018), Carrasco et al. (2019), Sonobe et al. (2018) |
| Mangrove | CMRI, MMRI, DNVI | Chen (2020), Diniz et al. (2019), Manna and Raychaudhuri (2018) |
| Water bodies | MNDWI, NHI, VSDI | Radoux et al. (2016), Pelletier et al. (2016), Rasul et al. (2018), Feng et al. (2015), Forkuor et al. (2018), Guttler et al. (2017) |
| Settlements and built-up areas | MNDWI, NDBI, MNDBI, IBI, DBI, DBSI, NRUI | Forkuor et al. (2018), Pelletier et al. (2016), Mansaray et al. (2018), Piyoosh and Ghosh (2018), Rasul et al. (2018) |
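
Several of the indices listed above (for example NDVIre, MNDWI, NDBI and LSWI) share the normalized-difference form (a − b)/(a + b). The sketch below illustrates that shared form for three of them. The band pairings follow the commonly published definitions and are an assumption here, since the table does not state formulas; the red-edge NDVI in particular has several variants across the cited studies. The reflectance values are hypothetical and the helper `normalized_difference` is named only for this example.

```python
def normalized_difference(a, b):
    """Element-wise (a - b) / (a + b); yields None where a + b is zero."""
    return [(x - y) / (x + y) if (x + y) != 0 else None for x, y in zip(a, b)]


# Hypothetical surface reflectance for three pixels (not values from the review):
# pixel 0 ~ vegetation, pixel 1 ~ water, pixel 2 ~ bare or built-up surface.
green = [0.08, 0.05, 0.10]
red_edge = [0.12, 0.04, 0.18]
nir = [0.40, 0.03, 0.25]
swir1 = [0.20, 0.02, 0.30]

# Assumed band pairings (common definitions, not taken from the table):
ndvi_re = normalized_difference(nir, red_edge)  # one red-edge NDVI variant
mndwi = normalized_difference(green, swir1)     # modified NDWI: green vs. SWIR
ndbi = normalized_difference(swir1, nir)        # built-up index: SWIR vs. NIR

for i, (v, w, b) in enumerate(zip(ndvi_re, mndwi, ndbi)):
    print(f"pixel {i}: NDVIre={v:.3f}  MNDWI={w:.3f}  NDBI={b:.3f}")
```

In this toy input, the vegetated pixel has the highest red-edge NDVI, the water pixel is the only one with a positive MNDWI, and the bare or built-up pixel is the only one with a positive NDBI, which is the kind of class separation the table attributes to these indices.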

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chaves, M.E.D.; Picoli, M.C.A.; Sanches, I.D. Recent Applications of Landsat 8/OLI and Sentinel-2/MSI for Land Use and Land Cover Mapping: A Systematic Review. Remote Sens. 2020, 12, 3062. https://doi.org/10.3390/rs12183062
