An Airport Knowledge-Based Method for Accurate Change Analysis of Airport Runways in VHR Remote Sensing Images

Abstract

1. Introduction

2. Materials and Methods

2.1. The Airport Knowledge Base

2.1.1. Airport Composition

2.1.2. Runway Features and Runway Markings

2.1.3. Runway Change Types

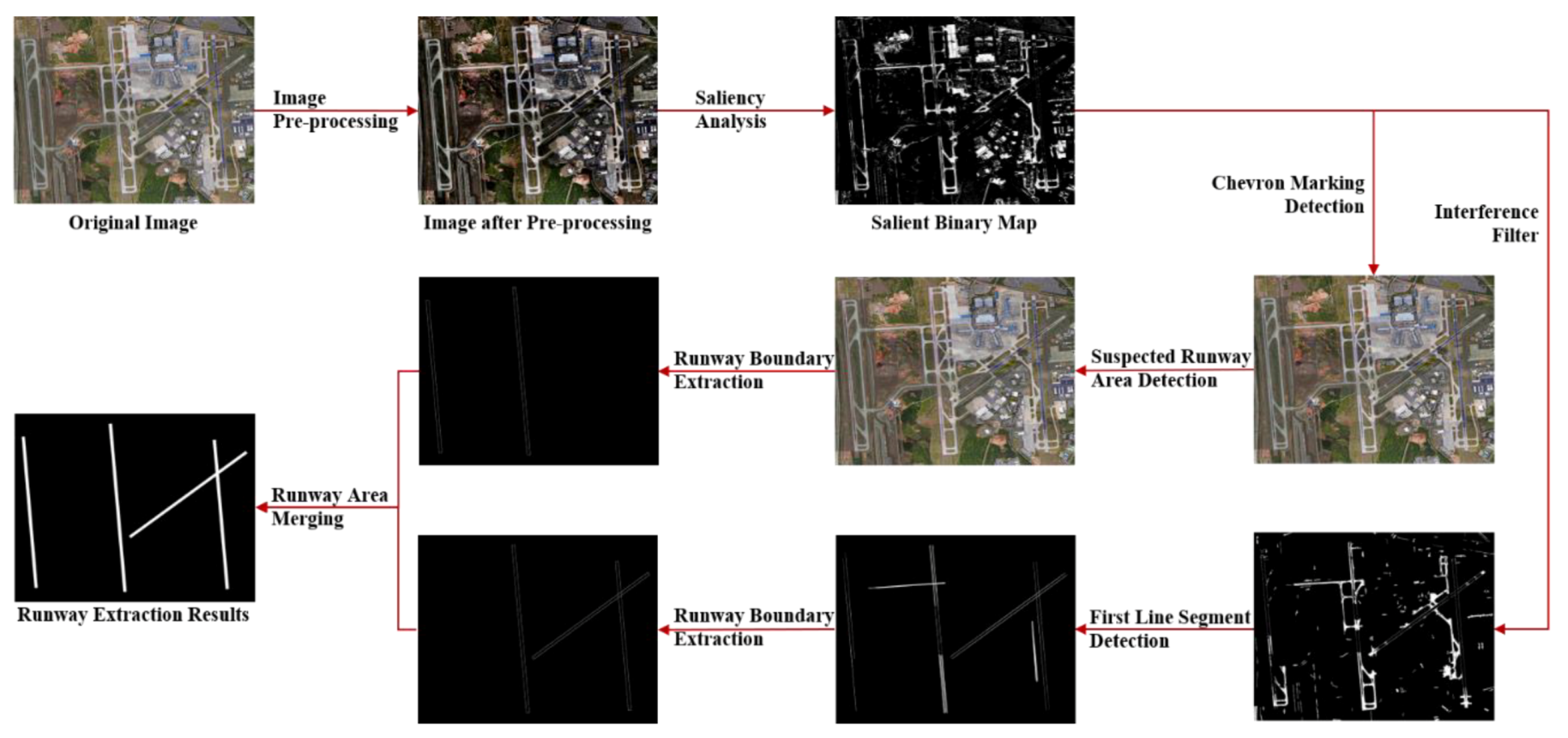

2.2. Runway Extraction

2.2.1. Pre-Processing

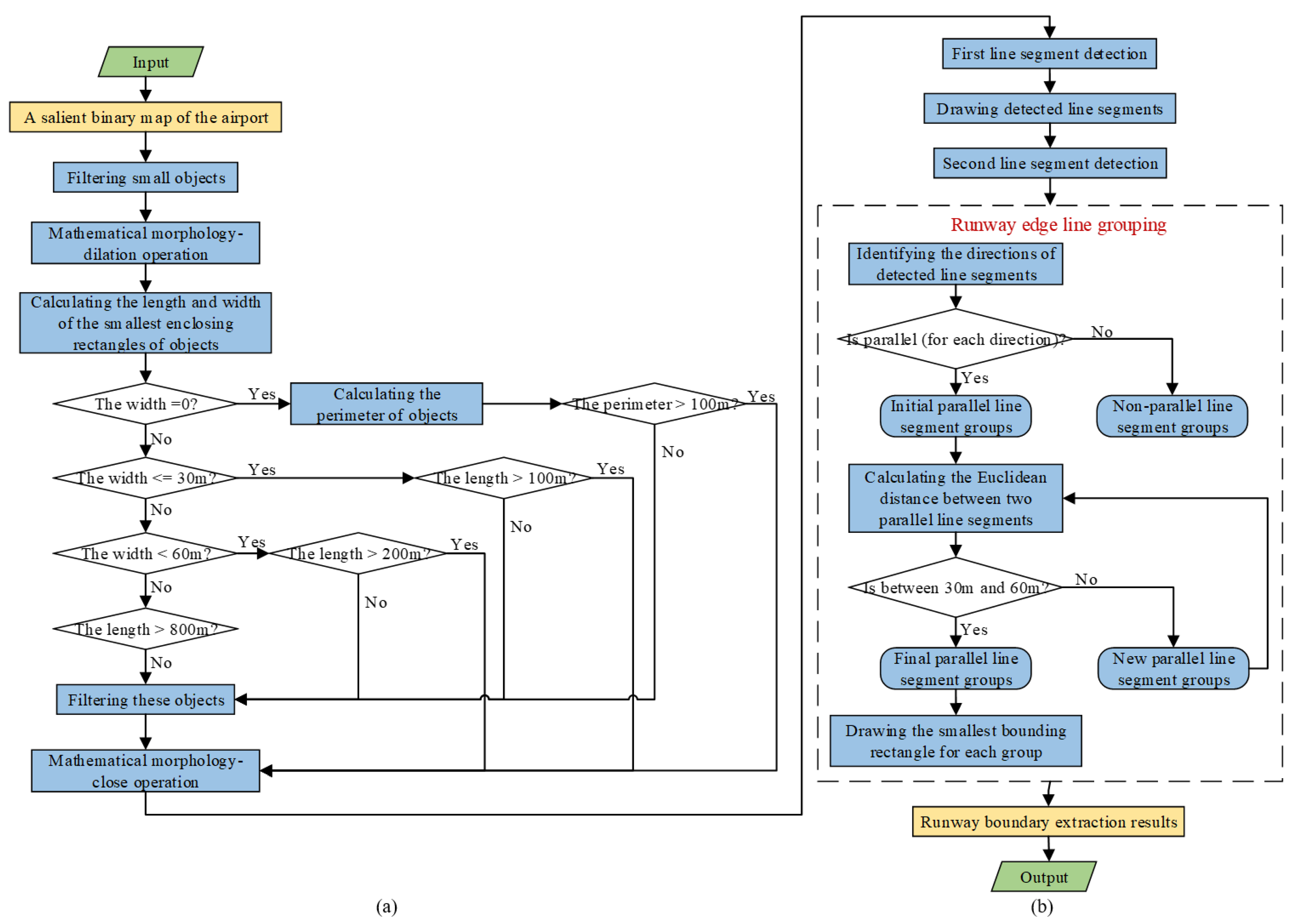

2.2.2. Generation of the Salient Binary Map of the Airport

2.2.3. Runway Boundary Extraction Based on Chevron Marking Detection

2.2.4. Runway Boundary Extraction Based on Interference Filter

2.2.5. Merging Runway Area

2.3. Runway Change Analysis

2.4. Datasets and Evaluation Metrics

2.4.1. Datasets

2.4.2. Evaluation Metrics

3. Results

3.1. Experimental Parameters

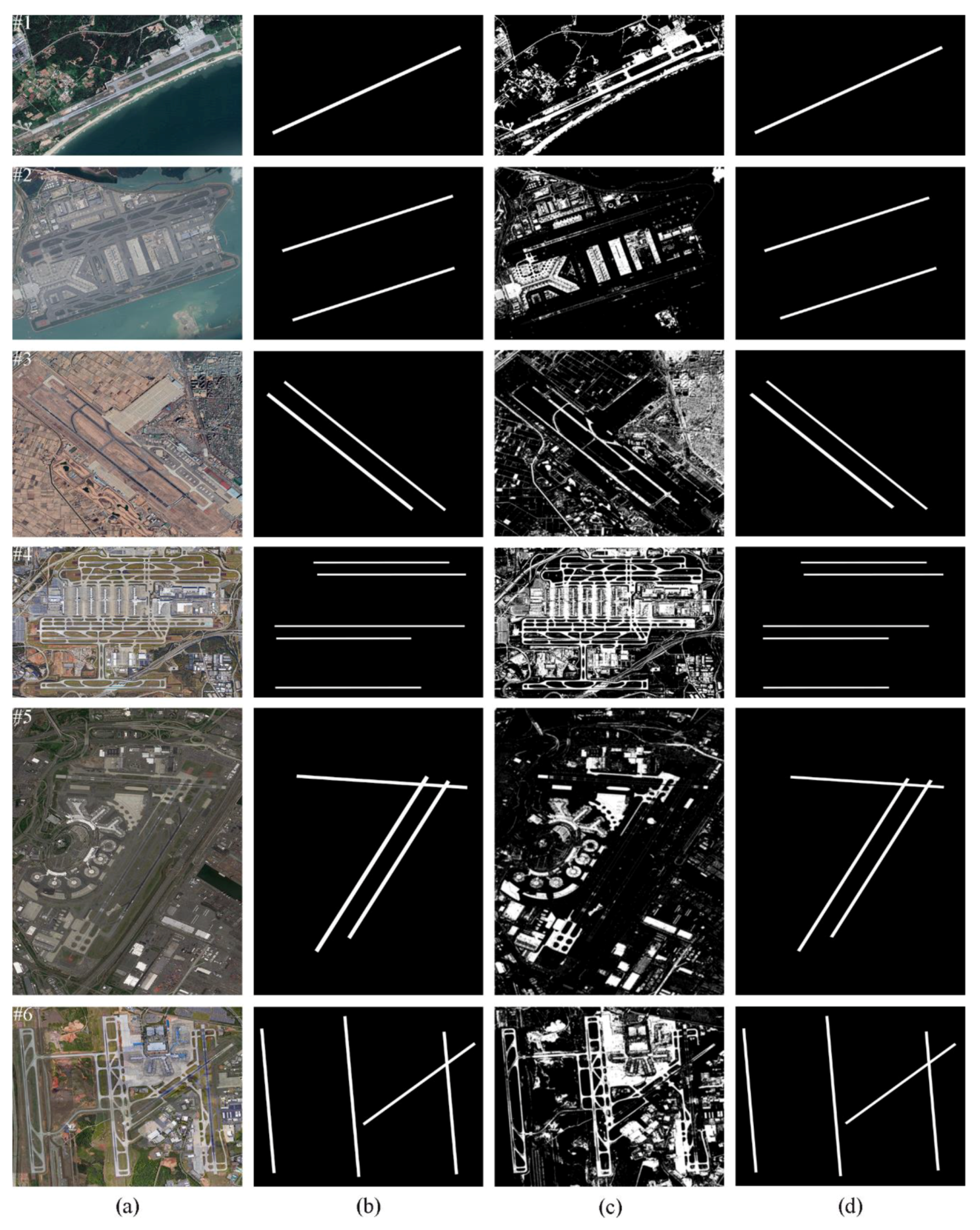

3.2. Experimental Results and Comparison with State-of-the-Art Methods

3.2.1. Experiment I

3.2.2. Experiment II

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Xiao, Z.; Gong, Y.; Long, Y.; Li, D.; Wang, X.; Liu, H. Airport Detection Based on a Multiscale Fusion Feature for Optical Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1469–1473.

- Zeng, F.; Cheng, L.; Li, N.; Xia, N.; Ma, L.; Zhou, X.; Li, M. A Hierarchical Airport Detection Method Using Spatial Analysis and Deep Learning. Remote Sens. 2019, 11, 2204.

- Wang, X.; Lv, Q.; Wang, B.; Zhang, L. Airport Detection in Remote Sensing Images: A Method Based on Saliency Map. Cogn. Neurodyn. 2012, 7, 143–154.

- Zhao, D.; Ma, Y.; Jiang, Z.; Shi, Z. Multiresolution Airport Detection via Hierarchical Reinforcement Learning Saliency Model. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 2855–2866.

- Zhang, L.; Zhang, Y. Airport Detection and Aircraft Recognition Based on Two-Layer Saliency Model in High Spatial Resolution Remote-Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 10, 1511–1524.

- Wang, S.; Zhang, L. Airport Detection Based on Superpixel Segmentation and Saliency Analysis for Remote Sensing Images. Int. Geosci. Remote Sens. Symp. (IGARSS) 2018, 2018, 2511–2514.

- Zhang, Q.; Zhang, L.; Shi, W.; Liu, Y. Airport Extraction via Complementary Saliency Analysis and Saliency-Oriented Active Contour Model. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1085–1089.

- Liu, N.; Cao, Z.; Cui, Z.; Pi, Y.; Dang, S. Multi-Layer Abstraction Saliency for Airport Detection in SAR Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9820–9831.

- Chen, F.; Ren, R.; Van De Voorde, T.; Xu, W.; Zhou, G.; Zhou, Y. Fast Automatic Airport Detection in Remote Sensing Images Using Convolutional Neural Networks. Remote Sens. 2018, 10, 443.

- Xu, Y.; Zhu, M.; Li, S.; Feng, H.; Ma, S.; Che, J. End-to-End Airport Detection in Remote Sensing Images Combining Cascade Region Proposal Networks and Multi-Threshold Detection Networks. Remote Sens. 2018, 10, 1516.

- Li, S.; Xu, Y.; Zhu, M.; Ma, S.; Tang, H. Remote Sensing Airport Detection Based on End-to-End Deep Transferable Convolutional Neural Networks. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1640–1644.

- Zhang, Z.; Zou, C.; Han, P.; Lu, X. A Runway Detection Method Based on Classification Using Optimized Polarimetric Features and HOG Features for PolSAR Images. IEEE Access 2020, 8, 49160–49168.

- Liu, D.; Song, K.; Townshend, J.R.; Gong, P. Using Local Transition Probability Models in Markov Random Fields for Forest Change Detection. Remote Sens. Environ. 2008, 112, 2222–2231.

- Seo, D.K.; Kim, Y.; Eo, Y.; Park, W.; Park, H. Generation of Radiometric, Phenological Normalized Image Based on Random Forest Regression for Change Detection. Remote Sens. 2017, 9, 1163.

- Li, Z.; Liu, Z.; Shi, W. Semiautomatic Airport Runway Extraction Using a Line-Finder-Aided Level Set Evolution. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 4738–4749.

- Aytekin, Ö.; Zongur, U.; Halici, U. Texture-Based Airport Runway Detection. IEEE Geosci. Remote Sens. Lett. 2012, 10, 471–475.

- Wu, W.; Xia, R.; Xiang, W.; Hui, B.; Chang, Z.; Liu, Y.; Zhang, Y. Recognition of Airport Runways in FLIR Images Based on Knowledge. IEEE Geosci. Remote Sens. Lett. 2014, 11, 1534–1538.

- Liu, C.; Cheng, I.; Basu, A. Real-Time Runway Detection for Infrared Aerial Image Using Synthetic Vision and an ROI Based Level Set Method. Remote Sens. 2018, 10, 1544.

- Shi, W.; Zhang, M.; Zhang, R.; Chen, S.; Zhan, Z. Change Detection Based on Artificial Intelligence: State-of-the-Art and Challenges. Remote Sens. 2020, 12, 1688.

- Lv, Z.; Shi, W.; Zhou, X.; Benediktsson, J.A. Semi-Automatic System for Land Cover Change Detection Using Bi-Temporal Remote Sensing Images. Remote Sens. 2017, 9, 1112.

- Lv, Z.; Liu, T.; Wan, Y.; Benediktsson, J.A.; Zhang, X. Post-Processing Approach for Refining Raw Land Cover Change Detection of Very High-Resolution Remote Sensing Images. Remote Sens. 2018, 10, 472.

- Zhou, L.; Cao, G.; Li, Y.; Shang, Y. Change Detection Based on Conditional Random Field with Region Connection Constraints in High-Resolution Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 3478–3488.

- Hao, M.; Shi, W.; Zhang, H.; Li, C. Unsupervised Change Detection with Expectation-Maximization-Based Level Set. IEEE Geosci. Remote Sens. Lett. 2013, 11, 210–214.

- Feng, W.; Sui, H.; Chen, X. Saliency-Guided Change Detection of Remotely Sensed Images Using Random Forest. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 42, 341–348.

- Im, J.; Jensen, J.R. A Change Detection Model Based on Neighborhood Correlation Image Analysis and Decision Tree Classification. Remote Sens. Environ. 2005, 99, 326–340.

- Liu, J.; Gong, M.; Qin, A.K.; Tan, K.C. Bipartite Differential Neural Network for Unsupervised Image Change Detection. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 876–890.

- Hou, B.; Liu, Q.; Wang, H.; Wang, Y. From W-Net to CDGAN: Bitemporal Change Detection via Deep Learning Techniques. IEEE Trans. Geosci. Remote Sens. 2020, 58, 1790–1802.

- U.S. Department of Transportation. Federal Aviation Administration. “Airport Design.” Aviation 1 (AC 150/5300-13A): 1–322. 2014. Available online: https://www.faa.gov/documentLibrary/media/Advisory_Circular/150-5300-13A-chg1-interactive-201907.pdf (accessed on 20 July 2020).

- U.S. Department of Transportation. Federal Aviation Administration. “Standards for Airport Markings.” (AC 150/5340-1M): 1–171. 2019. Available online: https://www.faa.gov/documentLibrary/media/Advisory_Circular/150-5340-1M.pdf (accessed on 20 July 2020).

- Achanta, R.; Hemami, S.; Estrada, F.; Susstrunk, S. Frequency-Tuned Salient Region Detection. In Proceedings of the 2009 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, CVPR Workshops, Miami Beach, FL, USA, 20–26 June 2009; pp. 1597–1604.

- Comaniciu, D.; Meer, P. Mean Shift: A Robust Approach toward Feature Space Analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 603–619.

- Matas, J.; Galambos, C.; Kittler, J. Robust Detection of Lines Using the Progressive Probabilistic Hough Transform. Comput. Vis. Image Underst. 2000, 78, 119–137.

- Pan, X.; Ren, Y.; Sheng, K.; Dong, W.; Yuan, H.; Guo, X.; Ma, C.; Xu, C. Dynamic Refinement Network for Oriented and Densely Packed Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–18 June 2020.

- Janalipour, M.; Mohammadzadeh, A. Building Damage Detection Using Object-Based Image Analysis and ANFIS from High-Resolution Image (Case Study: BAM Earthquake, Iran). IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 9, 1937–1945.

| Types of Features or Markings | Characteristics |
|---|---|
| Shape feature | The overall shape is rectangular; runway length is generally 800–4000 m, runway width is generally 30–60 m, and the length-to-width ratio is greater than 30. |
| Parallel line feature | Runway edges consist of two parallel lines. |
| Spatial feature | When the airfield code number is 1, 2, or 3–4, the minimum distance between the centerlines of two parallel runways is 120, 150, and 210 m, respectively. |
| Structural feature | Generally, four structural types of runways are present: single runway, parallel runway, V-shaped runway, and X-shaped runway. |
| Chevron marking | It is located on the blast pad and stopway, which are aligned with and contiguous to the runway end. The width of the chevron marking is no less than the runway width, and its length is no less than 45 m to allow for at least two chevron stripes. Moreover, the inclined angle of the chevron stripes is fixed: each stripe is at 45 degrees to the runway centerline, so the two arms of a chevron meet at 90 degrees. |
| Runway displaced threshold marking | The arrow marking (arrowheads with and without arrow shafts) performs three possible functions, that is, two cases for displaced thresholds and one case for a runway threshold with an aligned taxiway. |
| Runway threshold bar marking | It is an elongated rectangular bar that is located perpendicular to the runway centerline and on the landing portion of the runway. The marking extends between the runway edges or between the runway edge markings. |
| Runway threshold marking | It consists of a pattern of longitudinal stripes of uniform dimensions spaced symmetrically about the runway centerline. The number of stripes and their spacing are determined by the runway width: 16 stripes indicate a runway width of 60 m, 12 stripes indicate 45 m, and 8 stripes indicate 30 m. |
| Runway touchdown zone marking | It consists of symmetrically arranged pairs of rectangular bars in groups of one, two, and three along the runway centerline, and the number of rectangular bars in each marking is related to the available landing distance. |
| Runway aiming point marking | It consists of two conspicuous rectangular markings located symmetrically on each side of the runway centerline. |
| Runway centerline marking | It consists of a line of uniformly spaced stripes and gaps of uniform width. The marking identifies the physical center of the runway width and provides alignment guidance to pilots during take-off and landing operations. |
| Runway edge marking | It consists of two parallel stripes, one placed along each edge of the usable runway, with the outer edge of each stripe approximately on the edge of the paved usable runway. The marking extends the full length of the runway, except for precision runways which lack a threshold bar marking. |
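
The shape feature and the threshold-stripe rule in the table above lend themselves to simple rule checks. The following is a minimal sketch of how such knowledge-base entries might be encoded, not the authors' implementation. The names `RunwayCandidate`, `satisfies_shape_feature`, and `width_from_threshold_stripes` are hypothetical, and the numeric bounds are the "general" values from the table treated as hard limits for illustration only.

```python
from dataclasses import dataclass
from typing import Optional

# Bounds copied from the shape-feature row of the table (illustrative hard limits).
MIN_LENGTH_M, MAX_LENGTH_M = 800.0, 4000.0
MIN_WIDTH_M, MAX_WIDTH_M = 30.0, 60.0
MIN_LENGTH_WIDTH_RATIO = 30.0

# Threshold-marking row: number of longitudinal stripes -> runway width in meters.
THRESHOLD_STRIPES_TO_WIDTH_M = {8: 30, 12: 45, 16: 60}


@dataclass
class RunwayCandidate:
    """Hypothetical container for a rectangular region measured in meters."""
    length_m: float
    width_m: float


def satisfies_shape_feature(candidate: RunwayCandidate) -> bool:
    """Return True if the candidate matches the tabulated shape feature."""
    if candidate.width_m <= 0:
        return False
    length_ok = MIN_LENGTH_M <= candidate.length_m <= MAX_LENGTH_M
    width_ok = MIN_WIDTH_M <= candidate.width_m <= MAX_WIDTH_M
    ratio_ok = candidate.length_m / candidate.width_m > MIN_LENGTH_WIDTH_RATIO
    return length_ok and width_ok and ratio_ok


def width_from_threshold_stripes(stripe_count: int) -> Optional[int]:
    """Look up the runway width implied by a threshold-stripe count, if tabulated."""
    return THRESHOLD_STRIPES_TO_WIDTH_M.get(stripe_count)
```

For example, a 3000 m by 45 m candidate passes the check, whereas a 900 m by 45 m candidate fails on the length-to-width ratio. Because the table qualifies these ranges with "generally", a practical system would probably treat them as soft evidence rather than strict filters.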

| Test Images | Image Size (pixels) | Similarity Threshold | First Length Threshold (meters) | Second Length Threshold (meters) |
|---|---|---|---|---|
| 1 | 6056 × 3712 | 0.5 | 800 | 1200 |
| 2 | 8688 × 6528 | 0.5 | 400 | 800 |
| 3 | 7696 × 6224 | 0.4 | 200 | 1200 |
| 4 | 10,064 × 6576 | 0.5 | 400 | 800 |
| 5 | 6752 × 8132 | 0.5 | 400 | 800 |
| 6 | 7328 × 5728 | 0.5 | 400 | 1200 |
| 7 | 7768 × 3264 | 0.4 | 400 | 1200 |
| 8 | 8336 × 4800 | 0.5 | 200 | 800 |

| Test Images | Completeness | Correctness | Quality |
|---|---|---|---|
| 1 | 1.0 | 0.889 | 0.889 |
| 2 | 0.999 | 0.921 | 0.921 |
| 3 | 0.999 | 0.930 | 0.929 |
| 4 | 1.0 | 0.873 | 0.873 |
| 5 | 0.998 | 0.789 | 0.788 |
| 6 | 0.997 | 0.930 | 0.927 |
| Average | 0.999 | 0.889 | 0.888 |
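
The three columns above are related, which makes the rows easy to sanity-check. The sketch below assumes the usual pixel-based definitions (an assumption, since the definitions are not restated here): completeness = TP / (TP + FN), correctness = TP / (TP + FP), and quality = TP / (TP + FP + FN). Under those definitions, quality follows from the other two via 1/Q = 1/completeness + 1/correctness − 1. The function names are hypothetical.

```python
def evaluate(tp: int, fp: int, fn: int) -> tuple:
    """Compute (completeness, correctness, quality) from pixel counts.

    Assumes the standard definitions; returns 0.0 for an empty denominator.
    """
    completeness = tp / (tp + fn) if (tp + fn) else 0.0
    correctness = tp / (tp + fp) if (tp + fp) else 0.0
    quality = tp / (tp + fp + fn) if (tp + fp + fn) else 0.0
    return completeness, correctness, quality


def quality_from(completeness: float, correctness: float) -> float:
    """Derive quality from completeness and correctness (same assumed definitions)."""
    if completeness <= 0.0 or correctness <= 0.0:
        return 0.0
    return 1.0 / (1.0 / completeness + 1.0 / correctness - 1.0)


# Cross-check one tabulated row: image 3 reports 0.999 / 0.930 / 0.929.
derived_quality_image_3 = round(quality_from(0.999, 0.930), 3)
```

Applied to image 3, the derived quality rounds to the tabulated 0.929, which suggests the table is consistent with these definitions.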

| Test Images | Completeness | Correctness | Quality |
|---|---|---|---|
| 1 | 1.0 | 0.259 | 0.259 |
| 2 | 0.001 | 0 | 0 |
| 3 | 0.173 | 0.079 | 0.057 |
| 4 | 1.0 | 0.108 | 0.108 |
| 5 | 0.074 | 0.016 | 0.013 |
| 6 | 0.873 | 0.040 | 0.040 |

| Test Images | Ours (s) | [15] (s) |
|---|---|---|
| 1 | 21.46 | 74.43 |
| 2 | 59.02 | 167.58 |
| 3 | 51.29 | 141.69 |
| 4 | 114.57 | 232.27 |
| 5 | 83.85 | 178.70 |
| 6 | 58.70 | 128.30 |
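
The running times above can be summarized as speedup ratios. The short sketch below only does arithmetic on the tabulated values; the variable names are illustrative.

```python
# Running times in seconds, copied from the table (test images 1-6).
proposed_s = [21.46, 59.02, 51.29, 114.57, 83.85, 58.70]
reference_15_s = [74.43, 167.58, 141.69, 232.27, 178.70, 128.30]

# Per-image speedup: how many times faster the proposed method is than [15].
speedups = [ref / ours for ours, ref in zip(proposed_s, reference_15_s)]

# Overall speedup based on total running time across all six images.
overall_speedup = sum(reference_15_s) / sum(proposed_s)

for image_id, ratio in enumerate(speedups, start=1):
    print(f"image {image_id}: {ratio:.2f}x")
print(f"overall: {overall_speedup:.2f}x")
```

On these six images the per-image ratio stays between roughly 2.0 and 3.5, with the largest gain on image 1.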

| Test Images | Completeness | Correctness | Quality |
|---|---|---|---|
| 7 | 1.0 | 0.831 | 0.831 |
| 8 | 1.0 | 0.923 | 0.923 |
| Average | 1.0 | 0.877 | 0.877 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ding, W.; Wu, J. An Airport Knowledge-Based Method for Accurate Change Analysis of Airport Runways in VHR Remote Sensing Images. Remote Sens. 2020, 12, 3163. https://doi.org/10.3390/rs12193163
