3.1. Datasets

MSTAR. The Moving and Stationary Target Acquisition and Recognition (MSTAR) dataset was collected and released with the support of the Defense Advanced Research Projects Agency and the Air Force Research Laboratory.

Figure 5 shows SAR images and optical images of some military targets in the MSTAR dataset. There are 10 military targets: T62, BTR60, ZSU234, BMP2, ZIL131, T72, BTR70, 2S1, BRDM2 and D7. The SAR data were collected by an HH-polarized X-band SAR sensor at a resolution of 0.3 m × 0.3 m. The SAR image size is

pixels. The azimuth angles of the target images are in the range of 0°–360°. The depression angles of the target images are 15°, 17°, 30°, and 45°. The details of MSTAR are shown in Table 1.

The simulated SAR dataset. The simulated SAR dataset was introduced in [36]. The simulated SAR images are generated with simulation software from the CAD models of the targets. The simulation parameters, e.g., material reflection coefficients and background variation, were set according to the imaging parameters of the MSTAR dataset so that the simulated images resemble the real SAR images. There are fourteen target classes from seven target types, because each target type has two different CAD models. Some images from the simulated SAR dataset are shown in Figure 6. The details of the simulated SAR dataset are shown in Table 2.

3.3. SSR with Limited Training Data

To solve the sample restriction problem, we propose SSR and combine it with the transfer-learning pipeline. The proposed SSR suppresses large singular values and strengthens small singular values to improve the feature discriminability, which helps the deep-learning-based SAR classification model converge well with limited training data. In this section, we first evaluate the performance of different spectral regularization methods, then compare our best spectral regularization solution with the baselines.

The performance of SSR. We evaluate the performance of BSP, SSR and SSR†, and the experimental results are shown in Table 3. The simulated SAR dataset is used for pre-training, and we then fine-tune the model using 10% of the real SAR images at a 17° depression angle. All of the real SAR images at a 15° depression angle are used for testing. The details of the pre-training, fine-tuning and testing data are shown in Table 1 and Table 2.
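The data protocol above can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes that the 10% subset is drawn per class (stratified), which the text does not state, and the `records` list and the function name `sample_fraction_per_class` are hypothetical.

```python
import random
from collections import defaultdict

def sample_fraction_per_class(labels, fraction=0.1, seed=0):
    """Return sorted indices of a class-stratified random subset of `labels`."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    chosen = []
    for indices in by_class.values():
        # Keep at least one image per class so that no class disappears.
        n_keep = max(1, round(fraction * len(indices)))
        chosen.extend(rng.sample(indices, n_keep))
    return sorted(chosen)

# Hypothetical metadata: (class name, depression angle in degrees) per image.
records = ([("T72", 17)] * 20 + [("BMP2", 17)] * 30
           + [("T72", 15)] * 10 + [("BMP2", 15)] * 10)

# Fine-tuning pool: real images at 17 degrees; test set: all images at 15 degrees.
train_pool = [i for i, (_, dep) in enumerate(records) if dep == 17]
test_idx = [i for i, (_, dep) in enumerate(records) if dep == 15]

# Draw 10% of the pool per class and map back to indices into `records`.
subset = sample_fraction_per_class([records[i][0] for i in train_pool], fraction=0.1)
fine_tune_idx = [train_pool[j] for j in subset]
```

Fixing the seed keeps the subset identical across the compared methods, so that accuracy differences are not caused by different training images.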

Firstly, BSP, SSR and SSR† are integrated into a standard training pipeline, where the model is trained from scratch (ID 3, 4, 5 in Table 3). With each of the three spectral regularizations, the trained model yields a slightly higher classification accuracy than the model without spectral regularization (ID 1 in Table 3). Secondly, we initialize the models with the pre-trained parameters, then fine-tune them with BSP, SSR and SSR†, respectively (ID 6, 7, 8 in Table 3). Starting from the same pre-trained model, fine-tuning with spectral regularization achieves better classification results than fine-tuning without it (ID 2 in Table 3); in particular, SSR† brings a 4.3% relative improvement in classification accuracy. Moreover, the models with the proposed SSR and SSR† significantly outperform the model with BSP. Thirdly, we pre-train the models with BSP, SSR and SSR†, respectively, then fine-tune them without spectral regularization to investigate how much improvement the three spectral regularizations bring to pre-training (ID 9, 10, 11 in Table 3). BSP, SSR and SSR† all provide better-initialized parameters than pre-training without spectral regularization.

The above experimental results indicate that applying spectral regularization to pre-training and fine-tuning can improve the final classification accuracy in the limited-data regime. SSR† is the best regularization method for both pre-training and fine-tuning, so we combine SSR† with transfer learning as our solution to the sample restriction problem (ID 12 in Table 3), which yields the best classification accuracy of 88.8%.

Classification results under SOC. In the limited-data regime, the model is evaluated under the standard operating condition (SOC). After pre-training with the simulated SAR images, the feature extractor M is initialized with the pre-trained parameters and the model is fine-tuned using 10% of the real SAR images at a 17° depression angle. All of the real SAR images at a 15° depression angle are used for testing. The baseline methods are the original CNN (CNN_ORG), CNN with transfer learning (CNN_TF) [26], CNN with parameter prediction (CNN_PP) [37], the cross-domain and cross-task method (CDCT) [32] and the probabilistic meta-learning method (PML) [33].

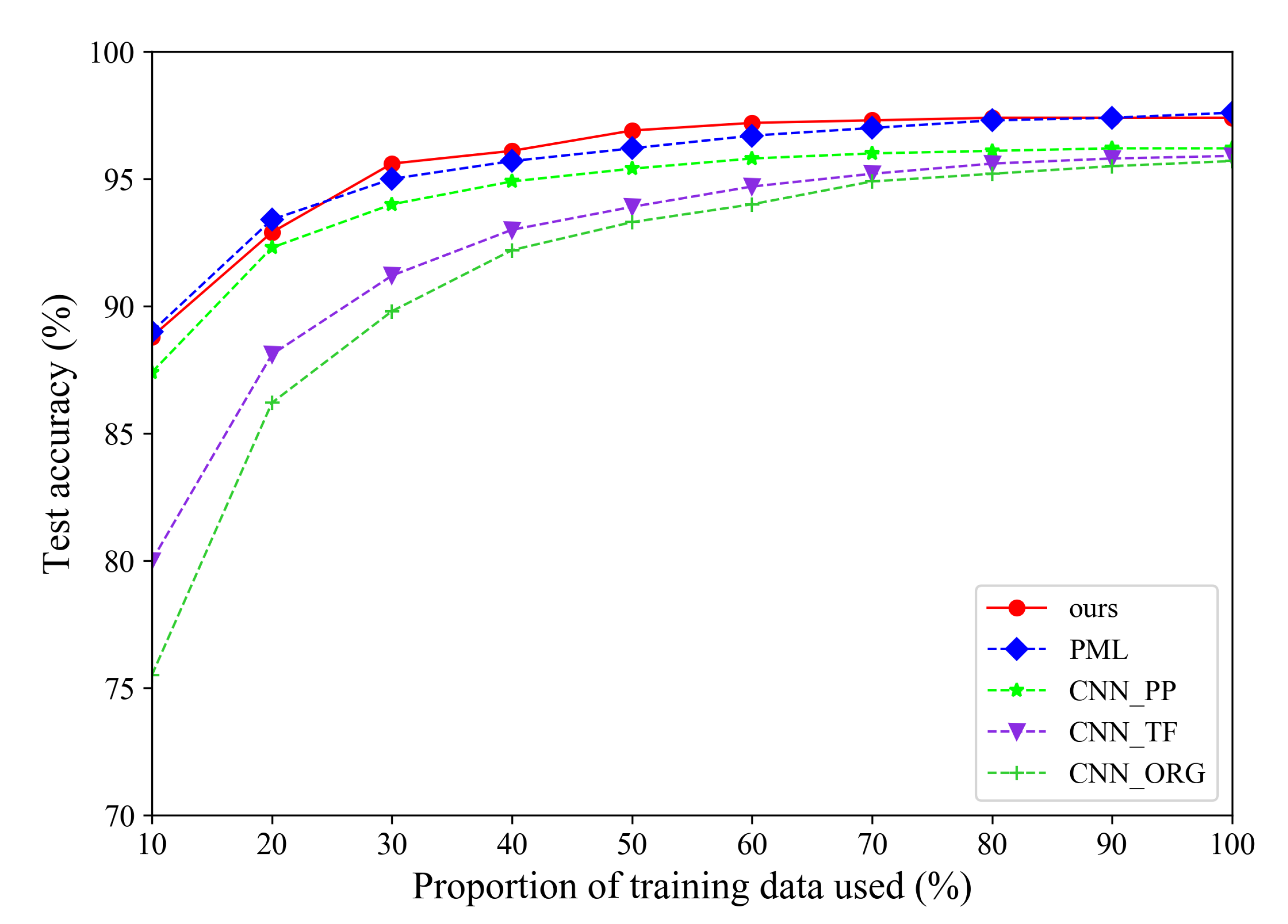

The experimental results are shown in Table 4. CNN_ORG yields the lowest classification accuracy of 75.5%, which shows that training the model with limited SAR data is challenging. Standard transfer learning significantly improves the classification accuracy by using the prior knowledge learned from the abundant simulated SAR images. CNN_PP, CDCT and PML achieve better classification results by relying on carefully designed pipelines and model frameworks. In contrast, our method is simple yet effective, achieving an accuracy of 88.8%, which is comparable to CNN_PP, CDCT and PML.

Besides, Figure 7 illustrates the detailed classification accuracies for different proportions of training data. As the proportion of training data increases, the classification accuracies of all methods increase. Our method achieves comparable or better performance across the different proportions of training data.

Classification results under depression variations. In the limited-data regime, the model is evaluated at different depression angles. During the pre-training stage, we train the model on the simulated SAR dataset. Then, the pre-trained parameters are used to initialize the feature extractor M. We select 3 target classes from the real SAR dataset to fine-tune and test the model. Table 5 shows the details of the training and test data on the real SAR dataset. The model is fine-tuned on 10% of the real SAR images at a 17° depression angle. At test time, the model is evaluated on images at 30° and 45° depression angles.

The experimental results are shown in Table 6. At the 30° depression angle, our model achieves the best classification accuracy of 91.0%. When the testing depression angle increases from 30° to 45°, our model still yields a competitive classification result.

In summary, the above experiments show that the proposed spectral regularization SSR is a feasible way to address the sample restriction problem in SAR target classification. Although SSR is simple, it performs well with limited training data under both SOC and depression variations.

3.4. SSR with Sufficient Training Data

In this section, we investigate how much improvement is brought by the proposed spectral regularization when fine-tuning the model with sufficient data.

Classification results under SOC. The comparison experiments are conducted under the standard operating condition (SOC). The whole pipeline is the same as above. We pre-train the model with the simulated SAR images and initialize the feature extractor M using the pre-trained parameters. Then the model is fine-tuned using all of the real SAR images at a 17° depression angle. We perform evaluation on the real SAR images at a 15° depression angle. The baseline methods are CNN_ORG, CNN_TF, CDCT, PML, KSR [38], TJSR [39], CDSPP [40], KRLDP [41], MCNN [42] and MFCNN [43]. CNN_ORG and CNN_TF are selected as the simplest baselines. KSR and TJSR are two sparse representation methods, and CDSPP and KRLDP are two discriminant projection methods. MCNN and MFCNN are based on convolutional neural networks (CNNs).

The experimental results are shown in Table 7. Our method achieves a classification result comparable to CDCT, MCNN and PML. With the proposed spectral regularization, the deep-learning-based feature extractor acquires better feature discriminability, providing a 1.5-point boost in classification accuracy.

Classification results under depression variations. In the sufficient-data regime, the model is evaluated at different depression angles. We pre-train the model on the simulated SAR dataset and use the pre-trained parameters as an initialization for the feature extractor M.

Three target classes from the real SAR dataset are selected to fine-tune and test the model. The details of the training and test data on the real SAR dataset are shown in Table 5. All of the real SAR images at a 17° depression angle are used to fine-tune the model. The real SAR images at 30° and 45° depression angles are used for evaluation.

The experimental results are shown in Table 8. Our model achieves the best classification accuracy of 98.9% at a 30° depression angle, a 4.9-point improvement over CNN_TF. At a 45° depression angle, the classification accuracies of all methods degrade dramatically. Notably, the classification accuracy of CNN_TF decreases when the proportion of training data increases from 10% to 100%. This is likely because standard transfer learning cannot work well when the training images are very different from the test images [32]. In contrast, our model yields a classification result comparable to TJSR and provides a 6.7-point improvement over CNN_TF.

In summary, the above experiments show that the proposed spectral regularization SSR can also promote the learning of the classification model with sufficient data. In the sufficient-data regime, combining CNN_TF with SSR significantly improves its performance under both SOC and depression variations.

3.5. SVD Analysis

In this section, we analyze the effectiveness of the proposed SSR and SSR†. With the pre-trained parameters as initialization, the model is fine-tuned on the real SAR images at a 17° depression angle. The max-normalized singular values at different epochs are then visualized.

Firstly, we visualize the batch-level singular values of the feature matrix

, which is produced from a batch of SAR image features. As shown in

Figure 8, the difference between the large and small singular values is very large in the first epoch. As training progresses, this difference gradually decreases. BSP clearly gives the small singular values more weight than CNN_TF without spectral regularization does. That is, the model with BSP has better feature discriminability. As a consequence, BSP provides a 2.3-point boost in classification accuracy over CNN_TF.
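The batch-level quantity discussed here can be computed as in the following minimal NumPy sketch. It assumes that the batch feature matrix stacks one feature vector per image as a row; the batch size, the feature dimension, the synthetic data and the function name `max_normalized_singular_values` are illustrative assumptions rather than values from the paper.

```python
import numpy as np

def max_normalized_singular_values(matrix):
    """Singular values of `matrix`, divided by the largest one."""
    # np.linalg.svd returns singular values sorted in descending order.
    s = np.linalg.svd(matrix, compute_uv=False)
    return s / s[0]

rng = np.random.default_rng(0)
# Hypothetical batch: 32 feature vectors of dimension 64.
batch_features = rng.standard_normal((32, 64))
# Add a strong direction shared by every sample so that one singular value
# dominates, mimicking the large gap described for the first epoch.
batch_features += 10.0 * np.outer(np.ones(32), rng.standard_normal(64))

spectrum = max_normalized_singular_values(batch_features)
```

After max-normalization the first entry is 1 by construction, so curves from different epochs share the same scale and only the decay of the remaining values differs.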

Secondly, we visualize the singular values of the sample-level feature matrix

f, which is generated by reshaping the extracted SAR image feature from

to

.

Figure 9 shows the max-normalized sample-level singular values at different epochs. Both SSR and SSR† strengthen the small singular values to improve the feature discriminability. SSR† achieves a significantly smaller difference between the large and small singular values than SSR. This is because SSR† is designed to reduce the difference between the large and small singular values directly. As a consequence, SSR† provides a 4.3-point boost in classification accuracy over CNN_TF.
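For comparison with the batch-level case, the sample-level spectra can be sketched as below. The (B, C, H, W) layout and the reshape of each feature map to a C × (H·W) matrix are assumptions made for illustration, since the exact dimensions are not reproduced in this text; `sample_level_spectra` is a hypothetical helper name.

```python
import numpy as np

def sample_level_spectra(feature_maps):
    """Max-normalized singular values of each sample's reshaped feature map.

    feature_maps: array of shape (B, C, H, W), an assumed layout.
    Returns an array of shape (B, min(C, H*W)).
    """
    b, c, h, w = feature_maps.shape
    f = feature_maps.reshape(b, c, h * w)       # one C x (H*W) matrix per sample
    s = np.linalg.svd(f, compute_uv=False)      # batched SVD, descending order
    return s / s[:, :1]                         # normalize each row by its maximum

rng = np.random.default_rng(0)
feature_maps = rng.standard_normal((8, 16, 4, 4))  # hypothetical B=8, C=16, H=W=4
spectra = sample_level_spectra(feature_maps)
```

Each row of `spectra` belongs to a single image, so a regularizer built on it acts on that image's feature alone, whereas a batch-level spectrum mixes all images in the batch.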

Although both BSP and SSR improve the feature discriminability by suppressing the largest singular values, the sample-level spectral regularization SSR performs better than the batch-level spectral regularization BSP. We attribute this difference to the level at which the regularization is applied. The sample-level regularization improves the feature discriminability of each SAR image feature individually, whereas the batch-level regularization acts on a batch of SAR image features, so the image features can affect one another. Therefore, applying spectral regularization at the sample level is more effective than applying it at the batch level (3.2% boost for SSR vs. 2.3% for BSP).

For the original transfer-learning method, the difference between the large and small singular values remains very large throughout training, and the large singular values are dominant. In contrast, for the proposed methods SSR and SSR†, the difference between the large and small singular values is reduced as training progresses, and the dominance of the large singular values is weakened; equivalently, the small singular values are strengthened. Strengthening the small singular values lets the model use more diverse discriminative information for classification and generalize well with limited training samples.
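To make the two ideas in this analysis concrete, the sketch below contrasts a penalty on the largest singular values with a penalty on the gap between the largest and smallest singular values. These are illustrative stand-ins only and are not the paper's definitions of BSP, SSR or SSR†, which are given elsewhere; the function names and the two example matrices are hypothetical.

```python
import numpy as np

def top_k_penalty(features, k=1):
    """Sum of squares of the k largest singular values (suppression-style)."""
    s = np.linalg.svd(features, compute_uv=False)
    return float(np.sum(s[:k] ** 2))

def gap_penalty(features):
    """Difference between the largest and the smallest singular value."""
    s = np.linalg.svd(features, compute_uv=False)
    return float(s[0] - s[-1])

# Two hypothetical 4 x 4 feature matrices with known singular values.
dominated = np.diag([10.0, 1.0, 0.5, 0.1])   # one direction dominates
balanced = np.diag([4.0, 3.5, 3.0, 2.5])     # energy spread across directions

penalties = {
    "dominated": (top_k_penalty(dominated), gap_penalty(dominated)),
    "balanced": (top_k_penalty(balanced), gap_penalty(balanced)),
}
```

Both penalties are larger for the dominated matrix than for the balanced one, so minimizing either alongside the classification loss would push the spectrum toward the flatter shape described above.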

In summary, our best spectral regularization, SSR†, directly reduces the difference between the large and small singular values at the sample level. Its effectiveness is supported by the above experiments. Applying it in both the pre-training and fine-tuning stages achieves better results. Besides, it can be plugged into any CNN-based SAR target classification model to achieve performance gains, regardless of whether the training data are sufficient.