Automated Canopy Delineation and Size Metrics Extraction for Strawberry Dry Weight Modeling Using Raster Analysis of High-Resolution Imagery

Abstract

1. Introduction

2. Study Site and Data Preparation

2.1. Study Site

2.2. Image Data Acquisition and Preprocessing

2.3. Information Derived from the Orthoimages

2.4. In-Situ Dry Biomass Measurements

3. Methodology

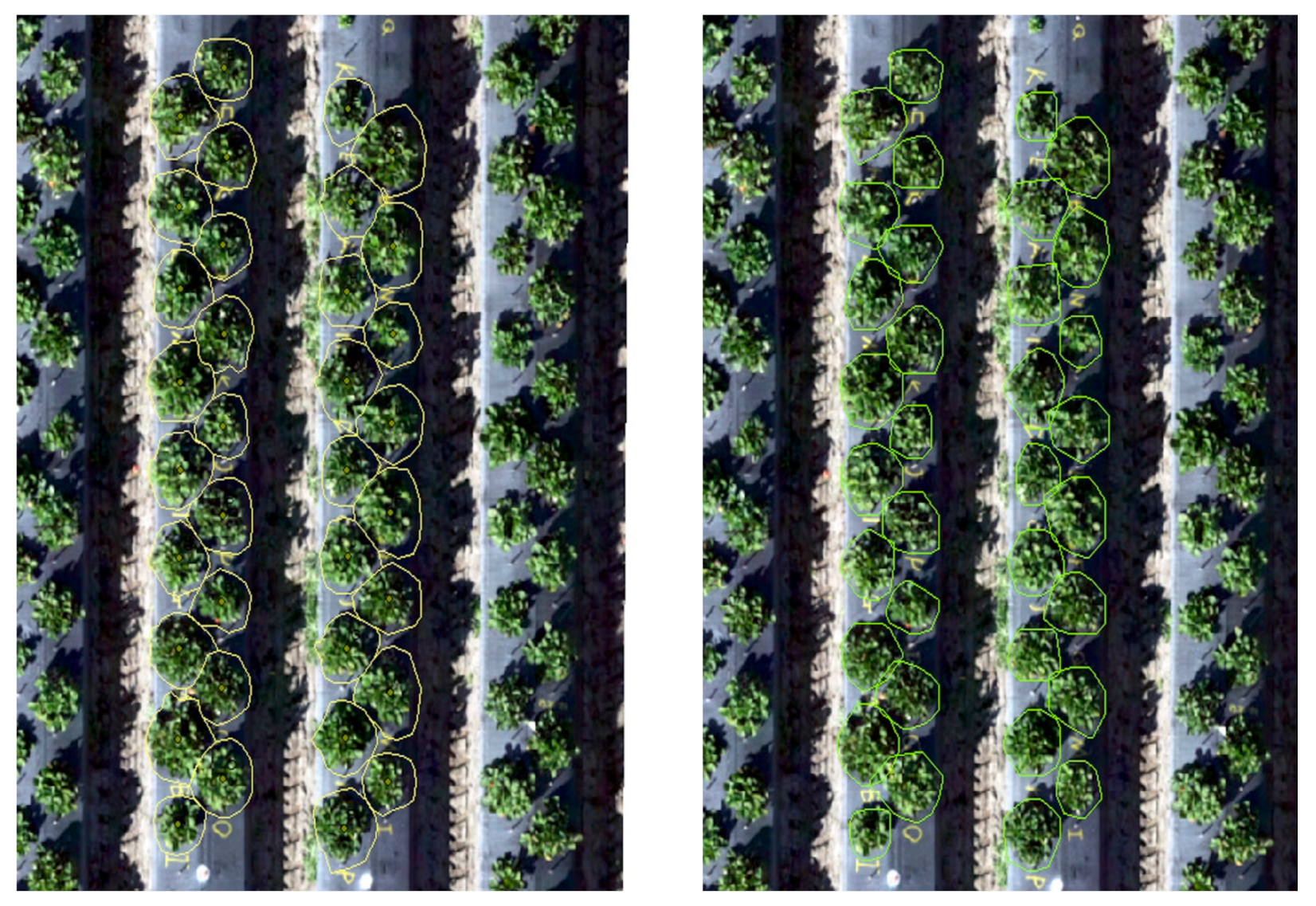

3.1. Canopy Delineation Workflow

- Model 1: The highest point within each canopy was located from approximate positions. A single approximate location per canopy was used to automatically find the canopy's highest point in the DSM; these points were needed by the watershed algorithm to delineate the canopy. The study area was also divided into 1 m square cells (a fishnet), and the 2% elevation quantile of the DSM within each cell was extracted, that is, the DSM elevation that 98% of the pixels in the cell exceed. This elevation was taken to represent the soil (between-bed) elevation within each cell. The 1 m cell size guaranteed that soil accounted for at least 2% of each cell in the strawberry field. The quantiles were computed with a Python script that the authors adapted from one shared in the ArcGIS user community (https://community.esri.com/thread/95403#555075) and embedded as a tool within Model 1 in ArcMap.

- Model 2: Vegetation pixels lower than the bed elevation, defined as the 2% quantile elevation of each cell plus the bed height (15 cm in this field), were filtered out. These pixels were excluded from the analysis because they represented either weeds growing from the soil or canopy leaves extending beyond the bed boundary. In both cases, our experiments showed that excluding vegetation areas meeting this criterion improved the analysis results.

- Model 4: A series of post-delineation operations enhanced the canopy boundaries: the raster output of the watershed algorithm was converted to a vector layer, the polygons representing canopy boundaries were generalized, island polygons were eliminated, and a minimum bounding convex hull was fitted to improve the canopy shape.
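The raster steps described in the models above can be illustrated with a minimal NumPy sketch. This is not the authors' ArcMap ModelBuilder workflow or their Python tool. It is a stand-in under stated assumptions: the DSM and the vegetation mask are 2-D arrays on the same grid, the fishnet cell size is given in pixels (`cell_px`), and the search window around each approximate location (`radius_px`) is a hypothetical parameter, since the text does not say how far from that location the highest point was searched. All function names are illustrative. The watershed step itself, raster-to-vector conversion, polygon generalization, and island removal are not shown; the convex hull is computed on plain point coordinates with Andrew's monotone chain rather than with an ArcMap tool.

```python
import numpy as np

def cell_soil_elevation(dsm, cell_px, q=2.0):
    """Per-cell q-th percentile of the DSM, written back onto the DSM grid."""
    rows, cols = dsm.shape
    soil = np.empty_like(dsm, dtype=float)
    for r0 in range(0, rows, cell_px):
        for c0 in range(0, cols, cell_px):
            block = dsm[r0:r0 + cell_px, c0:c0 + cell_px]
            soil[r0:r0 + cell_px, c0:c0 + cell_px] = np.percentile(block, q)
    return soil

def mask_below_bed(dsm, vegetation, soil, bed_height=0.15):
    """Keep vegetation pixels at or above soil elevation plus bed height (m)."""
    return vegetation & (dsm >= soil + bed_height)

def highest_point_near(dsm, valid, seed_rc, radius_px):
    """(row, col) of the highest valid pixel in a window around seed_rc.

    radius_px is an assumed search radius, not a value from the text.
    """
    r, c = seed_rc
    r0, r1 = max(r - radius_px, 0), min(r + radius_px + 1, dsm.shape[0])
    c0, c1 = max(c - radius_px, 0), min(c + radius_px + 1, dsm.shape[1])
    window = np.where(valid[r0:r1, c0:c1], dsm[r0:r1, c0:c1], -np.inf)
    dr, dc = np.unravel_index(np.argmax(window), window.shape)
    return int(r0 + dr), int(c0 + dc)

def convex_hull(points):
    """Convex hull of 2-D points (Andrew's monotone chain), counter-clockwise."""
    pts = sorted(set(map(tuple, points)))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]
```

In this sketch, `cell_px` would be the 1 m cell size divided by the DSM pixel size, and `bed_height=0.15` mirrors the 15 cm bed height reported for this field. The points returned by `highest_point_near` are the kind of per-canopy seed a watershed implementation would take as input.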

3.2. Canopy Size Metrics Extraction

3.3. Statistical Biomass Modeling

4. Results

4.1. Automated Workflow Overall Performance

4.2. Manual and Automated Canopy Metrics Comparison

4.3. Biomass Modeling Using Canopy Size Variables

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Genotype | Genotype Code | Min | Max | Mean | Median | Acquisition Date | Min | Max | Mean | Median |
|---|---|---|---|---|---|---|---|---|---|---|
| 11.71-9 | A | 1.4 | 47.0 | 21.1 | 21.7 | 11_16_2017 | 0.5 | 6.9 | 2.4 | 2.2 |
| 12.17-62 | B | 3.5 | 83.1 | 33.9 | 28.5 | 11_21_2017 | 1.0 | 4.9 | 2.8 | 2.8 |
| 12.93-4 | C | 0.5 | 46.8 | 21.1 | 21.7 | 11_30_2017 | 1.5 | 10.6 | 5.5 | 5.1 |
| 13.26-134 | D | 1.4 | 53.3 | 18.8 | 16.7 | 12_07_2017 | 2.5 | 12.7 | 6.4 | 5.9 |
| 13.42-5 | E | 1.1 | 41.8 | 19.1 | 19.4 | 12_14_2017 | 7.1 | 41.4 | 15.4 | 13.1 |
| 13.55-195 | F | 0.8 | 52.0 | 17.1 | 16.9 | 12_21_2017 | 8.4 | 47.0 | 18.3 | 15.7 |
| 14.55-48 | G | 4.0 | 104.9 | 34.0 | 35.1 | 12_27_2017 | 9.4 | 44.8 | 21.1 | 19.6 |
| 14.83-36 | H | 2.4 | 51.9 | 20.2 | 20.7 | 01_04_2018 | 13.8 | 38.9 | 26.1 | 26.3 |
| BEAUTY | I | 1.2 | 31.1 | 14.3 | 13.8 | 01_11_2018 | 12.5 | 59.0 | 30.7 | 28.8 |
| ELYANA | J | 1.5 | 48.6 | 21.4 | 20.1 | 01_18_2018 | 13.0 | 71.1 | 35.1 | 33.8 |
| FESTIVAL | K | 1.0 | 63.9 | 25.1 | 22.1 | 01_25_2018 | 8.8 | 65.7 | 32.4 | 30.7 |
| FL_10-89 | L | 2.0 | 92.5 | 31.8 | 31.1 | 02_01_2018 | 14.6 | 83.1 | 40.8 | 36.2 |
| FLORIDA127 | M | 3.2 | 83.8 | 30.2 | 29.4 | 02_08_2018 | 15.4 | 104.9 | 47.7 | 41.2 |
| FRONTERAS | N | 2.3 | 94.7 | 34.9 | 31.5 | 02_15_2018 | 15.4 | 116.9 | 40.2 | 38.6 |
| RADIANCE | O | 1.0 | 43.0 | 19.4 | 19.7 | 02_22_2018 | 13.4 | 76.3 | 37.7 | 33.8 |
| TREASURE | P | 0.9 | 116.9 | 38.2 | 42.0 | 02_27_2018 | 15.4 | 70.1 | 35.0 | 32.7 |
| WINTERSTAR | Q | 0.6 | 41.7 | 21.0 | 18.9 | | | | | |

| | Canopy Size Metric | Min | Max | Mean | Median |
|---|---|---|---|---|---|
| Automated Canopy Delineation | Area (m²) | 0.01 | 0.35 | 0.11 | 0.11 |
| | Average Height (m) | 0.02 | 0.24 | 0.08 | 0.08 |
| | Std Deviation of Height (m) | 0.02 | 0.13 | 0.06 | 0.06 |
| | Volume (m³) | 3 × 10⁻⁴ | 4.6 × 10⁻² | 9.3 × 10⁻³ | 8.5 × 10⁻³ |
| Visually Interpreted Canopies | Area (m²) | 0.04 | 0.37 | 0.13 | 0.13 |
| | Average Height (m) | 0.01 | 0.22 | 0.07 | 0.07 |
| | Std Deviation of Height (m) | 0.02 | 0.13 | 0.06 | 0.06 |
| | Volume (m³) | 3 × 10⁻⁴ | 4.6 × 10⁻² | 9.4 × 10⁻³ | 8.6 × 10⁻³ |

| Genotype | Genotype Code | RMSE (g), Automatically Delineated Canopy | R², Automatically Delineated Canopy | RMSE (g), Visually Interpreted Canopy | R², Visually Interpreted Canopy | Image Acquisition Date | RMSE (g), Automatically Delineated Canopy | R², Automatically Delineated Canopy | RMSE (g), Visually Interpreted Canopy | R², Visually Interpreted Canopy |
|---|---|---|---|---|---|---|---|---|---|---|
| 11.71-9 | A | 6.1 | 0.77 | 5.8 | 0.76 | 11_16_2017 | 7.8 | 0.76 | 8.2 | 0.75 |
| 12.17-62 | B | 14.9 | 0.78 | 13.9 | 0.77 | 11_21_2017 | 6.8 | 0.76 | 5.0 | 0.74 |
| 12.93-4 | C | 6.7 | 0.77 | 6.4 | 0.76 | 11_30_2017 | 6.8 | 0.77 | 7.7 | 0.76 |
| 13.26-134 | D | 7.9 | 0.78 | 8.5 | 0.77 | 12_07_2017 | 9.2 | 0.77 | 8.9 | 0.76 |
| 13.42-5 | E | 7.3 | 0.78 | 6.7 | 0.76 | 12_14_2017 | 6.9 | 0.78 | 6.9 | 0.77 |
| 13.55-195 | F | 9.2 | 0.78 | 9.5 | 0.77 | 12_21_2017 | 6.6 | 0.78 | 6.3 | 0.77 |
| 14.55-48 | G | 11.5 | 0.77 | 12.1 | 0.77 | 12_27_2017 | 7.1 | 0.78 | 7.1 | 0.77 |
| 14.83-36 | H | 8.7 | 0.78 | 8.9 | 0.77 | 01_04_2018 | 6.0 | 0.78 | 5.7 | 0.77 |
| BEAUTY | I | 4.8 | 0.77 | 5.3 | 0.76 | 01_11_2018 | 9.6 | 0.78 | 9.3 | 0.77 |
| ELYANA | J | 6.0 | 0.77 | 7.4 | 0.76 | 01_18_2018 | 8.1 | 0.77 | 8.4 | 0.76 |
| FESTIVAL | K | 8.1 | 0.77 | 8.2 | 0.76 | 01_25_2018 | 6.6 | 0.77 | 7.1 | 0.76 |
| FL_10-89 | L | 11.2 | 0.77 | 11.3 | 0.76 | 02_01_2018 | 11.4 | 0.77 | 12.0 | 0.76 |
| FLORIDA127 | M | 9.1 | 0.77 | 9.3 | 0.76 | 02_08_2018 | 21.0 | 0.81 | 22.1 | 0.80 |
| FRONTERAS | N | 14.0 | 0.78 | 14.8 | 0.77 | 02_15_2018 | 10.2 | 0.76 | 9.5 | 0.75 |
| RADIANCE | O | 8.5 | 0.78 | 8.5 | 0.77 | 02_22_2018 | 8.9 | 0.77 | 7.5 | 0.75 |
| TREASURE | P | 10.5 | 0.76 | 11.2 | 0.75 | 02_27_2018 | 9.8 | 0.78 | 11.7 | 0.77 |
| WINTERSTAR | Q | 6.6 | 0.77 | 6.4 | 0.76 | | | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Abd-Elrahman, A.; Guan, Z.; Dalid, C.; Whitaker, V.; Britt, K.; Wilkinson, B.; Gonzalez, A. Automated Canopy Delineation and Size Metrics Extraction for Strawberry Dry Weight Modeling Using Raster Analysis of High-Resolution Imagery. Remote Sens. 2020, 12, 3632. https://doi.org/10.3390/rs12213632
