Using Wavelet Transforms to Fuse Nighttime Light Data and POI Big Data to Extract Urban Built-Up Areas

Abstract

1. Introduction

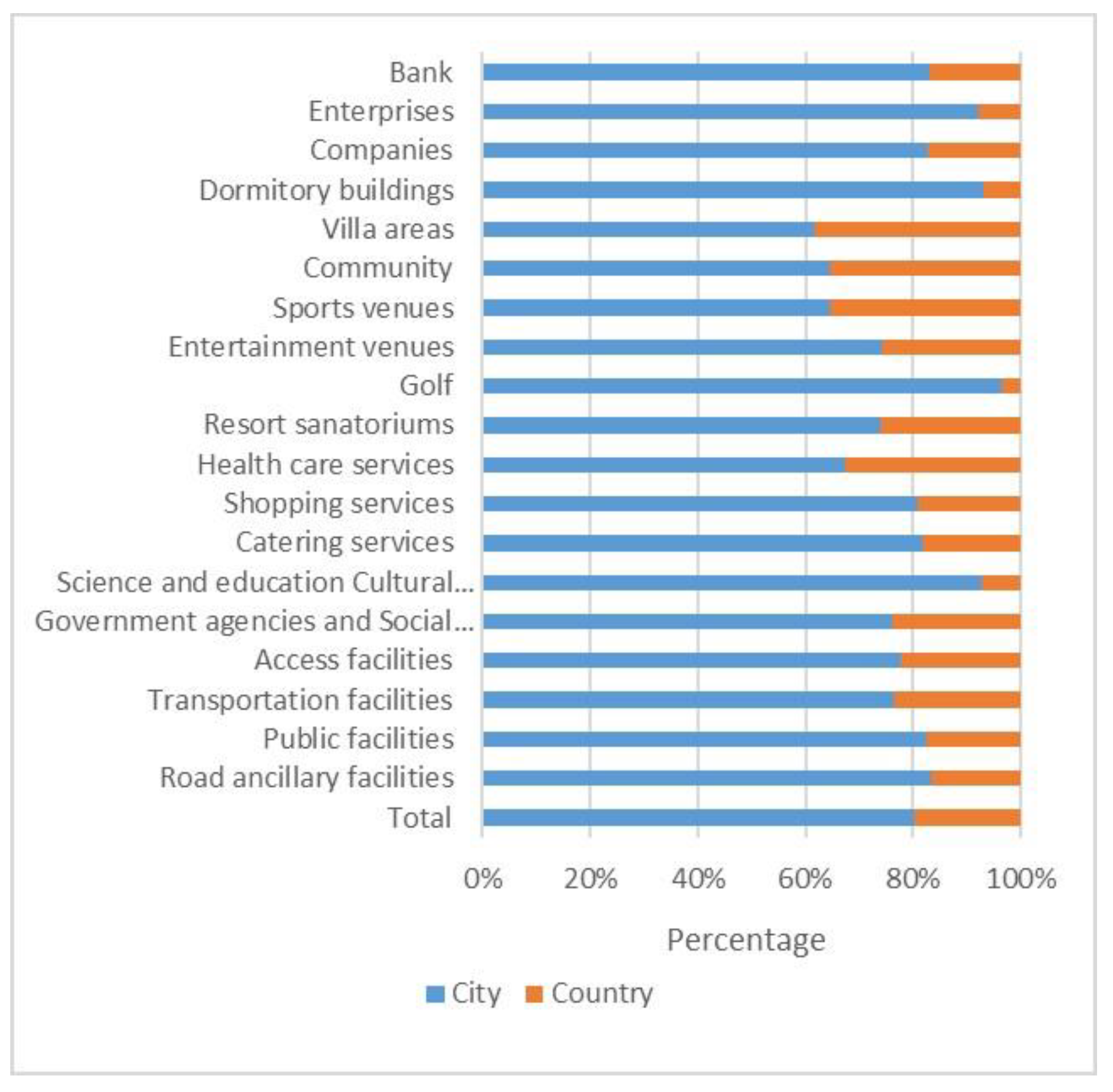

2. Materials and Methods

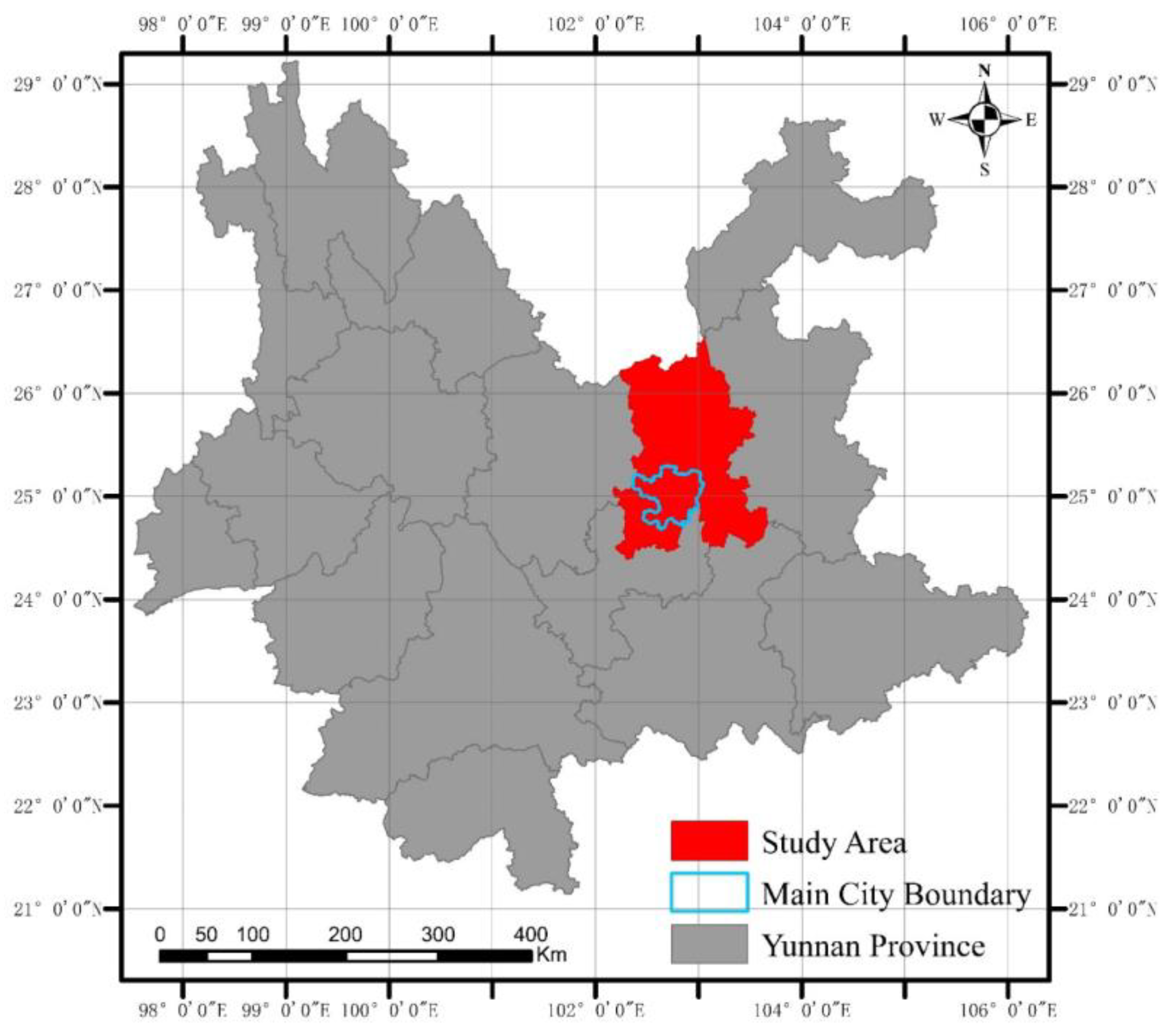

2.1. Study Area

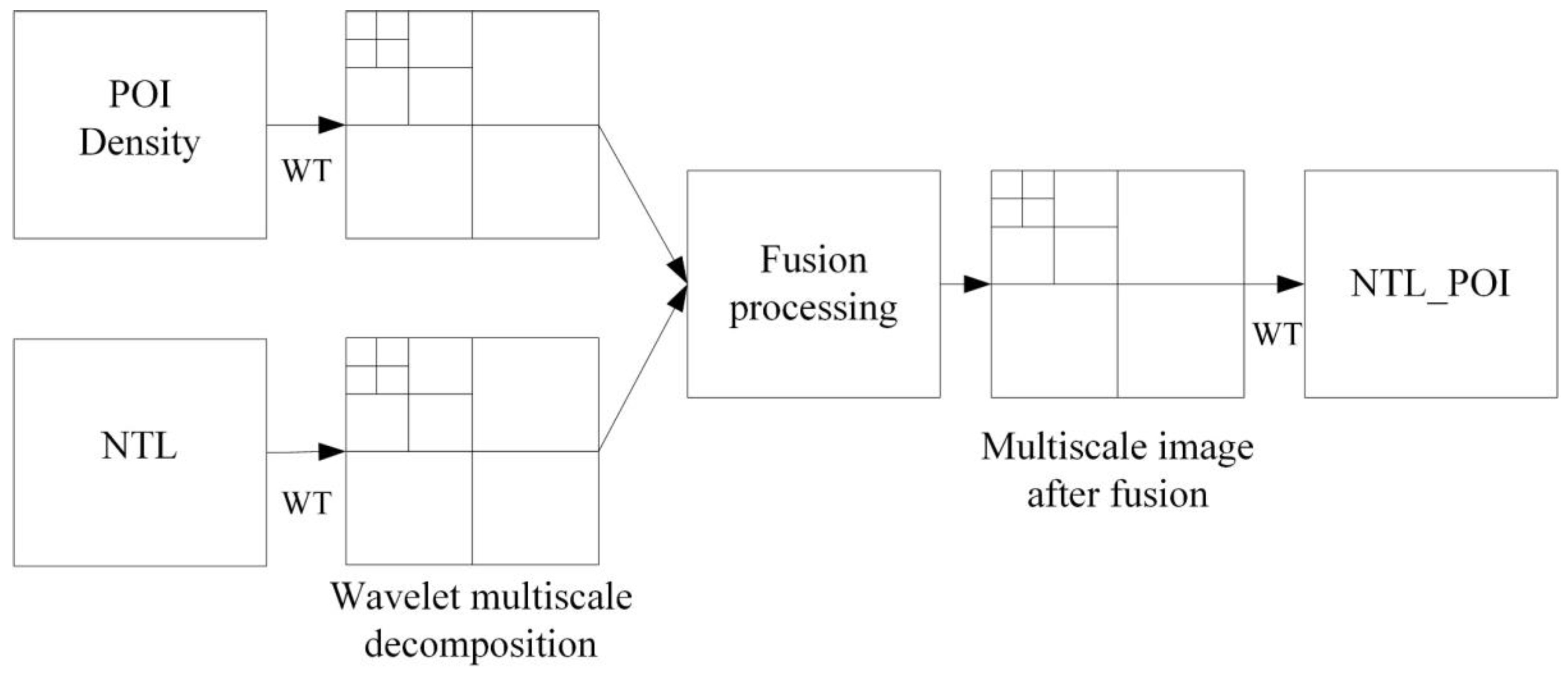

2.2. Methods

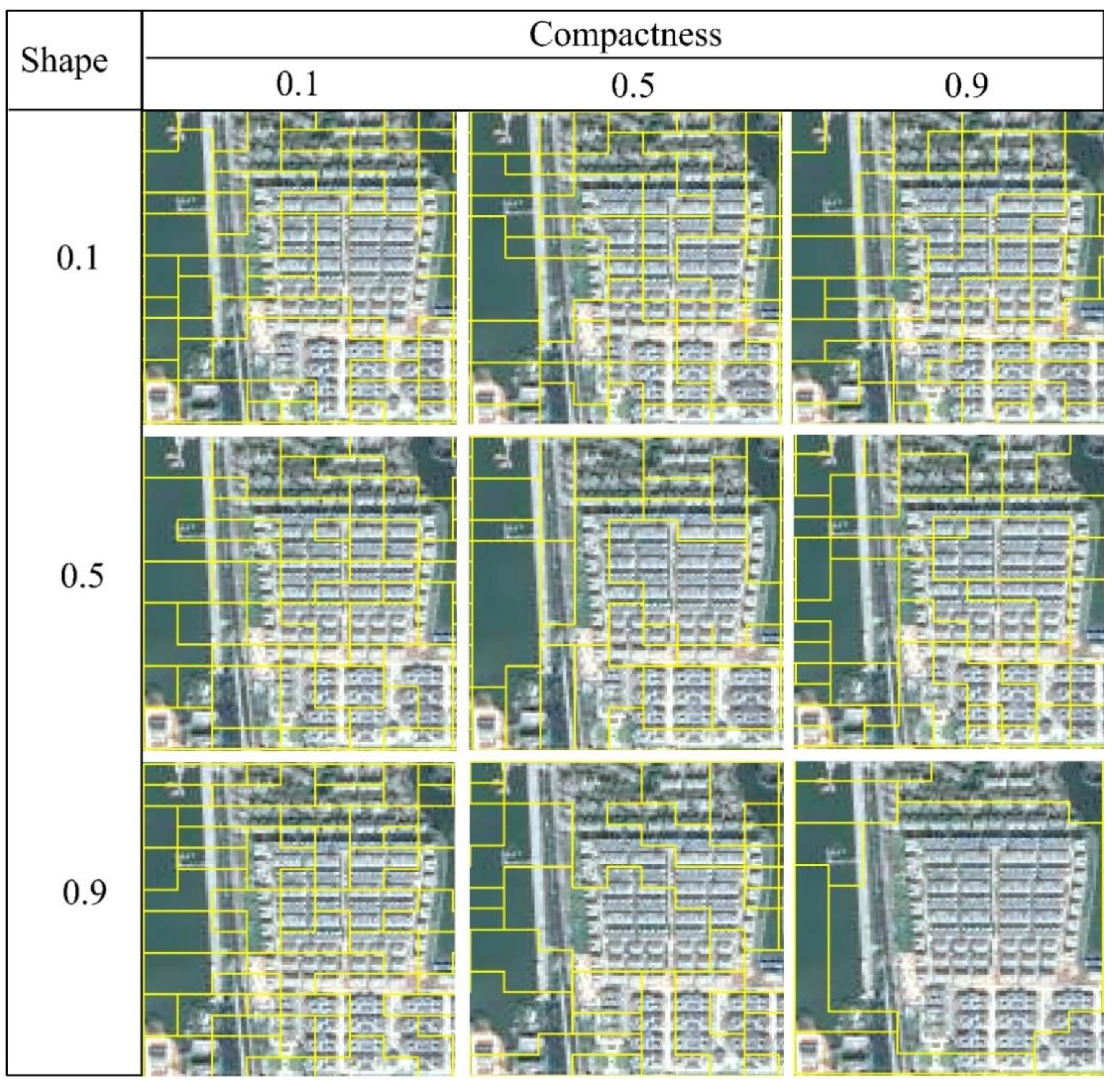

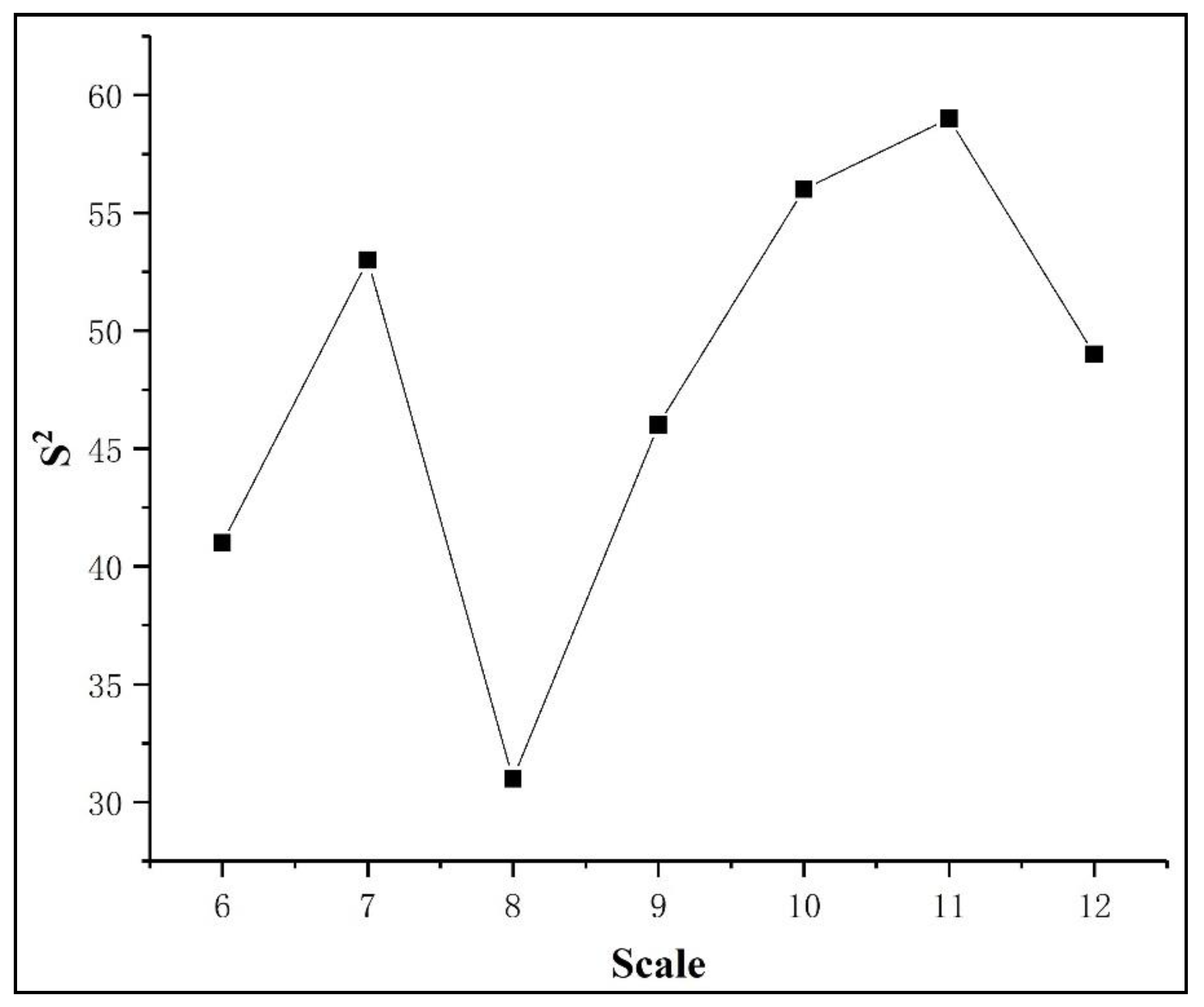

2.2.1. Multiresolution Segmentation

2.2.2. Wavelet Transform

2.3. Accuracy Assessment

3. Results

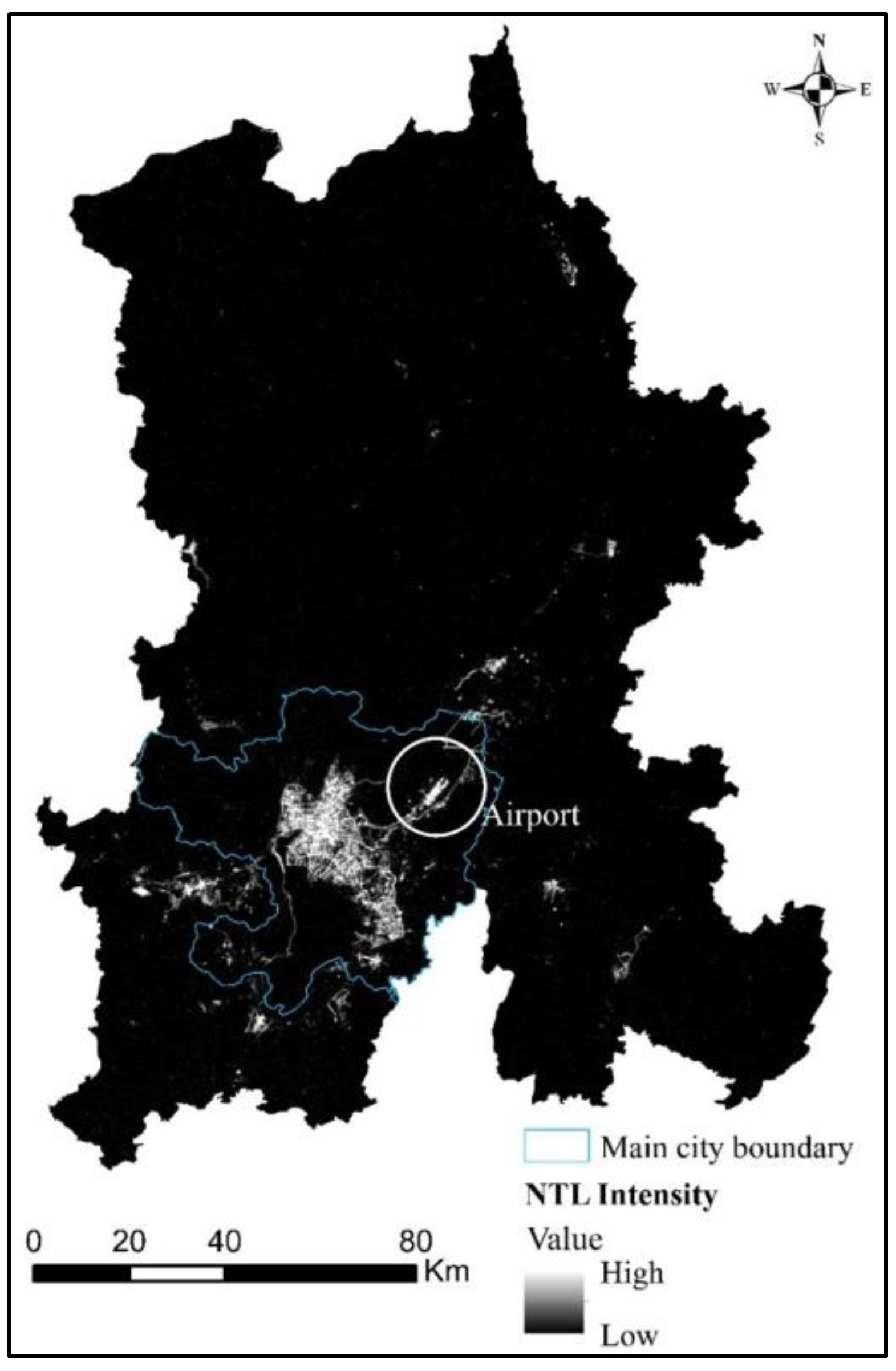

3.1. Built-Up Urban Areas Identified by Luojia1-01

3.1.1. Results of Multiresolution Segmentation

3.1.2. Results of Urban Built-Up Area Identified by NTL Data

3.2. Urban Built-Up Areas Identified by Data Fusion

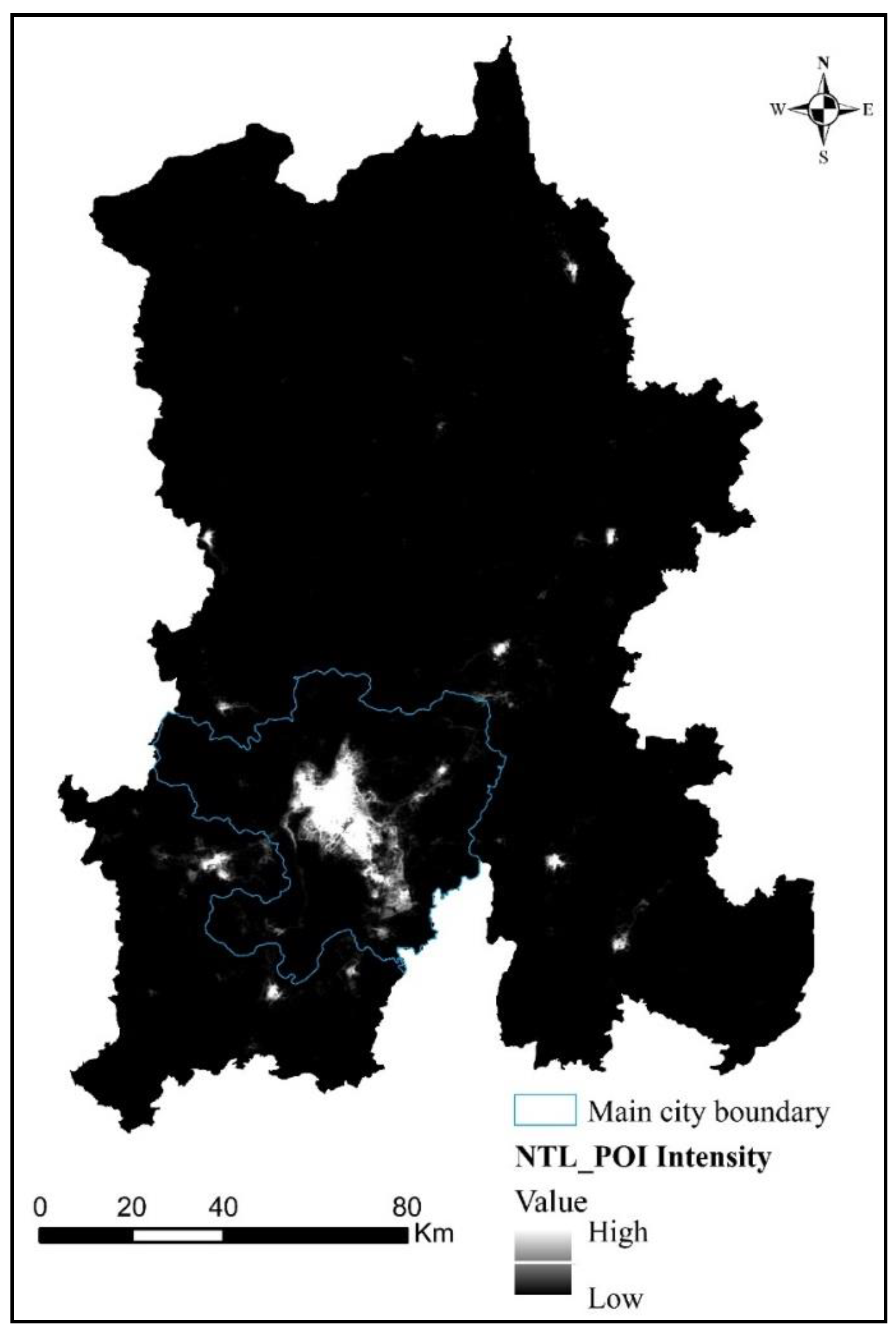

3.2.1. Fusion of POI and NTL Data

3.2.2. Urban Built-Up Areas Identified by POI_NTL

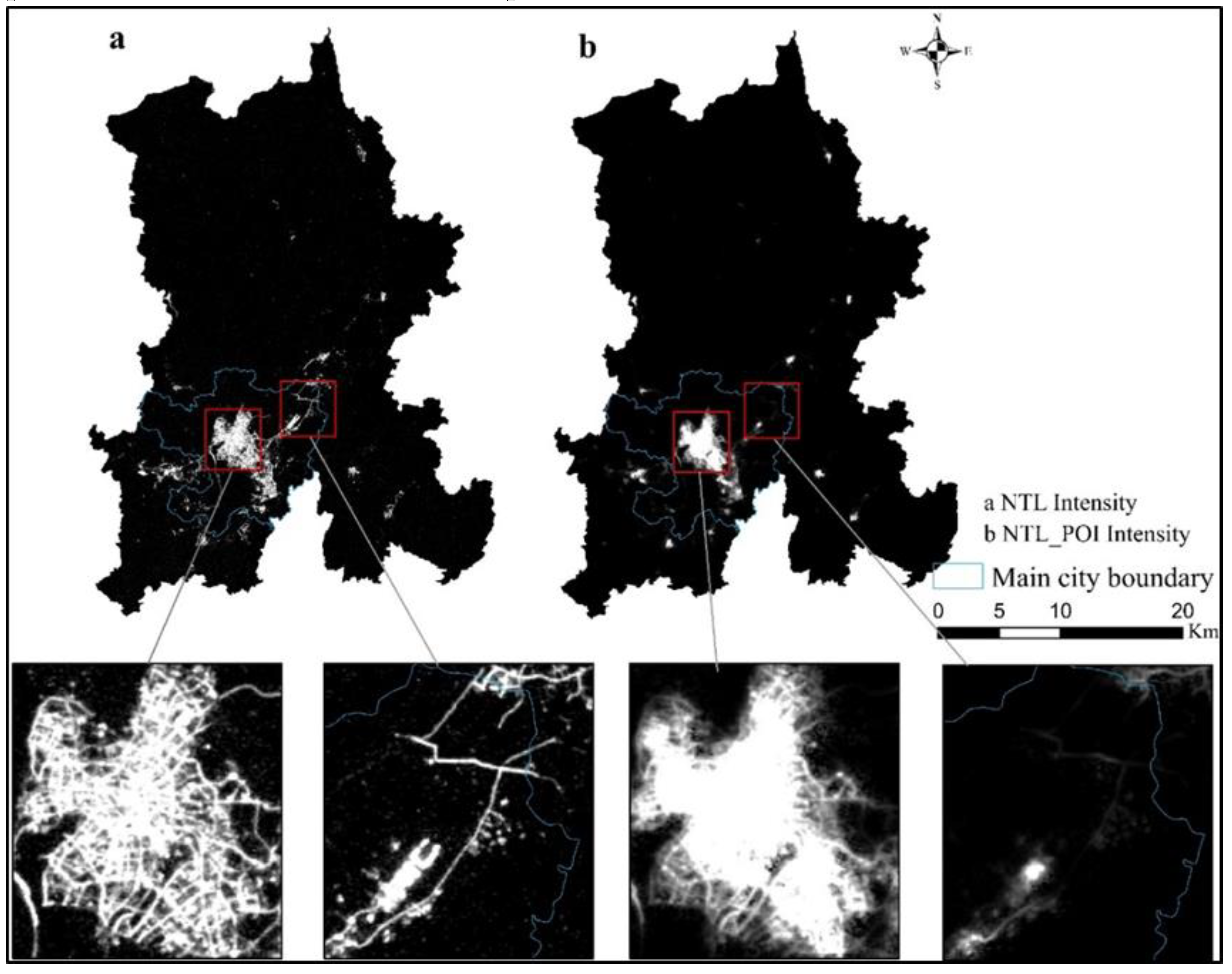

3.3. Comparison of NTL Data and NTL_POI Data after Fusion

3.3.1. NTL Data and Fused NTL_POI Data

3.3.2. Urban Built-Up Areas Identified by NTL and NTL_POI Data

4. Discussion

4.1. Advantages of Urban Built-Up Area Extracted by Wavelet Transform and Image Fusion

4.2. Limitations and Future Research Directions

5. Conclusions

- (1) NTL data can identify urban built-up areas at a macro scale. Although the identified built-up area reaches an area proportion of 84%, the verification accuracy is low (F1 score of 0.5478). As a result, the extracted urban built-up area does not coincide spatially with the actual urban built-up area, and the extraction results contain large errors.

- (2) When NTL data are fused with POI data through the wavelet transform to produce NTL_POI data, the identified urban built-up area reaches an area proportion of 96.27% and the verification accuracy improves markedly (F1 score of 0.8343). The extracted urban built-up area closely coincides with the reference built-up area in spatial location.
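To make the fusion step named in the conclusions more concrete, the following is a minimal sketch of wavelet-based fusion of two co-registered rasters. It is illustrative only and rests on several assumptions that are not taken from the text: a single-level Haar wavelet, a fusion rule that averages the approximation band and keeps the larger-magnitude detail coefficient, and two hypothetical 4 × 4 grids (`ntl`, `poi`) that have already been resampled to a common resolution and normalized to [0, 1]. The wavelet basis, decomposition level, and fusion rule used in the study may differ.

```python
def haar_dwt2(grid):
    """Single-level orthonormal 2D Haar transform of an even-sized grid."""
    ll, lh, hl, hh = [], [], [], []
    for r in range(0, len(grid), 2):
        rows = ([], [], [], [])
        for c in range(0, len(grid[0]), 2):
            a, b = grid[r][c], grid[r][c + 1]
            d, e = grid[r + 1][c], grid[r + 1][c + 1]
            rows[0].append((a + b + d + e) / 2)  # approximation (low-pass in both directions)
            rows[1].append((a + b - d - e) / 2)  # top row minus bottom row
            rows[2].append((a - b + d - e) / 2)  # left column minus right column
            rows[3].append((a - b - d + e) / 2)  # diagonal detail
        for band, row in zip((ll, lh, hl, hh), rows):
            band.append(row)
    return ll, lh, hl, hh


def haar_idwt2(ll, lh, hl, hh):
    """Inverse of haar_dwt2: rebuild the full-resolution grid from the four bands."""
    out = []
    for i in range(len(ll)):
        top, bottom = [], []
        for j in range(len(ll[0])):
            s, v, h, g = ll[i][j], lh[i][j], hl[i][j], hh[i][j]
            top += [(s + v + h + g) / 2, (s + v - h - g) / 2]
            bottom += [(s - v + h - g) / 2, (s - v - h + g) / 2]
        out += [top, bottom]
    return out


def fuse(img_a, img_b):
    """Fuse two grids in the wavelet domain (ASSUMED rule, not the paper's)."""
    bands_a, bands_b = haar_dwt2(img_a), haar_dwt2(img_b)
    # Approximation band: average the two inputs.
    ll = [[(x + y) / 2 for x, y in zip(ra, rb)]
          for ra, rb in zip(bands_a[0], bands_b[0])]
    # Detail bands: keep whichever coefficient has the larger magnitude.
    details = [
        [[x if abs(x) >= abs(y) else y for x, y in zip(ra, rb)]
         for ra, rb in zip(band_a, band_b)]
        for band_a, band_b in zip(bands_a[1:], bands_b[1:])
    ]
    return haar_idwt2(ll, *details)


# Hypothetical toy inputs: normalized NTL brightness and POI density.
ntl = [[0.9, 0.8, 0.1, 0.0],
       [0.8, 0.7, 0.1, 0.0],
       [0.2, 0.1, 0.0, 0.0],
       [0.1, 0.0, 0.0, 0.0]]
poi = [[0.7, 0.9, 0.3, 0.0],
       [0.6, 0.8, 0.2, 0.0],
       [0.1, 0.2, 0.0, 0.0],
       [0.0, 0.0, 0.0, 0.0]]

fused = fuse(ntl, poi)
for row in fused:
    print([round(v, 3) for v in row])
```

With real rasters, a wavelet library such as PyWavelets (`pywt.dwt2` and `pywt.idwt2`) would normally replace the hand-written transform; the pure-Python version is used here only to keep the sketch self-contained.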

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Data | Spatial Resolution | Data Sources | Acquisition Time |
|---|---|---|---|
| POI | 30 × 30 m | www.amap.com | March 2019 |
| Luojia-1A | 130 × 130 m | http://59.175.109.173:8888/index.html | October 2018 to March 2019 |
| Urban Built-up Areas | 30 × 30 m | http://www.dsac.cn/ | 2018 |
| Google Earth | 4.78 × 4.78 m | http://earth.google.com/ | March 2019 |

| Data | Area Proportion (%) | Recall Rate | Precision Rate | F1 Score |
|---|---|---|---|---|
| NTL | 84.00 | 0.577 | 0.5215 | 0.5478 |
| NTL_POI | 96.27 | 0.86 | 0.81 | 0.8343 |
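The F1 scores in the table above can be cross-checked from the reported recall and precision. The sketch below assumes the standard definition of F1 as the harmonic mean of precision and recall; the accuracy assessment section is not reproduced here, so this definition is an assumption rather than a quotation of the authors' formula. The helper name `f1_score` and the dictionary layout are illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (standard F1 definition)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (recall, precision, reported F1) copied from the NTL and NTL_POI rows of the table.
reported = {
    "NTL": (0.577, 0.5215, 0.5478),
    "NTL_POI": (0.86, 0.81, 0.8343),
}

# Recompute F1 for each data set from its recall and precision.
recomputed = {name: f1_score(p, r) for name, (r, p, _) in reported.items()}

for name, value in recomputed.items():
    print(f"{name}: recomputed F1 = {value:.4f}, reported F1 = {reported[name][2]}")
```

Under this definition, the recomputed values agree with the reported F1 scores to within rounding, which suggests the table is internally consistent.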

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


He, X.; Zhou, C.; Zhang, J.; Yuan, X. Using Wavelet Transforms to Fuse Nighttime Light Data and POI Big Data to Extract Urban Built-Up Areas. Remote Sens. 2020, 12, 3887. https://doi.org/10.3390/rs12233887
