A Remote Sensing Method to Monitor Water, Aquatic Vegetation, and Invasive Water Hyacinth at National Extents

Abstract

1. Introduction

2. Materials and Methods

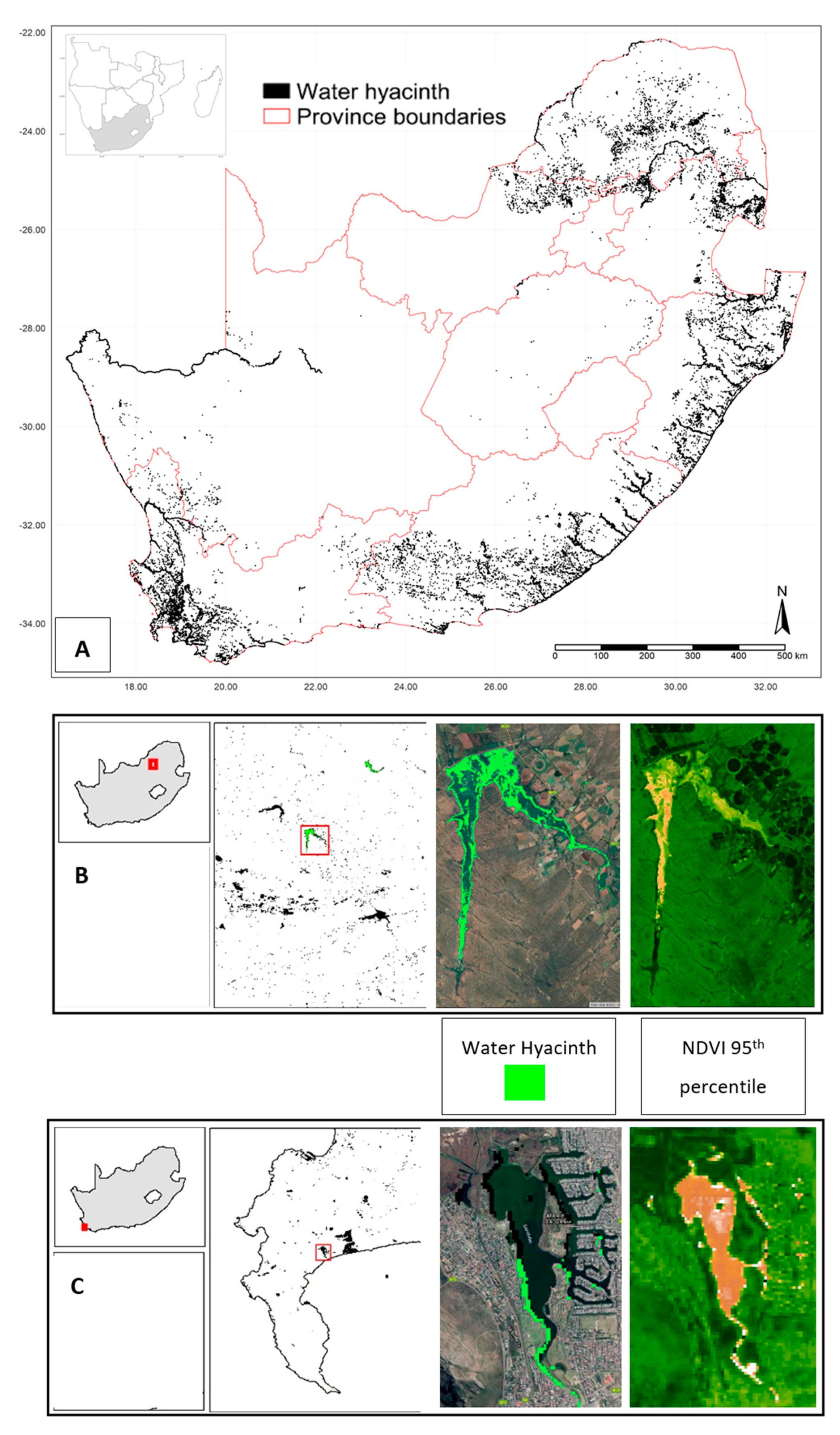

2.1. Study Area and Study Species

2.2. The Mapping Process

2.2.1. Stage 1: Surface Water Detection

2.2.2. Stage 2: Aquatic Vegetation Detection

2.2.2.1. Stage 2: Semantic Segmentation—An Alternative Aquatic Vegetation Detection Method to Otsu + Canny

2.2.2.2. Pre-Processing

2.2.3. Stage 3: Species Discrimination

3. Results

3.1. Evaluation of Surface Water Detection

3.2. Evaluation of Aquatic Vegetation Detection

3.3. Evaluation of Aquatic Vegetation Discrimination

4. Discussion

4.1. Stage 1—Surface Water Detection

4.2. Stage 2—Aquatic Vegetation Detection

4.3. Stage 3—Species Discrimination

4.4. Management Tools

4.5. User Guidelines, Caveats, and Limitations

5. Recommendations and Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Strayer, D.L.; Dudgeon, D. Freshwater Biodiversity Conservation: Recent Progress and Future Challenges. J. N. Am. Benthol. Soc. 2010, 29, 344–358.
2. Ricciardi, A.; MacIsaac, H.J. Impacts of Biological Invasions on Freshwater Ecosystems. In Fifty Years of Invasion Ecology: The Legacy of Charles Elton; Wiley: Hoboken, NJ, USA, 2011; pp. 211–224.
3. Rejmánek, M.; Pitcairn, M.J. When is eradication of exotic pest plants a realistic goal. In Turning the Tide: The Eradication of Invasive Species: Proceedings of the International Conference on Eradication of Island Invasives; Veitch, C.R., Clout, M.N., Eds.; IUCN: Cambridge, UK, 2002; pp. 249–253.
4. Vila, M.; Ibáñez, I. Plant Invasions in the Landscape. Landsc. Ecol. 2011, 26, 461–472.
5. Nielsen, C.; Ravn, H.P.; Nentwig, W.; Wade, M. (Eds.) The Giant Hogweed Best Practice Manual. Guidelines for the Management and Control of an Invasive Weed in Europe; Forest and Landscape Denmark: Hørsholm, Denmark, 2005; pp. 1–44. ISBN 87-7903-209-5.
6. Pyšek, P.; Hulme, P.E. Spatio-Temporal Dynamics of Plant Invasions: Linking Pattern to Process. Ecoscience 2005, 12, 302–315.
7. Wittenberg, R.; Cock, M.J.W. Best Practices for the Prevention and Management of Invasive Alien Species. In Invasive Alien Species: A New Synthesis; Island Press: Washington, DC, USA, 2005; pp. 209–232.
8. Richardson, D.M.; Foxcroft, L.C.; Latombe, G.; Le Maitre, D.C.; Rouget, M.; Wilson, J.R. The Biogeography of South African Terrestrial Plant Invasions. In Biological Invasions in South Africa; Springer: Berlin/Heidelberg, Germany, 2020; pp. 67–96.
9. Wallace, R.D.; Bargeron, C.T.; Ziska, L.; Dukes, J. Identifying Invasive Species in Real Time: Early Detection and Distribution Mapping System (EDDMapS) and Other Mapping Tools. Invasive Species Glob. Clim. Chang. 2014, 4, 219.
10. Li, J.; Roy, D.P. A Global Analysis of Sentinel-2A, Sentinel-2B and Landsat-8 Data Revisit Intervals and Implications for Terrestrial Monitoring. Remote Sens. 2017, 9, 902.
11. Cohen, W.B.; Goward, S.N. Landsat’s Role in Ecological Applications of Remote Sensing. Bioscience 2004, 54, 535–545.
12. Gorelick, N.; Hancher, M.; Dixon, M.; Ilyushchenko, S.; Thau, D.; Moore, R. Google Earth Engine: Planetary-Scale Geospatial Analysis for Everyone. Remote Sens. Environ. 2017, 202, 18–27.
13. Phan, T.N.; Kuch, V.; Lehnert, L.W. Land Cover Classification Using Google Earth Engine and Random Forest Classifier—The Role of Image Composition. Remote Sens. 2020, 12, 2411.
14. Persello, C.; Stein, A. Deep Fully Convolutional Networks for the Detection of Informal Settlements in VHR Images. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2325–2329.
15. Wolter, P.T.; Mladenoff, D.J.; Host, G.E.; Crow, T.R. Using Multi-Temporal Landsat Imagery. Photogramm. Eng. Remote Sens. 1995, 61, 1129–1143.
16. Byrne, M.; Hill, M.; Robertson, M.; King, A.; Katembo, N.; Wilson, J.; Brudvig, R.; Fisher, J.; Jadhav, A. Integrated Management of Water Hyacinth in South Africa; WRC Report No. TT 454/10; Water Research Commission: Pretoria, South Africa, 2010; 285p.
17. Estes, L.D.; Okin, G.S.; Mwangi, A.G.; Shugart, H.H. Habitat Selection by a Rare Forest Antelope: A Multi-Scale Approach Combining Field Data and Imagery from Three Sensors. Remote Sens. Environ. 2008, 112, 2033–2050.
18. Bradley, B.A.; Mustard, J.F. Identifying Land Cover Variability Distinct from Land Cover Change: Cheatgrass in the Great Basin. Remote Sens. Environ. 2005, 94, 204–213.
19. Mukarugwiro, J.A.; Newete, S.W.; Adam, E.; Nsanganwimana, F.; Abutaleb, K.A.; Byrne, M.J. Mapping Distribution of Water Hyacinth (Eichhornia Crassipes) in Rwanda Using Multispectral Remote Sensing Imagery. Afr. J. Aquat. Sci. 2019, 44, 339–348.
20. Dube, T.; Mutanga, O.; Sibanda, M.; Bangamwabo, V.; Shoko, C. Testing the Detection and Discrimination Potential of the New Landsat 8 Satellite Data on the Challenging Water Hyacinth (Eichhornia Crassipes) in Freshwater Ecosystems. Appl. Geogr. 2017, 84, 11–22.
21. Thamaga, K.H.; Dube, T. Testing Two Methods for Mapping Water Hyacinth (Eichhornia Crassipes) in the Greater Letaba River System, South Africa: Discrimination and Mapping Potential of the Polar-Orbiting Sentinel-2 MSI and Landsat 8 OLI Sensors. Int. J. Remote Sens. 2018, 39, 8041–8059.
22. Thamaga, K.H.; Dube, T. Understanding Seasonal Dynamics of Invasive Water Hyacinth (Eichhornia Crassipes) in the Greater Letaba River System Using Sentinel-2 Satellite Data. GIScience Remote Sens. 2019, 56, 1355–1377.
23. Ingole, N.A.; Nain, A.S.; Kumar, P.; Chalal, R. Monitoring and Mapping Invasive Aquatic Weed Salvinia Molesta Using Multispectral Remote Sensing Technique in Tumaria Wetland of Uttarakhand, India. J. Indian Soc. Remote Sens. 2018, 46, 863–871.
24. Hill, M.P.; Coetzee, J.A.; Martin, G.D.; Smith, R.; Strange, E.F. Invasive Alien Aquatic Plants in South African Freshwater Ecosystems. In Biological Invasions in South Africa; Springer: Berlin/Heidelberg, Germany, 2020; pp. 97–114.
25. Coetzee, J.A.; Hill, M.P.; Byrne, M.J.; Bownes, A. A Review of the Biological Control Programmes on Eichhornia Crassipes (C. Mart.) Solms (Pontederiaceae), Salvinia Molesta DS Mitch. (Salviniaceae), Pistia Stratiotes L. (Araceae), Myriophyllum Aquaticum (Vell.) Verdc. (Haloragaceae) and Azolla Filiculoides L. Afr. Entomol. 2011, 19, 451–468.
26. Jones, R.W.; Cilliers, C.J. Integrated Control of Water Hyacinth on the Nseleni/Mposa Rivers and Lake Nsezi in KwaZulu-Natal, South Africa. In Biological and Integrated Control of Water Hyacinth, Eichhornia crassipes. ACIAR Proceedings; Julian, M.H., Hill, M.P., Center, T.D., Ding, J., Eds.; Australian Centre for International Agricultural Research: Canberra, Australia, 2001; Volume 102, pp. 123–129.
27. Manfreda, S.; McCabe, M.F.; Miller, P.E.; Lucas, R.; Pajuelo Madrigal, V.; Mallinis, G.; Ben Dor, E.; Helman, D.; Estes, L.; Ciraolo, G. On the Use of Unmanned Aerial Systems for Environmental Monitoring. Remote Sens. 2018, 10, 641.
28. Dvořák, P.; Müllerová, J.; Bartaloš, T.; Brůna, J. Unmanned aerial vehicles for alien plant species detection and monitoring. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 903–908.
29. Müllerová, J.; Pergl, J.; Pyšek, P. Remote Sensing as a Tool for Monitoring Plant Invasions: Testing the Effects of Data Resolution and Image Classification Approach on the Detection of a Model Plant Species Heracleum Mantegazzianum (Giant Hogweed). Int. J. Appl. Earth Obs. Geoinf. 2013, 25, 55–65.
30. Joshi, C.; de Leeuw, J.; van Duren, I.C. Remote Sensing and GIS Applications for Mapping and Spatial Modeling of Invasive Species. In Proceedings of the XXth ISPRS Congress, Istanbul, Turkey, 12–23 July 2004; pp. 669–677.
31. Thamaga, K.H.; Dube, T. Remote Sensing of Invasive Water Hyacinth (Eichhornia Crassipes): A Review on Applications and Challenges. Remote Sens. Appl. Soc. Environ. 2018, 10, 36–46.
32. Vaz, A.S.; Alcaraz-Segura, D.; Campos, J.C.; Vicente, J.R.; Honrado, J.P. Managing Plant Invasions through the Lens of Remote Sensing: A Review of Progress and the Way Forward. Sci. Total Environ. 2018, 642, 1328–1339.
33. Rahel, F.J. Homogenization of Freshwater Faunas. Annu. Rev. Ecol. Syst. 2002, 33, 291–315.
34. Sala, O.E.; Chapin, F.S.; Armesto, J.J.; Berlow, E.; Bloomfield, J.; Dirzo, R.; Huber-Sanwald, E.; Huenneke, L.F.; Jackson, R.B.; Kinzig, A. Global Biodiversity Scenarios for the Year 2100. Science 2000, 287, 1770–1774.
35. Dube, T.; Mutanga, O.; Sibanda, M.; Bangamwabo, V.; Shoko, C. Evaluating the Performance of the Newly-Launched Landsat 8 Sensor in Detecting and Mapping the Spatial Configuration of Water Hyacinth (Eichhornia Crassipes) in Inland Lakes, Zimbabwe. Phys. Chem. Earth Parts A/B/C 2017, 100, 101–111.
36. Cheruiyot, E.; Menenti, M.; Gerte, B.; Koenders, R. Accuracy and Precision of Algorithms to Determine the Extent of Aquatic Plants: Empirical Scaling of Spectral Indices vs. Spectral Unmixing. ESASP 2013, 722, 85.
37. Truong, T.T.A.; Hardy, G.E.S.J.; Andrew, M.E. Contemporary Remotely Sensed Data Products Refine Invasive Plants Risk Mapping in Data Poor Regions. Front. Plant Sci. 2017, 8, 770.
38. Agutu, P.O.; Gachari, M.K.; Mundia, C.N. An Assessment of the Role of Water Hyacinth in the Water Level Changes of Lake Naivasha Using GIS and Remote Sensing. Am. J. Remote Sens. 2018, 6, 74–88.
39. Zhang, Y.; Jeppesen, E.; Liu, X.; Qin, B.; Shi, K.; Zhou, Y.; Thomaz, S.M.; Deng, J. Global Loss of Aquatic Vegetation in Lakes. Earth Sci. Rev. 2017, 173, 259–265.
40. Hill, M.P.; Coetzee, J. The Biological Control of Aquatic Weeds in South Africa: Current Status and Future Challenges. Bothalia Afr. Biodivers. Conserv. 2017, 47, 1–12.
41. Xu, H. Modification of Normalised Difference Water Index (NDWI) to Enhance Open Water Features in Remotely Sensed Imagery. Int. J. Remote Sens. 2006, 27, 3025–3033.
42. Henderson, L. Alien Weeds and Invasive Plants: A Complete Guide to Declared Weeds and Invaders in South Africa; Plant Protection Research Institute Handbook No. 12; Agricultural Research Council: Pretoria, South Africa, 2001.
43. Coetzee, J.; Mostert, E. (Rhodes University, Centre for Biological Control). GPS Localities for Invasive Aquatic Alien Plants (IAAPs) as a Google Earth Engine Feature Collection. Available online: https://code.earthengine.google.com/?asset=users/geethensingh/IAAP_localities (accessed on 13 June 2019).
44. Van Wilgen, B.W.; Richardson, D.M.; Le Maitre, D.C.; Marais, C.; Magadlela, D. The Economic Consequences of Alien Plant Invasions: Examples of Impacts and Approaches to Sustainable Management in South Africa. Environ. Dev. Sustain. 2001, 3, 145–168.
45. Henderson, L.; Cilliers, C.J. Invasive Aquatic Plants: A Guide to the Identification of the Most Important and Potentially Dangerous Invasive Aquatic and Wetland Plants in South Africa; Also Featuring the Biological Control of the Five Worst Aquatic Weeds; ARC-Plant Protection Research Inst.: Pretoria, South Africa, 2002.
46. Richardson, D.M.; Van Wilgen, B.W. Invasive Alien Plants in South Africa: How Well Do We Understand the Ecological Impacts?: Working for Water. S. Afr. J. Sci. 2004, 100, 45–52.
47. Tucker, C.J. Red and Photographic Infrared Linear Combinations for Monitoring Vegetation. Remote Sens. Environ. 1979, 8, 127–150.
48. Xiao, X.; Boles, S.; Frolking, S.; Salas, W.; Moore, B., III; Li, C.; He, L.; Zhao, R. Observation of Flooding and Rice Transplanting of Paddy Rice Fields at the Site to Landscape Scales in China Using VEGETATION Sensor Data. Int. J. Remote Sens. 2002, 23, 3009–3022.
49. Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel Algorithms for Remote Estimation of Vegetation Fraction. Remote Sens. Environ. 2002, 80, 76–87.
50. Villa, P.; Laini, A.; Bresciani, M.; Bolpagni, R. A Remote Sensing Approach to Monitor the Conservation Status of Lacustrine Phragmites Australis Beds. Wetl. Ecol. Manag. 2013, 21, 399–416.
51. Donchyts, G.; Baart, F.; Winsemius, H.; Gorelick, N.; Kwadijk, J.; Van De Giesen, N. Earth’s Surface Water Change over the Past 30 Years. Nat. Clim. Chang. 2016, 6, 810–813.
52. Yang, X.; Qin, Q.; Yésou, H.; Ledauphin, T.; Koehl, M.; Grussenmeyer, P.; Zhu, Z. Monthly Estimation of the Surface Water Extent in France at a 10-m Resolution Using Sentinel-2 Data. Remote Sens. Environ. 2020, 244, 111803.
53. Pekel, J.-F.; Cottam, A.; Gorelick, N.; Belward, A.S. High-Resolution Mapping of Global Surface Water and Its Long-Term Changes. Nature 2016, 540, 418–422.
54. GEOTERRAIMAGE. SANLC. Accuracy Assessment Points. 2018. Available online: https://egis.environment.gov.za/data_egis/data_download/current (accessed on 28 March 2020).
55. Otsu, N. A Threshold Selection Method from Gray-Level Histograms. IEEE Trans. Syst. Man. Cybern. 1979, 9, 62–66.
56. Ibtehaz, N.; Rahman, M.S. MultiResUNet: Rethinking the U-Net Architecture for Multimodal Biomedical Image Segmentation. Neural Netw. 2020, 121, 74–87.
57. Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; Van Der Laak, J.A.; Van Ginneken, B.; Sánchez, C.I. A Survey on Deep Learning in Medical Image Analysis. Med. Image Anal. 2017, 42, 60–88.
58. Chicco, D.; Jurman, G. The Advantages of the Matthews Correlation Coefficient (MCC) over F1 Score and Accuracy in Binary Classification Evaluation. BMC Genom. 2020, 21, 6.
59. Qiao, C.; Luo, J.; Sheng, Y.; Shen, Z.; Zhu, Z.; Ming, D. An Adaptive Water Extraction Method from Remote Sensing Image Based on NDWI. J. Indian Soc. Remote Sens. 2012, 40, 421–433.
60. Acharya, T.D.; Lee, D.H.; Yang, I.T.; Lee, J.K. Identification of Water Bodies in a Landsat 8 OLI Image Using a J48 Decision Tree. Sensors 2016, 16, 1075.
61. Wang, Z.; Liu, J.; Li, J.; Zhang, D.D. Multi-Spectral Water Index (MuWI): A Native 10-m Multi-Spectral Water Index for Accurate Water Mapping on Sentinel-2. Remote Sens. 2018, 10, 1643.
62. Wu, Q.; Lane, C.R.; Li, X.; Zhao, K.; Zhou, Y.; Clinton, N.; DeVries, B.; Golden, H.E.; Lang, M.W. Integrating LiDAR Data and Multi-Temporal Aerial Imagery to Map Wetland Inundation Dynamics Using Google Earth Engine. Remote Sens. Environ. 2019, 228, 1–13.
63. Zhang, H.K.; Roy, D.P.; Yan, L.; Li, Z.; Huang, H.; Vermote, E.; Skakun, S.; Roger, J.-C. Characterization of Sentinel-2A and Landsat-8 Top of Atmosphere, Surface, and Nadir BRDF Adjusted Reflectance and NDVI Differences. Remote Sens. Environ. 2018, 215, 482–494.
64. Roy, D.P.; Kovalskyy, V.; Zhang, H.K.; Vermote, E.F.; Yan, L.; Kumar, S.S.; Egorov, A. Characterization of Landsat-7 to Landsat-8 Reflective Wavelength and Normalized Difference Vegetation Index Continuity. Remote Sens. Environ. 2016, 185, 57–70.
65. Frouin, R.J.; Franz, B.A.; Ibrahim, A.; Knobelspiesse, K.; Ahmad, Z.; Cairns, B.; Chowdhary, J.; Dierssen, H.M.; Tan, J.; Dubovik, O. Atmospheric Correction of Satellite Ocean-Color Imagery during the PACE Era. Front. Earth Sci. 2019, 7, 145.
66. Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.-S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep Learning in Remote Sensing: A Comprehensive Review and List of Resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.
67. Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.
68. Wurm, M.; Stark, T.; Zhu, X.X.; Weigand, M.; Taubenböck, H. Semantic Segmentation of Slums in Satellite Images Using Transfer Learning on Fully Convolutional Neural Networks. ISPRS J. Photogramm. Remote Sens. 2019, 150, 59–69.
69. Zhou, Y.; Dong, J.; Xiao, X.; Xiao, T.; Yang, Z.; Zhao, G.; Zou, Z.; Qin, Y. Open Surface Water Mapping Algorithms: A Comparison of Water-Related Spectral Indices and Sensors. Water 2017, 9, 256.
70. Ball, J.E.; Anderson, D.T.; Chan, C.S. Comprehensive Survey of Deep Learning in Remote Sensing: Theories, Tools, and Challenges for the Community. J. Appl. Remote Sens. 2017, 11, 42609.
71. Mueller, N.; Lewis, A.; Roberts, D.; Ring, S.; Melrose, R.; Sixsmith, J.; Lymburner, L.; McIntyre, A.; Tan, P.; Curnow, S. Water Observations from Space: Mapping Surface Water from 25 Years of Landsat Imagery across Australia. Remote Sens. Environ. 2016, 174, 341–352.
72. Vorster, A.G.; Woodward, B.D.; West, A.M.; Young, N.E.; Sturtevant, R.G.; Mayer, T.J.; Girma, R.K.; Evangelista, P.H. Tamarisk and Russian Olive Occurrence and Absence Dataset Collected in Select Tributaries of the Colorado River for 2017. Data 2018, 3, 42.
73. Everitt, J.H.; Yang, C.; Summy, K.R.; Owens, C.S.; Glomski, L.M.; Smart, R.M. Using in Situ Hyperspectral Reflectance Data to Distinguish Nine Aquatic Plant Species. Geocarto Int. 2011, 26, 459–473.
74. Everitt, J.H.; Summy, K.R.; Glomski, L.M.; Owens, C.S.; Yang, C. Spectral Reflectance and Digital Image Relations among Five Aquatic Weeds. Subtrop. Plant Sci. 2009, 61, 15–23.

| Variable | Data Source (Year) | Spatial Resolution (m) |
|---|---|---|
| Seasonal median spectral bands, excluding panchromatic band 8: Summer (12/01–02/28); Autumn (03/01–05/31); Winter (06/23–08/31); Spring (09/01–11/30) | Landsat-8 for the respective field locality year (2013–2015) | 30 |
| Percentiles (5, 25, 50, 75, 95) of spectral indices (NDVI, GARI, LSWI) | Landsat-8 for the respective field locality year (2013–2015) | 30 |
| Elevation | SRTM (2000) | 90 |
| Global human modification | Kennedy et al. (2019) (2016) | 1000 |
| Sum of solar radiation | TerraClimate (2013–2015) | ~4670 |
| Minimum temperature | WorldClim: bio variables (1960–1990) | 1000 |
| Temperature seasonality | WorldClim: bio variables (1960–1990) | 1000 |
| Precipitation seasonality | WorldClim: bio variables (1960–1990) | 1000 |
| Gross biomass water productivity | WaPOR for the respective field locality year (2013–2015) | 250 |
| Actual evapotranspiration | WaPOR for the respective field locality year (2013–2015) | 250 |
| Total biomass production | WaPOR for the respective field locality year (2013–2015) | 250 |
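The spectral-index predictors listed in the table above can be illustrated with a minimal sketch. It assumes the standard normalized-difference definitions of NDVI (NIR and red) and LSWI (NIR and shortwave infrared), and it takes per-pixel percentiles over a stack of acquisition dates. The helper names `normalized_difference` and `index_percentiles`, the array layout, and the reflectance values are hypothetical and are not taken from the authors' workflow; GARI is omitted because its weighting parameter is not given here.

```python
import numpy as np


def normalized_difference(a, b):
    """Return (a - b) / (a + b) element-wise, using 0 where a + b == 0."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    denom = a + b
    out = np.zeros_like(denom)
    np.divide(a - b, denom, out=out, where=denom != 0)
    return out


def index_percentiles(index_stack, percentiles=(5, 25, 50, 75, 95)):
    """Per-pixel percentiles along the time axis (axis 0) of a (time, rows, cols) stack."""
    return np.percentile(index_stack, percentiles, axis=0)


# Hypothetical reflectance values: 4 acquisition dates, a 1 x 2 pixel window.
nir = np.array([[[0.40, 0.10]], [[0.50, 0.10]], [[0.60, 0.10]], [[0.30, 0.10]]])
red = np.full_like(nir, 0.10)
swir = np.full_like(nir, 0.20)

ndvi = normalized_difference(nir, red)   # NDVI = (NIR - Red) / (NIR + Red)
lswi = normalized_difference(nir, swir)  # LSWI = (NIR - SWIR) / (NIR + SWIR)

ndvi_pct = index_percentiles(ndvi)  # shape: (5 percentiles, 1 row, 2 columns)
lswi_pct = index_percentiles(lswi)
```

Each percentile layer can then serve as one predictor band, which is one plausible way to summarise a year of observations without depending on any single acquisition date.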

| Training Accuracy | Validation Accuracy | Training Loss | Validation Loss |
|---|---|---|---|
| 0.9814 ± 0.0030 | 0.9851 ± 0.0035 | 0.0655 ± 0.0017 | 0.0740 ± 0.0078 |

| Reference | User Accuracy (%) | Producer Accuracy (%) | Area (km²) | Sensor (Season) |
|---|---|---|---|---|
| [35] | 67.35 | 67.35 | ~958 | Landsat-7 |
| [35] | 91.67 | 89.8 | ~958 | Landsat-8 |
| [20] | 100 | 90 | ~5228 | Landsat-8 (wet) |
| [20] | 92 | 90 | ~5228 | Landsat-8 (dry) |
| [21] | 44 | 50 | ~5228 | Landsat-8 |
| [21] | 89.3 | 61 | ~5228 | Sentinel-2 |
| [22] | 76.42 | 94.44 | ~5228 | Sentinel-2 (wet) |
| [22] | 74.67 | 66.04 | ~5228 | Sentinel-2 (dry) |
| [19] | 85 | 83 | ~18,180 | Landsat-8 |
| This study | 87.48 | 92.98 | 1,219,090 | Landsat-8 |
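For readers comparing the user and producer accuracies in the table above, the following minimal sketch shows how the two measures are conventionally derived from a confusion matrix: user's accuracy divides the correctly mapped samples of a class by everything mapped as that class, and producer's accuracy divides them by everything that belongs to that class in the reference data. The function name and the counts are hypothetical and do not reproduce any study's data.

```python
import numpy as np


def user_producer_accuracy(confusion, class_index):
    """Return (user's, producer's) accuracy in percent for one class.

    confusion[i, j] is the number of samples whose reference class is i
    and whose mapped (predicted) class is j.
    """
    confusion = np.asarray(confusion, dtype=float)
    correct = confusion[class_index, class_index]
    mapped_total = confusion[:, class_index].sum()     # everything mapped as the class
    reference_total = confusion[class_index, :].sum()  # everything truly in the class
    users = 100.0 * correct / mapped_total
    producers = 100.0 * correct / reference_total
    return users, producers


# Hypothetical counts: class 0 = other cover, class 1 = water hyacinth.
confusion = [[90, 10],
             [5, 95]]
users, producers = user_producer_accuracy(confusion, class_index=1)
```

A map can score well on one measure and poorly on the other, which is why the table reports both.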

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Singh, G.; Reynolds, C.; Byrne, M.; Rosman, B. A Remote Sensing Method to Monitor Water, Aquatic Vegetation, and Invasive Water Hyacinth at National Extents. Remote Sens. 2020, 12, 4021. https://doi.org/10.3390/rs12244021
