A Deep Convolutional Neural Network for Oil Spill Detection from Spaceborne SAR Images

Abstract

1. Introduction

2. Data

2.1. Data Set

2.2. Input Size of DCNN

2.3. Preprocessing

2.3.1. Data Set Proportion Allocation

2.3.2. Data Augmentation

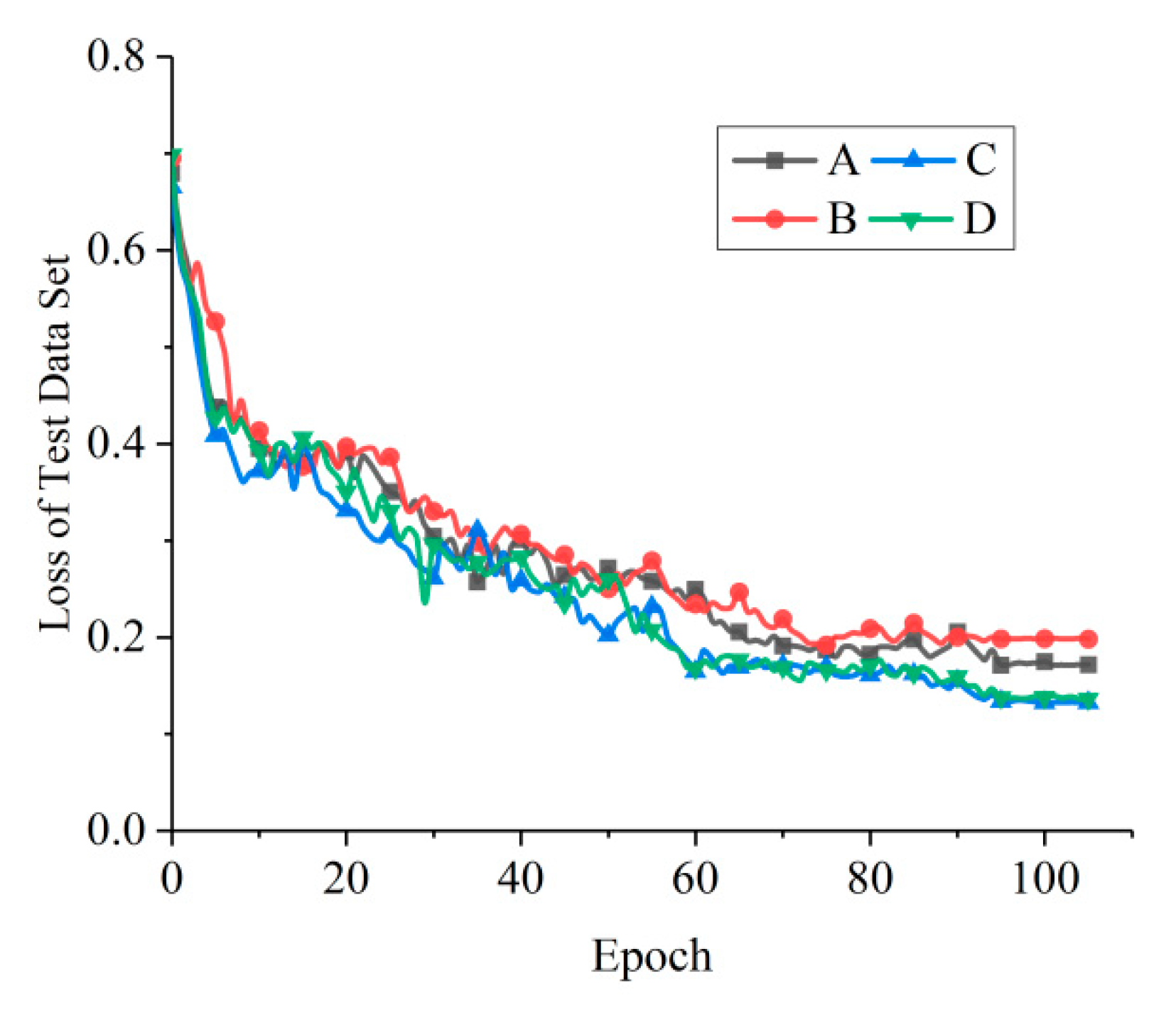

3. Determine the Basic Architecture of DCNNs

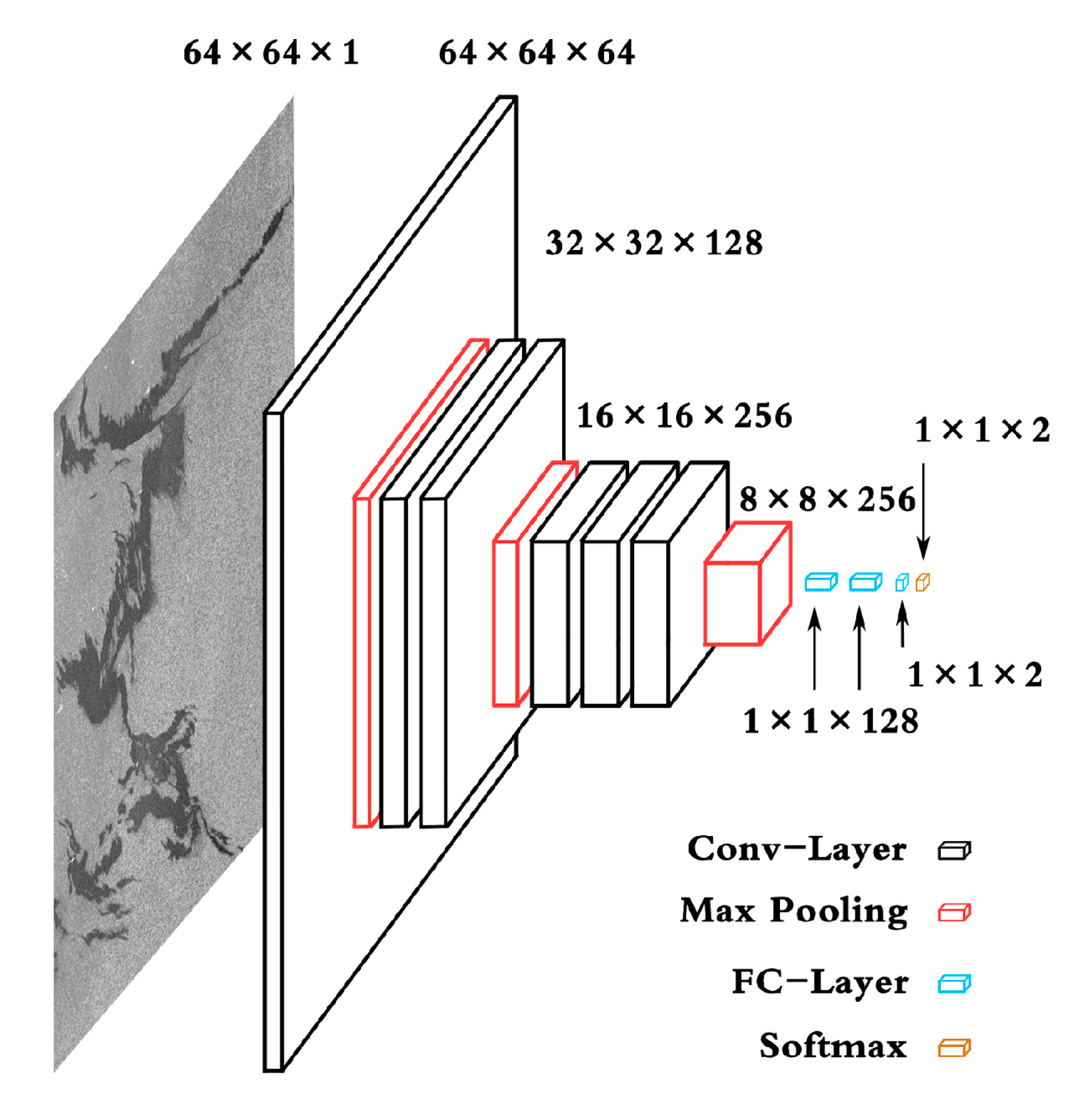

4. The Proposed DCNN for SAR Oil Spill Detection

4.1. Structure of Convolutional Layers

4.2. Node Number of FC and Dropout

4.3. Hyperparameter Evaluation

5. Experimental Results and Analysis

5.1. Evaluation Metrics

5.2. Results

5.3. Comparison Based on the Same Data Set

5.4. The Role of Data Augmentation

5.5. Comparison of Auto-Extracted Features and Hand-Crafted Features

5.6. Comparison with Other DL Classifiers

6. Conclusions and Outlooks

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Satellite/SAR Band | Geography/Period | Number of Images |
|---|---|---|
| Envisat/C | Bohai Sea/2011.6–2011.8 | 10 |
| | China Sea/2002.11–2007.3 | 67 |
| | the Gulf of Mexico/2010.4–2010.7 | 45 |
| | European Seas, China Sea/1994.10–2009.7 | 16 |
| ERS-1,2/C | China Sea/1992.9–2005.6 | 63 |
| COSMO Sky-Med/X | Bohai Sea/2011.6–2011.11 | 135 |
| Total | | 336 |

| Stack | Layer | FM (feature map size) | RF (receptive field) | Stride |
|---|---|---|---|---|
| 1 | Conv3-64 | 224 | 3 | 1 |
| 1 | Conv3-64 | 224 | 5 | 1 |
| 1 | Pool1 | 112 | 6 | 2 |
| 2 | Conv3-128 | 112 | 10 | 2 |
| 2 | Conv3-128 | 112 | 14 | 2 |
| 2 | Pool2 | 56 | 16 | 4 |
| 3 | Conv3-256 | 56 | 24 | 4 |
| 3 | Conv3-256 | 56 | 32 | 4 |
| 3 | Conv3-256 | 56 | 40 | 4 |
| 3 | Pool3 | 28 | 44 | 8 |
| 4 | Conv3-512 | 28 | 60 | 8 |
| 4 | Conv3-512 | 28 | 76 | 8 |
| 4 | Conv3-512 | 28 | 92 | 8 |
| 4 | Pool4 | 14 | 100 | 16 |
| 5 | Conv3-512 | 14 | 132 | 16 |
| 5 | Conv3-512 | 14 | 164 | 16 |
| 5 | Conv3-512 | 14 | 196 | 16 |
| 5 | Pool5 | 7 | 212 | 32 |
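
The feature-map (FM), receptive-field (RF), and stride columns of the table above appear to follow the usual receptive-field recurrence: each layer enlarges the RF by (kernel − 1) times the stride accumulated so far, and each pooling layer doubles that stride. The following is a minimal sketch under stated assumptions (3 × 3 convolutions with stride 1 and "same" padding, 2 × 2 max pooling with stride 2, a 224 × 224 input); the function names are illustrative and not taken from the paper.

```python
# Receptive-field arithmetic for a VGG-16-style stack (illustrative sketch).
# Assumptions: 3x3 convolutions with stride 1 and "same" padding,
# 2x2 max pooling with stride 2, and a 224x224 input.

def receptive_fields(layers, input_size=224):
    """Yield (name, feature_map, receptive_field, cumulative_stride) per layer."""
    fm, rf, jump = input_size, 1, 1
    for name, kernel, stride in layers:
        rf += (kernel - 1) * jump  # growth is scaled by the stride accumulated so far
        jump *= stride             # cumulative stride after this layer
        fm //= stride              # with "same" padding only the stride shrinks the map
        yield name, fm, rf, jump


def vgg16_layers():
    """Layer list matching the table: (name, kernel size, stride)."""
    layers = []
    stacks = [(64, 2), (128, 2), (256, 3), (512, 3), (512, 3)]
    for stack, (channels, n_conv) in enumerate(stacks, start=1):
        layers += [(f"Conv3-{channels}", 3, 1)] * n_conv
        layers.append((f"Pool{stack}", 2, 2))
    return layers


rows = list(receptive_fields(vgg16_layers()))
for name, fm, rf, jump in rows:
    print(f"{name:10s} FM={fm:3d} RF={rf:3d} stride={jump:2d}")
```

Under these assumptions the last row printed is Pool5 with a 7 × 7 feature map, a receptive field of 212, and a cumulative stride of 32, which agrees with the final row of the table.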

| Stack | A | B | C | D |
|---|---|---|---|---|
| | 10 weight layers | 13 weight layers | 9 weight layers | 12 weight layers |
| | Input (64 × 64 SAR image) | Input (64 × 64 SAR image) | Input (64 × 64 SAR image) | Input (64 × 64 SAR image) |
| 1 | conv3-64, conv3-64 | conv3-64, conv3-64 | conv3-64 | conv3-64 |
| | maxpool/2 | maxpool/2 | maxpool/2 | maxpool/2 |
| 2 | conv3-128, conv3-128 | conv3-128, conv3-128 | conv3-128, conv3-128 | conv3-128, conv3-128 |
| | maxpool/2 | maxpool/2 | maxpool/2 | maxpool/2 |
| 3 | conv3-256, conv3-256, conv3-256 | conv3-256, conv3-256, conv3-256 | conv3-256, conv3-256, conv3-256 | conv3-256, conv3-256, conv3-256 |
| | maxpool/2 | maxpool/2 | maxpool/2 | maxpool/2 |
| 4 | / | conv3-512, conv3-512, conv3-512 | / | conv3-512, conv3-512, conv3-512 |
| | / | maxpool/2 | / | maxpool/2 |
| | FC-1024 | FC-1024 | FC-1024 | FC-1024 |
| | FC-1024 | FC-1024 | FC-1024 | FC-1024 |
| | FC-2 | FC-2 | FC-2 | FC-2 |
| | Softmax | Softmax | Softmax | Softmax |
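
As a quick consistency check on the four candidate configurations above, the weight-layer counts and the size of the feature map entering the first FC layer can be tallied directly from the table. This is only a sketch: it assumes "same"-padded convolutions, so that only the maxpool/2 layers shrink the 64 × 64 input, and the names `CONFIGS`, `N_FC`, and `summarize` are illustrative.

```python
# Configurations A-D from the table: (channels, number of conv3 layers) per stack.
# Each stack is followed by one maxpool/2 layer.
CONFIGS = {
    "A": [(64, 2), (128, 2), (256, 3)],
    "B": [(64, 2), (128, 2), (256, 3), (512, 3)],
    "C": [(64, 1), (128, 2), (256, 3)],
    "D": [(64, 1), (128, 2), (256, 3), (512, 3)],
}
N_FC = 3  # FC-1024, FC-1024, FC-2


def summarize(stacks, input_size=64):
    """Return (weight_layers, final_map_size, final_channels).

    Assumes "same"-padded convolutions, so only pooling halves the map.
    """
    size = input_size
    n_conv = 0
    channels = None
    for channels, n in stacks:
        n_conv += n
        size //= 2  # maxpool/2 at the end of each stack
    return n_conv + N_FC, size, channels


summary = {name: summarize(stacks) for name, stacks in CONFIGS.items()}
for name, (depth, size, channels) in summary.items():
    print(f"{name}: {depth} weight layers, {size}x{size}x{channels} before the FC layers")
```

The counts come out as 10, 13, 9, and 12 weight layers for A to D, matching the table. Under the padding assumption, the three-stack variants (A, C) hand an 8 × 8 × 256 map to the FC layers and the four-stack variants (B, D) a 4 × 4 × 512 map.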

| FC Node Num | Reserved Node Num | Training Accuracy (%) |
|---|---|---|
| 1024 | 512 | 93.95 |
| 512 | 256 | 94.75 |
| 256 | 128 | 97.08 |
| 128 | 64 | 96.88 |

| Model | FC Channel Num | Dropout Rate | Reserved Num |
|---|---|---|---|
| A | 512 | 0.8 | 102 |
| B | 256 | 0.6 | 102 |
| C | 256 | 0.5 | 128 |
| D | 128 | 0.1 | 115 |
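
The "Reserved Num" column is consistent with reading the dropout rate as the fraction of FC nodes that are dropped, so that the reserved count is the node count times (1 − rate), rounded down. A small sketch of that arithmetic follows; the function name `reserved_nodes` is an illustrative choice, and the rounding rule is inferred from the table values rather than stated in the text.

```python
import math


def reserved_nodes(fc_nodes, dropout_rate):
    """Expected number of FC nodes kept active, rounded down.

    Assumes dropout_rate is the probability of dropping a node.
    """
    return math.floor(fc_nodes * (1.0 - dropout_rate))


# (model, FC channel number, dropout rate) as listed in the table
models = [("A", 512, 0.8), ("B", 256, 0.6), ("C", 256, 0.5), ("D", 128, 0.1)]
reserved = {name: reserved_nodes(n, rate) for name, n, rate in models}
print(reserved)
```

The same relation with a rate of 0.5 reproduces the reserved node numbers 512, 256, 128, and 64 listed for FC layers of 1024, 512, 256, and 128 nodes.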

| Type | Activation Function | Loss Function | Dropout Rate | Learning Rate | Batch Size | Parameter Initialization |
|---|---|---|---|---|---|---|
| Method/Value | ReLU | Softmax Cross Entropy | 0.1 | 5 × 10⁻⁵ | 256 | He Initialization |
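
Two entries of the hyperparameter table can be made concrete with a few lines of arithmetic. The sketch below assumes the normal-distribution variant of He initialization (standard deviation sqrt(2 / fan_in)) and the usual softmax cross-entropy over the two output logits. The paper's actual implementation may differ, and the function names are illustrative.

```python
import math


def he_std(fan_in):
    """Standard deviation for He (normal) initialization: sqrt(2 / fan_in)."""
    return math.sqrt(2.0 / fan_in)


def softmax_cross_entropy(logits, label):
    """Cross-entropy of softmax(logits) against an integer class label.

    Uses the log-sum-exp trick for numerical stability.
    """
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


# Example: a 3x3 convolution over 64 input channels has fan_in = 3 * 3 * 64.
print(he_std(3 * 3 * 64))
# Example: two-class logits (oil spill vs. lookalike); the values are hypothetical.
print(softmax_cross_entropy([2.0, -1.0], 0))
```

With equal logits the loss equals ln 2, the value expected from an uninformed two-class classifier, which gives a convenient baseline when reading training curves.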

| Classification | Label: Oil spills | Label: Lookalikes |
|---|---|---|
| Oil spills | TP | FP |
| Lookalikes | FN | TN |
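
The four cells of the confusion matrix above are enough to compute the metrics reported in the results tables. The sketch below uses the standard definitions, taking recognition rate as accuracy and detection rate as recall, as the comparison table labels them, and F-measure as the harmonic mean of precision and recall. The function names and the example counts are hypothetical.

```python
def f_measure(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2.0 * precision * recall / (precision + recall)


def classification_metrics(tp, fp, fn, tn):
    """Metrics derived from confusion-matrix counts (oil spill = positive class)."""
    total = tp + fp + fn + tn
    recall = tp / (tp + fn)      # detection rate
    precision = tp / (tp + fp)
    return {
        "recognition_rate": (tp + tn) / total,  # accuracy
        "detection_rate": recall,
        "precision": precision,
        "f_measure": f_measure(precision, recall),
    }


# Hypothetical counts, for illustration only.
metrics = classification_metrics(tp=8, fp=2, fn=2, tn=88)
print(metrics)
```

As a sanity check on the F-measure definition, `f_measure(85.70, 83.51)` rounds to 84.59, which agrees with the averaged precision, detection rate, and F-measure reported for the proposed network.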

| Training Times | Recognition Rate (%) | Detection Rate (%) | Precision (%) | F-Measure (%) |
|---|---|---|---|---|
| 1 | 93.41 | 82.33 | 84.34 | 83.32 |
| 2 | 93.77 | 83.50 | 85.07 | 84.28 |
| 3 | 93.94 | 82.31 | 86.68 | 84.45 |
| 4 | 94.14 | 84.92 | 85.67 | 85.29 |
| 5 | 94.11 | 82.57 | 87.30 | 84.87 |
| 6 | 93.91 | 83.45 | 85.71 | 84.56 |
| 7 | 94.03 | 83.85 | 85.85 | 84.83 |
| 8 | 93.86 | 83.41 | 85.53 | 84.45 |
| 9 | 94.25 | 85.17 | 85.95 | 85.55 |
| 10 | 94.07 | 83.76 | 86.22 | 84.96 |
| 11 | 94.11 | 84.72 | 86.36 | 85.53 |
| 12 | 95.46 | 83.74 | 86.05 | 84.88 |
| 13 | 93.57 | 83.11 | 84.51 | 83.80 |
| 14 | 93.28 | 82.95 | 83.37 | 83.16 |
| 15 | 94.12 | 82.84 | 87.07 | 84.91 |
| AVG | 94.01 | 83.51 | 85.70 | 84.59 |

| Classifiers | OSCNet | VGG-16 | AAMLP |
|---|---|---|---|
| Recognition rate (accuracy) | 94.01 | 90.35 | 92.50 |
| Detection rate (recall) | 83.51 | 77.63 | 81.40 |
| Precision | 85.70 | 75.01 | 80.95 |
| F-measure | 84.59 | 76.30 | 81.28 |

| Sample | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| Number | 01 | 02 | 03 | 04 | 05 | 06 | 07 | 08 |
| Classification | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
| Accuracy | 0.7461 | 0.9978 | ~1.0 | 0.9994 | 0.9509 | 0.9988 | 0.9987 | 0.9169 |

| Sample | | |
|---|---|---|
| Number | 01 | 02 |
| Classification | 0 | 1 |
| Accuracy | 0.8109 | 0.8098 |

| Sample | | | | | |
|---|---|---|---|---|---|
| Number | 01 | 02 | 03 | 04 | 05 |
| Classification | 1 | 1 | 1 | 0 | 0 |
| Accuracy | 0.9985 | 0.8705 | 0.9834 | 0.9999 | 0.9964 |

| Sample | | | | | | |
|---|---|---|---|---|---|---|
| Label | 0 | 0 | 0 | 0 | 0 | 0 |
| Classification | 1 | 1 | 1 | 1 | 1 | 1 |
| Accuracy | 0.5354 | 0.5299 | 0.5877 | 0.5763 | 0.5622 | 0.5779 |

| Sample | | | | | | |
|---|---|---|---|---|---|---|
| Label | 0 | 0 | 0 | 0 | 0 | 0 |
| Classification | 0 | 0 | 0 | 0 | 0 | 0 |
| Accuracy | 0.5229 | 0.5494 | 0.5790 | 0.5777 | 0.5683 | 0.5304 |

| Training Times | Accuracy (%) | Recall (%) | Precision (%) | F-Measure (%) |
|---|---|---|---|---|
| 1 | 90.23 | 85.96 | 64.54 | 73.72 |
| 2 | 94.39 | 84.91 | 76.75 | 80.63 |
| 3 | 96.35 | 88.99 | 88.69 | 88.83 |
| 4 | 95.85 | 79.70 | 82.59 | 81.12 |
| 5 | 96.70 | 89.19 | 77.57 | 82.97 |
| 6 | 93.29 | 77.35 | 90.07 | 83.23 |
| 7 | 94.12 | 76.86 | 79.01 | 77.92 |
| 8 | 94.04 | 69.80 | 85.62 | 76.90 |
| 9 | 95.90 | 84.59 | 96.39 | 90.11 |
| 10 | 96.67 | 86.94 | 95.49 | 91.01 |
| 11 | 96.64 | 89.08 | 88.47 | 88.78 |
| 12 | 95.87 | 91.58 | 88.03 | 89.77 |
| 13 | 96.90 | 93.36 | 86.90 | 90.02 |
| 14 | 92.91 | 67.38 | 78.35 | 72.45 |
| 15 | 96.60 | 96.71 | 80.98 | 88.15 |
| AVG | 95.09 | 84.30 | 84.12 | 84.21 |

| Classifiers | OSCNet | GCNN | TSCNN | RED-Net |
|---|---|---|---|---|
| Depth of layers | 12 | 5 | 6 | 6 |
| Data set size | 23,768 | 5400 | - | - |
| With augmentation | Yes | No | Yes | Yes |
| Raw SAR images | 336 | 5 | 23 | 38 |
| Oil spills | 4843 | 1800 | 14 | 22 |
| Data sources (Satellite/SAR band) | Envisat/C, ERS-1,2/C, COSMO Sky-Med/X | Radarsat-2/C | SLAR | SLAR |
| By dark patches: Recognition rate | 94.01 | 91.33 | - | - |
| By dark patches: Detection rate | 83.51 | - | - | - |
| By dark patches: False alarm rate | 3.42 | - | - | - |
| By pixels: Accuracy | 95.09 | - | 97.82 | - |
| By pixels: Precision | 84.12 | - | 52.23 | 93.12 |
| By pixels: Recall | 84.29 | - | 72.70 | 92.92 |
| By pixels: F-measure | 84.21 | - | 53.62 | 93.01 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zeng, K.; Wang, Y. A Deep Convolutional Neural Network for Oil Spill Detection from Spaceborne SAR Images. Remote Sens. 2020, 12, 1015. https://doi.org/10.3390/rs12061015
