The Impact of Pan-Sharpening and Spectral Resolution on Vineyard Segmentation through Machine Learning

Abstract

1. Introduction

2. Methodology

2.1. Satellite Imagery

2.2. Machine Learning

2.2.1. Data Models

2.2.2. Neural Network Architecture

2.2.3. Neural Network Training

2.3. Measuring Vineyard Detection Performance

- Precision = TP/(FP + TP). This is the fraction of pixels predicted as vineyard that really are vineyard.

- Recall = TP/(TP + FN). This is the fraction of actual vineyard pixels that are correctly predicted as vineyard.

- Jaccard Index = TP / (TP + FP + FN). Also known as “intersection over union” (IOU), this is a measure of the spatial overlap between pixels predicted to be in vineyards and pixels labelled as being in vineyards.

- Area ratio = (TP + FP)/(TP + FN). This is the ratio of the spatial area (in number of pixels) of predicted vineyards to that of real vineyards; equivalently, it is recall divided by precision. However, even when predicted and actual vineyard areas agree closely, the predicted vineyard block boundaries may not overlap the real boundaries. Area ratio does not penalise such a case, whereas the Jaccard Index does.

- Overall Accuracy = (TP + TN)/(TP + TN + FP + FN). This is the fraction of all pixels that were correctly classified.

- Expected Accuracy = ((TN + FP) × (TN + FN) + (FN + TP) × (FP + TP))/(TP + TN + FP + FN)². The expected accuracy estimates the overall accuracy that could be obtained by a random classifier with the same class proportions. The denominator is the square of the total number of observations.

- Kappa statistic = (Overall Accuracy − Expected Accuracy)/(1 − Expected Accuracy) [65,66]. This measures the level of agreement between classification and ground truth beyond that which could arise through chance. A large, positive Kappa (near one) indicates that the overall accuracy is high and exceeds the accuracy that could be expected from random chance. This can be interpreted as the classifier providing a statistically significant improvement in the classification of ‘vineyard’ and ‘not vineyard’ over random assignment of pixels to the binary classes.
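The definitions above can be checked numerically. The Python sketch below computes each measure from the four confusion-matrix counts. The `Confusion` class, the `vineyard_metrics` function and the example counts are hypothetical illustrations, not the authors' code; Kappa is computed in the standard Cohen form, with (1 − Expected Accuracy) in the denominator.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """Pixel counts for the binary 'vineyard' / 'not vineyard' task (hypothetical helper)."""
    tp: int  # vineyard pixels predicted as vineyard
    fp: int  # non-vineyard pixels predicted as vineyard
    fn: int  # vineyard pixels predicted as non-vineyard
    tn: int  # non-vineyard pixels predicted as non-vineyard


def vineyard_metrics(c: Confusion) -> dict[str, float]:
    """Return the pixel-level measures defined in Section 2.3."""
    n = c.tp + c.tn + c.fp + c.fn
    overall = (c.tp + c.tn) / n
    # Chance agreement: for each class, (actual total) x (predicted total), summed, over n squared.
    expected = ((c.tn + c.fp) * (c.tn + c.fn) + (c.fn + c.tp) * (c.fp + c.tp)) / n**2
    return {
        "precision": c.tp / (c.fp + c.tp),
        "recall": c.tp / (c.tp + c.fn),
        "jaccard": c.tp / (c.tp + c.fp + c.fn),
        "area_ratio": (c.tp + c.fp) / (c.tp + c.fn),
        "overall_accuracy": overall,
        "expected_accuracy": expected,
        # Cohen's kappa: agreement beyond chance, scaled by the maximum achievable.
        "kappa": (overall - expected) / (1 - expected),
    }


if __name__ == "__main__":
    # Hypothetical counts for a scene in which most pixels are not vineyard.
    metrics = vineyard_metrics(Confusion(tp=80, fp=20, fn=10, tn=890))
    for name, value in metrics.items():
        print(f"{name:>17}: {value:.3f}")
```

With these made-up counts most pixels are background, so overall accuracy (0.97) sits well above Kappa (about 0.83), and the area ratio equals recall divided by precision.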

3. Results

3.1. Pan-Sharpening

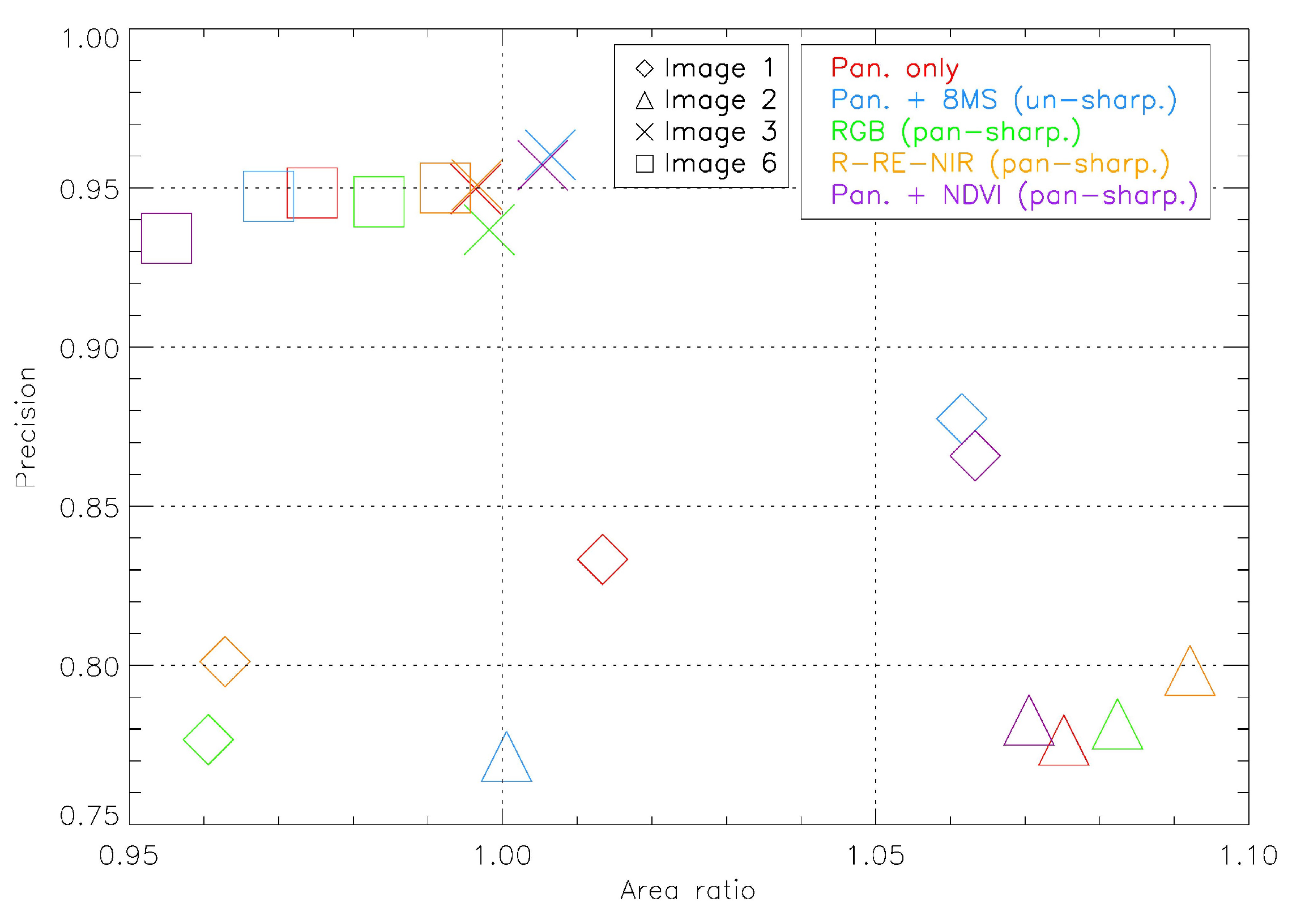

3.2. Quantitative Data Model Comparisons

4. Discussion

4.1. Interplay between Spatial Resolution and Spectral Values (Image Fusion)

4.2. Performance Validation

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| PV | Precision Viticulture |
| PAN | Panchromatic |
| p.s.v. | Photosynthetic vegetation |
| MS | Multispectral |
| NIR | Near-infrared |
| VNIR | Visible and Near-Infrared wavelengths |
| SWIR | Short-Wave Infrared wavelengths |
| NDVI | Normalized Difference Vegetation Index |
| GS | Gram–Schmidt pan-sharpening algorithm |

Appendix A

| Label | Catalog ID | Acquisition date | Acquisition time (ACST) | Pixel size GSD, PAN (m) | Pixel size GSD, MS (m) | Mean view angle (°) | Mean solar elevation (°) |
|---|---|---|---|---|---|---|---|
| Image 1 | 1030010065A6AD00 | 14th February 2017 | 9:53:02 AM | 0.509 | 2.036 | 16.4 | 51.4 |
| Image 2 | 1030010066252A00 | 28th February 2017 | 9:36:41 AM | 0.509 | 1.986 | 13.4 | 44.1 |
| Image 3 | 10300100737F4D00 | 22nd November 2017 | 10:32:29 AM | 0.550 | 2.200 | 23.5 | 65.8 |
| Image 4 | 103001002DA07900 | 15th January 2014 | 10:32:35 AM | 0.475 | 1.895 | 5.4 | 63.4 |
| Image 5 | 1030010078903800 | 7th February 2018 | 10:01:20 AM | 0.488 | 1.945 | 11.4 | 57.5 |
| Image 6 | 1030010063A7F700 | 10th February 2017 | 10:38:36 AM | 0.549 | 2.187 | 23.1 | 58.0 |
| Image 7 | 1030010052802F00 | 9th March 2016 | 12:52:15 PM | 0.576 | 2.303 | 26.7 | 49.1 |
| Image 8 | 1030010088661E00 | 12th November 2018 | 10:39:46 AM | 0.476 | 1.900 | 7.6 | 68.1 |
| Image 9 | 103001007C181200 | 2nd April 2018 | 11:18:09 AM | 0.589 | 2.362 | 28.0 | 42.8 |

| Image | Number of pixels | Number of vine rows (total length, km) |
|---|---|---|
| Image 5 | 387085 | 698 (190.3) |
| Image 6 | 214789 | 493 (108.5) |
| Image 7 | 167487 | 389 (86.1) |
| Image 4 | 100159 | 267 (42.8) |
| Image 2 | 107230 | 375 (54.3) |
| Image 1 | 62057 | 327 (30.3) |
| Image 8 | 102583 | 187 (55.6) |
| Image 9 | 128171 | 284 (75.6) |

| Image | Model | Kappa | Accuracy |
|---|---|---|---|
| Image 1 | M1 | 0.83 | 1.00 |
| | M2 | 0.85 | 1.00 |
| | M3 | 0.79 | 1.00 |
| | M4 | 0.82 | 1.00 |
| | M5 | 0.84 | 1.00 |
| Image 2 | M1 | 0.75 | 1.00 |
| | M2 | 0.77 | 1.00 |
| | M3 | 0.75 | 1.00 |
| | M4 | 0.76 | 1.00 |
| | M5 | 0.76 | 1.00 |
| Image 3 | M1 | 0.95 | 0.99 |
| | M2 | 0.96 | 1.00 |
| | M3 | 0.93 | 0.99 |
| | M4 | 0.95 | 1.00 |
| | M5 | 0.95 | 1.00 |
| Image 6 | M1 | 0.96 | 1.00 |
| | M2 | 0.96 | 1.00 |
| | M3 | 0.95 | 1.00 |
| | M4 | 0.95 | 1.00 |
| | M5 | 0.95 | 1.00 |

| | Precision | Recall | Accuracy | Kappa | Area Ratio | JI |
|---|---|---|---|---|---|---|
| Precision | 1.00 | 0.95 | −0.79 | 0.98 | −0.49 | 0.98 |
| Recall | | 1.00 | −0.75 | 0.99 | −0.74 | 0.99 |
| Accuracy | | | 1.00 | −0.77 | 0.36 | −0.79 |
| Kappa | | | | 1.00 | −0.60 | 1.00 |
| Area Ratio | | | | | 1.00 | −0.63 |
| JI | | | | | | 1.00 |

References

- Bramley, R.G.V.; Pearse, B.; Chamberlain, P. Being Profitable Precisely—A Case Study of Precision Viticulture from Margaret River. Aust. N. Z. Grapegrow. Winemak. 2003, 473, 84–87.

- Arno, J.; Martinez Casasnovas, J.A.; Dasi, M.R.; Rosell, J.R. Precision Viticulture. Research Topics, Challenges and Opportunities in Site-Specific Vineyard Management. Span. J. Agric. Res. 2009, 7, 779.

- Matese, A.; Di Gennaro, S.F. Technology in Precision Viticulture: A State of the Art Review. Int. J. Wine Res. 2015, 7, 69–81.

- Karakizi, C.; Oikonomou, M.; Karantzalos, K. Vineyard Detection and Vine Variety Discrimination from Very High Resolution Satellite Data. Remote Sens. 2016, 8, 235.

- Sertel, E.; Yay, I. Vineyard parcel identification from Worldview-2 images using object-based classification model. J. Appl. Remote Sens. 2014, 8, 1–17.

- Poblete-Echeverria, C.; Olmedo, G.F.; Ingram, B.; Bardeen, M. Detection and segmentation of vine canopy in ultra-high spatial resolution RGB imagery obtained from Unmanned Aerial Vehicle (UAV): A case study in a commercial vineyard. Remote Sens. 2017, 9, 268.

- Shanmuganathan, S.; Sallis, P.; Pavesi, L.; Munoz, M.C.J. Computational intelligence and geo-informatics in viticulture. In Proceedings of the Second Asia International Conference on Modelling & Simulation (AMS), Kuala Lumpur, Malaysia, 13–15 May 2008; Volume 2, pp. 480–485.

- Rodriguez-Perez, J.R.; Alvarez-Lopez, C.J.; Miranda, D.; Alvarez, M.F. Vineyard Area Estimation Using Medium Spatial Resolution. Span. J. Agric. Res. 2006, 6, 441–452.

- Hall, A.; Lamb, D.W.; Holzapfel, B.; Louis, J. Optical Remote Sensing Applications in Viticulture – a Review. Aust. J. Grape Wine Res. 2002, 8, 36–47.

- Khaliq, A.; Comba, L.; Biglia, A.; Aimonino, D.; Chiaberge, M.; Gay, P. Comparison of Satellite and UAV-Based Multispectral Imagery for Vineyard Variability Assessment. Remote Sens. 2019, 11, 436.

- Delenne, C.; Rabatel, G.; Agurto, V.; Deshayes, M. Vine Plot Detection in Aerial Images Using Fourier Analysis. In Proceedings of the 1st International Conference on Object-Based Image Analysis, Salzburg, Austria, 4–5 July 2006; Volume 1, pp. 1–6.

- Delenne, C.; Durrieu, S.; Rabatel, G.; Deshayes, M. From Pixel to Vine Parcel: A Complete Methodology for Vineyard Delineation and Characterization Using Remote-Sensing Data. Comput. Electron. Agric. 2010, 70, 78–83.

- Kaplan, G.; Avdan, U. Sentinel-2 Pan Sharpening—Comparative Analysis. Proceedings 2018, 2, 345.

- Vivone, G.; Alparone, L.; Chanussot, J.; Dalla Mura, M.; Garzelli, A.; Giorgio, A.; Licciardi, G.A.; Restaino, R.; Wald, L. A Critical Comparison Among Pansharpening Algorithms. IEEE Trans. Geosci. Remote Sens. 2015, 53, 2565–2586.

- Du, Q.; King, R. On the Performance Evaluation of Pan-Sharpening Techniques. IEEE Geosci. Remote Sens. 2007, 4, 518–522.

- Alparone, L.; Wald, L.; Chanussot, J.; Thomas, C.; Gamba, P.; Bruce, L.M. Comparison of Pansharpening Algorithms: Outcome of the 2006 GRS-S Data-Fusion Contest. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3012–3021.

- Amro, I.; Mateos, J.; Vega, M.; Molina, R.; Katsaggelos, A. A survey of classical methods and new trends in pansharpening of multispectral images. EURASIP J. Adv. Signal Process. 2011, 79, 1–22.

- Sertel, E.; Seker, D.; Yay, I.; Ozelkan, E.; Saglan, M.; Boz, Y.; Gunduz, A. Vineyard mapping using remote sensing technologies. In Proceedings of the FIG Working Week 2012: Knowing to Manage the Territory, Protect the Environment, Evaluate the Cultural Heritage, Rome, Italy, 6–10 May 2012; pp. 1–8.

- Smit, J.L.; Sithole, G.; Strever, A.E. Vine Signal Extraction–an Application of Remote Sensing in Precision Viticulture. S. Afr. J. Enol. Vitic. 2010, 31, 65–73.

- Comba, L.; Gay, P.; Primicerio, J.; Aimonino, D. Vineyard detection from unmanned aerial systems images. Comput. Electron. Agric. 2015, 114, 78–87.

- Cinat, P.; Di Gennaro, S.; Berton, A.; Matese, A. Comparison of Unsupervised Algorithms for Vineyard Canopy Segmentation from UAV Multispectral Images. Remote Sens. 2019, 11, 1023.

- Pádua, L.; Marques, P.; Hruška, J.; Adão, T.; Bessa, J.; Sousa, A.; Peres, E.; Morais, R.; Sousa, J. Vineyard properties extraction combining UAS-based RGB imagery with elevation data. Int. J. Remote Sens. 2018, 39, 5377–5401.

- Delenne, C.; Rabatel, G.; Deshayes, M. An automatized frequency analysis for vine plot detection and delineation in remote sensing. IEEE Geosci. Remote Sens. Lett. 2008, 5, 341–345.

- Rabatel, G.; Delenne, C.; Deshayes, M. A Non-Supervised Approach Using Gabor Filters for Vine-Plot Detection in Aerial Images. Comput. Electron. Agric. 2008, 62, 159–168.

- Ranchin, T.; Naert, B.; Albuisson, M.; Boyer, G.; Astrand, P. An Automatic Method for Vine Detection in Airborne Imagery Using Wavelet Transform and Multiresolution Analysis. Photogramm. Eng. Remote Sens. 2001, 67, 91–98.

- Gao, F.; He, T.; Masek, J.G.; Shuai, Y.; Schaaf, C.B.; Wang, Z. Angular effects and correction for medium resolution sensors to support crop monitoring. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 4480–4489.

- Poblete, T.; Ortega-Farías, S.; Ryu, D. Automatic Coregistration Algorithm to Remove Canopy Shaded Pixels in UAV-Borne Thermal Images to Improve the Estimation of Crop Water Stress Index of a Drip-Irrigated Cabernet Sauvignon Vineyard. Sensors 2018, 18, 397.

- Hall, A.; Louis, J.; Lamb, D. A Method For Extracting Detailed Information From High Resolution Multispectral Images Of Vineyards. In Proceedings of the 6th International Conference on Geocomputation, Brisbane, Australia, 24–26 September 2001; Volume 6, pp. 1–9.

- Towers, P.; Strever, A.; Poblete-Echeverría, C. Comparison of Vegetation Indices for Leaf Area Index Estimation in Vertical Shoot Positioned Vine Canopies With and Without Grenbiule Hail-Protection Netting. Remote Sens. 2019, 11, 16.

- Delenne, C.; Durrieu, S.; Rabatel, G.; Deshayes, M.; Bailly, J.S.; Lelong, C.; Couteron, P. Textural Approaches for Vineyard Detection and Characterization Using Very High Spatial Resolution Remote Sensing Data. Int. J. Remote Sens. 2008, 29, 1153–1167.

- Wine Australia. Geographical Indications. Available online: https://www.wineaustralia.com/labelling/register-of-protected-gis-and-other-terms/geographical-indications (accessed on 22 May 2018).

- Halliday, J. Wine Atlas of Australia, 3rd ed.; Hardie Grant Books: Richmond, VIC, Australia, 2014.

- Sun, L.; Gao, F.; Anderson, M.C.; Kustas, W.P.; Alsina, M.M.; Sanchez, L.; Sams, B.; McKee, L.; Dulaney, W.; White, W.A.; et al. Daily mapping of 30 m LAI and NDVI for grape yield prediction in California vineyards. Remote Sens. 2017, 9, 317.

- Lamb, D.W.; Weedon, M.M.; Bramley, R.G.V. Using remote sensing to predict grape phenolics and colour at harvest in a Cabernet Sauvignon vineyard: Timing observations against vine phenology and optimising image resolution. Aust. J. Grape Wine Res. 2004, 10, 46–54.

- Webb, L.B.; Whetton, P.H.; Bhend, J.; Darbyshire, R.; et al. Earlier wine-grape ripening driven by climatic warming and drying and management practices. Nat. Clim. Chang. 2012, 2, 259–264.

- Jackson, R.S. Vineyard Practice, 4th ed.; Wine Science—Principles and Applications; Academic Press: San Diego, CA, USA, 2015; pp. 143–306.

- DigitalGlobe. Advanced Image Preprocessor with AComp. Available online: https://gbdxdocs.digitalglobe.com/docs/advanced-image-preprocessor (accessed on 22 May 2018).

- DigitalGlobe. Accuracy of Worldview Products. Available online: https://dg-cms-uploads-production.s3.amazonaws.com/uploads/document/file/38/DG_ACCURACY_WP_V3.pdf (accessed on 22 May 2019).

- Carper, W.; Lillesand, T.; Kiefer, R. The use of intensity-hue-saturation transformations for merging SPOT panchromatic and multispectral image data. Photogramm. Eng. Remote Sens. 1990, 56, 459–467.

- Laben, C.A.; Brower, B.V. Process for Enhancing the Spatial Resolution of Multispectral Imagery Using Pan-Sharpening. U.S. Patent 6,011,875, 4 January 2000.

- Chavez, P., Jr.; Kwarteng, A. Extracting spectral contrast in Landsat thematic mapper image data using selective principal component analysis. Photogramm. Eng. Remote Sens. 1989, 55, 338–348.

- Lui, W.; Wang, Z. A practical pan-sharpening method with wavelet transform and sparse representation. In Proceedings of the 2013 IEEE International Conference on Imaging Systems and Techniques (IST), Beijing, China, 22–23 October 2013; pp. 288–293.

- Palubinskas, G. Fast, simple, and good pan-sharpening method. J. Appl. Remote Sens. 2013, 7, 073526.

- Aiazzi, B.; Alparone, L.; Baronti, S.; Lotti, F. Lossless image compression by quantization feedback in a content-driven enhanced Laplacian pyramid. IEEE Trans. Image Process. 1997, 6, 831–843.

- Imani, M. Band Dependent Spatial Details Injection Based on Collaborative Representation for Pansharpening. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 4994–5004.

- Harris Geospatial Solutions. Gram-Schmidt Pan Sharpening. Available online: http://www.harrisgeospatial.com/docs/GramSchmidtSpectralSharpening.html (accessed on 22 May 2018).

- Song, S.; Liu, J.; Pu, H.; Liu, Y.; Luo, J. The comparison of fusion methods for HSRRSI considering the effectiveness of land cover (Features) object recognition based on deep learning. Remote Sens. 2019, 11, 1435.

- Zhou, C.; Liang, D.; Yang, X.; Xu, B.; Yang, G. Recognition of wheat spike from field based phenotype platform using multi-sensor fusion and improved maximum entropy segmentation algorithms. Remote Sens. 2018, 10, 246.

- Baronti, S.; Aiazzi, B.; Selva, M.; Garzelli, A.; Alparone, L. A Theoretical Analysis of the Effects of Aliasing and Misregistration on Pansharpened Imagery. IEEE J. Sel. Top. Signal Process. 2011, 5, 446–453.

- Li, H.; Jing, L.; Tang, Y. Assessment of pan-sharpening methods applied to WorldView-2 imagery fusion. Sensors 2017, 17, 89.

- Loncan, L.; De Almeida, L.B.; Bioucas-Dias, J.M.; Briottet, X.; Chanussot, J.; Dobigeon, N.; Fabre, S.; Liao, W.; Licciardi, G.A.; Simões, M.; et al. Hyperspectral Pansharpening: A Review. IEEE Geosci. Remote Sens. Mag. 2015, 3, 1–15.

- Basaeed, E.; Bhaskar, H.; Al-mualla, M. Comparative Analysis of Pan-sharpening Techniques on DubaiSat-1 images. In Proceedings of the 16th International Conference on Information Fusion, Istanbul, Turkey, 9–12 July 2013; Volume 16, pp. 227–234.

- Garcia-Garcia, A.; Orts-Escolano, S.; Oprea, S.; Villena-Martinez, V.; Martinez-Gonzalez, P.; Garcia-Rodriguez, J. A survey on deep learning techniques for image and video semantic segmentation. Appl. Soft Comput. 2018, 70, 41–65.

- Huang, B.; Zhao, B.; Song, T. Urban land-use mapping using a deep convolutional neural network with high spatial resolution multispectral remote sensing imagery. Remote Sens. Environ. 2018, 214, 73–86.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Sozzi, M.; Kayad, A.; Tomasi, D.; Lovat, L.; Marinello, F.; Sartori, L. Assessment of grapevine yield and quality using a canopy spectral index in white grape variety. In Proceedings of the Precision Agriculture ’19, Montpellier, France, 8–11 July 2019.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015.

- Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. arXiv 2018, arXiv:1802.02611.

- Kornblith, S.; Shlens, J.; Le, Q.V. Do Better ImageNet Models Transfer Better? arXiv 2018, arXiv:1805.08974.

- Wong, S.C.; Gatt, A.; Stamatescu, V.; McDonnell, M.D. Understanding Data Augmentation for Classification: When to Warp? In Proceedings of the 2016 International Conference on Digital Image Computing: Techniques and Applications (DICTA), Gold Coast, QLD, Australia, 30 November–2 December 2016; pp. 1–6.

- Buda, M.; Maki, A.; Mazurowski, M.A. A systematic study of the class imbalance problem in convolutional neural networks. Neural Netw. 2018, 106, 249–259.

- Bishop, C.M. Pattern Recognition and Machine Learning; Springer: New York, NY, USA, 2006.

- Google. Google Maps. Available online: http://maps.google.com/ (accessed on 22 January 2019).

- Landis, J.R.; Koch, G.G. The Measurement of Observer Agreement for Categorical Data. Biometrics 1977, 33, 159–174.

- Congalton, R.G. Accuracy assessment and validation of remotely sensed and other spatial information. Int. J. Wildland Fire 2001, 10, 321–328.

- Jerri, A.J. The Shannon Sampling Theorem—Its Various Extensions and Applications: A Tutorial Review. Proc. IEEE 1977, 65, 1565–1596.

- Aswatha, S.M.; Mukhopadhyay, J.; Biswas, P.K. Spectral Slopes for Automated Classification of Land Cover in Landsat Images. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 4354–4358.

- Jones, E.G.; Caprarelli, G.; Mills, F.P.; Doran, B.; Clarke, J. An Alternative Approach to Mapping Thermophysical Units from Martian Thermal Inertia and Albedo Data Using a Combination of Unsupervised Classification Techniques. Remote Sens. 2014, 6, 5184–5237.

- Thomas, C.; Ranchin, T.; Wald, L.; Chanussot, J. Synthesis of multispectral images to high spatial resolution: A critical review of fusion methods based on remote sensing physics. IEEE Trans. Geosci. Remote Sens. 2008, 46, 1301–1312.

- Lord, D.; Desjardins, R.L.; Dube, P.A. Sun-angle effects on the red and near infrared reflectances of five different crop canopies. Can. J. Remote Sens. 1988, 14, 46–55.

- Rodríguez-Pérez, J.; Ordóñez, C.; González-Fernández, A.; Sanz-Ablanedo, E.; Valenciano, J.; Marcelo, V. Leaf Water Content Estimation By Functional Linear Regression of Field Spectroscopy Data. Biosyst. Eng. 2018, 165, 36–46.

- Huber, S.; Tagesson, T.; Fensholt, R. An Automated Field Spectrometer System For Studying VIS, NIR and SWIR Anisotropy For Semi-Arid Savanna. Remote Sens. Environ. 2014, 152, 547–556.

- Codella, N.C.F.; Gutman, D.; Celebi, M.E.; Helba, B.; Marchetti, M.A.; Dusza, S.W.; Kalloo, A.; Liopyris, K.; Mishra, N.; Kittler, H.; et al. Skin lesion analysis toward melanoma detection: A challenge at the 2017 International symposium on biomedical imaging (ISBI), hosted by the international skin imaging collaboration (ISIC). arXiv 2018, arXiv:1710.05006.

- Iglovikov, V.; Mushinskiy, S.; Osin, V. Satellite Imagery Feature Detection using Deep Convolutional Neural Network: A Kaggle Competition. arXiv 2017, arXiv:1706.06169.

- Tian, C.; Li, C.; Shi, J. Dense Fusion Classmate Network for Land Cover Classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Salt Lake City, UT, USA, 18–22 June 2018.

| Parameter | PAN | MS |
|---|---|---|
| Spatial resolution (m) | 0.46 | 1.85 |
| Radiometric resolution (bits/pixel) | 11 | 11 |
| Spectral bands (nm) | 450–800 (VNIR) | 400–450 (coastal); 450–510 (blue); 510–580 (green); 585–625 (yellow); 630–690 (red); 705–745 (red edge); 770–895 (NIR1); 860–1040 (NIR2) |
| Temporal resolution | <2 days; 3.7 days at 20° off-nadir or less | same as PAN |
| Field of view | 16.4 × 112 km (single strip) | same as PAN |
| Orbit | Geocentric sun-synchronous; altitude 770 km | same as PAN |

| Model | Description |
|---|---|
| M1 | Panchromatic band only. |
| M2 | Panchromatic band and the 8 multispectral bands at native resolution. |
| M3 | R-G-B (3 pan-sharpened bands). |
| M4 | R-RE-NIR1 (3 pan-sharpened bands: red, red edge, NIR1). |
| M5 | Panchromatic band and NDVI (derived from the pan-sharpened bands). |
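The input stacks in the model table can be assembled along the following lines. This numpy sketch is an illustration under stated assumptions, not the authors' pipeline: it assumes the pan-sharpened bands are an (8, H, W) array on the PAN grid, ordered as the WorldView-2 MS bands in the sensor table, and that NDVI for M5 uses the red and NIR1 bands (the source does not say which NIR band). The names `BANDS`, `BAND_INDEX`, `ndvi` and `build_input` are hypothetical. M2 is left out because it pairs the PAN band with MS bands at their native, coarser resolution, which a single array stack cannot hold without resampling.

```python
import numpy as np

# Hypothetical band order for the pan-sharpened stack, following the MS bands in the sensor table.
BANDS = ("coastal", "blue", "green", "yellow", "red", "red_edge", "nir1", "nir2")
BAND_INDEX = {name: i for i, name in enumerate(BANDS)}


def ndvi(red: np.ndarray, nir: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """NDVI = (NIR - Red) / (NIR + Red); eps avoids division by zero on dark pixels."""
    red = red.astype(np.float32)
    nir = nir.astype(np.float32)
    return (nir - red) / (nir + red + eps)


def build_input(model: str, pan: np.ndarray, ms_sharp: np.ndarray) -> np.ndarray:
    """Return a (channels, H, W) float32 stack for models M1, M3, M4 or M5.

    pan:      (H, W) panchromatic band.
    ms_sharp: (8, H, W) pan-sharpened multispectral bands on the PAN grid.
    """
    if ms_sharp.shape != (len(BANDS), *pan.shape):
        raise ValueError("expected 8 pan-sharpened bands on the PAN grid")

    def pick(*names: str) -> np.ndarray:
        # Fancy indexing on the band axis keeps the requested channel order.
        return ms_sharp[[BAND_INDEX[n] for n in names]].astype(np.float32)

    if model == "M1":
        return pan[np.newaxis].astype(np.float32)
    if model == "M3":
        return pick("red", "green", "blue")
    if model == "M4":
        return pick("red", "red_edge", "nir1")
    if model == "M5":
        index = ndvi(ms_sharp[BAND_INDEX["red"]], ms_sharp[BAND_INDEX["nir1"]])
        return np.stack([pan.astype(np.float32), index])
    raise ValueError(f"unsupported model {model!r}; M2 needs native-resolution MS bands")


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    pan = rng.random((64, 64))
    ms = rng.random((8, 64, 64))
    print(build_input("M5", pan, ms).shape)  # (2, 64, 64)
```

Keeping band selection by name rather than by raw index makes the M3 and M4 definitions read the same way as the table, and a different band order only requires editing `BANDS`.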

| Image | Model | Precision | Recall | JI | Area Ratio |
|---|---|---|---|---|---|
| Image 1 | M1 | 0.83 | 0.82 | 0.71 | 1.01 |
| | M2 | 0.88 | 0.83 | 0.74 | 1.06 |
| | M3 | 0.78 | 0.81 | 0.66 | 0.96 |
| | M4 | 0.80 | 0.83 | 0.69 | 0.96 |
| | M5 | 0.87 | 0.81 | 0.72 | 1.06 |
| Image 2 | M1 | 0.78 | 0.72 | 0.60 | 1.08 |
| | M2 | 0.77 | 0.77 | 0.63 | 1.00 |
| | M3 | 0.78 | 0.72 | 0.60 | 1.08 |
| | M4 | 0.80 | 0.73 | 0.62 | 1.09 |
| | M5 | 0.78 | 0.73 | 0.61 | 1.07 |
| Image 3 | M1 | 0.95 | 0.95 | 0.91 | 1.00 |
| | M2 | 0.96 | 0.95 | 0.92 | 1.01 |
| | M3 | 0.94 | 0.94 | 0.88 | 1.00 |
| | M4 | 0.95 | 0.95 | 0.91 | 1.00 |
| | M5 | 0.96 | 0.95 | 0.91 | 1.01 |
| Image 6 | M1 | 0.95 | 0.98 | 0.93 | 0.98 |
| | M2 | 0.95 | 0.98 | 0.93 | 0.97 |
| | M3 | 0.95 | 0.96 | 0.91 | 0.98 |
| | M4 | 0.95 | 0.96 | 0.91 | 0.99 |
| | M5 | 0.93 | 0.98 | 0.92 | 0.95 |

| CV | Precision | Recall | Area Ratio | JI |
|---|---|---|---|---|
| Across models | | | | |
| Image 1 | 5 | 1 | 5 | 5 |
| Image 2 | 1 | 4 | 4 | 3 |
| Image 3 | 1 | 1 | 1 | 2 |
| Image 6 | 1 | 1 | 2 | 1 |
| Across images | | | | |
| Model 1 | 10 | 14 | 4 | 20 |
| Model 2 | 10 | 11 | 4 | 18 |
| Model 3 | 11 | 13 | 5 | 21 |
| Model 4 | 9 | 13 | 7 | 21 |
| Model 5 | 9 | 13 | 6 | 19 |
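The coefficient of variation (CV) in the table above is presumably the standard deviation divided by the mean, expressed as a percentage. The sketch below assumes the sample standard deviation (n − 1 denominator); under that assumption it reproduces the "CV across images" row for Model 1 (10, 14, 4, 20) from the M1 scores for Images 1, 2, 3 and 6 in the precision, recall, JI and area-ratio table. The function name `cv_percent` is hypothetical.

```python
from statistics import mean, stdev


def cv_percent(values: list[float]) -> float:
    """Coefficient of variation in percent, using the sample standard deviation."""
    return 100.0 * stdev(values) / mean(values)


# M1 scores for Images 1, 2, 3 and 6, copied from the per-image results table.
m1_scores = {
    "precision": [0.83, 0.78, 0.95, 0.95],
    "recall": [0.82, 0.72, 0.95, 0.98],
    "area_ratio": [1.01, 1.08, 1.00, 0.98],
    "jaccard": [0.71, 0.60, 0.91, 0.93],
}

if __name__ == "__main__":
    for name, values in m1_scores.items():
        print(f"{name}: {cv_percent(values):.0f}")
```

Using the population standard deviation instead would give smaller values (about 18 rather than 20 for JI), so the choice of estimator matters when comparing CVs computed from only four images.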

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Jones, E.G.; Wong, S.; Milton, A.; Sclauzero, J.; Whittenbury, H.; McDonnell, M.D. The Impact of Pan-Sharpening and Spectral Resolution on Vineyard Segmentation through Machine Learning. Remote Sens. 2020, 12, 934. https://doi.org/10.3390/rs12060934
