1. Introduction

Remote sensing technology has developed rapidly in the past few decades, and remote sensing platforms have gradually diversified to include ground, aerial, and satellite platforms. Satellites, airplanes, and unmanned aerial vehicles (UAVs) have been the main carriers for acquiring remote sensing images. In particular, UAVs offer strong maneuverability, convenient operation, low sensitivity to cloud cover, and strong data acquisition capabilities [1,2,3], which has led to their wide application in various fields [4,5,6,7,8,9,10,11,12]. However, efficiently locating UAV images without geo-tags and navigating a UAV when a positioning system (e.g., GPS) is unavailable remain challenging. Image-based geo-localization is an emerging technology in cross-view information integration and offers a new approach to UAV image localization and navigation: it locates images without geo-tags by reference to images with geo-tags. The key to solving this problem is cross-view image matching between the images without geo-tags and the images with geo-tags.

Early studies of cross-view image matching were based on the ground view [13,14,15,16]. However, ground-view images with geo-tags are usually limited to small spatial and temporal scales and are difficult to obtain. In contrast, satellite-view images offer wide spatiotemporal coverage and carry geo-tags. Traditional satellite-view image processing methods (e.g., image classification, object detection, and semantic segmentation) use only the surface feature information captured by satellite images [17,18,19,20,21,22,23,24,25,26], and the geo-tags of the satellite-view images are usually neglected. To make full use of these geo-tags to locate images from other views, the research community began to pay attention to cross-view image matching between the satellite view and other views. A series of image datasets for cross-view image matching, usually drawn from the ground view and the satellite view, has been released. Lin et al. [27] used public image data sources to construct 78,000 image pairs between aerial images and ground-view images. Inspired by this idea, Tian et al. [28] collected ground-view (street-view) images and aerial-view images of buildings in different cities (including Pittsburgh, Orlando, and Manhattan) and produced image pairs. Besides these two datasets, CVUSA [29] and CVACT [30] are two further datasets for cross-view image matching. In both CVUSA and CVACT, each location has one image pair, which consists of a panoramic ground-view image and a satellite-view image.

Based on these datasets, a series of cross-view image matching methods were developed [29,30,31,32,33,34,35,36,37]. Bansal et al. [31] used the self-similarity structure of facade patterns to match ground-view images to an aerial gallery, which demonstrated the feasibility of matching ground-view images with aerial-view images. Lin et al. [32] used the mean of aerial image features or ground attribute image features as labels and then used support vector machines (SVMs) to classify ground-view images to realize cross-view image matching. With the continuous development of deep learning, the convolutional neural network (CNN) has shown good performance in image feature expression [36,38,39,40,41]. Since then, CNNs have been widely used for cross-view image matching.

CNN-based methods can be divided into two types. The first type aligns the image features of one view with the features of another view. Lin et al. [32] used a CNN to search for the matching factor between the aerial-view and ground-view images and found that the matching accuracy was significantly improved, which laid a good foundation for later work using deep learning for cross-view image matching. After extracting buildings through object detection, Tian et al. [28] used the buildings as a bridge between the ground-view and aerial-view images to perform cross-view image matching and achieve geo-localization in urban environments. Based on the CVUSA dataset, Zhai et al. [29] proposed a strategy for extracting the semantic features of the satellite view. They first extracted the features of the satellite-view image with a CNN and then mapped these features to the ground view to obtain ground-like view features. The ground-like view features were then compared with the semantic features extracted directly from the ground-view image, and the difference was minimized through end-to-end training. Finally, the ground-like view features extracted by the model were matched with the ground-view features in the test set to complete the cross-view image matching and realize geo-localization. Since then, CVUSA has been widely used in cross-view image matching studies, and a series of methods have been proposed. Hu et al. [42] used a two-branch CNN to extract the local features of the ground-view image and the satellite-view image and then used a NetVLAD layer to aggregate the extracted local features into a global description vector [43,44]. Through end-to-end training, the distance between the global description vectors of positive samples from the two views is minimized, and the distance between negative samples is maximized. Cross-view image matching at the same location is then realized based on the distance between the global description vectors of the two views. Shi et al. [45] also used a two-branch CNN to extract image features from the two views. Instead of directly optimizing the distance between positive and negative samples, they recombined ground-view features through feature transport to obtain satellite-like view features and then optimized the distance between the satellite-view features and the satellite-like view features. Based on the CVACT dataset, Liu and Li [30] added the orientation information of the panoramic ground-view image to the deep learning model, which achieved outstanding performance in matching panoramic ground-view images with satellite-view images. This method provides a novel perspective for cross-view image matching.

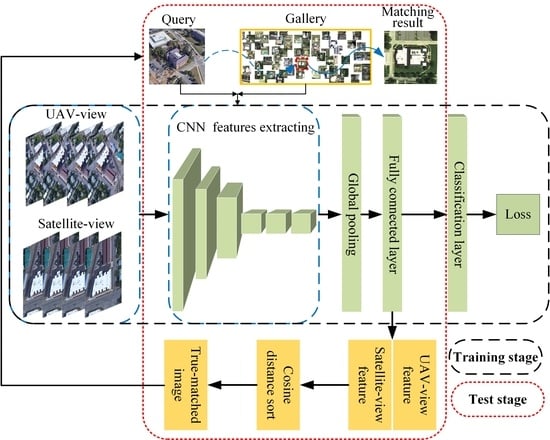

The second type maps the features of different views to the same space following the idea of classification. The datasets used by the first type usually have only one image pair at a target location (only one image of each view), so it is infeasible to treat the images from different views of a target location as a class and learn the image features of that location. The second type, in contrast, treats the images from different views of a target location as one class. Workman and Jacobs [36] found that a classification model trained on the place dataset performed well in the feature recognition of other places. Zheng et al. [46] used a two-branch CNN and category labels to match UAV-view, satellite-view, and ground-view images.

Existing cross-view image matching methods mostly target the aerial view (including the satellite view) and the ground view and use datasets that have only a single image pair at each target location. These methods do not consider the similarity between the satellite-view image and the UAV-view image, which makes them difficult to apply to cross-view image matching between the satellite view and the UAV view. To overcome this shortcoming, we propose a cross-view image matching method based on location classification (LCM). In this paper, the impacts of expanding a single satellite image, the length of image features, and multiple queries on matching accuracy are also explored.

2. Datasets

The dataset used in this study is University-1652, released by Zheng et al. [46], which contains 1652 buildings (1652 locations) from 72 universities worldwide. Each building is covered by images from three different views: satellite view, UAV view, and ground view (street view). Each building in the dataset has only one satellite-view image, 54 UAV-view images from different heights and angles, and one or more ground-view images. Satellite-view images and UAV-view images are adopted in this paper. Please note that the satellite-view images may be satellite images or aerial images, because users cannot tell whether a given satellite-view image in University-1652 comes from a satellite or an aircraft. Because the views of aerial images and satellite images are close, the term “satellite-view image” is used for both in this study. In addition, the satellite-view images are vertical and the UAV-view images are oblique.

Figure 1 shows several images from the three views.

University-1652 was selected as the experimental data for five reasons. First, to the best of our knowledge, it is the only dataset containing both UAV-view and satellite-view images to date. Second, it contains many scenes that are widely distributed and is suitable to be used to train and test models. Third, the target buildings in the images are all ordinary buildings without landmarks, which excludes the influence of special styles on the experiment. Fourth, it has enough image samples for each target building. Fifth, the scales of the satellite-view images and the UAV-view images are similar.

The satellite-view images with geo-tags are from Google Maps. Google Maps images have high spatial resolutions (from level 18 to level 20, the spatial resolution ranges from 1.07 m to 0.27 m), which gives them a scale similar to that of the UAV images. This feature is beneficial to cross-view image matching between the UAV view and the satellite view. Satellite-view image acquisition is divided into three steps. Firstly, the data owner obtains the metadata (building names and affiliations) of the university buildings from the websites. Secondly, the data owner obtains the geo-tag (longitude and latitude, in the WGS84 coordinate system) of the geometric center of each building on Google Maps based on the metadata. Finally, the data owner acquires the satellite-view images containing the target building and its surrounding environment based on the geo-tags.

Due to airspace control and high cost, real UAV-view images of 1652 buildings are difficult to obtain by actual flight. An alternative solution is to replace the real UAV-view images with synthetic UAV-view images obtained through simulation. The synthetic UAV-view images are obtained by simulating UAV flight based on the 3D building models on Google Earth through the following steps. First, the 3D model of the corresponding building was found according to the name and geo-tag. Second, the UAV simulation video of the 3D building and its surrounding environment was obtained according to the pre-set UAV flight path. The schematic diagram of the UAV simulation flight is shown in Figure 2. To make the synthesized images contain multi-angle information about the buildings, the UAV flight path is set to a spiral curve. Throughout the simulation process, the flight height is reduced from 256 to 121.5 m. This flight path makes the synthesized images close to the real situation [47,48]. We refer to the synthetic images as “UAV-view images” (please note, not “UAV images”) because they were obtained from a simulated UAV view and are therefore close to real UAV images. It should be noted that only 1402 of the 1652 buildings have 3D models, and the remaining 250 buildings lack 3D models or street-view images. In addition, real UAV images of ten buildings are provided.
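As a rough illustration of the spiral flight path described above, the following sketch generates camera positions on a descending spiral around a building placed at the origin. Only the start and end heights (256 m and 121.5 m) and the count of 54 views per building come from the text; the function name `spiral_waypoints`, the number of turns, the constant radius, and the assumption of one waypoint per image are hypothetical choices made for illustration and do not describe the actual simulation settings.

```python
import math

def spiral_waypoints(n_frames=54, h_start=256.0, h_end=121.5,
                     n_turns=3, radius=100.0):
    """Return (x, y, z) positions on a descending spiral around the origin.

    n_turns and radius are illustrative assumptions, not dataset parameters.
    """
    points = []
    for i in range(n_frames):
        t = i / (n_frames - 1)                 # 0 at the first frame, 1 at the last
        angle = 2.0 * math.pi * n_turns * t    # angular position around the building
        z = h_start + (h_end - h_start) * t    # height falls linearly with t
        points.append((radius * math.cos(angle), radius * math.sin(angle), z))
    return points

path = spiral_waypoints()
print(len(path), path[0][2], path[-1][2])
```

Because the camera circles the target while descending, consecutive frames see the building from different azimuths and heights, which is the multi-angle property the spiral path is meant to provide.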

4. Experimental Results and Discussion

4.1. Matching Accuracy of LCM’s Baseline Model

In the LCM’s baseline model, the output size of the fully connected layer behind the backbone network is set to 512. Although the fully connected layer (feature size) is set to different dimensions in Section 4.3, its output size defaults to 512 in all other experiments. The initial learning rates of the backbone network and the newly added layers are set to 0.001 and 0.01, respectively. During training, the learning rate is decayed every 80 epochs with a decay rate of 0.1. The dropout rate is set to 0.5. The model is optimized with stochastic gradient descent with a momentum of 0.9. In addition, all images are resampled to 384 × 384 before being input to the network.
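The learning-rate schedule can be made concrete with a small sketch. It assumes that "decay every 80 epochs with a decay rate of 0.1" means a step schedule in which the rate is multiplied by 0.1 at epochs 80, 160, and 240; the helper name `step_lr` is hypothetical.

```python
def step_lr(base_lr, epoch, step=80, gamma=0.1):
    """Learning rate at a given epoch under step decay (assumed interpretation)."""
    return base_lr * gamma ** (epoch // step)

BACKBONE_LR = 0.001    # initial rate of the backbone network (from the text)
NEW_LAYER_LR = 0.01    # initial rate of the newly added layers (from the text)

for epoch in (0, 79, 80, 160, 249):
    print(epoch, step_lr(BACKBONE_LR, epoch), step_lr(NEW_LAYER_LR, epoch))
```

Under this reading, the newly added layers keep a rate ten times that of the backbone throughout the 250 training epochs.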

As mentioned above, there is only one satellite-view image of each target location, but there are 54 UAV-view images. Therefore, satellite-view images account for a very low proportion of the training samples (1.8%), and the model has few chances to learn feature expressions from satellite-view images during training. A model trained on such an unbalanced training dataset may not fully express the features of the satellite-view image, which may decrease the matching accuracy. To address this, the number of satellite-view images of a target location was expanded from 1 to 54 by generating new satellite-view images through random rotation, cropping, and erasing. With this strategy, each target location in the training dataset has 54 UAV-view images and 54 satellite-view images.
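The sketch below reproduces the 1.8% figure (1 of 55 images per location) and illustrates one way of expanding a single satellite-view image to 54 samples. The augmentation is deliberately simplified and rests on several assumptions: the image is a small square 2-D list, rotation is restricted to multiples of 90 degrees, the crop and erase sizes are fixed, and the original image is kept as one of the 54. The function names are hypothetical.

```python
import random

def satellite_share(n_sat, n_uav=54):
    """Fraction of one location's training images that are satellite-view."""
    return n_sat / (n_sat + n_uav)

def augment(img, rng):
    """Randomly rotate, crop, and erase a square 2-D list of pixel values."""
    for _ in range(rng.randrange(4)):              # rotate by 0, 90, 180, or 270 degrees
        img = [list(row) for row in zip(*img[::-1])]
    n = len(img)
    m = n - 2                                      # crop to an (n-2) x (n-2) window
    top, left = rng.randrange(n - m + 1), rng.randrange(n - m + 1)
    img = [row[left:left + m] for row in img[top:top + m]]
    r, c = rng.randrange(m - 1), rng.randrange(m - 1)
    for i in range(r, r + 2):                      # erase a 2 x 2 patch
        for j in range(c, c + 2):
            img[i][j] = 0
    return img

def expand(img, n_target=54, seed=0):
    """Return the original image plus n_target - 1 augmented copies."""
    rng = random.Random(seed)
    return [img] + [augment(img, rng) for _ in range(n_target - 1)]

image = [[1 + r * 8 + c for c in range(8)] for r in range(8)]
views = expand(image)
print(round(100 * satellite_share(1), 1), round(100 * satellite_share(len(views)), 1))
```

In practice the cropped images would be resampled to the network input size, and rotation angles need not be limited to multiples of 90 degrees.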

The variations of two significant parameters, i.e., loss and classification error, during the training process are shown in Figure 5. Loss is the average value of the cross entropy; classification error is the average probability of an image being misclassified. Because the LCM is not a classification model, the loss and classification error on the test dataset cannot be calculated. To enable the model to learn the optimal parameters, 250 epochs are used in this study. A smaller loss and classification error mean that the model can more accurately classify satellite-view images and UAV-view images of the same target location into one class, which suggests that the model can map image features from the two views to the same space. Besides, if a target building is included in the training dataset, the method is able to classify images of the building with the same origin (both UAV or both satellite) into the same class. If a target building is not included in the training dataset, the method will classify images of the building with the same origin (both UAV or both satellite) into the same class by relying on the feature similarity between the image of the target building and the images in the relevant gallery. After 50 epochs, the loss and classification error are quickly reduced to 0.198 and 3.56%, respectively. After 100 epochs, the loss and classification error tend to converge. After 250 epochs (approximately 58 min), the loss and classification error are reduced to 0.045 and 1.16%, respectively. These results show that the LCM has the advantages of fast convergence and short training time. In addition, the results indicate that feeding the satellite-view and UAV-view images of the same target location into the classification model without distinguishing between them performs well. In other words, the proposed LCM can map image features from two different views to the same space through classification.
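For readers unfamiliar with the two training curves, the sketch below shows how an average cross-entropy loss and a classification error could be computed from per-image class scores. It assumes a softmax over location classes and reads "classification error" as the fraction of images whose top-scoring class differs from the true location; both the reading and the function names are assumptions rather than details taken from the paper.

```python
import math

def softmax(logits):
    """Convert raw class scores to probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def loss_and_error(batch_logits, labels):
    """Return (mean cross entropy, fraction misclassified) for a batch."""
    total_loss, wrong = 0.0, 0
    for logits, y in zip(batch_logits, labels):
        probs = softmax(logits)
        total_loss += -math.log(probs[y])              # cross entropy for the true class
        predicted = max(range(len(probs)), key=probs.__getitem__)
        wrong += predicted != y
    n = len(labels)
    return total_loss / n, wrong / n

# Two images, three location classes; the second image is misclassified.
loss, error = loss_and_error([[2.0, 0.0, 0.0], [0.0, 2.0, 0.0]], [0, 2])
print(loss, error)
```

Satellite-view and UAV-view images of the same building share one label `y`, which is what pushes their features toward the same region of the feature space.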

The comparison results of the LCM’s baseline model and the Zheng model are shown in Table 2. When single satellite-view images are used as the query, the Recall@1, Recall@10, and AP of the LCM are higher by 5.42%, 6.65%, and 5.93%, respectively, than those of the Zheng model. When single UAV-view images are used as the query, the Recall@1, Recall@10, and AP of the LCM are higher by 8.16%, 4.79%, and 7.69%, respectively, than those of the Zheng model. Overall, the LCM can more effectively extract the image features of the two views, thereby improving the matching accuracy. In addition, the improvements in Recall@1 and AP are larger for UAV-to-satellite than for satellite-to-UAV.

At present, the first image in the ranking of the matching result is not guaranteed to be the true-matched image of the query image. When it is not, we need to manually find the true-matched image of the query image according to the ranking of the matching results. Table 2 shows that, for the LCM, the probability of finding the true-matched image of the query in the top ten of the ranking is higher than 90%. This means that although the LCM cannot automatically give the correct location of the query in some cases, it still allows us to find the true-matched image efficiently among a small number of candidates.

To further qualitatively evaluate the LCM’s baseline model, the visualization results of two cases are shown in Figure 6 and Figure 7. For each location (a row of images), the image on the left of the red line in the figure is the query image, and the five images on the right of the red line are the top five images in the ranking of the matching result.

For satellite-to-UAV (Figure 6), the LCM’s baseline model can find the images at the same target location as the query image in the UAV-view gallery, and the top five images in the ranking of the matching result contain multiple true-matched images. The reason is that there are 54 images in the UAV-view gallery that can be correctly matched with each satellite image. For UAV-to-satellite, Figure 7 shows that the top five images in the ranking of the matching result contain only one true-matched image. The reason is that there is only one image in the satellite-view gallery that can be correctly matched with each UAV image. A closer look at Figure 7 shows that the false-matched images have similar structures and features to the query images. This indicates that the LCM’s baseline model can effectively capture images with similar characteristics to the query image, which benefits cross-view image matching.

To further compare and analyze the influence of different types and depths of CNNs on the LCM, we used ResNet-50, VGG-16, and DenseNet-121 as the LCM’s backbone network [52,53]. The matching accuracies of the LCM using these three backbone networks are shown in Table 3. VGG-16 has the worst performance among the three backbone networks. For satellite-to-UAV (UAV-to-satellite), the Recall@1, Recall@5, Recall@10, and AP of the LCM are lower by 11.84% (18.46%), 7.31% (12.24%), 7.01% (9.67%), and 17.55% (11.37%), respectively, when VGG-16 rather than ResNet-50 is used as the backbone network. A possible reason is that VGG-16 has fewer layers, which results in a weaker ability of feature expression than ResNet-50. The accuracy of DenseNet-121 is slightly lower than that of ResNet-50. For satellite-to-UAV (UAV-to-satellite), the Recall@1, Recall@5, Recall@10, and AP of the LCM are lower by 2.14% (1.64%), 12.24% (0.3%), 0.59% (1.15%), and 6.12% (1.33%), respectively, when DenseNet-121 rather than ResNet-50 is used as the backbone network. Although DenseNet-121 has more network layers, it does not perform better than ResNet-50 in this task. A possible reason is that the features extracted by DenseNet-121 are not well suited to the LCM. This result suggests that a network deeper and more complex than ResNet-50 does not necessarily improve the accuracy of the LCM’s baseline. ResNet-50 has the best and most stable performance among the three backbone networks for the LCM’s baseline. In the following experiments, unless otherwise specified, ResNet-50 serves as the backbone network.

4.2. Influence of Satellite Image Expansion on the Matching Accuracy

In the LCM’s baseline model, we expanded the number of satellite-view images of a target location to 54 (the same number as the UAV-view images). Does the number of satellite-view images affect the matching accuracy? Do 54 satellite-view images per target location in the training dataset give the best matching accuracy for the LCM? To answer these questions, we conducted the following comparative experiment. Random rotation, cropping, and erasing operations were performed on the satellite-view image of each target location (consistent with the satellite-view image expansion method in the LCM’s baseline model). In this way, new satellite-view images were generated so that each target location had 1, 3, 9, 18, 27, 54, or 81 satellite-view images. The training datasets containing these different numbers of satellite-view images per target location were used to train models. The matching accuracies of these models on the test dataset are shown in Table 4.

According to Table 4, when we do not expand the number of satellite-view images (namely, only one satellite image of each target location is used), the LCM has the lowest matching accuracy. As the number of satellite-view image samples increases, Recall@K and AP gradually increase, and the upward trend gradually slows down. When the numbers of satellite-view images and UAV-view images of a target location are the same, Recall@K and AP reach their maximum values. When the number of satellite-view images at a target location increases from 1 to 54 for satellite-to-UAV, Recall@1, Recall@5, Recall@10, and AP increase by 9.99%, 8.60%, 8.31%, and 6.94%, respectively; for UAV-to-satellite, Recall@1, Recall@5, Recall@10, and AP increase by 7.04%, 5.65%, 5.09%, and 6.71%, respectively. When the number of satellite-view images of a target location increases from 54 to 81, Recall@K and AP show a slight decrease. For satellite-to-UAV, Recall@1, Recall@5, Recall@10, and AP decrease by 0.29%, 0.46%, 0.59%, and 1.29%, respectively; for UAV-to-satellite, Recall@1, Recall@5, Recall@10, and AP decrease by 2.03%, 1.24%, 1.60%, and 1.89%, respectively. A possible reason for this phenomenon is the imbalance of the image samples of the two views: when there are more image samples of view A than of view B, the features expressed by the classification model will be closer to the feature space of view A.

In general, the Recall@K of satellite-to-UAV is higher than that of UAV-to-satellite, especially Recall@1. This phenomenon can be attributed to the difference in the number of true-matched images of the two views at the same target location. For satellite-to-UAV, when we use a satellite-view image as the query, there are 54 true-matched images in the UAV-view gallery. In this case, if any of the 54 true-matched images appears before the (K + 1)-th image in the ranking of the matching result, Recall@K is set to 1. For UAV-to-satellite, when we use a UAV-view image as the query, there is only one true-matched image in the satellite-view gallery. In this case, Recall@K is set to 1 only if this true-matched satellite-view image appears before the (K + 1)-th image in the ranking of the matching result. Therefore, the Recall@K of satellite-to-UAV is expected to be higher than that of UAV-to-satellite on the same test dataset.
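The Recall@K rule described above can be written as a short sketch. Each ranking is represented as a list of gallery location labels ordered from the best match to the worst; the function names and the toy labels are hypothetical.

```python
def recall_at_k(ranked_gallery_labels, query_label, k):
    """Return 1.0 if any of the top-k gallery items is at the query's location."""
    return 1.0 if query_label in ranked_gallery_labels[:k] else 0.0

def mean_recall_at_k(rankings, query_labels, k):
    """Average the per-query Recall@K over all queries."""
    hits = [recall_at_k(r, q, k) for r, q in zip(rankings, query_labels)]
    return sum(hits) / len(hits)

# Satellite-to-UAV: several gallery images share the query location "b7".
sat_to_uav = ["b7", "b2", "b7", "b7", "b5"]
# UAV-to-satellite: exactly one gallery image is at location "b7".
uav_to_sat = ["b2", "b5", "b7", "b9", "b1"]

print(recall_at_k(sat_to_uav, "b7", 1), recall_at_k(uav_to_sat, "b7", 1))
print(mean_recall_at_k([sat_to_uav, uav_to_sat], ["b7", "b7"], 1))
```

With many true matches in the gallery, a single one of them reaching the top K is enough for a hit, which is why satellite-to-UAV tends to score higher than UAV-to-satellite under the same metric.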

According to the above comparative experiment, it is evident that when the number of the satellite-view images at a target location is expanded to 54 (the number is the same as the number of UAV-view images), the trained model will show the best performance.

4.3. Matching Accuracy of Different Feature Sizes

In Section 4.1, the feature vector used to calculate the CS has 512 dimensions (the output size of the fully connected layer after the backbone network is 512). We therefore want to explore the following question: does the size of the feature vector used to calculate the CS affect the experimental results? To answer this question, we embedded fully connected layers with different output sizes into the LCM, trained the resulting models, and tested them. Five feature sizes, 256, 384, 512, 768, and 1024, were selected for comparison. Figure 8 shows the relationship between the cross-view matching accuracy and the feature size.
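To make the role of the feature vector concrete, the sketch below ranks gallery images by similarity to a query feature. It assumes that CS denotes cosine similarity, which this section does not define; the function names and the two-dimensional toy features (standing in for 512-dimensional ones) are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank_gallery(query, gallery):
    """Return gallery indices ordered from most to least similar to the query."""
    return sorted(range(len(gallery)),
                  key=lambda i: cosine_similarity(query, gallery[i]),
                  reverse=True)

query = [1.0, 0.0]
gallery = [[0.0, 1.0], [2.0, 0.0], [1.0, 1.0]]
print(rank_gallery(query, gallery))
```

The feature size only changes the length of the vectors passed to `cosine_similarity`; the ranking procedure itself stays the same.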

As shown in Figure 8, a larger feature size does not necessarily lead to a higher Recall@K and AP. The relationship between the matching accuracy and the feature size can be divided into two cases. In the first case, when the feature size ranges from 256 to 512, Recall@K and AP become larger as the feature size increases, and the LCM reaches its best matching accuracy at a feature size of 512. However, the matching accuracy varies relatively little in this case. For satellite-to-UAV, Recall@1, Recall@5, Recall@10, and AP increase by 0.57%, 1.32%, 0.59%, and 0.51%, respectively; for UAV-to-satellite, Recall@1, Recall@5, Recall@10, and AP increase by 1.51%, 0.57%, 1.07%, and 1.29%, respectively. In the second case, when the feature size ranges from 512 to 1024, Recall@K and AP decrease as the feature size increases, and the lowest matching accuracy occurs at a feature size of 1024. Compared with the first case, the matching accuracy varies more rapidly in this case. For satellite-to-UAV, Recall@1, Recall@5, Recall@10, and AP decrease by 7.56%, 5.60%, 6.01%, and 9.77%, respectively; for UAV-to-satellite, Recall@1, Recall@5, Recall@10, and AP decrease by approximately 10.42%, 8.32%, 7.01%, and 9.91%, respectively. Among the sizes tested, 512 is therefore the optimal feature size, which is why we used it in the LCM (see Section 4.1).

4.4. Matching Accuracy of Multiple Queries

In previous matching experiments, a single UAV-view image was used as a query for UAV-to-satellite. In University-1652, the synthesized UAV-view images were viewed obliquely all around the target building; thus, it is difficult for a single UAV-view query to provide comprehensive information about the target building. Fortunately, University-1652 provides synthetic UAV images at different heights and angles for each target building. These UAV-view images from different viewpoints can provide comprehensive information and characteristics of each target building. This means that for UAV-to-satellite, we can use multiple UAV-view images as a query at the same time. To explore whether multiple queries can improve the matching accuracy of UAV-to-satellite, all synthetic UAV images of a target building were used as the query, and then the image of the same location as the query in the satellite-view gallery was retrieved.

To facilitate the measurement of the correlation between the multi-query images and an image in the gallery, the feature of multiple queries is set as the mean of the single-image features of a target building. In our experiment, the features of the 54 UAV-view images were averaged and used as the feature of multiple queries. The test accuracy of multiple queries is shown in Table 5. Compared with the original single query, multiple queries improve the matching accuracy by about 10% in both Recall@1 and AP. Recall@5 and Recall@10 are also significantly improved. We also compared the matching results of multiple queries of the LCM with those of the Zheng model. The comparison shows that the LCM performs better than the Zheng model when using multiple queries: Recall@1, Recall@5, Recall@10, and AP are higher by 8.56%, 4.57%, 3.42%, and 7.91%, respectively.
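The multi-query feature described above is an element-wise mean of the single-image features. A minimal sketch follows, using three short toy vectors in place of the 54 feature vectors of a building; the function name `mean_feature` is hypothetical.

```python
def mean_feature(features):
    """Element-wise mean of equally sized feature vectors."""
    n = len(features)
    return [sum(column) / n for column in zip(*features)]

# Toy stand-ins for the per-image features of one target building.
uav_features = [
    [1.0, 0.0, 2.0],
    [0.0, 1.0, 2.0],
    [2.0, 2.0, 2.0],
]
multi_query = mean_feature(uav_features)
print(multi_query)
```

The averaged vector has the same length as a single-image feature, so it can be compared with gallery features in exactly the same way as a single query.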

To investigate the effectiveness of multiple queries more clearly, we visualized the matching results of multiple queries and a single query for three target buildings (targets A, B, and C). The visual details are shown in Figure 9. For target buildings A and B, the true-matched satellite-view images appeared in the third and fourth positions in the ranking of the matching results when a single query was used. When multiple queries were used, the true-matched satellite-view images appeared in the first position for both. For target building C, the true-matched satellite-view image did not appear in the top five of the ranking when a single query was used, whereas it appeared in the third position when multiple queries were used. These results demonstrate that multiple queries can indeed improve the matching accuracy of UAV-to-satellite when we use the LCM. For satellite-to-UAV, because only one satellite-view image of the target location can be used as a query, multi-query experiments cannot be conducted.

4.5. Matching Result of the Real UAV-View Image

In previous experiments, the UAV-view images used in the training and testing of the model were all synthetic UAV-view images based on 3D buildings. To further evaluate the performance of the LCM on real UAV-view images, we conducted the following two experiments. Firstly, we used the real UAV-view query to match the synthetic UAV-view images of the same location (hereinafter referred to as RUAV-to-SUAV). Secondly, we used real UAV-view queries to match the satellite-view images of the same location (hereinafter referred to as RUAV-to-Sat). Real UAV-view images are also provided by University-1652. Due to the restrictions of airspace control and privacy protection policies, there are only 10 real UAV-view images; therefore, we only selected a few target buildings with real UAV-view images for experiments. Because there are few real UAV-view images that can be used as queries, Recall@K and AP were not employed as quantitative evaluation indicators here. We only show the visual matching results of the two experiments.

Figure 10 shows the matching results of RUAV-to-SUAV. When real UAV-view images are used as the query, the LCM can accurately match the images of the same location in the synthetic UAV-view gallery. This result not only shows that the LCM is effective in the feature expression of the real UAV-view images but also shows that the synthetic UAV-view images in University-1652 are close to the real scenes.

The matching results of RUAV-to-Sat are shown in Figure 11. The results are consistent with those obtained when synthetic UAV-view images are used as queries to match the satellite-view images. The LCM can successfully match the real UAV-view images with the satellite-view images. The false-matched satellite-view images and the true-matched satellite-view image have similar structural and color features. This further shows that the LCM trained on synthetic UAV-view images also has a good matching performance on real images.

Because the University-1652 dataset does not provide the height and angle of the UAV-view images, we cannot obtain the scale differences of different UAV-view images and the scale differences between satellite-view and UAV-view images. In addition, the scale of different UAV-view images from different heights and the scale of satellite-view and UAV-view images used in this study are close. Therefore, the influence of the scale difference was not considered. However, the scale difference is important for cross-view image matching. Therefore, we will consider the issue in the practical application of the method in future studies.

5. Conclusions

UAV technology has been developed rapidly in recent years. The localization of UAV images without geo-tags and UAV navigation without geographic coordinates are crucial for users. Cross-view image matching is an effective method to realize UAV-view image localization and UAV navigation. However, the algorithms of cross-view image matching between the UAV view and the satellite view are still in their beginning stage, and the matching accuracy is expected to be further improved when applied in real situations.

This study explores the problem of cross-view image matching between UAV-view images and satellite-view images. Based on University-1652, a cross-view image matching method (LCM) for UAV-view image localization and UAV navigation was proposed. The LCM is based on the idea of classification and has the advantages of fast training and high matching accuracy. There are two findings from the experiment: (1) expanding the satellite-view image can improve the sample imbalance between the satellite-view image and the UAV-view image, thereby improving the matching accuracy of the LCM; (2) appropriate feature dimensions and multiple queries can significantly improve the matching accuracy of the LCM. In addition, the LCM trained based on synthetic UAV-view images also shows a good performance in matching real UAV-view images and satellite-view images. Compared with one previous study, various accuracy indicators of matching the UAV-view image and the satellite-view image based on the LCM have been improved by about 5–10%. Therefore, the LCM can better serve the UAV-view image localization and UAV navigation. In the future, we will continue to explore how to further improve the matching accuracy of UAV-view images and satellite-view images and how to use UAV images as an intermediate bridge to improve the matching accuracy of general street-view images and satellite-view images. In addition, please note that the UAV height in University-1652 is unrealistic for practical applications due to airspace regulations. Therefore, in the future, we will consider this issue and simulate the UAV images at a lower flight height.