1. Introduction

Remote sensing systems have effectively supported many application areas over a long period of time. In recent years, however, the integration of these systems with machine learning techniques has come to be considered the state of the art in diverse areas of knowledge, including precision agriculture [1,2,3,4,5,6,7]. Deep learning (DL) is a subgroup of machine learning methods, usually designed as a deep network with many layers of non-linear transformations [8]. A DL model has three main characteristics: (1) it can extract features directly from the dataset itself; (2) it can learn hierarchical features that increase in complexity through the deep network; and (3) it can generalize better than shallower machine learning approaches, such as support vector machines, random forests, and decision trees, among others [8,9].

Feature extraction with deep learning-based methods is found in several applications with remote sensing imagery [10,11,12,13,14,15,16,17,18]. These deep networks are built with different types of architectures that follow a hierarchical type of learning. Architectures frequently adopted in recent years include Unsupervised Pre-Trained Networks (UPN), Recurrent Neural Networks (RNN), and Convolutional Neural Networks (CNN) [19]. CNNs are a class of DL models and the most used for image analysis [20]. CNNs can be used for segmentation, classification, and object detection problems. The use of object detection methods in remote sensing has been increasing in recent years. Li et al. [21] conducted a review survey and proposed a large benchmark for object detection. The authors considered orbital imagery and 20 object classes. They investigated 12 methods and verified that RetinaNet slightly outperformed the others on the proposed benchmark. However, novel methods have since been proposed that have not yet been investigated in several contexts.

In precision farming problems, the integration of DL methods and remote sensing data has also yielded notable improvements. Recently, Osco et al. [15] proposed a CNN-based method to count and geolocate citrus trees in a highly dense orchard using images acquired by a remotely piloted aircraft (RPA). More specifically for fruit counting, Apolo-Apolo et al. [22] applied a Faster R-CNN (faster region-based CNN) technique to estimate the yield and size of citrus fruits using images collected by RPA. Similarly, Apolo-Apolo et al. [23] generated yield estimation maps for apple orchards using RPA imagery and a region-based CNN technique.

Many other examples of object detection and counting in the agricultural context are presented in the literature [2,24,25,26], such as for strawberry [27,28], orange and apple [29], apple and mango [30], and mango [31,32], among others. A recent literature review [32] on the use of DL methods for fruit detection highlighted the importance of developing novel methods that ease the labeling process, since manual annotation to obtain training datasets is a labor-intensive and time-consuming task. Additionally, the authors argued that DL models usually outperformed pixel-wise segmentation approaches based on traditional (shallow) machine learning methods in the fruit-on-plant detection task.

In the context of apple orchards, Dias et al. [33] developed a CNN-based method to detect flowers. They proposed a three-step approach: superpixels to propose regions with spectral similarities; a CNN to generate features; and support vector machines (SVM) to classify the superpixels. Also, Wu et al. [34] developed a YOLO v4-based approach for the real-time detection of apple flowers. Apple leaf disease analysis has also been investigated [35], and Wang et al. [36] proposed a DL approach to edge detection for apple growth monitoring. In apple fruit detection specifically, recent works have been based on CNNs. Tian et al. [37] improved the Yolo-v3 method to detect apples at different growth stages. Kang and Chen [38] proposed an approach for the real-time detection of apple fruits in orchards, reporting an accuracy ranging from 0.796 to 0.853. The Faster R-CNN approach performed slightly better than the remaining methods. Gené-Mola et al. [39] adapted the Faster R-CNN method to detect apple fruits using an RGB-D sensor. These papers pointed out that occlusions may hinder the detection of apple fruits. To cope with this, Gao et al. [40] investigated the use of Faster R-CNN with four fruit classes: non-occluded, leaf-occluded, branch/wire-occluded, and fruit-occluded.

Although they provide very accurate results in this application, Faster R-CNN [41] and RetinaNet [42] date from before 2018, and novel methods have recently been proposed but not yet evaluated for apple fruit detection. One of these is the Adaptive Training Sample Selection (ATSS) [43]. Unlike other methods, ATSS selects positive training samples using an individual intersection over union (IoU) threshold computed for each ground-truth bounding box. Consequently, improvements are expected in the detection of small objects or objects that occupy a small bounding box area. Because it is a recent method, few works have explored it, particularly in remote sensing applications addressing precision agriculture problems. Also, when applied to RGB imagery, the method may be useful for low-cost sensing systems.
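To make the per-object threshold concrete, the sketch below shows ATSS-style positive sample selection for a single ground-truth box. It is a simplification under stated assumptions: a single feature level (the ATSS paper selects the k nearest anchors on each pyramid level, with k = 9 by default), boxes given as (x1, y1, x2, y2), a population standard deviation, and hypothetical function names. It is not the implementation used in this study.

```python
import math
from statistics import mean, pstdev


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def center(box):
    return ((box[0] + box[2]) / 2.0, (box[1] + box[3]) / 2.0)


def atss_positives(anchors, gt, k=9):
    """Select positive anchors for one ground-truth box, ATSS-style.

    Simplified to a single feature level: the k anchors whose centers are
    closest to the ground-truth center become candidates, and the adaptive
    IoU threshold is the mean plus the (population) standard deviation of
    the candidates' IoUs with the ground truth.
    """
    g = center(gt)
    candidates = sorted(range(len(anchors)),
                        key=lambda i: math.dist(center(anchors[i]), g))[:k]
    ious = [iou(anchors[i], gt) for i in candidates]
    threshold = mean(ious) + pstdev(ious)
    positives = []
    for i, v in zip(candidates, ious):
        cx, cy = center(anchors[i])
        # ATSS also requires the anchor center to lie inside the ground truth.
        inside = gt[0] < cx < gt[2] and gt[1] < cy < gt[3]
        if v >= threshold and inside:
            positives.append(i)
    return positives, threshold
```

For example, with a 5 × 5 grid of 40 × 40 anchors and a ground truth aligned with the central anchor, only that anchor exceeds the adaptive threshold (about 0.58); a threshold derived from the candidates' own IoU statistics is what lets the criterion adapt to each object.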

In practice, fruit production is predicted manually by counting the fruits on selected trees and then generalizing the result to the entire orchard. This procedure is still the reality for most, if not all, orchards in Southern Brazil. Because of the natural variability of orchards, such forecasts are inevitably imprecise. Therefore, techniques that count apple fruits more efficiently for each tree, even when some fruits are occluded, help in yield prediction. Fruit monitoring is important from fruit set until the ripe stage; after fruit set, fruits can be monitored weekly. Still, losses may occur until harvest, with apples dropping and lying on the ground due to natural conditions or mechanical injuries. It is important to mention that apple fruits only become visible after the fruit set stage because the ovary is retained under the stimulus of pollination [44]. The final fruit shape is formed one month before harvest and can be monitored to check for productivity losses. This statistic is also key information for the orchard manager and for fruit production forecasting.
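The manual forecasting practice described above amounts to scaling the mean count of sampled trees to the whole orchard. The sketch below, with a hypothetical function name and hypothetical counts, shows that calculation together with an approximate standard error, assuming the sampled trees are a simple random sample and ignoring the finite-population correction.

```python
import math
from statistics import mean, stdev


def extrapolate_orchard_count(sampled_counts, total_trees):
    """Scale the mean fruit count of sampled trees to the whole orchard.

    Returns (estimated total, approximate standard error of that total),
    assuming a simple random sample of at least two trees and ignoring the
    finite-population correction.
    """
    if len(sampled_counts) < 2:
        raise ValueError("need at least two sampled trees")
    per_tree = mean(sampled_counts)
    se_per_tree = stdev(sampled_counts) / math.sqrt(len(sampled_counts))
    return per_tree * total_trees, se_per_tree * total_trees
```

For example, four sampled trees with 100, 120, 80, and 100 fruits in a hypothetical 1,000-tree orchard give an estimate of 100,000 fruits with a standard error of roughly 8,200 fruits, which illustrates how tree-to-tree variability propagates into the forecast.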

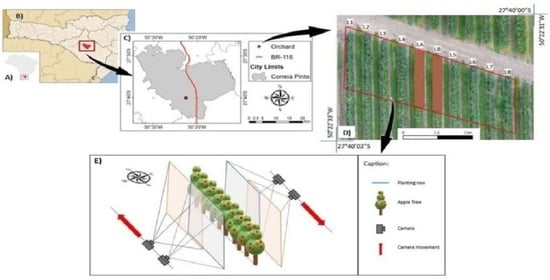

The increasing use of anti-hail plastic net cover in orchards helps to prevent damage caused by hailstorms. Installing protective netting over high-density apple orchards is strongly recommended in regions where heavy convective storms occur, to avoid losses in both production and fruit quality [45,46]. The netting reduces the percentage of injuries to apple trees during critical growth stages, mainly the flowering and fruit development phases. As a result, protective netting has been implemented in different regions of the world, including Southern Brazil [47,48]. On the other hand, the nets hinder the use of remote sensing images acquired from orbital, airborne, and even RPA systems for fruit detection and fruit production forecasting. This highlights the importance of using close-range and low-cost terrestrial RGB images acquired by semi-professional or professional cameras. Such devices can be operated manually or fixed to agricultural machinery or tractors.

Terrestrial remote sensing is also important in this case study because of the scale. Proximal remote sensing also allows the detection of apple fruits occluded by leaves and small branches. In addition, the high spatial resolution enables the detection of injuries caused by diseases, pests, and even climate effects on the fruits themselves, as well as on leaves, branches, and trunks, which would not be possible at all in images acquired by aerial systems.

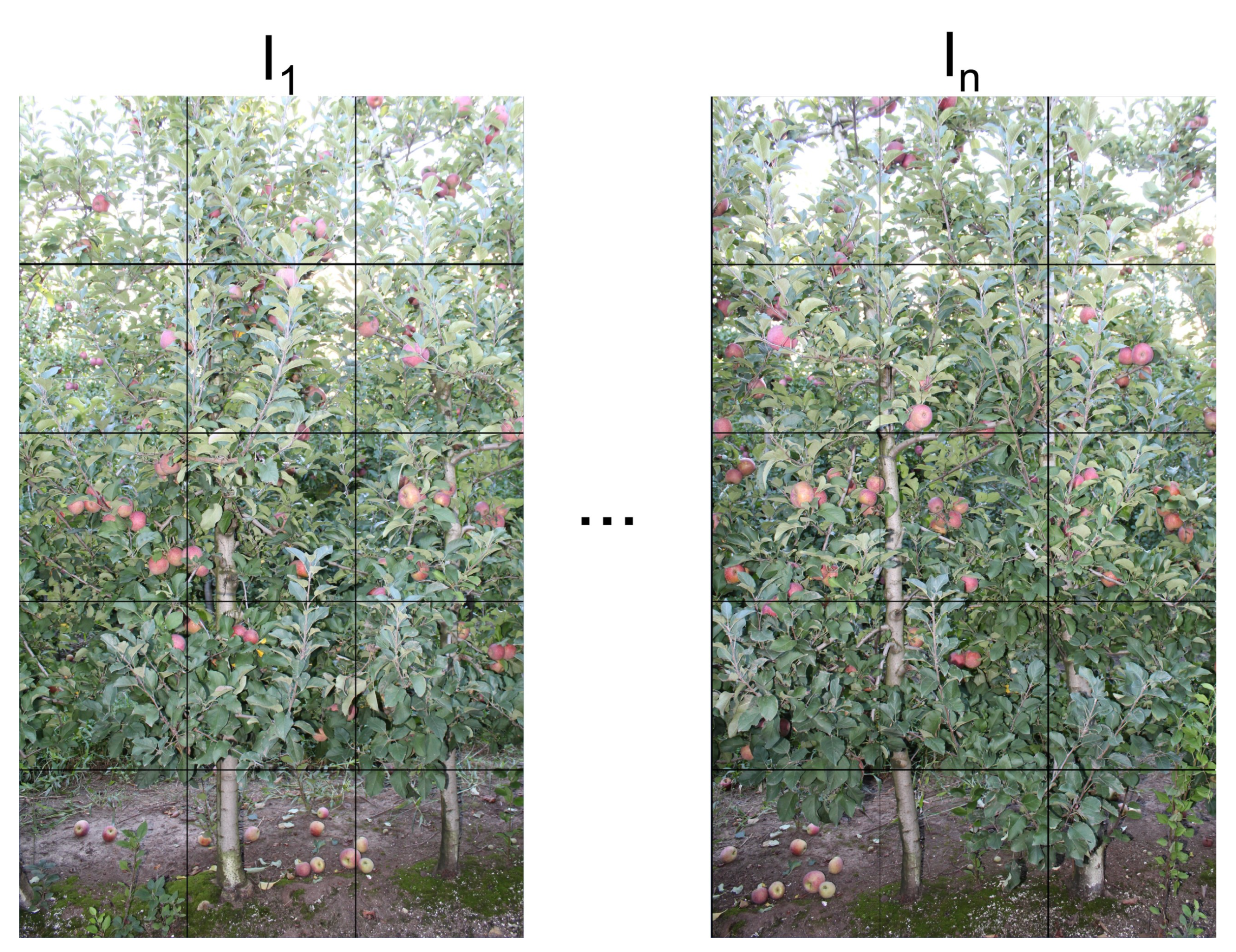

This paper proposes an approach based on the ATSS DL method applied to close-range and low-cost terrestrial RGB images for automatic apple fruit detection. The main characteristic of the approach is that only the center point of each object is labeled. This is advantageous because bounding box annotation, especially in areas with densely clustered fruits, is difficult and time-consuming to perform manually.
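Because only center points are annotated, each point has to be turned into a fixed-size box before a box-based detector can be trained. A minimal sketch of that conversion follows; the function names are hypothetical, boxes are (x1, y1, x2, y2) pixel coordinates, and clipping to the image extent is an assumption of this sketch rather than a documented step of the study.

```python
def point_to_box(x, y, size, width, height):
    """Convert a center-point label into a square box of side `size` pixels.

    The box is clipped to the image extent (width x height), so boxes for
    fruits near the border can be smaller than `size`.
    """
    half = size / 2.0
    return (
        max(0.0, x - half),
        max(0.0, y - half),
        min(float(width), x + half),
        min(float(height), y + half),
    )


def points_to_boxes(points, size, width, height):
    """Apply point_to_box to every (x, y) center label of one image."""
    return [point_to_box(x, y, size, width, height) for x, y in points]
```

For instance, a point at (500, 400) with a 160-pixel box becomes (420, 320, 580, 480), while a point close to the image border is clipped.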

Experiments were conducted comparing this approach with other object detection methods considered state of the art in recent years: Faster-RCNN [41], Libra-RCNN [49], Cascade-RCNN [50], RetinaNet [42], Feature Selective Anchor-Free (FSAF) [51], and HRNet [52]. Moreover, we evaluated the generalization capability of the proposed method by applying simulated corruptions with different severity levels, namely noise (Gaussian, shot, and impulse), blur (defocus, glass, and motion), weather conditions (snow, frost, fog, and brightness), and digital processing (elastic transform, pixelate, and JPEG compression). Finally, we evaluated the effect of bounding box size on the ATSS method, ranging from 80 × 80 to 180 × 180 pixels. Another important contribution of this paper is the public release of the dataset, so that future research can compare novel DL networks in the same context.

3. Results and Discussion

3.1. Apple Detection Performance Using Different Object Detection Methods

The summary results of the apple fruit detection methods are shown in Table 4. For each deep learning-based method, the AP for each plantation line is presented along with its average and standard deviation (std). Experimental results showed that ATSS obtained the highest AP of 0.925 (±0.011), followed by HRNet and FSAF. Traditional object detection methods, such as Faster-RCNN and RetinaNet, obtained slightly lower results, with APs of 0.918 (±0.016) and 0.903 (±0.020), respectively. Nevertheless, all methods achieved AP above 0.9, indicating that automatic apple counting is feasible. Compared with manual counting by human inspection, automatic counting is much faster, with similar accuracy.
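For readers less familiar with the metric, the sketch below computes average precision for one class in one image from scored detections. The exact AP variant behind Table 4 is not restated in this section, so the choices here are assumptions: greedy matching of each detection (in descending score order) to the best still-unmatched ground truth, an IoU threshold of 0.5, and all-point interpolation of the precision-recall curve.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, ground_truths, iou_thr=0.5):
    """AP for one image; detections are (score, box), ground truths are boxes."""
    if not ground_truths:
        return 0.0
    matched = [False] * len(ground_truths)
    hits = []  # 1 for a true positive, 0 for a false positive, by rank
    for _, box in sorted(detections, key=lambda d: d[0], reverse=True):
        best, best_j = 0.0, -1
        for j, gt in enumerate(ground_truths):
            if not matched[j] and iou(box, gt) > best:
                best, best_j = iou(box, gt), j
        if best >= iou_thr:
            matched[best_j] = True
            hits.append(1)
        else:
            hits.append(0)
    precisions, recalls, tp = [], [], 0
    for rank, hit in enumerate(hits, start=1):
        tp += hit
        precisions.append(tp / rank)
        recalls.append(tp / len(ground_truths))
    # All-point interpolation: make precision non-increasing with recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap
```

With two ground-truth apples and three detections ranked TP, FP, TP, the sketch returns 5/6 (about 0.833): the false positive lowers precision before full recall is reached. Pooling detections over all test images before ranking would give a dataset-level AP.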

The last column of Table 4 shows the estimated number of apples detected by each method in the test set. ATSS detected approximately 50 more apples than the second-best method (HRNet or FSAF). It is important to highlight that this result was obtained on three plantation lines; the impact on the entire plantation would be much greater. In addition, despite the small difference in AP, the financial impact on an automatic harvesting system using ATSS, for example, would be considerable.

Examples of apple detection by all object detection methods are shown in Figure 7 and Figure 8. These images present challenging scenarios with high apple occlusion and different lighting conditions. Occlusion and differences in lighting are probably the most common problems faced by this type of detection, as observed in similar applications [29,30,31]. The accuracy obtained by the ATSS method on our dataset was similar or superior to that reported for other tree-fruit detection tasks [67].

These images are challenging even for humans, who would need considerable time to perform a visual inspection. Despite these challenges, the detection methods presented good visual results, including ATSS (Figure 7a). On the other hand, the lower AP of RetinaNet is generally due to false positives, as shown in Figure 8c.

It is important to highlight that the methods were trained with bounding boxes that were all the same size (Table 2). However, the predicted bounding boxes were not all the same size. For example, for Faster-RCNN, the predicted bounding boxes in the test set have an average width and height of 114.60 (±15.44) × 114.96 (±17.40) pixels and an average area of 13,233 (±4232.4) pixels². These standard deviations indicate that the size of the predicted bounding boxes varies.
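The width, height, and area statistics above can be computed from a list of predicted boxes as in the following sketch. It assumes (x1, y1, x2, y2) pixel boxes and a hypothetical function name, and it uses the population standard deviation, since whether the reported values use a population or a sample standard deviation is not stated.

```python
from statistics import mean, pstdev


def box_size_stats(boxes):
    """Return {"width"|"height"|"area": (mean, std)} for (x1, y1, x2, y2) boxes."""
    widths = [x2 - x1 for x1, _, x2, _ in boxes]
    heights = [y2 - y1 for _, y1, _, y2 in boxes]
    areas = [w * h for w, h in zip(widths, heights)]
    return {
        name: (mean(values), pstdev(values))
        for name, values in (("width", widths), ("height", heights), ("area", areas))
    }
```

Note that the mean area equals the product of the mean width and mean height plus their covariance, so when widths and heights grow together the mean area exceeds that product. This is consistent with the reported 13,233 pixels² versus 114.60 × 114.96 ≈ 13,174 pixels².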

3.2. Influence of the Bounding Box Size

The results of the previous section were obtained with the fixed bounding box size listed in Table 2. In this section, we evaluated the influence of the bounding box size on ATSS, as it obtained the best results among all of the object detection methods. Table 5 shows the results obtained when using sizes from 80 × 80 to 180 × 180 pixels.

Bounding boxes of small size did not achieve good results, as they did not cover most of the apple fruit edges. The contrast and the edges of the object are important for the adequate generalization of the methods. On the other hand, large sizes cause two or more apples to be covered by a single bounding box. Therefore, the methods cannot detect apple fruits individually, especially small or occluded ones. The experiments showed that the best result was obtained with a size of 160 × 160 pixels.
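One way to see why overly large boxes hurt is to count, for a given box size, how many other annotated apple centers fall inside the square box drawn around each apple. The sketch below is an illustrative diagnostic with hypothetical function names and coordinates, not part of the evaluation reported in Table 5.

```python
def centers_inside(centers, size):
    """For each center, count the other centers inside its `size` x `size` box."""
    half = size / 2.0
    counts = []
    for i, (cx, cy) in enumerate(centers):
        n = sum(
            1
            for j, (ox, oy) in enumerate(centers)
            if i != j and abs(ox - cx) <= half and abs(oy - cy) <= half
        )
        counts.append(n)
    return counts


def fraction_crowded(centers, size):
    """Fraction of apples whose box also contains at least one other apple center."""
    counts = centers_inside(centers, size)
    return sum(1 for c in counts if c > 0) / len(counts) if counts else 0.0
```

For two apples whose centers are 90 pixels apart, an 80 × 80 box around each contains only its own apple, whereas a 180 × 180 box already contains both centers; sweeping `size` over the tested range gives a rough picture of this trade-off for a given image.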

Despite the challenges imposed by the application (e.g., apple fruits with heavy occlusion, varying scales and lighting, and clustering), the results showed that the AP is high even without labeling a bounding box for each object. Since previous tree-fruit object detection studies [29,31] labeled boxes of different sizes, mainly because of perspective differences in fruit position, this finding should be taken into account, as manual labeling is an intensive and laborious operation.

Here, we demonstrated that, with the ATSS method, when objects have a regular shape, which is generally the case with apples, a fixed-size bounding box is sufficient to obtain an acceptable AP. This by itself may facilitate labeling these fruits, since the specialist can annotate points and a fixed box size can later be adopted by the bounding-box-based methods, which implies a significant reduction in labeling time.

3.3. Detection at Density Levels

In this section, we evaluated the results of ATSS with a fixed-size bounding box on patches with different numbers of apples (density). Table 6 presents the results for patches containing 0–9, 10–19, 20–29, and 30–42 apples. The greater the number of apples in a patch, the greater the detection challenge.
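Grouping test patches into the density levels of Table 6 is a simple binning of each patch's ground-truth apple count. A minimal sketch follows, assuming bins of width 10 with the last level extended to the maximum observed count of 42, as the ranges in the text suggest; the function names and patch identifiers are hypothetical.

```python
def density_level(count, width=10, last_start=30, max_count=42):
    """Map a patch's apple count to a density label such as "10-19"."""
    if count < 0 or count > max_count:
        raise ValueError(f"count {count} outside 0..{max_count}")
    if count >= last_start:
        return f"{last_start}-{max_count}"
    low = (count // width) * width
    return f"{low}-{low + width - 1}"


def group_by_density(patch_counts):
    """Group patch identifiers by density level; input maps patch id -> count."""
    groups = {}
    for patch_id, count in patch_counts.items():
        groups.setdefault(density_level(count), []).append(patch_id)
    return groups
```

Precision can then be computed separately for each group, which is how a per-density table can expose the drop at the highest level.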

The method maintains a precision of around 0.94–0.95 for densities between 0 and 29 apples. Figure 9 shows detection examples, including patches without apples. These results show that the method is consistent, from patches with few apples up to patches with 29 apples. As expected, the method’s precision decreases at the last density level (patches with 30–42 apples). However, the precision remains adequate given the large number of apples.

3.4. Robustness against Corruptions

In this section, we evaluated the robustness of the ATSS model with a fixed-size bounding box against different corruptions, applied only to the images in the test set. These corruptions simulate conditions that may occur during in situ image acquisition, such as adverse environmental factors, sensor attitude, and degradation during data recording.
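The corruption names listed earlier match those of the ImageNet-C robustness benchmark, in which each corruption is applied at graded severity levels. As an illustration of the idea only, the sketch below adds zero-mean Gaussian noise to a grayscale image stored as nested lists of intensities in [0, 1], with a standard deviation that grows with severity. The sigma values and the function name are hypothetical placeholders, not the settings used in the experiments.

```python
import random

# Hypothetical noise standard deviations for severities 1-3 (not the study's values).
SIGMA_BY_SEVERITY = {1: 0.08, 2: 0.12, 3: 0.18}


def gaussian_noise(image, severity, seed=None):
    """Return a noisy copy of `image` (rows of floats in [0, 1]).

    Zero-mean Gaussian noise with a severity-dependent standard deviation is
    added to every pixel, and the result is clipped back to [0, 1]. The input
    image is not modified; a fixed `seed` makes the corruption reproducible.
    """
    sigma = SIGMA_BY_SEVERITY[severity]
    rng = random.Random(seed)
    return [
        [min(1.0, max(0.0, px + rng.gauss(0.0, sigma))) for px in row]
        for row in image
    ]
```

Evaluating the same trained model on each corrupted copy of the test set, and comparing the result with the clean-image precision, gives the per-severity reductions reported in Table 7 and Table 8.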

Table 7 and Table 8 show the results for different corruptions and severity levels. Across the three severity levels, noise (Table 7) reduced the average precision by between 2.7% and 11.1% compared with the non-corrupted values. Gaussian and shot noise reduced precision in the same proportion, while impulse noise caused a larger reduction at the first and second levels. Regardless of the severity level of noise corruption, the precision remained above 84%.

The reduction in precision for all blur types (Table 7) was very similar, showing a slight decrease compared with the non-corrupted condition. The reductions were between 0% and 4.8%. At all three severity levels, motion blur had less impact than defocus and glass blur, and the lowest precision in this group was caused by defocus blur. All results showed precision values above 90%, even considering all severity levels.

According to the results shown in Table 8, fog resulted in the lowest precision among the simulated weather conditions, with precision reductions ranging from approximately 15.5% at the first severity level to 35.5% at the third. Figure 10 shows apple detection in two images corrupted by fog. As the fog severity level increases, some apples are no longer detected. This loss of precision is mainly expected for apples with large leaf occlusion.

Brightness caused a slight precision reduction, between 0.5% and 2.4%, compared with the non-corrupted condition. At the second severity level, snow yielded the second-lowest precision among all weather conditions. For digital processing, the precision obtained with elastic transform and pixelate was near 95% at all severity levels, and with JPEG compression it was near 92%. Pixelate produced the best precision among the digital processing corruptions at all severity levels, with the lowest precision reduction. JPEG compression reduced precision by between 1.4% and 3.2% from severity level 1 to 3, respectively, compared with the non-corrupted condition. In general, weather conditions affected precision more than digital processing did.

Previous works [37,39,40] investigated and adapted the Faster R-CNN and Yolo-v3 methods. Here, we investigated a novel method based on ATSS and demonstrated its potential for apple fruit detection. In general, the results indicated that the ATSS-based method slightly outperformed the other deep learning methods, with the best result obtained with a bounding box size of 160 × 160 pixels. The proposed method was robust to most corruptions, except for the snow, frost, and fog weather conditions. Even so, the results remain satisfactory, which implies good accuracy for apple fruit detection even when RGB pictures are acquired under unfavorable weather conditions such as frost, fog, and even snow, events that are frequent during winter in some areas of the southern Santa Catarina Plateau in southern Brazil.

3.5. Further Research Perspectives

This research made all the close-range and low-cost terrestrial RGB images available, including the annotations. These images can be acquired manually in the field. However, cameras can also be installed on agricultural implements such as sprayers, or even on tractors and autonomous vehicles [68]. It would be interesting to check the applicability of such images in earlier phases or even during the apple harvest. As the inspection of apple fruits is performed locally, it is also subjective. It would also be interesting to count fruits that fall to the ground and evaluate their impact on the estimate of final apple production. Another important task is to segment each fruit, which can be performed using a region growing strategy or DL-based instance segmentation methods.

Orchards with plums and grapes would present characteristics similar to those of the apples studied here, owing to the particular shape and color of these fruits. Both types of orchards also use anti-hail plastic net cover to prevent further damage from fruit set until harvest. However, particular challenges may occur with avocado, pear, and feijoa, since the typical green color of these fruits is very similar to the green color of their leaves. These fruits would surely require adjustments to the methodology proposed in this manuscript and are also encouraged for further studies. A comparison of the time demand and performance of point annotation at the center of each fruit versus full bounding box annotation is also recommended for completeness.

Besides fruit identification, other fruit attributes such as shape, weight, and color are also very relevant information targeted by the market and are recommended for further studies. Quantitative information such as shape could support average fruit weight estimation, which would provide better and more realistic fruit production estimates. However, the images must contain the entire apple tree, which was sometimes not the case due to tree height. Because of the limited space between planting lines (i.e., 3.5 m), it is sometimes impossible to cover the entire apple tree in the picture frame. Therefore, we suggest installing camera devices at different platform heights on agricultural implements or tractors to overcome this problem. Alternatively, further studies could explore acquiring oblique images or using fish-eye lenses.

The use of photogrammetric techniques with DL would make it possible to locate the geospatial position of the visible fruits [29], as well as to model their surface. This would enable the individual assessment of fruit size, allowing more realistic fruit counting. It would also eliminate the possibility of counting a single fruit twice in overlapping sequential images. Double counting may also occur for fruits imaged from the backside of the planting row, which are often counted twice [69]. We encourage analyzing the technique used in this study with other fruit varieties that are commercially important in the southern highlands of Brazil, such as pears, grapes, plums, and feijoa [70,71]. Finally, our dataset is available to the general community. The details for accessing the dataset are described in Table A1. It will allow further analysis of the robustness of the reported approaches for counting objects and comparison with forthcoming methods.
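The double-counting problem raised in this section can be illustrated with a simple greedy merge: if photogrammetry provides a 3D position for each detected fruit, detections from overlapping images (or from both sides of a row) that lie within a small distance of each other can be treated as the same fruit. This is a hypothetical sketch; the function name, the 5 cm merge radius, and the metric coordinate frame are assumptions, and the greedy result depends on input order.

```python
import math


def merge_fruit_positions(points, radius=0.05):
    """Greedily merge 3D fruit positions (x, y, z in metres) closer than `radius`.

    Each point joins the first existing cluster whose running centroid lies
    within `radius`; otherwise it starts a new cluster. Returns the cluster
    centroids, i.e. one position per (presumed) unique fruit.
    """
    sums, counts = [], []
    for p in points:
        for idx, (s, n) in enumerate(zip(sums, counts)):
            centroid = tuple(c / n for c in s)
            if math.dist(p, centroid) <= radius:
                sums[idx] = tuple(a + b for a, b in zip(s, p))
                counts[idx] += 1
                break
        else:
            sums.append(tuple(p))
            counts.append(1)
    return [tuple(c / n for c in s) for s, n in zip(sums, counts)]
```

The number of returned centroids is the de-duplicated fruit count; a radius comparable to fruit size is a natural starting point, but it would need to be tuned against the positional accuracy of the photogrammetric reconstruction.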

4. Conclusions

In this study, we proposed an approach based on the ATSS DL method to detect apple fruits using a center-point labeling strategy. The method was compared with other state-of-the-art deep learning-based networks, including RetinaNet, Libra R-CNN, Cascade R-CNN, Faster R-CNN, FSAF, and HRNet.

We also evaluated different occlusion conditions and image corruptions on different image sets. The ATSS-based approach outperformed all of the other methods, achieving a maximum average precision of 0.946 with a bounding box size of 160 × 160 pixels. When simulating adverse conditions at the time of data acquisition, the approach provided a robust response to most corrupted data, except for the snow, frost, and fog weather conditions.

Further studies are suggested with other fruit varieties in which color plays an important role in differentiating them from leaves. Additional fruit attributes such as shape, weight, and color are also important information for determining the market price and are also recommended for future investigations.

To conclude, a relevant contribution of our study is making our current dataset publicly accessible. This may help others to evaluate the robustness of their respective approaches for counting objects, specifically fruits under highly dense conditions.