Spatial Super Resolution of Real-World Aerial Images for Image-Based Plant Phenotyping

Abstract

1. Introduction

- A novel dataset of paired low-resolution (LR) and high-resolution (HR) aerial images of agricultural crops, captured with two different sensors co-mounted on an unmanned aerial vehicle (UAV). The dataset consists of paired images of canola, wheat and lentil crops from early to late in the growing season.

- A fully automated image processing pipeline for images captured by co-mounted sensors on a UAV. The pipeline matches images with maximum spatial overlap, radiometrically calibrates the sensor data and aligns the images pixel-wise to produce a paired real-world LR and HR dataset; these steps are essential for curating other UAV-based datasets.

- Extensive quantitative and qualitative evaluations across three experiments, which train and test super resolution models on synthetic and real-world images, to evaluate how much the real-world dataset improves the accuracy of image-based plant phenotyping analysis.
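
The three experiments named in the outline (Synthetic-Synthetic, Synthetic-Real, Real-Real) can be read as train/test pairings of LR data sources. The sketch below encodes that reading. It is an illustration under stated assumptions, not the authors' code: the `Experiment` class, `synthetic_lr` and `lr_input` are hypothetical names, the first word of each experiment name is assumed to be the training source and the second the testing source, and block averaging stands in for whatever downsampling kernel the authors used to create synthetic LR images.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Experiment:
    """One train/test pairing of LR data sources (hypothetical structure)."""
    name: str
    train_lr: str  # "synthetic" or "real"
    test_lr: str   # "synthetic" or "real"


# Assumed reading of the experiment names: <training source>-<testing source>.
EXPERIMENTS = (
    Experiment("Synthetic-Synthetic", train_lr="synthetic", test_lr="synthetic"),
    Experiment("Synthetic-Real", train_lr="synthetic", test_lr="real"),
    Experiment("Real-Real", train_lr="real", test_lr="real"),
)


def synthetic_lr(hr, scale):
    """Downsample a 2-D image (list of rows) by averaging scale x scale blocks.

    Block averaging is a simple stand-in; it is not bicubic downsampling.
    """
    height, width = len(hr), len(hr[0])
    if height % scale or width % scale:
        raise ValueError("image size must be a multiple of scale")
    lr = []
    for top in range(0, height, scale):
        row = []
        for left in range(0, width, scale):
            block = [hr[y][x]
                     for y in range(top, top + scale)
                     for x in range(left, left + scale)]
            row.append(sum(block) / len(block))
        lr.append(row)
    return lr


def lr_input(hr, sensor_lr, source, scale=8):
    """Return the LR input for one HR image, given the experiment's data source."""
    if source == "synthetic":
        return synthetic_lr(hr, scale)
    return sensor_lr  # real-world LR image captured by the second sensor
```

With this layout, a model in the Synthetic-Real setting would be trained on `lr_input(hr, sensor_lr, "synthetic")` pairs and evaluated on `lr_input(hr, sensor_lr, "real")` pairs.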

2. Background and Related Work

2.1. Traditional Super Resolution Methods

2.2. Example-Based Super Resolution

2.3. Deep Learning-Based Super Resolution

2.4. Image Pre-Processing for Raw Data

3. Materials and Methods

3.1. Dataset Collection

3.2. Image Pre-Processing

3.2.1. Image Matching

3.2.2. Radiometric Calibration

3.2.3. Multi-Sensor Image Registration

3.3. Experiments

3.3.1. Model Selection

3.3.2. Experimental Setup

Experiment 1: Synthetic-Synthetic

Experiment 2: Synthetic-Real

Experiment 3: Real-Real

3.3.3. Model Training

3.3.4. Evaluation Measures

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Fróna, D.; Szenderák, J.; Harangi-Rákos, M. The challenge of feeding the world. Sustainability 2019, 11, 5816.

- Giovannucci, D.; Scherr, S.J.; Nierenberg, D.; Hebebrand, C.; Shapiro, J.; Milder, J.; Wheeler, K. Food and Agriculture: The future of sustainability. In The Sustainable Development in the 21st Century (SD21) Report for Rio; United Nations: New York, NY, USA, 2012; Volume 20.

- Rising, J.; Devineni, N. Crop switching reduces agricultural losses from climate change in the United States by half under RCP 8.5. Nat. Commun. 2020, 11, 1–7.

- Snowdon, R.J.; Wittkop, B.; Chen, T.W.; Stahl, A. Crop adaptation to climate change as a consequence of long-term breeding. Theor. Appl. Genet. 2020, 1–11.

- Furbank, R.T.; Sirault, X.R.; Stone, E.; Zeigler, R. Plant phenome to genome: A big data challenge. In Sustaining Global Food Security: The Nexus of Science and Policy; CSIRO Publishing: Collingwood, VIC, Australia, 2019; p. 203.

- Furbank, R.T.; Tester, M. Phenomics–technologies to relieve the phenotyping bottleneck. Trends Plant Sci. 2011, 16, 635–644.

- Alvarez-Mendoza, C.I.; Teodoro, A.; Quintana, J.; Tituana, K. Estimation of Nitrogen in the Soil of Balsa Trees in Ecuador Using Unmanned Aerial Vehicles. In Proceedings of the IGARSS 2020 - 2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 4610–4613.

- Huang, J.; Tichit, M.; Poulot, M.; Darly, S.; Li, S.; Petit, C.; Aubry, C. Comparative review of multifunctionality and ecosystem services in sustainable agriculture. J. Environ. Manag. 2015, 149, 138–147.

- Sylvester, G.; Rambaldi, G.; Guerin, D.; Wisniewski, A.; Khan, N.; Veale, J.; Xiao, M. E-Agriculture in Action: Drones for Agriculture; Food and Agriculture Organization of the United Nations and International Telecommunication Union: Bangkok, Thailand, 2018; Volume 19, p. 2018.

- Caturegli, L.; Corniglia, M.; Gaetani, M.; Grossi, N.; Magni, S.; Migliazzi, M.; Angelini, L.; Mazzoncini, M.; Silvestri, N.; Fontanelli, M.; et al. Unmanned aerial vehicle to estimate nitrogen status of turfgrasses. PLoS ONE 2016, 11, e0158268.

- Wang, H.; Mortensen, A.K.; Mao, P.; Boelt, B.; Gislum, R. Estimating the nitrogen nutrition index in grass seed crops using a UAV-mounted multispectral camera. Int. J. Remote Sens. 2019, 40, 2467–2482.

- Corrigan, F. Multispectral Imaging Camera Drones in Farming Yield Big Benefits. Drone Zon-Drone Technology. 2019. Available online: https://www.dronezon.com/learn-about-drones-quadcopters/multispectral-sensor-drones-in-farming-yield-big-benefits/ (accessed on 9 June 2021).

- Adão, T.; Hruška, J.; Pádua, L.; Bessa, J.; Peres, E.; Morais, R.; Sousa, J.J. Hyperspectral imaging: A review on UAV-based sensors, data processing and applications for agriculture and forestry. Remote Sens. 2017, 9, 1110.

- Messina, G.; Modica, G. Applications of UAV Thermal Imagery in Precision Agriculture: State of the Art and Future Research Outlook. Remote Sens. 2020, 12, 1491.

- Camino, C.; González-Dugo, V.; Hernández, P.; Sillero, J.; Zarco-Tejada, P.J. Improved nitrogen retrievals with airborne-derived fluorescence and plant traits quantified from VNIR-SWIR hyperspectral imagery in the context of precision agriculture. Int. J. Appl. Earth Obs. Geoinf. 2018, 70, 105–117.

- Xue, J.; Su, B. Significant remote sensing vegetation indices: A review of developments and applications. J. Sens. 2017, 2017.

- Farjon, G.; Krikeb, O.; Hillel, A.B.; Alchanatis, V. Detection and counting of flowers on apple trees for better chemical thinning decisions. Precis. Agric. 2019, 21, 503–521.

- Deery, D.M.; Rebetzke, G.; Jimenez-Berni, J.A.; Bovill, B.; James, R.A.; Condon, A.G.; Furbank, R.; Chapman, S.; Fischer, R. Evaluation of the phenotypic repeatability of canopy temperature in wheat using continuous-terrestrial and airborne measurements. Front. Plant Sci. 2019, 10, 875.

- Madec, S.; Baret, F.; De Solan, B.; Thomas, S.; Dutartre, D.; Jezequel, S.; Hemmerlé, M.; Colombeau, G.; Comar, A. High-throughput phenotyping of plant height: Comparing unmanned aerial vehicles and ground LiDAR estimates. Front. Plant Sci. 2017, 8, 2002.

- Hu, P.; Guo, W.; Chapman, S.C.; Guo, Y.; Zheng, B. Pixel size of aerial imagery constrains the applications of unmanned aerial vehicle in crop breeding. ISPRS J. Photogramm. Remote Sens. 2019, 154, 1–9.

- Liu, M.; Yu, T.; Gu, X.; Sun, Z.; Yang, J.; Zhang, Z.; Mi, X.; Cao, W.; Li, J. The impact of spatial resolution on the classification of vegetation types in highly fragmented planting areas based on unmanned aerial vehicle hyperspectral images. Remote Sens. 2020, 12, 146.

- Dong, Z.; Wang, M.; Wang, Y.; Zhu, Y.; Zhang, Z. Object detection in high resolution remote sensing imagery based on convolutional neural networks with suitable object scale features. IEEE Trans. Geosci. Remote Sens. 2019, 58, 2104–2114.

- Tayara, H.; Chong, K.T. Object detection in very high-resolution aerial images using one-stage densely connected feature pyramid network. Sensors 2018, 18, 3341.

- Seifert, E.; Seifert, S.; Vogt, H.; Drew, D.; Van Aardt, J.; Kunneke, A.; Seifert, T. Influence of drone altitude, image overlap, and optical sensor resolution on multi-view reconstruction of forest images. Remote Sens. 2019, 11, 1252.

- Garrigues, S.; Allard, D.; Baret, F.; Weiss, M. Quantifying spatial heterogeneity at the landscape scale using variogram models. Remote Sens. Environ. 2006, 103, 81–96.

- Lai, W.S.; Huang, J.B.; Ahuja, N.; Yang, M.H. Deep laplacian pyramid networks for fast and accurate super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 624–632.

- Dai, T.; Cai, J.; Zhang, Y.; Xia, S.T.; Zhang, L. Second-order attention network for single image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 11065–11074.

- Haris, M.; Shakhnarovich, G.; Ukita, N. Deep back-projection networks for super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1664–1673.

- Huang, J.B.; Singh, A.; Ahuja, N. Single image super-resolution from transformed self-exemplars. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5197–5206.

- Martin, D.; Fowlkes, C.; Tal, D.; Malik, J. A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In Proceedings of the Eighth IEEE International Conference on Computer Vision, ICCV 2001, Vancouver, BC, Canada, 7–14 July 2001; Volume 2, pp. 416–423.

- Liu, G.; Wei, J.; Zhu, Y.; Wei, Y. Super-Resolution Based on Residual Dense Network for Agricultural Image. J. Phys. Conf. Ser. 2019, 1345, 022012.

- Cap, Q.H.; Tani, H.; Uga, H.; Kagiwada, S.; Iyatomi, H. LASSR: Effective Super-Resolution Method for Plant Disease Diagnosis. arXiv 2020, arXiv:2010.06499.

- Yamamoto, K.; Togami, T.; Yamaguchi, N. Super-resolution of plant disease images for the acceleration of image-based phenotyping and vigor diagnosis in agriculture. Sensors 2017, 17, 2557.

- Zhang, X.; Chen, Q.; Ng, R.; Koltun, V. Zoom to learn, learn to zoom. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3762–3770.

- Cai, J.; Zeng, H.; Yong, H.; Cao, Z.; Zhang, L. Toward real-world single image super-resolution: A new benchmark and a new model. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 3086–3095.

- NTIRE Contributors. NTIRE 2020 Real World Super-Resolution Challenge. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Seattle, WA, USA, 14–19 June 2020.

- Park, S.C.; Park, M.K.; Kang, M.G. Super-resolution image reconstruction: A technical overview. IEEE Signal Process. Mag. 2003, 20, 21–36.

- Zhang, B.; Sun, X.; Gao, L.; Yang, L. Endmember extraction of hyperspectral remote sensing images based on the ant colony optimization (ACO) algorithm. IEEE Trans. Geosci. Remote Sens. 2011, 49, 2635–2646.

- Liu, J.; Luo, B.; Douté, S.; Chanussot, J. Exploration of planetary hyperspectral images with unsupervised spectral unmixing: A case study of planet Mars. Remote Sens. 2018, 10, 737.

- Wang, P.; Zhang, G.; Hao, S.; Wang, L. Improving remote sensing image super-resolution mapping based on the spatial attraction model by utilizing the pansharpening technique. Remote Sens. 2019, 11, 247.

- Suresh, M.; Jain, K. Subpixel level mapping of remotely sensed image using colorimetry. Egypt. J. Remote Sens. Space Sci. 2018, 21, 65–72.

- Siu, W.C.; Hung, K.W. Review of image interpolation and super-resolution. In Proceedings of the 2012 Asia Pacific Signal and Information Processing Association Annual Summit and Conference, Hollywood, CA, USA, 3–6 December 2012; pp. 1–10.

- Hardeep, P.; Swadas, P.B.; Joshi, M. A survey on techniques and challenges in image super resolution reconstruction. Int. J. Comput. Sci. Mob. Comput. 2013, 2, 317–325.

- Freeman, W.T.; Jones, T.R.; Pasztor, E.C. Example-based super-resolution. IEEE Comput. Graph. Appl. 2002, 22, 56–65.

- Salvador, J. Example-Based Super Resolution; Academic Press: Cambridge, MA, USA, 2016.

- Guo, K.; Yang, X.; Lin, W.; Zhang, R.; Yu, S. Learning-based super-resolution method with a combining of both global and local constraints. IET Image Process. 2012, 6, 337–344.

- Shao, W.Z.; Elad, M. Simple, accurate, and robust nonparametric blind super-resolution. In International Conference on Image and Graphics; Springer: Berlin/Heidelberg, Germany, 2015; pp. 333–348.

- Wang, Z.; Yang, Y.; Wang, Z.; Chang, S.; Yang, J.; Huang, T.S. Learning super-resolution jointly from external and internal examples. IEEE Trans. Image Process. 2015, 24, 4359–4371.

- Bevilacqua, M.; Roumy, A.; Guillemot, C.; Alberi-Morel, M.L. Low-complexity single-image super-resolution based on nonnegative neighbor embedding. In Proceedings of the 23rd British Machine Vision Conference (BMVC), Surrey, UK, 3–7 September 2012.

- Freedman, G.; Fattal, R. Image and Video Upscaling from Local Self-Examples. ACM Trans. Graph. 2010, 28, 1–10.

- Glasner, D.; Bagon, S.; Irani, M. Super-resolution from a single image. In Proceedings of the IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 27 September–4 October 2009; pp. 349–356.

- Lee, H.; Battle, A.; Raina, R.; Ng, A.Y. Efficient sparse coding algorithms. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 3–6 December 2007; pp. 801–808.

- Yang, J.; Wright, J.; Huang, T.S.; Ma, Y. Image super-resolution via sparse representation. IEEE Trans. Image Process. 2010, 19, 2861–2873.

- Yang, J.; Wright, J.; Huang, T.; Ma, Y. Image super-resolution as sparse representation of raw image patches. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008.

- Zeyde, R.; Elad, M.; Protter, M. On single image scale-up using sparse-representations. In International Conference on Curves and Surfaces; Springer: Berlin/Heidelberg, Germany, 2010; pp. 711–730.

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 295–307.

- Kim, J.; Kwon Lee, J.; Mu Lee, K. Accurate image super-resolution using very deep convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1646–1654.

- Tai, Y.; Yang, J.; Liu, X. Image super-resolution via deep recursive residual network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3147–3155.

- Dong, C.; Loy, C.C.; Tang, X. Accelerating the super-resolution convolutional neural network. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2016; pp. 391–407.

- Shi, W.; Caballero, J.; Huszár, F.; Totz, J.; Aitken, A.P.; Bishop, R.; Rueckert, D.; Wang, Z. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1874–1883.

- Lim, B.; Son, S.; Kim, H.; Nah, S.; Mu Lee, K. Enhanced deep residual networks for single image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 136–144.

- Zhang, Y.; Li, K.; Li, K.; Wang, L.; Zhong, B.; Fu, Y. Image super-resolution using very deep residual channel attention networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 286–301.

- Gu, J.; Lu, H.; Zuo, W.; Dong, C. Blind super-resolution with iterative kernel correction. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1604–1613.

- Michaeli, T.; Irani, M. Nonparametric blind super-resolution. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 1–8 December 2013; pp. 945–952.

- He, H.; Siu, W.C. Single Image super-resolution using Gaussian process regression. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 449–456.

- He, Y.; Yap, K.H.; Chen, L.; Chau, L.P. A soft MAP framework for blind super-resolution image reconstruction. Image Vis. Comput. 2009, 27, 364–373.

- Wang, Q.; Tang, X.; Shum, H. Patch based blind image super resolution. In Proceedings of the Tenth IEEE International Conference on Computer Vision (ICCV’05), Beijing, China, 17–21 October 2005; Volume 1, pp. 709–716.

- Begin, I.; Ferrie, F. Blind super-resolution using a learning-based approach. In Proceedings of the 17th International Conference on Pattern Recognition, ICPR 2004, Cambridge, UK, 26 August 2004; Volume 2, pp. 85–89.

- Joshi, N.; Szeliski, R.; Kriegman, D.J. PSF estimation using sharp edge prediction. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Hussein, S.A.; Tirer, T.; Giryes, R. Correction Filter for Single Image Super-Resolution: Robustifying Off-the-Shelf Deep Super-Resolvers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1428–1437.

- Bell-Kligler, S.; Shocher, A.; Irani, M. Blind super-resolution kernel estimation using an internal-gan. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; pp. 284–293.

- Zhou, R.; Susstrunk, S. Kernel modeling super-resolution on real low-resolution images. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 2433–2443.

- Luo, Z.; Huang, Y.; Li, S.; Wang, L.; Tan, T. Unfolding the Alternating Optimization for Blind Super Resolution. arXiv 2020, arXiv:2010.02631.

- Farsiu, S.; Elad, M.; Milanfar, P. Multiframe demosaicing and super-resolution of color images. IEEE Trans. Image Process. 2005, 15, 141–159.

- Gharbi, M.; Chaurasia, G.; Paris, S.; Durand, F. Deep joint demosaicking and denoising. ACM Trans. Graph. 2016, 35, 1–12.

- Mildenhall, B.; Barron, J.T.; Chen, J.; Sharlet, D.; Ng, R.; Carroll, R. Burst denoising with kernel prediction networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2502–2510.

- Zhou, R.; Achanta, R.; Süsstrunk, S. Deep residual network for joint demosaicing and super-resolution. Color and Imaging Conference. Soc. Imaging Sci. Technol. 2018, 2018, 75–80.

- Chen, C.; Chen, Q.; Xu, J.; Koltun, V. Learning to see in the dark. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3291–3300.

- Aslahishahri, M.; Paul, T.; Stanley, K.G.; Shirtliffe, S.; Vail, S.; Stavness, I. KL-Divergence as a Proxy for Plant Growth. In Proceedings of the 2019 IEEE 10th Annual Information Technology, Electronics and Mobile Communication Conference (IEMCON), Vancouver, BC, Canada, 17–19 October 2019; pp. 0120–0126.

- Rajapaksa, S.; Eramian, M.; Duddu, H.; Wang, M.; Shirtliffe, S.; Ryu, S.; Josuttes, A.; Zhang, T.; Vail, S.; Pozniak, C.; et al. Classification of crop lodging with gray level co-occurrence matrix. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 251–258.

- Kwan, C. Methods and Challenges Using Multispectral and Hyperspectral Images for Practical Change Detection Applications. Information 2019, 10, 353.

- Honkavaara, E.; Khoramshahi, E. Radiometric correction of close-range spectral image blocks captured using an unmanned aerial vehicle with a radiometric block adjustment. Remote Sens. 2018, 10, 256.

- MicaSense Contributors. MicaSense RedEdge and Altum Image Processing Tutorials; MicaSense Inc.: Seattle, WA, USA, 2018.

- Lowe, G. SIFT-the scale invariant feature transform. Int. J. 2004, 2, 91–110.

- Rohde, G.K.; Pajevic, S.; Pierpaoli, C.; Basser, P.J. A comprehensive approach for multi-channel image registration. In International Workshop on Biomedical Image Registration; Springer: Berlin/Heidelberg, Germany, 2003; pp. 214–223.

- Arbelaez, P.; Maire, M.; Fowlkes, C.; Malik, J. Contour detection and hierarchical image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 898–916.

- Fujimoto, A.; Ogawa, T.; Yamamoto, K.; Matsui, Y.; Yamasaki, T.; Aizawa, K. Manga109 dataset and creation of metadata. In Proceedings of the 1st International Workshop on coMics ANalysis, Processing and Understanding; ACM: New York, NY, USA, 2016; p. 2.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Irani, M.; Peleg, S. Motion analysis for image enhancement: Resolution, occlusion, and transparency. J. Vis. Commun. Image Represent. 1993, 4, 324–335.

- Dice, L.R. Measures of the amount of ecologic association between species. Ecology 1945, 26, 297–302.

- Wang, Z.; Bovik, A.C.; Lu, L. Why is image quality assessment so difficult? In Proceedings of the 2002 IEEE International Conference on Acoustics, Speech, and Signal Processing, Orlando, FL, USA, 13–17 May 2002; Volume 4, p. IV-3313.

- Jack, K. Video Demystified: A Handbook for the Digital Engineer; Elsevier: Amsterdam, The Netherlands, 2011.

- Woebbecke, D.M.; Meyer, G.E.; Von Bargen, K.; Mortensen, D.A. Color indices for weed identification under various soil, residue, and lighting conditions. Trans. ASAE 1995, 38, 259–269.

- Zhang, C.; Craine, W.; Davis, J.B.; Khot, L.R.; Marzougui, A.; Brown, J.; Hulbert, S.H.; Sankaran, S. Detection of canola flowering using proximal and aerial remote sensing techniques. In Autonomous Air and Ground Sensing Systems for Agricultural Optimization and Phenotyping III; International Society for Optics and Photonics: Bellingham, WA, USA, 2018; Volume 10664, p. 1066409.

- Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Najjar, A.; Zagrouba, E. Flower image segmentation based on color analysis and a supervised evaluation. In Proceedings of the 2012 International Conference on Communications and Information Technology (ICCIT), Hammamet, Tunisia, 26–28 June 2012; pp. 397–401.

- Engel, J.; Hoffman, M.; Roberts, A. Latent constraints: Learning to generate conditionally from unconditional generative models. arXiv 2017, arXiv:1711.05772.

- Dai, D.; Wang, Y.; Chen, Y.; Van Gool, L. Is image super-resolution helpful for other vision tasks? In Proceedings of the 2016 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Placid, NY, USA, 7–10 March 2016; pp. 1–9.

- Ghaffar, M.; McKinstry, A.; Maul, T.; Vu, T. Data augmentation approaches for satellite image super-resolution. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 4, 47–54.

- Sundareshan, M.K.; Zegers, P. Role of over-sampled data in superresolution processing and a progressive up-sampling scheme for optimized implementations of iterative restoration algorithms. In Passive Millimeter-Wave Imaging Technology III; International Society for Optics and Photonics: Bellingham, WA, USA, 1999; Volume 3703, pp. 155–166.

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic minority over-sampling technique. J. Artif. Intell. Res. 2002, 16, 321–357.

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. arXiv 2014, arXiv:1406.2661.

- Feng, W.; Huang, W.; Bao, W. Imbalanced hyperspectral image classification with an adaptive ensemble method based on SMOTE and rotation forest with differentiated sampling rates. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1879–1883.

| Date | Canola: Rededge (LR) | Canola: IXU-1000 (HR) | Lentil and Wheat: Rededge (LR) | Lentil and Wheat: IXU-1000 (HR) | Matched Images (Canola/Lentil-Wheat) |
|---|---|---|---|---|---|
| 28 June 2018 | 293 | 287 | 306 | 303 | 239/159 |
| 27 July 2018 | - | - | 228 | 229 | -/127 |
| 30 July 2018 | 335 | 337 | - | - | 262/- |
| 29 August 2018 | - | - | 228 | 228 | -/130 |
| 31 August 2018 | 370 | 364 | - | - | 283/- |
| 10 July 2019 | 474 | 457 | - | - | 417/- |
| Total | 1472 | 1445 | 762 | 760 | 1201/416 |

| Specification | Phase One IXU-1000 | Micasense Rededge |
|---|---|---|
| Capture rate | ∼1 fps | ∼1 fps |
| Field of view | 64° | 47.2° |
| Sensor resolution | 100 MP | MP |
| Spectral bands | RGB | B, G, R, rededge, NIR |
| Canola flight altitude | 20 m | 20 m |
| Canola ground sample distance (GSD) | 1.7 mm/pixel | 12.21 mm/pixel |
| Wheat/lentil flight altitude | 30 m | 30 m |
| Wheat/lentil ground sample distance (GSD) | 2.6 mm/pixel | 18.31 mm/pixel |
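
The ground sample distances in the sensor table determine how many HR pixels cover one LR pixel along each axis, which is the native resolution gap between the two sensors. The short sketch below computes that ratio from the tabulated values. The dictionary and function names are hypothetical, introduced only for illustration.

```python
# Ground sample distances (mm/pixel) copied from the sensor table.
GSD_MM_PER_PIXEL = {
    "canola": {"hr": 1.7, "lr": 12.21},
    "wheat_lentil": {"hr": 2.6, "lr": 18.31},
}


def native_scale_factor(hr_gsd, lr_gsd):
    """Return how many HR pixels span one LR pixel along one axis."""
    return lr_gsd / hr_gsd


for crop, gsd in GSD_MM_PER_PIXEL.items():
    ratio = native_scale_factor(gsd["hr"], gsd["lr"])
    print(f"{crop}: native LR-to-HR ratio is about {ratio:.2f}")
```

Both ratios come out slightly above 7, while the results tables report a scale factor of 8. How the native ratio is reconciled with that factor is not stated in this section.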

PSNR (dB)

| Algorithm | Scale | Canola Syn-Syn | Canola Syn-Real | Canola Real-Real | Lentil Syn-Syn | Lentil Syn-Real | Lentil Real-Real | Wheat Syn-Syn | Wheat Syn-Real | Wheat Real-Real | Three-Crop Syn-Syn | Three-Crop Syn-Real | Three-Crop Real-Real |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Bicubic | 8 | 34.68 | 29.10 | 29.10 | 35.14 | 28.83 | 28.83 | 34.21 | 29.63 | 29.63 | 34.64 | 29.05 | 29.05 |
| LapSRN [26] | 8 | 35.32 | 28.61 | 32.50 | 34.75 | 28.67 | 32.69 | 33.73 | 29.90 | 32.26 | 35.24 | 29.06 | 32.59 |
| SAN [27] | 8 | 35.57 | 29.16 | 32.88 | - | - | - | - | - | - | 35.41 | 29.07 | 32.84 |
| DBPN [28] | 8 | 35.63 | 29.15 | 32.88 | 34.66 | 28.75 | 32.90 | 33.89 | 29.55 | 32.37 | 35.46 | 29.08 | 32.97 |
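
For readers unfamiliar with the metric, PSNR is defined as 10·log10(MAX² / MSE), where MSE is the mean squared error between the reference and the reconstructed image and MAX is the peak pixel value. The sketch below is a minimal pure-Python version of that standard definition. It assumes single-channel images stored as lists of rows and an 8-bit peak of 255; the settings actually used for the table (color channel, peak value, border cropping) are not given in this section.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """PSNR in dB between two equally sized 2-D images (lists of rows)."""
    if len(reference) != len(estimate) or any(
        len(ref_row) != len(est_row)
        for ref_row, est_row in zip(reference, estimate)
    ):
        raise ValueError("images must have the same shape")
    squared_error = 0.0
    count = 0
    for ref_row, est_row in zip(reference, estimate):
        for ref_px, est_px in zip(ref_row, est_row):
            squared_error += (ref_px - est_px) ** 2
            count += 1
    mse = squared_error / count
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy 2x2 example: an HR reference patch and a slightly different estimate.
hr_patch = [[100, 110], [120, 130]]
sr_patch = [[101, 109], [122, 128]]
print(f"PSNR: {psnr(hr_patch, sr_patch):.2f} dB")
```

Because the scale is logarithmic, a gain of about 3 dB corresponds to roughly halving the mean squared error.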

SSIM

| Algorithm | Scale | Canola Syn-Syn | Canola Syn-Real | Canola Real-Real | Lentil Syn-Syn | Lentil Syn-Real | Lentil Real-Real | Wheat Syn-Syn | Wheat Syn-Real | Wheat Real-Real | Three-Crop Syn-Syn | Three-Crop Syn-Real | Three-Crop Real-Real |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Bicubic | 8 | 0.822 | 0.743 | 0.743 | 0.821 | 0.763 | 0.763 | 0.804 | 0.746 | 0.746 | 0.816 | 0.747 | 0.747 |
| LapSRN [26] | 8 | 0.85 | 0.688 | 0.772 | 0.816 | 0.728 | 0.773 | 0.794 | 0.725 | 0.754 | 0.845 | 0.742 | 0.773 |
| SAN [27] | 8 | 0.858 | 0.74 | 0.78 | - | - | - | - | - | - | 0.851 | 0.745 | 0.777 |
| DBPN [28] | 8 | 0.86 | 0.731 | 0.776 | 0.806 | 0.723 | 0.758 | 0.789 | 0.708 | 0.731 | 0.853 | 0.731 | 0.777 |

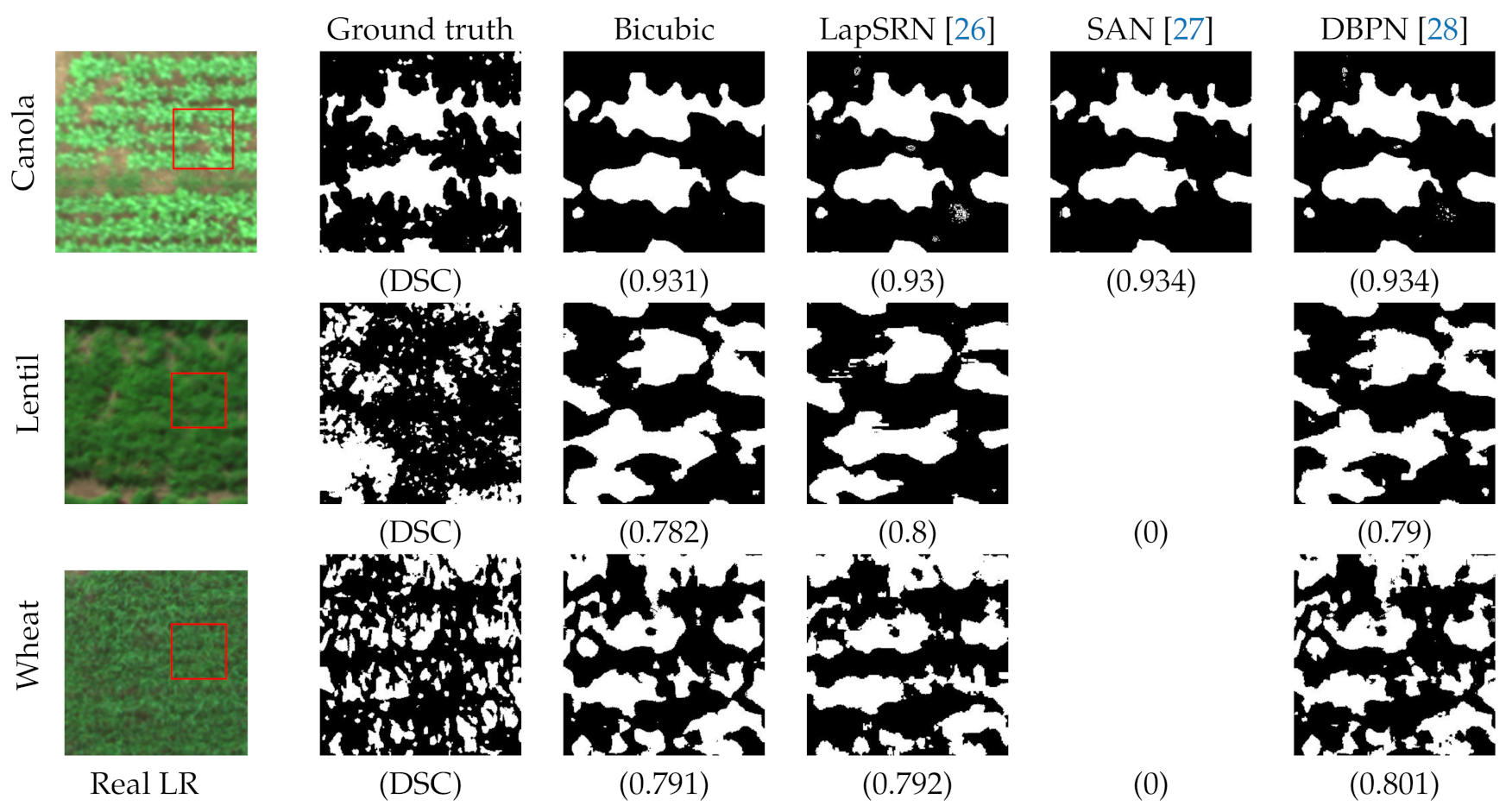

Flower Segmentation

| Algorithm | Scale | Canola Syn-Syn | Canola Syn-Real | Canola Real-Real | Lentil Syn-Syn | Lentil Syn-Real | Lentil Real-Real | Wheat Syn-Syn | Wheat Syn-Real | Wheat Real-Real | Three-Crop Syn-Syn | Three-Crop Syn-Real | Three-Crop Real-Real |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Bicubic | 8 | 0.854 | 0.7 | 0.7 | - | - | - | - | - | - | 0.863 | 0.698 | 0.698 |
| LapSRN [26] | 8 | 0.887 | 0.694 | 0.763 | - | - | - | - | - | - | 0.886 | 0.692 | 0.74 |
| SAN [27] | 8 | 0.897 | 0.696 | 0.793 | - | - | - | - | - | - | 0.892 | 0.695 | 0.752 |
| DBPN [28] | 8 | 0.894 | 0.699 | 0.79 | - | - | - | - | - | - | 0.89 | 0.68 | 0.754 |
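
The segmentation tables do not name their score, but the reference list cites Dice's overlap measure. The sketch below therefore assumes the values are Dice coefficients between a mask computed from the HR image and a mask computed from the super-resolved image. That reading is an assumption, and `dice_coefficient` is a hypothetical helper, not the authors' evaluation code.

```python
def dice_coefficient(mask_a, mask_b):
    """Dice overlap between two equally sized binary masks (lists of rows of 0/1)."""
    intersection = 0
    total = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            if a and b:
                intersection += 1
            total += (1 if a else 0) + (1 if b else 0)
    if total == 0:
        # Both masks empty: treated as perfect agreement (a convention assumed here).
        return 1.0
    return 2.0 * intersection / total


# Toy example: 1 marks a pixel classified as flower (or vegetation).
hr_mask = [[1, 1, 0], [0, 1, 0]]
sr_mask = [[1, 0, 0], [0, 1, 1]]
print(f"Dice: {dice_coefficient(hr_mask, sr_mask):.3f}")
```

A score of 1 means the two masks agree on every foreground pixel, and a score of 0 means they share none.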

Vegetation Segmentation

| Algorithm | Scale | Canola Syn-Syn | Canola Syn-Real | Canola Real-Real | Lentil Syn-Syn | Lentil Syn-Real | Lentil Real-Real | Wheat Syn-Syn | Wheat Syn-Real | Wheat Real-Real | Three-Crop Syn-Syn | Three-Crop Syn-Real | Three-Crop Real-Real |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Bicubic | 8 | 0.849 | 0.687 | 0.687 | 0.478 | 0.319 | 0.319 | 0.622 | 0.288 | 0.288 | 0.779 | 0.537 | 0.537 |
| LapSRN [26] | 8 | 0.86 | 0.663 | 0.833 | 0.469 | 0.437 | 0.433 | 0.588 | 0.481 | 0.552 | 0.801 | 0.54 | 0.74 |
| SAN [27] | 8 | 0.866 | 0.685 | 0.846 | - | - | - | - | - | - | 0.809 | 0.537 | 0.752 |
| DBPN [28] | 8 | 0.868 | 0.688 | 0.846 | 0.561 | 0.354 | 0.456 | 0.645 | 0.371 | 0.577 | 0.812 | 0.546 | 0.754 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Aslahishahri, M.; Stanley, K.G.; Duddu, H.; Shirtliffe, S.; Vail, S.; Stavness, I. Spatial Super Resolution of Real-World Aerial Images for Image-Based Plant Phenotyping. Remote Sens. 2021, 13, 2308. https://doi.org/10.3390/rs13122308