Studying the Feasibility of Assimilating Sentinel-2 and PlanetScope Imagery into the SAFY Crop Model to Predict Within-Field Wheat Yield

Abstract

1. Introduction

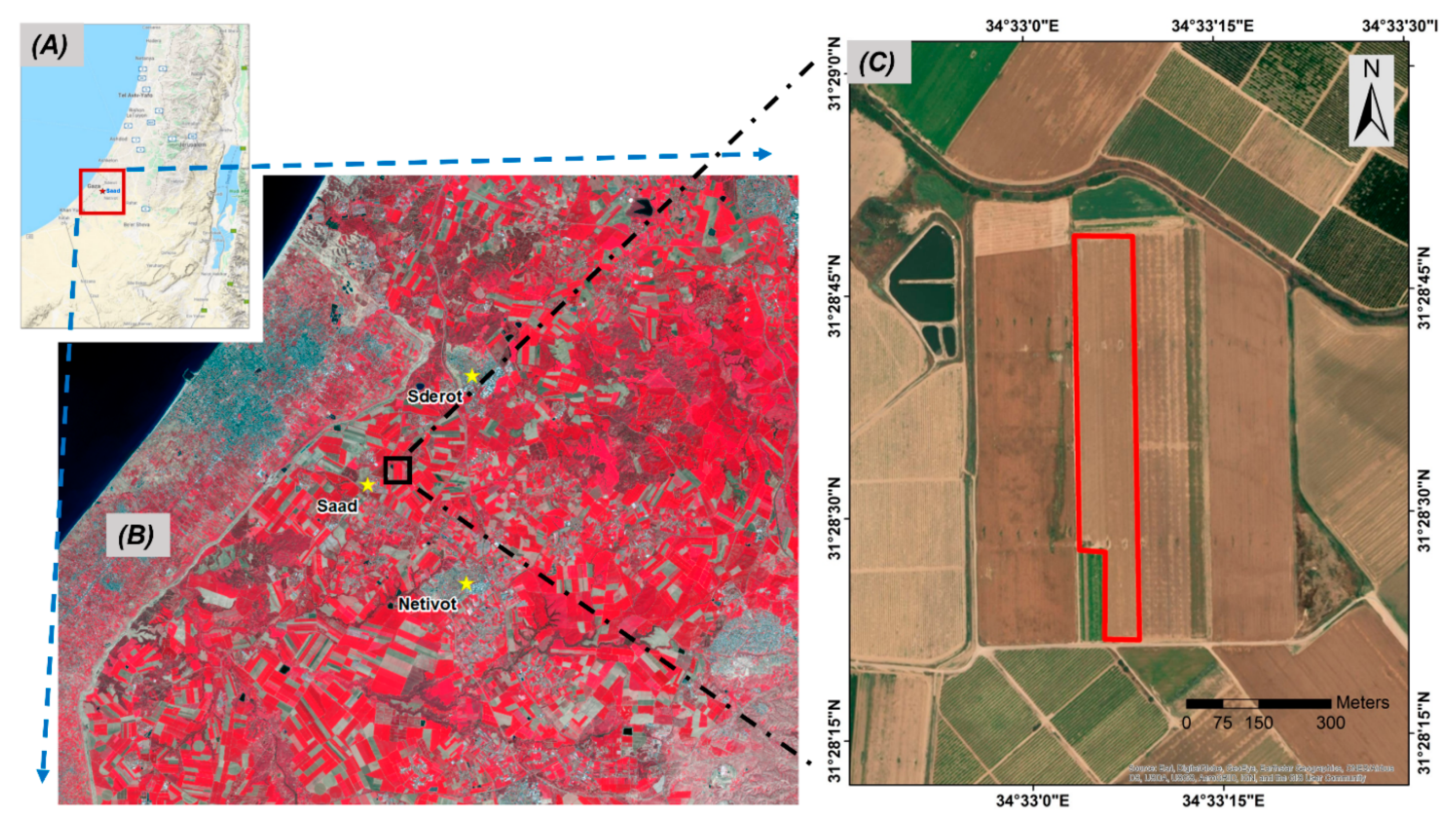

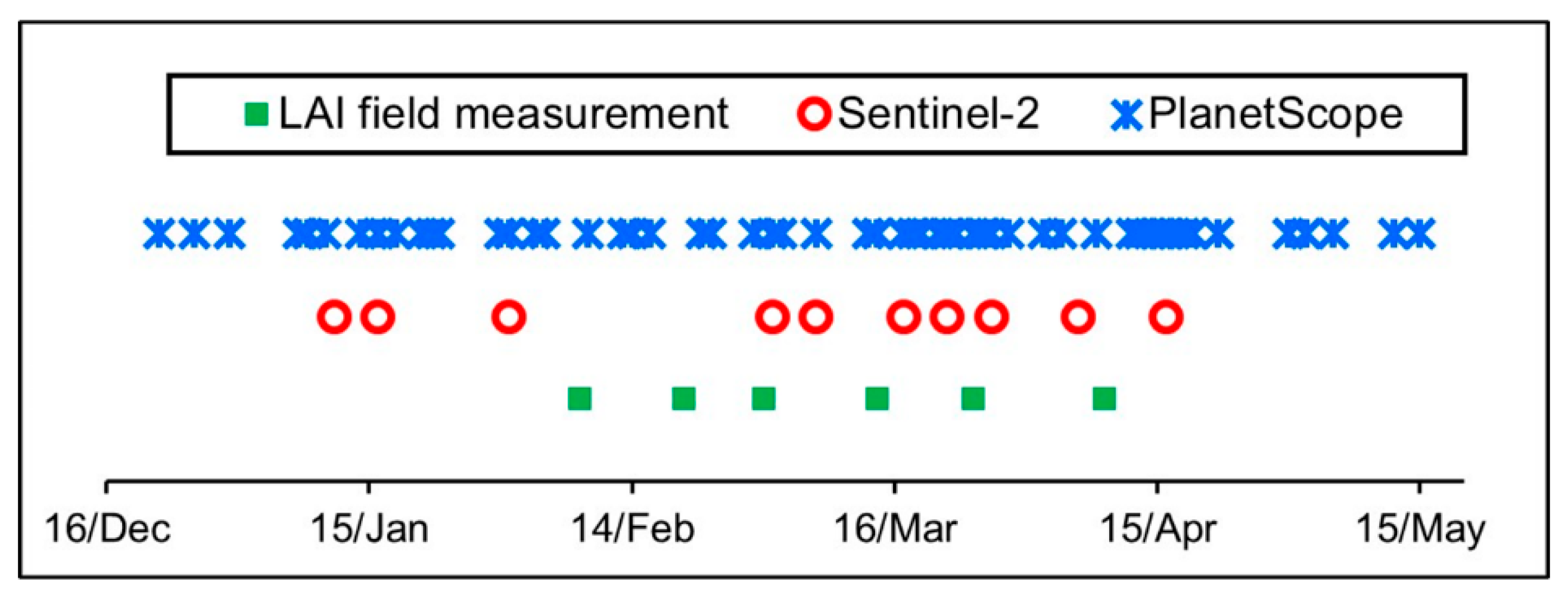

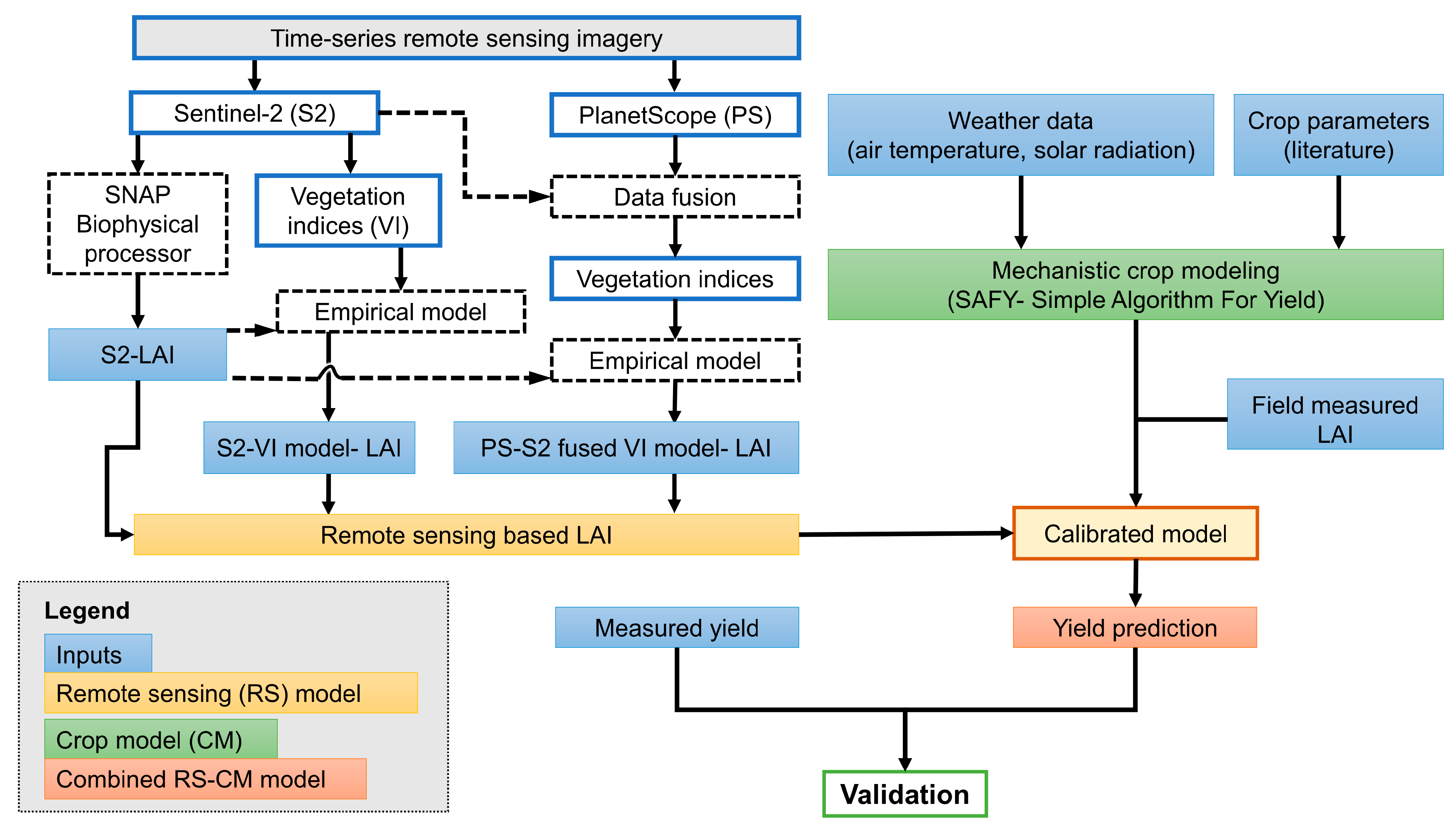

2. Materials and Methods

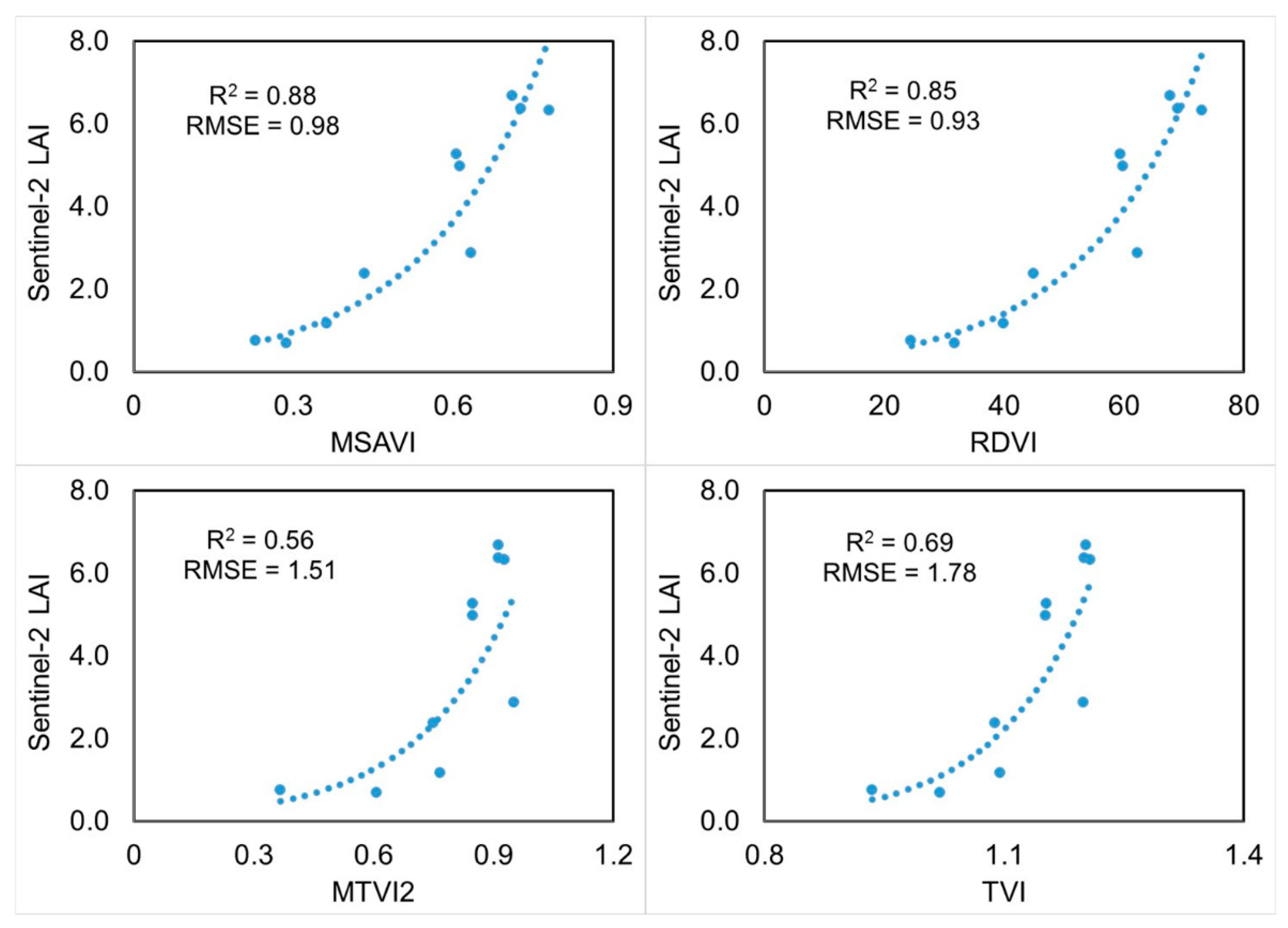

2.1. LAI Maps Creation

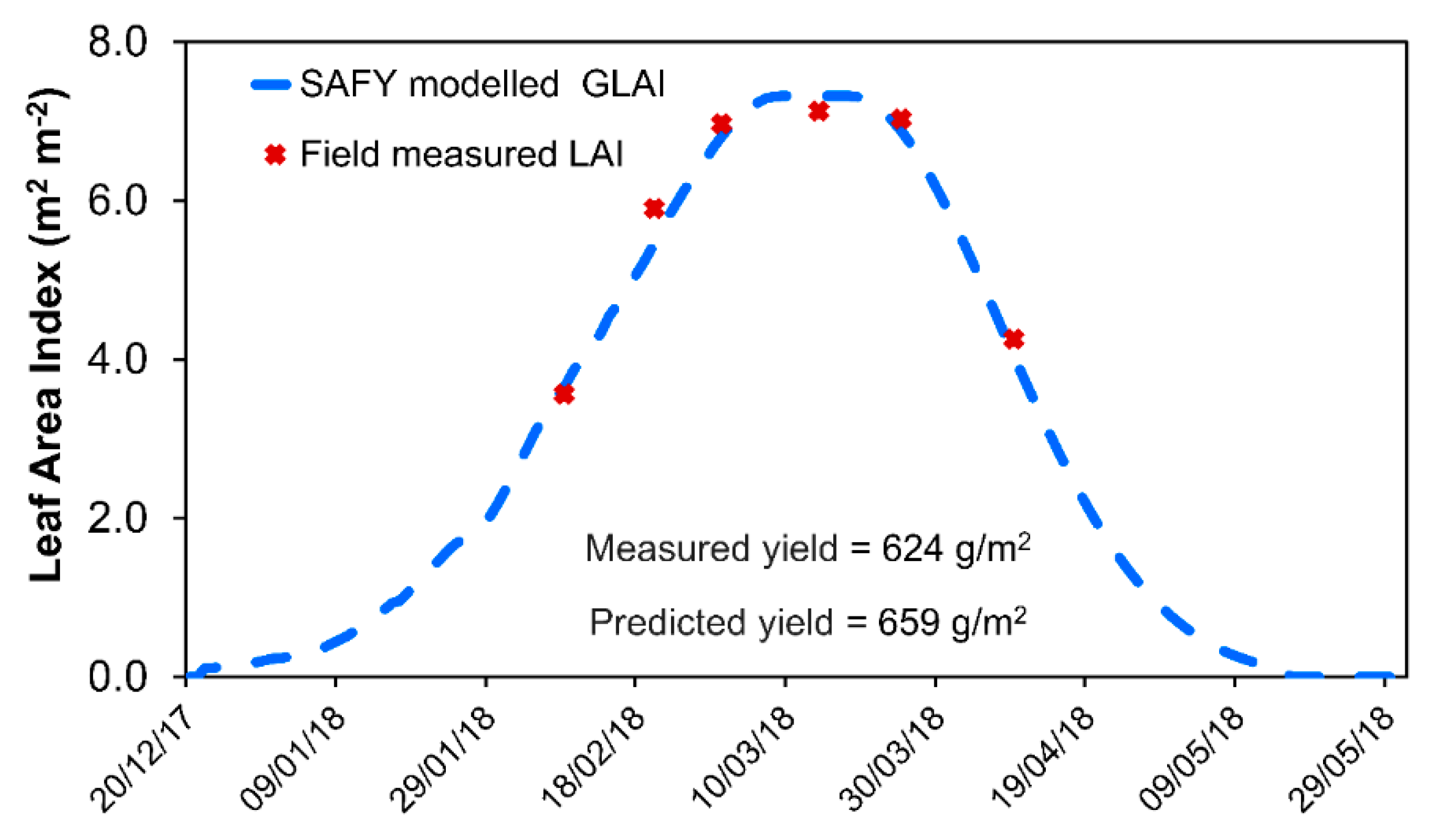

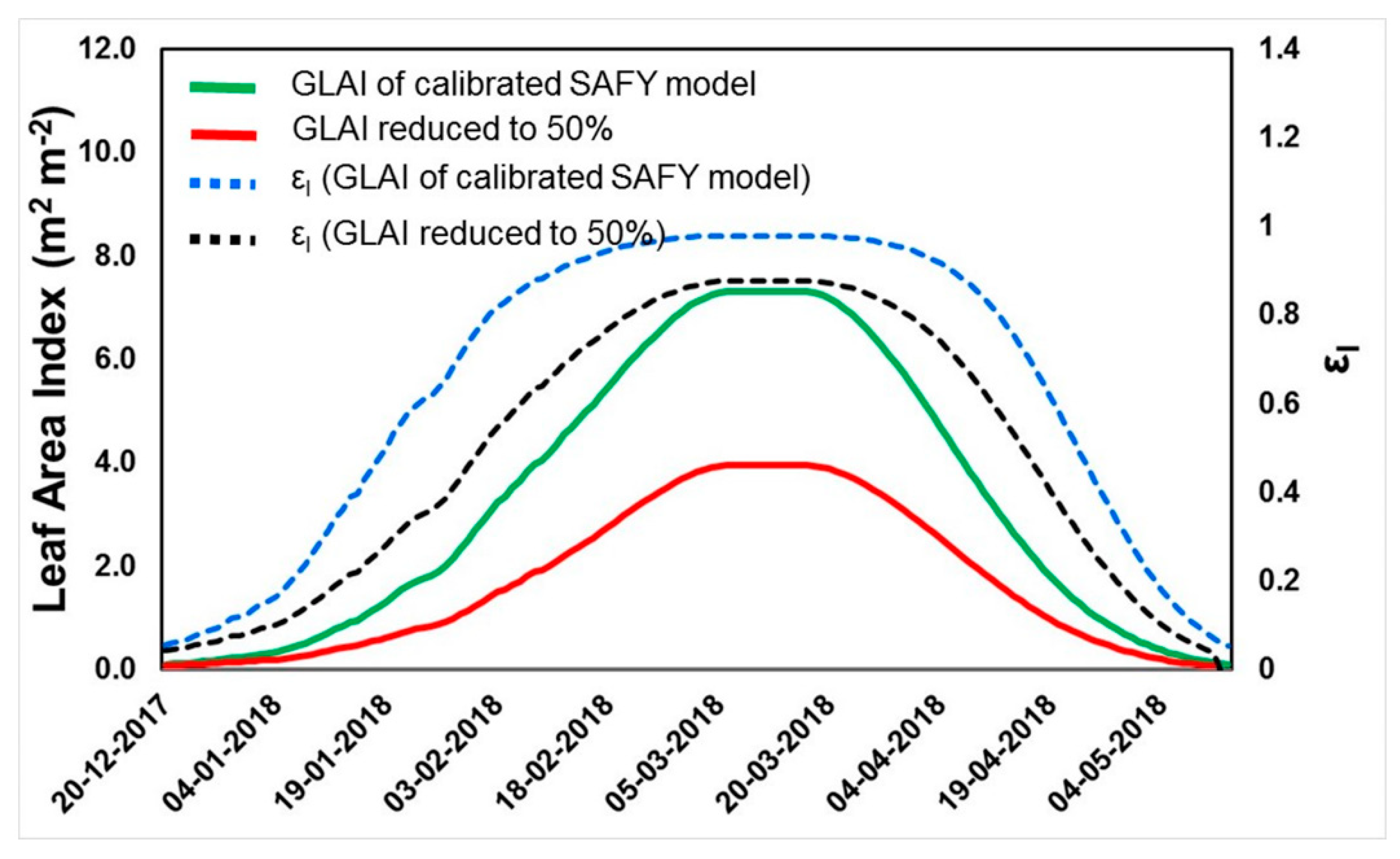

2.2. Simple Algorithm for Yield Estimate (SAFY) Model

2.3. Pre-Processing of Yield Monitor Data

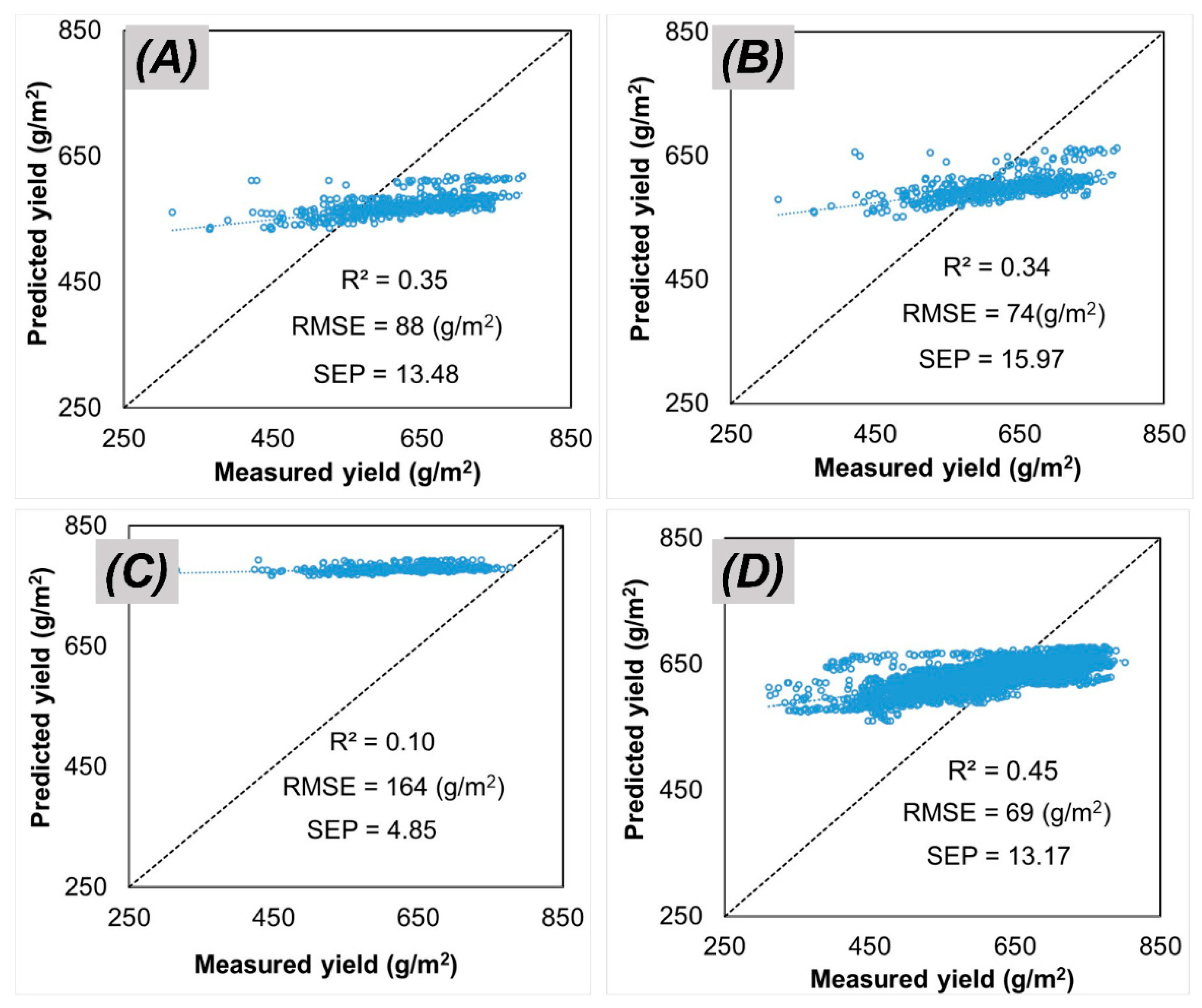

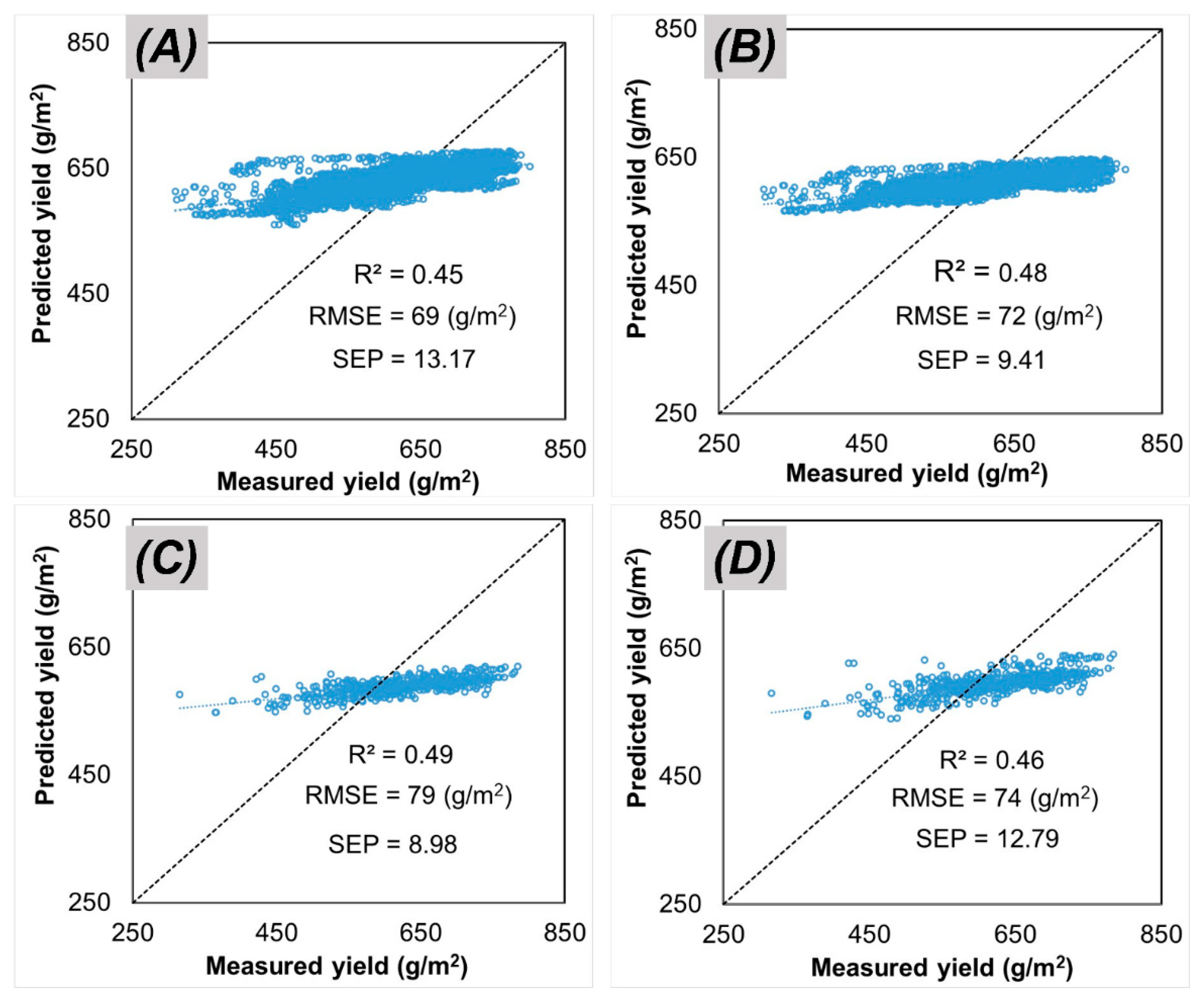

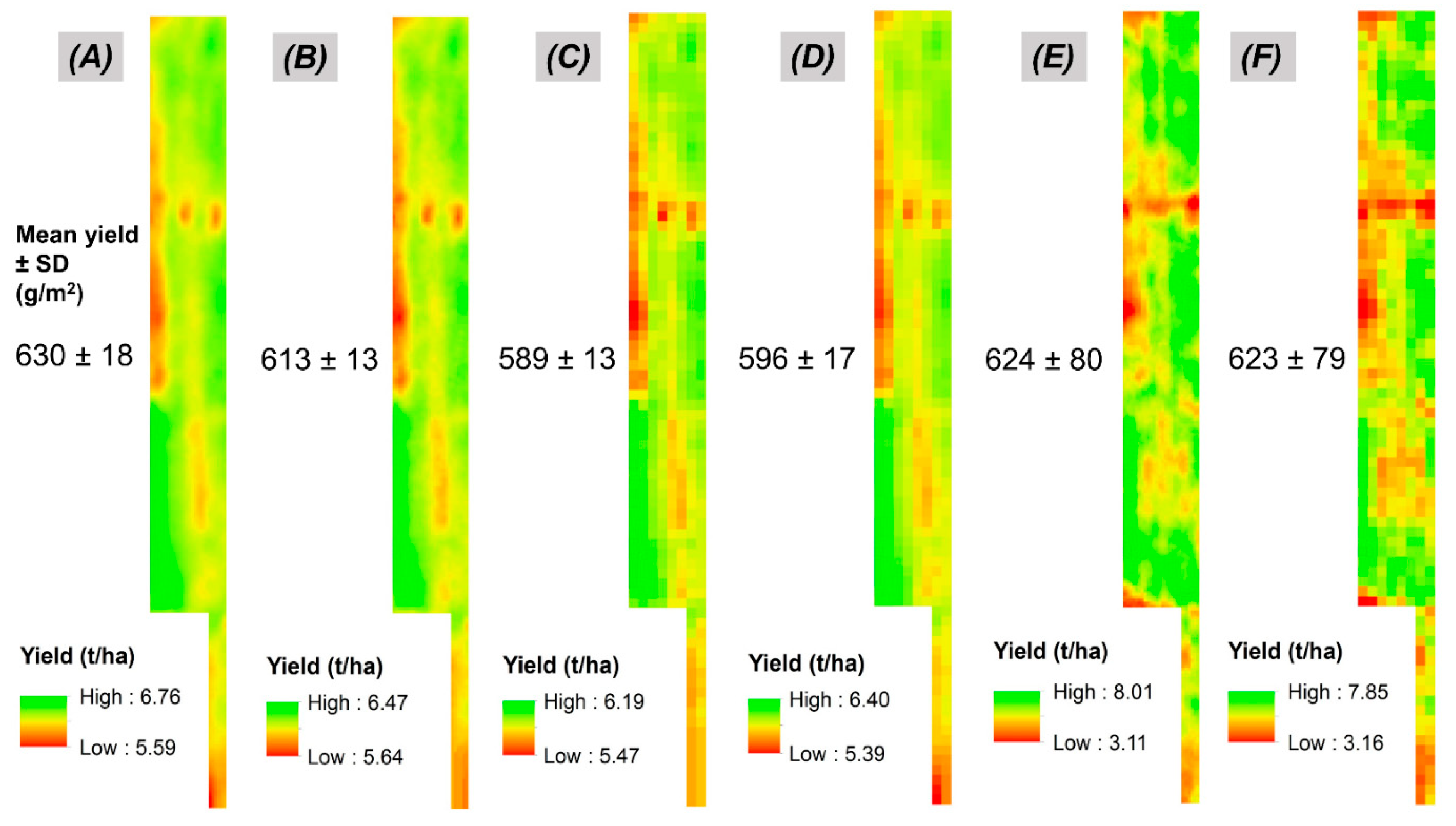

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Vegetation Index | Equation | Reference |
|---|---|---|
| Simple ratio (SR) | | Jordan [61] |
| Enhanced vegetation index 2 (EVI2) | | Jiang et al. [62]; Nguy-Robertson et al. [63] |
| Green chlorophyll vegetation index (GCVI) | | Gitelson et al. [64]; Gitelson et al. [65] |
| Normalized difference vegetation index (NDVI) | | Rouse et al. [66] |
| Modified triangular vegetation index 2 (MTVI2) | | Haboudane et al. [67] |
| Modified soil-adjusted vegetation index (MSAVI) | | Haboudane et al. [67]; Qi et al. [68] |
| Wide dynamic range vegetation index (WDRVI) | | Gitelson [69]; Nguy-Robertson et al. [14] |
| Green wide dynamic range vegetation index (Green-WDRVI) | | Nguy-Robertson et al. [14]; Peng and Gitelson [70] |
| Optimized soil-adjusted vegetation index (OSAVI) | | Rondeaux et al. [71] |
| Green simple ratio (GSR) | | Sripada et al. [72] |
| Green NDVI (GNDVI) | | Gitelson and Merzlyak [73] |
| Renormalized difference vegetation index (RDVI) | | Roujean et al. [74] |
| Transformed vegetative index (TVI) | | Rouse et al. [66]; Haas et al. [75] |
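
The equations for the indices listed above did not survive in this copy of the table. As a rough guide, the sketch below computes each index from green, red, and near-infrared surface reflectance using the formulations commonly given in the cited literature. These forms are an assumption and may differ in detail from the ones the authors used: the weighting coefficient `alpha`, the OSAVI soil constant of 0.16, and the function name `vegetation_indices` are illustrative choices, and some studies add a constant offset to WDRVI or a (1 + 0.16) gain to OSAVI.

```python
import numpy as np


def vegetation_indices(green, red, nir, alpha=0.1):
    """Return a dict of vegetation indices from reflectance values in [0, 1].

    Inputs may be scalars or NumPy arrays of matching shape.
    alpha is the WDRVI weighting coefficient (assumed value, commonly 0.1-0.2).
    """
    green, red, nir = (np.asarray(b, dtype=float) for b in (green, red, nir))
    ndvi = (nir - red) / (nir + red)
    return {
        "SR": nir / red,
        "EVI2": 2.5 * (nir - red) / (nir + 2.4 * red + 1.0),
        "GCVI": nir / green - 1.0,
        "NDVI": ndvi,
        "MTVI2": 1.5 * (1.2 * (nir - green) - 2.5 * (red - green))
        / np.sqrt((2 * nir + 1) ** 2 - (6 * nir - 5 * np.sqrt(red)) - 0.5),
        "MSAVI": 0.5 * (2 * nir + 1 - np.sqrt((2 * nir + 1) ** 2 - 8 * (nir - red))),
        # Basic WDRVI form; some studies add the offset (1 - alpha) / (1 + alpha).
        "WDRVI": (alpha * nir - red) / (alpha * nir + red),
        "Green-WDRVI": (alpha * nir - green) / (alpha * nir + green),
        # OSAVI without the optional (1 + 0.16) gain factor.
        "OSAVI": (nir - red) / (nir + red + 0.16),
        "GSR": nir / green,
        "GNDVI": (nir - green) / (nir + green),
        "RDVI": (nir - red) / np.sqrt(nir + red),
        "TVI": np.sqrt(ndvi + 0.5),
    }


if __name__ == "__main__":
    # Hypothetical reflectance values for a dense green canopy.
    for name, value in vegetation_indices(0.08, 0.05, 0.45).items():
        print(f"{name}: {float(value):.3f}")
```

Because every operation is element-wise, the same function can be applied to whole image bands held as NumPy arrays.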

References

- Basso, B.; Liu, L. Seasonal crop yield forecast: Methods, applications, and accuracies. In Advances in Agronomy; 2019; Volume 154, pp. 201–255.

- Lobell, D.B.; Burke, M.B. On the use of statistical models to predict crop yield responses to climate change. Agric. For. Meteorol. 2010, 150, 1443–1452.

- Lobell, D.B.; Thau, D.; Seifert, C.; Engle, E.; Little, B. A scalable satellite-based crop yield mapper. Remote Sens. Environ. 2015, 164, 324–333.

- Kamilaris, A.; Kartakoullis, A.; Prenafeta-Boldú, F.X. A review on the practice of big data analysis in agriculture. Comput. Electron. Agric. 2017, 143, 23–37.

- Rozenstein, O.; Karnieli, A. Comparison of methods for land-use classification incorporating remote sensing and GIS inputs. Appl. Geogr. 2011, 31, 533–544.

- Duchemin, B.; Hadria, R.; Erraki, S.; Boulet, G.; Maisongrande, P.; Chehbouni, A.; Escadafal, R.; Ezzahar, J.; Hoedjes, J.C.B.; Kharrou, M.H.; et al. Monitoring wheat phenology and irrigation in Central Morocco: On the use of relationships between evapotranspiration, crops coefficients, leaf area index and remotely-sensed vegetation indices. Agric. Water Manag. 2006, 79, 1–27.

- Kaplan, G.; Rozenstein, O. Spaceborne Estimation of Leaf Area Index in Cotton, Tomato, and Wheat Using Sentinel-2. Land 2021, 10, 505.

- Manivasagam, V.S.; Rozenstein, O. Practices for upscaling crop simulation models from field scale to large regions. Comput. Electron. Agric. 2020, 175, 105554.

- Bonfil, D.J. Wheat phenomics in the field by RapidScan: NDVI vs. NDRE. Isr. J. Plant Sci. 2017, 64, 41–54.

- Di Paola, A.; Valentini, R.; Santini, M. An overview of available crop growth and yield models for studies and assessments in agriculture. J. Sci. Food Agric. 2016, 96, 709–714.

- Jin, X.; Kumar, L.; Li, Z.; Feng, H.; Xu, X.; Yang, G.; Wang, J. A review of data assimilation of remote sensing and crop models. Eur. J. Agron. 2018, 92, 141–152.

- Azzari, G.; Jain, M.; Lobell, D.B. Towards fine resolution global maps of crop yields: Testing multiple methods and satellites in three countries. Remote Sens. Environ. 2017, 202, 129–141.

- Ines, A.V.M.; Das, N.N.; Hansen, J.W.; Njoku, E.G. Assimilation of remotely sensed soil moisture and vegetation with a crop simulation model for maize yield prediction. Remote Sens. Environ. 2013, 138, 149–164.

- Nguy-Robertson, A.L.; Peng, Y.; Gitelson, A.A.; Arkebauer, T.J.; Pimstein, A.; Herrmann, I.; Karnieli, A.; Rundquist, D.C.; Bonfil, D.J. Estimating green LAI in four crops: Potential of determining optimal spectral bands for a universal algorithm. Agric. For. Meteorol. 2014, 192–193, 140–148.

- Ma, G.; Huang, J.; Wu, W.; Fan, J.; Zou, J.; Wu, S. Assimilation of MODIS-LAI into the WOFOST model for forecasting regional winter wheat yield. Math. Comput. Model. 2013, 58, 634–643.

- Jégo, G.; Pattey, E.; Liu, J. Using Leaf Area Index, retrieved from optical imagery, in the STICS crop model for predicting yield and biomass of field crops. Field Crop. Res. 2012, 131, 63–74.

- Huang, J.; Tian, L.; Liang, S.; Ma, H.; Becker-Reshef, I.; Huang, Y.; Su, W.; Zhang, X.; Zhu, D.; Wu, W. Improving winter wheat yield estimation by assimilation of the leaf area index from Landsat TM and MODIS data into the WOFOST model. Agric. For. Meteorol. 2015, 204, 106–121.

- Tripathy, R.; Chaudhari, K.N.; Mukherjee, J.; Ray, S.S.; Patel, N.K.; Panigrahy, S.; Parihar, J.S. Forecasting wheat yield in Punjab state of India by combining crop simulation model WOFOST and remotely sensed inputs. Remote Sens. Lett. 2013, 4, 19–28.

- Thorp, K.R.; Hunsaker, D.J.; French, A.N. Assimilating Leaf Area Index Estimates from Remote Sensing into the Simulations of a Cropping Systems Model. Trans. ASABE 2010, 53, 251–262.

- Duchemin, B.; Maisongrande, P.; Boulet, G.; Benhadj, I. A simple algorithm for yield estimates: Evaluation for semi-arid irrigated winter wheat monitored with green leaf area index. Environ. Model. Softw. 2008, 23, 876–892.

- Zhang, C.; Liu, J.; Dong, T.; Pattey, E.; Shang, J.; Tang, M.; Cai, H.; Saddique, Q. Coupling hyperspectral remote sensing data with a crop model to study winter wheat water demand. Remote Sens. 2019, 11, 1684.

- Silvestro, P.C.; Pignatti, S.; Pascucci, S.; Yang, H.; Li, Z.; Yang, G.; Huang, W.; Casa, R. Estimating wheat yield in China at the field and district scale from the assimilation of satellite data into the Aquacrop and simple algorithm for yield (SAFY) models. Remote Sens. 2017, 9, 509.

- Silvestro, P.C.; Pignatti, S.; Yang, H.; Yang, G.; Pascucci, S.; Castaldi, F.; Casa, R. Sensitivity analysis of the Aquacrop and SAFYE crop models for the assessment of water limited winter wheat yield in regional scale applications. PLoS ONE 2017, 12, e0187485.

- Dong, T.; Liu, J.; Qian, B.; Zhao, T.; Jing, Q.; Geng, X.; Wang, J.; Huffman, T.; Shang, J. Estimating winter wheat biomass by assimilating leaf area index derived from fusion of Landsat-8 and MODIS data. Int. J. Appl. Earth Obs. Geoinf. 2016, 49, 63–74.

- Chahbi, A.; Zribi, M.; Lili-Chabaane, Z.; Duchemin, B.; Shabou, M.; Mougenot, B.; Boulet, G. Estimation of the dynamics and yields of cereals in a semi-arid area using remote sensing and the SAFY growth model. Int. J. Remote Sens. 2014, 35, 1004–1028.

- Claverie, M.; Demarez, V.; Duchemin, B.; Hagolle, O.; Ducrot, D.; Marais-Sicre, C.; Dejoux, J.F.; Huc, M.; Keravec, P.; Béziat, P.; et al. Maize and sunflower biomass estimation in southwest France using high spatial and temporal resolution remote sensing data. Remote Sens. Environ. 2012, 124, 844–857.

- Battude, M.; Al Bitar, A.; Morin, D.; Cros, J.; Huc, M.; Sicre, C.M.; Le Dantec, V.; Demarez, V. Estimating maize biomass and yield over large areas using high spatial and temporal resolution Sentinel-2 like remote sensing data. Remote Sens. Environ. 2016, 184, 668–681.

- Kang, Y.; Özdoğan, M.; Zipper, S.C.; Román, M.O.; Walker, J.; Hong, S.Y.; Marshall, M.; Magliulo, V.; Moreno, J.; Alonso, L.; et al. How universal is the relationship between remotely sensed vegetation indices and crop leaf area index? A global assessment. Remote Sens. 2016, 8, 597.

- Helman, D.; Lensky, I.M.; Bonfil, D.J. Early prediction of wheat grain yield production from root-zone soil water content at heading using Crop RS-Met. Field Crop. Res. 2019, 232, 11–23.

- Waldner, F.; Horan, H.; Chen, Y.; Hochman, Z. High temporal resolution of leaf area data improves empirical estimation of grain yield. Sci. Rep. 2019, 9, 1–14.

- Sadeh, Y.; Zhu, X.; Chenu, K.; Dunkerley, D. Sowing date detection at the field scale using CubeSats remote sensing. Comput. Electron. Agric. 2019, 157, 568–580.

- Helman, D.; Bahat, I.; Netzer, Y.; Ben-Gal, A.; Alchanatis, V.; Peeters, A.; Cohen, Y. Using time series of high-resolution Planet satellite images to monitor grapevine stem water potential in commercial vineyards. Remote Sens. 2018, 10, 1615.

- Houborg, R.; McCabe, M.F. High-Resolution NDVI from Planet’s constellation of Earth observing nano-satellites: A new data source for precision agriculture. Remote Sens. 2016, 8, 768.

- Houborg, R.; McCabe, M.F. Daily retrieval of NDVI and LAI at 3 m resolution via the fusion of CubeSat, Landsat, and MODIS data. Remote Sens. 2018, 10, 890.

- Sadeh, Y.; Zhu, X.; Dunkerley, D.; Walker, J.P.; Zhang, Y.; Rozenstein, O.; Manivasagam, V.S.; Chenu, K. Fusion of Sentinel-2 and PlanetScope time-series data into daily 3 m surface reflectance and wheat LAI monitoring. Int. J. Appl. Earth Obs. Geoinf. 2021, 96, 102260.

- Hunt, M.L.; Blackburn, G.A.; Carrasco, L.; Redhead, J.W.; Rowland, C.S. High resolution wheat yield mapping using Sentinel-2. Remote Sens. Environ. 2019, 233, 111410.

- Burke, M.; Lobell, D.B. Satellite-based assessment of yield variation and its determinants in smallholder African systems. Proc. Natl. Acad. Sci. USA 2017, 114, 2189–2194.

- Jin, Z.; Azzari, G.; Burke, M.; Aston, S.; Lobell, D.B. Mapping smallholder yield heterogeneity at multiple scales in eastern Africa. Remote Sens. 2017, 9, 931.

- Zhao, Y.; Potgieter, A.B.; Zhang, M.; Wu, B.; Hammer, G.L. Predicting wheat yield at the field scale by combining high-resolution Sentinel-2 satellite imagery and crop modelling. Remote Sens. 2020, 12, 1024.

- Fieuzal, R.; Bustillo, V.; Collado, D.; Dedieu, G. Combined use of multi-temporal Landsat-8 and Sentinel-2 images for wheat yield estimates at the intra-plot spatial scale. Agronomy 2020, 10, 327.

- Gilardelli, C.; Stella, T.; Confalonieri, R.; Ranghetti, L.; Campos-Taberner, M.; García-Haro, F.J.; Boschetti, M. Downscaling rice yield simulation at sub-field scale using remotely sensed LAI data. Eur. J. Agron. 2019, 103, 108–116.

- Kayad, A.; Sozzi, M.; Gatto, S.; Marinello, F.; Pirotti, F. Monitoring within-field variability of corn yield using Sentinel-2 and machine learning techniques. Remote Sens. 2019, 11, 2873.

- Zhang, P.P.; Zhou, X.X.; Wang, Z.X.; Mao, W.; Li, W.X.; Yun, F.; Guo, W.S.; Tan, C.W. Using HJ-CCD image and PLS algorithm to estimate the yield of field-grown winter wheat. Sci. Rep. 2020, 10, 1–10.

- Gaso, D.V.; Berger, A.G.; Ciganda, V.S. Predicting wheat grain yield and spatial variability at field scale using a simple regression or a crop model in conjunction with Landsat images. Comput. Electron. Agric. 2019, 159, 75–83.

- Skakun, S.; Vermote, E.; Roger, J.-C.; Franch, B. Combined Use of Landsat-8 and Sentinel-2A Images for Winter Crop Mapping and Winter Wheat Yield Assessment at Regional Scale. AIMS Geosci. 2017, 3, 163–186.

- Lambert, M.J.; Blaes, X.; Traore, P.S.; Defourny, P. Estimate yield at parcel level from S2 time series in sub-Saharan smallholder farming systems. In Proceedings of the 2017 9th International Workshop on the Analysis of Multitemporal Remote Sensing Images (MultiTemp), Brugge, Belgium, 27–29 June 2017.

- Kaplan, G.; Fine, L.; Lukyanov, V.; Manivasagam, V.S.; Malachy, N.; Tanny, J.; Rozenstein, O. Estimating Processing Tomato Water Consumption, Leaf Area Index, and Height Using Sentinel-2 and VENµS Imagery. Remote Sens. 2021, 13, 1046.

- Planet Team. Planet Surface Reflectance Product, version 2.0; Planet Labs, Inc.: San Francisco, CA, USA, 2020; Available online: https://assets.planet.com/marketing/PDF/Planet_Surface_Reflectance_Technical_White_Paper.pdf (accessed on 30 November 2020).

- Weiss, M.; Baret, F. S2ToolBox Level 2 Products: LAI, FAPAR, FCOVER, version 1.1; 2016, p. 53. Available online: http://step.esa.int/docs/extra/ATBD_S2ToolBox_L2B_V1.1.pdf (accessed on 19 April 2021).

- Gascon, F.; Ramoino, F.; Deanos, Y. Sentinel-2 data exploitation with ESA’s Sentinel-2 Toolbox. In Proceedings of the 19th EGU General Assembly, EGU2017, Vienna, Austria, 23–28 April 2017; p. 19548.

- Pan, Z.; Hu, Y.; Cao, B. Construction of smooth daily remote sensing time series data: A higher spatiotemporal resolution perspective. Open Geospat. Data Softw. Stand. 2017, 2, 1–11.

- Toscano, P.; Castrignanò, A.; Di Gennaro, S.F.; Vonella, A.V.; Ventrella, D.; Matese, A. A precision agriculture approach for durum wheat yield assessment using remote sensing data and yield mapping. Agronomy 2019, 9, 437.

- Vega, A.; Córdoba, M.; Castro-Franco, M.; Balzarini, M. Protocol for automating error removal from yield maps. Precis. Agric. 2019, 20, 1030–1044.

- Curnel, Y.; De Wit, A.J.W.; Duveiller, G.; Defourny, P. Potential performances of remotely sensed LAI assimilation in WOFOST model based on an OSS Experiment. Agric. For. Meteorol. 2011, 151, 1843–1855.

- Huang, J.; Ma, H.; Sedano, F.; Lewis, P.; Liang, S.; Wu, Q.; Su, W.; Zhang, X.; Zhu, D. Evaluation of regional estimates of winter wheat yield by assimilating three remotely sensed reflectance datasets into the coupled WOFOST–PROSAIL model. Eur. J. Agron. 2019, 102, 1–13.

- Manivasagam, V.S.; Kaplan, G.; Rozenstein, O. Developing Transformation Functions for VENμS and Sentinel-2 Surface Reflectance over Israel. Remote Sens. 2019, 11, 1710.

- Claverie, M.; Ju, J.; Masek, J.G.; Dungan, J.L.; Vermote, E.F.; Roger, J.-C.; Skakun, S.V.; Justice, C. The Harmonized Landsat and Sentinel-2 surface reflectance data set. Remote Sens. Environ. 2018, 219, 145–161.

- Deines, J.M.; Patel, R.; Liang, S.-Z.; Dado, W.; Lobell, D.B. A million kernels of truth: Insights into scalable satellite maize yield mapping and yield gap analysis from an extensive ground dataset in the US Corn Belt. Remote Sens. Environ. 2020, 253, 112174.

- Bellakanji, A.C.; Zribi, M.; Lili-Chabaane, Z.; Mougenot, B. Forecasting of cereal yields in a semi-arid area using the simple algorithm for yield estimation (SAFY) agro-meteorological model combined with optical SPOT/HRV images. Sensors 2018, 18, 2138.

- Monaghan, J.M.; Daccache, A.; Vickers, L.H.; Hess, T.M.; Weatherhead, E.K.; Grove, I.G.; Knox, J.W. More “crop per drop”: Constraints and opportunities for precision irrigation in European agriculture. J. Sci. Food Agric. 2013, 93, 977–980.

- Vicent, J.; Verrelst, J.; Sabater, N.; Alonso, L.; Rivera-Caicedo, J.P.; Martino, L.; Muñoz-Marí, J.; Moreno, J. Comparative analysis of atmospheric radiative transfer models using the Atmospheric Look-up table Generator (ALG) toolbox (version 2.0). Geosci. Model Dev. 2019, 13, 1945–1957.

- Jiang, Z.; Huete, A.R.; Didan, K.; Miura, T. Development of a two-band enhanced vegetation index without a blue band. Remote Sens. Environ. 2008, 112, 3833–3845.

- Nguy-Robertson, A.; Gitelson, A.; Peng, Y.; Viña, A.; Arkebauer, T.; Rundquist, D. Green leaf area index estimation in maize and soybean: Combining vegetation indices to achieve maximal sensitivity. Agron. J. 2012, 104, 1336–1347.

- Gitelson, A.A.; Viña, A.; Arkebauer, T.J.; Rundquist, D.C.; Keydan, G.; Leavitt, B. Remote estimation of leaf area index and green leaf biomass in maize canopies. Geophys. Res. Lett. 2003, 30, 4–7.

- Gitelson, A.A.; Viña, A.; Ciganda, V.; Rundquist, D.C.; Arkebauer, T.J. Remote estimation of canopy chlorophyll content in crops. Geophys. Res. Lett. 2005, 32, L08403.

- Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring Vegetation Systems in the Great Plains with ERTS; NASA SP-351; NASA: Washington, DC, USA, 1974; pp. 309–317.

- Haboudane, D.; Miller, J.R.; Pattey, E.; Zarco-Tejada, P.J.; Strachan, I.B. Hyperspectral vegetation indices and novel algorithms for predicting green LAI of crop canopies: Modeling and validation in the context of precision agriculture. Remote Sens. Environ. 2004, 90, 337–352.

- Qi, J.; Chehbouni, A.; Huete, A.R.; Kerr, Y.H.; Sorooshian, S. A Modified Soil Adjusted Vegetation Index. Remote Sens. Environ. 1994, 48, 119–126.

- Gitelson, A.A. Wide Dynamic Range Vegetation Index for Remote Quantification of Biophysical Characteristics of Vegetation. J. Plant Physiol. 2004, 161, 165–173.

- Peng, Y.; Gitelson, A.A. Application of chlorophyll-related vegetation indices for remote estimation of maize productivity. Agric. For. Meteorol. 2011, 151, 1267–1276.

- Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107.

- Sripada, R.P.; Heiniger, R.W.; White, J.G.; Meijer, A.D. Aerial color infrared photography for determining early in-season nitrogen requirements in corn. Agron. J. 2006, 98, 968–977.

- Gitelson, A.; Merzlyak, M.N. Spectral Reflectance Changes Associated with Autumn Senescence of Aesculus hippocastanum L. and Acer platanoides L. Leaves. Spectral Features and Relation to Chlorophyll Estimation. J. Plant Physiol. 1994, 143, 286–292.

- Roujean, J.L.; Breon, F.M. Estimating PAR absorbed by vegetation from bidirectional reflectance measurements. Remote Sens. Environ. 1995, 51, 375–384.

- Haas, R.H.; Deering, D.W.; Rouse, J.W.; Schell, J.A. Monitoring vegetation conditions from LANDSAT for use in range management. In Proceedings of the NASA Earth Resources Survey Symposium, Houston, TX, USA, 1 June 1975; pp. 43–52.

| Parameters | Unit | Range | Calibrated Value | Source |
|---|---|---|---|---|
| Climate efficiency | - | | 0.48 | [20] |
| Initial dry above-ground mass | g m⁻² | | 5.3 | Calibrated |
| Light-interception coefficient | - | 0.3–1 | 0.53 | Calibrated |
| Temperature for growth (minimal, Tmin; maximal, Tmax; and optimal, Topt) | °C | | 0, 18, 26 | Calibrated |
| Specific leaf area (SLA) | m² g⁻¹ | | 0.022 | [20] |
| Partition-to-leaf function: parameter a (PLa) | - | 0.01–0.3 | 0.056 | Calibrated |
| Partition-to-leaf function: parameter b (PLb) | - | 10⁻⁵–10⁻² | 0.0024 | Calibrated |
| Sum of temperature for senescence (STT) | °C | 800–2000 | 1350 | Calibrated |
| Rate of senescence (Rs) | °C day | 0–10⁵ | 8500 | Calibrated |
| Effective light-use efficiency (ELUE) | g MJ⁻¹ | 1.5–3.5 | 2.5 | Calibrated |
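
To show how the parameters in the table above interact, here is a minimal sketch of a SAFY-style daily time step. It follows the general structure commonly described for SAFY in Duchemin et al. [20] and is not the authors' implementation. Several things are assumptions and are flagged in the comments: the three temperatures are read as Tmin = 0, Topt = 18, and Tmax = 26 °C, the stress-function exponent `beta` is set to 2, the initial LAI is taken as initial mass times SLA, and the function and key names are hypothetical. The grain-partitioning step is left out because its parameter is not listed in the table.

```python
import math

# Calibrated values from the parameter table; key names are hypothetical.
PARAMS = {
    "eps_c": 0.48,    # climate efficiency (-)
    "dam0": 5.3,      # initial dry above-ground mass (g m-2)
    "k_ext": 0.53,    # light-interception coefficient (-)
    # Assumption: the listed values 0, 18, 26 map to Tmin, Topt, Tmax.
    "t_min": 0.0,
    "t_opt": 18.0,
    "t_max": 26.0,
    "sla": 0.022,     # specific leaf area (m2 g-1)
    "pl_a": 0.056,    # partition-to-leaf parameter a
    "pl_b": 0.0024,   # partition-to-leaf parameter b
    "stt": 1350.0,    # temperature sum at which senescence starts (degC)
    "rs": 8500.0,     # rate of senescence (degC day)
    "elue": 2.5,      # effective light-use efficiency (g MJ-1)
}


def temperature_stress(t_air, p, beta=2.0):
    """Temperature factor in [0, 1]; beta=2 is an assumed exponent."""
    if t_air <= p["t_min"] or t_air >= p["t_max"]:
        return 0.0
    if t_air <= p["t_opt"]:
        return 1.0 - ((p["t_opt"] - t_air) / (p["t_opt"] - p["t_min"])) ** beta
    return 1.0 - ((t_air - p["t_opt"]) / (p["t_max"] - p["t_opt"])) ** beta


def simulate(weather, p=PARAMS):
    """Run the sketch over daily (global radiation MJ m-2, mean air temp degC).

    Returns a list of (dry above-ground mass g m-2, green LAI) per day.
    """
    dam = p["dam0"]
    lai = dam * p["sla"]  # assumption: initial LAI from initial mass
    smt = 0.0             # accumulated temperature above Tmin
    series = []
    for rg, t_air in weather:
        smt += max(t_air - p["t_min"], 0.0)
        # Absorbed photosynthetically active radiation (Beer's law).
        apar = p["eps_c"] * rg * (1.0 - math.exp(-p["k_ext"] * lai))
        d_dam = p["elue"] * temperature_stress(t_air, p) * apar
        # Fraction of new mass allocated to leaves, declining with smt.
        pl = max(1.0 - p["pl_a"] * math.exp(p["pl_b"] * smt), 0.0)
        d_lai = d_dam * pl * p["sla"]
        if smt > p["stt"]:
            d_lai -= lai * (smt - p["stt"]) / p["rs"]  # senescence loss
        dam += d_dam
        lai = max(lai + d_lai, 0.0)
        series.append((dam, lai))
    return series


if __name__ == "__main__":
    # Hypothetical constant weather: 20 MJ m-2 per day at 15 degC for 120 days.
    for day, (dam, lai) in enumerate(simulate([(20.0, 15.0)] * 120), start=1):
        if day % 30 == 0:
            print(f"day {day}: DAM={dam:.0f} g m-2, LAI={lai:.2f}")
```

In an assimilation setting, parameters such as `pl_a`, `pl_b`, `stt`, `rs`, and `elue` would be adjusted so that the simulated LAI series matches satellite-derived LAI, which is why the table gives ranges for them.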

| References | Satellite | Indices | RMSE (g/m²) |
|---|---|---|---|
| This study | Sentinel-2 | S2-LAI | 88 |
| This study | Sentinel-2 | MSAVI | 74 |
| This study | PlanetScope and Sentinel-2 fused data | MSAVI | 69 |
| Chahbi et al. [25] | SPOT5 | NDVI | 90 |
| Dong et al. [24] | Fusion of Landsat-8 and MODIS (MOD09Q1) | EVI2 | 176–231 |
| Silvestro et al. [22] | Landsat-8, HJ1A, and HJ1B | SR | 109 |
| Chahbi Bellakanji et al. [59] | SPOT4 and SPOT5 | NDVI | 80 |
| Gaso et al. [44] | Landsat-7 and Landsat-8 | NDVI and CI | 153 |
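
For readers comparing the RMSE values above with yields reported in other units, the following sketch shows how an RMSE in g/m² can be computed from paired observed and predicted yields and converted to t/ha (1 g/m² equals 0.01 t/ha). The function names and the sample yields are hypothetical and are not data from this study.

```python
import math


def rmse(observed, predicted):
    """Root-mean-square error between two equal-length sequences."""
    if len(observed) != len(predicted) or len(observed) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(o - p) ** 2 for o, p in zip(observed, predicted)]
    return math.sqrt(sum(squared) / len(squared))


def g_m2_to_t_ha(value):
    """Convert g/m2 to t/ha: 1 g/m2 = 10 kg/ha = 0.01 t/ha."""
    return value * 0.01


if __name__ == "__main__":
    # Hypothetical yield-monitor and model values in g/m2.
    observed = [520.0, 610.0, 480.0, 555.0]
    predicted = [500.0, 650.0, 470.0, 600.0]
    error = rmse(observed, predicted)
    print(f"RMSE: {error:.1f} g/m2 = {g_m2_to_t_ha(error):.2f} t/ha")
```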

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Manivasagam, V.S.; Sadeh, Y.; Kaplan, G.; Bonfil, D.J.; Rozenstein, O. Studying the Feasibility of Assimilating Sentinel-2 and PlanetScope Imagery into the SAFY Crop Model to Predict Within-Field Wheat Yield. Remote Sens. 2021, 13, 2395. https://doi.org/10.3390/rs13122395
