Deep Learning for Automated Detection and Identification of Migrating American Eel Anguilla rostrata from Imaging Sonar Data

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Collection

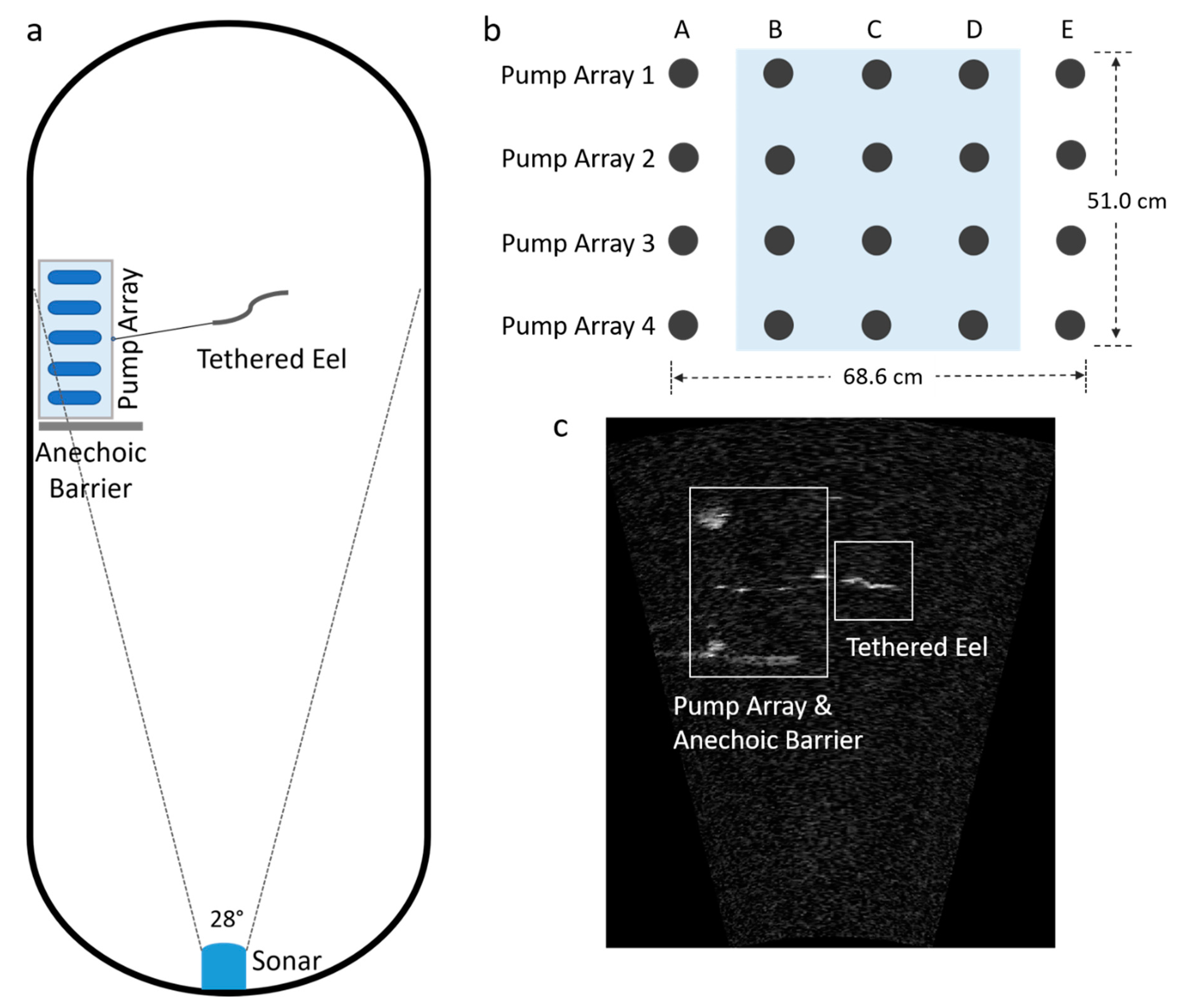

2.1.1. Laboratory Experiments

2.1.2. Field Experiments

2.2. Data Analysis

2.2.1. Data Cleaning and Labeling

2.2.2. Sonar Image Processing

2.2.3. Object Extraction

2.2.4. Statistical Analysis of Sonar Images: Aspect Ratio and Orientation Angle of Objects
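
Aspect ratio and orientation angle can be defined in several ways. A minimal sketch of one common choice follows, assuming each extracted object is available as a boolean NumPy mask: the ratio of the major to the minor axis of the ellipse that has the same second-order central moments as the object's pixels, and the angle of that ellipse's major axis. The function name `aspect_ratio_and_orientation` is hypothetical, and this is not necessarily the definition used in this study.

```python
import numpy as np

def aspect_ratio_and_orientation(mask):
    """Aspect ratio and orientation of the object in a boolean image mask.

    Hypothetical helper. Uses the ellipse with the same second-order central
    moments as the object's pixels. The angle is in degrees in (-90, 90],
    measured from the column axis toward increasing row index.
    """
    rows, cols = np.nonzero(mask)
    if rows.size < 2:
        raise ValueError("mask must contain at least two pixels")
    x = cols - cols.mean()
    y = rows - rows.mean()
    mu20, mu02, mu11 = np.mean(x * x), np.mean(y * y), np.mean(x * y)

    # Eigenvalues of the 2x2 central-moment (covariance) matrix.
    half_trace = (mu20 + mu02) / 2.0
    spread = np.sqrt(((mu20 - mu02) / 2.0) ** 2 + mu11 ** 2)
    lam_major = half_trace + spread
    lam_minor = max(half_trace - spread, 0.0)  # guard against round-off

    # Ellipse axis lengths scale with the square roots of the eigenvalues.
    aspect = np.inf if lam_minor == 0.0 else np.sqrt(lam_major / lam_minor)
    angle = 0.5 * np.degrees(np.arctan2(2.0 * mu11, mu20 - mu02))
    if angle <= -90.0:
        angle += 180.0  # keep the documented range (-90, 90]
    return float(aspect), float(angle)
```

With this convention a horizontal bar gives an angle of 0°, a vertical bar 90°, and a line running down and to the right at 45° gives 45°; an elongated, eel-like object yields a large aspect ratio.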

2.2.5. Convolutional Neural Network

3. Results

3.1. Statistical Analysis of Sonar Images: Aspect Ratio and Orientation Angle of Targets in the Laboratory

3.2. CNN Performance Evaluation with the Laboratory Data

3.3. CNN Performance Evaluation with the Field Data

3.4. Transferability from the Laboratory Data to the Field Data

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| Parameters | Values |
|---|---|
| Flow speed in the fish swimming zone | High flow: 0.76 m/s; Low flow: 0.53 m/s |
| Range from the sonar to the fish swimming zone | 5.5 m |
| Detection range | 2.8–6.7 m |
| Focus range | 5.7 m |
| Operating frequency | 1.1 MHz |
| Number of beams | 96 |
| Number of samples per beam | 537 or 482 |
| Resolution | 5.8 mm or 7.3 mm |

| Object ID | Water Flow Speed (m/s) | Number of Images |
|---|---|---|
| Eel_1 | 0.76 | 124 |
| Eel_1 | 0.53 | 110 |
| Eel_2 | 0.76 | 36 |
| Eel_2 | 0.53 | 360 |
| Eel_3 | 0.76 | 686 |
| Eel_3 | 0.53 | 328 |
| Eel_4 | 0.76 | 222 |
| Eel_4 | 0.53 | 26 |
| Stick_1 | 0.76 | 785 |
| Stick_1 | 0.53 | 869 |
| Stick_2 | 0.76 | 972 |
| Stick_2 | 0.53 | 760 |
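
Summing the image counts in the table above by object class and flow speed makes the class balance explicit. The short tally below transcribes the table; the class labels `Eel` and `Stick` are taken from the prefixes of the object IDs, and the variable names are illustrative.

```python
from collections import defaultdict

# (object ID, water flow speed in m/s, number of images), copied from the table above
TABLE = [
    ("Eel_1", 0.76, 124), ("Eel_1", 0.53, 110),
    ("Eel_2", 0.76, 36), ("Eel_2", 0.53, 360),
    ("Eel_3", 0.76, 686), ("Eel_3", 0.53, 328),
    ("Eel_4", 0.76, 222), ("Eel_4", 0.53, 26),
    ("Stick_1", 0.76, 785), ("Stick_1", 0.53, 869),
    ("Stick_2", 0.76, 972), ("Stick_2", 0.53, 760),
]

by_class = defaultdict(int)       # "Eel" / "Stick" -> image count
by_class_flow = defaultdict(int)  # ("Eel", 0.76) -> image count
for object_id, flow, n_images in TABLE:
    label = object_id.split("_")[0]
    by_class[label] += n_images
    by_class_flow[(label, flow)] += n_images

total = sum(by_class.values())
for label, n in sorted(by_class.items()):
    print(f"{label}: {n} images ({n / total:.1%})")
```

This prints 1892 eel images (35.8%) and 3386 stick images (64.2%), i.e. about 1.8 stick images per eel image across both flow speeds.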

| Water Flow Speed | Image Processing | Image-Based Accuracy |
|---|---|---|
| Two flow speeds (0.76 m/s and 0.53 m/s) | Original | 97.33% ± 1.78% |
| Two flow speeds (0.76 m/s and 0.53 m/s) | Wavelet denoising only | 97.65% ± 1.74% |
| Two flow speeds (0.76 m/s and 0.53 m/s) | Background subtraction only | 97.62% ± 1.61% |
| Two flow speeds (0.76 m/s and 0.53 m/s) | Background subtraction and wavelet denoising | 98.42% ± 1.29% |
| High flow (0.76 m/s) | Background subtraction and wavelet denoising | 97.88% ± 2.30% |
| Low flow (0.53 m/s) | Background subtraction and wavelet denoising | 99.15% ± 1.30% |
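
The table compares background subtraction and wavelet denoising, alone and combined. A minimal NumPy sketch of the two steps follows, under assumptions the table does not state: the background is estimated as the per-pixel temporal median of a stack of frames, and denoising uses a single-level orthonormal Haar transform with soft thresholding at the universal threshold of Donoho and Johnstone. The wavelet family, decomposition depth, threshold rule, and order of the steps used by the authors may differ, and all function names are hypothetical.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)

def subtract_background(frames):
    """Remove the static background from a (T, H, W) stack of sonar frames.

    Assumption: the background is the per-pixel temporal median, so targets
    that move through the beam are kept as (non-negative) foreground.
    """
    background = np.median(frames, axis=0)
    return np.clip(frames - background, 0.0, None)

def haar2(x):
    """One level of the orthonormal 2-D Haar transform (even-sized input)."""
    lo = (x[0::2] + x[1::2]) / SQRT2  # sums of row pairs
    hi = (x[0::2] - x[1::2]) / SQRT2  # differences of row pairs
    ll = (lo[:, 0::2] + lo[:, 1::2]) / SQRT2
    lh = (lo[:, 0::2] - lo[:, 1::2]) / SQRT2
    hl = (hi[:, 0::2] + hi[:, 1::2]) / SQRT2
    hh = (hi[:, 0::2] - hi[:, 1::2]) / SQRT2
    return ll, (lh, hl, hh)

def ihaar2(ll, details):
    """Exact inverse of haar2."""
    lh, hl, hh = details
    h, w = ll.shape
    lo = np.empty((h, 2 * w))
    hi = np.empty((h, 2 * w))
    lo[:, 0::2] = (ll + lh) / SQRT2
    lo[:, 1::2] = (ll - lh) / SQRT2
    hi[:, 0::2] = (hl + hh) / SQRT2
    hi[:, 1::2] = (hl - hh) / SQRT2
    x = np.empty((2 * h, 2 * w))
    x[0::2] = (lo + hi) / SQRT2
    x[1::2] = (lo - hi) / SQRT2
    return x

def soft_threshold(c, t):
    """Shrink coefficients toward zero by t; those with |c| <= t become 0."""
    return np.sign(c) * np.maximum(np.abs(c) - t, 0.0)

def wavelet_denoise(img):
    """Single-level Haar denoising with the universal threshold."""
    h, w = img.shape
    padded = np.pad(img, ((0, h % 2), (0, w % 2)), mode="edge")
    ll, (lh, hl, hh) = haar2(padded)
    sigma = np.median(np.abs(hh)) / 0.6745  # robust noise estimate
    t = sigma * np.sqrt(2.0 * np.log(padded.size))
    details = tuple(soft_threshold(d, t) for d in (lh, hl, hh))
    return ihaar2(ll, details)[:h, :w]  # crop the padding back off
```

To combine the two steps, as in the rows labeled "Background subtraction and wavelet denoising", one option is to apply `wavelet_denoise` to each frame returned by `subtract_background`. Only the detail subbands are thresholded here, so coarse structure such as an eel's body outline is preserved while pixel-scale speckle is suppressed.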

| Hyperparameters | Explored Values | Optimal Values |
|---|---|---|
| Batch size | 16, 32 | 32 |
| Number of epochs | 4, 5, 6, 7, 8 | 5 |
| Learning rate | 0.00005, 0.0001, 0.001 | 0.0001 |
| Weights | 0.4 and 0.6; 0.5 and 0.5; 0.6 and 0.4 | 0.4 and 0.6 |
| Training vs. testing split | 80% and 20%; 70% and 30%; 60% and 40% | 80% and 20% |
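
The hyperparameter table lists 2, 5, 3, 3, and 3 explored values for its five hyperparameters. If every combination were tried (the table does not say whether a full grid or a one-at-a-time search was used), the search would involve 2 × 5 × 3 × 3 × 3 = 270 configurations. The sketch below enumerates that hypothetical full grid; the key names are illustrative, and reading "Weights" as a pair of class weights in the loss is an assumption.

```python
from itertools import product

# Explored values from the hyperparameter table; key names are illustrative.
SEARCH_SPACE = {
    "batch_size": [16, 32],
    "epochs": [4, 5, 6, 7, 8],
    "learning_rate": [0.00005, 0.0001, 0.001],
    # Assumed to be a pair of class weights for the loss; class order unspecified.
    "class_weights": [(0.4, 0.6), (0.5, 0.5), (0.6, 0.4)],
    "train_fraction": [0.8, 0.7, 0.6],  # remainder is the testing share
}

def full_grid(space):
    """Yield every combination of the explored values as a dict."""
    keys = list(space)
    for values in product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))

configs = list(full_grid(SEARCH_SPACE))
print(len(configs))  # 270

# The optimal values reported in the table form one point of this grid.
reported_optimum = {"batch_size": 32, "epochs": 5, "learning_rate": 0.0001,
                    "class_weights": (0.4, 0.6), "train_fraction": 0.8}
assert reported_optimum in configs
```

Because each configuration requires a full training run, a full grid multiplies cost quickly; varying one hyperparameter at a time around a baseline needs far fewer runs but cannot detect interactions between hyperparameters.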

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zang, X.; Yin, T.; Hou, Z.; Mueller, R.P.; Deng, Z.D.; Jacobson, P.T. Deep Learning for Automated Detection and Identification of Migrating American Eel Anguilla rostrata from Imaging Sonar Data. Remote Sens. 2021, 13, 2671. https://doi.org/10.3390/rs13142671
