Figure 1.

The locations of the three in situ stations. Venise and WaveCIS are AERONET-OC SeaPRISM sites, while MOBY is a moored buoy.

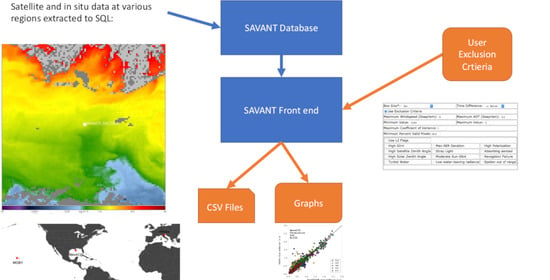

Figure 2.

SAVANT exclusion criteria selection. This table represents the following possible exclusion criteria used by SAVANT: (a) satellite box size, (b) maximum time difference between in situ and satellite measurements, (c) maximum observed windspeed and maximum observed aerosol optical thickness, (d) minimum and maximum value of normalized water-leaving radiance, (e) maximum coefficient of variation of satellite box, (f) minimum percent of valid pixels in the satellite box, and (g) additional data quality control flags.
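
Criteria (e) and (f) are both computed over the satellite pixel box. The following is a minimal sketch of how they might be evaluated for one box, not SAVANT's actual implementation. The function names `box_statistics` and `passes_box_criteria`, the use of `None`/NaN for invalid pixels, and the threshold values are all illustrative assumptions; the caption does not give numeric limits for these two criteria.

```python
import math
import statistics


def box_statistics(box):
    """Summarize a satellite pixel box given as a list of rows.

    Invalid pixels are assumed to be marked as None or NaN (hypothetical convention).
    """
    pixels = [v for row in box for v in row]
    valid = [v for v in pixels if v is not None and not math.isnan(v)]
    pct_valid = 100.0 * len(valid) / len(pixels) if pixels else 0.0
    if len(valid) < 2:
        # Too few valid pixels to estimate a standard deviation.
        return {"mean": valid[0] if valid else None, "cv": None, "pct_valid": pct_valid}
    mean = statistics.fmean(valid)
    stdev = statistics.stdev(valid)  # sample standard deviation
    cv = stdev / mean if mean != 0 else None
    return {"mean": mean, "cv": cv, "pct_valid": pct_valid}


def passes_box_criteria(stats, max_cv, min_pct_valid):
    """Apply criteria (e) and (f); thresholds are supplied by the caller."""
    if stats["cv"] is None:
        return False
    return stats["cv"] <= max_cv and stats["pct_valid"] >= min_pct_valid


# A 5 x 5 box of made-up values with two invalid pixels.
box = [[1.0] * 5 for _ in range(5)]
box[0][0] = None
box[4][4] = float("nan")
stats = box_statistics(box)
# Placeholder thresholds, chosen only to exercise the function.
print(stats, passes_box_criteria(stats, max_cv=0.2, min_pct_valid=50.0))
```

For a 1 × 1 (center pixel) comparison the coefficient of variation is undefined, so this sketch would reject the box; a real workflow would presumably skip criterion (e) in that case.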

Figure 3.

WaveCIS SeaPRISM vs. VIIRS comparison, June 2014–May 2019. Left: permissive protocol; right: strict protocol. Applying the strict constraints reduced the number of match-ups by 65%. The black line in each scatter plot is the 1:1 reference, and the point colors distinguish the 410, 443, 486, 551, and 671 nm bands.

Figure 4.

WaveCIS–VIIRS comparison, June 2014–May 2019, center (in situ) pixel only. Left: permissive protocol; right: strict protocol. The number of match-ups decreased by almost 65%.

Figure 5.

Venise–VIIRS comparison, June 2014–May 2019. Permissive protocol. Left: 5 × 5 box; right: center pixel only. There is a reduction in some outliers in the 1 × 1 box.

Figure 6.

Venise–VIIRS comparison, June 2014–May 2019. Permissive protocol. Left: 443 nm 5 × 5 box mean; right: center (in situ) pixel only. This is the only wavelength at which the 5 × 5 box has statistics closer to the 1:1 line than the center pixel comparison.

Figure 7.

Venise–VIIRS comparison, June 2014–May 2019. Strict protocol. Left: 5 × 5 box; right: center (in situ) pixel only. The 5 × 5 box match-ups lie closer to the 1:1 line than the center pixel match-ups.

Figure 8.

Venise–VIIRS comparison, June 2014–May 2019, 5 × 5 statistical box comparison. Left: permissive; right: strict. The number of available match-ups again drops by about 67%.

Figure 9.

MOBY–VIIRS comparison, June 2014–May 2019. (a) Permissive protocol, 5 × 5 statistical box; (b) permissive protocol, center (in situ) pixel; (c) strict protocol. The strict protocol reduces the number of match-ups by slightly more than 70% relative to the permissive protocol. The strict 1 × 1 comparison is not presented because the differences are, in many cases, only a few tenths of a percent.

Figure 10.

Time series of WaveCIS in situ and satellite match-ups, June 2014 through May 2019. Both data sets peak in winter, with lows in spring and summer. Note also the data gaps, caused by instrument calibration or by issues at the ground-truth station.

Table 1.

Exclusion criteria for the permissive and strict protocols.

| Protocol | Permissive | Strict |
|---|---|---|
| Box Size | 5 × 5 and 1 × 1 | 5 × 5 and 1 × 1 |
| Time Difference | +/−3 h, +/−1 h, +/−0.5 h | +/−3 h, +/−1 h, +/−0.5 h |
| Flags | CLDICE, LAND, ATMFAIL, HILT (processing default) | Permissive and HIGLINT, STRAYLIGHT, NAVFAIL, LOWLW |
| nLw | 0–3 | 0–3 |
| Windspeed | ≤8 m s⁻¹ | ≤8 m s⁻¹ |
| AOT | ≤0.2 | ≤0.2 |
| In Situ Pixel Valid | Yes | Yes |
| Solar Zenith | Not restricted | ≤75° |
| Sensor Zenith | Not restricted | ≤60° |
| Additional Requirements | Chlorophyll, all nLw wavelengths valid | Chlorophyll, all nLw wavelengths valid |
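
The two protocols in Table 1 can be encoded as configuration and applied to individual match-ups. The sketch below is illustrative only and is not SAVANT code. The thresholds and flag names come from Table 1; the `Protocol` class, the `passes` function, the match-up dictionary layout, and the example values are hypothetical. The nLw range is treated as inclusive, which is an assumption, and the "in situ pixel valid" and "additional requirements" rows are not modeled.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional, Tuple

# Flag sets from Table 1; the strict set extends the permissive one.
PERMISSIVE_FLAGS = frozenset({"CLDICE", "LAND", "ATMFAIL", "HILT"})
STRICT_FLAGS = PERMISSIVE_FLAGS | frozenset({"HIGLINT", "STRAYLIGHT", "NAVFAIL", "LOWLW"})


@dataclass(frozen=True)
class Protocol:
    name: str
    exclude_flags: FrozenSet[str]
    nlw_range: Tuple[float, float] = (0.0, 3.0)  # assumed inclusive
    max_windspeed: float = 8.0                   # m/s
    max_aot: float = 0.2
    max_solar_zenith: Optional[float] = None     # degrees; None = not restricted
    max_sensor_zenith: Optional[float] = None    # degrees; None = not restricted


PERMISSIVE = Protocol("permissive", PERMISSIVE_FLAGS)
STRICT = Protocol("strict", STRICT_FLAGS, max_solar_zenith=75.0, max_sensor_zenith=60.0)


def passes(matchup, protocol, max_dt_hours=3.0):
    """Return True if a match-up record (hypothetical dict layout) survives the protocol."""
    if abs(matchup["dt_hours"]) > max_dt_hours:
        return False
    if protocol.exclude_flags & set(matchup["flags"]):
        return False
    lo, hi = protocol.nlw_range
    if any(not (lo <= v <= hi) for v in matchup["nlw"].values()):
        return False
    if matchup["windspeed"] > protocol.max_windspeed or matchup["aot"] > protocol.max_aot:
        return False
    if protocol.max_solar_zenith is not None and matchup["solar_zenith"] > protocol.max_solar_zenith:
        return False
    if protocol.max_sensor_zenith is not None and matchup["sensor_zenith"] > protocol.max_sensor_zenith:
        return False
    return True


# Made-up match-up: flagged for high glint, otherwise unremarkable.
example = {
    "dt_hours": 0.8,
    "flags": ["HIGLINT"],
    "nlw": {410: 0.6, 443: 0.8, 486: 1.1, 551: 1.0, 671: 0.2},
    "windspeed": 4.5,
    "aot": 0.1,
    "solar_zenith": 40.0,
    "sensor_zenith": 30.0,
}
print(passes(example, PERMISSIVE), passes(example, STRICT))
```

Passing `max_dt_hours=1.0` or `max_dt_hours=0.5` would apply the narrower time windows listed in the table.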

Table 2.

Strict and permissive results at WaveCIS. June 2014–May 2019 data. Values closest to one in bold, for slope, R2, and mean ratio. Lowest values in bold for bias and mean absolute error.

| Protocol/Wavelength | Match-Ups | Slope | R2 | Mean Ratio | Bias | MAE |
|---|---|---|---|---|---|---|
| 5 × 5 Permissive @ 410 nm | 235 | 0.8918 | 0.4667 | 1.0587 | −0.006 | 0.1078 |
| 1 × 1 Permissive @ 410 nm | 236 | 0.9012 | 0.4562 | 1.0939 | 0.0017 | 0.1093 |
| 5 × 5 Strict @ 410 nm | 82 | 0.9672 | 0.5251 | 1.0921 | 0.0106 | 0.1108 |
| 1 × 1 Strict @ 410 nm | 84 | 0.9957 | 0.5348 | 1.1201 | 0.0214 | 0.1074 |
| 5 × 5 Permissive @ 443 nm | 235 | 0.8803 | 0.7528 | 0.9174 | −0.0579 | 0.1093 |
| 1 × 1 Permissive @ 443 nm | 236 | 0.8842 | 0.7423 | 0.9377 | −0.0525 | 0.1115 |
| 5 × 5 Strict @ 443 nm | 82 | 0.9105 | 0.7226 | 0.9517 | −0.0396 | 0.1113 |
| 1 × 1 Strict @ 443 nm | 84 | 0.9173 | 0.7184 | 0.9696 | −0.0316 | 0.1096 |
| 5 × 5 Permissive @ 486 nm | 235 | 1.0207 | 0.9138 | 1.007 | 0.0077 | 0.099 |
| 1 × 1 Permissive @ 486 nm | 236 | 1.0217 | 0.9038 | 1.0169 | 0.0109 | 0.1054 |
| 5 × 5 Strict @ 486 nm | 82 | 1.041 | 0.8895 | 1.0436 | 0.0259 | 0.1089 |
| 1 × 1 Strict @ 486 nm | 84 | 1.0467 | 0.8734 | 1.069 | 0.0377 | 0.1142 |
| 5 × 5 Permissive @ 551 nm | 235 | 0.9822 | 0.9426 | 0.9617 | −0.0253 | 0.0915 |
| 1 × 1 Permissive @ 551 nm | 236 | 0.9826 | 0.9314 | 0.9693 | −0.0223 | 0.0956 |
| 5 × 5 Strict @ 551 nm | 82 | 0.9974 | 0.9304 | 1.0028 | −0.0018 | 0.0947 |
| 1 × 1 Strict @ 551 nm | 84 | 0.9991 | 0.9166 | 1.0232 | 0.007 | 0.1046 |
| 5 × 5 Permissive @ 671 nm | 235 | 0.9007 | 0.8919 | 0.8586 | −0.0258 | 0.0369 |
| 1 × 1 Permissive @ 671 nm | 236 | 0.8906 | 0.8611 | 0.8679 | −0.0259 | 0.0389 |
| 5 × 5 Strict @ 671 nm | 82 | 0.9057 | 0.8558 | 0.9142 | −0.0183 | 0.0353 |
| 1 × 1 Strict @ 671 nm | 84 | 0.9173 | 0.8768 | 0.9418 | −0.0147 | 0.0331 |
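
The five statistics in these tables are not defined in the captions. The sketch below shows one common way such match-up statistics are computed, under stated assumptions: the slope is an ordinary least-squares fit of satellite on in situ values, R2 is the squared Pearson correlation, the mean ratio is the mean of satellite/in situ, bias is the mean of satellite minus in situ, and MAE is the mean absolute difference. The source may use different definitions (for example a Type II regression), and the function name `matchup_stats` is hypothetical.

```python
def matchup_stats(in_situ, satellite):
    """Compute slope, R2, mean ratio, bias, and MAE for paired match-ups.

    Assumed definitions (not confirmed by the source): in situ on the x-axis,
    ordinary least-squares slope, satellite minus in situ for bias.
    In situ values must be nonzero and must not all be identical.
    """
    n = len(in_situ)
    if n != len(satellite) or n < 2:
        raise ValueError("need at least two paired values")
    mean_x = sum(in_situ) / n
    mean_y = sum(satellite) / n
    sxx = sum((x - mean_x) ** 2 for x in in_situ)
    syy = sum((y - mean_y) ** 2 for y in satellite)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(in_situ, satellite))
    return {
        "n": n,
        "slope": sxy / sxx,
        "r2": (sxy * sxy) / (sxx * syy),
        "mean_ratio": sum(y / x for x, y in zip(in_situ, satellite)) / n,
        "bias": sum(y - x for x, y in zip(in_situ, satellite)) / n,
        "mae": sum(abs(y - x) for x, y in zip(in_situ, satellite)) / n,
    }


# Made-up nLw values for illustration only.
in_situ_nlw = [0.52, 0.61, 0.75, 0.90, 1.10]
satellite_nlw = [0.50, 0.66, 0.71, 0.95, 1.04]
print(matchup_stats(in_situ_nlw, satellite_nlw))
```

Under these definitions the magnitude of the bias can never exceed the MAE, which gives a quick consistency check on any table row.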

Table 3.

Venise data, June 2014–May 2019. Permissive data protocol at 5 × 5 and 1 × 1 box sizes. Values closest to one in bold, for slope, R2, and mean ratio. Lowest values in bold for bias and mean absolute error.

| Protocol/Wavelength | Match-Ups | Slope | R2 | Mean Ratio | Bias | MAE |
|---|---|---|---|---|---|---|
| 5 × 5 @ 410 nm | 281 | 1.077 | 0.4416 | 1.3317 | 0.1136 | 0.2131 |
| 1 × 1 @ 410 nm | 280 | 1.0578 | 0.4156 | 1.3567 | 0.109 | 0.2181 |
| 5 × 5 @ 443 nm | 281 | 0.9989 | 0.7714 | 1.0759 | 0.027 | 0.1586 |
| 1 × 1 @ 443 nm | 280 | 0.9965 | 0.758 | 1.0847 | 0.0268 | 0.167 |
| 5 × 5 @ 486 nm | 281 | 1.1562 | 0.8777 | 1.1926 | 0.1899 | 0.2279 |
| 1 × 1 @ 486 nm | 280 | 1.1525 | 0.8708 | 1.1955 | 0.1897 | 0.2331 |
| 5 × 5 @ 551 nm | 281 | 1.0426 | 0.9067 | 1.0689 | 0.06 | 0.1258 |
| 1 × 1 @ 551 nm | 280 | 1.038 | 0.9112 | 1.0733 | 0.0603 | 0.1279 |
| 5 × 5 @ 671 nm | 281 | 1.0414 | 0.7744 | 1.5243 | 0.023 | 0.0431 |
| 1 × 1 @ 671 nm | 280 | 1.0161 | 0.7149 | 1.7937 | 0.0247 | 0.0482 |

Table 4.

Venise permissive protocol, June 2014–May 2019. Center (in situ) pixel only, at +/−3, +/−1, and +/−0.5 h. Values closest to one in bold, for slope, R2, and mean ratio. Lowest values in bold for bias and mean absolute error.

| Protocol/Wavelength | Match-Ups | Slope | R2 | Mean Ratio | Bias | MAE |
|---|---|---|---|---|---|---|
| +/−3 h, 410 nm | 281 | 1.0578 | 0.4156 | 1.3567 | 0.109 | 0.2181 |
| +/−1 h, 410 nm | 229 | 1.0783 | 0.431 | 1.3813 | 0.1222 | 0.2189 |
| +/−0.5 h, 410 nm | 183 | 1.0524 | 0.3705 | 1.3548 | 0.1141 | 0.2283 |
| +/−3 h, 443 nm | 281 | 0.9965 | 0.758 | 1.0847 | 0.0268 | 0.167 |
| +/−1 h, 443 nm | 229 | 1.0008 | 0.7633 | 1.0966 | 0.0357 | 0.1664 |
| +/−0.5 h, 443 nm | 183 | 0.9847 | 0.7173 | 1.087 | 0.0268 | 0.1765 |
| +/−3 h, 486 nm | 281 | 1.1525 | 0.8708 | 1.1955 | 0.1897 | 0.2331 |
| +/−1 h, 486 nm | 229 | 1.1594 | 0.871 | 1.2074 | 0.1983 | 0.2349 |
| +/−0.5 h, 486 nm | 183 | 1.1507 | 0.84 | 1.1995 | 0.1895 | 0.2405 |
| +/−3 h, 551 nm | 281 | 1.038 | 0.9112 | 1.0733 | 0.0603 | 0.1279 |
| +/−1 h, 551 nm | 229 | 1.0369 | 0.9102 | 1.0771 | 0.0613 | 0.1227 |
| +/−0.5 h, 551 nm | 183 | 1.0265 | 0.8856 | 1.0721 | 0.0527 | 0.1308 |
| +/−3 h, 671 nm | 281 | 1.0161 | 0.7149 | 1.7937 | 0.0247 | 0.0482 |
| +/−1 h, 671 nm | 229 | 1.0011 | 0.6668 | 1.7658 | 0.0246 | 0.0478 |
| +/−0.5 h, 671 nm | 183 | 0.8564 | 0.4715 | 1.7587 | 0.0185 | 0.0522 |

Table 5.

Venise data, strict protocol. June 2014–May 2019. Values closest to one in bold, for slope, R2, and mean ratio. Lowest values in bold for bias and mean absolute error.

| Protocol/Wavelength | Match-Ups | Slope | R2 | Mean Ratio | Bias | MAE |
|---|---|---|---|---|---|---|
| 5 × 5 @ 410 nm | 94 | 1.1191 | 0.5439 | 1.3365 | 0.1421 | 0.1999 |
| 1 × 1 @ 410 nm | 96 | 1.093 | 0.458 | 1.3471 | 0.1374 | 0.2058 |
| 5 × 5 @ 443 nm | 94 | 0.995 | 0.8265 | 1.0702 | 0.0242 | 0.144 |
| 1 × 1 @ 443 nm | 96 | 0.9966 | 0.7887 | 1.0748 | 0.03 | 0.1563 |
| 5 × 5 @ 486 nm | 94 | 1.18 | 0.9152 | 1.2253 | 0.2294 | 0.2459 |
| 1 × 1 @ 486 nm | 96 | 1.2001 | 0.9112 | 1.2389 | 0.2417 | 0.2568 |
| 5 × 5 @ 551 nm | 94 | 1.0468 | 0.9026 | 1.0714 | 0.0683 | 0.1313 |
| 1 × 1 @ 551 nm | 96 | 1.0537 | 0.891 | 1.0847 | 0.0785 | 0.1361 |
| 5 × 5 @ 671 nm | 94 | 1.055 | 0.8011 | 1.4529 | 0.0253 | 0.0437 |
| 1 × 1 @ 671 nm | 96 | 1.0823 | 0.7888 | 2.0668 | 0.358 | 0.05 |

Table 6.

Venise data, strict protocol. June 2014–May 2019. Center (in situ) pixel only, at +/−3, +/−1, and +/−0.5 h. Values closest to one in bold, for slope, R2, and mean ratio. Lowest values in bold for bias and mean absolute error.

| Protocol/Wavelength | Match-Ups | Slope | R2 | Mean Ratio | Bias | MAE |
|---|---|---|---|---|---|---|
| +/−3 h, 410 nm | 96 | 1.093 | 0.458 | 1.3471 | 0.1421 | 0.1999 |
| +/−1 h, 410 nm | 75 | 1.1184 | 0.5336 | 1.3545 | 0.148 | 0.2065 |
| +/−0.5 h, 410 nm | 61 | 1.0979 | 0.4764 | 1.3732 | 0.1484 | 0.2192 |
| +/−3 h, 443 nm | 96 | 0.9966 | 0.7887 | 1.0748 | 0.0242 | 0.144 |
| +/−1 h, 443 nm | 75 | 0.9911 | 0.8322 | 1.0745 | 0.0229 | 0.1433 |
| +/−0.5 h, 443 nm | 61 | 0.9795 | 0.8082 | 1.0767 | 0.0173 | 0.154 |
| +/−3 h, 486 nm | 96 | 1.2001 | 0.9112 | 1.2389 | 0.2294 | 0.2459 |
| +/−1 h, 486 nm | 75 | 1.1794 | 0.9216 | 1.2253 | 0.2292 | 0.246 |
| +/−0.5 h, 486 nm | 61 | 1.175 | 0.9263 | 1.2208 | 0.2216 | 0.2391 |
| +/−3 h, 551 nm | 96 | 1.0537 | 0.891 | 1.0847 | 0.0683 | 0.1313 |
| +/−1 h, 551 nm | 75 | 1.0434 | 0.9261 | 1.0708 | 0.065 | 0.1177 |
| +/−0.5 h, 551 nm | 61 | 1.0394 | 0.928 | 1.0737 | 0.0618 | 0.1112 |
| +/−3 h, 671 nm | 96 | 1.0823 | 0.7888 | 2.0668 | 0.0253 | 0.0437 |
| +/−1 h, 671 nm | 75 | 1.0319 | 0.7785 | 1.4574 | 0.0231 | 0.0409 |
| +/−0.5 h, 671 nm | 61 | 1.0762 | 0.7764 | 1.5449 | 0.0272 | 0.0406 |

Table 7.

Venise 5 × 5 permissive versus 5 × 5 strict. June 2014–May 2019. Values closest to one in bold, for slope, R2, and mean ratio. Lowest values in bold for bias and mean absolute error.

| Protocol/Wavelength | Match-Ups | Slope | R2 | Mean Ratio | Bias | MAE |
|---|---|---|---|---|---|---|
| Permissive, 410 nm | 281 | 1.077 | 0.4416 | 1.3317 | 0.1136 | 0.2131 |
| Strict, 410 nm | 94 | 1.1191 | 0.5439 | 1.3365 | 0.1421 | 0.1999 |
| Permissive, 443 nm | 281 | 0.9989 | 0.7714 | 1.0759 | 0.027 | 0.1586 |
| Strict, 443 nm | 94 | 0.995 | 0.8265 | 1.0702 | 0.0242 | 0.144 |
| Permissive, 486 nm | 281 | 1.1562 | 0.8777 | 1.1926 | 0.1899 | 0.2279 |
| Strict, 486 nm | 94 | 1.18 | 0.9152 | 1.2253 | 0.2294 | 0.2459 |
| Permissive, 551 nm | 281 | 1.0426 | 0.9067 | 1.0689 | 0.06 | 0.1258 |
| Strict, 551 nm | 94 | 1.0468 | 0.9026 | 1.0714 | 0.0683 | 0.1313 |
| Permissive, 671 nm | 281 | 1.0414 | 0.7744 | 1.5243 | 0.023 | 0.0431 |
| Strict, 671 nm | 94 | 1.055 | 0.8011 | 1.4529 | 0.0253 | 0.0437 |

Table 8.

MOBY data, June 2014–May 2019. Permissive data protocol at 5 × 5 and 1 × 1 box sizes. Values closest to one in bold, for slope, R2, and mean ratio. Lowest values in bold for bias and mean absolute error.

| Protocol/Wavelength | Match-Ups | Slope | R2 | Mean Ratio | Bias | MAE |
|---|---|---|---|---|---|---|
| 5 × 5, 410 nm | 232 | 1.0207 | 0.3314 | 1.0762 | −0.0176 | 0.272 |
| 1 × 1, 410 nm | 239 | 1.0173 | 0.325 | 1.0778 | −0.0212 | 0.2795 |
| 5 × 5, 443 nm | 232 | 0.9994 | 0.2468 | 1.043 | 0.0343 | 0.2194 |
| 1 × 1, 443 nm | 239 | 0.9981 | 0.2479 | 1.0444 | 0.0337 | 0.2221 |
| 5 × 5, 486 nm | 232 | 1.0238 | 0.0788 | 1.0607 | −0.0052 | 0.1328 |
| 1 × 1, 486 nm | 239 | 1.0223 | 0.0869 | 1.0623 | −0.0053 | 0.1348 |
| 5 × 5, 551 nm | 232 | 0.8743 | 0.0002 | 0.9738 | −0.0227 | 0.0536 |
| 1 × 1, 551 nm | 239 | 0.8655 | 0.001 | 0.9972 | −0.0221 | 0.0584 |
| 5 × 5, 671 nm | 232 | 0.3015 | 0.0004 | 0.5797 | −0.0158 | 0.0159 |
| 1 × 1, 671 nm | 239 | 0.2008 | 0 | 0.6233 | −0.0167 | 0.0169 |

Table 9.

MOBY data, June 2014–May 2019. Strict data protocol at 5 × 5 and 1 × 1 box sizes. Values closest to one in bold, for slope, R2, and mean ratio. Lowest values in bold for bias and mean absolute error.

| Protocol/Wavelength | Match-Ups | Slope | R2 | Mean Ratio | Bias | MAE |
|---|---|---|---|---|---|---|
| 5 × 5, 410 nm | 68 | 0.9983 | 0.3727 | 1.0538 | −0.0599 | 0.2782 |
| 1 × 1, 410 nm | 69 | 0.9953 | 0.3856 | 1.0409 | −0.0752 | 0.2604 |
| 5 × 5, 443 nm | 68 | 0.99 | 0.271 | 1.0306 | 0.016 | 0.2175 |
| 1 × 1, 443 nm | 69 | 0.9902 | 0.2891 | 1.022 | 0.0096 | 0.1987 |
| 5 × 5, 486 nm | 68 | 1.0067 | 0.0896 | 1.0439 | −0.0267 | 0.129 |
| 1 × 1, 486 nm | 69 | 0.9975 | 0.0816 | 1.0307 | −0.0375 | 0.1294 |
| 5 × 5, 551 nm | 68 | 0.9042 | 0.015 | 0.9947 | −0.0138 | 0.0483 |
| 1 × 1, 551 nm | 69 | 0.8739 | 0.0153 | 1.01 | −0.0177 | 0.0529 |
| 5 × 5, 671 nm | 68 | 0.2411 | 0.0021 | 0.6907 | −0.0139 | 0.0148 |
| 1 × 1, 671 nm | 69 | 0.105 | 0.0002 | 0.8026 | −0.0181 | 0.0193 |

Table 10.

MOBY data. Permissive versus strict, 5 × 5 statistical box. June 2014–May 2019. Values closest to one in bold, for slope, R2, and mean ratio. Lowest values in bold for bias and mean absolute error.

| Protocol/Wavelength | Match-Ups | Slope | R2 | Mean Ratio | Bias | MAE |
|---|---|---|---|---|---|---|
| Permissive, 410 nm | 232 | 1.0207 | 0.3314 | 1.0762 | −0.0176 | 0.272 |
| Strict, 410 nm | 68 | 0.9983 | 0.3727 | 1.0538 | −0.0599 | 0.2782 |
| Permissive, 443 nm | 232 | 0.9994 | 0.2468 | 1.043 | 0.0343 | 0.2194 |
| Strict, 443 nm | 68 | 0.99 | 0.271 | 1.0306 | 0.016 | 0.2175 |
| Permissive, 486 nm | 232 | 1.0238 | 0.0788 | 1.0607 | −0.0052 | 0.1328 |
| Strict, 486 nm | 68 | 1.0067 | 0.0896 | 1.0439 | −0.0267 | 0.129 |
| Permissive, 551 nm | 232 | 0.8743 | 0.0002 | 0.9738 | −0.0227 | 0.0536 |
| Strict, 551 nm | 68 | 0.9042 | 0.015 | 0.9947 | −0.0138 | 0.0483 |
| Permissive, 671 nm | 232 | 0.3015 | 0.0004 | 0.5797 | −0.0158 | 0.0159 |
| Strict, 671 nm | 68 | 0.2411 | 0.0021 | 0.6907 | −0.0139 | 0.0148 |
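
The match-up reductions quoted in the figure captions (about 65% at WaveCIS, 67% at Venise, and slightly more than 70% at MOBY) can be checked against the 5 × 5 match-up counts in Tables 2, 7, and 10. The short sketch below does that arithmetic; the helper name `percent_reduction` is illustrative.

```python
# 5 x 5 match-up counts (permissive, strict) taken from Tables 2, 7, and 10.
MATCHUP_COUNTS = {
    "WaveCIS": (235, 82),
    "Venise": (281, 94),
    "MOBY": (232, 68),
}


def percent_reduction(permissive, strict):
    """Percentage of permissive match-ups removed by the strict protocol."""
    return 100.0 * (permissive - strict) / permissive


for site, (permissive, strict) in MATCHUP_COUNTS.items():
    print(f"{site}: {percent_reduction(permissive, strict):.1f}% fewer match-ups")
```

Run as written, this prints 65.1% for WaveCIS, 66.5% for Venise, and 70.7% for MOBY, consistent with the captions.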