Learning the Incremental Warp for 3D Vehicle Tracking in LiDAR Point Clouds

Abstract

1. Introduction

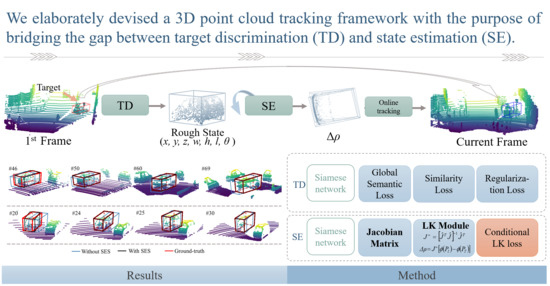

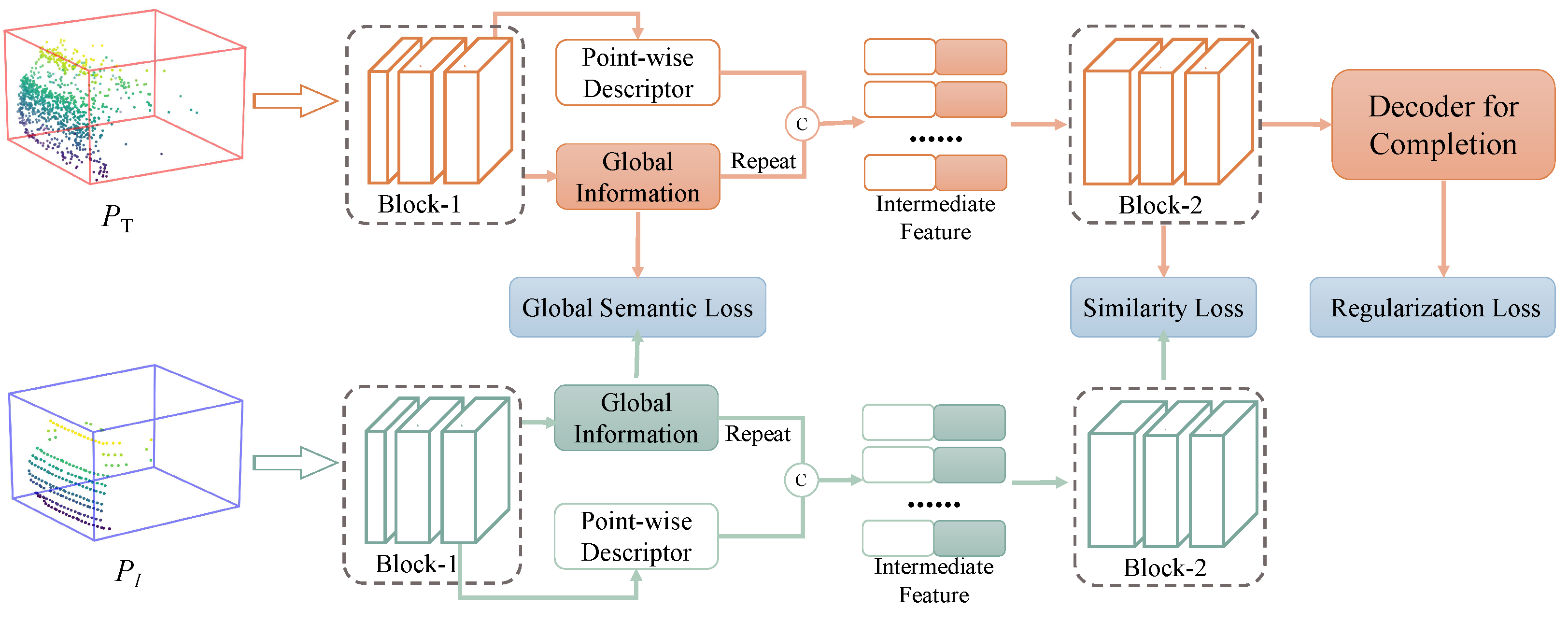

- A novel state estimation subnetwork is designed that extends the 2D Lucas-Kanade (LK) algorithm to 3D point cloud tracking. Built on a Siamese architecture, this subnetwork learns the incremental warp used to refine the coarse target state.
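The LK-style update can be illustrated with a minimal NumPy sketch. It makes several assumptions:

- The warp has four degrees of freedom, p = (tx, ty, tz, yaw), with yaw taken about the z axis.
- The learned Siamese features are replaced by a toy feature: the raw coordinates of a source cloud whose point correspondences are known. This makes the Jacobian analytic.

Each iteration solves the least-squares problem J Δp ≈ φ(template) − φ(W(source; p)) for the incremental warp Δp and adds it to p, which is the forward-additive LK form. The names `warp`, `feature`, `jacobian`, and `lk_refine` are hypothetical and do not come from the authors' code.

```python
import numpy as np

def warp(points, p):
    """Apply a 4-DoF warp p = (tx, ty, tz, yaw) to an (N, 3) point array.

    Assumption: yaw rotates about the z axis (treated as "up").
    """
    tx, ty, tz, yaw = p
    c, s = np.cos(yaw), np.sin(yaw)
    rot = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    return points @ rot.T + np.array([tx, ty, tz])

def feature(points):
    """Toy stand-in for a learned Siamese feature: the flattened coordinates.

    Only meaningful here because the toy example has known point correspondences.
    """
    return points.reshape(-1)

def jacobian(points, p):
    """Analytic d feature(warp(points, p)) / dp, shape (3N, 4)."""
    c, s = np.cos(p[3]), np.sin(p[3])
    x, y = points[:, 0], points[:, 1]
    jac = np.zeros((points.shape[0], 3, 4))
    jac[:, 0, 0] = 1.0                 # d x' / d tx
    jac[:, 1, 1] = 1.0                 # d y' / d ty
    jac[:, 2, 2] = 1.0                 # d z' / d tz
    jac[:, 0, 3] = -s * x - c * y      # d x' / d yaw
    jac[:, 1, 3] = c * x - s * y       # d y' / d yaw
    return jac.reshape(-1, 4)          # rows ordered like feature(): point-major

def lk_refine(template, source, p0, iters=20, tol=1e-10):
    """Forward-additive LK: repeatedly solve J dp = r and update p += dp."""
    p = np.asarray(p0, dtype=float).copy()
    target = feature(template)
    for _ in range(iters):
        residual = target - feature(warp(source, p))
        dp, *_ = np.linalg.lstsq(jacobian(source, p), residual, rcond=None)
        p += dp                        # apply the incremental warp
        if np.linalg.norm(dp) < tol:
            break
    return p

rng = np.random.default_rng(0)
source = rng.normal(size=(64, 3))
true_p = np.array([0.5, -0.3, 0.1, 0.2])
template = warp(source, true_p)
p_hat = lk_refine(template, source, np.zeros(4))
print(np.round(p_hat, 4))
```

In the learned setting, `feature` would be a deep point cloud descriptor. The Jacobian would then come from a network or a numerical approximation rather than from the closed form above.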

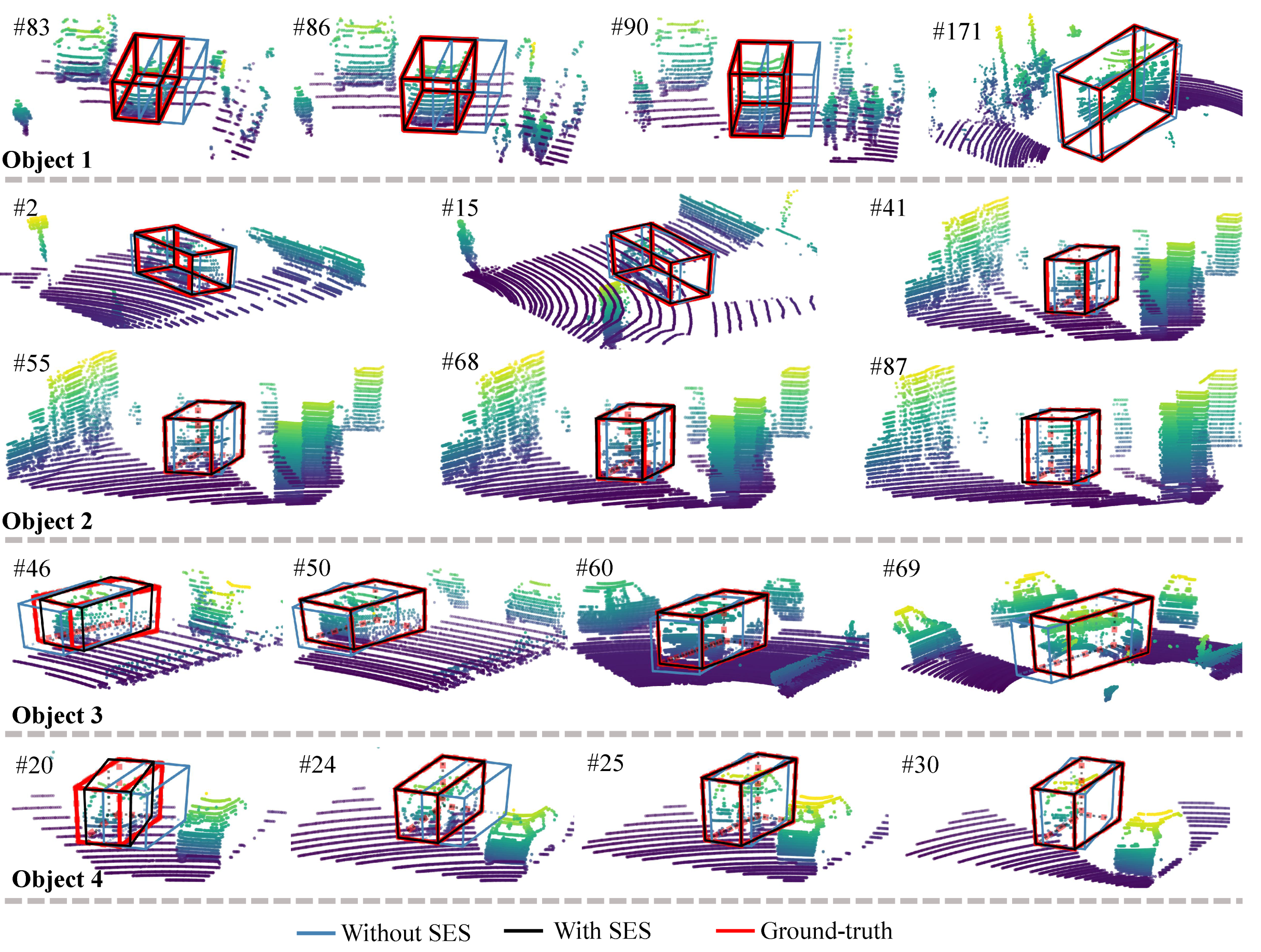

- A simple yet powerful discrimination subnetwork architecture is introduced that projects 3D shapes into a more discriminative latent space by integrating the global semantic feature into each point-wise feature. More importantly, it outperforms the 3D tracker that relies solely on shape completion regularization [2].

- An efficient framework for 3D point cloud tracking is proposed to bridge the performance gap between the state estimation component and the target discrimination component. Owing to the complementarity of these two components, our method improves the success/precision scores on the KITTI tracking dataset from 40.09%/56.17% (the SiamTrack3D baseline) to 53.77%/69.65%, an absolute gain of 13.68/13.48 percentage points.
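A common way to integrate a global feature into each point-wise feature is PointNet-style segmentation fusion: max-pool the per-point features into a global vector, then concatenate that vector back onto every point. The sketch below assumes exactly this operator. The random matrices `w1` and `w2` stand in for trained shared layers, and the layer sizes are arbitrary; the paper's actual fusion operator and dimensions are not given in this text. The names `fuse_global` and `shape_embedding` are hypothetical.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def fuse_global(point_feats):
    """Append the max-pooled global feature to every point-wise feature.

    point_feats: (N, C) per-point features -> returns (N, 2C).
    """
    global_feat = point_feats.max(axis=0)                     # (C,) global summary
    tiled = np.broadcast_to(global_feat, point_feats.shape)   # same vector for each point
    return np.concatenate([point_feats, tiled], axis=1)

def shape_embedding(points, w1, w2):
    """Hypothetical discrimination branch: shared layer -> global fusion -> shared layer -> max-pool."""
    local = relu(points @ w1)            # (N, C) point-wise features
    fused = fuse_global(local)           # (N, 2C) each point now carries global context
    return relu(fused @ w2).max(axis=0)  # (D,) order-invariant shape code

rng = np.random.default_rng(0)
w1 = rng.normal(size=(3, 16))            # random stand-ins for trained weights
w2 = rng.normal(size=(32, 8))
cloud = rng.normal(size=(100, 3))
emb = shape_embedding(cloud, w1, w2)
emb_shuffled = shape_embedding(cloud[rng.permutation(100)], w1, w2)
```

The global vector is identical for every point, so the final max-pool stays invariant to point order. The last line checks this property by shuffling the input cloud.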

2. Related Work

2.1. 2D Object Tracking

2.2. 3D Point Cloud Tracking

2.3. Jacobian Matrix Estimation

3. Method

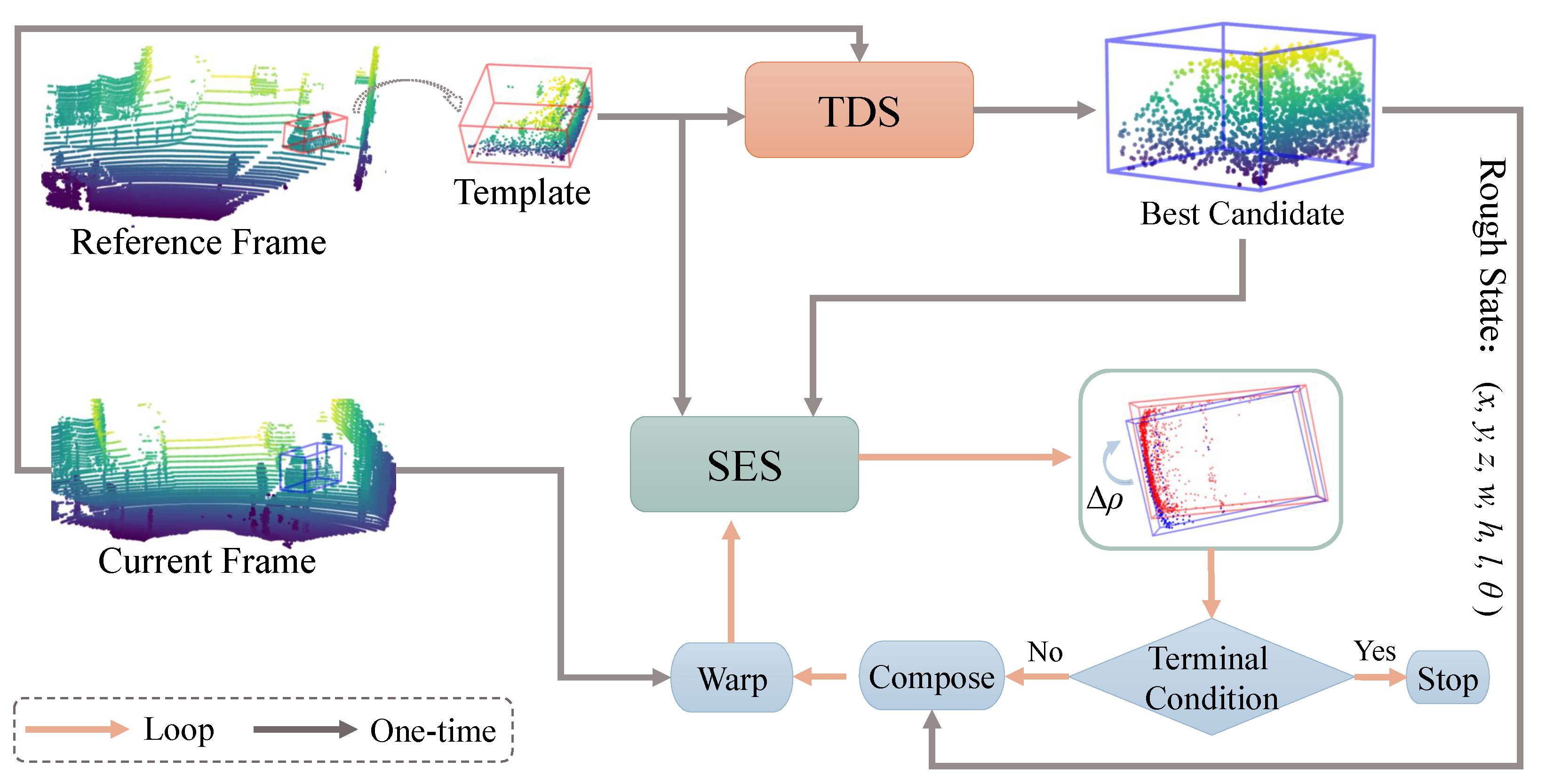

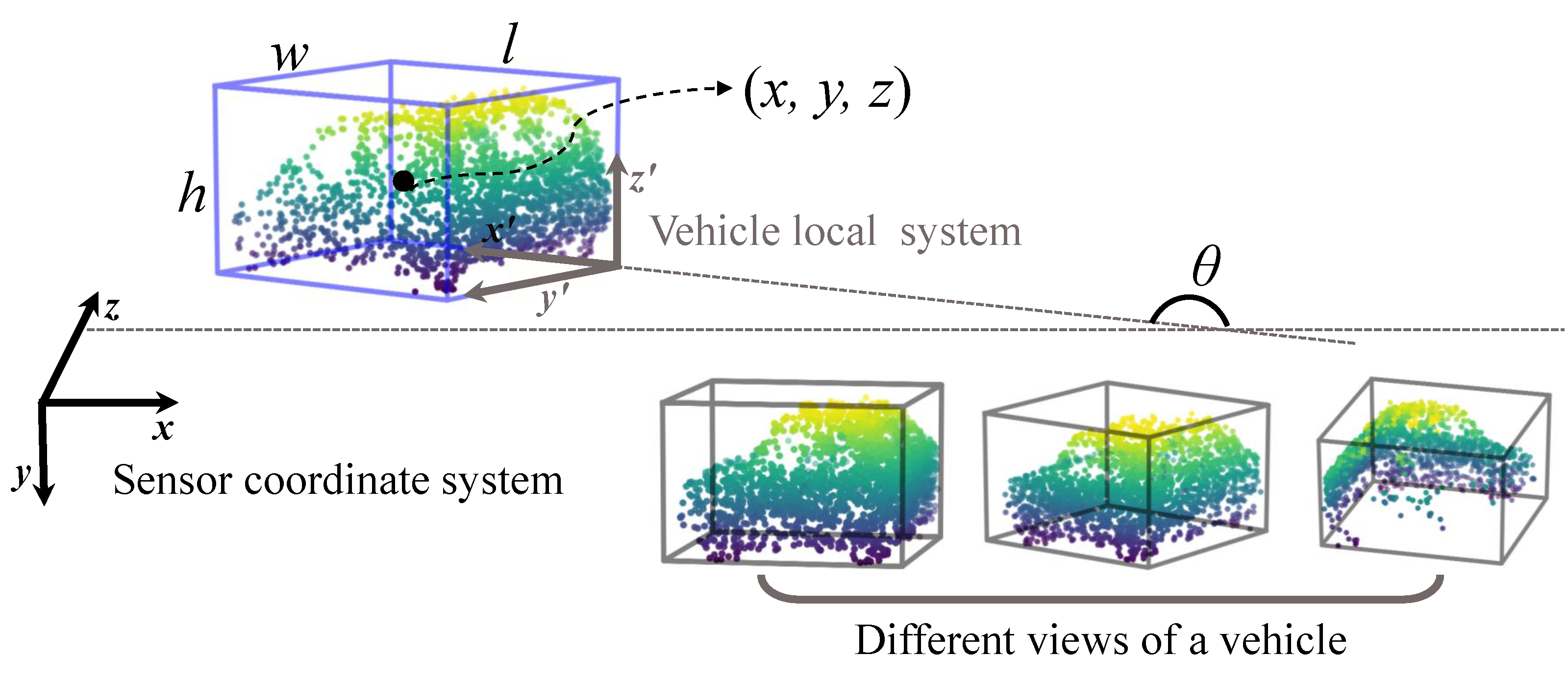

3.1. Overview

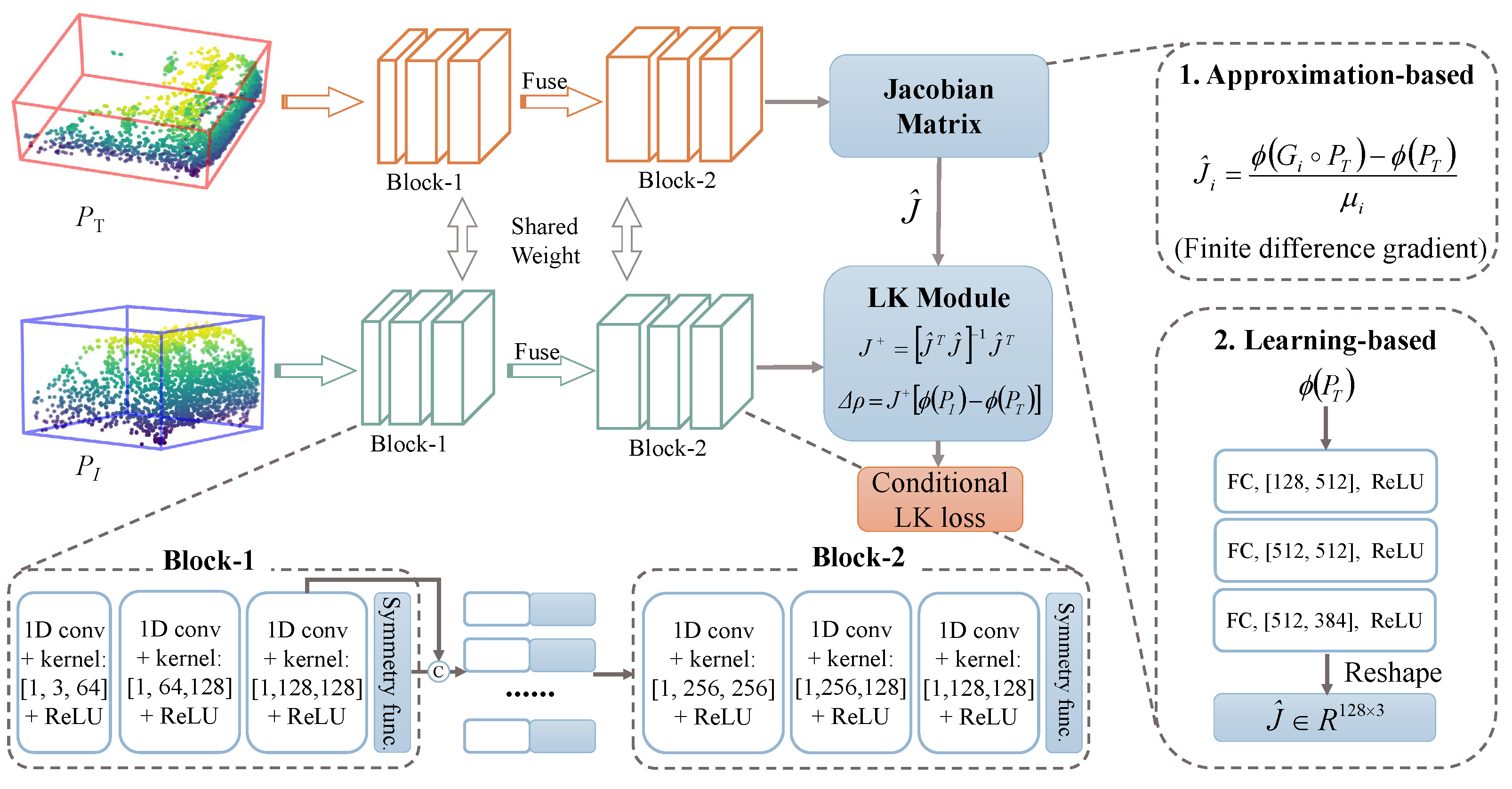

3.2. State Estimation Subnetwork

3.3. Target Discrimination Subnetwork

3.4. Online Tracking

Algorithm 1: SETD online tracking.
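Only the caption of Algorithm 1 survives. From the contributions listed in Section 1 and the ablation variants (TDS, SES, Iter.), one plausible control flow is:

1. Score candidate states around the previous estimate with the discrimination subnetwork.
2. Keep the best-scoring candidate.
3. Refine it by repeatedly applying the incremental warp predicted by the state estimation subnetwork.

The sketch below encodes only this assumed flow. The function `track_frame`, the Gaussian candidate sampling, and the parameters `n_candidates`, `sigma`, and `n_iters` are hypothetical choices. `score_fn` and `refine_fn` are stand-ins for the two subnetworks.

```python
import numpy as np

def track_frame(prev_state, score_fn, refine_fn, n_candidates=100,
                sigma=(0.3, 0.3, 0.1, 0.1), n_iters=3, rng=None):
    """Hypothetical coarse-to-fine step for one frame.

    prev_state: (4,) array (x, y, z, yaw) estimated in the previous frame.
    score_fn:   state -> similarity score (stand-in for the discrimination subnetwork).
    refine_fn:  state -> incremental warp (stand-in for the state estimation subnetwork).
    """
    rng = np.random.default_rng() if rng is None else rng
    prev_state = np.asarray(prev_state, dtype=float)
    # Coarse search: Gaussian candidates around the previous state (which is kept as well).
    offsets = rng.normal(scale=sigma, size=(n_candidates, 4))
    candidates = np.vstack([prev_state, prev_state + offsets])
    scores = np.array([score_fn(c) for c in candidates])
    state = candidates[np.argmax(scores)].copy()
    # Fine refinement: apply the predicted incremental warp a fixed number of times.
    for _ in range(n_iters):
        state = state + refine_fn(state)
    return state

# Toy stand-ins: a hidden true state, a distance-based score,
# and a refiner that closes half of the remaining error per iteration.
truth = np.array([1.0, 0.5, 0.0, 0.05])

def toy_score(state):
    return -np.linalg.norm(state - truth)

def toy_refine(state):
    return 0.5 * (truth - state)

estimate = track_frame(np.zeros(4), toy_score, toy_refine, rng=np.random.default_rng(0))
```

Setting `n_iters=0` corresponds loosely to the non-iterative ("Iter-non") variant in the ablation table.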

4. Experiments

4.1. Evaluation Metrics

4.2. Implementation Details

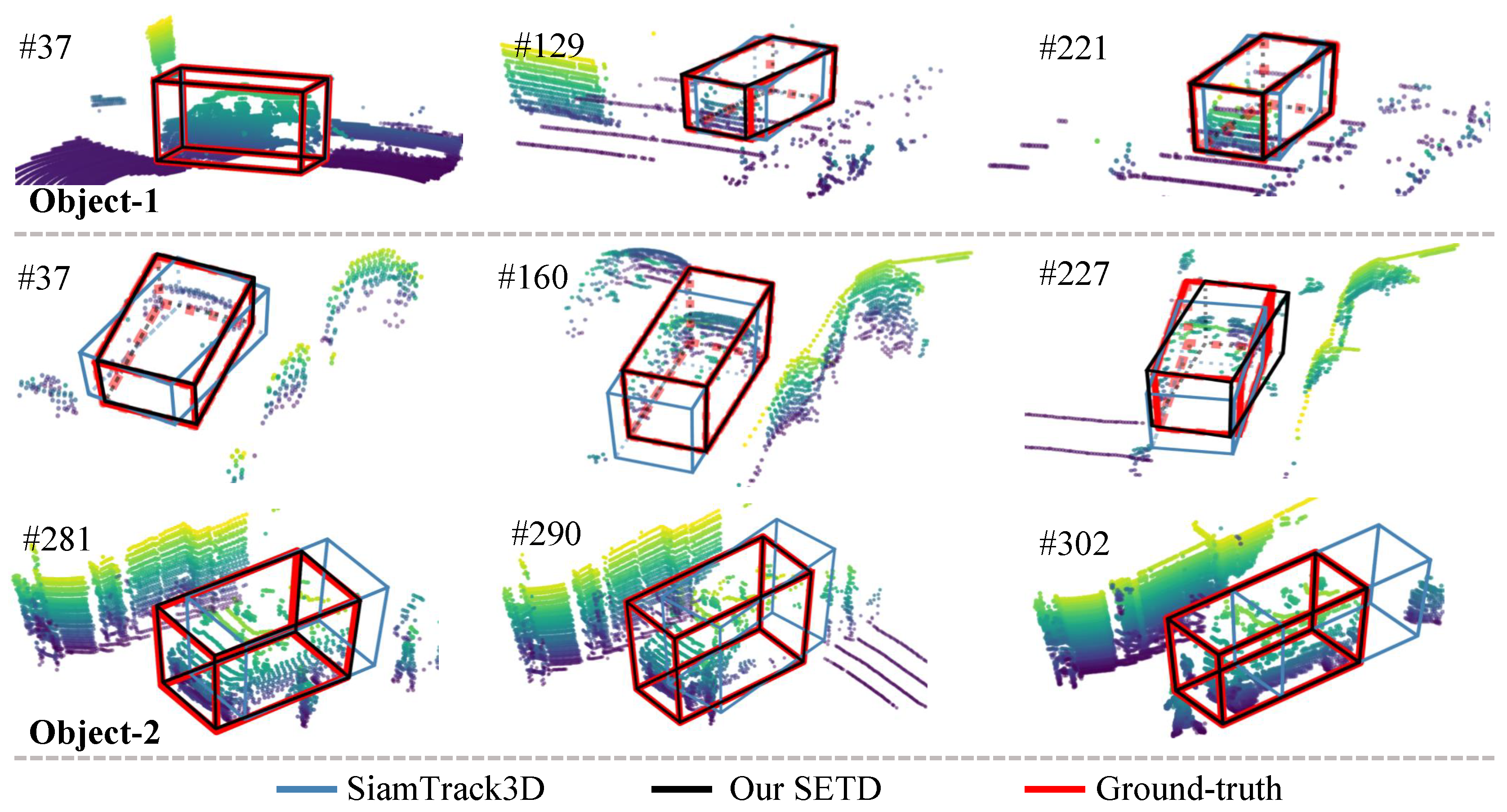

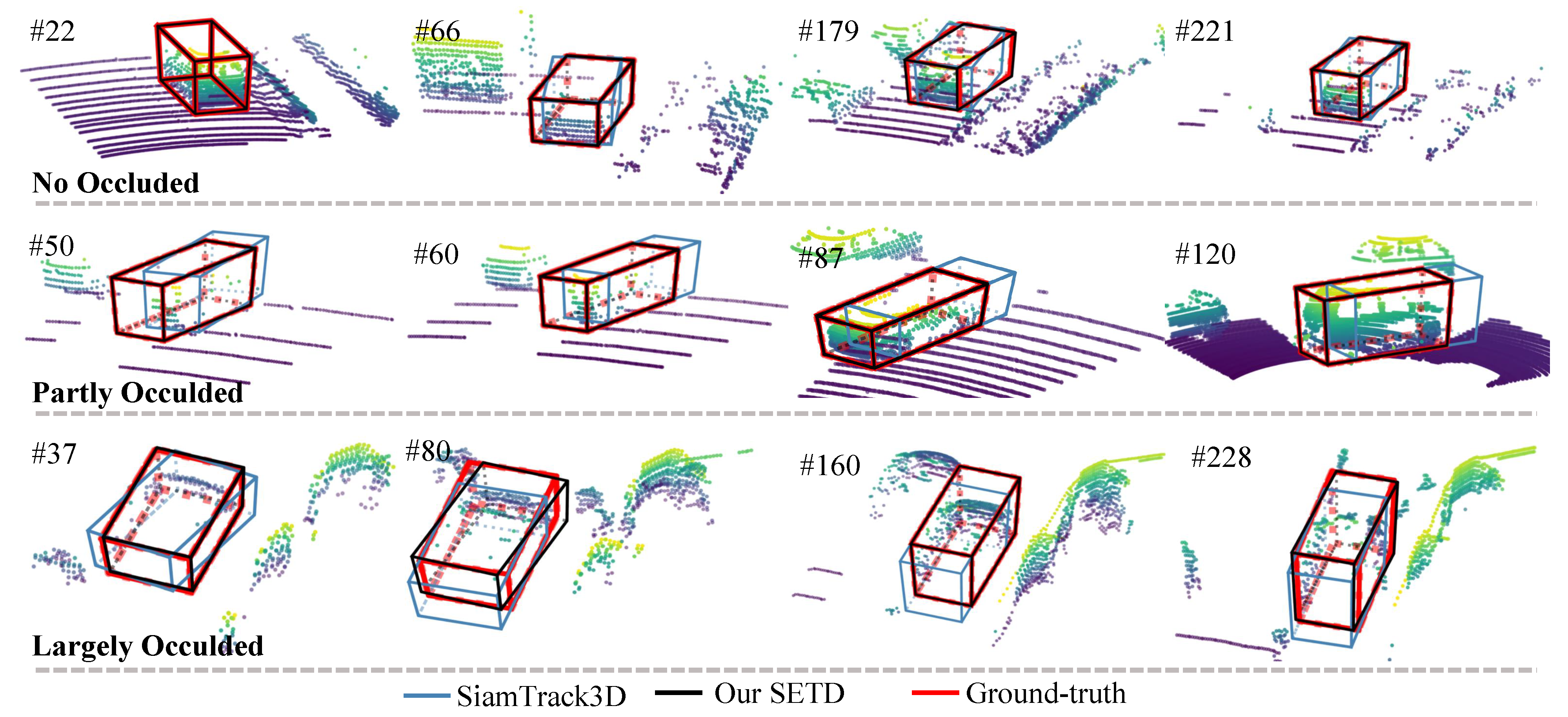

4.3. Performance Comparison

4.3.1. Comparison with Baseline

4.3.2. Comparison with Recent Methods

4.4. Ablation Studies

4.5. Failure Cases

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Details of Approximation-Based Solution

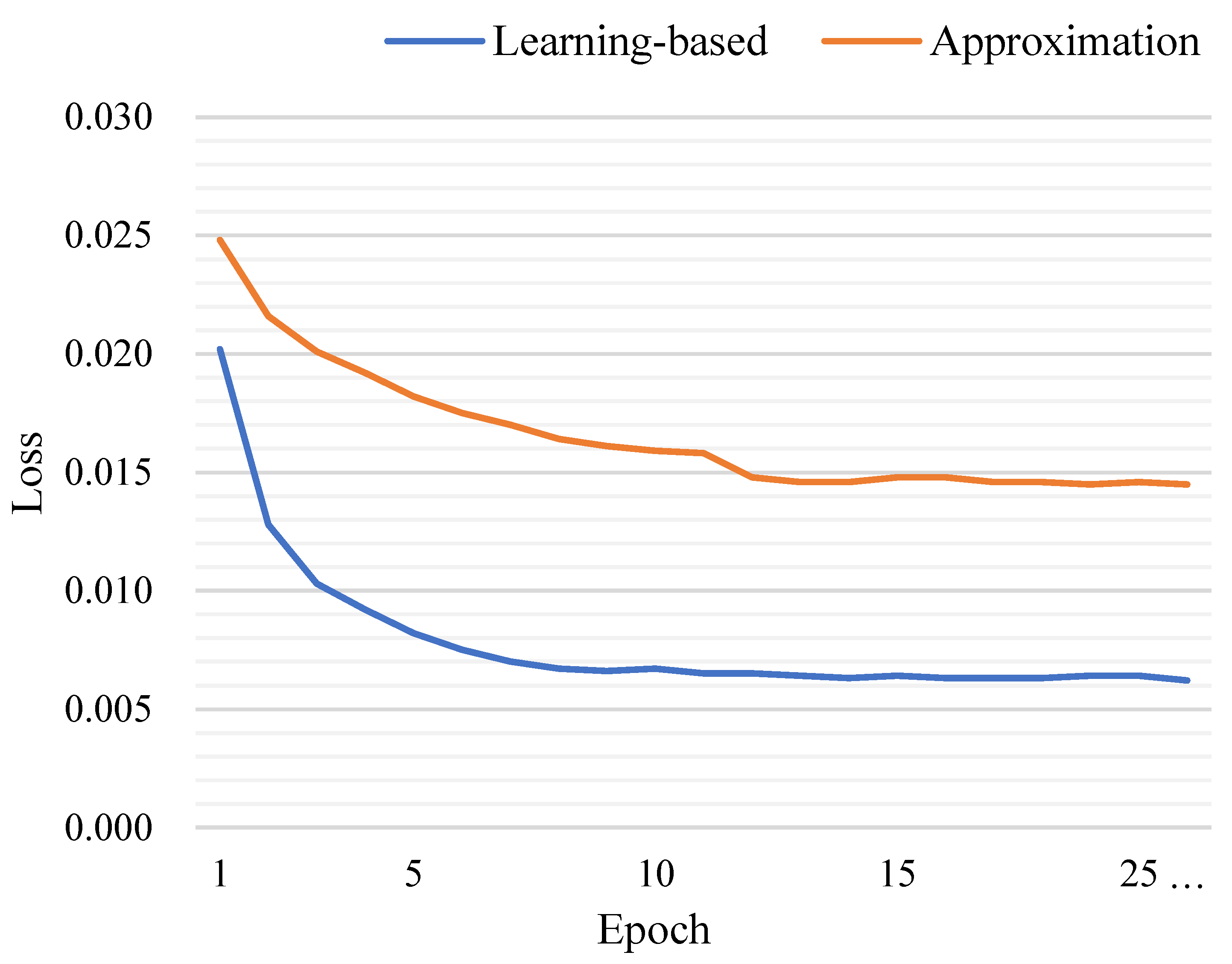

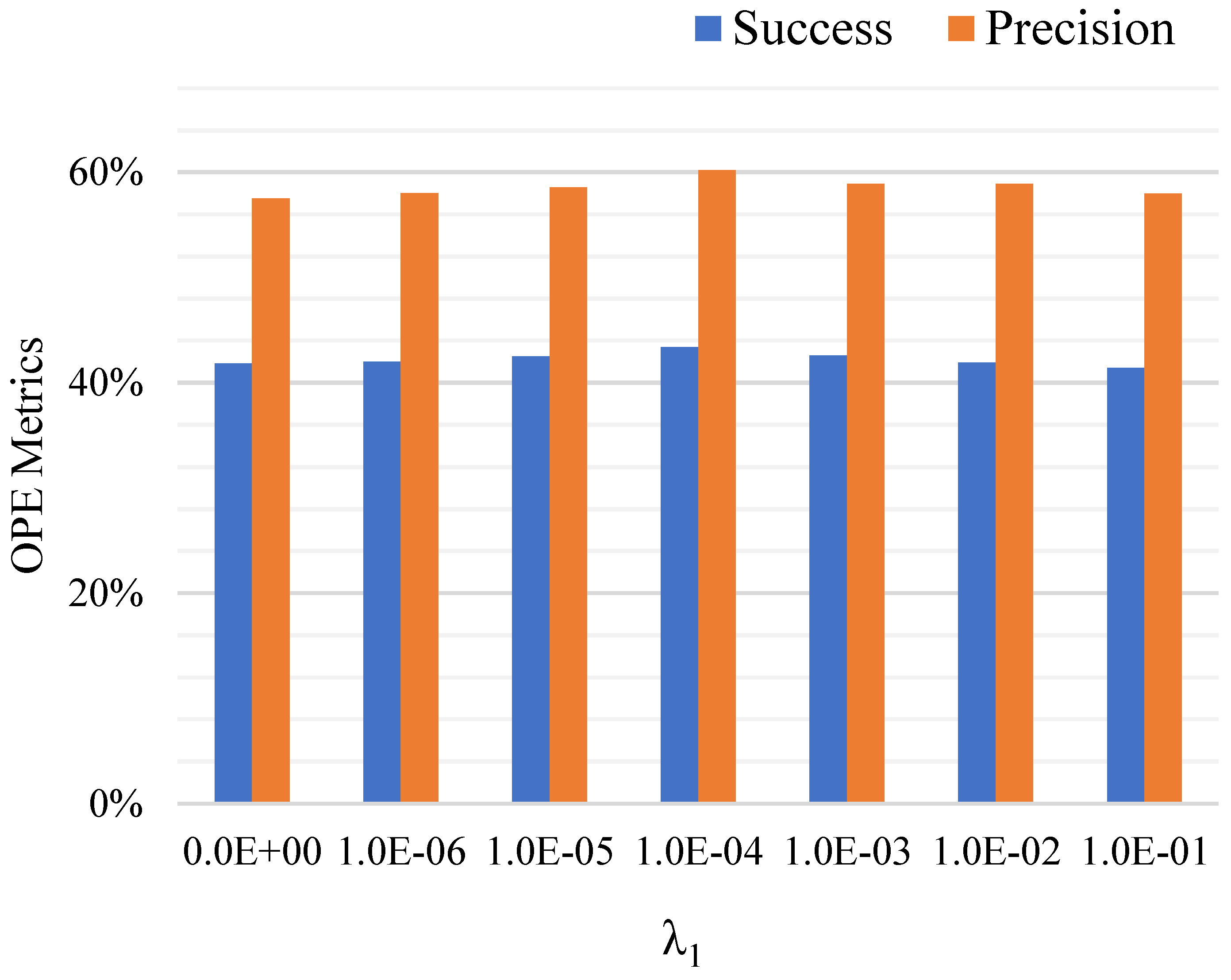

Appendix B. Analysis of the Jacobian Module

References

- Ma, Y.; Anderson, J.; Crouch, S.; Shan, J. Moving Object Detection and Tracking with Doppler LiDAR. Remote Sens. 2019, 11, 1154.

- Giancola, S.; Zarzar, J.; Ghanem, B. Leveraging Shape Completion for 3D Siamese Tracking; CVPR: Long Beach, CA, USA, 2019; pp. 1359–1368.

- Comport, A.I.; Marchand, E.; Chaumette, F. Robust Model-Based Tracking for Robot Vision; IROS: Sendai, Japan, 2004; pp. 692–697.

- Wang, M.; Su, D.; Shi, L.; Liu, Y.; Miró, J.V. Real-time 3D Human Tracking for Mobile Robots with Multisensors; ICRA: Singapore, 2017; pp. 5081–5087.

- Luo, W.; Yang, B.; Urtasun, R. Fast and Furious: Real Time End-to-End 3D Detection, Tracking and Motion Forecasting with a Single Convolutional Net; CVPR: Salt Lake City, UT, USA, 2018; pp. 3569–3577.

- Schindler, K.; Ess, A.; Leibe, B.; Van Gool, L. Automatic detection and tracking of pedestrians from a moving stereo rig. ISPRS J. Photogramm. Remote Sens. 2010, 65, 523–537.

- Nam, H.; Han, B. Learning Multi-Domain Convolutional Neural Networks for Visual Tracking; CVPR: Las Vegas, NV, USA, 2016; pp. 4293–4302.

- Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-Speed Tracking with Kernelized Correlation Filters. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 583–596.

- Bertinetto, L.; Valmadre, J.; Golodetz, S.; Miksik, O.; Torr, P.H.S. Staple: Complementary Learners for Real-Time Tracking; CVPR: Las Vegas, NV, USA, 2016; pp. 1401–1409.

- Liu, Y.; Jing, X.Y.; Nie, J.; Gao, H.; Liu, J.; Jiang, G.P. Context-Aware Three-Dimensional Mean-Shift With Occlusion Handling for Robust Object Tracking in RGB-D Videos. IEEE Trans. Multimed. 2019, 21, 664–676.

- Kart, U.; Kamarainen, J.K.; Matas, J. How to Make an RGBD Tracker? ECCV: Munich, Germany, 2018; pp. 148–161.

- Bibi, A.; Zhang, T.; Ghanem, B. 3D Part-Based Sparse Tracker with Automatic Synchronization and Registration; CVPR: Las Vegas, NV, USA, 2016; pp. 1439–1448.

- Luber, M.; Spinello, L.; Arras, K.O. People Tracking in RGB-D Data With On-Line Boosted Target Models; IROS: San Francisco, CA, USA, 2011; pp. 3844–3849.

- Ku, J.; Mozifian, M.; Lee, J.; Harakeh, A.; Waslander, S.L. Joint 3D Proposal Generation and Object Detection from View Aggregation; IROS: Madrid, Spain, 2018; pp. 1–8.

- Yang, B.; Luo, W.; Urtasun, R. PIXOR: Real-Time 3D Object Detection from Point Clouds; CVPR: Salt Lake City, UT, USA, 2018; pp. 7652–7660.

- Qi, H.; Feng, C.; Cao, Z.; Zhao, F.; Xiao, Y. P2B: Point-to-Box Network for 3D Object Tracking in Point Clouds; CVPR: Seattle, WA, USA, 2020; pp. 6328–6337.

- Qi, C.R.; Litany, O.; He, K.; Guibas, L.J. Deep Hough Voting for 3D Object Detection in Point Clouds; ICCV: Seoul, Korea, 2019; pp. 9276–9285.

- Danelljan, M.; Bhat, G.; Khan, F.S.; Felsberg, M. ATOM: Accurate Tracking by Overlap Maximization; CVPR: Long Beach, CA, USA, 2019; pp. 4655–4664.

- Wang, C.; Galoogahi, H.K.; Lin, C.H.; Lucey, S. Deep-LK for Efficient Adaptive Object Tracking; ICRA: Brisbane, Australia, 2018; pp. 626–634.

- Danelljan, M.; Bhat, G.; Khan, F.S.; Felsberg, M. ECO: Efficient Convolution Operators for Tracking; CVPR: Honolulu, HI, USA, 2017; pp. 6931–6939.

- Wu, Y.; Lim, J.; Yang, M.H. Object Tracking Benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1834–1848.

- Kristan, M.; Leonardis, A.; Matas, J.; Felsberg, M. The Sixth Visual Object Tracking VOT2018 Challenge Results; ECCV: Munich, Germany, 2018; pp. 3–53.

- Valmadre, J.; Bertinetto, L.; Henriques, J.F.; Vedaldi, A.; Torr, P.H.S. End-to-End Representation Learning for Correlation Filter Based Tracking; CVPR: Honolulu, HI, USA, 2017; pp. 5000–5008.

- Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H.S. Fully-Convolutional Siamese Networks for Object Tracking; ECCV: Amsterdam, The Netherlands, 2016; pp. 850–865.

- Held, D.; Thrun, S.; Savarese, S. Learning to Track at 100 FPS with Deep Regression Networks; ECCV: Amsterdam, The Netherlands, 2016; pp. 749–765.

- Jiang, B.; Luo, R.; Mao, J.; Xiao, T.; Jiang, Y. Acquisition of Localization Confidence for Accurate Object Detection; ECCV: Munich, Germany, 2018; pp. 816–832.

- Zhao, S.; Xu, T.; Wu, X.J.; Zhu, X.F. Adaptive feature fusion for visual object tracking. Pattern Recognit. 2021, 111, 107679.

- Bhat, G.; Danelljan, M.; Van Gool, L.; Timofte, R. Learning Discriminative Model Prediction for Tracking; ICCV: Seoul, Korea, 2019; pp. 6181–6190.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation; CVPR: Honolulu, HI, USA, 2017; pp. 77–85.

- Lee, J.; Cheon, S.U.; Yang, J. Connectivity-based convolutional neural network for classifying point clouds. Pattern Recognit. 2020, 112, 107708.

- Zhou, Y.; Tuzel, O. VoxelNet: End-to-End Learning for Point Cloud Based 3D Object Detection; CVPR: Salt Lake City, UT, USA, 2018; pp. 4490–4499.

- Shi, S.; Wang, X.; Li, H. PointRCNN: 3D Object Proposal Generation and Detection from Point Cloud; CVPR: Long Beach, CA, USA, 2019; pp. 770–779.

- Yi, L.; Zhao, W.; Wang, H.; Sung, M.; Guibas, L. GSPN: Generative Shape Proposal Network for 3D Instance Segmentation in Point Cloud; CVPR: Long Beach, CA, USA, 2019; pp. 3942–3951.

- Wang, W.; Yu, R.; Huang, Q.; Neumann, U. SGPN: Similarity Group Proposal Network for 3D Point Cloud Instance Segmentation; CVPR: Salt Lake City, UT, USA, 2018; pp. 2569–2578.

- Song, S.; Xiao, J. Tracking Revisited Using RGBD Camera: Unified Benchmark and Baselines; ICCV: Sydney, Australia, 2013; pp. 233–240.

- Held, D.; Levinson, J.; Thrun, S. Precision Tracking with Sparse 3D and Dense Color 2D Data; ICRA: Karlsruhe, Germany, 2013; pp. 1138–1145.

- Held, D.; Levinson, J.; Thrun, S.; Savarese, S. Robust real-time tracking combining 3D shape, color, and motion. Int. J. Robot. Res. 2016, 35, 30–49.

- Spinello, L.; Arras, K.O.; Triebel, R.; Siegwart, R. A Layered Approach to People Detection in 3D Range Data; AAAI: Atlanta, GA, USA, 2010; pp. 1625–1630.

- Xiao, W.; Vallet, B.; Schindler, K.; Paparoditis, N. Simultaneous detection and tracking of pedestrian from velodyne laser scanning data. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 3, 295–302.

- Zou, H.; Cui, J.; Kong, X.; Zhang, C.; Liu, Y.; Wen, F.; Li, W. F-Siamese Tracker: A Frustum-based Double Siamese Network for 3D Single Object Tracking; IROS: Las Vegas, NV, USA, 2020; pp. 8133–8139.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space; NeurIPS: Long Beach, CA, USA, 2017; pp. 5100–5109.

- Chaumette, F.; Hutchinson, S. Visual servo control, Part I: Basic approaches. IEEE Robot. Autom. Mag. 2006, 13, 82–90.

- Bateux, Q.; Marchand, E.; Leitner, J.; Chaumette, F.; Corke, P. Visual Servoing from Deep Neural Networks. In Proceedings of the Robotics: Science and Systems Workshop, Cambridge, MA, USA, 12–16 July 2017; pp. 1–6.

- Xiong, X.; De la Torre, F. Supervised Descent Method and Its Applications to Face Alignment; CVPR: Portland, OR, USA, 2013; pp. 532–539.

- Lin, C.H.; Zhu, R.; Lucey, S. The Conditional Lucas-Kanade Algorithm; ECCV: Amsterdam, The Netherlands, 2016; pp. 793–808.

- Han, L.; Ji, M.; Fang, L.; Nießner, M. RegNet: Learning the Optimization of Direct Image-to-Image Pose Registration. arXiv 2018, arXiv:1812.10212.

- Baker, S.; Matthews, I. Lucas-Kanade 20 years on: A unifying framework. Int. J. Comput. Vis. 2004, 56, 221–255.

- Aoki, Y.; Goforth, H.; Srivatsan, R.A.; Lucey, S. PointNetLK: Robust & Efficient Point Cloud Registration using PointNet; CVPR: Long Beach, CA, USA, 2019; pp. 7156–7165.

- Girshick, R.B. Fast R-CNN; ICCV: Santiago, Chile, 2015; pp. 1440–1448.

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The KITTI dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

- Hesai, I.S. PandaSet by Hesai and Scale AI. Available online: https://pandaset.org/ (accessed on 24 June 2021).

- Zarzar, J.; Giancola, S.; Ghanem, B. Efficient Bird Eye View Proposals for 3D Siamese Tracking. arXiv 2019, arXiv:1903.10168.

- Besl, P.J.; McKay, N.D. A method for registration of 3-D shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 239–256.

- Sun, X.; Wei, Y.; Liang, S.; Tang, X.; Sun, J. Cascaded Hand Pose Regression; CVPR: Boston, MA, USA, 2015; pp. 824–832.

- Wang, Y.; Sun, Y.; Liu, Z.; Sarma, S.E.; Bronstein, M.M.; Solomon, J.M. Dynamic Graph CNN for Learning on Point Clouds. ACM Trans. Graph. 2019, 38, 1–12.

- Liu, X.; Qi, C.R.; Guibas, L.J. FlowNet3D: Learning Scene Flow in 3D Point Clouds; CVPR: Long Beach, CA, USA, 2019; pp. 529–537.

| Attribute | SiamTrack3D Success (%) | SiamTrack3D Precision (%) | SETD Success (%) | SETD Precision (%) |
|---|---|---|---|---|
| Visible | 37.38 | 55.14 | 53.87 | 68.75 |
| Occluded | 42.45 | 55.90 | 53.76 | 70.33 |
| Static | 38.01 | 53.37 | 54.55 | 70.10 |
| Dynamic | 40.78 | 58.42 | 48.46 | 66.34 |

| Method | 3D Bounding Box Success (%) | 3D Bounding Box Precision (%) | 2D BEV Box Success (%) | 2D BEV Box Precision (%) |
|---|---|---|---|---|
| SiamTrack3D-RPN | 36.30 | 51.00 | - | - |
| AVODTrack | 63.16 | 69.74 | 67.46 | 69.74 |
| P2B | 56.20 | 72.80 | - | - |
| SiamTrack3D | 40.09 | 56.17 | 48.89 | 60.13 |
| SiamTrack3D-Dense | 76.94 | 81.38 | 76.86 | 81.37 |
| ICP&TDS | 15.55 | 20.19 | 17.08 | 20.60 |
| ICP&TDS-Dense | 51.07 | 64.82 | 51.07 | 64.82 |
| SETD | 53.77 | 69.65 | 61.14 | 71.56 |
| SETD-Dense | 81.98 | 88.14 | 81.98 | 88.14 |
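This sketch assumes the Success and Precision columns follow the one-pass evaluation used by the shape-completion tracker [2]:

- **Success** is the area under the curve of the fraction of frames whose 3D IoU with the ground-truth box exceeds a threshold in [0, 1].
- **Precision** is the area under the curve of the fraction of frames whose box-center distance lies within a threshold in [0, 2] m.

The 21-point threshold grid, the trapezoidal integration, and the function names `success_score` and `precision_score` are illustrative assumptions. The per-frame inputs are made-up values, not data from the paper.

```python
import numpy as np

def _auc(curve, thresholds):
    """Trapezoidal area under a curve, normalized by the threshold range (result in [0, 1])."""
    curve = np.asarray(curve, dtype=float)
    widths = np.diff(thresholds)
    area = np.sum(0.5 * (curve[1:] + curve[:-1]) * widths)
    return area / (thresholds[-1] - thresholds[0])

def success_score(ious, n_thresholds=21):
    """Success (%): AUC of the fraction of frames with 3D IoU above each threshold in [0, 1]."""
    ious = np.asarray(ious, dtype=float)
    thresholds = np.linspace(0.0, 1.0, n_thresholds)
    curve = [(ious > t).mean() for t in thresholds]
    return 100.0 * _auc(curve, thresholds)

def precision_score(center_dists, max_dist=2.0, n_thresholds=21):
    """Precision (%): AUC of the fraction of frames with center error within each threshold in [0, max_dist] m."""
    dists = np.asarray(center_dists, dtype=float)
    thresholds = np.linspace(0.0, max_dist, n_thresholds)
    curve = [(dists <= t).mean() for t in thresholds]
    return 100.0 * _auc(curve, thresholds)

# Hypothetical per-frame values for a short sequence (not data from the paper).
ious = [0.82, 0.75, 0.40, 0.05]
dists = [0.10, 0.25, 0.90, 2.50]
print(success_score(ious), precision_score(dists))
```

Because both scores are averaged over whole curves, a tracker can trade a few large misses against many tight fits. This is one reason the table reports both numbers.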

| Method | Easy Success (%) | Easy Precision (%) | Middle Success (%) | Middle Precision (%) | Hard Success (%) | Hard Precision (%) |
|---|---|---|---|---|---|---|
| P2B | 53.49 | 59.97 | 35.76 | 40.56 | 19.13 | 19.64 |
| SiamTrack3D | 51.61 | 62.09 | 40.55 | 49.73 | 25.09 | 30.11 |
| SETD | 54.34 | 65.12 | 42.53 | 49.90 | 24.39 | 28.60 |

| Variants | TDS | SES | Iter. | Success (%) | Precision (%) |
|---|---|---|---|---|---|
| TDS-only | √ | | | 43.36 | 60.19 |
| SES-only | | √ | | 41.09 | 49.70 |
| Iter-non | √ | √ | | 44.67 | 59.13 |
| SETD | √ | √ | √ | 53.77 | 69.65 |

| Descriptor | Learning-Based Success (%) | Learning-Based Precision (%) | Approximation-Based Success (%) | Approximation-Based Precision (%) |
|---|---|---|---|---|
| Block-1 | 51.29 | 66.59 | 46.33 | 61.51 |
| Block-2 | 53.77 | 69.65 | 49.93 | 67.15 |
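The Approximation-Based columns presumably refer to computing the feature Jacobian numerically instead of predicting it with a learned module. The sketch below shows such an approximation, in the spirit of the finite-difference Jacobian used by PointNetLK: it perturbs each warp parameter by a small step and differences the resulting global features. The following are all assumptions for illustration:

- the 4-DoF warp with yaw about the z axis;
- the step size `eps`;
- the random-weight descriptor `pooled_feature`;
- the function names.

```python
import numpy as np

def warp(points, p):
    """4-DoF warp p = (tx, ty, tz, yaw); yaw about the z axis (assumed up)."""
    tx, ty, tz, yaw = p
    c, s = np.cos(yaw), np.sin(yaw)
    rot = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    return points @ rot.T + np.array([tx, ty, tz])

def approx_jacobian(feature_fn, points, p, eps=1e-3):
    """Forward-difference Jacobian of feature_fn(warp(points, p)) w.r.t. p, shape (C, 4)."""
    p = np.asarray(p, dtype=float)
    f0 = feature_fn(warp(points, p))
    jac = np.zeros((f0.size, p.size))
    for i in range(p.size):
        step = np.zeros_like(p)
        step[i] = eps                                   # perturb one warp parameter
        jac[:, i] = (feature_fn(warp(points, p + step)) - f0) / eps
    return jac

def pooled_feature(points, w):
    """Toy global descriptor: shared linear layer + ReLU + max-pool (random weights)."""
    return np.maximum(points @ w, 0.0).max(axis=0)

rng = np.random.default_rng(0)
cloud = rng.normal(size=(128, 3))
w = rng.normal(size=(3, 32))
J = approx_jacobian(lambda pts: pooled_feature(pts, w), cloud, np.zeros(4))
```

Each column costs one extra forward pass of the feature extractor. Its accuracy depends on `eps` and on non-smooth operations such as max-pooling, which is a general trade-off of finite differencing.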

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tian, S.; Liu, X.; Liu, M.; Bian, Y.; Gao, J.; Yin, B. Learning the Incremental Warp for 3D Vehicle Tracking in LiDAR Point Clouds. Remote Sens. 2021, 13, 2770. https://doi.org/10.3390/rs13142770
