1. Introduction

Coastal managers, engineers and scientists need information on the coastal state at small scales, of days to weeks and meters to kilometers [1]. Reasons include determining storm impacts [2], monitoring beach nourishments performed to mitigate coastal erosion [3], recognizing rip currents [4] and estimating the density and daily distribution of beach users during summer [5]. In the early 1980s, video remote sensing systems were introduced for monitoring the coastal zone [6,7,8,9] in order to obtain data at higher temporal resolution, and with lower economic and human effort, than required by traditional field studies.

Qualitative information about beach dynamics [10] or the presence of hydrodynamic [11] and morphological [12] patterns can be obtained from raw video images. Images have also been used quantitatively to locate the shoreline and study its evolution [13,14,15], to determine the intertidal morphology [16,17,18], to estimate the wave period, celerity and propagation direction [19,20] and to infer bathymetries [21,22]. For these latter applications, in which magnitudes in physical space are required, accurate georeferencing of the images is essential [23,24].

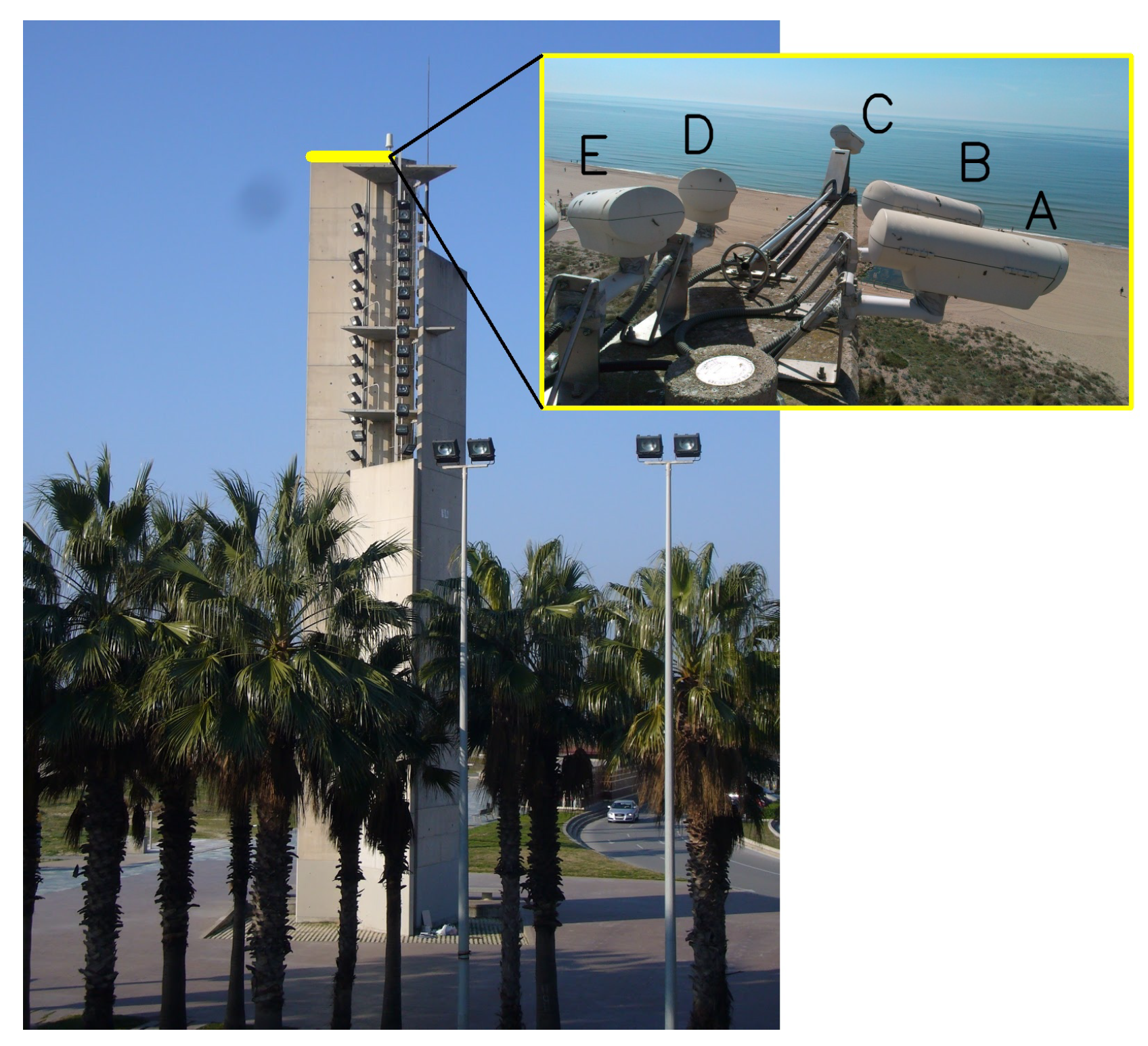

The transformation of images (2D) to real physical space (3D) is usually performed following photogrammetric procedures in which the characteristics of the optics (intrinsic parameters) and the location and orientation of the camera (extrinsic parameters) have to be obtained [25,26]. Intrinsic calibration yields the optical parameters of the camera (distortion, pixel size and decentering) and allows the image distortion induced by the camera lens to be removed. Extrinsic calibration determines the camera position (three coordinates) and orientation (three angles), Figure 1, which makes it possible to associate each pixel of the undistorted image with real-world coordinates (usually, provided that the elevation z is known).
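To make the role of the two parameter sets concrete, the following is a minimal sketch of a distortion-free pinhole camera, assuming the lens distortion has already been removed. The intrinsic matrix (focal length and principal point, in pixels) plays the role of the intrinsic parameters, and the camera position `C` plus a rotation built from three angles play the role of the extrinsic ones. The Z-X-Z angle convention, the function names and all numeric values are illustrative assumptions, not the paper's. The last function shows why the elevation z must be given: a pixel only defines a ray, which is intersected with the plane of known elevation.

```python
import numpy as np

def rot_z(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def rot_x(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])

def rotation(azimuth, tilt, roll):
    # World-to-camera rotation from three angles (radians). The Z-X-Z
    # composition is an assumed convention, not necessarily the paper's.
    return rot_z(roll) @ rot_x(tilt) @ rot_z(azimuth)

def intrinsic_matrix(f, u0, v0):
    # Distortion-free pinhole: focal length f and principal point (u0, v0), in pixels.
    return np.array([[f, 0.0, u0], [0.0, f, v0], [0.0, 0.0, 1.0]])

def world_to_pixel(X, K, R, C):
    # Project (n, 3) world points seen from camera position C; returns (n, 2) pixels.
    uvw = K @ R @ (np.asarray(X, float) - C).T
    return (uvw[:2] / uvw[2]).T

def pixel_to_world(uv, z, K, R, C):
    # Intersect the ray through each pixel with the horizontal plane of elevation z.
    uv = np.atleast_2d(np.asarray(uv, float))
    rays = np.linalg.inv(K) @ np.vstack([uv.T, np.ones(len(uv))])  # camera frame
    d = R.T @ rays                      # ray directions in the world frame
    t = (z - C[2]) / d[2]               # ray parameter at which elevation z is reached
    return (C[:, None] + t * d).T

# Illustrative (made-up) calibration: camera 25 m above the datum, tilted downward.
K = intrinsic_matrix(1500.0, 960.0, 540.0)
R = rotation(np.radians(30.0), np.radians(120.0), np.radians(2.0))
C = np.array([0.0, 0.0, 25.0])
X = np.array([[40.0, 60.0, 0.0], [-10.0, 80.0, 0.0]])
uv = world_to_pixel(X, K, R, C)
X_back = pixel_to_world(uv, 0.0, K, R, C)   # recovers X because z = 0 is supplied
```

Without the elevation, `pixel_to_world` would have no way to choose a point along the ray, which is why image-to-world mapping is usually done on a plane of prescribed z.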

Extrinsic calibrations are obtained using Ground Control Points (GCPs, pixels whose real-world coordinates are known). GCPs can also be used for intrinsic calibration, although the latter is often obtained experimentally in the laboratory [27,28,29]. Generally, Argus-like video monitoring systems are fully (intrinsically and extrinsically) calibrated at the time of installation, and extrinsic calibrations are then repeated at a given frequency (e.g., biannually [14]) or whenever significant camera movement is noticed.
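As an illustration of how GCPs constrain a calibration, the sketch below fits a 3x4 projection matrix to n ≥ 6 non-coplanar GCPs with the normalized Direct Linear Transform (DLT) and then recovers the camera position as the null vector of that matrix. This is a swapped-in textbook technique chosen for brevity; Argus-like stations typically use nonlinear least-squares calibration, and nothing here should be read as the procedure used in this paper. Function names are hypothetical.

```python
import numpy as np

def normalizing_transform(p):
    # Hartley-style normalization: move the centroid to the origin and scale
    # so the mean distance to it is sqrt(dim); improves DLT conditioning.
    c = p.mean(axis=0)
    s = np.sqrt(p.shape[1]) / np.mean(np.linalg.norm(p - c, axis=1))
    T = np.eye(p.shape[1] + 1)
    T[:-1, :-1] *= s
    T[:-1, -1] = -s * c
    return T

def homogeneous(p):
    return np.hstack([p, np.ones((len(p), 1))])

def dlt_projection_matrix(X, uv):
    """Fit P (3x4) with uv ~ P [X; 1] to n >= 6 non-coplanar GCPs (DLT)."""
    X, uv = np.asarray(X, float), np.asarray(uv, float)
    T3, T2 = normalizing_transform(X), normalizing_transform(uv)
    Xn = homogeneous(X) @ T3.T
    uvn = homogeneous(uv) @ T2.T
    rows = []
    for Xh, (u, v, _) in zip(Xn, uvn):
        # Each GCP gives two linear equations in the 12 entries of P.
        rows.append(np.concatenate([Xh, np.zeros(4), -u * Xh]))
        rows.append(np.concatenate([np.zeros(4), Xh, -v * Xh]))
    _, _, Vt = np.linalg.svd(np.array(rows))
    Pn = Vt[-1].reshape(3, 4)            # singular vector of the smallest singular value
    return np.linalg.inv(T2) @ Pn @ T3   # undo the normalization

def project(P, X):
    uvw = homogeneous(np.asarray(X, float)) @ P.T
    return uvw[:, :2] / uvw[:, 2:3]

def camera_position(P):
    # The camera position is the null vector of P in homogeneous coordinates.
    _, _, Vt = np.linalg.svd(P)
    return Vt[-1][:3] / Vt[-1][3]
```

The DLT degenerates when all GCPs lie on one plane (for instance, all on a flat beach at the same elevation), which is one reason why well-distributed, non-coplanar GCPs are preferred.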

However, it has been observed that calibration parameters change throughout the day for a variety of reasons, including thermal and wind effects [30,31], as well as over longer periods due to natural factors and/or human disturbance [31,32]. If the calibration of each individual image is not adjusted, the quantitative information obtained may contain significant errors, leading to inaccurate quantification of shoreline trends, of hydrodynamic data such as longshore currents, wave celerity or runup and, in turn, of nearshore bathymetries.

Although the importance of intra-day fluctuations was already reported by Holman and Stanley [7] in 2007, this problem has been disregarded in most studies based on coastal video monitoring systems. Recently, Bouvier et al. [31] analyzed the variations, over one year, of the orientation angles of each camera in a five-camera station. From the manual calibration of about 400 images per camera, they identified the primary environmental parameters (solar azimuthal angle and cloudiness) affecting the image displacements and developed an empirical model that successfully corrected the camera motions.

This approach has the disadvantage that it does not automatically correct variations over long periods of time, in addition to requiring the manual calibration of a large number of images. To calibrate as many images as possible while minimizing human intervention, the strategy followed in other studies [30,32,33,34] has been to automatically identify objects and use their locations in calibrated images to stabilize the remaining images.

Pearre and Puleo [30] located, in other images, features within selected Regions Of Interest (ROIs) of a calibrated distorted image, obtaining the relative camera displacements between images, and then recalculated the orientation of the cameras (tilt and azimuth angles) for each image. The relative shifts of the ROIs were obtained by finding the peak of the correlation matrices. Accurate recognition of the pixels corresponding to GCPs in images, using automatic algorithms such as SIFT (Scale-Invariant Feature Transform [35,36]) or SURF (Speeded-Up Robust Features [37]), made it possible not only to re-orient the cameras but also to compute the extrinsic calibration parameters of each individual image [33,34].
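The correlation-peak idea can be sketched in a few lines. The example below, which is not Pearre and Puleo's implementation (their details are not given here), estimates the integer-pixel shift between a reference ROI and the same ROI in another image as the location of the maximum of their circular cross-correlation, computed through the FFT. It assumes the ROI content is simply translated between images; rotation, scale changes or sub-pixel accuracy would need additional steps. The function name is hypothetical.

```python
import numpy as np

def roi_shift(reference, current):
    """Integer-pixel displacement (dy, dx) of `current` relative to `reference`,
    taken as the peak of their circular cross-correlation (computed via FFT)."""
    a = np.asarray(reference, float)
    b = np.asarray(current, float)
    a, b = a - a.mean(), b - b.mean()   # remove the mean so brightness offsets do not dominate
    corr = np.fft.ifft2(np.conj(np.fft.fft2(a)) * np.fft.fft2(b)).real
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    # Peaks beyond half the ROI size correspond to negative shifts (wrap-around).
    return tuple(int(p) if p <= n // 2 else int(p) - n for p, n in zip(peak, corr.shape))

rng = np.random.default_rng(0)
ref = rng.random((64, 64))                     # stand-in for a textured ROI
cur = np.roll(ref, (3, -5), axis=(0, 1))       # same ROI moved 3 px down, 5 px left
shift = roi_shift(ref, cur)
```

Once such shifts are known for several ROIs, they can be converted into small corrections of the camera angles, which is the re-orientation step described above.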

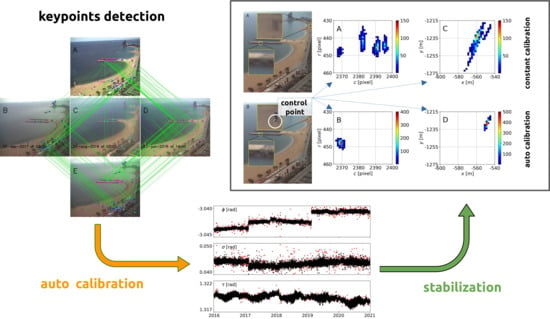

Recently, Rodriguez-Padilla et al. [32] proposed a method to stabilize 5 years of images from an Argus-like station by identifying fixed elements in the images and then correcting the orientation of the cameras by computing deviations with respect to a reference image. In that study, the CED (Canny Edge Detector [38]) was used to identify permanent features, such as corners or salients, under variable lighting conditions within given ROIs. All image stabilization studies carried out to date in the coastal zone have assumed that identifiable features are permanently present, and have used them to correct the orientation of the cameras or to carry out the complete calibration of the extrinsic parameters. However, in many Argus-like stations installed in natural environments, such as beaches or estuaries, fixed features are very scarce or absent over long periods.

In this paper, we explore image calibration by automatically identifying arbitrary features, i.e., without pre-selection, in the images to be calibrated and in previously calibrated images. Since fixed features are assumed to be very limited, the images cannot be calibrated with the standard GCP approach used in [24]. Instead, we relate the pixels of pairs of images through homographies, the main assumption of this work being that the camera position is nearly invariant.
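The reason a fixed camera position allows pixels to be related by a homography can be checked numerically. For undistorted pixels, with x_cam = R (X − C), a world point X appears at h1 ~ K1 R1 (X − C) in one image and at h2 ~ K2 R2 (X − C) in another taken from the same position C, so h2 ~ K2 R2 R1ᵀ K1⁻¹ h1 regardless of how far away X is. The sketch below verifies this for points at different distances while both the rotation and the intrinsic matrix change between images. It assumes an ideal pinhole camera with lens distortion already removed; the angle convention, names and values are illustrative assumptions.

```python
import numpy as np

def rot_x(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])

def rot_z(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def intrinsic_matrix(f, u0, v0):
    return np.array([[f, 0.0, u0], [0.0, f, v0], [0.0, 0.0, 1.0]])

def project(K, R, C, X):
    # Distortion-free pinhole projection of (n, 3) world points.
    uvw = K @ R @ (X - C).T
    return (uvw[:2] / uvw[2]).T

def fixed_position_homography(K1, R1, K2, R2):
    # Same camera position C in both images: h2 ~ K2 R2 R1^T K1^-1 h1,
    # independent of the depth of the observed point.
    return K2 @ R2 @ R1.T @ np.linalg.inv(K1)

def apply_homography(H, uv):
    q = np.hstack([uv, np.ones((len(uv), 1))]) @ H.T
    return q[:, :2] / q[:, 2:3]

C = np.array([0.0, 0.0, 25.0])                       # shared (fixed) camera position
K1 = intrinsic_matrix(1500.0, 960.0, 540.0)
R1 = rot_x(np.radians(120.0)) @ rot_z(np.radians(30.0))
K2 = intrinsic_matrix(1520.0, 955.0, 545.0)          # slightly different intrinsics
R2 = rot_z(np.radians(0.3)) @ rot_x(np.radians(120.5)) @ rot_z(np.radians(30.2))
X = np.array([[40.0, 60.0, 0.0], [-10.0, 80.0, 0.0], [20.0, 150.0, 3.0], [5.0, 40.0, 1.0]])
H = fixed_position_homography(K1, R1, K2, R2)
uv1, uv2 = project(K1, R1, C, X), project(K2, R2, C, X)
```

If the camera position also moved between the two images, the mapping would depend on each point's depth and no single homography could relate the images, which is why the near-invariance of the camera position is the key assumption.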

In return, no constraint needs to be imposed on either the intrinsic calibration parameters of the camera (lens distortion, pixel size and decentering) or its rotation. The automatic camera calibration was applied to three video monitoring stations. Two of them operate on beaches of the city of Barcelona (Spain), where there are many fixed and permanent features, and the third on the beach of Castelldefels, southwest of Barcelona, where the number of fixed points is very limited.

The main aim of this paper is to present a methodology that, starting from a small set of manually calibrated images, automatically calibrates images without the need to prescribe reference objects, and to evaluate its feasibility. Next, Section 2 presents the basics of mapping pixels corresponding to arbitrary objects between images and the methodology to process points in pairs of images in order to obtain the calibration of an image automatically. Section 3 presents the results, which are discussed in Section 4. Section 5 draws our main conclusions.

5. Conclusions

In this paper, an automatic calibration procedure was proposed to stabilize images from video monitoring stations. The proposed methodology is based on well-known feature detection and matching algorithms and enables massive automatic calibration of the images of an Argus camera, given a set, or basis, of calibrated images. From a computer vision point of view, the single hypothesis supporting the approach is that the camera position can be regarded as nearly constant. In the cases considered here (Argus-like stations), we showed that the intrinsic parameters and the camera position can actually be considered constant (case 0). However, the proposed procedure is also able to handle the case in which the intrinsic calibration parameters change in time, which makes the approach valid for CoastSnap stations.

The number of images in the basis can be chosen arbitrarily (here, through the required pairs, ) and the larger it is, the more images can be properly calibrated. All automatic calibrations are performed directly from the basis of images, i.e., second- or higher-order generations of automatic calibrations have not been considered, in order to avoid error accumulation. If the calibrations are to be used to analyze the water zone (e.g., for bathymetric inversion), we recommend including the horizon line as an input in the basis calibration.

The proposed methodology offers the automatic calibration of an image together with the homography error f and the number of pairs K that give a measure of the reliability of the calibration itself. Imposing and , the percentage of calibrated images ranges from for the worst conditioned case (Castelldefels beach, with very few features) to (high resolution cameras in Barcelona, where there are plenty of fixed features), the errors in pixels being significantly reduced (e.g., from to in the analyzed case).
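One plausible way to obtain a homography and an associated error from matched feature pixels is sketched below: a normalized DLT fit of H from n ≥ 4 matches, followed by the RMS transfer error (in pixels) of the matches under H. This is an assumption for illustration. The exact definition of the homography error f used in this paper, and its thresholds on f and K, are not reproduced here; the acceptance rule simply takes both thresholds as parameters, and all names are hypothetical. In practice, matches from SIFT/SURF-type detectors contain outliers, so a robust wrapper (e.g., RANSAC) would normally surround the fit.

```python
import numpy as np

def normalizing_transform(p):
    # Hartley-style normalization of 2D points (centroid at origin, mean distance sqrt(2)).
    c = p.mean(axis=0)
    s = np.sqrt(2.0) / np.mean(np.linalg.norm(p - c, axis=1))
    return np.array([[s, 0.0, -s * c[0]], [0.0, s, -s * c[1]], [0.0, 0.0, 1.0]])

def homogeneous(p):
    return np.hstack([p, np.ones((len(p), 1))])

def estimate_homography(p1, p2):
    """Normalized DLT: H with p2 ~ H p1, from n >= 4 matched pixel pairs."""
    p1, p2 = np.asarray(p1, float), np.asarray(p2, float)
    T1, T2 = normalizing_transform(p1), normalizing_transform(p2)
    a, b = homogeneous(p1) @ T1.T, homogeneous(p2) @ T2.T
    rows = []
    for (x, y, w), (u, v, _) in zip(a, b):
        rows.append([x, y, w, 0.0, 0.0, 0.0, -u * x, -u * y, -u * w])
        rows.append([0.0, 0.0, 0.0, x, y, w, -v * x, -v * y, -v * w])
    _, _, Vt = np.linalg.svd(np.array(rows))
    H = np.linalg.inv(T2) @ Vt[-1].reshape(3, 3) @ T1   # undo the normalization
    return H / H[2, 2]

def transfer_error(H, p1, p2):
    """RMS distance (pixels) between p2 and the points p1 mapped through H."""
    q = homogeneous(np.asarray(p1, float)) @ H.T
    q = q[:, :2] / q[:, 2:3]
    return float(np.sqrt(np.mean(np.sum((q - np.asarray(p2, float)) ** 2, axis=1))))

def is_reliable(f, n_pairs, f_max, n_min):
    # Hypothetical acceptance rule: small homography error and enough pairs.
    return f <= f_max and n_pairs >= n_min
```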