Inverse Synthetic Aperture Radar Sparse Imaging Exploiting the Group Dictionary Learning

Abstract

1. Introduction

2. Imaging Model

2.1. Model of ISAR Measurements

2.2. Sparse Imaging Model

3. DL-Based Sparse Imaging

3.1. Off-Line DL-Based Sparse Imaging

3.2. On-Line DL-Based Sparse Imaging

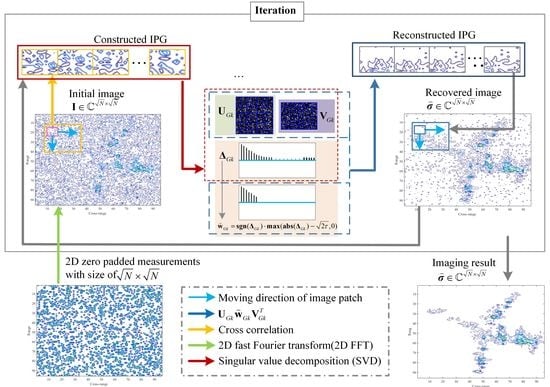

4. GDL-Based Sparse Imaging

4.1. Construction of Image Patch Group

4.2. ISAR Image Patch Group Based Imaging Model

4.3. Group Dictionary Learning Based Sparse Imaging

4.4. Group Dictionary Learning

4.5. Group Sparse Representation and Target Image Reconstruction

5. Experimental Results

5.1. Imaging Data and Parameters

5.2. Image Quality Evaluation

5.3. Imaging Results of Real Data

5.4. Quantitative Evaluation of Image Quality

5.5. Discussion on the Parameter Setting

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix B

| Data | Raw data size (S_r_data) | Sampling ratio S_ratios (measurements) | Sparsity |
|---|---|---|---|
| Plane data | 100 × 80 | 25% (2000), 50% (4000) | 900 |
| Ship data | 96 × 96 | 50% (4608) | 841 |
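
The measurement counts in the table above appear to follow directly from the raw data size and the sampling ratio. The short sketch below checks that arithmetic; it assumes the count is simply rows × columns × ratio, and the names `datasets` and `measurement_count` are illustrative rather than taken from the paper.

```python
# Raw data sizes and sampling ratios copied from the table above.
datasets = {
    "Plane data": {"shape": (100, 80), "ratios": [0.25, 0.50]},
    "Ship data": {"shape": (96, 96), "ratios": [0.50]},
}


def measurement_count(shape, ratio):
    """Assumed relation: retained samples = rows * cols * sampling ratio."""
    rows, cols = shape
    return round(rows * cols * ratio)


# Number of measurements for every dataset and sampling ratio.
counts = {
    name: [measurement_count(d["shape"], r) for r in d["ratios"]]
    for name, d in datasets.items()
}

for name, values in counts.items():
    print(name, values)
```

Under that assumption the computed values match the counts listed in parentheses in the table.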

| Data | P_size | P_step | l | | | |
|---|---|---|---|---|---|---|
| Plane data 1 | 64 | 2 | 16 × 16 | 64 | 17 | 0.02 |
| Plane data 2 | 64 | 2 | 16 × 16 | 64 | 17 | 0.03 |
| Ship data 1 | 64 | 2 | 16 × 16 | 64 | 17 | 0.035 |

| Data | Method | FA | MD | TCR (dB) | ENT | IC | Time (s) |
|---|---|---|---|---|---|---|---|
| Plane data 1 | GKF | 144 | 209 | 50.0179 | 5.3205 | 8.0850 | 1.3408 × 10 |
| Plane data 1 | ONDL | 170 | 173 | 48.6525 | 5.4674 | 7.7229 | 8.2370 |
| Plane data 1 | OFDL | 173 | 130 | 48.9775 | 5.5550 | 7.5045 | 5.9370 |
| Plane data 1 | GDL | 70 | 102 | 57.1222 | 5.0482 | 9.5376 | 3.3620 |
| Plane data 2 | GKF | 86 | 133 | 55.5930 | 5.3800 | 8.1449 | 1.221 × 10 |
| Plane data 2 | ONDL | 45 | 187 | 53.4593 | 5.3152 | 8.4299 | 7.2510 |
| Plane data 2 | OFDL | 55 | 125 | 59.9737 | 5.3395 | 8.3847 | 5.8510 |
| Plane data 2 | GDL | 52 | 104 | 60.3972 | 5.2580 | 8.7278 | 3.3620 |
| Ship data 1 | GKF | 88 | 132 | 56.3161 | 5.6036 | 7.5965 | 1.3301 × 10 |
| Ship data 1 | ONDL | 155 | 126 | 53.6296 | 5.6388 | 7.3239 | 6.3620 |
| Ship data 1 | OFDL | 148 | 154 | 52.5180 | 5.7109 | 7.3772 | 4.3620 |
| Ship data 1 | GDL | 20 | 55 | 68.4828 | 5.1813 | 9.1454 | 4.1526 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hu, C.; Wang, L.; Zhu, D.; Loffeld, O. Inverse Synthetic Aperture Radar Sparse Imaging Exploiting the Group Dictionary Learning. Remote Sens. 2021, 13, 2812. https://doi.org/10.3390/rs13142812
