A Wheat Spike Detection Method in UAV Images Based on Improved YOLOv5

Abstract

1. Introduction

2. Materials and Methods

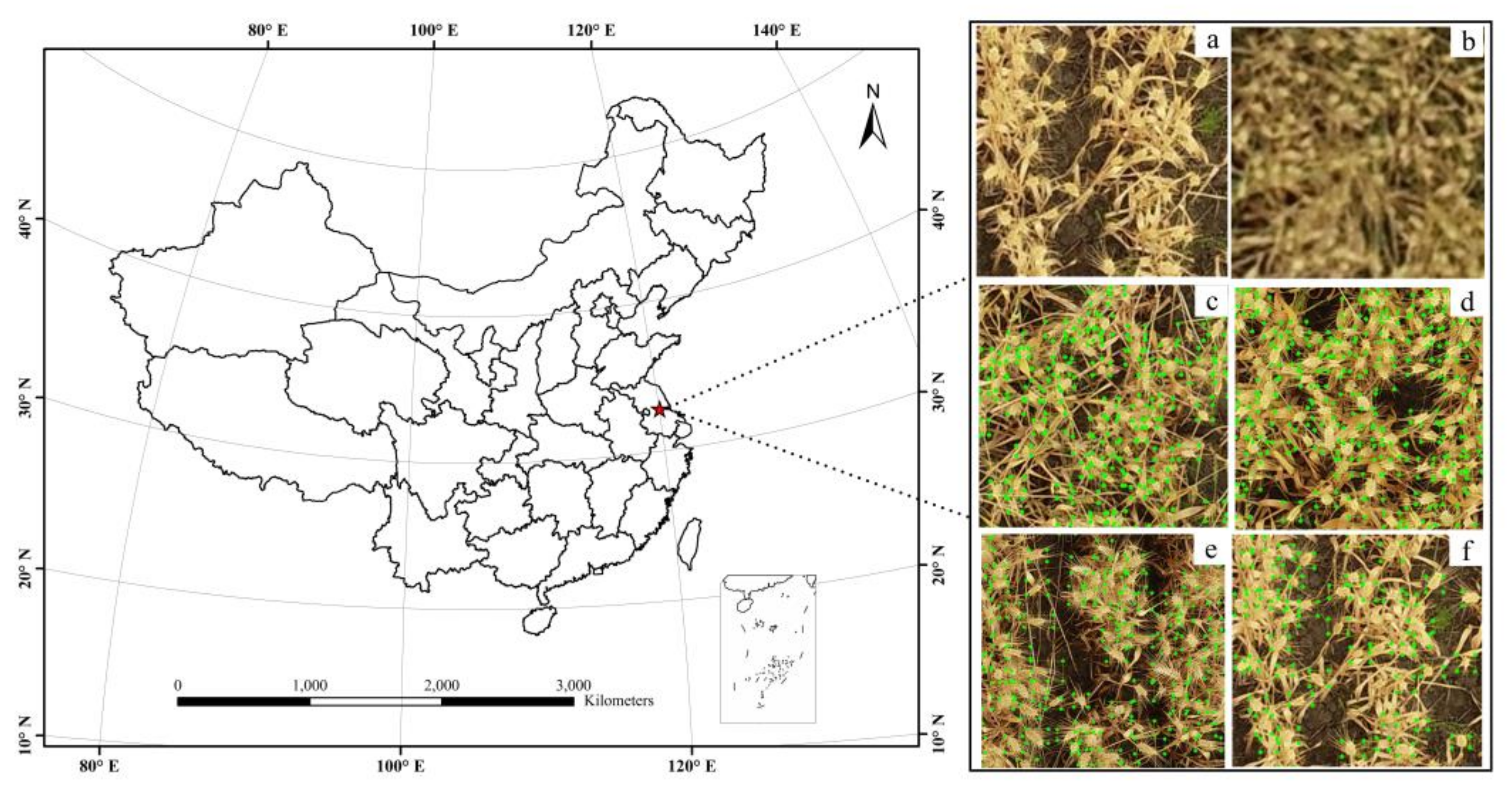

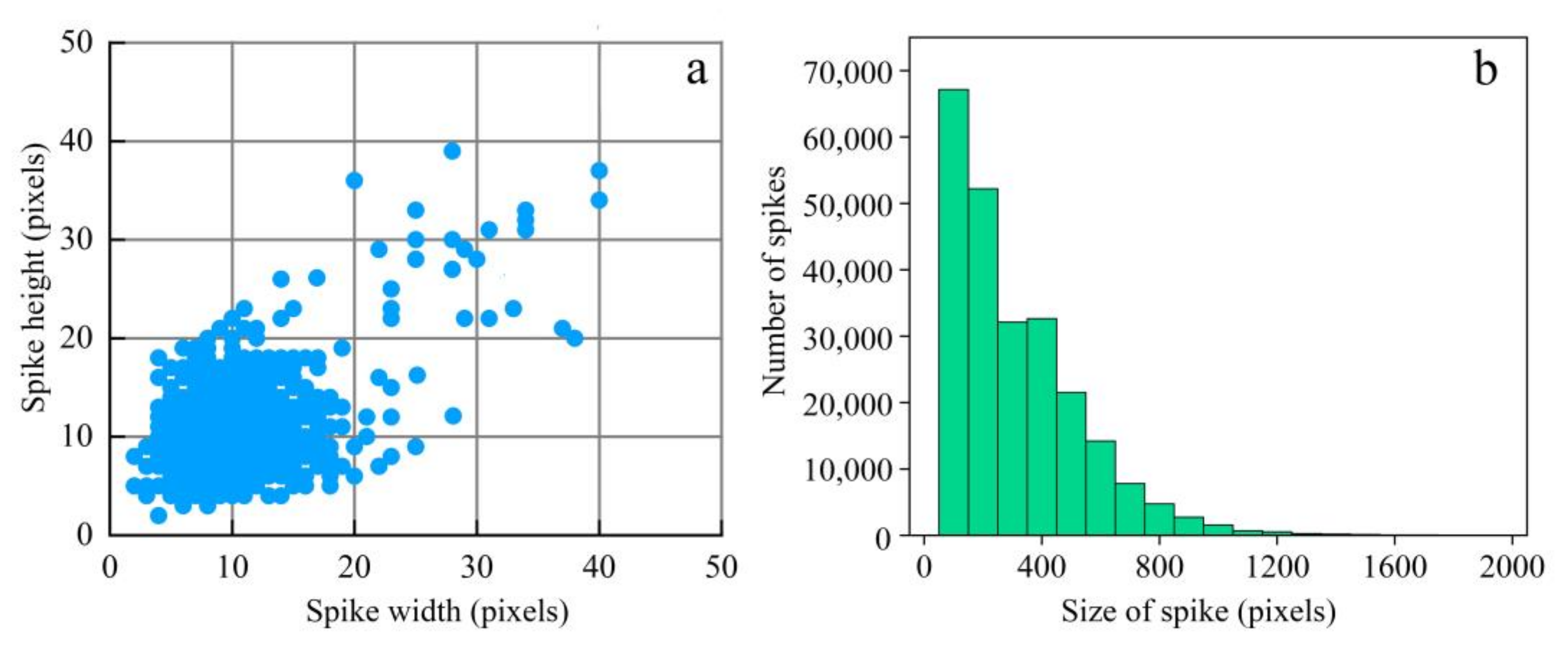

2.1. UAV Wheat Spike Images

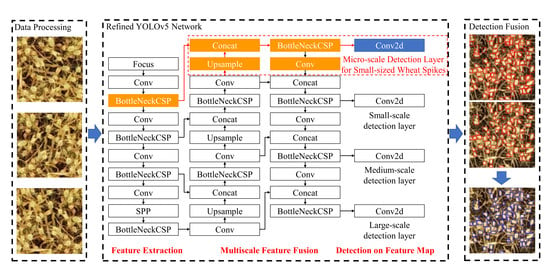

2.2. Wheat Spike Detection Method

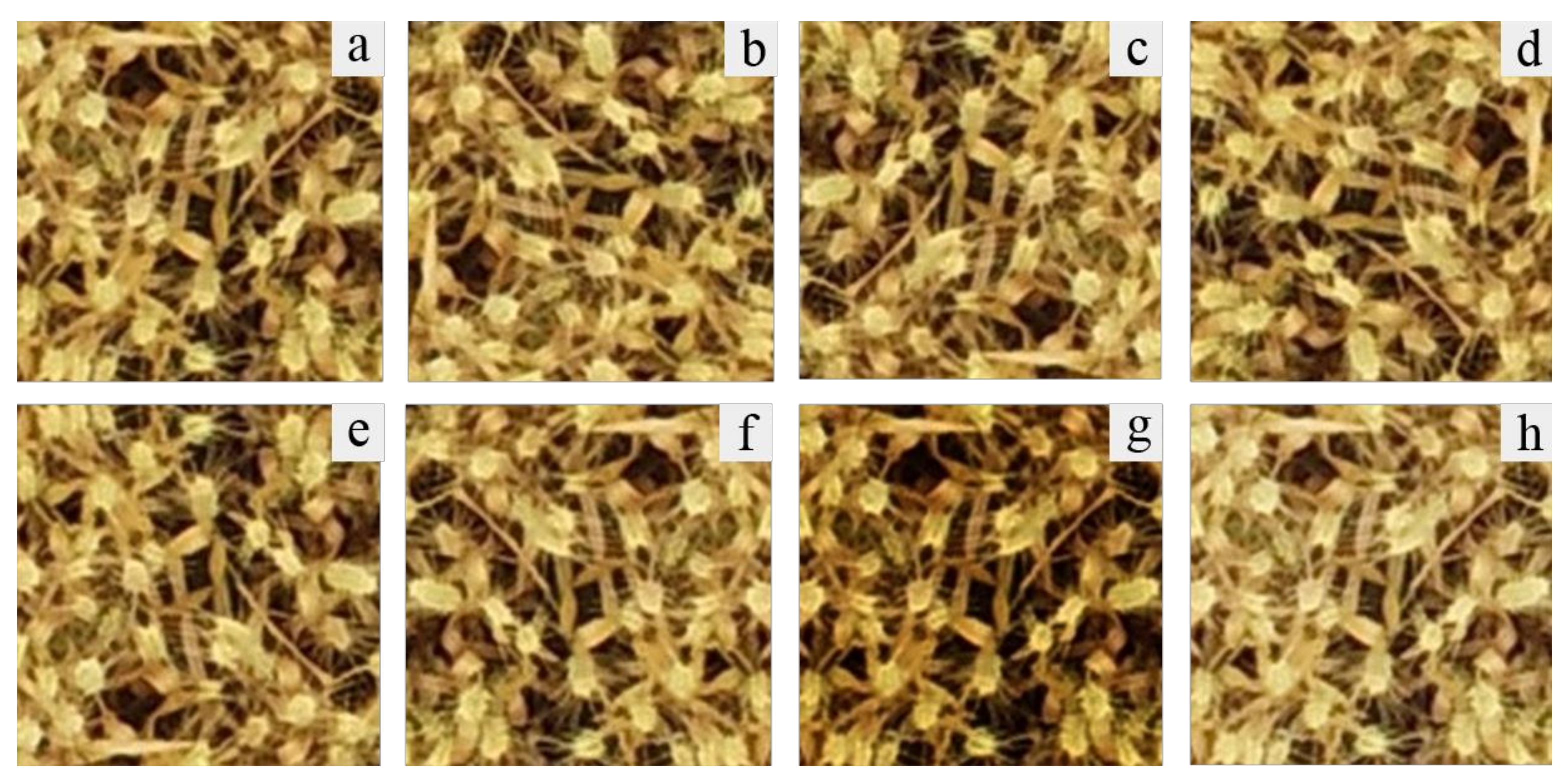

2.3. Data Augmentation

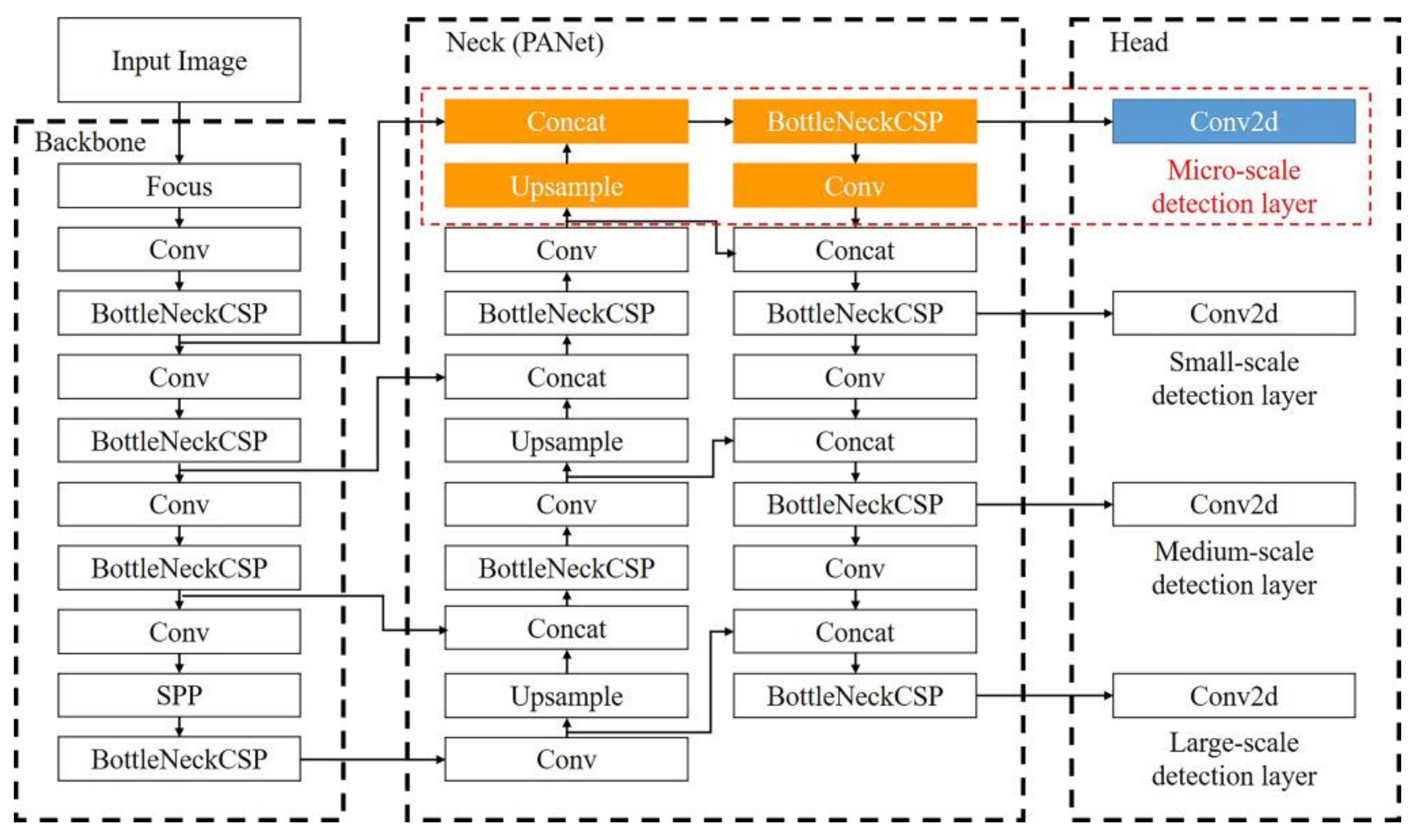

2.4. YOLOv5 Network Structure and Refinements

2.4.1. Microscale Detection Layer

2.4.2. Hierarchical Setting of Anchor Box Size Based on k-Means

| Algorithm 1. The procedure for setting sizes of anchors |
|---|
| Input: ground truth boxes |
| Output: anchor boxes |
| 1: Select S cluster center points of anchor boxes |
| 2: repeat |
| 3: Step: |
| 4: Calculate the distance between each ground truth box and each cluster center by Equations (1) and (2) |
| 5: Recalculate the S cluster centers by Equations (3) and (4) |
| 6: until clusters converge |
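The loop in Algorithm 1 can be illustrated with a minimal sketch. Equations (1) to (4) are not reproduced in this section, so the sketch assumes the distance commonly used for YOLO anchor clustering, d = 1 − IoU between box sizes aligned at a common corner, and a mean update for the cluster centers. The function names `iou_wh` and `kmeans_anchors` and the parameters `max_iter` and `seed` are hypothetical, and the hierarchical, per-layer assignment of anchors is not modeled here.

```python
import numpy as np


def iou_wh(boxes, centers):
    """IoU between (N, 2) box sizes and (S, 2) center sizes, corner-aligned."""
    inter = (np.minimum(boxes[:, None, 0], centers[None, :, 0])
             * np.minimum(boxes[:, None, 1], centers[None, :, 1]))
    area_boxes = boxes[:, 0] * boxes[:, 1]
    area_centers = centers[:, 0] * centers[:, 1]
    return inter / (area_boxes[:, None] + area_centers[None, :] - inter)


def kmeans_anchors(boxes, s, max_iter=100, seed=0):
    """Cluster (width, height) pairs into s anchor sizes.

    Assumption: distance = 1 - IoU and mean update, standing in for
    Equations (1)-(4), which are not shown in this section.
    """
    boxes = np.asarray(boxes, dtype=float)
    rng = np.random.default_rng(seed)
    # Step 1: select S initial cluster centers from the ground truth boxes.
    centers = boxes[rng.choice(len(boxes), size=s, replace=False)].copy()
    for _ in range(max_iter):
        # Step 4: distance between every box and every cluster center.
        dist = 1.0 - iou_wh(boxes, centers)
        assign = dist.argmin(axis=1)
        # Step 5: recalculate each center from its assigned boxes.
        new_centers = centers.copy()
        for k in range(s):
            members = boxes[assign == k]
            if len(members) > 0:
                new_centers[k] = members.mean(axis=0)
        # Step 6: stop once the centers no longer move.
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    # Return anchors sorted from smallest to largest area.
    return centers[np.argsort(centers.prod(axis=1))]


if __name__ == "__main__":
    # Toy ground truth sizes in pixels (illustrative values only).
    gt = [[10, 10], [11, 9], [9, 11], [40, 40], [42, 38], [38, 42]]
    print(kmeans_anchors(gt, s=2))
```

Sorting the result by area makes it straightforward to hand the smallest anchors to the finest detection layer, which is one plausible reading of the hierarchical setting named in the heading.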

2.4.3. Improvement of Confidence Loss Function of Detection Layer Based on IoU

| Algorithm 2. The procedure of setting weights for confidence loss ei |
|---|
| Input: a set of UAV images I |
| Output: weights of detection layers |
| 1: Input the images I into the network for training |
| 2: repeat |
| 3: Step: |
| 4: Calculate p and qm for detection layers |
| 5: until training epochs reach K |
| 6: Calculate pi and for each detection layer Di |
| 7: Normalize final weights of detection layers by Equations (7) and (8) |

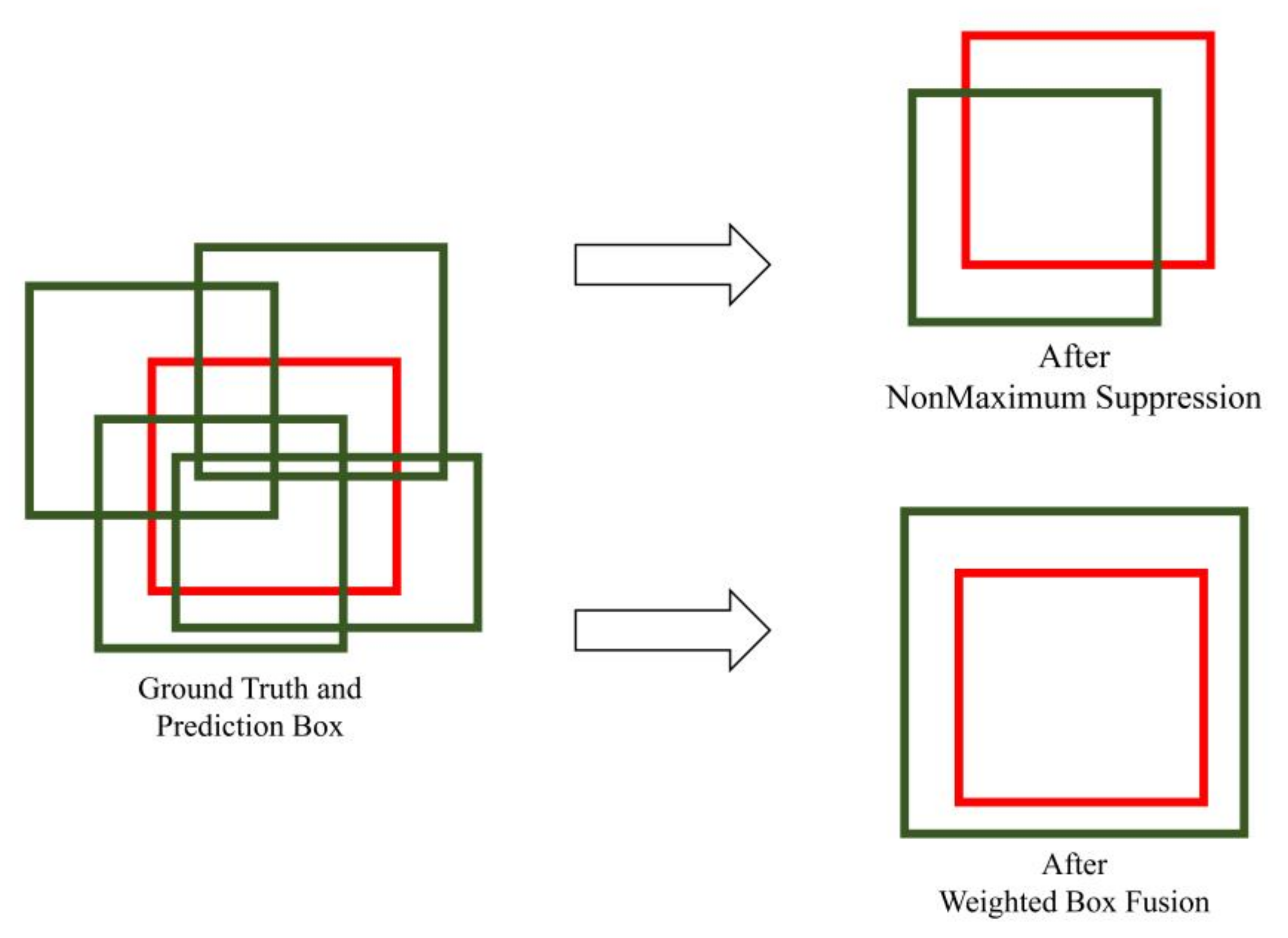

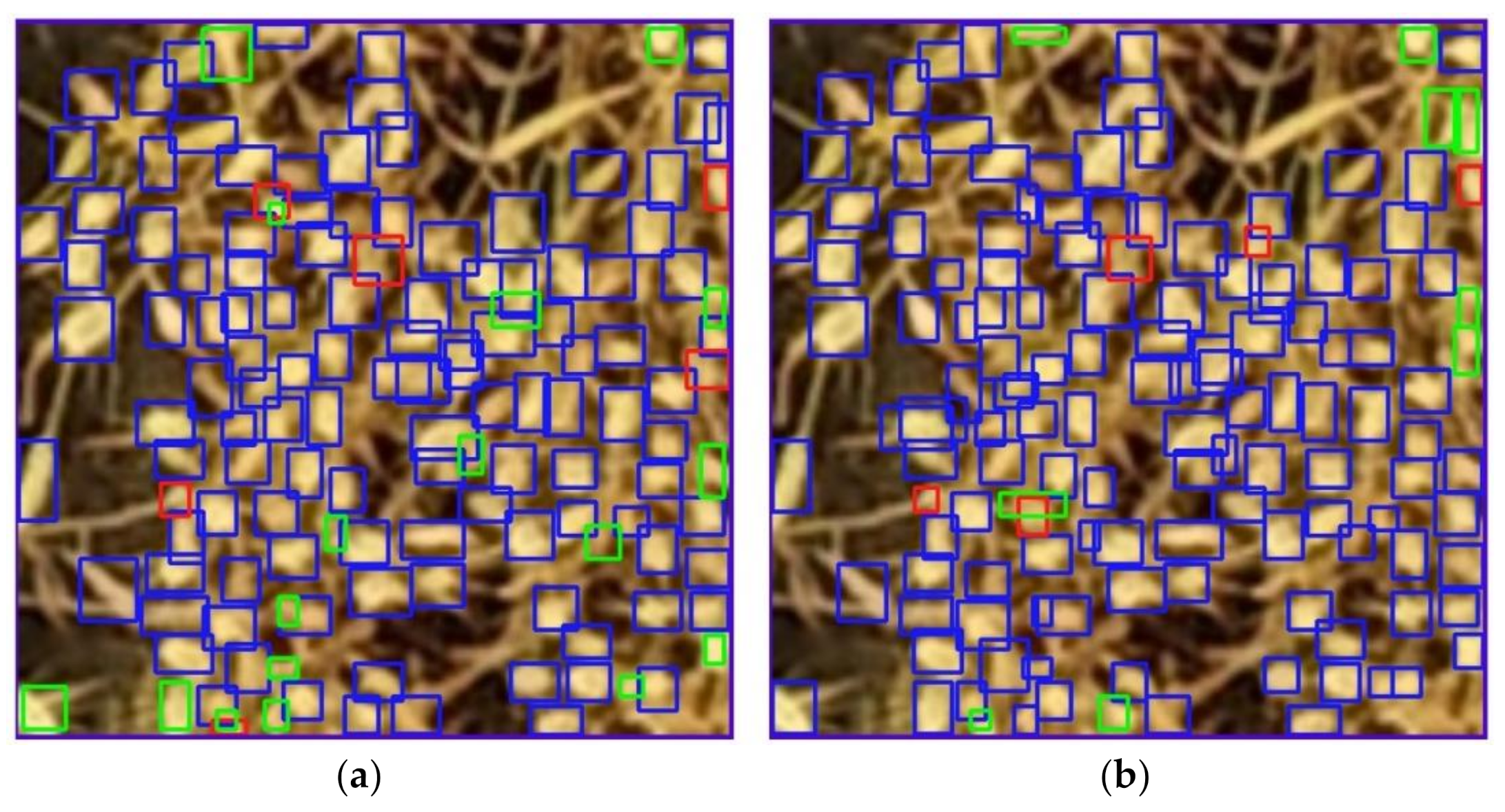

2.4.4. Detection Box Fusion Based on Confidence Weight

3. Experimental Setup and Results

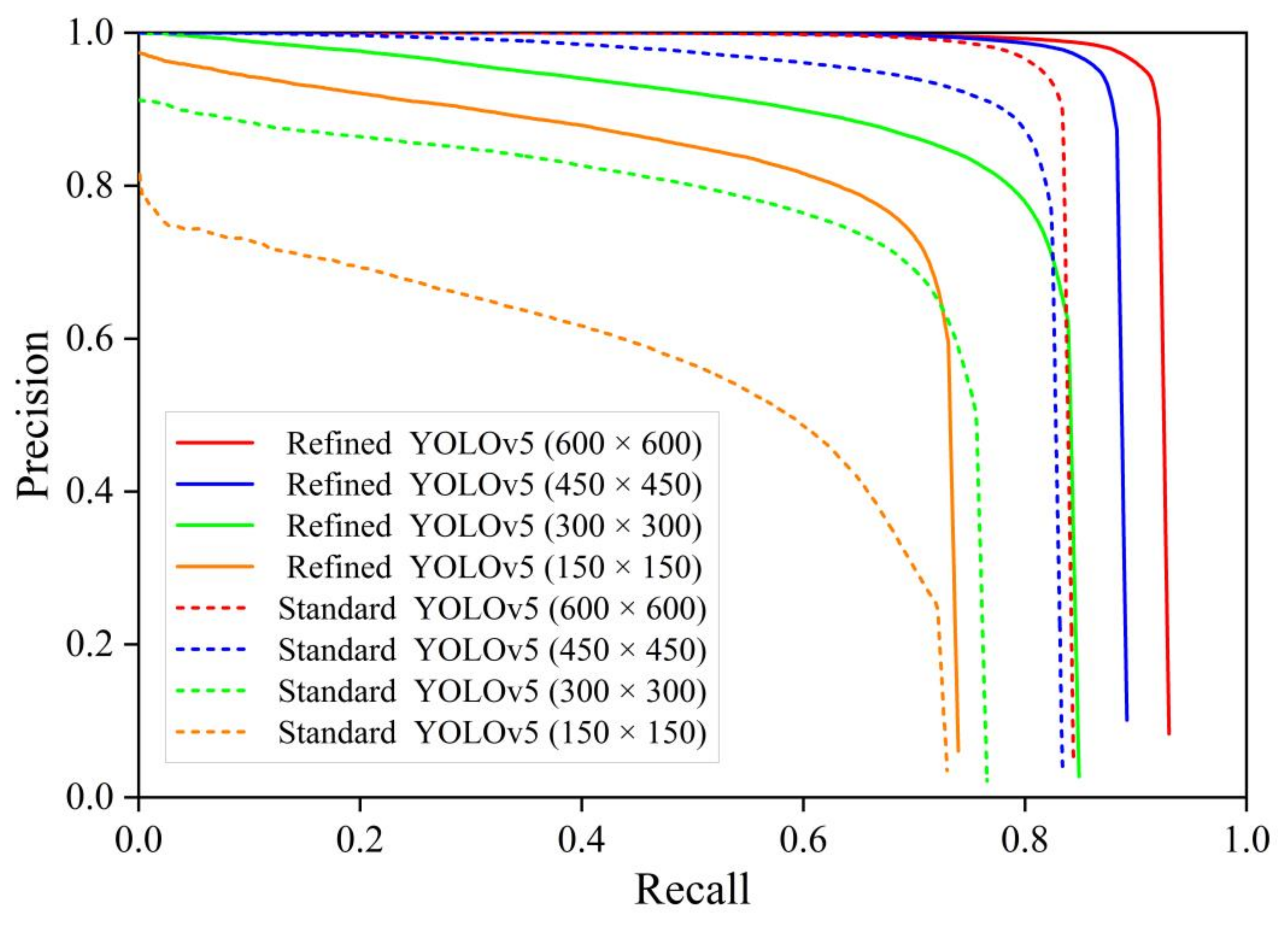

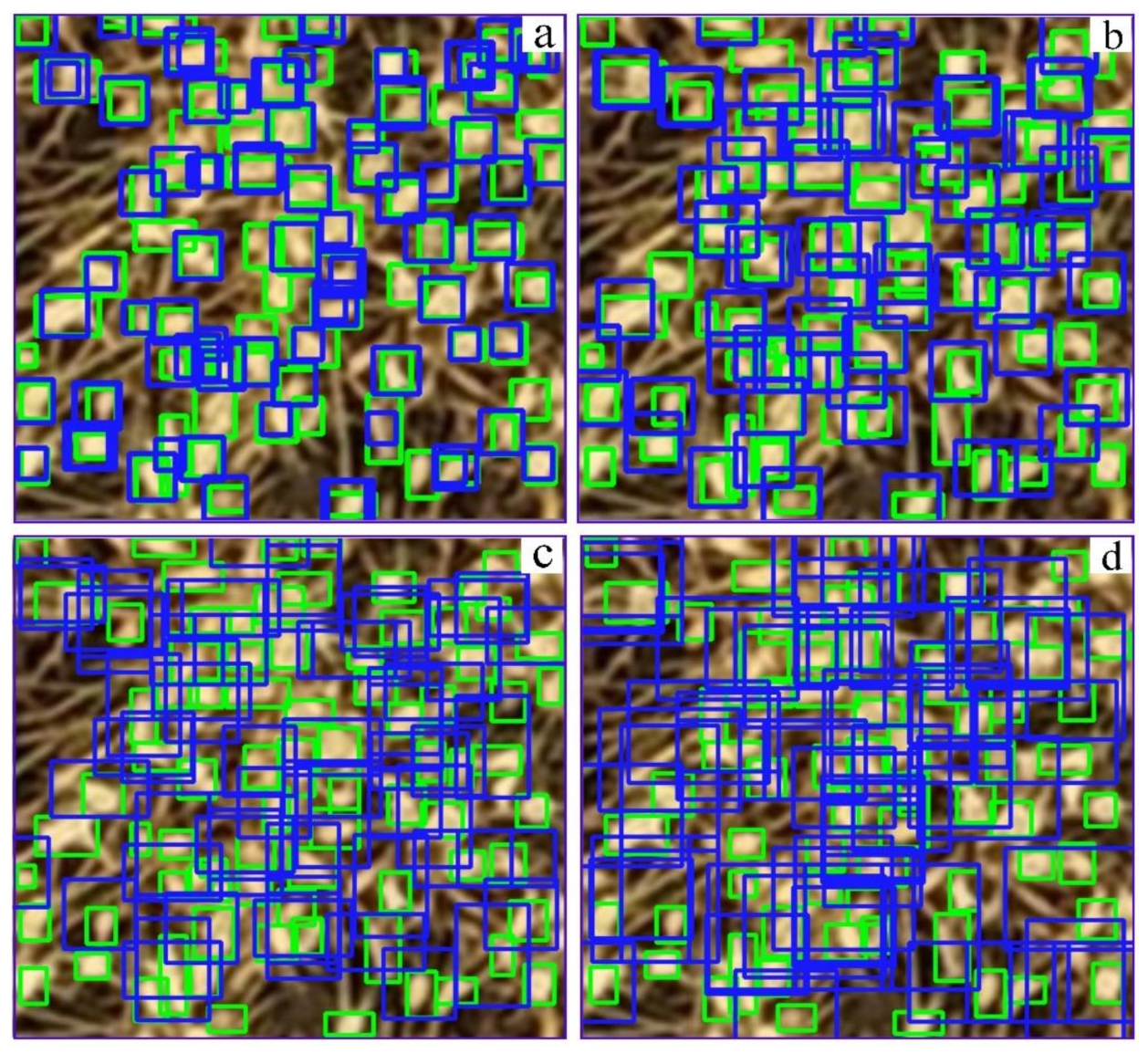

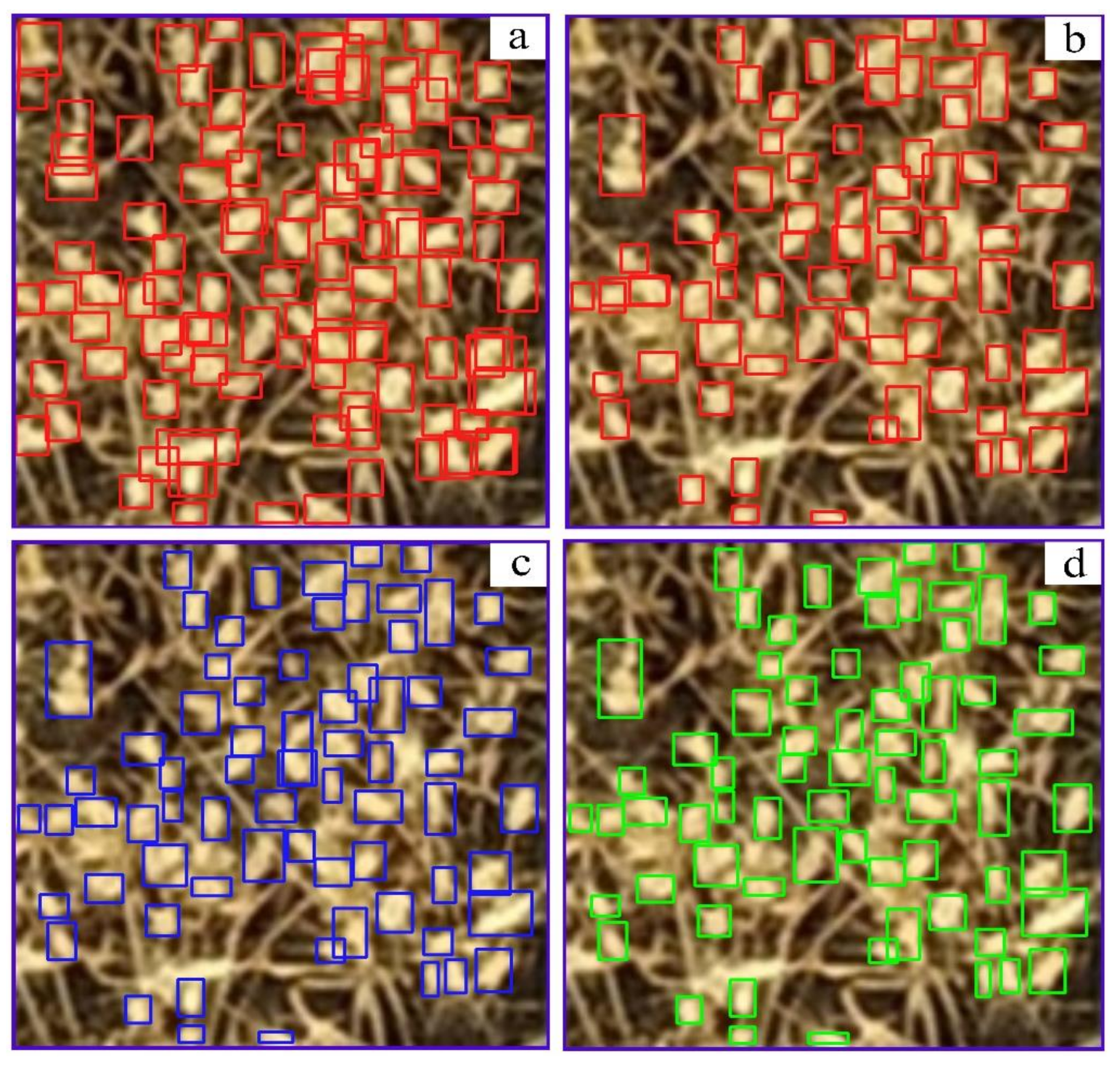

3.1. Multiresolution Image Training

3.2. Network Performance Evaluation

3.3. Experimental Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Input Resolution | Batch Size | Learning Rate | Training Epochs | Momentum | Weight Decay |
|---|---|---|---|---|---|
| 150 × 150 | 32 | 0.02 | 1200 | 0.9 | 0.0001 |
| 300 × 300 | 16 | 0.01 | 1200 | 0.9 | 0.0001 |
| 450 × 450 | 8 | 0.005 | 1200 | 0.9 | 0.0001 |
| 600 × 600 | 4 | 0.0025 | 1200 | 0.9 | 0.0001 |
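The learning rates in the table above are consistent with scaling the rate linearly with batch size: halving the batch halves the rate. The following sketch reproduces the four settings from a single reference point. The linear rule is an observation drawn from the table values, not something stated by the authors, and the names `scaled_lr` and `TRAIN_CONFIGS` are hypothetical.

```python
# Reference setting taken from the 150 x 150 row of the table.
BASE_BATCH_SIZE = 32
BASE_LEARNING_RATE = 0.02

# Input resolution (pixels per side) -> batch size, as listed in the table.
TRAIN_CONFIGS = {150: 32, 300: 16, 450: 8, 600: 4}


def scaled_lr(batch_size, base_lr=BASE_LEARNING_RATE, base_batch=BASE_BATCH_SIZE):
    """Scale the learning rate in proportion to the batch size (assumed rule)."""
    return base_lr * batch_size / base_batch


if __name__ == "__main__":
    for resolution, batch_size in TRAIN_CONFIGS.items():
        lr = scaled_lr(batch_size)
        print(f"{resolution} x {resolution}: batch={batch_size}, lr={lr:g}")
```

Momentum (0.9), weight decay (0.0001), and the number of epochs (1200) are the same for all four resolutions, so only the batch size and learning rate need to vary per configuration.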

| Method | AP (%) | FPS |
|---|---|---|
| Proposed | 94.1 | 30 |
| Faster R-CNN | 36.9 | 15 |
| RetinaNet | 53.6 | 18 |
| SSD | 55.3 | 35 |
| YOLOv3 | 53.4 | 35 |
| YOLOv5 | 83.3 | 30 |

| Input Resolution | Method | AP (%) | FPS |
|---|---|---|---|
| 150 × 150 | Refined YOLOv5 | 64.0 | 45 |
| 150 × 150 | Standard YOLOv5 | 43.6 | 45 |
| 300 × 300 | Refined YOLOv5 | 77.5 | 37 |
| 300 × 300 | Standard YOLOv5 | 61.6 | 37 |
| 450 × 450 | Refined YOLOv5 | 88.1 | 32 |
| 450 × 450 | Standard YOLOv5 | 80.2 | 32 |
| 600 × 600 | Refined YOLOv5 | 91.9 | 30 |
| 600 × 600 | Standard YOLOv5 | 83.3 | 30 |

| Microscale Detection Layer Creation | Anchor Prior Size Setting | Confidence Loss Function Adaption | Multiresolution Detection Results Fusion | AP (%) |
|---|---|---|---|---|
| | | | | 83.3 |
| √ | | | | 87.1 |
| √ | √ | | | 89.6 |
| √ | √ | √ | | 91.9 |
| √ | √ | √ | √ | 94.1 |
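Because each row of the ablation table adds one refinement to the previous row, the contribution of each refinement can be read off as the difference between consecutive AP values. The short sketch below does that arithmetic. The row labels are descriptive names derived from the column headers, and the helper `incremental_gains` is hypothetical.

```python
# (configuration, AP in %) in the cumulative order used by the ablation table.
ABLATION = [
    ("baseline YOLOv5", 83.3),
    ("+ microscale detection layer", 87.1),
    ("+ anchor prior size setting", 89.6),
    ("+ confidence loss function adaption", 91.9),
    ("+ multiresolution detection results fusion", 94.1),
]


def incremental_gains(rows):
    """Return (configuration, AP gain over the previous row), rounded to 0.1."""
    gains = []
    for (_, prev_ap), (name, ap) in zip(rows, rows[1:]):
        gains.append((name, round(ap - prev_ap, 1)))
    return gains


if __name__ == "__main__":
    for name, gain in incremental_gains(ABLATION):
        print(f"{name}: +{gain} AP")
    total = round(ABLATION[-1][1] - ABLATION[0][1], 1)
    print(f"total gain over baseline: +{total} AP")
```

Read this way, the microscale detection layer accounts for the largest single step, and the remaining three refinements each add a little over two AP points.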

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhao, J.; Zhang, X.; Yan, J.; Qiu, X.; Yao, X.; Tian, Y.; Zhu, Y.; Cao, W. A Wheat Spike Detection Method in UAV Images Based on Improved YOLOv5. Remote Sens. 2021, 13, 3095. https://doi.org/10.3390/rs13163095
