Building Change Detection Method to Support Register of Identified Changes on Buildings

Abstract

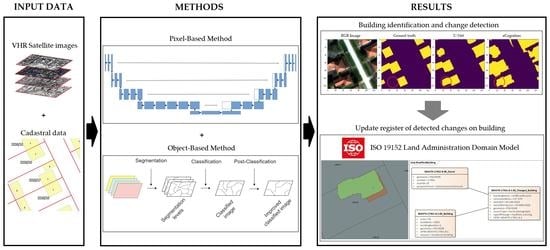

1. Introduction

2. Materials and Methods

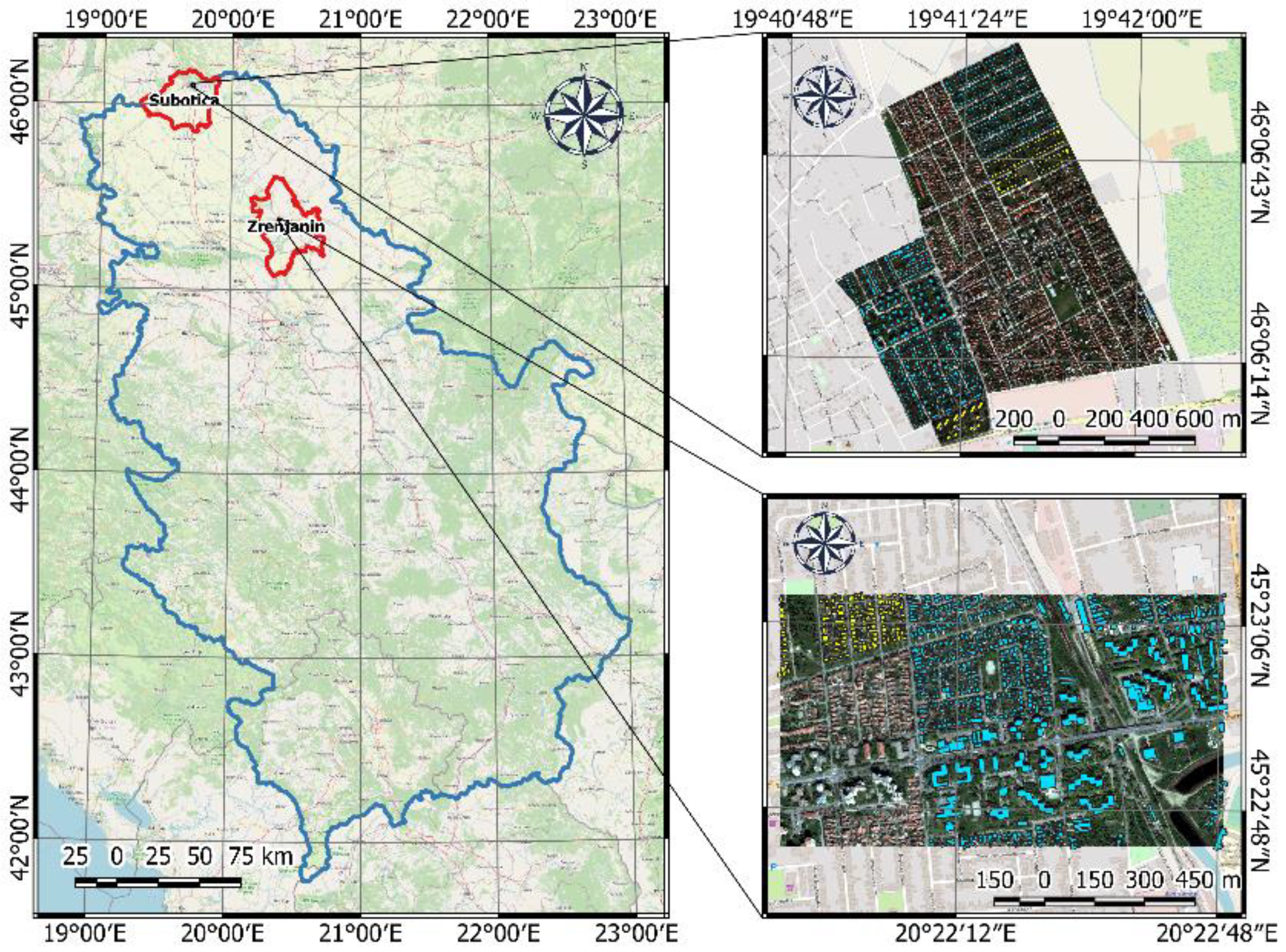

2.1. Study Areas and Datasets

2.2. Methods

2.2.1. Object-Based Classification Method

2.2.2. Pixel-Based Method

2.2.3. Accuracy Assessment

Metrics

- <0: No agreement

- 0–0.20: Slight

- 0.21–0.40: Fair

- 0.41–0.60: Moderate

- 0.61–0.80: Substantial

- 0.81–1.0: Perfect
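As an illustration of how the kappa statistic maps onto the agreement bands listed above, the following minimal sketch computes Cohen's kappa from a confusion matrix and looks up the corresponding label. The function names and the example matrix are hypothetical and are not taken from the study; the sketch only assumes the standard definition of kappa as (observed agreement − chance agreement) / (1 − chance agreement).

```python
def cohen_kappa(confusion):
    """Cohen's kappa for a square confusion matrix.

    Rows are reference classes, columns are predicted classes.
    """
    total = sum(sum(row) for row in confusion)
    if total == 0:
        raise ValueError("confusion matrix is empty")
    n = len(confusion)
    # Observed agreement: share of samples on the diagonal.
    observed = sum(confusion[i][i] for i in range(n)) / total
    # Chance agreement: sum over classes of (row total * column total) / total^2.
    expected = sum(
        sum(confusion[i]) * sum(row[i] for row in confusion) for i in range(n)
    ) / (total * total)
    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1.0 - expected)


def interpret_kappa(kappa):
    """Map a kappa value to the agreement bands listed above."""
    if kappa < 0:
        return "No agreement"
    if kappa <= 0.20:
        return "Slight"
    if kappa <= 0.40:
        return "Fair"
    if kappa <= 0.60:
        return "Moderate"
    if kappa <= 0.80:
        return "Substantial"
    return "Perfect"


# Hypothetical two-class example (building / not building), illustrative counts only.
example_matrix = [[50, 10], [5, 35]]
example_kappa = cohen_kappa(example_matrix)
example_label = interpret_kappa(example_kappa)
```

For the hypothetical matrix, the observed agreement is 0.85 and the chance agreement is 0.51, so kappa is roughly 0.69 and falls in the "Substantial" band.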

Loss Function

2.2.4. Data Model for the Register on Determined Changes on Buildings

- Buildings which are not registered in the real estate cadastre.

- Buildings which are registered in the real estate cadastre, but whose base dimensions differ from those recorded in the cadastre.

- Buildings which are registered in the real estate cadastre but have been demolished in the field.
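The three categories above can be sketched as a simple comparison between cadastral footprints and footprints detected on the orthophoto. The following is a minimal illustration, not the procedure used in the study: footprints are simplified to sets of raster cells, overlap is measured with intersection over union (IoU), and the function names, labels, and the 0.8 threshold are all hypothetical assumptions.

```python
def rect(x0, y0, x1, y1):
    """Hypothetical helper: a rectangular footprint as a set of raster cells."""
    return {(x, y) for x in range(x0, x1) for y in range(y0, y1)}


def iou(a, b):
    """Intersection over union of two cell sets."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def classify_changes(cadastre, detected, iou_threshold=0.8):
    """Label each footprint with one of the change categories.

    cadastre, detected: dicts mapping an id to a set of raster cells.
    iou_threshold: assumed cut-off below which dimensions count as changed.
    """
    changes = {}
    matched_detected = set()
    for cad_id, cad_cells in cadastre.items():
        # IoU with every detected footprint that touches this cadastral one.
        overlaps = {
            det_id: iou(cad_cells, det_cells)
            for det_id, det_cells in detected.items()
            if cad_cells & det_cells
        }
        if not overlaps:
            # Registered, but nothing was detected at that location.
            changes[("cadastre", cad_id)] = "demolished"
            continue
        matched_detected.update(overlaps)
        best = max(overlaps.values())
        changes[("cadastre", cad_id)] = (
            "unchanged" if best >= iou_threshold else "changed_dimensions"
        )
    for det_id in detected:
        if det_id not in matched_detected:
            # Detected on the image, but absent from the cadastre.
            changes[("detected", det_id)] = "not_registered"
    return changes


# Hypothetical footprints, illustrative only.
cadastre = {"c1": rect(0, 0, 4, 4), "c2": rect(10, 0, 14, 4), "c3": rect(20, 0, 24, 4)}
detected = {"d1": rect(0, 0, 4, 4), "d2": rect(10, 0, 16, 4), "d3": rect(30, 0, 33, 3)}
result = classify_changes(cadastre, detected)
```

In a real workflow the footprints would be vector polygons and the overlap test would be done with a geometry library; the cell-set representation is used here only to keep the sketch dependency-free.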

3. Results

3.1. Preprocessing

3.2. Training and Accuracy

3.3. Building Identification Results

3.4. Identification of Objects According to the Rulebook

3.4.1. Objects That Exist in Cadastral Records but Are Not Visible on the Orthophoto

3.4.2. Objects That Do Not Exist in Cadastre but Are Visible on the Orthophoto

3.4.3. Objects That Exist in Cadastre and on the Orthophoto, but with Different Surfaces

3.5. Verification of the Results in the Register on Determined Changes on Buildings

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| City | Number of Images: Training | Number of Images: Test | Number of Images: Prediction | Number of Images: Total | Number of Objects |
|---|---|---|---|---|---|
| Zrenjanin | 269 | 32 | 106 | 407 | 780 |
| Subotica | 321 | 80 | 604 | 1005 | 778 |

| City | Total Number of Objects | Correctly Identified Objects: U-Net | Correctly Identified Objects: eCognition |
|---|---|---|---|
| Zrenjanin | 141 | 127 | 126 |
| Subotica | 120 | 104 | 111 |
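As a small worked example, the counts in the table above can be turned into the share of correctly identified objects per city and method. These percentages are derived here from the table counts only; they are not values reported by the source and should not be confused with the accuracy and kappa figures given separately. The variable and function names are hypothetical.

```python
# Counts copied from the table above.
counts = {
    "Zrenjanin": {"total": 141, "U-Net": 127, "eCognition": 126},
    "Subotica": {"total": 120, "U-Net": 104, "eCognition": 111},
}


def identification_rate(correct, total):
    """Percentage of objects that were correctly identified."""
    return 100.0 * correct / total


# Rate per city and method, rounded to two decimals.
rates = {
    city: {
        method: round(identification_rate(row[method], row["total"]), 2)
        for method in ("U-Net", "eCognition")
    }
    for city, row in counts.items()
}

for city, by_method in rates.items():
    print(city, by_method)
```

Under this derivation, U-Net identifies slightly more objects in Zrenjanin (127 vs. 126 of 141), while eCognition identifies more in Subotica (111 vs. 104 of 120).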

| City | Accuracy: U-Net | Accuracy: eCognition | Kappa Statistic: U-Net | Kappa Statistic: eCognition |
|---|---|---|---|---|
| Zrenjanin | 86.08 | 86.02 | 89 | 89 |
| Subotica | 83.99 | 88.04 | 96.84 | 96 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jovanović, D.; Gavrilović, M.; Sladić, D.; Radulović, A.; Govedarica, M. Building Change Detection Method to Support Register of Identified Changes on Buildings. Remote Sens. 2021, 13, 3150. https://doi.org/10.3390/rs13163150