Correcting the Eccentricity Error of Projected Spherical Objects in Perspective Cameras

Abstract

1. Introduction: Spheres in Images

2. Materials and Methods

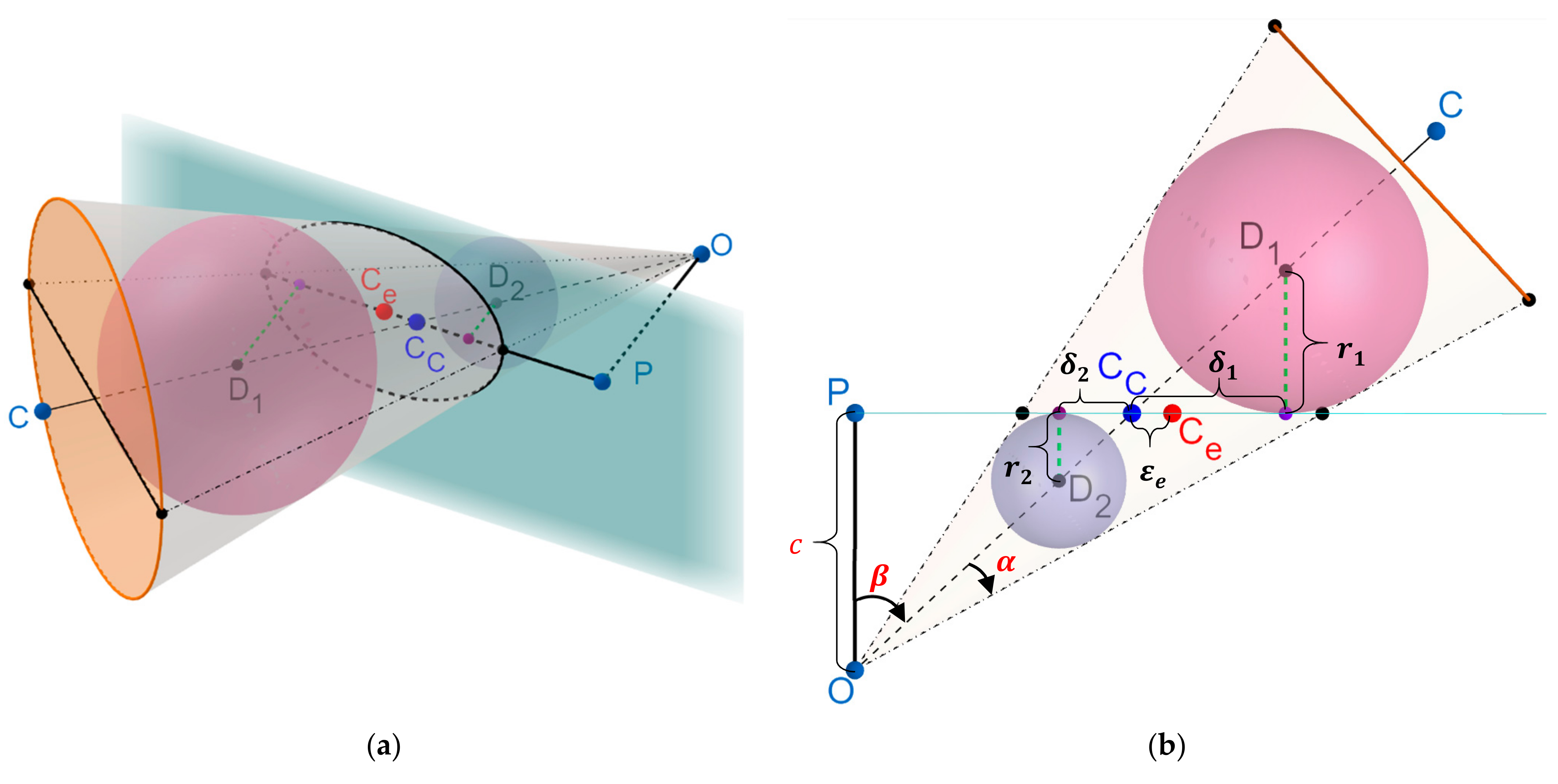

2.1. Modeling the Eccentricity Error

1. The cone’s vertex and the centers of the two Dandelin spheres always lie on the cone’s axis, which is the line passing through the center of the cone’s base.

2. The two Dandelin spheres are tangent to the image plane at the ellipse’s focal points. The radius from each sphere’s center to its point of tangency is therefore orthogonal to the ellipse’s major axis.

3. The line connecting the cone’s vertex and the center of the ellipse is also orthogonal to the ellipse’s major axis. This is the marginal case in which the center lies on the camera’s optical axis and projects onto the principal point.

4. The spheres’ centers lie on one side of the cone’s vertex, and the ellipse’s center lies between the two focal points. The only admissible arrangement is therefore one in which the ellipse’s center and both focal points coincide, which implies no eccentricity.

Condition 4 can only hold if Condition 3 is satisfied. Condition 3, in turn, occurs only in the special case where the camera’s optical axis passes through the sphere’s center, which is expected to produce no eccentricity error [3]. As mentioned, in this case the projection of the sphere onto the image plane is a circle.
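The size of the eccentricity error for an off-axis sphere can be made concrete with the sketch below. It assumes an ideal pinhole camera with no lens distortion, the perspective center at the origin, the optical axis along +Z, and the principal point at the image origin. Consider the plane that contains the optical axis and the sphere’s center. In that plane, the two tangent rays make angles θ − α and θ + α with the optical axis, where θ is the angle to the sphere’s center and sin α = R/D. The ellipse’s center is the midpoint of the two major-axis vertices, whereas the sphere’s center projects to f·tan θ. The difference between the two is the eccentricity error. The function name `sphere_image` is hypothetical.

```python
import math

def sphere_image(f, center, radius):
    """Radial image geometry of a sphere seen by an ideal pinhole camera.

    Assumptions: perspective center at the origin, optical axis along +Z,
    principal distance f, no lens distortion. Returns the radial distances
    from the principal point of (i) the ellipse's center and (ii) the
    projected sphere center, together with (iii) the ellipse's semi-major axis.
    """
    X, Y, Z = center
    rho = math.hypot(X, Y)
    dist = math.hypot(rho, Z)
    if radius >= dist:
        raise ValueError("the camera must lie outside the sphere")
    theta = math.atan2(rho, Z)        # angle between optical axis and sphere center
    alpha = math.asin(radius / dist)  # half-angle of the tangent cone
    if theta + alpha >= math.pi / 2:
        raise ValueError("the image would not be a bounded ellipse")
    r_near = f * math.tan(theta - alpha)  # major-axis vertex on the principal-point side
    r_far = f * math.tan(theta + alpha)   # major-axis vertex farther from the principal point
    ellipse_center = 0.5 * (r_near + r_far)
    semi_major = 0.5 * (r_far - r_near)
    projected_center = f * math.tan(theta)  # image of the sphere's center
    return ellipse_center, projected_center, semi_major

if __name__ == "__main__":
    for c in [(0.0, 0.0, 1000.0), (500.0, 0.0, 1000.0)]:
        e, p, a = sphere_image(35.0, c, 100.0)
        print(c, "eccentricity error:", e - p)
```

For the on-axis sphere the reported error is zero, up to rounding, which matches the circular-image case above. For the off-axis sphere the error is positive, so the ellipse’s center is displaced away from the principal point.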

| Algorithm 1 Corrected Ellipse Center |
|---|
| Inputs: Camera’s principal distance, principal point, and best-fit ellipse geometric parameters. |
| Output: Corrected center of the ellipse. |
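The inputs listed for Algorithm 1 are enough to undo this bias under ideal pinhole assumptions. The ellipse’s major axis lies on the radial line through the principal point, so its two vertices sit at radial distances r_c − a and r_c + a. Here r_c is the distance of the ellipse’s center from the principal point and a is the semi-major axis. The projected sphere center lies on the bisector of the two corresponding viewing angles. The following is a minimal sketch of that geometric inversion and is not necessarily the authors’ Algorithm 1. The function name and argument layout are hypothetical, lens distortion is assumed to have been removed beforehand, and only the ellipse’s center and semi-major axis are used.

```python
import math

def corrected_ellipse_center(f, pp, ellipse_center, semi_major):
    """Map a fitted ellipse center to the image of the sphere's center.

    f: principal distance; pp: principal point (x, y); ellipse_center:
    fitted ellipse center (x, y); semi_major: fitted semi-major axis.
    All quantities share the same image units (e.g., pixels).
    Assumes an ideal pinhole camera, so the major axis lies on the radial
    line through pp.
    """
    dx = ellipse_center[0] - pp[0]
    dy = ellipse_center[1] - pp[1]
    r_c = math.hypot(dx, dy)  # radial distance of the ellipse center
    if r_c == 0.0:
        # Optical axis through the sphere's center: circular image, no correction.
        return (float(ellipse_center[0]), float(ellipse_center[1]))
    # Radial positions of the two major-axis vertices; r_near is negative
    # when the principal point falls inside the ellipse.
    r_near, r_far = r_c - semi_major, r_c + semi_major
    # The sphere's center lies on the bisector of the two tangent rays.
    theta = 0.5 * (math.atan(r_near / f) + math.atan(r_far / f))
    r_0 = f * math.tan(theta)  # radial distance of the projected sphere center
    scale = r_0 / r_c          # shrink along the same radial direction
    return (pp[0] + scale * dx, pp[1] + scale * dy)
```

The correction moves the center along the radial line toward the principal point. This matches the direction of the eccentricity error, which always displaces the ellipse’s center outward.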

2.2. Data Collection and Validation

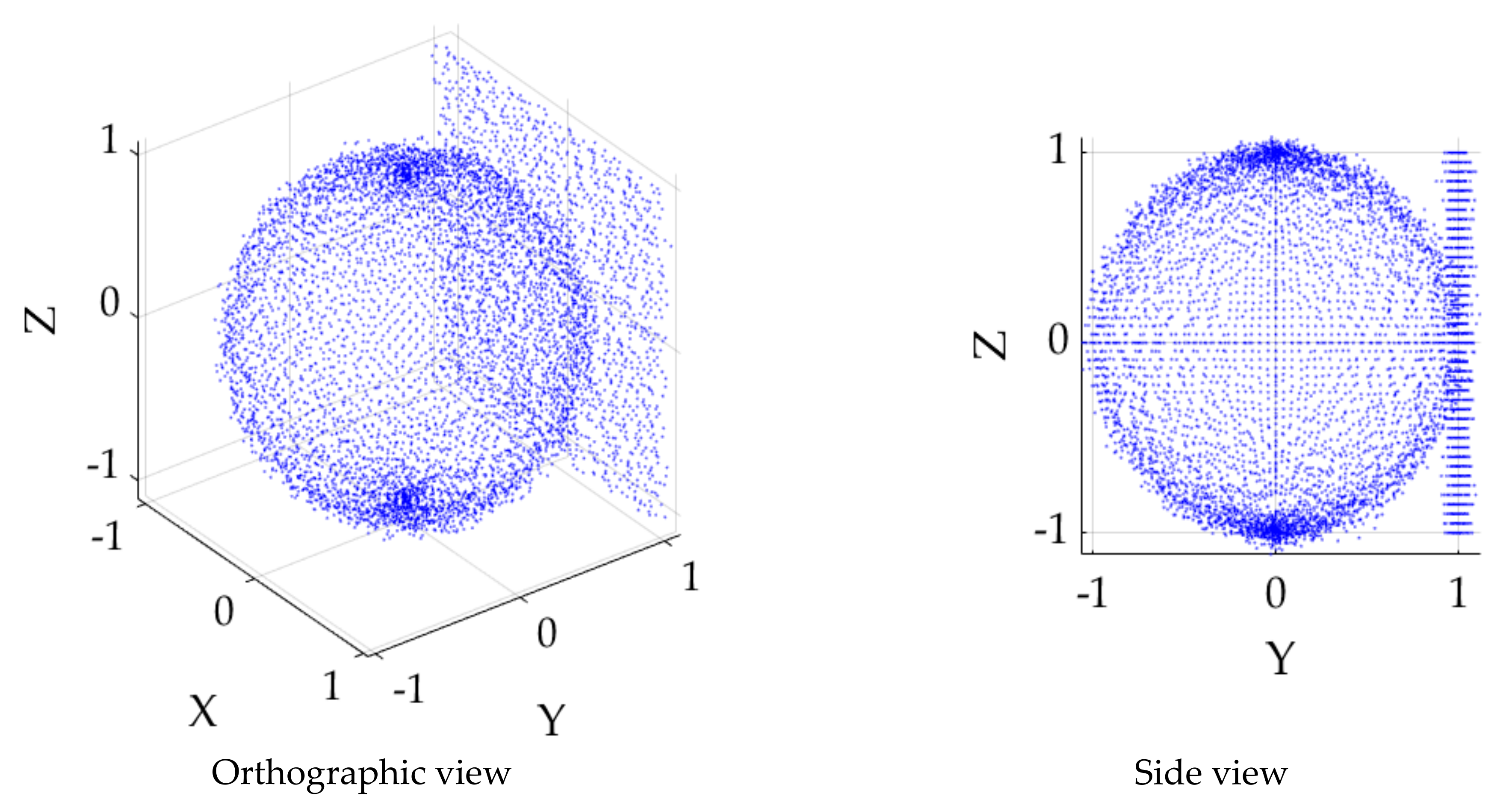

2.3. Robust Sphere Detection in 3D Point Clouds

| Algorithm 2 Hyperaccurate Direct Algebraic Sphere Fit |
|---|
| Inputs: Point cloud (one observation per point). |
| Outputs: Estimated radius and center of the best-fit least-squares sphere. |
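Algorithm 2 builds on direct algebraic sphere fitting. The hyperaccurate correction itself, in the sense of Al-Sharadqah and Chernov, is not reproduced here. The sketch below is the plain linear algebraic (Kåsa-type) least-squares fit that such methods refine. Algebraic fits of this kind carry a statistical bias that the hyperaccurate variant is designed to remove, so this is a simpler stand-in and not the proposed algorithm. The function name is hypothetical.

```python
import numpy as np

def algebraic_sphere_fit(points):
    """Linear (Kasa-type) algebraic least-squares sphere fit.

    points: (n, 3) array with n >= 4 non-coplanar points.
    Solves |q|^2 = 2 * c . q + d in the least-squares sense, where q are the
    mean-centered points, c is the (centered) sphere center and
    d = r^2 - |c|^2.
    Returns (radius, center).
    """
    P = np.asarray(points, dtype=float)
    if P.ndim != 2 or P.shape[1] != 3 or P.shape[0] < 4:
        raise ValueError("expected an (n, 3) array with n >= 4")
    # Centering the data improves the conditioning of the linear system.
    mean = P.mean(axis=0)
    Q = P - mean
    A = np.column_stack([2.0 * Q, np.ones(len(Q))])
    rhs = np.sum(Q**2, axis=1)
    sol, *_ = np.linalg.lstsq(A, rhs, rcond=None)
    center_local, d = sol[:3], sol[3]
    radius = float(np.sqrt(d + center_local @ center_local))
    return radius, center_local + mean
```

The fit is a single linear solve, which is why algebraic methods are attractive as the inner model estimator of robust detectors.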

3. Experimental Design

3.1. Experiment 1: Robust Sphere Fitting Evaluation

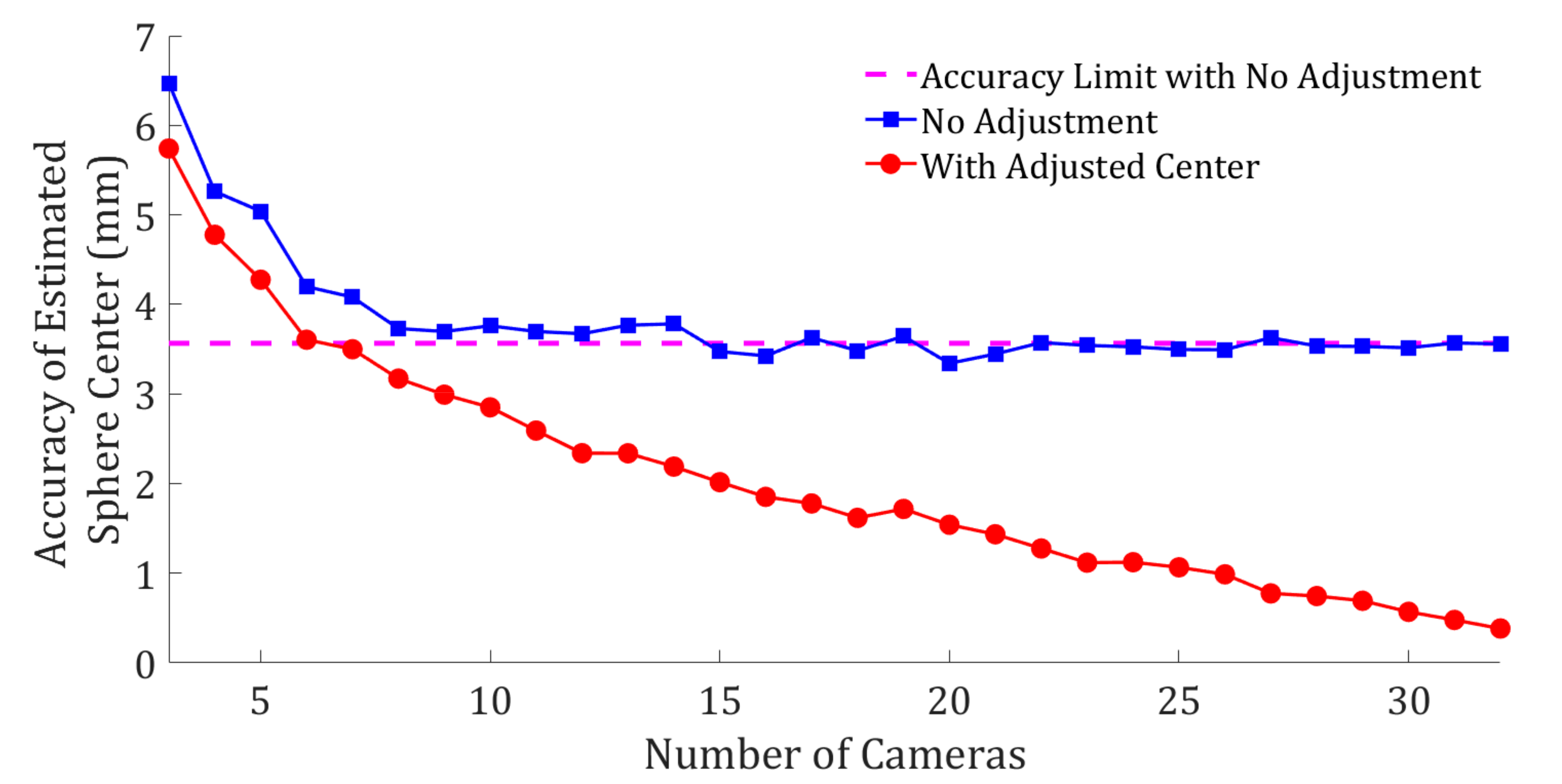
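The Experiment 1 result tables compare the proposed detector with MSAC (Torr and Zisserman). The sketch below shows that baseline applied to spheres, for readers unfamiliar with it. It draws random 4-point minimal samples, fits a sphere to each, and keeps the model with the lowest truncated quadratic cost, sum(min(e², T²)), where e is the point-to-sphere distance. Several choices here are illustrative assumptions, not the configuration used in the experiments:

- the threshold T and the iteration count;
- the use of a linear algebraic fit for both the minimal samples and the final inlier refit;
- the function names.

```python
import numpy as np

def _fit_sphere(P):
    """Linear algebraic least-squares sphere fit; None if degenerate."""
    mean = P.mean(axis=0)
    Q = P - mean
    A = np.column_stack([2.0 * Q, np.ones(len(Q))])
    sol, _, rank, _ = np.linalg.lstsq(A, np.sum(Q**2, axis=1), rcond=None)
    if rank < 4:
        return None  # degenerate sample, e.g. coplanar points
    c = sol[:3]
    r2 = sol[3] + c @ c
    if r2 <= 0:
        return None
    return float(np.sqrt(r2)), c + mean

def _residuals(P, model):
    r, c = model
    return np.abs(np.linalg.norm(P - c, axis=1) - r)

def msac_sphere(points, threshold, iterations=500, seed=None):
    """MSAC sphere detection; returns (radius, center, inlier_mask)."""
    P = np.asarray(points, dtype=float)
    rng = np.random.default_rng(seed)
    best_cost, best_model = np.inf, None
    for _ in range(iterations):
        sample = P[rng.choice(len(P), size=4, replace=False)]
        model = _fit_sphere(sample)
        if model is None:
            continue
        e = _residuals(P, model)
        # Truncated quadratic loss: inliers cost e^2, outliers a constant T^2.
        cost = np.sum(np.minimum(e**2, threshold**2))
        if cost < best_cost:
            best_cost, best_model = cost, model
    if best_model is None:
        raise RuntimeError("no non-degenerate sample found")
    inliers = _residuals(P, best_model) < threshold
    refit = _fit_sphere(P[inliers])  # least-squares refinement on the consensus set
    model = refit if refit is not None else best_model
    inliers = _residuals(P, model) < threshold
    return model[0], model[1], inliers
```

Unlike RANSAC, which counts inliers, MSAC scores inliers by their residuals. This usually selects a tighter model when several candidates have similar support.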

3.2. Experiment 2: Eccentricity Error Correction vs. Number of Images

- Randomly select different combinations of images from the 32 images.

- Calculate the Euclidean distances between the ground truth and the object-space coordinates of the adjusted and unadjusted centers (Figure 2e).

- For a given number of image views, record the mean of the distances obtained from Step 3.
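The steps above require an object-space sphere center for each subset of views. The sketch assumes this comes from least-squares spatial intersection of the rays that run from each camera’s perspective center through the measured (adjusted or unadjusted) image center. Forming those rays requires the camera orientations, which the sketch takes as given. The function names are hypothetical. Drawing a fixed number of random subsets is also an assumption, and may differ from how the combinations were enumerated in the experiment.

```python
import numpy as np

def intersect_rays(origins, directions):
    """Least-squares point closest to a set of 3D rays (spatial intersection).

    Minimizes the sum of squared perpendicular distances to the lines
    X = o_i + t * d_i; needs at least two non-parallel rays.
    """
    A = np.zeros((3, 3))
    b = np.zeros(3)
    for o, d in zip(origins, directions):
        d = np.asarray(d, dtype=float)
        d = d / np.linalg.norm(d)
        M = np.eye(3) - np.outer(d, d)  # projector orthogonal to the ray
        A += M
        b += M @ np.asarray(o, dtype=float)
    return np.linalg.solve(A, b)

def mean_error_for_k_views(origins, directions, truth, k, trials, seed=None):
    """Mean Euclidean error of the intersected center over random k-view subsets."""
    rng = np.random.default_rng(seed)
    truth = np.asarray(truth, dtype=float)
    errors = []
    for _ in range(trials):
        idx = rng.choice(len(origins), size=k, replace=False)
        X = intersect_rays([origins[i] for i in idx], [directions[i] for i in idx])
        errors.append(np.linalg.norm(X - truth))
    return float(np.mean(errors))
```

Running `mean_error_for_k_views` twice, once with rays through the adjusted centers and once through the unadjusted ones, gives the two curves compared for each number of views.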

4. Experimental Results and Discussions

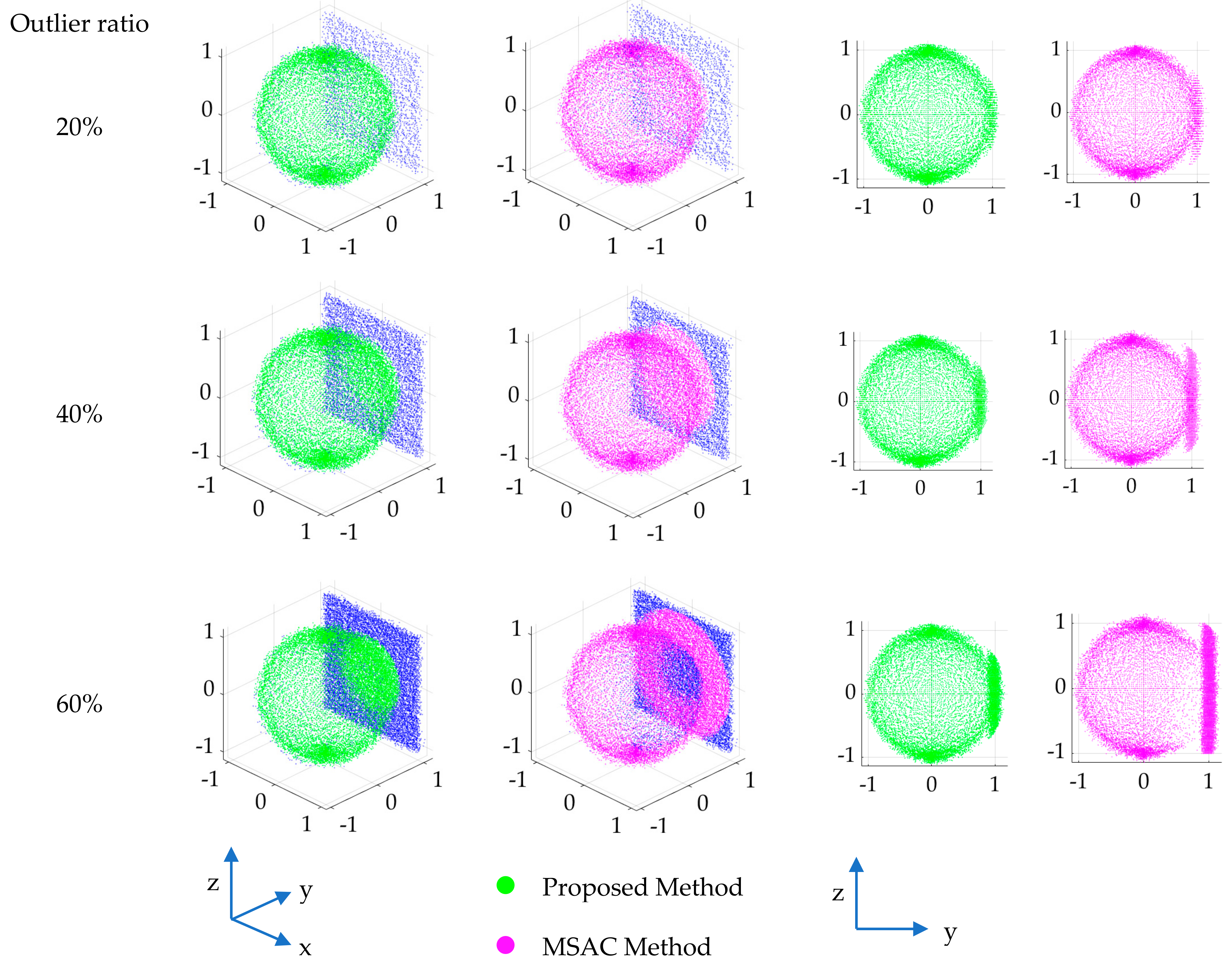

4.1. Experiment 1: Robust Sphere Fitting Evaluation

4.1.1. Experiment 1: Accuracy of the Estimated Radius

4.1.2. Experiment 1: Accuracy of the Estimated Center

4.1.3. Experiment 1: Quality of Sphere Detection

4.2. Experiment 2: Eccentricity Error Correction vs. Number of Images

5. Discussions and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ahn, S.J.; Warnecke, H.J.; Kotowski, R. Systematic Geometric Image Measurement Errors of Circular Object Targets: Mathematical Formulation and Correction. Photogramm. Rec. 1999, 16, 485–502.

- FIFA Quality. FIFA Quality Programme for Goal-Line Technology Testing Manual; Fédération Internationale de Football Association (FIFA): Zurich, Switzerland, 2014.

- Luhmann, T. Eccentricity in images of circular and spherical targets and its impact on spatial intersection. Photogramm. Rec. 2014, 29, 417–433.

- Lichti, D.D.; Glennie, C.L.; Jahraus, A.; Hartzell, P. New Approach for Low-Cost TLS Target Measurement. J. Surv. Eng. 2019, 145, 04019008.

- Miller, J.; Goldman, R. Using tangent balls to find plane sections of natural quadrics. IEEE Comput. Graph. Appl. 1992, 12, 68–82.

- Hohenwarter, M.; Jarvis, D.; Lavicza, Z. Linking Geometry, Algebra and Mathematics Teachers: GeoGebra Software and the Establishment of the International GeoGebra Institute. Int. J. Technol. Math. Educ. 2009, 16, 83–87.

- Botana, F.; Hohenwarter, M.; Janičić, P.; Kovács, Z.; Petrović, I.; Recio, T.; Weitzhofer, S. Automated Theorem Proving in GeoGebra: Current Achievements. J. Autom. Reason. 2015, 55, 39–59.

- Lichti, D.D.; Jarron, D.; Tredoux, W.; Shahbazi, M.; Radovanovic, R. Geometric modelling and calibration of a spherical camera imaging system. Photogramm. Rec. 2020, 35, 123–142.

- Maalek, R.; Lichti, D.D. Automated calibration of smartphone cameras for 3D reconstruction of mechanical pipes. Photogramm. Rec. 2021, 36, 124–146.

- Maalek, R.; Lichti, D.D. New confocal hyperbola-based ellipse fitting with applications to estimating parameters of mechanical pipes from point clouds. Pattern Recognit. 2021, 116, 107948.

- Schönberger, J.L. Robust Methods for Accurate and Efficient 3D Modeling from Unstructured Imagery; ETH Zurich: Zurich, Switzerland, 2018.

- Ullman, S. The interpretation of structure from motion. Proc. R. Soc. Lond. Ser. B Biol. Sci. 1979, 203, 405–426.

- Al-Sharadqah, A.; Chernov, N. Error analysis for circle fitting algorithms. Electron. J. Stat. 2009, 3, 886–911.

- Chernov, N. Circular and Linear Regression; CRC Press: Boca Raton, FL, USA, 2010.

- Maalek, R. Field Information Modeling (FIM)™: Best Practices Using Point Clouds. Remote Sens. 2021, 13, 967.

- Maalek, R.; Lichti, D.D.; Walker, R.; Bhavnani, A.; Ruwanpura, J.Y. Extraction of pipes and flanges from point clouds for automated verification of pre-fabricated modules in oil and gas refinery projects. Autom. Constr. 2019, 103, 150–167.

- Maalek, R.; Lichti, D.D.; Maalek, S. Towards automatic digital documentation and progress reporting of mechanical construction pipes using smartphones. Autom. Constr. 2021, 127, 103735.

- Torr, P.; Zisserman, A. MLESAC: A New Robust Estimator with Application to Estimating Image Geometry. Comput. Vis. Image Underst. 2000, 78, 138–156.

- Maalek, R.; Lichti, D.D.; Ruwanpura, J.Y. Robust Segmentation of Planar and Linear Features of Terrestrial Laser Scanner Point Clouds Acquired from Construction Sites. Sensors 2018, 18, 819.

- Olson, D.L.; Delen, D. Advanced Data Mining Techniques; Springer Science and Business Media LLC: Berlin, Germany, 2008.

- Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision, 2nd ed.; Cambridge University Press: Cambridge, UK, 2004; ISBN 978-0-521-54051-3.

| Configuration Category | Parameter Selection Domain: From | Parameter Selection Domain: To |
|---|---|---|
| Number of points | 100 | 10,000 |
| Noise | 0 | 0.05 |
| Outlier ratio | 10% | 60% |
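The table gives only the ranges of the synthetic configurations. A hypothetical generator consistent with it might look like the sketch below. Several choices are assumptions rather than details from the source:

- inlier points are spread uniformly over the sphere;
- "noise" is the standard deviation of isotropic Gaussian perturbations, in the same units as the radius;
- outliers are uniform in a box 1.5 times the radius around the center;
- each parameter is drawn uniformly from its domain.

```python
import numpy as np

def synthetic_sphere_cloud(n_points, noise, outlier_ratio,
                           center=(0.0, 0.0, 0.0), radius=1.0, seed=None):
    """Hypothetical test-cloud generator; returns (points, is_inlier)."""
    rng = np.random.default_rng(seed)
    n_out = int(round(outlier_ratio * n_points))
    n_in = n_points - n_out
    # Uniform directions on the sphere via normalized Gaussian vectors.
    v = rng.normal(size=(n_in, 3))
    v /= np.linalg.norm(v, axis=1, keepdims=True)
    c = np.asarray(center, dtype=float)
    inliers = c + radius * v + rng.normal(scale=noise, size=(n_in, 3))
    # Outliers uniform in a cube of half-width 1.5 * radius (assumed).
    half = 1.5 * radius
    outliers = c + rng.uniform(-half, half, size=(n_out, 3))
    points = np.vstack([inliers, outliers])
    labels = np.concatenate([np.ones(n_in, dtype=bool), np.zeros(n_out, dtype=bool)])
    perm = rng.permutation(n_points)  # shuffle so labels are not positional
    return points[perm], labels[perm]

def random_configuration(rng):
    """Draw (number of points, noise, outlier ratio) from the table's domains."""
    return (int(rng.integers(100, 10_001)),
            float(rng.uniform(0.0, 0.05)),
            float(rng.uniform(0.10, 0.60)))
```

The returned labels are the ground truth that detection-quality metrics are computed against.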

| Statistic (Accuracy of Radius Estimation) | Proposed | MSAC |
|---|---|---|
| Mean | 0.002 | 0.022 |
| Median | 0.001 | 0.013 |
| 95th percentile | 0.007 | 0.074 |

| Statistic (Accuracy of Center Estimation) | Proposed | MSAC |
|---|---|---|
| Mean | 0.004 | 0.057 |
| Median | 0.003 | 0.045 |
| 95th percentile | 0.013 | 0.151 |

| Method | Precision (%) | Recall (%) | Accuracy (%) | F-Measure (%) |
|---|---|---|---|---|
| Proposed | 96.26 | 95.21 | 94.18 | 95.44 |
| MSAC | 96.70 | 80.93 | 84.23 | 87.79 |
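The detection-quality columns follow the usual confusion-matrix definitions. The sketch below treats sphere points (inliers) as the positive class, which is an assumption, and returns percentages. Precision and recall ignore true negatives, but accuracy counts them. If values are averaged over many trials, the F-measure computed from the averaged precision and recall will not, in general, equal the averaged per-trial F-measure. The function name is hypothetical.

```python
import numpy as np

def detection_metrics(predicted, actual):
    """Precision, recall, accuracy and F-measure (in %) for boolean inlier labels."""
    p = np.asarray(predicted, dtype=bool)
    a = np.asarray(actual, dtype=bool)
    tp = int(np.sum(p & a))    # sphere points correctly detected
    fp = int(np.sum(p & ~a))   # outliers wrongly accepted
    fn = int(np.sum(~p & a))   # sphere points missed
    tn = int(np.sum(~p & ~a))  # outliers correctly rejected
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    accuracy = (tp + tn) / p.size
    denom = precision + recall
    f_measure = 2 * precision * recall / denom if denom else 0.0  # harmonic mean
    return {"precision": 100.0 * precision, "recall": 100.0 * recall,
            "accuracy": 100.0 * accuracy, "f_measure": 100.0 * f_measure}
```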


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Maalek, R.; Lichti, D.D. Correcting the Eccentricity Error of Projected Spherical Objects in Perspective Cameras. Remote Sens. 2021, 13, 3269. https://doi.org/10.3390/rs13163269
