2.1. Modeling the Eccentricity Error

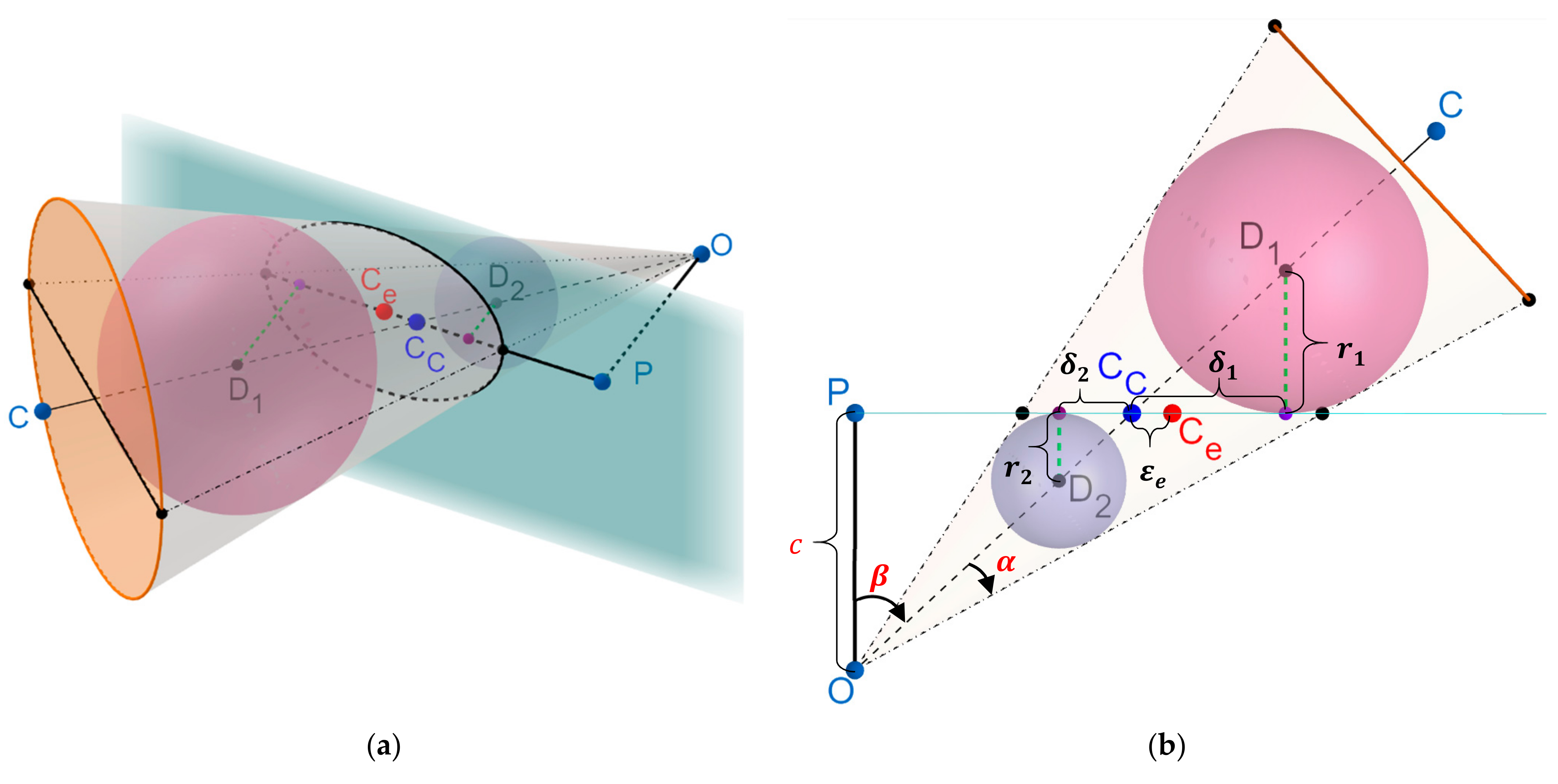

The ellipse formed by the perspective projection of a sphere onto an image is equivalent to the ellipse formed by the intersection of the cone tangential to the sphere with the image plane. Miller and Goldman [5] described the geometry of a randomly oriented plane intersecting a cone. They showed that the centers of the two tangential Dandelin spheres (see Figure 1a,b), and the major axis of the generated ellipse, lie on a plane whose normal vector is parallel to the minor axis of the projected ellipse. As illustrated in Figure 1, the camera center, the centers of the two Dandelin spheres, and the true center of the sphere lie on the same line. The projection of the sphere’s center onto the intersecting plane is, hence, the intersection of the line connecting the centers of the Dandelin spheres with the major axis of the ellipse. Because the center of the ellipse always lies on the major axis of the projected ellipse, the eccentricity error has only one component, in the direction of the ellipse’s major axis, and no component in the direction of the orthogonal minor axis. Consequently, the true target’s center in the image plane can be derived by translating the estimated ellipse’s center in the direction of the major axis by the eccentricity error, shown in Figure 1b.
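The geometry described above can be checked numerically with a minimal sketch. It assumes an ideal pinhole camera and works only along the major axis, measuring signed distances from the principal point. The function name `project_sphere_along_major_axis` and the sample values are hypothetical; `alpha` is the half-angle of the tangential cone, `beta` is the angle between the cone's axis and the image plane's normal, and `f` is the principal distance.

```python
import math

def project_sphere_along_major_axis(f, alpha, beta):
    """Signed distances from the principal point, along the major axis.

    Assumes an ideal pinhole camera and alpha + beta < pi / 2.
    Returns (ellipse_center, true_center, semi_major).
    """
    # The two cone generators in the plane of the major axis hit the image
    # plane at the two vertices of the ellipse.
    near_vertex = f * math.tan(beta - alpha)
    far_vertex = f * math.tan(beta + alpha)
    ellipse_center = 0.5 * (near_vertex + far_vertex)
    semi_major = 0.5 * (far_vertex - near_vertex)
    # The cone's axis (the ray through the sphere's center) hits the plane here.
    true_center = f * math.tan(beta)
    return ellipse_center, true_center, semi_major

# Hypothetical values: f in pixels, angles in radians.
center, truth, a = project_sphere_along_major_axis(3000.0, math.radians(5), math.radians(20))
eccentricity_error = center - truth  # positive: the ellipse center lies farther out
```

In this sketch the ellipse center always lies farther from the principal point than the projected sphere center, and the two coincide only when `beta` is zero.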

To provide a closed-form solution for the eccentricity error, the following two relationships from [5] are employed:

where

and

are the radii of the two tangential Dandelin spheres,

is the half-angle of the cone,

is the angle between the cone’s axis and the intersecting plane’s normal,

is the semi-minor length of the best fit ellipse, and

is the camera’s principal distance (focal length). Using the notations of

Figure 1b, the eccentricity error,

, can be derived as follows:

where

is the semi-major length of the best fit ellipse,

is the distance of the ellipse’s center to one of the focal points, and

is shown in

Figure 1b. Based on the similarity of the two right angled triangles, we have:

The ratio

can be directly derived from Equation (2):

Substituting Equations (5) and (4) into Equation (3) provides the closed-form solution to the magnitude of the eccentricity error:

The ellipse center must now be translated along the major axis by the magnitude of the eccentricity error. The direction of the translation, as observed in Figure 1, must always be towards the principal point. Equation (6) also shows that the eccentricity error is zero when the focal distance of the projected ellipse (the distance from its center to its focal points) is zero, i.e., when the ellipse is a circle. This occurs in the marginal case when the estimated ellipse center lies exactly on the principal point. The latter can be geometrically explained from the following generic relationships:

1. The cone’s vertex, and the two centers of the Dandelin spheres, always lie on the line passing through the center of the cone’s base.

2. The two Dandelin spheres are also tangential (orthogonal) to the ellipse’s major axis at the focal points.

3. The line connecting the cone’s vertex and the center of the ellipse is also orthogonal to the ellipse’s major axis (marginal case of center passing through the principal point).

4. Because the spheres’ centers lie on one side of the cone’s vertex, and the ellipse’s center lies between the two focal points, the only acceptable arrangement is when the center and the focal points are the same, which suggests no eccentricity.

Condition 4 can only hold if Condition 3 is satisfied. Condition 3 can only occur as a special case when the camera’s optical axis passes through the sphere’s center, which is expected to produce no eccentricity error [3]. As mentioned, in this case, the projection of the sphere onto the image plane is a circle.

From Condition 3, it can be inferred that the major axis of the projected ellipse also passes through the camera’s principal point. Based on the preceding discussion, the corrected image coordinates of the spherical target center are obtained using Algorithm 1, given the camera’s principal distance and principal point, and the geometric parameters of a best fit ellipse: the coordinates of the center, the semi-major length, the semi-minor length, and the major axis’ rotation angle in the image plane.

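Algorithm 1: Corrected Ellipse Center

Inputs: Camera’s principal distance, principal point, and best fit ellipse geometric parameters (center coordinates, semi-major length, semi-minor length, and rotation angle of the major axis).

Output: Corrected center of the ellipse.

1. Calculate the distance vector from the ellipse’s center to the principal point.

2. If the distance vector has zero length, set the corrected center equal to the ellipse’s center and exit the algorithm.

3. Else, perform the following steps:

   - 3.1. Determine the unit vector representing the direction of the major axis.

   - 3.2. Calculate the focal distance of the ellipse (the distance from its center to a focal point).

   - 3.3. Estimate the magnitude of the eccentricity error using Equation (6).

   - 3.4. Estimate the corrected ellipse center by translating the ellipse’s center along the major axis, towards the principal point, by the magnitude of the eccentricity error.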
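The steps of Algorithm 1 can be sketched in Python as follows. This is an illustrative sketch, not the authors' implementation: the function name `correct_ellipse_center` and its argument order are hypothetical, and the error magnitude `b * c / sqrt(b**2 + f**2)` is a closed form derived here independently from ideal pinhole geometry. It is assumed, but not confirmed, to agree with Equation (6).

```python
import math

def correct_ellipse_center(f, pp, center, a, b, theta):
    """Shift a best fit ellipse center towards the principal point.

    f: principal distance; pp: principal point (x, y); center: ellipse center
    (x, y); a, b: semi-major and semi-minor lengths; theta: rotation angle of
    the major axis in radians. All lengths share the same image units.
    """
    # Step 1: distance vector from the ellipse center to the principal point.
    dx, dy = pp[0] - center[0], pp[1] - center[1]
    # Step 2: no correction when the center sits on the principal point
    # (or when the ellipse is numerically a circle).
    if math.hypot(dx, dy) == 0.0 or a <= b:
        return center
    # Step 3.1: unit vector of the major axis, oriented towards the principal point.
    ux, uy = math.cos(theta), math.sin(theta)
    if ux * dx + uy * dy < 0.0:
        ux, uy = -ux, -uy
    # Step 3.2: focal distance of the ellipse.
    c = math.sqrt(a * a - b * b)
    # Step 3.3: error magnitude (assumed closed form, see the lead-in).
    error = b * c / math.sqrt(b * b + f * f)
    # Step 3.4: translate the center along the major axis.
    return (center[0] + error * ux, center[1] + error * uy)
```

Orienting the unit vector with the sign of its dot product against the distance vector keeps the translation directed towards the principal point regardless of how the fitted rotation angle is reported.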

Algorithm 1 requires an estimate of the interior orientation parameters (IOPs) of the camera, namely the principal distance and the principal point. This initial estimate can be obtained from pre-calibration [8,9], the manufacturer’s specifications, or the Exchangeable Image File Format (EXIF) metadata. In addition, Algorithm 1 can help correct camera positions in applications that track spheres using cameras. When tracking, for example, a rigid spherical ball, the corrected center can be used to steer the camera so that it always faces the center of the ball (i.e., the only position with no eccentricity error).

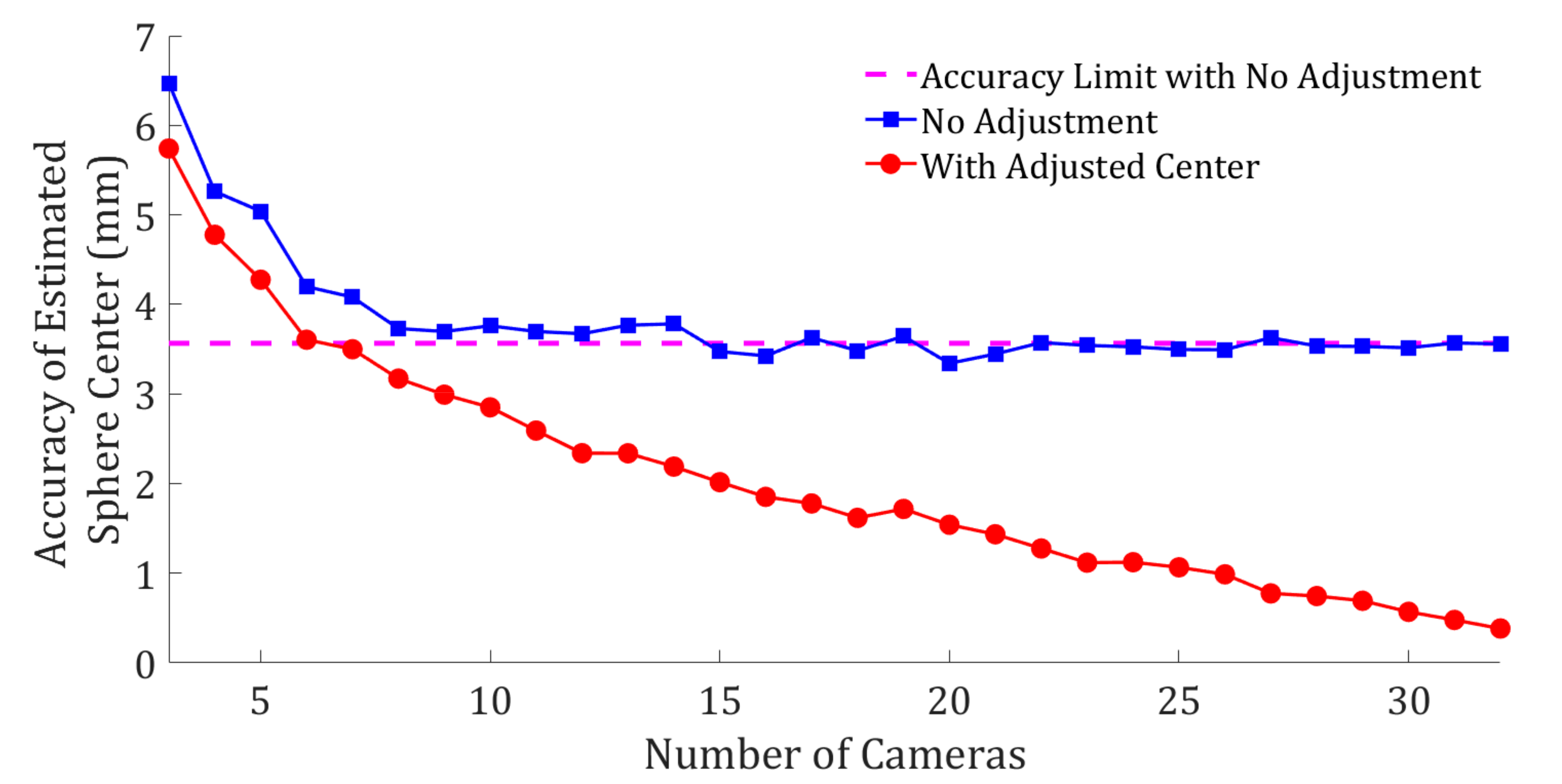

2.2. Data Collection and Validation

A white Styrofoam spherical target on a black background was attached to the wall as the subject of the main experiment presented in this study (Figure 2a). The true radius of the spherical target was 50 mm (to 0.01 mm measurement precision). The color contrast between the spherical target and its background was purposefully designed to guarantee a clearly detectable boundary for the ellipse representing the projected sphere on the image. In each image, an ellipse is fitted to the boundary of the sphere using the reliable confocal hyperbola ellipse fitting method proposed in [10]. The center of the best fit ellipse is then adjusted using Algorithm 1 (Figure 2b).

A pre-calibrated Huawei P30 mobile phone camera was used to acquire thirty-two 4K images of the spherical target. The calibration of the smartphone camera was performed using the method and IOPs presented in [9]. The position and orientation of the camera for each image view (exterior orientation parameters) were estimated automatically (independent of the spherical target’s center) using COLMAP [11], a reliable open source structure-from-motion [12] software. The camera positions are shown in Figure 2c with red pyramids. Figure 2d shows the point cloud of the target after dense 3D reconstruction. Figure 2e shows the best fit sphere (shown in green) to the point cloud of the spherical target. The center of the best fit sphere is then used as ground truth in the presented analysis. The reconstruction object space scale is defined using the ratio between the real-world and the best fit radius of the spherical target.
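The scale definition in the last sentence amounts to a single ratio. A minimal sketch, with hypothetical function names and a made-up fitted radius used purely for illustration:

```python
def object_space_scale(true_radius_mm, fitted_radius):
    """Ratio between the real-world radius and the best fit radius."""
    return true_radius_mm / fitted_radius

def to_millimetres(point, scale):
    """Scale a reconstructed 3D point from model units to millimetres."""
    return tuple(scale * value for value in point)

# Illustration only: 2.5 model units is not a value reported in the study.
scale = object_space_scale(50.0, 2.5)
point_mm = to_millimetres((1.0, 2.0, 3.0), scale)
```

Because structure-from-motion reconstructions are defined only up to scale, every distance measured in the reconstruction would be multiplied by this factor before being compared in millimetres.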

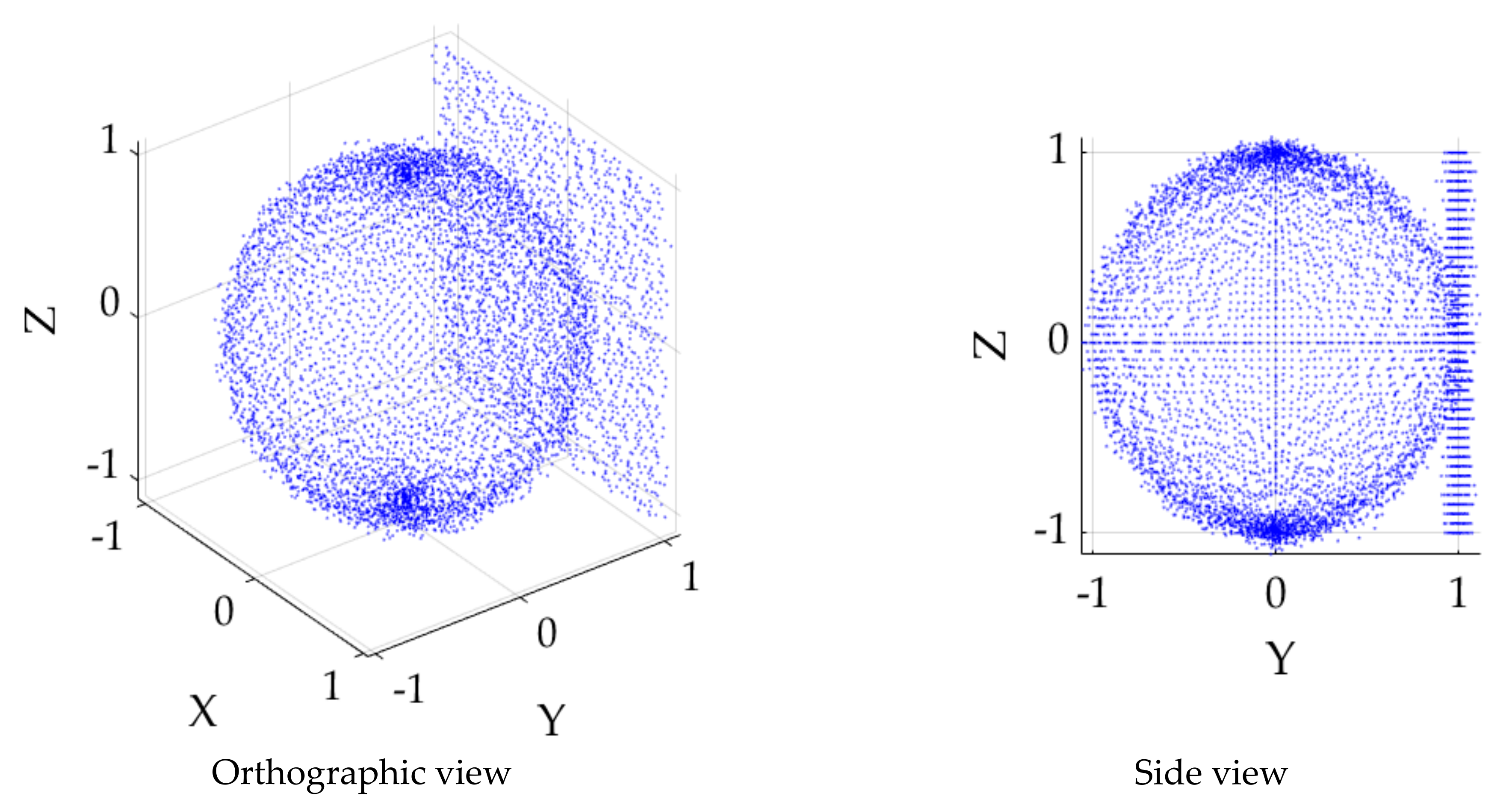

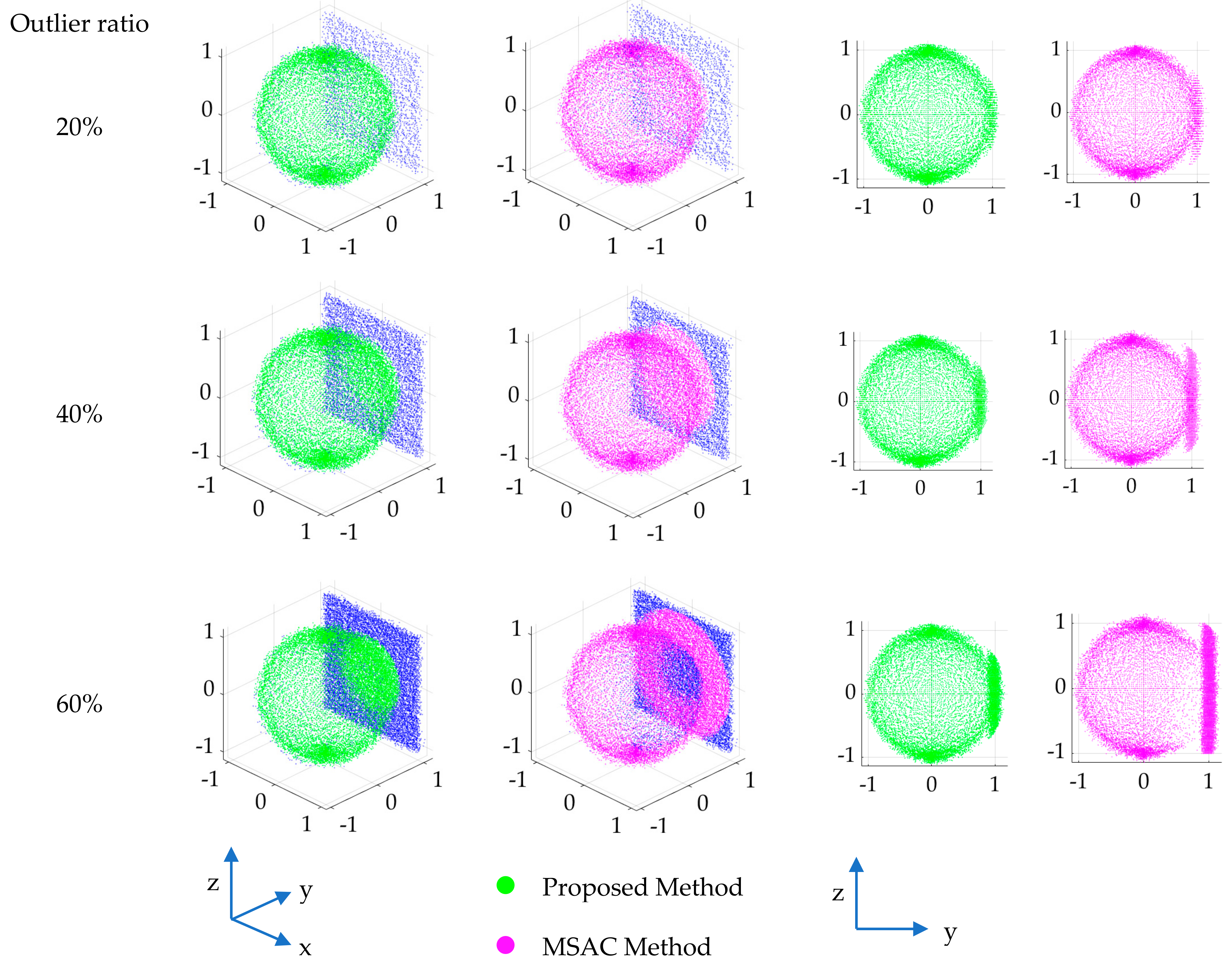

2.3. Robust Sphere Detection in 3D Point Clouds

The validation of the estimated 3D reconstructed center of the sphere for the experiment presented in Section 2.2 is predicated on an accurate and reliable methodology to fit spheres to 3D reconstructed point clouds. To this end, a new methodology for robust fitting of spheres to 3D point clouds was developed. The proposed method extends the direct hyperaccurate least squares circle fit of Chernov [13,14] to spheres. This extension was combined with the robust model fitting algorithm of Maalek, presented in Appendix B (Algorithm A1) of [15], to minimize the impact of outliers on the estimated sphere parameters (i.e., radius and center). The hyperaccurate direct circle fit to 2D points was extended here to spheres in 3D because the original method was proven to eliminate the essential bias of the estimated radius of the best fit circle [14]. The new direct hyperaccurate sphere fit is presented in Algorithm 2, which uses singular value decomposition to provide optimal numerical stability [14]. Furthermore, reliable outlier detection is key because it provides the necessary basis to fit the sphere to only the spherical points and eliminate the adverse effects of outliers on the estimated sphere parameters. The methodology of Maalek for outlier detection was utilized here because this method was shown to be superior to popular robust methodologies, such as random sample consensus (RANSAC) shape detection [15] as well as the least trimmed squares (LTS) method in linear regression [16]. The hyperaccurate circle fitting together with Maalek’s outlier detection method was also proven reliable for robust circle and cylinder fitting to 3D point clouds [16,17]. It is, hence, hypothesized that this method will produce reliable and robust sphere fitting results for the experiment presented in this study. The effectiveness of the newly proposed sphere fitting will also be investigated in this study on simulated point cloud datasets.

Algorithm 2: Hyperaccurate Direct Algebraic Sphere Fit

Inputs: A point cloud of 3D observations.

Outputs: Estimated radius and center of the best fit least squares sphere.

1. Calculate the mean of the observations.

2. Compute for ().

3. Construct the matrix as follows:

4. Perform the singular value decomposition on .

5. Calculate the algebraic parameter vector of the best fit sphere, , as follows:

   - 5.1. If (the singular case), set , where is the element corresponding to the th row and th column of , is the vector corresponding to the 5th column of , and is the algebraic parameter vector of the sphere. is customary for practical applications [14].

   - 5.2. Else, perform the following steps:

     - 5.2.1. Calculate the mean of the first column of : (: defined in Step 2).

     - 5.2.2. Construct matrix as follows:

     - 5.2.3. Calculate the matrix multiplication using Steps 4 and 5.2.2.

     - 5.2.4. Find the eigenvector, , corresponding to the smallest non-negative eigenvalue of .

     - 5.2.5. Calculate the algebraic parameter vector of the sphere: .

6. Use the following equations to calculate the radius, , and center, , of the sphere:

where represents the th element of the sphere’s algebraic parameter vector, .
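For orientation, the sketch below fits a sphere with a plain algebraic least squares fit. It is deliberately not the hyperaccurate fit of Algorithm 2: it omits the constraint matrix, the singular value decomposition, and the robust outlier rejection, so it does not share the bias properties discussed above. It only illustrates how an algebraic parameter vector is converted into a radius and a center. The function names are hypothetical, and plain Python is used to keep the example dependency-free.

```python
import math

def solve_linear(matrix, rhs):
    """Solve a small dense linear system by Gaussian elimination with pivoting."""
    n = len(rhs)
    m = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(m[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x

def fit_sphere_algebraic(points):
    """Plain algebraic least squares sphere fit; returns (center, radius)."""
    n = len(points)
    # Center the data first (mirrors Step 1) to improve conditioning.
    mean = [sum(p[i] for p in points) / n for i in range(3)]
    rows, rhs = [], []
    for p in points:
        x, y, z = (p[i] - mean[i] for i in range(3))
        # Model: x^2 + y^2 + z^2 = 2*cx*x + 2*cy*y + 2*cz*z + k
        rows.append([2.0 * x, 2.0 * y, 2.0 * z, 1.0])
        rhs.append(x * x + y * y + z * z)
    # Normal equations of the linear least squares problem.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(4)] for i in range(4)]
    atb = [sum(r[i] * v for r, v in zip(rows, rhs)) for i in range(4)]
    cx, cy, cz, k = solve_linear(ata, atb)
    # k = radius^2 - |c|^2 in the centered frame.
    radius = math.sqrt(k + cx * cx + cy * cy + cz * cz)
    center = (cx + mean[0], cy + mean[1], cz + mean[2])
    return center, radius
```

On noise-free points the plain fit recovers the sphere exactly; the motivation given in the text for the hyperaccurate extension and the outlier detection is the behavior on noisy, contaminated point clouds.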