UAV-Based Land Cover Classification for Hoverfly (Diptera: Syrphidae) Habitat Condition Assessment: A Case Study on Mt. Stara Planina (Serbia)

Abstract

1. Introduction

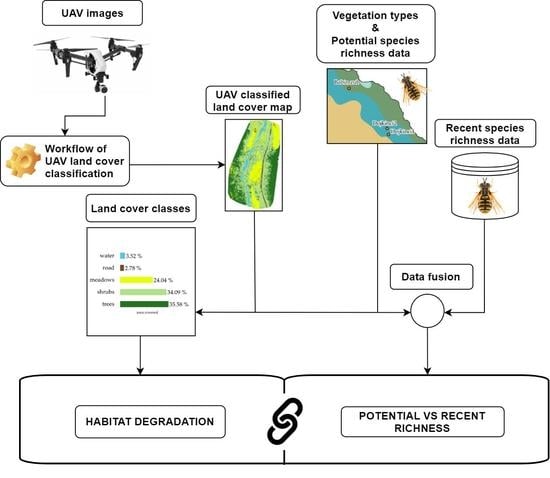

1.1. General Overview and Objectives of the Study

- Obtain very-high-resolution (VHR) land cover maps of three study sites using UAV-acquired imagery and the framework proposed by De Luca et al. [38];

- Determine the hoverfly habitat degradation coverage in the three designated study sites using precise land cover classification and biodiversity expert knowledge;

- Compare the resulting habitat degradation coverage with the difference between potential and recent hoverfly species richness at the three study sites, and evaluate its utility for habitat condition assessment;

- Provide guidance on a state-of-the-art remote sensing toolbox for researchers in spatial and landscape ecology who need such tools. The data processing workflows are also available as free tutorials for researchers who want to replicate the study or use the same or similar experimental settings, which supports research reproducibility.
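The second objective, habitat degradation coverage, can be read as the share of the mapped area that falls into land cover classes judged to be degraded. The following is a minimal sketch under that assumption only. The function name, the class areas, and the choice of which classes count as degraded are hypothetical placeholders, not values or rules taken from the study; in practice the degraded set would come from biodiversity expert knowledge.

```python
from typing import Dict, Set


def degradation_coverage(class_area_m2: Dict[str, float], degraded: Set[str]) -> float:
    """Return the fraction (0..1) of mapped area lying in classes flagged as degraded."""
    total = sum(class_area_m2.values())
    if total <= 0:
        raise ValueError("total mapped area must be positive")
    unknown = degraded - set(class_area_m2)
    if unknown:
        raise KeyError(f"degraded classes missing from the map: {sorted(unknown)}")
    return sum(class_area_m2[name] for name in degraded) / total


# Hypothetical per-class areas (square metres) for one classified map.
areas = {
    "trees": 6000.0,
    "shrubs": 1500.0,
    "meadows": 1500.0,
    "road": 500.0,
    "agricultural land": 500.0,
}

# Hypothetical expert decision on which classes represent degraded habitat.
degraded_classes = {"road", "agricultural land"}

share = degradation_coverage(areas, degraded_classes)
print(f"Degraded share of mapped area: {share:.1%}")
```

Keeping the expert decision as an explicit set separates the classification output from its ecological interpretation, so the same map can be re-evaluated under a different definition of degradation.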

1.2. Background

2. Materials and Methods

2.1. Study Sites

2.2. Methodology, UAV Data Acquisition, and Processing Outputs

2.3. Object-Based Image Detection

Selection of the Polygons for Training and Validation Set

2.4. Accuracy Assessment Metrics

2.5. The Map of the Natural Vegetation of Europe (EPNV)

2.6. Studied Hoverfly Material

3. Results

3.1. Accuracies of the Classification Algorithms

3.2. Land Cover Classification Maps

4. Discussion

4.1. Data Acquisition in Complex Landscapes

4.2. Segmentation, Classifier’s Optimization, and Performances

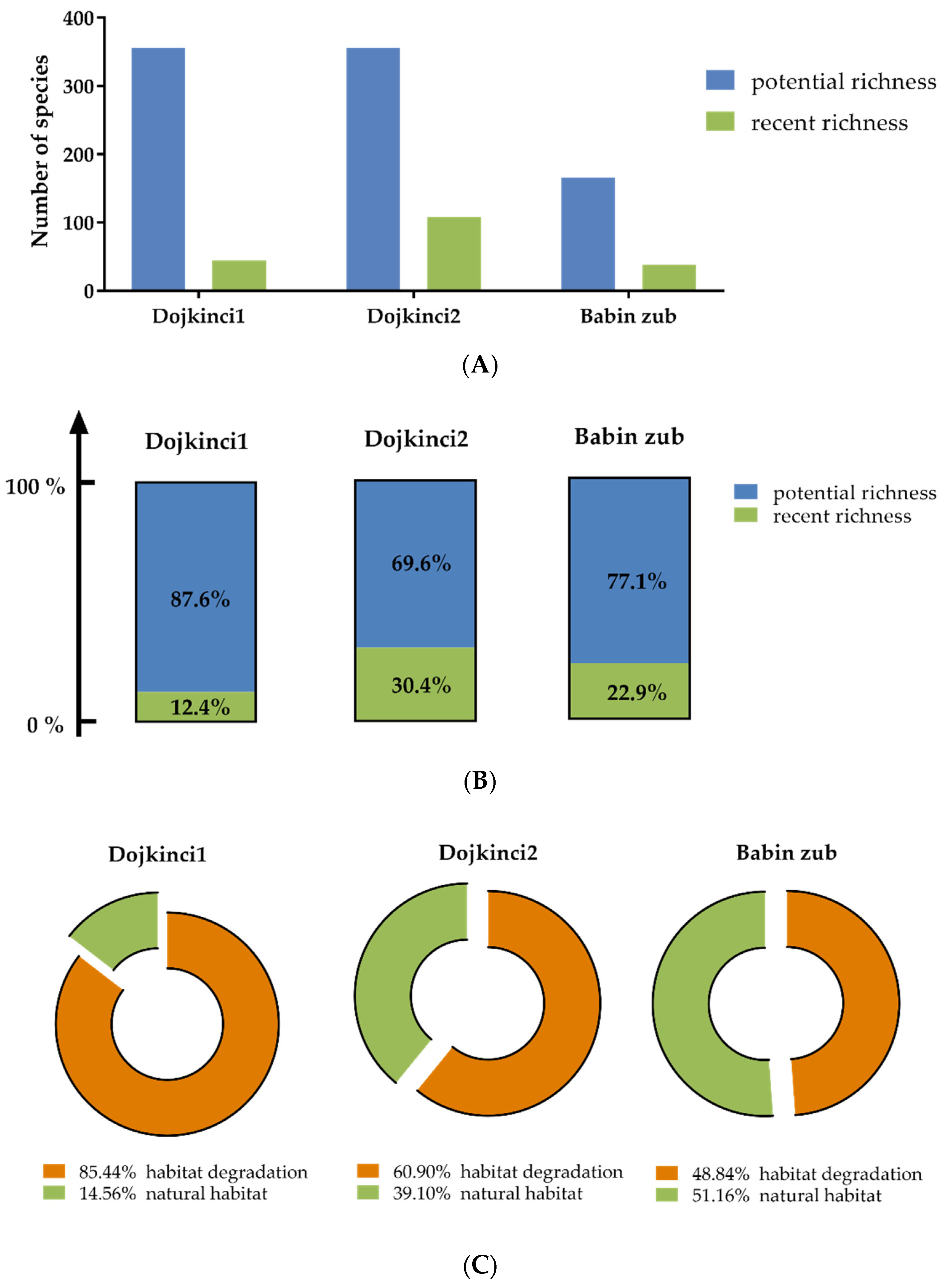

4.3. Potential and Recent Hoverfly Species Richness in Relation to Habitat Condition Assessment

4.4. Publicly Available Data

5. Conclusions

- VHR land cover maps can be obtained from UAV imagery even in heterogeneous study sites, but the factors that limit data acquisition over complex, steeply sloping terrain still need to be identified and addressed;

- The proposed UAV-based land cover classification, combined with the potential vegetation types from the EPNV map and biodiversity expert knowledge, can be used to quantify habitat degradation in selected study areas;

- The initial results of linking the quantified habitat degradation with the biodiversity loss indicate the utility of the proposed framework;

- Comprehensive supplementary materials, including video-recorded guidance on the image processing steps used to produce the land cover maps, together with the raw data, ensure research reproducibility.

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Harwood, T.D.; Donohue, R.J.; Williams, K.J.; Ferrier, S.; McVicar, T.R.; Newell, G.; White, M. Habitat Condition Assessment System: A new way to assess the condition of natural habitats for terrestrial biodiversity across whole regions using remote sensing data. Methods Ecol. Evol. 2016, 7, 1050–1059.

2. European Commission. Commission Note on Setting Conservation Objectives for Natura 2000 Sites; Final Version 23, 8; European Commission: Brussels, Belgium, 2012.

3. Fischer, J.; Lindenmayer, D.B. Landscape modification and habitat fragmentation: A synthesis. Glob. Ecol. Biogeogr. 2007, 16, 265–280.

4. Fritz, A.; Li, L.; Storch, I.; Koch, B. UAV-derived habitat predictors contribute strongly to understanding avian species-habitat relationships on the Eastern Qinghai-Tibetan Plateau. Remote Sens. Ecol. Conserv. 2018, 4, 53–65.

5. Laliberte, A.S.; Herrick, J.E.; Rango, A.; Winters, C. Acquisition, Orthorectification, and Object-based Classification of Unmanned Aerial Vehicle (UAV) Imagery for Rangeland Monitoring. Photogramm. Eng. Remote Sens. 2010, 76, 661–672.

6. Shahbazi, M.; Theau, J.; Ménard, P. Recent applications of unmanned aerial imagery in natural resource management. GIScience Remote Sens. 2014, 51, 339–365.

7. Garcia-Ruiz, F.; Sankaran, S.; Maja, J.M.; Lee, W.S.; Rasmussen, J.; Ehsani, R. Comparison of two aerial imaging platforms for identification of Huanglongbing-infected citrus trees. Comput. Electron. Agric. 2013, 91, 106–115.

8. Moon, H.-G.; Lee, S.-M.; Cha, J.-G. Land cover classification using UAV imagery and object-based image analysis-focusing on the Maseo-Myeon, Seocheon-Gun, Chungcheongnam-Do. J. Korean Assoc. Geogr. Inf. Stud. 2017, 20, 1–14.

9. Tang, L.; Shao, G. Drone remote sensing for forestry research and practices. J. For. Res. 2015, 26, 791–797.

10. Pádua, L.; Vanko, J.; Hruška, J.; Adão, T.; Sousa, J.J.; Peres, E.; Morais, R. UAS, sensors, and data processing in agroforestry: A review towards practical applications. Int. J. Remote Sens. 2017, 38, 2349–2391.

11. Gómez, C.; White, J.C.; Wulder, M.A. Optical remotely sensed time series data for land cover classification: A review. ISPRS J. Photogramm. Remote Sens. 2016, 116, 55–72.

12. Vincent, J.B.; Werden, L.; Ditmer, M.A. Barriers to adding UAVs to the ecologist’s toolbox. Front. Ecol. Environ. 2015, 13, 74–75.

13. Koh, L.P.; Wich, S.A. Dawn of Drone Ecology: Low-Cost Autonomous Aerial Vehicles for Conservation. Trop. Conserv. Sci. 2012, 5, 121–132.

14. Ogden, L.E. Drone ecology. BioScience 2013, 63, 776.

15. Anderson, K.; Gaston, K.J. Lightweight unmanned aerial vehicles will revolutionize spatial ecology. Front. Ecol. Environ. 2013, 11, 138–146.

16. Horning, N.; Fleishman, E.; Ersts, P.J.; Fogarty, F.A.; Zillig, M.W. Mapping of land cover with open-source software and ultra-high-resolution imagery acquired with unmanned aerial vehicles. Remote Sens. Ecol. Conserv. 2020, 6, 487–497.

17. Ivosevic, B.; Han, Y.-G.; Cho, Y.; Kwon, O. The use of conservation drones in ecology and wildlife research. J. Ecol. Environ. 2015, 38, 113–118.

18. Nex, F.C.; Remondino, F. UAV for 3D mapping applications: A review. Appl. Geomat. 2013, 6, 1–15.

19. Shi, J.; Wang, J.; Xu, Y. Object-based change detection using georeferenced UAV images. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 38, 177–182.

20. European Environment Agency (EEA). The Pan-European Component. Available online: https://land.copernicus.eu/pan-european (accessed on 1 March 2021).

21. Bohn, U.; Gollub, G.; Hettwer, C.; Neuhäuslová, Z.; Raus, T.; Schlüter, H.; Weber, H. Map of the Natural Vegetation of Europe. Scale 1: 2500000; Bundesamt für Naturschutz: Bonn, Germany, 2000.

22. IPBES. Intergovernmental Science-Policy Platform on Biodiversity and Ecosystem Services; Brondizio, E.S., Settele, J., Díaz, S., Ngo, N.T., Eds.; IPBES Secretariat: Bonn, Germany, 2019.

23. Ghazoul, J. Buzziness as usual? Questioning the global pollination crisis. Trends Ecol. Evol. 2005, 20, 367–373.

24. Kluser, S.; Pascal, P. Global Pollinator Decline: A Literature Review; UNEP/GRID-Europe: Geneva, Switzerland, 2007; pp. 1–10.

25. Potts, S.G.; Biesmeijer, J.C.; Kremen, C.; Neumann, P.; Schweiger, O.; Kunin, W.E. Global pollinator declines: Trends, impacts and drivers. Trends Ecol. Evol. 2010, 25, 345–353.

26. Radenkovic, S.; Schweiger, O.; Milic, D.; Harpke, A.; Vujić, A. Living on the edge: Forecasting the trends in abundance and distribution of the largest hoverfly genus (Diptera: Syrphidae) on the Balkan Peninsula under future climate change. Biol. Conserv. 2017, 212, 216–229.

27. Rhodes, C.J. Pollinator Decline – An Ecological Calamity in the Making? Sci. Prog. 2018, 101, 121–160.

28. Speight, M.C.D. Species Accounts of European Syrphidae. Syrph Net Database Eur. Syrphidae 2018, 103, 302–305.

29. Rotheray, G.E.; Gilbert, F. The Natural History of Hoverflies; Forrest Text: Cardigan, UK, 2011; pp. 333–422.

30. Van Veen, M.P. Hoverflies of Northwest Europe: Identification Keys to the Syrphidae; KNNV Publishing: Utrecht, The Netherlands, 2004; ISBN 978-90-5011-199-7.

31. Kaloveloni, A.; Tscheulin, T.; Vujić, A.; Radenković, S.; Petanidou, T. Winners and losers of climate change for the genus Merodon (Diptera: Syrphidae) across the Balkan Peninsula. Ecol. Model. 2015, 313, 201–211.

32. Jovičić, S.; Burgio, G.; Diti, I.; Krašić, D.; Markov, Z.; Radenković, S.; Vujić, A. Influence of landscape structure and land use on Merodon and Cheilosia (Diptera: Syrphidae): Contrasting responses of two genera. J. Insect Conserv. 2017, 21, 53–64.

33. Popov, S.; Miličić, M.; Diti, I.; Marko, O.; Sommaggio, D.; Markov, Z.; Vujić, A. Phytophagous hoverflies (Diptera: Syrphidae) as indicators of changing landscapes. Community Ecol. 2017, 18, 287–294.

34. Naderloo, M.; Rad, S.P. Diversity of Hoverfly (Diptera: Syrphidae) Communities in Different Habitat Types in Zanjan Province, Iran. ISRN Zool. 2014, 2014, 1–5.

35. Vujić, A.; Radenković, S.; Nikolić, T.; Radišić, D.; Trifunov, S.; Andrić, A.; Markov, Z.; Jovičić, S.; Stojnić, S.M.; Janković, M.; et al. Prime Hoverfly (Insecta: Diptera: Syrphidae) Areas (PHA) as a conservation tool in Serbia. Biol. Conserv. 2016, 198, 22–32.

36. Schweiger, O.; Musche, M.; Bailey, D.; Billeter, R.; Diekötter, T.; Hendrickx, F.; Herzog, F.; Liira, J.; Maelfait, J.-P.; Speelmans, M.; et al. Functional richness of local hoverfly communities (Diptera, Syrphidae) in response to land use across temperate Europe. Oikos 2006, 116, 461–472.

37. Karr, A.F. Why data availability is such a hard problem. Stat. J. IAOS 2014, 30, 101–107.

38. De Luca, G.; Silva, J.M.N.; Cerasoli, S.; Araújo, J.; Campos, J.; Di Fazio, S.; Modica, G. Object-Based Land Cover Classification of Cork Oak Woodlands using UAV Imagery and Orfeo ToolBox. Remote Sens. 2019, 11, 1238.

39. Ahmed, O.S.; Shemrock, A.; Chabot, D.; Dillon, C.; Williams, G.; Wasson, R.; Franklin, S.E. Hierarchical land cover and vegetation classification using multispectral data acquired from an unmanned aerial vehicle. Int. J. Remote Sens. 2017, 38, 2037–2052.

40. Gini, R.; Passoni, D.; Pinto, L.; Sona, G. Use of Unmanned Aerial Systems for multispectral survey and tree classification: A test in a park area of northern Italy. Eur. J. Remote Sens. 2014, 47, 251–269.

41. Natesan, S.; Benari, G.; Armenakis, C.; Lee, R. Land cover classification using a UAV-borne spectrometer. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 269–273.

42. Shin, J.S.; Lee, T.H.; Jung, P.M.; Kwon, H.S. A Study on Land Cover Map of UAV Imagery using an Object-based Classification Method. J. Korean Soc. Geospat. Inf. Syst. 2015, 23, 25–33.

43. Kalantar, B.; Bin Mansor, S.; Sameen, M.; Pradhan, B.; Shafri, H.Z.M. Drone-based land-cover mapping using a fuzzy unordered rule induction algorithm integrated into object-based image analysis. Int. J. Remote Sens. 2017, 38, 2535–2556.

44. Devereux, B.; Amable, G.; Posada, C.C. An efficient image segmentation algorithm for landscape analysis. Int. J. Appl. Earth Obs. Geoinf. 2004, 6, 47–61.

45. Wang, L.; Sousa, W.P.; Gong, P. Integration of object-based and pixel-based classification for mapping mangroves with IKONOS imagery. Int. J. Remote Sens. 2004, 25, 5655–5668.

46. Weih, R.C.; Riggan, N.D. Object-based classification vs. pixel-based classification: Comparative importance of multi-resolution imagery. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2010, 38, 6.

47. Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16.

48. Hay, G.J.; Castilla, G. Geographic object-based image analysis (GEOBIA): A new name for a new discipline. In Object-Based Image Analysis; Blaschke, T., Lang, S., Hay, G.J., Eds.; Lecture Notes in Geoinformation and Cartography; Springer: Berlin/Heidelberg, Germany, 2008; pp. 75–89. ISBN 978-3-540-77057-2.

49. Addink, E.A.; Van Coillie, F.M.; De Jong, S.M. Introduction to the GEOBIA 2010 special issue: From pixels to geographic objects in remote sensing image analysis. Int. J. Appl. Earth Obs. Geoinf. 2012, 15, 1–6.

50. Al-Najjar, H.A.H.; Kalantar, B.; Pradhan, B.; Saeidi, V.; Halin, A.A.; Ueda, N.; Mansor, S. Land Cover Classification from fused DSM and UAV Images Using Convolutional Neural Networks. Remote Sens. 2019, 11, 1461.

51. Ventura, D.; Bonifazi, A.; Gravina, M.F.; Belluscio, A.; Ardizzone, G. Mapping and Classification of Ecologically Sensitive Marine Habitats Using Unmanned Aerial Vehicle (UAV) Imagery and Object-Based Image Analysis (OBIA). Remote Sens. 2018, 10, 1331.

52. Bohn, U.; Zazanashvili, N.; Nakhutsrishvili, G. The map of the natural vegetation of Europe and its application in the Caucasus ecoregion. Bull. Georgian Natl. Acad. Sci. 2007, 175, 11.

53. FAO (Food and Agriculture Organization of the United Nations). Global Forest Resources Assessment Main Report; FAO Forestry Paper 163; FAO: Rome, Italy, 2010.

54. Smith, G.; Harriet, G. European Forests and Protected Areas: Gap Analysis; UNEP World Conservation Monitoring Centre: Cambridge, UK, 2000.

55. Bohn, U.; Gollub, G. The Use and Application of the Map of the Natural Vegetation of Europe with Particular Reference to Germany; Biology and Environment: Proceedings of the Royal Irish Academy; Royal Irish Academy: Dublin, Ireland, 2006.

56. Marinova, E.; Thiebault, S. Anthracological analysis from Kovacevo, southwest Bulgaria: Woodland vegetation and its use during the earliest stages of the European Neolithic. Veg. Hist. Archaeobot. 2007, 17, 223–231.

57. Miličić, M.; Popov, S.; Vujić, A.; Ivošević, B.; Cardoso, P. Come to the dark side! The role of functional traits in shaping dark diversity patterns of south-eastern European hoverflies. Ecol. Entomol. 2019, 45, 232–242.

58. Stumph, B.; Virto, M.H.; Medeiros, H.; Tabb, A.; Wolford, S.; Rice, K.; Leskey, T. Detecting Invasive Insects with Unmanned Aerial Vehicles. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA); IEEE: Montreal, QC, Canada, 20 May 2019; pp. 648–654.

59. Ivošević, B.; Han, Y.-G.; Kwon, O. Monitoring butterflies with an unmanned aerial vehicle: Current possibilities and future potentials. J. Ecol. Environ. 2017, 41, 12.

60. Kim, H.G.; Park, J.-S.; Lee, D.-H. Potential of Unmanned Aerial Sampling for Monitoring Insect Populations in Rice Fields. Fla. Entomol. 2018, 101, 330–334.

61. De Castro, A.I.; Jiménez-Brenes, F.M.; Torres-Sánchez, J.; Peña, J.M.; Borra-Serrano, I.; López-Granados, F. 3-D Characterization of Vineyards Using a Novel UAV Imagery-Based OBIA Procedure for Precision Viticulture Applications. Remote Sens. 2018, 10, 584.

62. De Castro, A.I.; Torres-Sánchez, J.; Peña, J.M.; Jiménez-Brenes, F.M.; Csillik, O.; López-Granados, F. An Automatic Random Forest-OBIA Algorithm for Early Weed Mapping between and within Crop Rows Using UAV Imagery. Remote Sens. 2018, 10, 285.

63. Huang, H.; Lan, Y.; Yang, A.; Zhang, Y.; Wen, S.; Deng, J. Deep learning versus Object-based Image Analysis (OBIA) in weed mapping of UAV imagery. Int. J. Remote Sens. 2020, 41, 3446–3479.

64. Yurtseven, H.; Akgul, M.; Coban, S.; Gülci, S. Determination and accuracy analysis of individual tree crown parameters using UAV based imagery and OBIA techniques. Measurement 2019, 145, 651–664.

65. Sibaruddin, H.I.; Shafri, H.Z.M.; Pradhan, B.; Haron, N.A. UAV-based approach to extract topographic and as-built information by utilising the OBIA technique. J. Geosci. Geomat. 2018, 6, 21.

66. Papakonstantinou, A.; Stamati, C.; Topouzelis, K. Comparison of True-Color and Multispectral Unmanned Aerial Systems Imagery for Marine Habitat Mapping Using Object-Based Image Analysis. Remote Sens. 2020, 12, 554.

67. Ivanišević, B.; Savić, S.; Saboljević, M.; Niketić, M.; Zlatković, B.; Tomović, G.; Lakušić, D.; Ranđelović, V.; Ćetković, A.; Pavićević, D.; et al. “Biodiversity of the Stara Planina Mt. in Serbia” – Results of the Project “Trans-Boundary Cooperation through the Management of Shared Natural Resources”; The Regional Environmental Center for Central and Eastern Europe (REC): Belgrade, Serbia, 2007; ISBN 978-86-7550-050-6.

68. Popović, M.; Đurić, M. Butterflies of Stara Planina (Lepidoptera: Papilionoidea); Srbijašume: Belgrade, Serbia, 2014.

69. Grizonnet, M.; Michel, J.; Poughon, V.; Inglada, J.; Savinaud, M.; Cresson, R. Orfeo ToolBox: Open source processing of remote sensing images. Open Geospat. Data Softw. Stand. 2017, 2, 15.

70. Torres-Sánchez, J.; Lopez-Granados, F.; Peña-Barragan, J.M. An automatic object-based method for optimal thresholding in UAV images: Application for vegetation detection in herbaceous crops. Comput. Electron. Agric. 2015, 114, 43–52.

71. OTB Development Team. OTB CookBook Documentation; CNES: Paris, France, 2019; Available online: https://www.orfeo-toolbox.org/ (accessed on 15 January 2021).

72. CNES OTB Development Team. Software Guide; CNES: Paris, France, 2018.

73. Cresson, R. A Framework for Remote Sensing Images Processing Using Deep Learning Techniques. IEEE Geosci. Remote Sens. Lett. 2018, 16, 25–29.

74. Michel, J.; Youssefi, D.; Grizonnet, M. Stable Mean-Shift Algorithm and Its Application to the Segmentation of Arbitrarily Large Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2014, 53, 952–964.

75. Kecman, V. Support Vector Machines–An introduction. In Support Vector Machines: Theory and Applications; Wang, L., Ed.; Studies in Fuzziness and Soft Computing; Springer: Berlin/Heidelberg, Germany, 2005; Volume 177, pp. 1–47. ISBN 978-3-540-24388-5.

76. Mountrakis, G. Support vector machines in remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2011, 13.

77. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

78. Pal, M. Random forest classifier for remote sensing classification. Int. J. Remote Sens. 2005, 26, 217–222.

79. Kramer, O. K-nearest neighbors. In Dimensionality Reduction with Unsupervised Nearest Neighbors; Intelligent Systems Reference Library; Springer: Berlin/Heidelberg, Germany, 2013; Volume 51, pp. 13–23. ISBN 978-3-642-38651-0.

80. Peterson, L.E. K-nearest neighbor. Scholarpedia 2009, 4, 1883.

81. Noi, P.T.; Kappas, M. Comparison of Random Forest, k-Nearest Neighbor, and Support Vector Machine Classifiers for Land Cover Classification Using Sentinel-2 Imagery. Sensors 2017, 18, 18.

82. Tadele, H.; Mekuriaw, A.; Selassie, Y.G.; Tsegaye, L. Land Use/Land Cover Factor Values and Accuracy Assessment Using a GIS and Remote Sensing in the Case of the Quashay Watershed in Northwestern Ethiopia. J. Nat. Resour. Dev. 2017, 38–44.

83. Cohen, J. A Coefficient of Agreement for Nominal Scales. Educ. Psychol. Meas. 1960, 20, 37–46.

84. McHugh, M.L. Interrater reliability: The kappa statistic. Biochem. Med. 2012, 276–282.

85. De Mast, J. Agreement and Kappa-Type Indices. Am. Stat. 2007, 61, 148–153.

86. Nichols, T.R.; Wisner, P.M.; Cripe, G.; Gulabchand, L. Putting the Kappa Statistic to Use. Qual. Assur. J. 2010, 13, 57–61.

87. Law on Nature Protection. Strictly Protected Wild Species of Plants, Animals and Fungi; Official Gazette of RS, no. 36/2009, 88/2010 and 91/2010; corr. and 14/2016; Official Gazette of the Republic of Serbia: Belgrade, Serbia, 2016.

88. Soumya, A.; Kumar, G.H. Performance Analysis of Random Forests with SVM and KNN in Classification of Ancient Kannada Scripts. Int. J. Comput. Technol. 2014, 13, 4907–4921.

89. Siebring, J.; Valente, J.; Franceschini, M.H.D.; Kamp, J.; Kooistra, L. Object-Based Image Analysis Applied to Low Altitude Aerial Imagery for Potato Plant Trait Retrieval and Pathogen Detection. Sensors 2019, 19, 5477.

90. Distortions and Artifacts in the Orthomosaic. Available online: https://support.pix4d.com/hc/en-us/articles/202561099-Distortions-and-Artifacts-in-the-Orthomosaic (accessed on 1 March 2021).

91. Using GCPs. Available online: https://support.pix4d.com/hc/en-us/articles/202558699-Using-GCPs (accessed on 15 March 2021).

92. Hung, I.-K.; Unger, D.; Kulhavy, D.; Zhang, Y. Positional Precision Analysis of Orthomosaics Derived from Drone Captured Aerial Imagery. Drones 2019, 3, 46.

93. Momeni, R.; Aplin, P.; Boyd, D.S. Mapping Complex Urban Land Cover from Spaceborne Imagery: The Influence of Spatial Resolution, Spectral Band Set and Classification Approach. Remote Sens. 2016, 8, 88.

94. Husson, E.; Reese, H.; Ecke, F. Combining Spectral Data and a DSM from UAS-Images for Improved Classification of Non-Submerged Aquatic Vegetation. Remote Sens. 2017, 9, 247.

95. Vujić, A. Genus Cheilosia Meigen and Related Genera (Diptera: Syrphidae) on the Balkan Peninsula: Rod Cheilosia Meigen i Srodni Rodovi (Diptera: Syrphidae) Na Balkanskom Poluostrvu; Matica Srpska: Novi Sad, Serbia, 1996.

96. Tsaftaris, S.A.; Scharr, H. Sharing the Right Data Right: A Symbiosis with Machine Learning. Trends Plant Sci. 2019, 24, 99–102.

| Technical Characteristics of Inspire 1 | |
|---|---|
| Max weight | 3060 g |
| Max take-off weight | 3500 g |
| Max flight time | Approx. 18 min |
| Max speed | 49 mph |
| Max wind speed resistance | 10 m/s |
| Max tilt angle | 35° |
| Zenmuse X3 RGB | |
| Bands | Red, Green, Blue |
| Sensor size | 1/2.3″ |
| Effective pixels | 12.4 M |
| Lens | 20 mm |
| Field of view | 94° |
| Image max size | 4000 × 3000 |
| Zenmuse X3 NDVI | |
| Bands | Red, Green, NIR |
| Sensor size | 1/2.3″ |
| Effective pixels | 12.4 M |
| Lens | 20 mm |
| Field of view | 94° |
| Image max size | 4000 × 3000 |
| Peak transmissions | 90.41% (446 nm) + 93.28% (800 nm) |

| Dojkinci1 | Dojkinci2 | Babin Zub |
|---|---|---|
| Class 1: trees | Class 1: trees | Class 1: trees |
| Class 2: shrubs | Class 2: shrubs | Class 2: shrubs |
| Class 3: meadows | Class 3: meadows | Class 3: meadows |
| Class 4: road | Class 4: road | Class 4: road |
| Class 5: water | Class 5: water | Class 5: forest patches * |
| Class 6: agricultural land | | |

| Class | Dojkinci1 Training | Dojkinci1 Validation | Dojkinci2 Training | Dojkinci2 Validation | Babin Zub Training | Babin Zub Validation |
|---|---|---|---|---|---|---|
| 1 | 2537 | 744 | 2905 | 500 | 1204 | 212 |
| 2 | 2585 | 384 | 3205 | 487 | 1048 | 190 |
| 3 | 2837 | 502 | 2900 | 500 | 1200 | 200 |
| 4 | 2487 | 501 | 2976 | 500 | 1061 | 200 |
| 5 | 2246 | 571 | 2890 | 500 | 1016 | 206 |
| 6 | 1928 | 404 | n/a | n/a | n/a | n/a |
| Total | 14,620 | 3106 | 14,876 | 2487 | 5529 | 1008 |
| Sum of all polygons (per site) | 345,829 | | 340,321 | | 117,239 | |
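To put the polygon counts in proportion, the short sketch below computes, for each site, the share of polygons that were labelled and the share of labelled polygons held out for validation. The counts are copied from the table above. Reading the "Sum of all polygons" row as the total number of segmented polygons per site is an assumption, and the function and variable names are illustrative only.

```python
# (training total, validation total, sum of all polygons), copied from the table above.
counts = {
    "Dojkinci1": (14620, 3106, 345829),
    "Dojkinci2": (14876, 2487, 340321),
    "Babin Zub": (5529, 1008, 117239),
}


def split_summary(training: int, validation: int, all_polygons: int) -> dict:
    """Summarise how many polygons were labelled and how the labels were split."""
    labelled = training + validation
    return {
        # Assumption: all_polygons is the full set of segments for the site.
        "labelled_share": labelled / all_polygons,
        "validation_share": validation / labelled,
    }


summary = {site: split_summary(*values) for site, values in counts.items()}

for site, stats in summary.items():
    print(
        f"{site}: {stats['labelled_share']:.2%} of polygons labelled, "
        f"{stats['validation_share']:.2%} of labelled polygons used for validation"
    )
```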

| Classifier | Dojkinci1 OA | Dojkinci1 K | Dojkinci2 OA | Dojkinci2 K | Babin Zub OA | Babin Zub K |
|---|---|---|---|---|---|---|
| SVM | 0.89 | 0.87 | 0.81 | 0.77 | 0.86 | 0.83 |
| RF | 0.96 | 0.95 | 0.87 | 0.84 | 0.91 | 0.89 |
| k-NN | 0.91 | 0.89 | 0.82 | 0.77 | 0.83 | 0.79 |
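Assuming OA in the table above denotes overall accuracy and K denotes Cohen's kappa, both can be derived from a confusion matrix of validation polygons. The sketch below shows the standard calculation in plain Python. The 2 × 2 matrix is a made-up example, not data from the study, and the function names are illustrative.

```python
from typing import List

Matrix = List[List[int]]


def overall_accuracy(cm: Matrix) -> float:
    """Fraction of samples on the diagonal (rows: reference, columns: predicted)."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / total


def cohen_kappa(cm: Matrix) -> float:
    """Agreement corrected for the agreement expected by chance."""
    n = sum(sum(row) for row in cm)
    observed = overall_accuracy(cm)
    row_totals = [sum(row) for row in cm]
    col_totals = [sum(row[j] for row in cm) for j in range(len(cm))]
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / (n * n)
    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1.0 - expected)


# Hypothetical confusion matrix for two classes (100 validation samples).
example_cm = [
    [45, 5],
    [10, 40],
]

oa = overall_accuracy(example_cm)
kappa = cohen_kappa(example_cm)
print(f"OA = {oa:.2f}, kappa = {kappa:.2f}")
```

Kappa is never higher than OA for the same matrix because it discounts the agreement a random labelling with the same class totals would achieve, which is consistent with the K values in the table sitting slightly below the OA values.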

| Site | SVM training time [s] | RF training time [s] | k-NN training time [s] |
|---|---|---|---|
| Dojkinci1 | 1174.15 | 4.20 | 1.32 |
| Dojkinci2 | 1399.76 | 4.65 | 1.19 |
| Babin Zub | 154.47 | 1.60 | 0.46 |
| Average | 909.46 | 3.48 | 0.99 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ivošević, B.; Lugonja, P.; Brdar, S.; Radulović, M.; Vujić, A.; Valente, J. UAV-Based Land Cover Classification for Hoverfly (Diptera: Syrphidae) Habitat Condition Assessment: A Case Study on Mt. Stara Planina (Serbia). Remote Sens. 2021, 13, 3272. https://doi.org/10.3390/rs13163272
